Tinker makes DIY fine-tuning of open LLMs go mainstream

Tinker, a managed fine-tuning API from Thinking Machines Lab, lets teams shape open LLMs with simple loops while keeping control of data and weights. Here is how it changes speed, cost, the agent stack, and safe rollout.

The week fine-tuning stopped being a science project

On October 1, 2025, Thinking Machines Lab unveiled Tinker, a managed fine-tuning API that aims to make customizing open weight language models feel as routine as writing a unit test. The team, led by former OpenAI leaders including Mira Murati and John Schulman, positioned Tinker as a way for builders to own model behavior instead of renting it through prompt stacking. Early reports describe a service that abstracts distributed training while leaving the core training loop and data decisions in your hands. For a concise overview, see the Wired coverage of Tinker.

If Tinker delivers on its design goals, it compresses the time needed to shape a model from months to days, shifts budget from ever larger token bills to targeted runs, and brings model ownership within reach for teams that cannot justify a full research infrastructure.

Why this matters now

For the past two years, many teams boosted performance by stacking prompts, retrieval, and guards. It worked, but it was brittle. Prompts drifted when upstream models changed. Latency crept upward as each request juggled more tools. Costs scaled with tokens rather than value delivered.

Fine-tuning was the obvious answer, yet it felt like a research project. Managing clusters, keeping long runs stable, and choosing sensible hyperparameters demanded expertise most product teams lacked. Tinker targets that gap by offering a minimal API surface while it handles the messy parts of distributed training behind the curtain.

Think of it like a professional kitchen. Before, everyone brought takeout and tried to plate it with clever garnish. Now, the ovens are preheated, cookware is ready, and a sous-chef minding timing and temperature keeps service on schedule. You still control the recipe. You still choose the ingredients. The restaurant finally runs on time.

What Tinker actually does

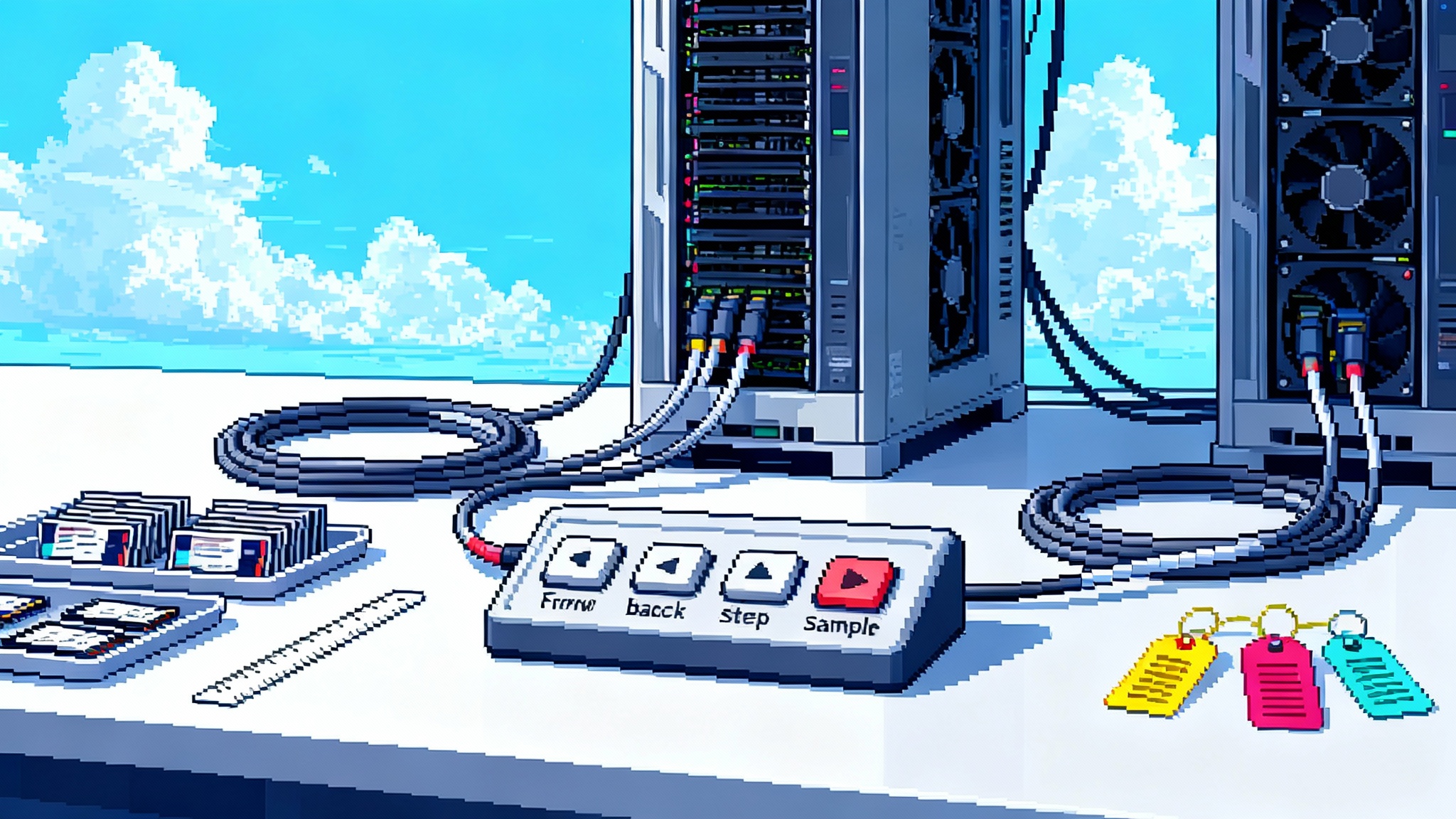

Tinker exposes a small set of primitives that mirror how training really works:

- forward_backward: run a forward pass on a batch, compute the loss you define, and accumulate gradients

- optim_step: step the optimizer and update weights

- sample: generate tokens for interaction, evaluation, or reinforcement learning feedback

- save_state: checkpoint and resume training
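The sketch below shows the shape of a loop built on these primitives. It does not use the Tinker SDK. `ToyTrainer` is a hypothetical, purely local stand-in that fits a one-parameter linear model, and its methods are named after the primitives above so the control flow reads the same. Real Tinker calls, arguments, and return types differ, so check the official docs before writing production code.

```python
from __future__ import annotations


class ToyTrainer:
    """Hypothetical local stand-in, NOT the Tinker client: fits y = w * x by gradient descent."""

    def __init__(self, lr: float = 0.05) -> None:
        self.w = 0.0     # the single trainable weight
        self.lr = lr
        self.grad = 0.0  # gradient accumulated since the last optimizer step

    def forward_backward(self, batch: list[tuple[float, float]]) -> float:
        # Forward pass, mean squared error loss, and gradient accumulation.
        loss, grad = 0.0, 0.0
        for x, y in batch:
            err = self.w * x - y
            loss += err * err
            grad += 2.0 * err * x
        self.grad += grad / len(batch)
        return loss / len(batch)

    def optim_step(self) -> None:
        # Plain SGD update, then clear the accumulated gradient.
        self.w -= self.lr * self.grad
        self.grad = 0.0

    def sample(self, x: float) -> float:
        # "Generation" for this toy model is just a prediction.
        return self.w * x

    def save_state(self) -> dict[str, float]:
        return {"w": self.w}

    def load_state(self, state: dict[str, float]) -> None:
        # Hypothetical resume helper for the toy model.
        self.w = state["w"]


# The loop you would write: forward_backward, then optim_step, checkpointing periodically.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data following y = 2x
trainer = ToyTrainer(lr=0.05)
losses, checkpoints = [], []
for step in range(50):
    losses.append(trainer.forward_backward(data))
    trainer.optim_step()
    if (step + 1) % 10 == 0:
        checkpoints.append(trainer.save_state())

# Resume from the last checkpoint into a fresh trainer.
resumed = ToyTrainer()
resumed.load_state(checkpoints[-1])
print(round(resumed.sample(4.0), 3))
```

The point is the division of labor: you own the loss and the loop, and the service owns where the gradients are computed.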

Under the hood, Tinker orchestrates distributed training on large clusters. On your side, you write a compact training loop that can run from a laptop while the heavy compute happens remotely. At launch it supports open weight families such as Meta Llama and Alibaba Qwen, including very large mixture of experts variants. Rather than rewriting every parameter, Tinker uses low rank adaptation (LoRA), which trains small adapter matrices on top of a frozen base model. The official Tinker API docs detail the functions, supported models, and quickstart recipes.
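Why adapters are cheap is easiest to see with arithmetic. LoRA represents the update to a d × k weight matrix as the product of two low rank factors, B (d × r) and A (r × k), so the trainable count drops from d·k to r·(d + k). The sketch assumes an illustrative 4096 × 4096 projection and rank 16; these are example values, not Tinker defaults.

```python
def full_params(d: int, k: int) -> int:
    # Trainable parameters when updating the whole d x k matrix.
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    # Trainable parameters in the LoRA factors B (d x r) and A (r x k).
    return r * (d + k)


d = k = 4096  # illustrative matrix size
r = 16        # illustrative LoRA rank
full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 16,777,216  lora: 131,072  ratio: 0.78%
```

Per matrix, rank 16 trains under 1 percent of the parameters. Only B and A need to be saved, which is why adapters are small enough to download and move between inference vendors.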

Two practical notes for teams considering a trial:

- Access and pricing: at launch Tinker is in private beta with a waitlist. It is free to start, with usage based pricing to follow. That lowers the barrier for a pilot.

- Portability: you can download trained weights, which reduces platform lock-in and makes multi-cloud deployment plausible from day one.

From prompt hacks to owning weights

Owning weights is a mindset shift. It turns model behavior from a volatile prompt trick into an artifact you can version, test, and ship. Here is what changes in practice:

- Reliability: prompts become inputs, not behavior. When replies must not regress, you encode behavior in weights and keep prompts short and stable.

- Latency and cost: a tuned 8 to 13 billion parameter model that knows your task often beats a much larger general model plus retrieval and tool calls on both speed and total cost at scale.

- Privacy and compliance: training data can stay inside your boundary, and tuned weights can be hosted in the region and environment you need.

- Platform independence: downloadable adapters let you switch inference vendors, negotiate better rates, and satisfy local deployment needs.

This does not mean throwing away retrieval. It means your stack learns the predictable parts and retrieves what truly depends on fresh or proprietary context. Retrieval becomes the library. The tuned model becomes your house style.

A concrete path for startups

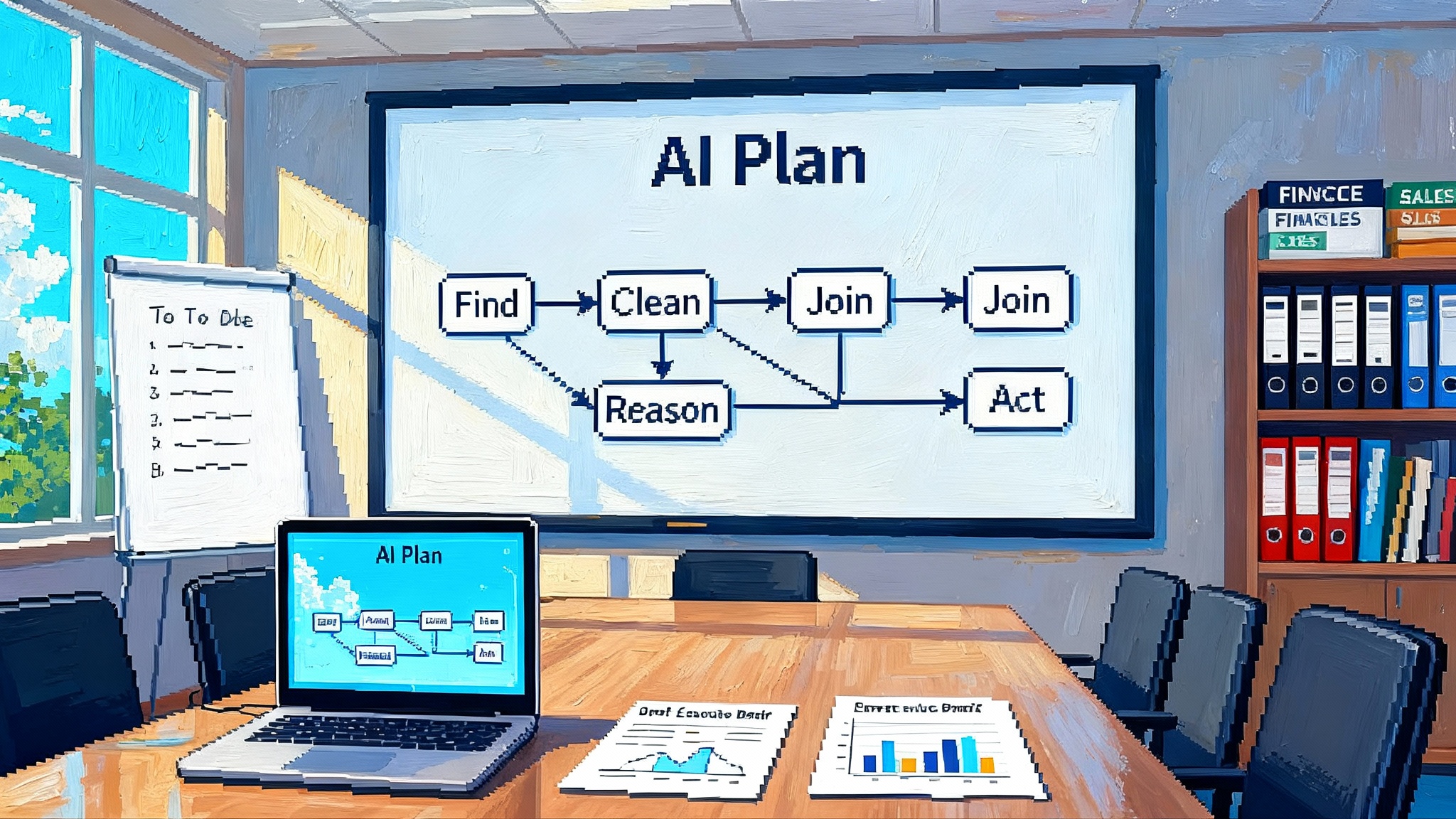

The common fear is that fine-tuning demands a perfect dataset and a PhD. In reality, you can stand up a working pilot in one week if you scope it well. Here is a 7 day plan to adapt to your team:

- Day 1: choose a single high value workflow where your model struggles today. Scope it tightly. Examples include summarizing messy support tickets into three crisp action items or generating clean SQL from semi structured prompts.

- Day 2: build a seed dataset of 1,000 to 3,000 examples from your own logs. De-identify, normalize, and write strict rubrics for what a good answer looks like. Sample for diversity rather than size.

- Day 3: define your loss. For supervised learning, label with target outputs and reasons. For reinforcement learning, write a reward function based on rubric scores, brevity penalties, and domain constraints.

- Day 4: implement a minimal training loop with forward_backward and optim_step, then checkpoint with save_state. Start with LoRA on a 7 to 13 billion parameter base model. Run short sweeps rather than one long run.

- Day 5: use sample to generate outputs for your eval set. Track exact match or rubric scores and time to response. Maintain a leaderboard of every run.

- Day 6: deploy a canary behind a feature flag for 5 percent of real traffic. Add a one-click fallback to your current system.

- Day 7: run an error taxonomy review. Fix the top two systematic failures by adding targeted examples and refining the reward or loss. Rerun for one more day.

By the end of the week you have a model that does one thing well and a loop that makes it better with each new batch of data.

How Tinker compresses the customization cycle

The biggest hidden cost in tuning is not gradient math. It is orchestration and iteration speed. When a run crashes at hour 15 or a batch quietly goes missing, your week slips. Tinker tackles this in three ways:

- Managed reliability: checkpointing and resumption let you treat long runs as resumable jobs rather than sacred events.

- Minimal surface area: a small set of primitives encourages simple, testable training loops instead of sprawling pipelines.

- Model access as a switch: moving from an 8 billion to a 32 billion parameter model becomes a one line string change, which makes exploration cheap.
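When the base model is just a string, a sweep is a small grid. The sketch below uses hypothetical model identifiers and hyperparameter values; substitute names from the supported models list in the Tinker docs and ranges that fit your budget.

```python
from itertools import product

BASE_MODELS = ["example-8b", "example-32b"]  # hypothetical identifiers
LEARNING_RATES = [1e-4, 3e-4]                 # illustrative values
LORA_RANKS = [8, 32]                          # illustrative values


def sweep_configs():
    # One short run per combination instead of one long, sacred run.
    for model, lr, rank in product(BASE_MODELS, LEARNING_RATES, LORA_RANKS):
        yield {
            "run_name": f"{model}-lr{lr:g}-r{rank}",
            "base_model": model,
            "learning_rate": lr,
            "lora_rank": rank,
            "max_steps": 200,  # keep runs short; extend only the winners
        }


configs = list(sweep_configs())
for cfg in configs:
    print(cfg["run_name"])
```

Giving every run a unique, descriptive name is what makes the leaderboard from Day 5 readable later.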

The result is a tighter inner loop. You can try three different losses before lunch and compare side by side before you head home.

The agent and retrieval stack, rethought

Agents and retrieval augmented generation remain important, but the shape changes once you can cheaply own behavior.

- Retrieval becomes a precision tool: use it when the answer depends on current facts, legal text, or customer records. Put style, structure, and multi step scaffolding into weights.

- Agents simplify: instead of a sprawling tool use tree, train the model to plan in a consistent format and to call a small, well typed set of functions. Fewer calls, more reliability.

- Distillation from prompts to weights: start from the prompts and chains that work today. Translate them into instruction pairs or preference data, then tune the model to produce the same behavior from a shorter prompt.
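A small, well typed set of functions can be enforced with a schema check on every tool call the model emits. Here is a minimal sketch using only the standard library. The tool names, argument types, and the JSON call format are hypothetical examples, not a Tinker or vendor format.

```python
import json

# Hypothetical tool registry: tool name -> {argument name: expected Python type}.
TOOLS = {
    "lookup_order": {"order_id": str},
    "refund": {"order_id": str, "amount_cents": int},
}


def validate_call(raw: str) -> tuple[bool, str]:
    """Check that a model-emitted JSON tool call names a known tool with exact, typed args."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    if not isinstance(call, dict):
        return False, "call must be a JSON object"
    name, args = call.get("tool"), call.get("args")
    if not isinstance(name, str) or name not in TOOLS:
        return False, f"unknown tool: {name!r}"
    if not isinstance(args, dict):
        return False, "args must be an object"
    schema = TOOLS[name]
    if set(args) != set(schema):
        return False, f"expected args {sorted(schema)}, got {sorted(args)}"
    for key, expected in schema.items():
        value = args[key]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            return False, f"{key} must be {expected.__name__}"
    return True, "ok"


print(validate_call('{"tool": "refund", "args": {"order_id": "A17", "amount_cents": 500}}'))
print(validate_call('{"tool": "refund", "args": {"order_id": "A17"}}'))
```

Rejected calls are useful twice: they are blocked at runtime, and they become negative examples for the next training batch.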

If you are actively building agents, see our exploration of how AgentKit compresses the agent stack; a compressed stack pairs well with a tuned base model that already knows your schema and tone. We also covered how Replit Agent 3 goes autonomous, and why bringing agentic AI into production demands consistent planning and guardrails, which are easier to encode in weights than in ever longer prompts. For teams moving from demos to production, see how agentic AI in real operations benefits from predictable behavior and shorter prompts.

A practical architecture to aim for

- Preprocess and rank context candidates with retrieval.

- Ask a tuned model that already knows your schema, tone, and guardrails.

- Call tools only for steps that truly need external systems.

- Log everything and feed it back into your training set each week.

Over time, your prompt shrinks, your weights grow smarter, and your system becomes faster and cheaper.

Build the data pipeline that tuning deserves

Good data beats clever tricks. Here is a pragmatic recipe for assembling training data without pausing your roadmap:

- Mine your logs: extract user prompts, your team’s best responses, and acceptance signals. When a human edits a model output, treat the edit as gold.

- Red team your edge cases: generate synthetic hard negatives that stress rules and style. These sets are small but carry outsized value in preference learning.

- Write rubrics, not vibes: define success criteria in precise language. For example, SQL must be executable, single statement, and use only whitelisted tables. Each criterion becomes a score your reward can see.

- Balance the pile: maintain a hand curated core of 1,000 to 5,000 examples that rarely change. Rotate the rest weekly with fresh, de-identified samples.
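The SQL rubric above maps directly onto scores a reward can read. Here is a minimal sketch using Python's built-in sqlite3 module. The schema and allowlist are hypothetical, and the single-statement and table checks are deliberately naive: they do not parse string literals, comments, or subqueries the way a real SQL parser would.

```python
import re
import sqlite3

# Hypothetical schema and allowlist for illustration.
SCHEMA = """
CREATE TABLE tickets (id INTEGER PRIMARY KEY, status TEXT, created_at TEXT);
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE audit_log (id INTEGER PRIMARY KEY, event TEXT);
"""
ALLOWED_TABLES = {"tickets", "customers"}


def score_sql(sql: str) -> dict[str, float]:
    """Return one 0/1 score per rubric criterion, plus their mean as 'total'."""
    body = sql.strip().rstrip(";").strip()
    single = 0.0 if ";" in body else 1.0  # naive: ignores semicolons inside literals

    found = re.findall(r"\b(?:from|join)\s+([A-Za-z_]\w*)", body, re.IGNORECASE)
    tables = {t.lower() for t in found}
    allowed = 1.0 if tables and tables <= ALLOWED_TABLES else 0.0

    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.execute("EXPLAIN " + body)  # compiles the statement without running it
        executable = 1.0
    except (sqlite3.Error, sqlite3.Warning):  # older Pythons raise Warning for multi-statement SQL
        executable = 0.0
    finally:
        conn.close()

    scores = {"executable": executable, "single_statement": single, "allowed_tables": allowed}
    scores["total"] = sum(scores.values()) / 3
    return scores


print(score_sql("SELECT id FROM tickets WHERE status = 'open';"))
print(score_sql("SELECT * FROM audit_log"))
```

Keeping each criterion as a separate score, rather than only the total, lets you see which rule a run is breaking.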

On privacy, prefer on-premises de-identification and programmatic filters before any upload. Keep a manifest of every dataset version and the model weights it produced. That change log becomes both your compliance story and your debugging tool.
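A manifest can be as simple as a content hash per dataset version, recorded next to the adapter trained on it. Here is a minimal sketch. The record fields and the adapter ID are hypothetical, and in practice you would append these records to version-controlled storage rather than print them.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def manifest_entry(dataset_name: str, dataset_bytes: bytes, adapter_id: str) -> dict:
    """Link an exact dataset version (by content hash) to the weights trained on it."""
    return {
        "dataset": dataset_name,
        "dataset_sha256": sha256_of(dataset_bytes),
        # Assumes JSONL with every example terminated by a newline.
        "num_examples": dataset_bytes.count(b"\n"),
        "adapter_id": adapter_id,  # hypothetical identifier for the trained adapter
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


jsonl = b'{"prompt": "a", "target": "b"}\n{"prompt": "c", "target": "d"}\n'
entry = manifest_entry("support-tickets-v3", jsonl, "adapter-example-001")
print(json.dumps(entry, indent=2))
```

Because the hash changes whenever a single example changes, two runs with the same hash are guaranteed to have seen the same data.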

Evals you can trust

Avoid the trap of a single average score.

- Champion vs challenger: maintain a leaderboard with at least three slices, such as common cases, hard cases, and safety cases.

- Quality and engineering: track exact match or rubric scores, time to first token, and total cost per thousand requests.

- Sharp canaries: a 50 example set that always catches your dumbest failure mode is worth more than a 5,000 example set of generic questions.

- Weekly error taxonomies: label failures by cause such as schema hallucination, instruction noncompliance, or boundary overshoot. Target the top two in your next batch.
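Per-slice scoring, a champion vs challenger check, and a weekly taxonomy fit in a few lines. Here is a minimal sketch. The slice names, failure labels, and the rule that a challenger must not regress on any slice are illustrative assumptions you would tune to your own bar.

```python
from collections import Counter


def slice_scores(results: list[dict]) -> dict[str, float]:
    """Pass rate per slice; each result is {'slice': str, 'passed': bool, 'cause': str (if failed)}."""
    totals, passes = Counter(), Counter()
    for r in results:
        totals[r["slice"]] += 1
        passes[r["slice"]] += r["passed"]
    return {s: passes[s] / totals[s] for s in totals}


def challenger_wins(champion: dict[str, float], challenger: dict[str, float], tol: float = 0.0) -> bool:
    # Promote only if no slice regresses beyond tol and at least one slice improves.
    no_regression = all(challenger[s] >= champion[s] - tol for s in champion)
    improved = any(challenger[s] > champion[s] for s in champion)
    return no_regression and improved


def top_failures(results: list[dict], k: int = 2) -> list[tuple[str, int]]:
    # Weekly error taxonomy: the most common causes among failed cases.
    return Counter(r["cause"] for r in results if not r["passed"]).most_common(k)


# Hypothetical eval results and champion scores, for illustration only.
run = [
    {"slice": "common", "passed": True},
    {"slice": "common", "passed": False, "cause": "instruction noncompliance"},
    {"slice": "hard", "passed": True},
    {"slice": "hard", "passed": False, "cause": "schema hallucination"},
    {"slice": "hard", "passed": False, "cause": "schema hallucination"},
    {"slice": "safety", "passed": True},
]
champion = {"common": 0.5, "hard": 0.25, "safety": 1.0}
scores = slice_scores(run)
print(scores, challenger_wins(champion, scores), top_failures(run))
```

Requiring no regression on every slice is stricter than comparing averages, and that is the point: a gain on common cases cannot hide a loss on safety cases.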

For preference and reinforcement learning, start with direct preference optimization (DPO) or simple binary rewards before you move to more complex bandit style reward schemes. Keep the reward function short and legible. If you cannot explain a reward in one paragraph, it is too complicated.
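Direct preference optimization is a good example of a legible objective. For a prompt x with preferred answer y_w and rejected answer y_l, the standard DPO loss is -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]): it rewards the policy for raising the preferred answer's log probability more than the rejected one's, relative to a frozen reference model. The sketch computes it for a single pair from precomputed sequence log probabilities. Batching, tokenization, and how you obtain those log probabilities are left out, and β = 0.1 is only an illustrative default.

```python
import math


def log_sigmoid(z: float) -> float:
    # Numerically stable log(sigmoid(z)).
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair; each argument is a summed log-prob of one answer."""
    chosen_margin = policy_chosen - ref_chosen        # how much the policy raised y_w
    rejected_margin = policy_rejected - ref_rejected  # how much the policy raised y_l
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


print(round(dpo_loss(-12.0, -15.0, -12.0, -15.0), 4))  # policy == reference: log 2 = 0.6931
print(round(dpo_loss(-10.0, -18.0, -12.0, -15.0), 4))  # policy now favors y_w: lower loss
```

The whole reward fits in one line, which is exactly the legibility test above.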

Safety as a first class training signal

Safety is not a filter you bolt on after the model. It belongs in the loss.

- Curate a dedicated safety dataset tailored to your domain. For a legal assistant, that may include confidentiality boundaries, citation rules, and refusal conditions.

- Add capability checks to evals. When your tuned model unlocks a new skill, verify related risk before going wide.

- Gate weight downloads with approvals and audit trails inside your company. Treat adapters like code releases.

- Rate limit and instrument new skills. Watch for sudden jumps in tool usage or query shapes.

- Keep a human in the loop for red flags and maintain a rollback path for weights just like you do for code.
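Treating adapters like code releases mostly comes down to two rules: nothing is promoted without a recorded approval, and the previous version is one call away. Here is a minimal in-memory sketch. `AdapterRegistry` and its methods are hypothetical, and a real setup would back them with your artifact store and access controls.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AdapterRegistry:
    """Hypothetical in-memory registry: approvals gate promotion; history enables rollback."""
    approvals: dict[str, str] = field(default_factory=dict)  # adapter_id -> approver
    history: list[str] = field(default_factory=list)         # promoted adapters, oldest first
    audit_log: list[str] = field(default_factory=list)

    def approve(self, adapter_id: str, approver: str) -> None:
        self.approvals[adapter_id] = approver
        self.audit_log.append(f"approve {adapter_id} by {approver}")

    def promote(self, adapter_id: str) -> None:
        if adapter_id not in self.approvals:
            raise PermissionError(f"{adapter_id} has no recorded approval")
        self.history.append(adapter_id)
        self.audit_log.append(f"promote {adapter_id}")

    @property
    def live(self) -> str | None:
        return self.history[-1] if self.history else None

    def rollback(self) -> str | None:
        # Drop the live adapter and return to the previous one, like reverting a release.
        if len(self.history) < 2:
            raise RuntimeError("no earlier adapter to roll back to")
        removed = self.history.pop()
        self.audit_log.append(f"rollback {removed} -> {self.live}")
        return self.live


reg = AdapterRegistry()
reg.approve("adapter-v1", "alice")
reg.promote("adapter-v1")
reg.approve("adapter-v2", "bob")
reg.promote("adapter-v2")
reg.rollback()  # adapter-v1 is live again
print(reg.live, reg.audit_log)
```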

The goal is not to make the model timid. The goal is to shape it to your obligations and to prove that you are doing so deliberately.

Costs, with numbers you can run

Your numbers will vary, but a back of the envelope helps frame decisions.

- Training: assume a 13 billion parameter base with LoRA, a few thousand supervised pairs, and around 50,000 preference comparisons. A well-tuned run can complete in the low tens of hours on a modest multi-GPU slice. Expect four figures, not five, for a serious pilot.

- Inference: a tuned 13 billion model often delivers quality close to a much larger hosted model for a specific task. If your current stack sends 40 percent of tokens to retrieval and tools, a tuned model can reduce those calls and cut token spend by a double digit percentage.

- Latency: trimming tool calls and shrinking prompts often cuts p95 latency by 30 to 60 percent for structured tasks.
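The token estimate is simple arithmetic worth writing down with your own inputs. In the sketch below, the 40 percent share comes from the inference example above. The fraction of those tokens a tuned model removes (here one half), the monthly token volume, and the price per million tokens are hypothetical placeholders, not measurements.

```python
def token_savings(share_to_tools: float, fraction_removed: float) -> float:
    """Fraction of total token spend saved if a tuned model removes part of the tool/retrieval tokens."""
    return share_to_tools * fraction_removed


def monthly_cost(tokens_per_month: float, usd_per_million: float) -> float:
    return tokens_per_month / 1_000_000 * usd_per_million


saved = token_savings(share_to_tools=0.40, fraction_removed=0.5)  # 0.5 is a hypothetical input
before = monthly_cost(2_000_000_000, usd_per_million=3.0)          # hypothetical volume and price
after = before * (1 - saved)
print(f"saved {saved:.0%}: ${before:,.0f} -> ${after:,.0f} per month")
```

This ignores any difference in per token price between your current model and the tuned one; add it as another input if your inference vendor prices them differently.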

The real savings come from product velocity. When your team can add a new skill by drafting 200 examples and running a two hour sweep, you ship features you would have skipped before.

A field guide to decisions you will face

- LoRA vs full fine-tuning: start with adapters. Move to full fine-tuning only when you can prove a measurable gap on a static eval and you can afford the larger compute and MLOps overhead.

- Which base model size: pick the smallest model that meets your quality bar on your evals. Many workflow skills do not need 70 billion parameters. Use larger models mainly for knowledge heavy or composition heavy tasks where smaller models fail your hard cases.

- How to blend with retrieval: keep retrieval for current facts and long documents. If a piece of context shows up in 80 percent of requests, put it in weights. If it is fresh, rare, or personal, keep it in retrieval.

- How to organize teams: give one person ownership of evals, one person ownership of datasets, and one person ownership of the training loop and sweeps. Small, clear roles beat a many headed committee.
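The 80 percent rule for retrieval vs weights can be applied mechanically to your logs. Here is a minimal sketch. The log format (a set of context item IDs per request) and the `fresh_or_personal` set are hypothetical, and the threshold is the rule of thumb above, not a law.

```python
from collections import Counter


def place_context(requests: list[set[str]], fresh_or_personal: set[str],
                  threshold: float = 0.8) -> dict[str, str]:
    """Label each context item 'weights' or 'retrieval' by how often requests use it."""
    counts = Counter(item for used in requests for item in used)
    n = len(requests)
    placement = {}
    for item, c in counts.items():
        if item in fresh_or_personal:
            placement[item] = "retrieval"  # fresh or personal data stays out of weights
        elif c / n >= threshold:
            placement[item] = "weights"    # shows up in most requests: learn it
        else:
            placement[item] = "retrieval"  # rare: fetch it when needed
    return placement


# Hypothetical request logs for illustration.
logs = [
    {"style_guide", "schema"},
    {"style_guide", "schema", "order_123"},
    {"style_guide", "refund_policy"},
    {"style_guide", "schema"},
    {"style_guide"},
]
print(place_context(logs, fresh_or_personal={"order_123"}))
```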

What this means for the market

A service like Tinker changes the default for startups and small teams. You no longer need a research lab to ship tuned models. You need a problem worth tuning for, clean data, and the discipline to evaluate and iterate.

For closed model vendors, this raises the bar. If a customer can turn a strong open weight base into a product ready specialist in a few days, the value of a closed model narrows to frontier capabilities and compliance assurances. Expect pricing and packaging to evolve. Expect more vendors to support external adapters and weight import.

For enterprises, model ownership starts to feel like buying rather than renting. Legal and compliance teams can ask better questions because the artifacts are concrete. Weights can be audited and versioned. Risks can be reviewed alongside code.

How to get started this week

- Pick one workflow where latency and cost matter and where examples are plentiful.

- Draft a crisp rubric and build a 2,000 example seed set from your own logs.

- Run a first LoRA pass on a small open weight base. Capture metrics and compare to your current stack.

- Use the canary release pattern with a one click fallback. Keep a daily error taxonomy and fix two classes of errors per day.

- Only after a win on one workflow should you expand. Add a second workflow or step up a model size, but never both at once.

The bottom line

Tinker arrives at the right moment. The industry has learned the limits of duct taped prompts and ever growing retrieval chains. The next gains will come from owning behavior, not renting it. A managed fine-tuning service that preserves control while removing the infrastructure tax makes that shift practical.

If you are a builder, the playbook is straightforward. Make a small dataset that reflects your product. Define a tight eval. Train adapters, not egos. Ship a canary. Learn from the errors. Then do it again next week. The winners will not be the teams with the most tokens. They will be the teams that turn their knowledge into weights and treat those weights like the product itself.