AI’s SEC Moment: Why Labs Need Auditable Model 10‑Ks

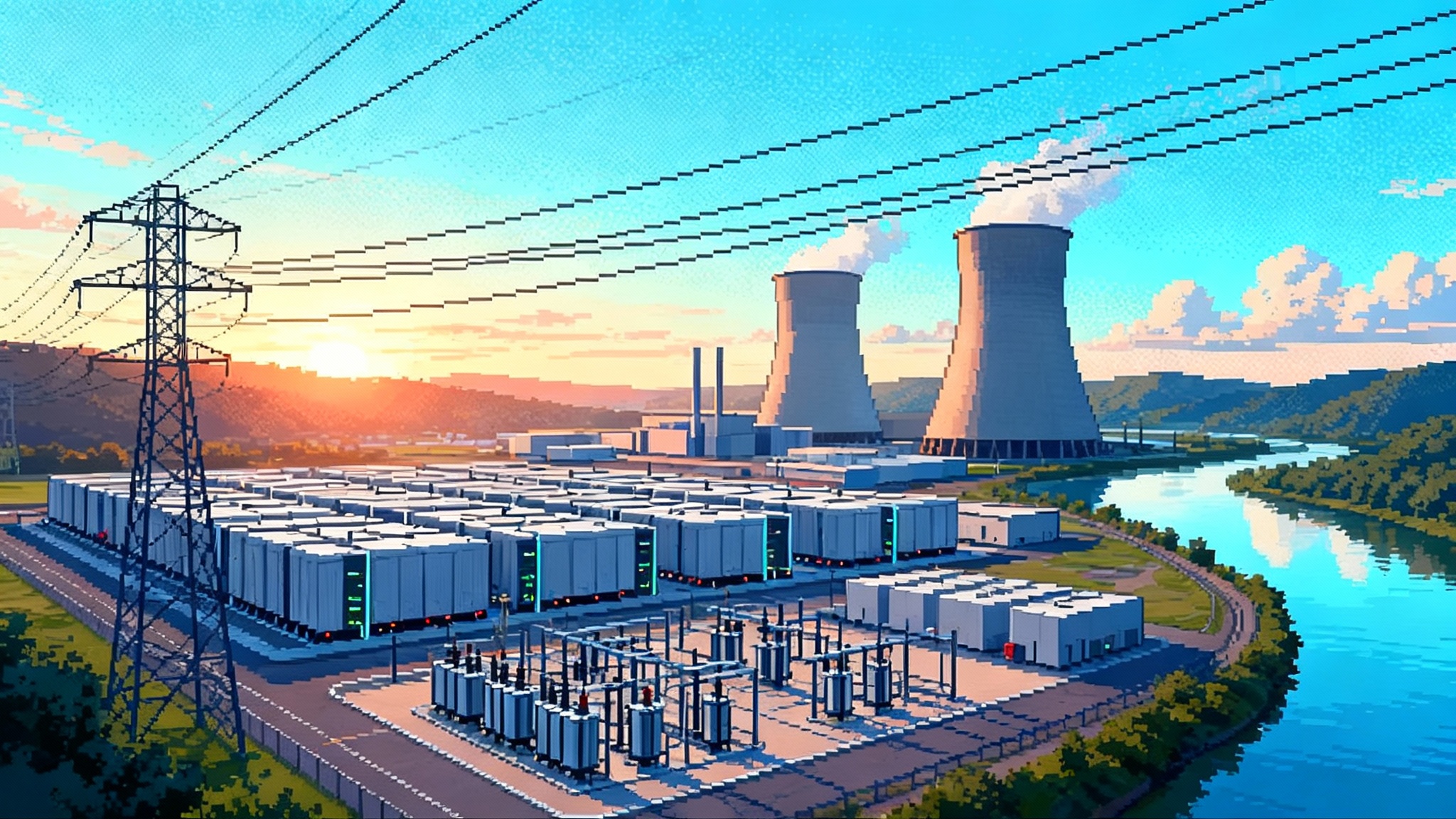

California just enacted SB 53, OpenAI committed to six gigawatts of compute, and a 500-megawatt site is in motion in Argentina. AI now runs on public grids. The next unlock is standardized, auditable model 10-Ks.

The week AI crossed into public infrastructure

On September 29, 2025, California’s governor signed Senate Bill 53, the Transparency in Frontier Artificial Intelligence Act, creating a first-in-the-nation framework that requires frontier model developers to publish safety frameworks, report critical safety incidents, and protect whistleblowers. It is the clearest signal yet that frontier labs are being treated less like app startups and more like stewards of public risk. The governor’s office has published an official description of SB 53, California’s frontier AI law.

One week later, on October 6, OpenAI announced a strategic partnership with AMD to deploy six gigawatts of compute capacity across several chip generations. Six gigawatts is not a marketing flourish. It is roughly the output of several large power plants, committed on paper and in delivery schedules. Both companies announced the 6 gigawatt agreement publicly.

On October 10, 2025, OpenAI and Sur Energy signed a letter of intent to pursue a five hundred megawatt data center project in Argentina. That is half a gigawatt at one location. It would be among the largest digital infrastructure projects in the Southern Hemisphere, and it would run on the same grids that power homes, hospitals, and factories.

Taken together, the law, the chips, and the megawatts mark a transition. AI labs are still companies, but their footprints now resemble utilities. The public has skin in the game. So do regulators, grid operators, insurers, bondholders, and local communities. Startups are crossing into quasi-public infrastructure, where reliability, safety, and disclosure move from nice-to-have to non-negotiable.

If you want the larger context for how compute now couples to power, see our piece on why the grid is now the platform. What changed in these two weeks is that the coupling is no longer theoretical. It is contractual, political, and physical.

From apps to assets that sit on the grid

It used to be that a lab could scale by renting more cloud servers and posting a release note. That era is over for the frontier tier. Six gigawatts of compute means years of capacity planning, long lead times on transmission, water permitting for cooling, substation upgrades, and a supply chain that runs through chip foundries, high-voltage transformers, and gas turbines or large solar and storage.

At this scale, a model release is not just a product update. It is a grid event. It can shift power markets, shape land use, and rearrange municipal budgets. It touches state emergency services because outages or safety incidents can have cross-jurisdictional impact. That is why SB 53 routes critical incident reporting through the state’s Office of Emergency Services. That is why whistleblower protections are in scope. When software becomes infrastructure, the public will ask for the kinds of disclosures we demand from securities issuers and utilities.

We have written about the governance implications in our piece on conversational OS governance. The through line is simple. Capability without governance increases risk premiums. Governance with disclosure lowers them.

The case for model 10-Ks

The U.S. Securities and Exchange Commission requires public companies to file a Form 10-K that lays out material risks, controls, and performance. Frontier AI needs an equivalent for models. Call it the model 10-K. Not glossy blog posts and retrospective narratives, but standardized and auditable filings that let outsiders verify what is claimed.

A model 10-K should include three pillars that map directly to the concerns of policymakers, financiers, and the public.

1) Pre- and post-mitigation evaluations

- Capability tests before deployment and again after mitigations are applied, with unchanged seeds and protocols so that results are comparable.

- Red team scopes and methodologies, including coverage for biohazard, cyber intrusion, persuasion, and autonomy risks.

- Measurable thresholds that gate graduation from research to limited release to broad release, recording who signed off on each stage and when.

2) Incident reporting with clocks and thresholds

- A definition of a critical safety incident, including example scenarios and severities, tied to service health and societal risk.

- A clock that starts when an incident is detected, with required updates at fixed intervals until closure.

- A record of corrective actions, rollbacks, or fuse triggers that were executed, with timestamps and responsible owners.

3) Whistleblower guarantees and escalation maps

- Named ombudspersons or independent board members who receive protected disclosures.

- A legal commitment not to retaliate, including a provision that lets whistleblowers choose their own external counsel.

- An escalation map that shows when issues are reported to state authorities under laws like SB 53.
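Pillar 2’s clock can be made concrete. The sketch below is a hypothetical `IncidentClock`, not drawn from any standard or statute: it starts at detection, expects an update every fixed interval until closure, and lists any deadline that passed without an update. The 24-hour cadence is an assumed value for illustration; SB 53’s actual reporting deadlines are not modeled here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class IncidentClock:
    """Hypothetical incident clock: starts at detection, requires an update every interval until closure."""

    detected_at: datetime
    update_interval: timedelta                       # assumed fixed cadence, e.g. every 24 hours
    updates: list[datetime] = field(default_factory=list)
    closed_at: Optional[datetime] = None

    def post_update(self, at: datetime) -> None:
        self.updates.append(at)

    def close(self, at: datetime) -> None:
        self.closed_at = at

    def deadlines(self, now: datetime) -> list[datetime]:
        """Update deadlines that fell due before closure (or before `now` while still open)."""
        end = self.closed_at or now
        due, t = [], self.detected_at + self.update_interval
        while t <= end:
            due.append(t)
            t += self.update_interval
        return due

    def missed(self, now: datetime) -> list[datetime]:
        """Deadlines with no update posted in the interval leading up to them."""
        return [
            d for d in self.deadlines(now)
            if not any(d - self.update_interval < u <= d for u in self.updates)
        ]


# Usage: detected at 09:00, daily updates required, closed 60 hours later.
start = datetime(2025, 1, 1, 9, 0)
clock = IncidentClock(start, timedelta(hours=24))
clock.post_update(start + timedelta(hours=20))   # covers the 24-hour deadline
clock.close(start + timedelta(hours=60))
print(clock.missed(now=start + timedelta(hours=72)))  # the 48-hour update was never posted
```

Keeping the clock as data rather than a calendar reminder means the missed-deadline list can be published in the filing as-is.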

This is not about vibes. It is about audit trails and comparability. If two labs claim the same safety posture, their filings should make it easy for insurers, journalists, civil society, and regulators to test the claim. The filings should look similar enough to be useful and rigorous enough to matter.

Why this lowers risk premiums and speeds deployment

Big infrastructure gets built when the cost of capital falls and permits move on time. Transparent, standardized, and auditable disclosures do both.

- Lower cost of capital: Investors price risk. If you are asking a pension fund to finance substations, power purchase agreements, and multi-year chip purchases, that fund will want to see operational controls and safety telemetry with the same granularity as financial controls. A model 10-K reduces uncertainty, and less uncertainty lowers the interest rate. The same logic unlocked project finance for wind and solar after standard power purchase templates and resource assessments became common.

- Faster permitting: Land use boards, water districts, and public utility commissions are more likely to approve projects that arrive with clear risk registers, response plans, and validated mitigations. A filing that maps safety commitments to local impact makes hearings calmer and timelines shorter.

- Better insurance and reinsurance: Underwriters struggle to price emerging, correlated risks like model misuse or model-assisted cyber incidents. A consistent incident taxonomy and proven mitigations give them a baseline. Insurance capacity follows clarity.

- Smoother grid planning: Independent system operators plan years ahead. If they can see committed compute loads alongside contingency plans, they can sequence interconnections and upgrades with fewer surprises. That reduces curtailment, contractual penalties, and stranded assets.
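To see why a lower rate matters at this scale, here is a rough sketch using the standard level-payment (annuity) formula. The $1 billion principal, 20-year term, and 8% versus 7% rates are hypothetical round numbers, not terms from any actual AI infrastructure financing.

```python
def annual_payment(principal: float, rate: float, years: int) -> float:
    """Level annual payment that fully repays `principal` at interest `rate` over `years`."""
    if rate == 0:
        return principal / years
    return principal * rate / (1 - (1 + rate) ** -years)


# Hypothetical project: $1 billion borrowed over 20 years, priced at 8% vs 7%.
principal, years = 1_000_000_000, 20
for rate in (0.08, 0.07):
    payment = annual_payment(principal, rate, years)
    total_interest = payment * years - principal
    print(f"{rate:.0%}: ${payment / 1e6:,.1f}M per year, ${total_interest / 1e6:,.0f}M total interest")
```

At these assumed inputs, one percentage point works out to roughly $7.5 million a year, or about $150 million over the term. Real project finance adds fees, reserves, and tranches that this sketch ignores.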

Counterintuitively, stricter disclosures often accelerate rollout. The result is not fewer projects, but better ones that clear the bar and attract cheaper financing. The reward for clarity is speed.

For labs building assistants that sit between users and everything else, transparency also compounds distribution. We argued in our piece on how assistants become native gateways that trust is the key to persistent placement. Model 10-Ks are a trust technology.

The coming interoperability layer

States will not build the same rules at the same time. Private certifications will emerge faster than statutes. The market will need an interoperability layer that sits between mandates like SB 53 and independent certification regimes.

Here is what that layer should include.

- A common taxonomy, similar in spirit to the eXtensible Business Reporting Language (XBRL) used in finance. Call it Safety Reporting Language. It would define labels for capability tests, incident severities, mitigations, and governance responsibilities. Each filing would be a signed package of structured data plus a human-readable summary.

- A schema registry that is public and versioned. When a lab adds a new hazard class or a new mitigation technique, the registry gains a code that others can reuse. This prevents each company from inventing its own names for the same risks.

- Mutual recognition rules. If a lab earns a certification from an accredited private auditor for a defined capability threshold and mitigation set, a state portal can ingest that certificate and mark the corresponding sections of the filing as satisfied. The rest can be filled by the lab or verified by a state reviewer.

- Hooks for emergency reporting. The same pipeline that publishes annual filings should publish rapid updates when incidents occur. That lets state emergency services receive machine-readable alerts while the public gets a clear human-readable summary.
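A minimal sketch of the registry and signed package described above, assuming a flat code namespace. `SchemaRegistry`, `package`, and codes such as `HAZ.CYBER.INTRUSION` are hypothetical names, not part of any existing Safety Reporting Language or XBRL tooling. The registry is append-only and versioned so a code never changes meaning, and the package canonicalizes the JSON before hashing so identical filings always produce identical digests.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field


@dataclass
class SchemaRegistry:
    """Hypothetical public, versioned registry of Safety Reporting Language codes."""

    version: int = 0
    codes: dict = field(default_factory=dict)  # code -> human-readable label

    def register(self, code: str, label: str) -> int:
        # Append-only: a published code keeps its meaning, so every filing can reuse it.
        if code in self.codes:
            raise ValueError(f"{code} already means {self.codes[code]!r}")
        self.codes[code] = label
        self.version += 1
        return self.version

    def unknown_codes(self, filing: dict) -> list:
        """Codes a filing uses that the registry does not (yet) define."""
        used = {item["code"] for items in filing["sections"].values() for item in items}
        return sorted(used - set(self.codes))


def package(filing: dict, registry_version: int, key: bytes) -> dict:
    """Canonicalize the filing, hash it, and attach an authentication tag.

    HMAC-SHA256 is only a stand-in: a real scheme would use a public-key signature
    so that anyone can verify the package without holding the lab's key."""
    body = json.dumps(filing, sort_keys=True, separators=(",", ":")).encode()
    return {
        "registry_version": registry_version,
        "sha256": hashlib.sha256(body).hexdigest(),
        "tag": hmac.new(key, body, hashlib.sha256).hexdigest(),
        "filing": filing,
    }


registry = SchemaRegistry()
registry.register("HAZ.CYBER.INTRUSION", "Assistance with cyber intrusion")
version = registry.register("MIT.TOOL.PERMISSION", "Tool permission layer")
filing = {
    "model": "G-4",
    "sections": {
        "hazards": [{"code": "HAZ.CYBER.INTRUSION"}],
        "mitigations": [{"code": "MIT.TOOL.PERMISSION"}, {"code": "MIT.NOVEL.FILTER"}],
    },
}
print(registry.unknown_codes(filing))  # MIT.NOVEL.FILTER must be registered before filing
print(package(filing, version, key=b"demo-key-not-for-production")["sha256"])
```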

Over time, this will look like the way building codes and private inspectors interact. The state sets the end conditions. Private inspectors grade compliance against common checklists. Paper flows through compatible pipes.

What builders should do now

Treat safety as product telemetry

- Instrument the model and runtime services so that the signals you need for pre- and post-mitigation evaluations are collected by default. Examples include jailbreak detection rates, tool invocation patterns for autonomous agents, model self-ratings of uncertainty, and human override frequency.

- Store safety telemetry beside service reliability metrics with the same privacy controls and retention policies. If your service has error budgets, your model should have hazard budgets with clear thresholds and escalation rules.
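The error-budget analogy can be sketched directly. This hypothetical `HazardBudget` assumes the budget is expressed as a daily rate of high-severity violations per million requests, and that the fuse trips after three consecutive over-budget days, mirroring the G-4 example later in this piece. The thresholds and the reopen rule are illustrative, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class HazardBudget:
    """Hypothetical hazard budget: an error budget for high-severity policy violations."""

    max_per_million: float       # allowed high-severity violations per 1M requests (assumed daily rate)
    trip_after_days: int = 3     # consecutive over-budget days before the fuse trips
    streak: int = 0
    fuse_tripped: bool = False
    reopened_by: list = field(default_factory=list)

    def record_day(self, requests: int, violations: int) -> bool:
        """Feed one day's counts; returns True while the fuse is tripped."""
        rate = violations / requests * 1_000_000 if requests else 0.0
        self.streak = self.streak + 1 if rate > self.max_per_million else 0
        if self.streak >= self.trip_after_days:
            self.fuse_tripped = True  # in practice: restrict high-risk tools and page the owner
        return self.fuse_tripped

    def reopen(self, signer: str) -> None:
        """Only a named, accountable person can reset a tripped fuse."""
        if not signer.strip():
            raise ValueError("reopening requires a named signer")
        self.reopened_by.append(signer)
        self.fuse_tripped = False
        self.streak = 0


budget = HazardBudget(max_per_million=5.0)
daily = [(2_000_000, 12), (1_000_000, 9), (1_000_000, 7), (1_000_000, 8)]  # made-up counts
for requests, violations in daily:
    print(budget.record_day(requests, violations))
```

Once tripped, the fuse stays tripped even on a good day; only `reopen` with a named signer clears it, which is the escalation rule the text asks for.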

Design for disclosure by default

- As you build, preserve the seeds, prompts, test harnesses, and patches that will populate your model 10-K. The first filing will be the hardest. The second will be a diff.

- Write your incident taxonomy and response runbooks now. Publish them once legal reviews are complete. If you worry that describing a risk invites attention, remember that regulators will ask anyway, and investors will reward clarity.
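If each filing is stored as structured data, "the second will be a diff" becomes literal. A minimal sketch, assuming filings are nested dictionaries (lists are treated as single values); the field names and numbers are hypothetical.

```python
def flatten(d: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into dotted paths: {"evals": {"cyber": 2}} -> {"evals.cyber": 2}."""
    out = {}
    for key, value in d.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            out.update(flatten(value, path))
        else:
            out[path] = value
    return out


def diff_filings(old: dict, new: dict) -> dict:
    """Fields added, removed, or changed between two structured filings."""
    a, b = flatten(old), flatten(new)
    return {
        "added": {k: b[k] for k in sorted(b.keys() - a.keys())},
        "removed": {k: a[k] for k in sorted(a.keys() - b.keys())},
        "changed": {k: (a[k], b[k]) for k in sorted(a.keys() & b.keys()) if a[k] != b[k]},
    }


# Two hypothetical annual filings for the same model line.
first = {"harness": {"version": "1.0", "seed": 1234}, "evals": {"cyber": {"trials": 50, "failures": 2}}}
second = {
    "harness": {"version": "1.1", "seed": 1234},
    "evals": {"cyber": {"trials": 50, "failures": 1}, "bio": {"trials": 40, "failures": 0}},
}
print(diff_filings(first, second))
```

An unchanged seed drops out of the diff entirely, so reviewers see only what actually moved between filings.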

Compete on clarity of governance, not just capability

- Publish a single page that names who signs off at each gate from research to limited release to broad release. Include the independent directors or advisors involved in hard calls.

- Offer protected channels for internal and external whistleblowing with guaranteed response clocks. Put the details in your careers and trust pages, not only in policy PDFs.

- Commit to a red team diary. List what you tested, what failed, and what you changed before launch. Do not wait for an incident to show that you learn.
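The single sign-off page can be generated from a gate log rather than written by hand. This sketch assumes the three stages named above and a hypothetical set of required roles per stage; `GateLog`, `Signoff`, and the role names are illustrative, not drawn from any lab's actual process.

```python
from dataclasses import dataclass, field
from datetime import date

STAGES = ["research", "limited_release", "broad_release"]


@dataclass
class Signoff:
    name: str
    role: str
    signed_on: date


@dataclass
class GateLog:
    """Hypothetical public record of who approved entry into each release stage, and when."""

    required_roles: dict                          # stage -> set of roles that must sign to enter it
    signoffs: dict = field(default_factory=dict)  # stage -> list of Signoff
    current: str = "research"

    def sign(self, stage: str, signoff: Signoff) -> None:
        self.signoffs.setdefault(stage, []).append(signoff)

    def advance(self) -> str:
        """Move to the next stage only if every required role has signed for it."""
        position = STAGES.index(self.current)
        if position == len(STAGES) - 1:
            raise ValueError("already at broad release")
        nxt = STAGES[position + 1]
        signed_roles = {s.role for s in self.signoffs.get(nxt, [])}
        missing = self.required_roles.get(nxt, set()) - signed_roles
        if missing:
            raise PermissionError(f"cannot enter {nxt}: missing {sorted(missing)}")
        self.current = nxt
        return nxt

    def public_page(self) -> list:
        """One line per signature, in stage order, ready to publish."""
        return [
            f"{stage}: {s.name} ({s.role}) on {s.signed_on.isoformat()}"
            for stage in STAGES
            for s in self.signoffs.get(stage, [])
        ]


log = GateLog(required_roles={
    "limited_release": {"research lead", "chief risk officer"},
    "broad_release": {"safety committee chair"},
})
log.sign("limited_release", Signoff("A. Example", "research lead", date(2025, 1, 10)))
log.sign("limited_release", Signoff("B. Example", "chief risk officer", date(2025, 1, 11)))
log.advance()
print("\n".join(log.public_page()))
```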

A concrete example

Imagine a lab preparing to launch a new general model with tool use and semi-autonomous workflows. Call it G-4. The lab publishes its model 10-K two months before broad release.

- Scope and capabilities: The filing describes the training data policy, tool access rules, and the known capability envelope. It states that G-4 can compose shell commands, retrieve structured data from internal systems, and plan multi-step tasks. It cannot run arbitrary code without a human approving the execution plan.

- Pre- and post-mitigation evaluations: The red team designed tests for cyber intrusion, biohazard assistance, and financial fraud. For cyber, they used a suite of fifty prompts designed to elicit credential theft playbooks. Before mitigations, the model produced sensitive content in nine of fifty trials. After adding a tool permission layer, rate limits, and targeted guardrails, that dropped to two of fifty. Seeds, prompts, and tool policies are published, so the difference is verifiable.

- Hazard budgets and fuses: The lab sets a monthly budget for high severity policy violations per one million requests. If the budget is exceeded for three consecutive days, the fuse triggers. The fuse restricts access to high risk tools, and a responsible executive must sign to reopen. This is shown in the filing as a flow chart and a set of thresholds.

- Incident reporting: The lab defines a critical safety incident as any event in which the model contributes to a security breach that exposes customer data or elevates a user’s privileges without authorization. The filing lists who gets paged, the time to first public update, and the escalation clock to notify state emergency services under SB 53.

- Whistleblower guarantees: The filing names two independent directors and one outside counsel who receive protected disclosures. Employees can select outside counsel to represent them at the company’s expense for any safety-related complaint. The guarantees are written to survive employment termination.

- Governance and signoffs: The research lead and the chief risk officer sign the pre-mitigation report. One of the independent directors chairs a safety committee that signs the release gate after post-mitigation tests pass. Each signature carries a real name and date.

- Public and machine-readable pack: The human-readable summary appears on the company site. The machine-readable pack includes the test schemas, results, and hashes of the exact code that enforced safety policies at launch.
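The pre- and post-mitigation numbers in this example (nine of fifty before, two of fifty after) and the hashed policy code can be bundled as one machine-readable pack. This is a sketch under assumptions: the field names, the `tool_permissions.py` file name, and its contents are placeholders, and SHA-256 is chosen for illustration because the example does not name a hash function.

```python
import hashlib
import json


def build_pack(evals: dict, policy_sources: dict) -> dict:
    """Machine-readable pack: pre/post-mitigation results plus SHA-256 hashes of the
    exact policy code live at launch (the hashes are published, not necessarily the code)."""
    results = {
        suite: {**r, "pre_rate": r["pre"] / r["trials"], "post_rate": r["post"] / r["trials"]}
        for suite, r in evals.items()
    }
    hashes = {name: hashlib.sha256(src.encode()).hexdigest() for name, src in policy_sources.items()}
    return {"results": results, "policy_sha256": hashes}


def matches(pack: dict, name: str, source: str) -> bool:
    """Anyone later shown the policy source can check it is the code that was hashed."""
    return pack["policy_sha256"].get(name) == hashlib.sha256(source.encode()).hexdigest()


# Figures from the G-4 example; the file name and policy text are placeholders.
policy = {"tool_permissions.py": "ALLOW_WITHOUT_APPROVAL = {'search', 'read_docs'}\n"}
pack = build_pack({"cyber_credential_theft": {"trials": 50, "pre": 9, "post": 2}}, policy)
print(json.dumps(pack, indent=2, sort_keys=True))
```

Publishing the hash rather than the source lets a lab keep enforcement code private while still letting an auditor prove, after the fact, which version was live.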

If an incident occurs one week after launch, the same pipeline publishes a public incident page within hours and sends a machine-readable alert to the state portal. Insurers and major customers subscribe to those feeds. Trust is earned in hours, not months.
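The "same pipeline, many feeds" idea is a small publish-subscribe pattern. A minimal sketch with hypothetical names: `IncidentBus`, `Incident`, the feed names, and the JSON alert shape are all assumptions, not a state portal's real interface. Each feed is isolated so one failing subscriber cannot block the others.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime
from typing import Callable, Dict, List, Tuple


@dataclass
class Incident:
    incident_id: str
    severity: str
    summary: str
    detected_at: datetime


class IncidentBus:
    """Hypothetical router: one incident record fans out to every subscribed feed."""

    def __init__(self) -> None:
        self.subscribers: List[Tuple[str, Callable[[Incident], None]]] = []

    def subscribe(self, name: str, handler: Callable[[Incident], None]) -> None:
        self.subscribers.append((name, handler))

    def publish(self, incident: Incident) -> Dict[str, str]:
        status = {}
        for name, handler in self.subscribers:
            try:
                handler(incident)
                status[name] = "delivered"
            except Exception as exc:  # a failing feed must not block the others
                status[name] = f"failed: {exc}"
        return status


public_page, state_portal = [], []
bus = IncidentBus()
# Human-readable summary for the public incident page.
bus.subscribe("public_page", lambda i: public_page.append(f"[{i.severity}] {i.summary}"))
# Machine-readable alert for a state portal (the JSON shape is an assumption).
bus.subscribe("state_portal", lambda i: state_portal.append(json.dumps(asdict(i), default=str)))

status = bus.publish(Incident("INC-0001", "critical", "Unauthorized privilege escalation via tool call",
                              datetime(2025, 1, 8, 14, 30)))
print(status, public_page, state_portal, sep="\n")
```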

Addressing the hard objections

What about trade secrets

A model 10-K describes controls and results, not the recipe for your weights. Companies already disclose financially material risks without giving away pricing models or customer lists. The same patterns work here. Tests can be public while sensitive parameters remain confidential with auditors.

What about security risk

Some details should stay private. That is why filings can include confidential annexes for verified auditors and regulators. The public version can still show the fact pattern and the mitigation effectiveness without revealing exploit paths.
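One way to tie a public filing to a confidential annex is a salted hash commitment, a technique swapped in here rather than taken from the text. The public filing carries only a digest, and an auditor who later receives the annex and salt can confirm it is the document the lab committed to. This is a sketch: it proves the annex was not swapped after filing, not that its contents are accurate.

```python
import hashlib
import hmac
import secrets


def commit(annex: bytes) -> tuple:
    """Return (public digest, private salt). The digest goes in the public filing;
    the annex and salt go only to auditors. The random salt keeps outsiders from
    confirming guesses about the annex by hashing candidate texts themselves."""
    salt = secrets.token_bytes(32)
    return hashlib.sha256(salt + annex).hexdigest(), salt


def auditor_verify(published_digest: str, annex: bytes, salt: bytes) -> bool:
    """True only if the annex an auditor received is the one committed to in public."""
    recomputed = hashlib.sha256(salt + annex).hexdigest()
    return hmac.compare_digest(recomputed, published_digest)


annex = b"Confidential annex: exploit paths observed during red teaming (placeholder text)"
digest, salt = commit(annex)
print("public filing carries:", digest)
print("auditor check passes:", auditor_verify(digest, annex, salt))
```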

What about overlapping jurisdictions

The interoperability layer handles this. A lab can file once, attach accredited certificates, and map sections to different state codes. Over time, industry groups will settle on shared schemas. Early movers can shape them.
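"File once, map to many codes" amounts to a crosswalk table plus a certificate lookup, along the lines of the mutual recognition rules described earlier. In this sketch the jurisdictions, requirement codes, and certificate ID are invented placeholders, not real state provisions. A section counts as satisfied by certificate when an accredited auditor attests to it, and otherwise as filed but pending state review.

```python
def coverage(filed_sections: set, certificates: dict, crosswalk: dict) -> dict:
    """Map one filing onto several jurisdictions' requirements.

    crosswalk:    jurisdiction -> {required_code: filing_section}
    certificates: certificate_id -> set of filing sections an accredited auditor attests to
    """
    certified = set().union(*certificates.values())
    report = {}
    for jurisdiction, required in crosswalk.items():
        report[jurisdiction] = {
            code: "satisfied by certificate" if section in certified
            else "filed, pending review" if section in filed_sections
            else "missing"
            for code, section in required.items()
        }
    return report


# Jurisdiction names, requirement codes, and the certificate ID are all made up.
crosswalk = {
    "StateA": {"A-1": "incident_reporting", "A-2": "whistleblower"},
    "StateB": {"B-7": "evaluations", "B-9": "incident_reporting"},
}
report = coverage(
    filed_sections={"incident_reporting", "whistleblower"},
    certificates={"CERT-0001": {"evaluations"}},
    crosswalk=crosswalk,
)
for jurisdiction, status in report.items():
    print(jurisdiction, status)
```

The filing itself is written once; only the crosswalk grows as new jurisdictions adopt rules, which is where shared schemas would save the most effort.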

What about smaller companies

A template filing lowers the cost of doing the right work. Small teams can adopt off the shelf test harnesses and incident taxonomies. Cloud platforms can bundle compliant telemetry pipelines. Investors can fund audits as part of seed rounds.

The new social license

For the past decade, software companies assumed a social license to operate if they followed platform rules and kept outages to a minimum. Frontier AI changes the calculus. With gigawatts on order and half-gigawatt sites on the table, the license now depends on treating safety as a first-class system. It will not be granted by hope or branding. It will be earned by filings that outsiders can trust.

California’s SB 53 is a floor, not a ceiling. The AMD and OpenAI agreement shows the scale of the buildout. The Argentina project shows how global the footprint will be. The through line is simple. As models become infrastructure, the public will demand the same discipline that built power plants, bridges, and airlines into reliable systems.

Standardized, auditable model 10-Ks are the next step. They do not slow the future. They let us build it faster, cheaper, and with clearer eyes. The labs that lean in will see their risk premiums fall, their permits move, and their partnerships deepen.

The rest of us get a better map of what is coming and how it will be kept safe. That is a fair trade for the power we are about to plug into our lives.