The Consent Layer Is Coming: When AI Learns to Ask First

Generative media is moving from scrape and release to negotiate and remix. This playbook shows how a consent layer works, why it will win, and how to ship it now with provenance, policy, and payouts built in.

The week AI started to ask

For years, generative systems behaved like universal samplers. They learned from whatever was reachable and released output with little ceremony. Reach was maximized, coordination was minimized, and the world got a crash course in the upside and downside of infinite remix. Then something changed. Prominent product launches began to spotlight visible watermarks, consent prompts, and provenance. OpenAI’s recent framing around Sora emphasized safety systems, including watermarking and provenance, which signaled a wider turn toward permission aware generation. You can see that emphasis in the OpenAI Sora launch notes.

The message is simple. As models move from demos to distribution, consent becomes part of the compute path. Not a press release, not a takedown queue, but a real time check that sits between a prompt and a render.

From scrape and release to negotiate and remix

The first era of generative media optimized for frictionless creation. It produced cultural breakthroughs and meaningful harm. Creators watched their styles and characters diffuse without credit or compensation. Public figures encountered deepfakes that eroded trust. Platforms wrestled with a moderation burden that scaled faster than their tools.

A more durable pattern is now emerging. Instead of assuming the right to generate, systems are beginning to ask. Instead of retroactive objections and emergency triage, they are moving toward preflight checks that codify permission, price, and terms. Remix is not going away. It is getting rules that make it safer to say yes.

If you have followed our coverage of provenance and signatures, you know this shift has been building for a while. We have argued that the web will favor assets that can be verified, not just viewed. For a deeper dive on that foundation, see why content credentials win the web.

Consent as a computational primitive

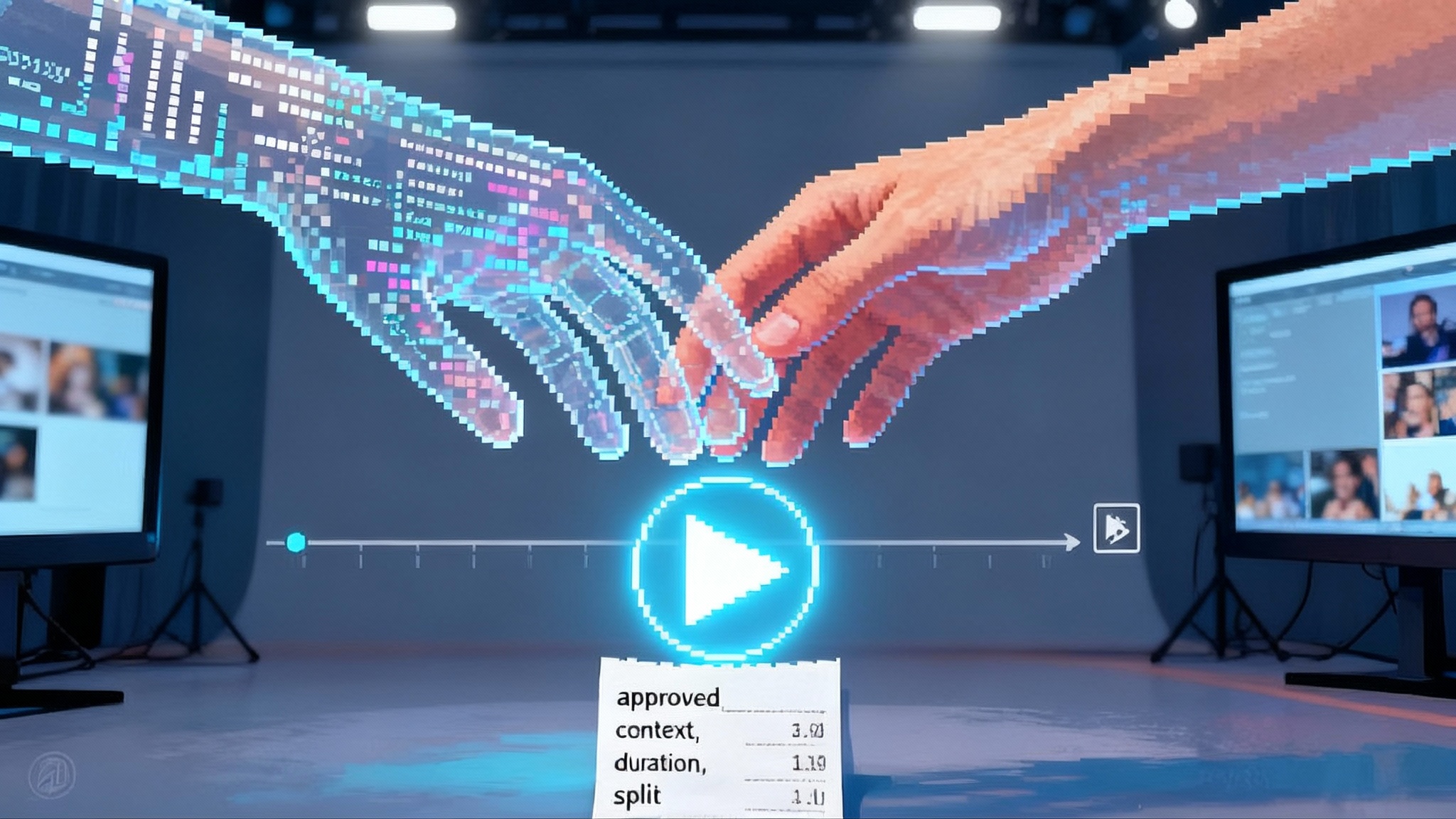

Payments taught the internet a muscle memory. Before a store ships your order, a network asks whether the card is real, the funds are available, and the transaction fits the rules. Media generation can copy that muscle memory. Before a system renders a frame or a voice line, a consent layer asks whether the subject or rights holder allows it, under which conditions, and at what price. The answer becomes a signed receipt that travels with the media.

Treat consent as a first class component, alongside identity, storage, and billing. That mental model unlocks design choices that make permission checks feel natural rather than punitive.

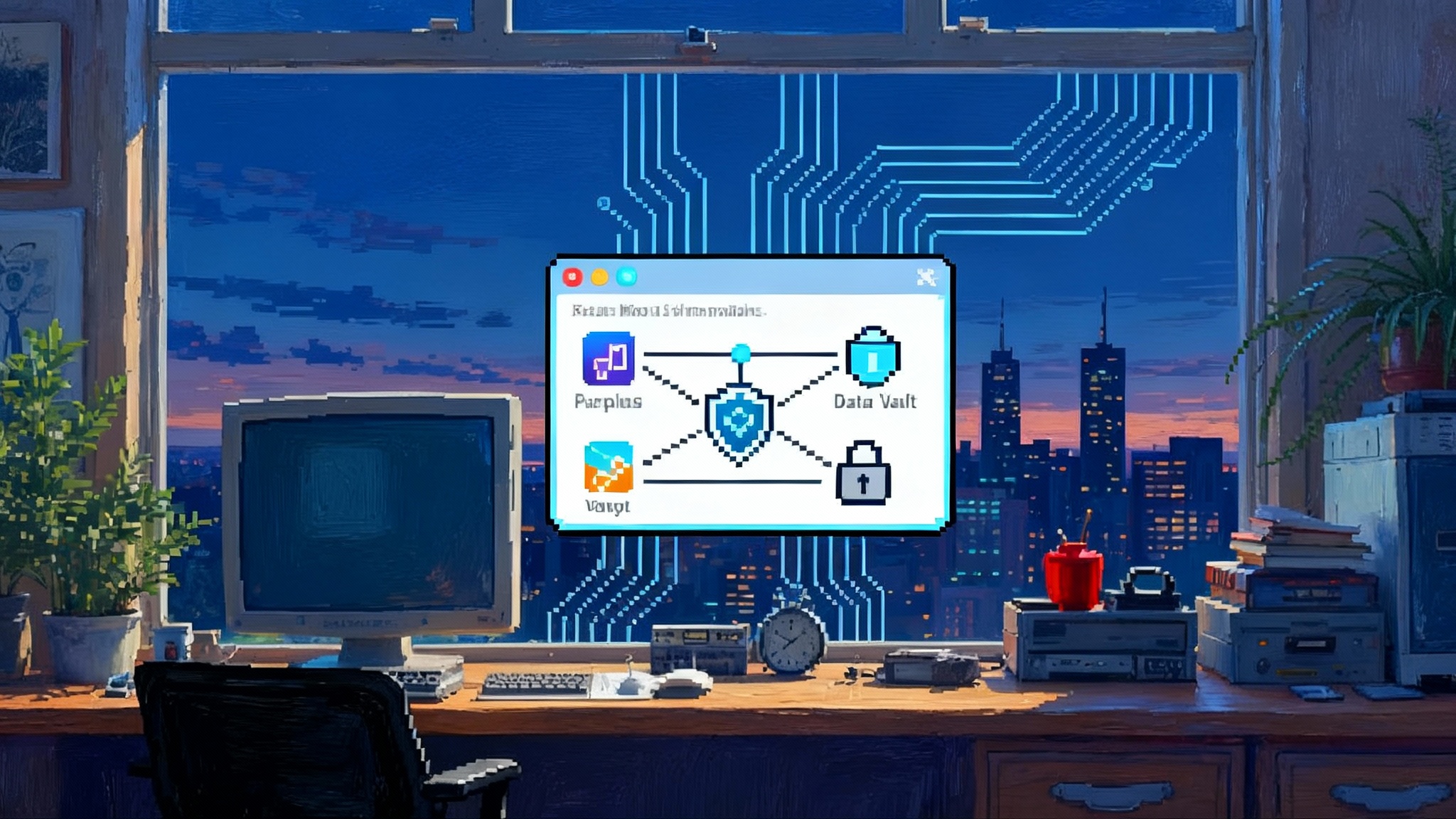

What a consent layer actually does

- Discover assets that might be implicated by a request, such as a name, voice, character, brand, or registered style.

- Evaluate policies defined by rights holders and by the platform, including context filters, distribution limits, and revenue splits.

- Quote terms and price in real time, then return a signed approval or a reasoned denial.

- Sign the output with content credentials so that downstream systems can verify provenance. For the technical standard behind this, learn more about C2PA content credentials.

- Settle microtransactions on a schedule that balances precision and fees, and produce an auditable trail.

Why the shift is happening now

Three forces are converging.

- Law and norms are hardening. Governments have sharpened rules around deepfakes, impersonation, and election integrity. Even where statutes are still evolving, the social expectation is shifting toward explicit permission for lifelike likeness and voice.

- Platforms are adapting. High profile releases have shown how quickly a celebratory demo can be overshadowed by questions about rights and consent. Product teams are learning that capability alone does not earn trust. Visible watermarking and provenance are becoming table stakes. Consent prompts are moving from optional to default.

- Customers are operationalizing. When agents run support threads, when designers test hundreds of visual variants, when marketers mix licensed music with generated voice, leaders want provenance, permission, and predictable cost. That means the consent check has to sit in the hot path, not in a legal inbox.

If you are building agents that execute across browsers, devices, and apps, you will recognize the same pattern we explored in our piece on what happens when browser native agents arrive. Autonomy requires constraints, and constraints require policy that machines can read.

The stack for a minimum viable consent layer

You do not need a global standard to start. You can ship a credible version in weeks if you scope it and iterate.

1) Rights and identity registry

- Maintain a directory that maps people and organizations to assets they control. Start with names, images, voices, branded marks, and fictional characters. Offer an optional field for style registration for artists who want to participate.

- Store verification keys and policy snippets. Policies should be human readable and machine enforceable. Defaults might include context filters such as no political uses, time bound revocation windows, or noncommercial allowances for fan creations.

- Provide a simple claims workflow. Evidence can be links to official sites, trademark registrations, or cryptographic proofs. Create an appeals process for disputed claims.
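A registry along these lines can start as a small in-memory structure. The sketch below is illustrative only: the class names, fields, and the naive substring matching are assumptions made for this example, not a reference design, and a real system would need stronger detection and a persistent store.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Policy:
    # Hypothetical policy fields; real policies would be richer.
    blocked_contexts: set = field(default_factory=set)  # e.g. {"political"}
    noncommercial_allowed: bool = True
    commercial_price_cents: Optional[int] = None  # None: commercial use not offered


@dataclass
class RegistryEntry:
    asset_id: str
    kind: str        # "name", "voice", "character", "mark", or "style"
    owner: str
    aliases: set     # strings that may appear in a prompt
    policy: Policy
    verified: bool = False  # set once a claim has passed review


class Registry:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        """Record a claim. It stays unverified until reviewed."""
        self._entries[entry.asset_id] = entry

    def verify(self, asset_id):
        """Mark a claim as verified, e.g. after evidence has been checked."""
        self._entries[asset_id].verified = True

    def lookup(self, text):
        """Return verified entries whose alias appears in the text.

        Naive case-insensitive substring match, used here only as a
        placeholder for real reference detection.
        """
        lowered = text.lower()
        return [
            entry for entry in self._entries.values()
            if entry.verified
            and any(alias.lower() in lowered for alias in entry.aliases)
        ]
```

Keeping unverified claims out of `lookup` means a disputed or fraudulent registration cannot block or price anyone's request until it has been reviewed.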

2) Policy and price engine at inference

- Parse the user’s request into structured semantics. Detect potential references or matches, and compare against the registry.

- Evaluate three sets of rules: rights holder policy, platform policy, and applicable law. Combine them to produce an approve, deny, or require edits decision.

- On approve, return a machine readable receipt that encodes duration, distribution channels, monetization limits, and revenue splits between creator, platform, and requester. Cache frequent approvals to keep latency low.
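One way to combine the three rule sets is to let the most restrictive answer win. That combination rule is an assumption of this sketch, as are the request fields, the placeholder region code, and the individual rules themselves, which stand in for real rights holder, platform, and legal logic.

```python
from enum import IntEnum


class Decision(IntEnum):
    # Ordered so that a larger value is more restrictive.
    APPROVE = 0
    REQUIRE_EDITS = 1
    DENY = 2


# Placeholder region code, purely illustrative.
RESTRICTED_REGIONS = {"XX"}


def rights_holder_rule(request):
    policy = request["asset_policy"]
    if request["context"] in policy["blocked_contexts"]:
        return Decision.DENY
    if request["commercial"] and not policy["commercial_allowed"]:
        # The user could edit the request to noncommercial distribution.
        return Decision.REQUIRE_EDITS
    return Decision.APPROVE


def platform_rule(request):
    # Hypothetical platform policy: no real likeness without an opt in.
    if request["uses_real_likeness"] and not request["subject_opted_in"]:
        return Decision.DENY
    return Decision.APPROVE


def legal_rule(request):
    # Hypothetical stand-in for jurisdiction-specific requirements.
    if request["region"] in RESTRICTED_REGIONS and request["uses_real_likeness"]:
        return Decision.REQUIRE_EDITS
    return Decision.APPROVE


def evaluate(request, rules=(rights_holder_rule, platform_rule, legal_rule)):
    """Run every rule and return the most restrictive decision plus its sources."""
    results = [(rule.__name__, rule(request)) for rule in rules]
    decision = max(outcome for _, outcome in results)
    reasons = [
        name for name, outcome in results
        if outcome == decision and outcome != Decision.APPROVE
    ]
    return decision, reasons
```

Returning the names of the rules that produced the final decision gives the user interface enough to show a reasoned denial rather than a bare block.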

3) Built in provenance and audit

- Sign every output with credentials that bind inputs, policy, and model version to the artifact. This is where C2PA or equivalent schemes matter. Pair visible marks with cryptographic signatures so provenance does not hinge on a single signal: a screenshot or resave can drop embedded metadata, and a crop can remove a visible mark.

- Maintain a private audit trail that captures prompts, detections, policy versions, and receipts. Obfuscate personal data by default, and escalate retention only for contested cases.
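To show what binding inputs, policy, and model version to an artifact can look like, here is a minimal sketch that uses an HMAC from the Python standard library. This is a deliberate simplification and not C2PA: the manifest fields and the demo key are assumptions for illustration, and a production system would use asymmetric signatures through a real content credentials toolchain.

```python
import hashlib
import hmac
import json

# Demo key for illustration only. HMAC is symmetric, so anyone who can
# verify can also sign; third-party verification needs asymmetric keys.
SECRET_KEY = b"demo-key-not-for-production"


def build_manifest(artifact_bytes, prompt, policy_version, model_version, receipt_id):
    """Describe the artifact and the conditions under which it was made."""
    return {
        "artifact_sha256": hashlib.sha256(artifact_bytes).hexdigest(),
        # Store a hash of the prompt rather than the prompt itself,
        # so the manifest does not leak personal data.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "policy_version": policy_version,
        "model_version": model_version,
        "receipt_id": receipt_id,
    }


def sign_manifest(manifest, key=SECRET_KEY):
    """Sign a canonical JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def verify(artifact_bytes, manifest, signature, key=SECRET_KEY):
    """Check that the manifest is untampered and matches the artifact."""
    expected = sign_manifest(manifest, key)
    if not hmac.compare_digest(expected, signature):
        return False
    return manifest["artifact_sha256"] == hashlib.sha256(artifact_bytes).hexdigest()
```

Because the manifest includes a hash of the artifact, changing either the file or any recorded term causes verification to fail.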

4) Settlement and payouts

- Aggregate microcharges into daily or weekly payouts. Show clear splits and fees so creators can understand what they are being paid for.

- Surface line items to developers before render so they can swap or remove costly elements. Price transparency reduces surprise and teaches users what triggers a license.
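The aggregation step can be sketched in a few lines. The 70 percent creator share and the function name are assumptions chosen for the example; amounts are kept in integer cents and the split is expressed in basis points to avoid floating point drift.

```python
from collections import defaultdict


def settle(charges, creator_share_bps=7000):
    """Aggregate microcharges per creator, then split once per payout.

    charges: iterable of (creator_id, amount_cents) pairs.
    creator_share_bps: creator share in basis points (7000 = 70 percent),
    a hypothetical default for this sketch.
    """
    gross = defaultdict(int)
    for creator_id, amount_cents in charges:
        gross[creator_id] += amount_cents

    payouts = {}
    for creator_id, total in gross.items():
        creator_cents = total * creator_share_bps // 10_000
        payouts[creator_id] = {
            "gross_cents": total,
            "creator_cents": creator_cents,
            # The platform takes the remainder, so the parts always sum to gross.
            "platform_cents": total - creator_cents,
        }
    return payouts
```

Splitting after aggregation matters for very small charges. Three separate 1 cent charges split individually at 70 percent would each round down to zero for the creator, while the aggregated 3 cents yields 2 cents.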

A consent handshake in practice

Let us make this concrete with an example.

- A creator types: Two young detectives chase a cartoon marmot through a rainy neon market. Thirty seconds in the vibe of a Saturday morning show.

- The system detects a match to a registered character family and a guarded visual style. The rights owner allows fan use under 45 seconds for noncommercial sharing, disallows political contexts, and requires attribution.

- The engine returns an approval with zero price for noncommercial distribution, plus a note that certain catchphrases are trademarked. The attribution line is included in the receipt.

- The user accepts. The model renders the clip, signs it with credentials, and logs the receipt. If the user tries to boost the clip as an ad, the app blocks that channel because the receipt is noncommercial.

Swap the cartoon for a real person’s cameo, a fashion brand’s logo, or a music loop, and the same handshake works. The actors change. The pattern holds.
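The handshake above can be expressed as a quote step followed by a distribution check. In this sketch the `Terms` and `Receipt` shapes, the channel names, and the attribution string are hypothetical; the limits mirror the example (under 45 seconds, no political contexts, noncommercial, attribution required).

```python
from dataclasses import dataclass

# Hypothetical channel names that count as commercial distribution.
COMMERCIAL_CHANNELS = {"ad_boost"}


@dataclass(frozen=True)
class Terms:
    limit_seconds: int = 45  # fan use must be under this duration
    blocked_contexts: frozenset = frozenset({"political"})
    attribution: str = "Characters used with permission of the rights owner"


@dataclass(frozen=True)
class Receipt:
    approved: bool
    reason: str
    price_cents: int = 0
    commercial: bool = False
    attribution: str = ""


def quote(duration_seconds, context, terms):
    """Return an approval receipt or a reasoned denial for noncommercial fan use."""
    if context in terms.blocked_contexts:
        return Receipt(False, f"context '{context}' is not allowed")
    if duration_seconds >= terms.limit_seconds:
        return Receipt(False, f"clips must be under {terms.limit_seconds} seconds")
    return Receipt(True, "noncommercial fan use", price_cents=0,
                   commercial=False, attribution=terms.attribution)


def can_distribute(receipt, channel):
    """Gate each channel on what the receipt actually permits."""
    if not receipt.approved:
        return False
    if channel in COMMERCIAL_CHANNELS:
        return receipt.commercial
    return True
```

For the marmot clip, `quote(30, "entertainment", Terms())` yields an approved receipt at zero price, and `can_distribute` then allows ordinary sharing while refusing the ad boost channel.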

What the consent layer unlocks

- Safer fan culture. Studios can welcome playful remix while blocking contexts they never want to see. Fans get a green zone with clear rules and receipts instead of guessing.

- Programmable IP. Characters, worlds, and marks become programmable objects with policy. A studio can express free for kids, paid for ads, embargoed during a theatrical window. Interactive experiences ship faster because legal intent is code.

- Agent to agent licensing. Your design agent can license a brand’s color system and logo for a mockup, then attach the receipt. Your research agent can subscribe to a data feed for a week and respect expiry automatically.

- Microtransactions for individuals. A podcaster licenses her voice for audiobooks with a political filter and a floor price per hour. A college athlete allows training use of his likeness in a specific game with a revenue share and a public opt out.

- Trust by default. Signed media earns distribution advantages in marketplaces that prefer verified assets. That maps to the larger transition we see as ChatGPT becomes the new OS and platforms prioritize safe composition.

Near term playbook for builders

You can start small and compound quickly.

- Add provenance everywhere. Sign outputs with a content credential and embed useful metadata. Assume visible marks will be stripped, so make sure you can still verify your own assets.

- Model a simple registry. Begin with your own graph of people, brands, and characters that frequently appear in your app. Give each an allow list, block list, and price card. Expose a dashboard where creators can claim entries and set preferences.

- Put policy in the hot path. Treat permission like rate limiting. On every generation request, run a check with prompt semantics and detected assets. Return a decision and a human readable receipt. Cache approvals and precompute common cases to keep latency low.

- Settle small, pay often. Aggregate pennies into weekly payouts. Use clear, simple splits that creators can understand at a glance. Err toward personal use by default and make commercial uses explicit.

- Offer granular controls for people. If you ship a cameo or likeness feature, give individuals the ability to set context filters, distribution limits, and a kill switch. Assume popularity attracts abuse, then design for the day after.

- Publish revocation rules. Be explicit about what happens if a creator changes their mind. Preserve existing receipts unless a term allows revocation. Predictability earns trust from both creators and builders.

- Capture the audit trail. Keep private logs that let you reassemble what happened without leaking personal data. Audit is the safety net that lets you fix mistakes quickly and prove your process when challenged.
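The advice to cache approvals while still honoring a kill switch can be sketched as a small time-limited cache. The key shape `(requester_id, asset_id, use)`, the default time to live, and the class name are assumptions for this example; the clock is injectable so the behavior can be tested without waiting.

```python
import time


class ApprovalCache:
    """Cache consent decisions for a short time to keep the hot path fast."""

    def __init__(self, ttl_seconds=300, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (decision, expires_at)

    def get(self, key):
        hit = self._store.get(key)
        if hit is None:
            return None
        decision, expires_at = hit
        if self._clock() >= expires_at:
            del self._store[key]  # expired: force a fresh policy check
            return None
        return decision

    def put(self, key, decision):
        self._store[key] = (decision, self._clock() + self._ttl)

    def invalidate_asset(self, asset_id):
        """Drop every cached decision for an asset, e.g. on a kill switch."""
        stale = [key for key in self._store if key[1] == asset_id]
        for key in stale:
            del self._store[key]


def check(cache, key, evaluate):
    """Return (decision, was_cached), calling evaluate only on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return cached, True
    decision = evaluate(key)
    cache.put(key, decision)
    return decision, False
```

A short time to live bounds how long a stale approval can linger, and `invalidate_asset` lets a revocation take effect immediately instead of waiting for expiry.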

The hard parts and how to navigate them

- False claims and identity games. Bad actors will try to register assets they do not own. Require evidence, add risk scoring, and route high impact claims to manual review. Penalize abuse with cooldowns or bans.

- Registry capture. Large rights owners might crowd out small creators. Mitigate with default protections, fair discovery, and caps on exclusivity terms in your marketplace. Treat creator onboarding as a product, not a policy page.

- Latency and cost. Every check feels like friction. Cache decisions, preapprove frequent pairs of requester and asset, and make the negotiation visible so users understand why a block or price appears.

- Global variation. Consent and publicity rights differ across jurisdictions. Treat location as a policy input and tailor receipts to distribution regions. Do not pretend there is a single global rule.

- Edge cases. Parody, criticism, and news reporting need special space. Use context tags and allow appeals so lawful expression is not erased by automated filters. When in doubt, route to human review and capture the decision for training.

- Model hints and prompt scaffolds. LLMs can hallucinate references. Guide them away from real people and protected marks by default, unless a receipt exists. Use a safety classifier that can veto renders even after an initial approval if the output drifts into restricted territory.

Design details that make or break the experience

- Make consent feel like part of creation. Do not bury the check behind a legal wall. Present it as a quick summary of terms with a clear accept or edit path. If a price appears, let users swap assets or tweak prompts to avoid a charge.

- Teach with previews. If a user’s prompt triggers a restricted context, show a side by side alternative. Helping the user get to yes is better than a cold denial.

- Celebrate attribution. When fan use is allowed, make the attribution visible and tasteful. Treat credits as a feature that fans are proud to include.

- Treat receipts as capabilities. A signed receipt can unlock features such as boosting, ad placement, or export to partner platforms. No receipt, no unlock.

- Measure the right metrics. Track consent resolution time, approval rate, appeal turnaround, and royalty payout time. These signals tell you if the layer is serving both creators and builders.
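Two of the metrics in the list above, consent resolution time and approval rate, fall straight out of the audit log. The event shape below (millisecond timestamps and a `decision` string) is an assumption for this sketch; appeal turnaround and payout time could be computed the same way from their own events.

```python
from statistics import median


def consent_metrics(events):
    """Summarize consent checks.

    events: list of dicts with 'requested_ms', 'resolved_ms', and
    'decision' ("approve", "deny", or "require_edits"). Hypothetical shape.
    """
    if not events:
        return {"median_resolution_ms": None, "approval_rate": None}
    durations = [event["resolved_ms"] - event["requested_ms"] for event in events]
    approvals = sum(1 for event in events if event["decision"] == "approve")
    return {
        "median_resolution_ms": median(durations),
        "approval_rate": approvals / len(events),
    }
```

The median is used rather than the mean so that a few slow manual reviews do not hide how the check feels for a typical request.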

A day in the consent layer

You sit down to make a short trailer for your indie game. Your prompt calls for a moody city shot, a synth riff, and a cameo from a voice actor who has opted in for independent projects. The app shows a quick summary: locations approved, music license at 10 dollars, voice cameo at 50 dollars with a nonpolitical clause, distribution limited to your channels for 12 months. You accept. The model renders. The credits populate automatically. The receipts sit behind a tiny shield icon.

Your fans remix the trailer. One uses your licensed riff. Another swaps the cameo for their own voice. The app keeps it all inside the rules because the rules traveled with the media. The culture feels playful, not predatory. Everyone knows where the lines are and how to cross them with permission.

Strategy for product leaders

- Set a north star that balances creativity and consent. Your goal is not maximal control or maximal freedom. It is a market where more people say yes, more often, because the risk is manageable and the rules are predictable.

- Sequence the rollout. Start with obvious protected assets such as living people and famous marks, then expand to characters and opt in styles. Communicate the roadmap so creators know when they can participate.

- Partner with early adopters. Recruit a handful of brands, studios, and independent creators to prove the loop. Feature them inside your app. Publish case studies that highlight payouts, approvals, and time saved.

- Build a trust desk. Treat high risk reports with a service level agreement. Route appeals to trained reviewers. When you change policy, publish a diff that explains what and why.

- Keep a public registry. Even a minimal directory of opted in assets helps the community see the green zone. Let creators link to their profiles and receipts. Public clarity reduces private confusion.

The bottom line

Consent is becoming native to the creative stack. Capability is accelerating, so the fabric around it is growing up. If you build with generative media, design for a negotiation before generation. Put policy in the loop, provenance in the file, and payouts in the background. You will protect people, invite rights holders to say yes, and unlock a faster, safer, more creative market.

The internet learned to pay at the point of action. Now it will learn to ask. Start small. Ship the checks. Instrument the path. The consent layer is not a feature. It is infrastructure that will separate the platforms people trust from the ones they do not.