Gigawatt Intelligence: The Grid Becomes AI’s Bottleneck

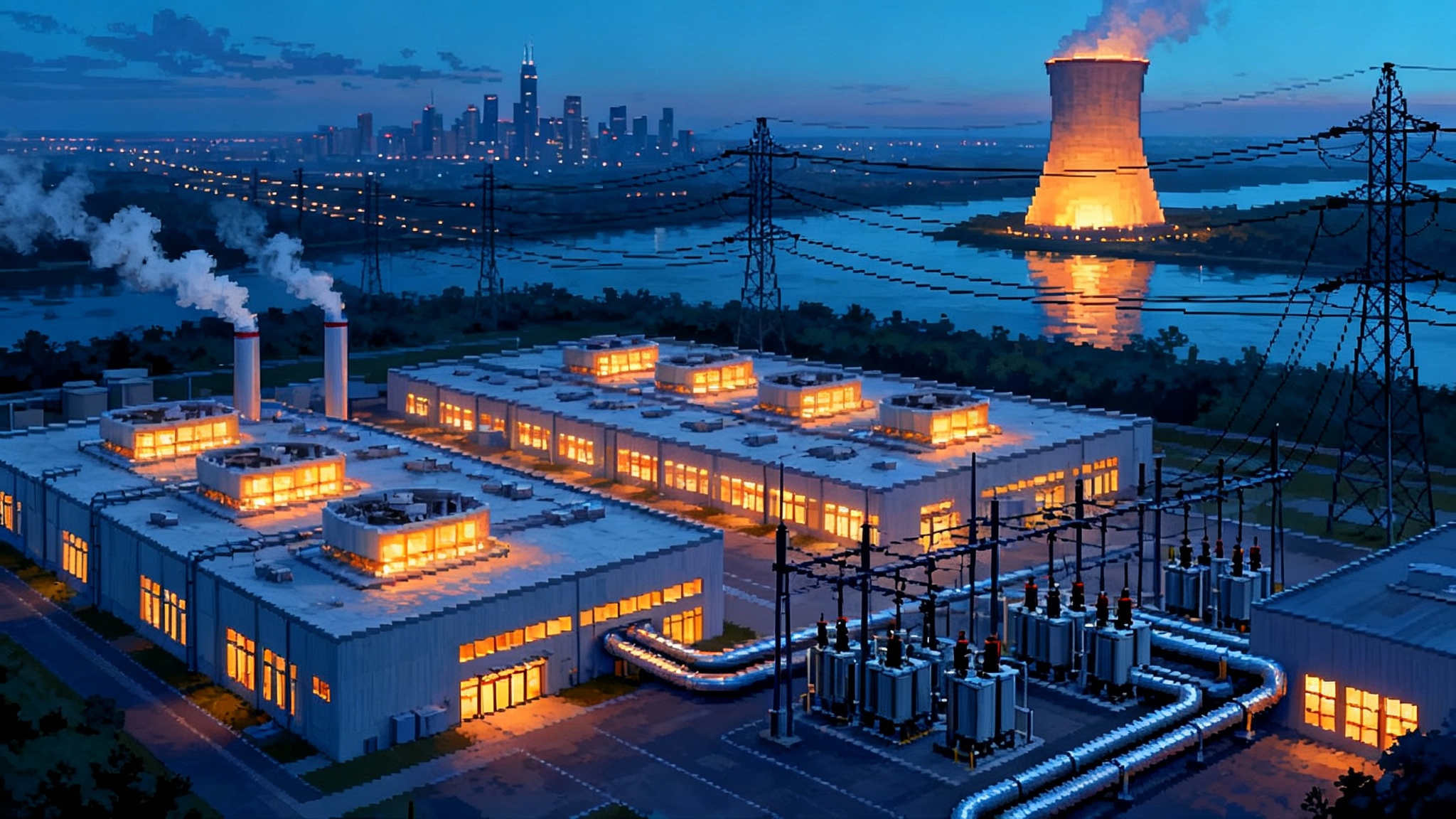

AI’s next leap is not blocked by chips. It is blocked by electricity. From a 6 gigawatt pact between AMD and OpenAI to nuclear restarts for data centers, the new race is for firm, clean megawatts and the interconnects to deliver them.

The week AI crossed the substation fence

On October 6, 2025, AMD and OpenAI announced a multi year partnership to deploy six gigawatts of GPU capacity, with the first one gigawatt slated for the second half of 2026. It is the kind of number utilities use for a power plant, not a cluster of chips. The announcement was explicit about power at the point of compute, which is why it reads less like a typical silicon deal and more like an energy procurement plan. See the AMD and OpenAI partnership announcement for the details on timing and scale.

This is not an isolated signal. On September 20, 2024, Microsoft signed a 20 year agreement with Constellation Energy to buy power from a restarted Three Mile Island Unit 1, rebranded as the Crane Clean Energy Center, targeting roughly 835 megawatts once the unit is back online. The deal ties data center demand to baseload supply on the PJM grid and was framed as a way to match usage with carbon free generation. Constellation’s release, launching the Crane Clean Energy Center, made one point plain: to grow AI, you must buy time on the grid, not just chips.

The shift in framing matters. For years, the frontier was bottlenecked by algorithms and then by accelerators. Today it is power. If intelligence at scale needs a steady river of electrons, then the strategic questions move beyond the board to the breaker, the pipe, and the permit.

The new limiting reagent is a firm, clean megawatt

You can bolt more racks into a hall, but you cannot conjure electrons on demand. A single state of the art training run can already draw tens of megawatts around the clock, which works out to hundreds of megawatt hours per day. Multiply that by continuous training, pretraining refreshes, specialized fine tuning, and an expanding inference footprint, and the curve bends from megawatts to gigawatts.
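The arithmetic behind that curve is simple enough to sketch. The snippet below converts a sustained power draw into energy per day and into gigawatt years. The function names and the sample loads are illustrative assumptions, not measurements of any real cluster.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def daily_energy_mwh(average_power_mw: float) -> float:
    """Energy used in one day at a constant average draw, in MWh."""
    return average_power_mw * HOURS_PER_DAY


def gigawatt_years(average_power_mw: float, days: float) -> float:
    """Energy expressed in gigawatt years (1 GW held for 365 days)."""
    return (average_power_mw / 1000.0) * (days / DAYS_PER_YEAR)


# Illustrative loads only (assumed, not measured).
SAMPLE_LOADS_MW = [("single hall", 1.0), ("large cluster", 25.0), ("gigawatt campus", 1000.0)]

if __name__ == "__main__":
    for label, mw in SAMPLE_LOADS_MW:
        print(f"{label}: {mw:g} MW -> {daily_energy_mwh(mw):,.0f} MWh per day")
```

The point of the conversion is that power (megawatts) is what a substation must deliver at every instant, while energy (megawatt hours) is what the contract bills. Planning needs both numbers.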

This is not only about more energy. It is about the right energy at the right moment in the right place. A gigawatt of wind at midnight in the wrong region does not help a hot zone that needs 100 megawatts by noon. Compute wants firm power that does not blink with clouds or calm winds. The largest buyers also want it clean because their climate pledges now bind procurement choices and customer expectations.

A practical definition of firm is boring but decisive. It is power you can schedule and count on for the duration of a training run or to meet the service levels of a product. When labs begin to speak in gigawatt years and contracted capacity rather than parameters or floating point operations, the center of effort moves. The architecture that matters is no longer only on the board. It is in the substation breaker, the cooling loop, the water permit, and the dispatch stack of a regional transmission operator.

If this sounds like capital expenditure strategy rather than research planning, that is the point. As we argued in our piece on when AI becomes capital, the moat is moving from code to concrete. Today the concrete includes transformers, transmission, and heat reuse networks.

The real governance layer: utility commissions and interconnect queues

If power is the limiting reagent, then the gatekeepers are not only research boards or platform safety teams. They are public utility commissions that approve rate changes and substation expansions, regional grid coordinators that control interconnection queues, and air and water regulators that determine what can be built and cooled.

Interconnection queues are long because each new large load must be studied for stability and reliability. A 100 megawatt campus is not a plug and play appliance. It is a node that can change flows and fault behavior on a grid whose topology was designed decades ago. Queue studies, transformer lead times, water permits, and local transmission upgrades can stretch timelines beyond the product cycles of models. The result is a governance layer by physics and by process. If your permit slips, your model release slips.

Power purchase agreements have become governance documents too. They encode duration, firming, emissions attributes, and curtailment rights. They can decide which loads keep running during a heat wave and which must scale back. In practice, a long term contract with the right scarcity clauses can preempt arguments about which model gets trained first. Grid rules arbitrate capability.

AI labs are becoming energy market actors

The Three Mile Island arrangement is not a one off. Large technology companies are anchoring nuclear restarts, paying for long lead transformers, underwriting new transmission lines, and securing geothermal baseload where geology allows it. Others are pairing gas with carbon capture to bridge the decade that wind, solar, storage, and new nuclear will need to catch up.

This is a philosophical turn. AI labs once behaved like software companies that purchased hardware on a schedule. They are becoming power developers with machine learning problems. The boundary is blurry because the moat is moving. A lab with exclusive access to clean firm power for the next ten years will out train a rival that has the better paper but an empty substation. Capacity becomes capability.

It also reshapes hiring. Teams that once prioritized compiler engineers now recruit grid interconnection specialists. Procurement offices learn how to hedge around seasonal hydro, not just memory prices. Finance teams price megawatt months as carefully as GPU hours. In that world, a model card without an energy plan reads like a budget without a revenue line.

Grid topology now shapes model capability

Where you place a data center used to be a real estate question. Now it is a topology question. Being inside the footprint of a transmission operator with spare capacity and stable rules is a performance feature. Being next to a nuclear plant that can provide heat reuse options can lower power usage effectiveness and cap cooling pain. Building in a region with winter peaking can give you summer headroom. Siting near a large industrial load that is willing to curtail during your big runs can create a private demand response market.

Topology also shapes the product. If your inference fleet rides a grid with volatile renewables, your serving stack must learn to follow supply. That is not only turning servers off. It is building models that degrade gracefully, cache likely prompts, or adopt precision modes without hurting user experience. The architecture of intelligence is becoming location aware.
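One way to picture a serving stack that follows supply is a tier selector that maps the power currently available to the best model configuration that fits. This is a minimal sketch: the tier names and their relative power figures are hypothetical placeholders, and a real system would measure them per fleet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServingTier:
    name: str
    relative_power: float  # fraction of full-fleet power this tier needs (assumed value)


# Ordered from highest quality to lowest. All figures are placeholders.
TIERS = [
    ServingTier("full_precision", 1.00),
    ServingTier("int8_quantized", 0.60),
    ServingTier("cache_first_small_model", 0.30),
]


def pick_tier(available_power_fraction: float) -> ServingTier:
    """Return the highest-quality tier that fits within the available power."""
    for tier in TIERS:
        if tier.relative_power <= available_power_fraction:
            return tier
    # Nothing fits: fall back to the cheapest tier rather than going dark.
    return TIERS[-1]
```

Calling `pick_tier(0.7)` under these assumed figures selects the quantized tier. The design choice is that the fleet always serves something, and quality gives way before availability does.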

Energy aware architectures are the next moat

Most of the industry talks about higher memory bandwidth and faster interconnects. The new edge is energy orchestration. It ties hardware, software, and grid conditions into one scheduling fabric.

- Heat reuse as a system primitive. Route the hottest clusters to campuses with district heating or industrial neighbors. Sell a megawatt hour twice. First as computation, then as process heat. This is not green fluff. It can add several percentage points of effective efficiency and can turn a local stakeholder into a partner who champions you through permitting.

- Locality sensitive inference. Ship models, quantization strategies, or sparse experts to where the grid is cleaner or cheaper that hour. Let user queries ride a carbon aware anycast. That demands model families that share weights and can swap tiers without cold starts. It also rewards models that speak silicon, since kernel level awareness reduces waste under tight thermal or power caps.

- Demand shaping agents. Treat power as an input stream your scheduler learns to predict and trade. Bid into demand response markets. Use tokens per joule as a first class metric in your placement planner. During a transmission constraint, let the planner shift non urgent batch inference to a campus with surplus hydro. The playbook looks a lot like the coordination we described for idle agents that work offscreen, except the clock is the grid, not the calendar.

- Training that respects outages and ramps. Design checkpointing and optimizer settings for planned dips. Build curriculum schedules that align long context window phases with nights or shoulder seasons. Use synthetic data generation when energy is abundant and schedule expensive human in the loop passes when it is scarce.

These patterns sound arcane. They are how you translate a queue position into a competitive advantage. They also require real engineering. The labs that treat energy as part of the model stack will keep pace.
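A placement planner of the kind described above can be sketched in a few lines. The version below picks, for a deferrable batch job, the campus with enough spare capacity and the lowest carbon per generated token. The `Campus` fields, the example values, and the scoring rule are all assumptions for illustration. A production planner would also weigh price, latency, and data residency.

```python
from dataclasses import dataclass
from typing import List, Optional

JOULES_PER_KWH = 3.6e6


@dataclass
class Campus:
    name: str
    spare_mw: float           # headroom available right now (hypothetical feed)
    carbon_g_per_kwh: float   # grid carbon intensity this hour (hypothetical feed)
    tokens_per_joule: float   # measured efficiency of the local fleet


def grams_co2_per_token(campus: Campus) -> float:
    """Carbon cost of one token: (g/kWh) divided by (tokens/kWh)."""
    tokens_per_kwh = campus.tokens_per_joule * JOULES_PER_KWH
    return campus.carbon_g_per_kwh / tokens_per_kwh


def place_batch_job(campuses: List[Campus], job_mw: float) -> Optional[Campus]:
    """Pick the feasible campus with the lowest carbon per token, or None to defer."""
    feasible = [c for c in campuses if c.spare_mw >= job_mw]
    if not feasible:
        return None  # No headroom anywhere: defer the job instead of forcing it.
    return min(feasible, key=grams_co2_per_token)
```

Returning `None` instead of raising makes deferral an ordinary outcome, which matches the idea that non urgent work should wait for the grid.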

Vertical integration will set the pace

A slightly accelerationist read is now unavoidable. The teams that vertically integrate power will set the tempo of intelligence. Nuclear restarts are the clearest path to large, firm blocks of clean power on a five to ten year horizon. Advanced geothermal offers promising baseload in specific geologies. Combined cycle gas with carbon capture can bridge the gap where pipelines and geologic storage are feasible and rules allow it. None of these are trivial. All require capital, permitting expertise, and community deals.

The implication is that model roadmaps must be paired with energy roadmaps. It is not enough to forecast parameter counts. You must forecast substations, transformers, water rights, winterization, and where new transmission lines will land. You must be honest about what can be built by 2027 versus what needs 2030 and beyond. That is why executives now spend as much time with grid planners as with chip architects.

Vertical integration also changes risk posture. If you purchase power as a continuous service and your models depend on it, you must understand weather regimes, regulatory timelines, and commodity dynamics. In other words, you outsource less and hedge more. Contracts that were once procurement checkboxes become existential instruments.

What to do next, concretely

For AI labs and hyperscalers

- Build an energy procurement team that sits next to research. Give it the same authority as an architecture group. It should own a portfolio target across firm, dispatchable, and variable sources with clear service levels.

- Map the interconnection queues and transmission projects for your regions of interest. If you are not within an existing substation expansion envelope, your model timeline is at risk.

- Create an internal carbon and reliability price for each training run. Force early design decisions that trade parameter count, precision format, and training duration against the real cost and availability of power.

- Pilot heat reuse at one site in the next 12 months. Treat it like a product launch. Success is measured by community goodwill and a measurable drop in water or chiller energy.
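The internal carbon and reliability price in the list above can start as a small calculation attached to every training proposal. This is a sketch under stated assumptions: the parameter names are hypothetical, the reliability adder is modeled as a flat charge per megawatt hour, and every figure would come from your own finance and procurement teams.

```python
from typing import Dict

HOURS_PER_DAY = 24


def internal_run_cost(
    average_power_mw: float,
    duration_days: float,
    energy_price_per_mwh: float,
    carbon_t_per_mwh: float,
    carbon_price_per_t: float,
    reliability_adder_per_mwh: float,
) -> Dict[str, float]:
    """Break a training run's internal cost into energy, carbon, and reliability."""
    energy_mwh = average_power_mw * HOURS_PER_DAY * duration_days
    energy_cost = energy_mwh * energy_price_per_mwh
    # Internal carbon charge: emissions (tonnes) times the internal price per tonne.
    carbon_cost = energy_mwh * carbon_t_per_mwh * carbon_price_per_t
    # Assumed flat surcharge that stands in for the cost of firm capacity.
    reliability_cost = energy_mwh * reliability_adder_per_mwh
    return {
        "energy_mwh": energy_mwh,
        "energy_cost": energy_cost,
        "carbon_cost": carbon_cost,
        "reliability_cost": reliability_cost,
        "total_cost": energy_cost + carbon_cost + reliability_cost,
    }
```

Comparing two proposals with this function makes the trade explicit: a shorter run at lower precision shows up as fewer megawatt hours, and a run sited on a dirtier grid shows up as a larger carbon line.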

For utilities and grid operators

- Stand up a dedicated large load interconnection lane for compute campuses with standardized study assumptions and pre engineered substation designs. Cut cycle time without cutting rigor.

- Design tariffs and programs that reward demand shaping from data centers. Pay for predictability. Penalize last minute spikes. Make artificial intelligence a grid asset, not only a load.

- Publish a transparent queue dashboard with realistic lead times for transformers, breakers, and line upgrades. Help developers align their product calendars to your physical ones.

For policymakers and regulators

- Accelerate permitting for upgrades at existing thermal and nuclear sites that add load or heat reuse. Focus on safety and environmental justice while removing redundant steps that do not change outcomes.

- Link data center approvals to transmission investment commitments and community benefits. If a project taxes local water or roads, it should pay to improve them.

- Standardize carbon accounting for electricity so buyers cannot claim the same clean megawatt twice. Clarity unlocks long term finance.

For founders and researchers

- Build energy telemetry into the machine learning stack. Expose a simple tokens per joule metric to every team. Make it as familiar as tokens per second.

- Create developer tools that schedule jobs against grid signals. Offer a one line decorator that says run this when the carbon intensity falls below X or when price drops below Y.

- Explore new model shapes that flex with energy. Mixture of experts that can shed experts on command. Quantization schemes that drop to lower precision during scarcity without destroying accuracy.
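The one line decorator mentioned above might look like the following. It is a minimal sketch, not a reference to any existing library: `run_when`, its parameters, and the stand-in signal feed are all hypothetical, and a real tool would read carbon intensity or price from a grid data provider.

```python
import functools
import time
from typing import Callable, Optional


def run_when(
    read_signal: Callable[[], float],
    below: float,
    poll_seconds: float = 300.0,
    max_polls: Optional[int] = None,
):
    """Delay the wrapped job until read_signal() drops below the threshold."""

    def decorator(job):
        @functools.wraps(job)
        def wrapper(*args, **kwargs):
            polls = 0
            while read_signal() >= below:
                polls += 1
                if max_polls is not None and polls >= max_polls:
                    raise TimeoutError("grid signal never fell below the threshold")
                time.sleep(poll_seconds)
            return job(*args, **kwargs)

        return wrapper

    return decorator


# Stand-in for a real carbon intensity feed, in grams of CO2 per kWh (made-up values).
_readings = iter([420.0, 310.0, 180.0])


@run_when(lambda: next(_readings), below=200.0, poll_seconds=0.0)
def nightly_batch() -> str:
    return "ran"
```

The decorator watches a single signal. To express "carbon below X or price below Y", one option is to pass a `read_signal` that combines both feeds into one number, since stacking two decorators would require both conditions to hold.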

New metrics for a new frontier

We measure what we value. The industry needs energy native metrics that are as concrete as latency percentiles.

- Megawatt months per training run. This turns a headline training event into a unit a substation engineer can plan for.

- Joules per generated token at a given quality bar. This is the model equivalent of miles per gallon.

- Curtailment aware availability for inference. Track how your serving fleet behaves during grid stress. Reward designs that degrade gracefully.

- Heat recovery ratio. Publish how much useful heat you deliver back to the community per megawatt consumed.

Once these numbers are public, product teams will design for them. Investors will underwrite against them. Utilities will price them.
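Most of these metrics reduce to unit conversions, which is part of their appeal. The helpers below are a sketch with assumed definitions: a month is taken as 30 days, and the heat recovery ratio is taken as useful heat delivered divided by electricity consumed. The function names are illustrative.

```python
JOULES_PER_KWH = 3.6e6


def megawatt_months(average_power_mw: float, duration_days: float,
                    days_per_month: float = 30.0) -> float:
    """Size of a training run in megawatt months (assumes a 30 day month)."""
    return average_power_mw * duration_days / days_per_month


def joules_per_token(energy_kwh: float, tokens: int) -> float:
    """Energy spent per generated token, in joules."""
    if tokens <= 0:
        raise ValueError("tokens must be positive")
    return energy_kwh * JOULES_PER_KWH / tokens


def tokens_per_joule(energy_kwh: float, tokens: int) -> float:
    """Inverse of joules_per_token: the efficiency figure to maximize."""
    if energy_kwh <= 0:
        raise ValueError("energy_kwh must be positive")
    return tokens / (energy_kwh * JOULES_PER_KWH)


def heat_recovery_ratio(heat_delivered_mwh: float, electricity_consumed_mwh: float) -> float:
    """Useful heat delivered per unit of electricity consumed (assumed definition)."""
    if electricity_consumed_mwh <= 0:
        raise ValueError("electricity_consumed_mwh must be positive")
    return heat_delivered_mwh / electricity_consumed_mwh
```

For example, a hypothetical run averaging 20 megawatts for 90 days comes to 60 megawatt months under the 30 day convention, a figure a substation engineer can plan against directly.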

The philosophical turn

The field has long talked about scaling laws in parameters and data. The next scaling law may live in power. Intelligence is becoming a function of how well we can convert clean, predictable energy into useful computation and then into useful heat or revenue. The labs that align chip roadmaps with grid roadmaps will define the slope of progress.

Taken together, these announcements put that future on a clock. A six gigawatt plan, a 20 year nuclear commitment, and a visible shift in siting strategy are not software headlines. They are a declaration that the center of gravity for artificial intelligence is moving into energy markets and public infrastructure.

The conclusion is practical. If you want to shape the pace of intelligence, learn the language of utility planners, the patience of permit lawyers, and the pragmatism of grid operators. Put megawatts into your model cards. Put interconnection dates into your roadmaps. Put heat reuse into your site plans. The next frontier belongs to those who can turn power into progress on purpose.