Memory Is the New Moat: Context Becomes Capital in AI

As models converge, memory diverges. This essay shows why portable, governed context becomes the real moat for agents, and how to design wallets, grants, receipts, and ledgers that compound product value.

The quiet September that changed the race

Some platform shifts arrive with fireworks. Others land like a minor software patch that seems small until you feel the ground move. This September was the latter. OpenAI expanded Projects and rolled out conversation branching in ChatGPT, changes that share a visible theme: users carry long‑lived context across work, not just prompts across tabs. You can see the arc in the official ChatGPT release notes. Days later, Mistral introduced Memories for Le Chat and a connector directory powered by the Model Context Protocol, turning persistent preferences and facts into a first‑class object with user control. Read the details in the Mistral announcement on Le Chat Memories.

These updates do not brag about more parameters. They standardize where context lives and how it moves. That is the beginning of a new political economy for AI: when memory becomes capital, design choices about state, portability, and governance become the real moat.

From parameter count to compounding context

For the last couple of years, release notes have focused on bigger context windows and improved reasoning. Long context is useful, but it remains a per-request expense. What this September signaled is that the winning systems will not just read large inputs. They will accumulate, compress, and govern the right bits of user state over time.

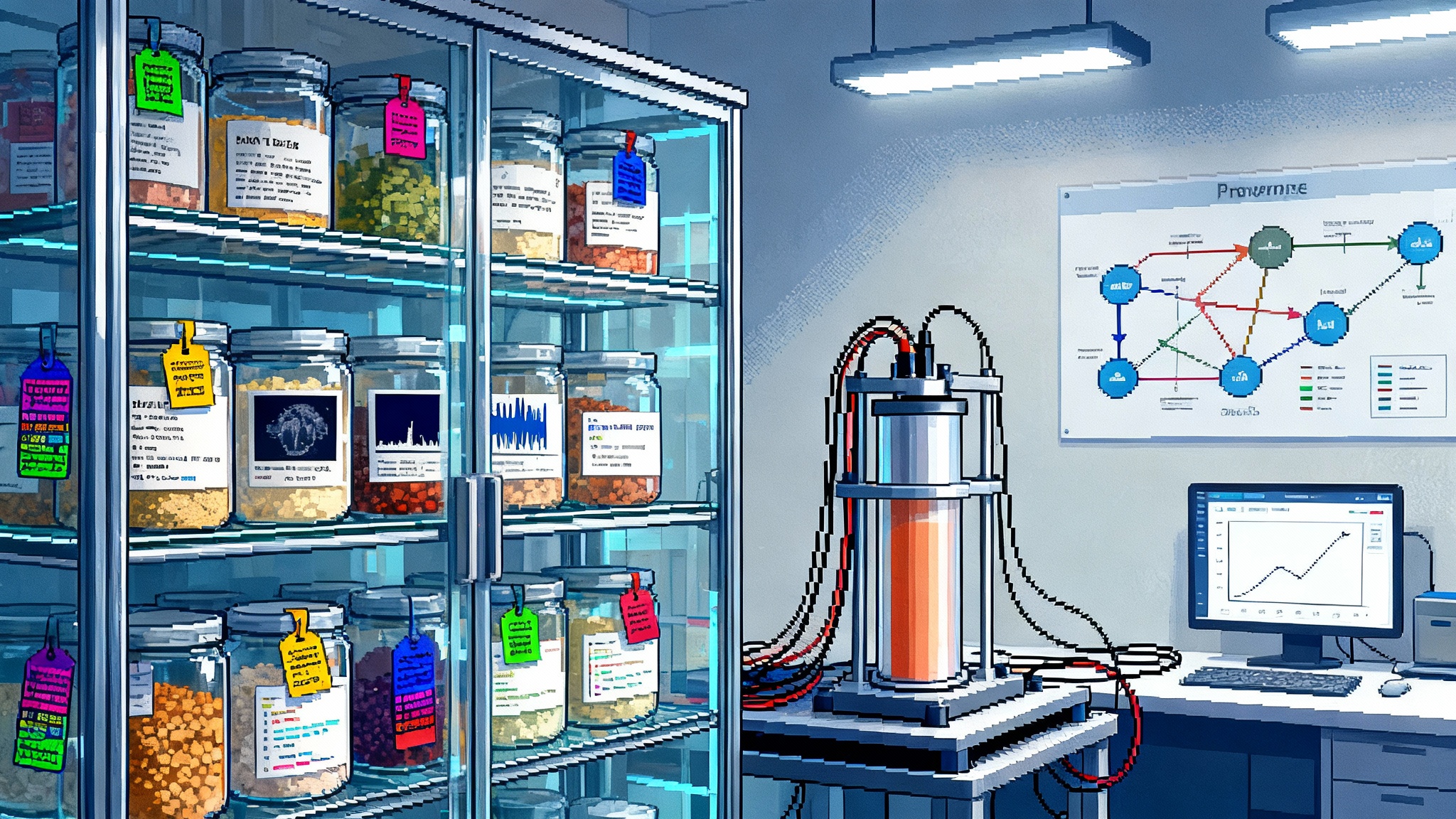

Think of a model as a chef and memory as a pantry. You can always dash to the store and buy ingredients per order. It works, but it is slow and wasteful. A good pantry stocked with the right staples makes everything faster and more reliable. September’s features are new shelving, labels, and inventory rules for that pantry.

Conversation branching matters for another reason. It treats exploration as a tree of context, not a brittle single thread. Branches let you pursue alternative paths while keeping provenance. That is the foundation for a durable graph of how ideas evolve and why certain decisions were made.

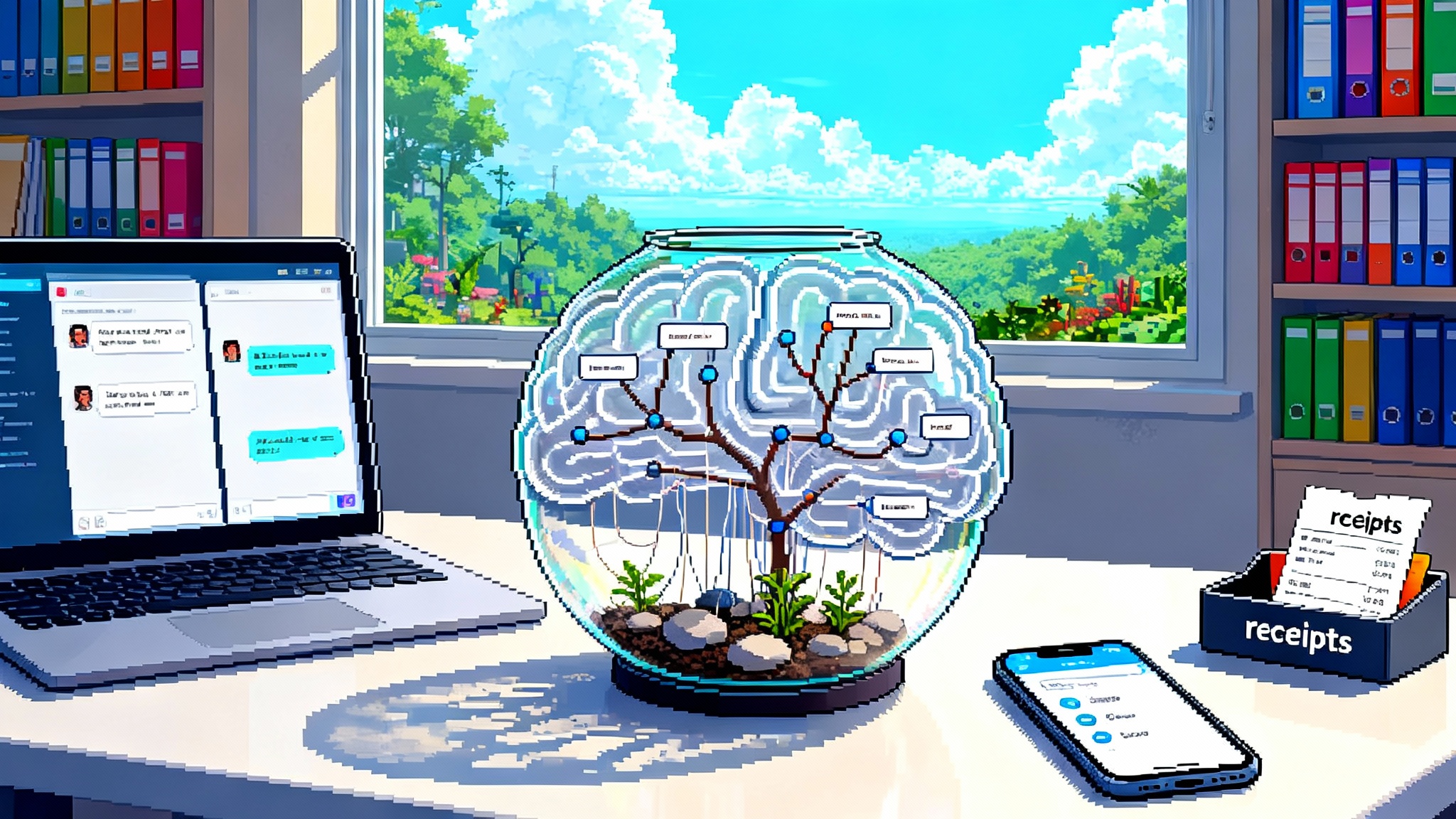

Introducing the memory graph

Most teams talk about memory as a simple list of facts. In practice, the memory that matters looks like a graph:

- Nodes capture facts, preferences, commitments, and artifacts. Example: "Preferred airline is Alaska," "Project Apollo uses a 30 percent gross margin target," "This spreadsheet contains the quarterly plan."

- Edges encode provenance and permissions. Example: "Derived from Q2 finance doc," "Approved by Dana," "Visible to the Sales project only," "Expires in 30 days."

- Weights record recency and trust. Example: "High confidence, verified via calendar invite," "Low confidence, self-reported once."

- Scopes define where the memory applies. Example: Personal, Team, Project, Device.

When an agent answers a question or executes a task, it should traverse this graph with policy. That means it can explain which facts were used, why they were chosen, and what was ignored. It is the difference between a helpful assistant and a compliant black box.
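One way to make "traverse this graph with policy" concrete is a selection step that records both what was used and what was skipped, with a reason for each. The sketch below is a minimal illustration, not a reference design: the `MemoryNode` fields, the `select_memories` function, the confidence threshold, and the last two sample facts are all assumptions introduced here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class MemoryNode:
    """One fact, preference, commitment, or artifact (hypothetical schema)."""
    node_id: str
    text: str
    scope: str                    # e.g. "personal" or "project:Apollo"
    confidence: float             # 0.0 (unverified) to 1.0 (verified)
    source: str                   # provenance, e.g. "Q2 finance doc"
    expires_at: Optional[datetime] = None


@dataclass
class Selection:
    used: list = field(default_factory=list)
    ignored: list = field(default_factory=list)   # (node, reason) pairs


def select_memories(nodes, active_scopes, now, min_confidence=0.5):
    """Apply scope, expiry, and trust policy, keeping a reason for every skip."""
    result = Selection()
    for node in nodes:
        if node.scope not in active_scopes:
            result.ignored.append((node, "out of scope"))
        elif node.expires_at is not None and node.expires_at <= now:
            result.ignored.append((node, "expired"))
        elif node.confidence < min_confidence:
            result.ignored.append((node, "low confidence"))
        else:
            result.used.append(node)
    return result


now = datetime(2025, 1, 1, tzinfo=timezone.utc)  # arbitrary demo timestamp
nodes = [
    MemoryNode("airline", "Preferred airline is Alaska", "personal", 0.9, "self-reported"),
    MemoryNode("margin", "Project Apollo uses a 30 percent gross margin target",
               "project:Apollo", 0.95, "Q2 finance doc"),
    # The two facts below are invented for the demo.
    MemoryNode("old", "Launch review is on Friday", "project:Apollo", 0.9,
               "calendar invite", expires_at=now - timedelta(days=1)),
    MemoryNode("rumor", "Budget may increase", "project:Apollo", 0.2, "self-reported once"),
]
selection = select_memories(nodes, {"project:Apollo"}, now)
for node in selection.used:
    print("used:", node.text, "| source:", node.source)
for node, reason in selection.ignored:
    print("ignored:", node.text, "| reason:", reason)
```

The `ignored` list is the point of the sketch: it is what lets the agent explain why a fact was left out, not only which facts it relied on.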

If you are exploring how always-on systems will use such graphs, see how agents behave in systems that keep working offscreen. The path from idle to trusted runs through strong memory governance.

Long context is not long-lived state

Long context windows let you stuff more text into a single request. Long-lived state means you can remember and reuse information across requests and across time with guarantees about scope and consent. The two are complements, not substitutes:

- Long context makes one answer smarter today.

- Long-lived state makes every answer smarter tomorrow.

You want both, but only long-lived state creates compounding returns. If your agent uses each interaction to update well-governed memory, two things happen. First, quality improves because retrieval is precise and personal. Second, costs drop because you do not keep re-feeding the same background.

Why September’s product choices matter

- Projects on the Free tier normalize project-scoped state for everyone. Even hobbyists now experience the difference between a one-off chat and a durable workspace that collects files, notes, and settings.

- Branchable conversations turn a linear chat into a navigable history. This reduces the cost of experimentation and improves auditability because you can show where a plan forked.

- Mistral’s Memories turn preferences and facts into a first-class data structure with user controls. Combined with a connector directory based on the Model Context Protocol, they create a path for state to flow from tools into the assistant and back out into actions.

Under the surface, all of these features pull in the same direction: define, scope, and move memory with intent.

The new political economy of AI memory

A platform’s power used to hinge on data network effects and distribution. In the agent era, the new lever is the installed base of high quality, permissioned memory graphs. Three forces drive this shift:

- Capability: Memory graphs unlock multi-step reasoning that uses private context. A sales agent that knows a customer’s procurement rules and your team’s discount policy will outperform a general model with a bigger context window.

- Switching costs: If your memories cannot move, your work is trapped. Export and import paths create credible exit options. Without them, platforms extract value through friction.

- Trust and governance: People accept agents that remember if they can see, shape, and revoke what is remembered. Memory without receipts and revocation is just surveillance with a nice interface.

When context becomes capital, everything around it becomes policy. You do not win by hoarding state. You win by standardizing how it moves with control.

For a deeper look at verifiability and trust, revisit how capabilities change when proofs back claims in confidential AI with proofs. Policy and math combine to make memory safe to use at scale.

Design primitives for the memory era

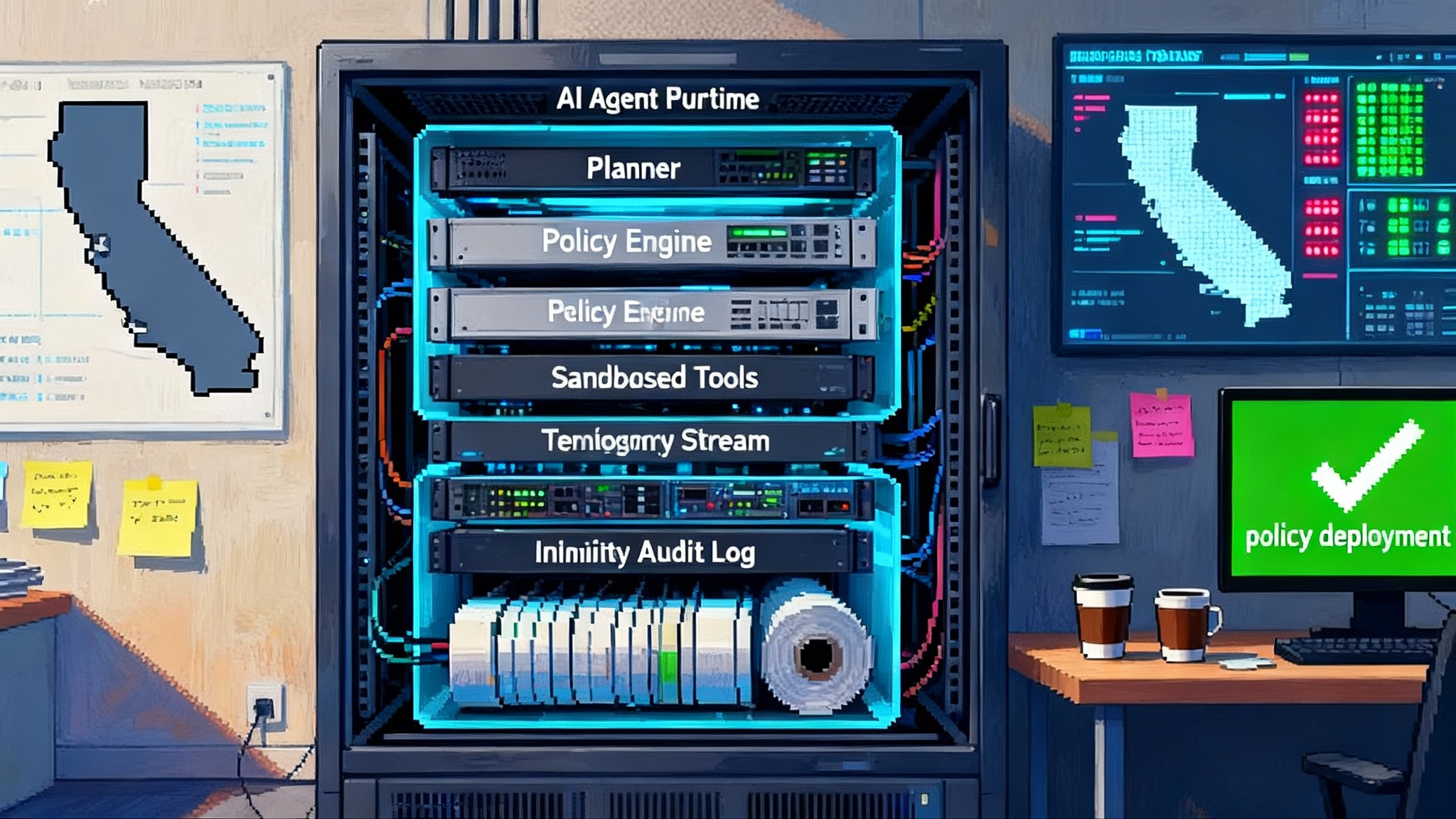

Here are five primitives every product team should adopt. Each comes with a clear what, why, and how.

1) State wallets

- What: A user-controlled vault for long-lived state, addressable by a stable identity, with APIs for reading and writing facts, preferences, embeddings, and summaries.

- Why: Prevents state from being scattered across hidden caches. Gives users a single account of record for their memories.

- How: Provide a local-first option with automatic cloud sync. Use encryption at rest and in transit. Expose read and write through predictable interfaces. Store provenance with each entry: who wrote it, from which conversation, with which connector, and when.
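As a rough illustration of the "store provenance with each entry" requirement, here is a minimal in-memory sketch. The `StateWallet`, `WalletEntry`, and `Provenance` names are hypothetical, and encryption, sync, and persistence are deliberately left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Provenance:
    author: str            # who wrote it
    conversation_id: str   # from which conversation
    connector: str         # with which connector
    written_at: datetime   # when


@dataclass(frozen=True)
class WalletEntry:
    key: str
    value: str
    provenance: Provenance


class StateWallet:
    """In-memory stand-in for a user-controlled vault (no encryption or sync)."""

    def __init__(self, owner_id: str):
        self.owner_id = owner_id
        self._entries = {}   # key -> list of WalletEntry, oldest first

    def write(self, key, value, author, conversation_id, connector, now=None):
        """Append a new version of a key; earlier versions are kept."""
        stamp = now or datetime.now(timezone.utc)
        entry = WalletEntry(key, value, Provenance(author, conversation_id, connector, stamp))
        self._entries.setdefault(key, []).append(entry)
        return entry

    def read(self, key) -> Optional[WalletEntry]:
        """Return the latest version of a key, or None if it was never written."""
        versions = self._entries.get(key)
        return versions[-1] if versions else None

    def history(self, key):
        """Return every version of a key with its provenance."""
        return list(self._entries.get(key, []))
```

Keeping every version rather than overwriting means the wallet can always answer "where did this value come from, and what did it replace?"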

2) Revocable grants

- What: Time-boxed, scope-limited permissions that allow an agent or connector to read or write specific edges in the memory graph.

- Why: Stops permission creep and supports least privilege. Encourages partners to request only what they need.

- How: Use capability tokens with explicit scopes like read:preferences or write:project:Apollo. Require renewal on a schedule. Show users a pending grants list and an audit log of accesses. If a connector is compromised, revoke the grant and quarantine any affected memories.
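A grant check along these lines can be sketched in a few lines. Everything here is an assumption for illustration: the `GrantRegistry` class, exact-match scope strings, and the audit log shape. A real capability token would also be signed and verified, which this sketch omits.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Grant:
    grant_id: str
    holder: str            # the agent or connector holding the grant
    scopes: frozenset      # e.g. {"read:preferences", "write:project:Apollo"}
    expires_at: datetime
    revoked: bool = False


class GrantRegistry:
    """Issues time-boxed grants and logs every access decision."""

    def __init__(self):
        self._grants = {}
        self.audit_log = []   # (timestamp, grant_id, scope, allowed)

    def issue(self, grant_id, holder, scopes, ttl, now):
        grant = Grant(grant_id, holder, frozenset(scopes), now + ttl)
        self._grants[grant_id] = grant
        return grant

    def revoke(self, grant_id):
        self._grants[grant_id].revoked = True

    def check(self, grant_id, scope, now):
        """Allow only unrevoked, unexpired grants that name the exact scope."""
        grant = self._grants.get(grant_id)
        allowed = (
            grant is not None
            and not grant.revoked
            and now < grant.expires_at
            and scope in grant.scopes
        )
        self.audit_log.append((now, grant_id, scope, allowed))
        return allowed
```

Denied checks are logged alongside allowed ones, so the audit log shows attempted overreach as well as normal use.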

3) Memory receipts

- What: A human-readable record every time the agent saves or updates a memory. It should state the fact, the reason, the source, and the scope.

- Why: Transparency builds trust. Receipts also create an evidence trail for debugging and compliance.

- How: Attach a receipt to each node and expose a filterable feed. Offer a one-click undo with automatic propagation to dependent summaries.
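The receipt-plus-undo idea can be sketched as follows. The class and method names are hypothetical, and "propagation" is simplified to marking dependent summaries as stale so they can be regenerated later.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Receipt:
    receipt_id: int
    fact: str
    reason: str
    source: str
    scope: str

    def render(self):
        """Human-readable line for the receipts feed."""
        return f'Saved "{self.fact}" ({self.scope}) because {self.reason}; source: {self.source}'


class ReceiptedMemory:
    def __init__(self):
        self.receipts = {}            # receipt_id -> Receipt
        self.summaries = {}           # summary name -> set of receipt_ids it was built from
        self.stale_summaries = set()  # summaries that need regeneration
        self._next_id = 1

    def save(self, fact, reason, source, scope):
        receipt = Receipt(self._next_id, fact, reason, source, scope)
        self.receipts[receipt.receipt_id] = receipt
        self._next_id += 1
        return receipt

    def register_summary(self, name, receipt_ids):
        self.summaries[name] = set(receipt_ids)

    def feed(self, scope=None):
        """Filterable feed: all receipts, or only those in one scope."""
        return [r for r in self.receipts.values() if scope is None or r.scope == scope]

    def undo(self, receipt_id):
        """Remove a memory and flag every summary that depended on it."""
        self.receipts.pop(receipt_id)
        for name, deps in self.summaries.items():
            if receipt_id in deps:
                deps.discard(receipt_id)
                self.stale_summaries.add(name)
```

Tracking which receipts each summary was built from is what makes undo safe: without that dependency set, a deleted fact can live on inside a summary.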

4) Branch ledgers

- What: A structured log that records conversational forks as edges in the memory graph, including the decision point and the rationale.

- Why: Many teams will run multiple what-ifs in parallel. Ledgers support comparison, reproducibility, and rollbacks without losing context.

- How: Treat each branch as a view over shared memories plus branch-local notes. Persist merge operations like code, with conflict resolution rules.
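Here is one possible shape for a branch ledger, assuming a simple key-value memory. The `BranchLedger` class and its conflict rule are illustrative choices, not an established design: a conflict is flagged when the shared value changed after the fork and differs from what the branch wants to write.

```python
_MISSING = object()  # sentinel for "key did not exist"


class BranchLedger:
    def __init__(self, shared):
        self.shared = dict(shared)   # memories visible to every branch
        self.branches = {}           # name -> {"base": snapshot, "local": notes}
        self.log = []                # append-only record of forks and merges

    def fork(self, name, decision_point, rationale):
        """Open a branch and record where and why the conversation forked."""
        self.branches[name] = {"base": dict(self.shared), "local": {}}
        self.log.append(("fork", name, decision_point, rationale))

    def note(self, branch, key, value):
        """Write a branch-local note without touching shared memory."""
        self.branches[branch]["local"][key] = value

    def view(self, branch):
        """A branch sees shared memories overlaid with its own notes."""
        return {**self.shared, **self.branches[branch]["local"]}

    def merge(self, branch, resolve=None):
        """Fold branch notes into shared memory, using resolve() on conflicts."""
        state = self.branches[branch]
        conflicts = {}
        for key, value in state["local"].items():
            base = state["base"].get(key, _MISSING)
            current = self.shared.get(key, _MISSING)
            if current != base and current != value:
                conflicts[key] = (current, value)
        if conflicts and resolve is None:
            raise ValueError(f"unresolved conflicts: {sorted(conflicts)}")
        for key, value in state["local"].items():
            if key in conflicts:
                value = resolve(key, *conflicts[key])
            self.shared[key] = value
        self.log.append(("merge", branch, sorted(conflicts)))
        del self.branches[branch]
        return conflicts
```

The `resolve` callback receives the key, the current shared value, and the branch value, so a team can encode rules such as "shared wins" or "ask a human" without changing the ledger itself.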

5) Memory time-to-live and quarantine

- What: Default expiration dates and conditional quarantine for facts that are stale, sensitive, or unverified.

- Why: Most memory risk comes from old or wrong facts. Automatic decay limits damage. Quarantine prevents bad data from spreading.

- How: Assign a decay function per node. If confidence drops below a threshold, the agent must re-verify before use. Quarantine any memory touched by a revoked connector until re-validation.
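The essay does not prescribe a particular decay function. As one assumption, the sketch below uses exponential decay with a per-memory half-life, which gives confidence $c(t) = c_0 \cdot 0.5^{t/h}$ for age $t$ and half-life $h$. The `Memory` fields, the 0.5 threshold, and the status strings are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Memory:
    text: str
    base_confidence: float   # confidence at the time of last verification
    verified_at: datetime
    half_life_days: float    # assumed decay parameter, chosen per node
    connector: str           # connector that wrote or touched this memory
    quarantined: bool = False


def current_confidence(memory, now):
    """Exponential decay: confidence halves every half_life_days."""
    age_days = (now - memory.verified_at).total_seconds() / 86400
    return memory.base_confidence * 0.5 ** (age_days / memory.half_life_days)


def status(memory, now, threshold=0.5):
    """Return 'quarantined', 'reverify', or 'ok' for a memory at time now."""
    if memory.quarantined:
        return "quarantined"
    if current_confidence(memory, now) < threshold:
        return "reverify"
    return "ok"


def quarantine_connector(memories, connector):
    """Quarantine every memory touched by a revoked connector; return the count."""
    count = 0
    for memory in memories:
        if memory.connector == connector and not memory.quarantined:
            memory.quarantined = True
            count += 1
    return count
```

For example, a fact verified at confidence 0.9 with a 30-day half-life falls to 0.45 after 30 days, which is below the assumed 0.5 threshold and would trigger re-verification.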

Implementation patterns that work

- Event-sourced memory service: Treat every write as an append-only event with a schema that includes who, when, why, and scope. Materialize useful views on demand, like a per-project index or a per-person preference set.

- Typed knowledge over raw text: Store compact, typed facts alongside summaries. Summaries speed recall, but typed facts drive correctness. When a summary and a fact disagree, facts win until re-summarized.

- Retrieval with policy: Retrieval should not just rank by similarity. Rank by scope, trust, recency, and purpose. Explain your selection. If a fact is outside the current scope, show the user how to expand it.

- Human-in-the-loop for critical nodes: Mark some nodes as critical, such as prices, deadlines, legal terms, or approvals. Any change demands an explicit confirmation step with a reason.

- First-class import and export: Offer export in a portable package that includes facts, receipts, and provenance. Support import with mapping tools that show conflicts and require explicit merges. Think of this like number portability for phone carriers. The provider that makes moving in and out painless earns trust.
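To illustrate the event-sourced pattern from the list above, here is a minimal sketch of an append-only log and a view materialized on demand. The event schema follows the who, when, why, and scope fields named in the text; the `EventLog` class, the `materialize` function, and the use of `None` as a deletion marker are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class MemoryEvent:
    seq: int
    who: str
    when: datetime
    why: str
    scope: str
    key: str
    value: Optional[str]   # None marks a deletion (tombstone)


class EventLog:
    """Append-only: events are never edited or removed after being written."""

    def __init__(self):
        self._events = []

    def append(self, who, why, scope, key, value, when=None):
        stamp = when or datetime.now(timezone.utc)
        event = MemoryEvent(len(self._events) + 1, who, stamp, why, scope, key, value)
        self._events.append(event)
        return event

    def events(self):
        return tuple(self._events)


def materialize(events, scope):
    """Replay events in order to build the current view for one scope."""
    view = {}
    for event in events:
        if event.scope != scope:
            continue
        if event.value is None:
            view.pop(event.key, None)
        else:
            view[event.key] = event.value
    return view
```

Because the view is derived by replaying the log, a per-project index or per-person preference set can be rebuilt at any time, and the log itself doubles as the audit trail.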

Memory portability decides the platform era

In the last platform shift, the most valuable standard was the app store billing stack. In this one, it will be memory portability. The ecosystem that makes it effortless to bring your state in, use it across connectors, and carry it out again without drama will set the default for everyone else.

Mistral’s adoption of a shared connector protocol is a tell. Expect more clients to speak a common language at the tool boundary while competing on memory quality and governance. Expect operating systems and browsers to treat state wallets like a new class of keychain, complete with per app grants and receipts.

For enterprises, portability is not a nice-to-have. It reduces vendor risk and makes multi-agent workflows practical. A procurement team should be able to demand a memory export that includes:

- A machine-readable bundle of facts, edges, and scopes

- A human-readable index of receipts and grants

- A manifest of connector permissions with last access times

- A verification log that confirms integrity

Vendors that deliver this will win deals where governance matters. And regulators will increasingly ask how decisions were made in context. If you are building in a sensitive domain, align your memory policies with emerging norms about safety and oversight. The frame from law inside the agent loop is useful here.
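The export bundle described above could look something like the following sketch. The bundle layout and the `memory-bundle/0` schema label are invented for illustration and do not correspond to any existing standard. The integrity check is a plain SHA-256 digest over canonical JSON, which detects corruption or edits in transit; proving who produced the bundle would additionally require a signature, which is not shown.

```python
import hashlib
import json


def _canonical(payload):
    """Serialize deterministically so the same content always hashes the same."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def build_bundle(facts, receipts, grants):
    """Package memory for export with a manifest that records a content digest."""
    payload = {"facts": facts, "receipts": receipts, "grants": grants}
    manifest = {
        "schema": "memory-bundle/0",   # hypothetical schema label
        "sha256": hashlib.sha256(_canonical(payload)).hexdigest(),
    }
    return {"manifest": manifest, "payload": payload}


def verify_bundle(bundle):
    """Recompute the digest on import and compare it with the manifest."""
    expected = bundle["manifest"]["sha256"]
    actual = hashlib.sha256(_canonical(bundle["payload"])).hexdigest()
    return actual == expected


bundle = build_bundle(
    facts=[{"id": "margin", "text": "Project Apollo uses a 30 percent gross margin target",
            "scope": "project:Apollo", "source": "Q2 finance doc"}],
    receipts=[{"id": 1, "fact_id": "margin", "reason": "found in doc"}],
    grants=[{"id": "g1", "scopes": ["read:preferences"], "last_access": None}],
)
print("bundle verifies:", verify_bundle(bundle))
```

A migration exercise can then be as simple as exporting from one system, round-tripping the file, and confirming that verification still passes on the other side.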

Risks and mitigations you can adopt today

- Shadow memory: Teams will start copying facts into personal notes, email, and ad hoc embeddings. Mitigation: ship a default state wallet and make it the most convenient place to save. Every save produces a receipt.

- Data poisoning through connectors: A compromised tool can inject false facts. Mitigation: quarantine and require re-verification on any memory that flows through a revoked grant. Keep signed receipts.

- Confused scope: Personal preferences bleed into team decisions. Mitigation: treat scope as a first-class filter in retrieval. Make scope selection visible in the user interface.

- Stale facts: Old summaries are worse than no summaries. Mitigation: time-to-live by default, plus periodic re-summarization with a diff for human review.

What to build next

If you are a product leader, set a one-quarter plan:

- Weeks 1 to 2: Ship memory receipts for every write. Add a receipts view. Teach your agent to cite receipts in its answers.

- Weeks 3 to 6: Introduce revocable grants with scope and time limits. Build a quarantine path for revoked grants.

- Weeks 7 to 10: Launch export and import for memory bundles. Include a manifest with schema, receipts, and signatures.

- Weeks 11 to 12: Add branch ledgers and a per-project memory view.

If you are a developer, start with an event-sourced memory service and a typed schema. Add a retrieval layer that ranks by policy, not just similarity. Use a connector model that can be swapped without breaking your memory graph.
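A retrieval layer that ranks by policy rather than similarity alone might be sketched like this. The scoring formula, the weights, and the 30-day recency half-life are arbitrary assumptions chosen for the example, and `similarity` stands in for whatever score your vector search returns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    text: str
    similarity: float   # 0..1, from an upstream similarity search
    scope: str
    trust: float        # 0..1, e.g. verified vs. self-reported
    age_days: float


def policy_score(candidate, active_scope, weights=(0.4, 0.4, 0.2), half_life_days=30.0):
    """Blend similarity, trust, and recency; out-of-scope items get no score."""
    if candidate.scope != active_scope:
        return None   # scope is a hard filter, not a ranking signal
    w_similarity, w_trust, w_recency = weights
    recency = 0.5 ** (candidate.age_days / half_life_days)
    return (w_similarity * candidate.similarity
            + w_trust * candidate.trust
            + w_recency * recency)


def rank(candidates, active_scope):
    """Return in-scope candidates with their scores, best first."""
    scored = []
    for candidate in candidates:
        score = policy_score(candidate, active_scope)
        if score is not None:
            scored.append((score, candidate))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored
```

Under these weights, a recent and well-verified fact can outrank an older, weakly sourced one even when the older fact is the closer textual match, which is the behavior the policy is meant to produce.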

If you are a buyer, add memory portability to your evaluation checklist. Ask vendors how receipts work, how grants are revoked, and how export preserves provenance. Run a migration exercise before you sign.

The takeaway

September’s releases looked incremental. In reality, they moved the goalposts. Models will keep getting better, but the compounding advantage will come from what your system remembers, how that state moves, and how it is governed. Treat memory as capital. Build the graph. Give users a wallet. Issue revocable grants. Print receipts.

The platform that standardizes memory portability will not just keep customers. It will define the rules of the next era and make agents trustworthy by default. That is the moat worth building.