When Regulation Becomes Runtime: Law Inside the Agent Loop

California's SB 53 turns AI safety from paperwork into live engineering. As Washington questions industry-led health standards, compliance shifts into code, telemetry, and proofs that run alongside your agents.

Breaking: California just moved compliance into your code

On September 29, 2025, California Governor Gavin Newsom signed SB 53 into law. The statute requires the largest AI companies to publish how they will mitigate catastrophic risks and to report critical incidents, with fines for noncompliance. The law names specific classes of harms such as loss of human control and biothreats, and it applies to major developers above a defined size threshold. Read the basic contours in Reuters on California SB 53.

If you read SB 53 as a product engineer, the implication is simple. You cannot satisfy these requirements with a binder full of policies. You will need runtime evidence that the system is operating within guardrails while it makes plans and calls tools. That means telemetry, tests, proofs, and audit-ready traces that you can surface on demand.

Why this is bigger than a disclosure rule

Compliance used to be an annual ritual. Lawyers updated policies. Engineers annotated a wiki. Auditors sampled logs. That cadence breaks when the state asks how you will prevent loss of control in an agent that composes tools, sends messages, and triggers workflows in milliseconds. If a language agent can write code, issue refunds, schedule deliveries, and call third-party models, then safety is a live property that must be enforced while the agent runs.

Think of aviation. Preflight checklists matter, but the cockpit instruments, black box recorder, and control tower keep you safe during the flight. SB 53 is the cue for AI builders to add cockpit instrumentation to their agent runtimes: guardrails, conformance checks, least privilege execution, drift detection, intervention triggers, and immutable traces.

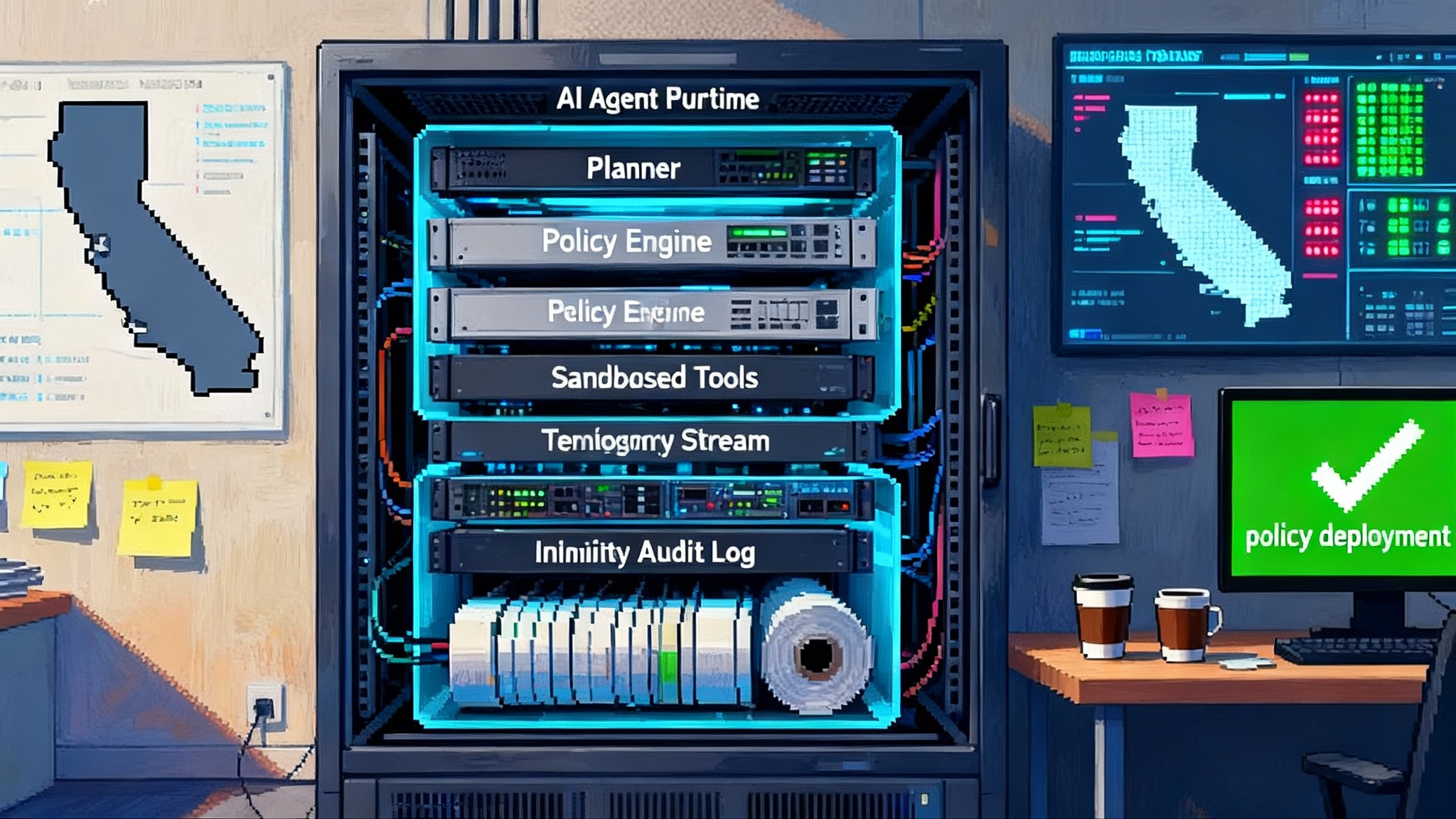

What changes under the hood: from policy docs to conformance engines

SB 53 will be debated for months, but its direction is clear. You cannot prove risk mitigation with static documents. You need software that makes policy executable.

- Conformance engines. Machine readable policies compiled into checks that run before and during tool use. Example: an agent may not call a sequencing lab API unless a verified clinician and a biosafety officer jointly approve the request. The rule is not prose. It is a policy object the runtime evaluates.

- Least privilege by default. Agents begin with no tools, no data, and narrow scopes. Capabilities are granted for the minimum viable task and revoked when it ends. In practice that means time bound tokens per tool, data partitioning per ticket, and capability escalation that always requires a second signal.

- Drift and hazard detection. Models and environments shift. Runtimes need monitors for distribution shifts, prompt injection signatures, role-play jailbreak attempts, and tool abuse sequences. These checks should operate like smoke detectors, not like fire marshals who arrive next quarter.

- Proof carrying actions. Every sensitive action should carry the reason it was allowed. This is not a chat transcript. It is a compact proof that includes policy version, inputs, risk score, approvals, and post conditions. If you cannot replay the decision and get the same outcome, you do not have a proof.

- Immutable audit trails. Append only logs with cryptographic sealing so that post incident reviews are credible. This is how disclosures graduate from marketing copy to evidence.
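To make the conformance engine and proof carrying action ideas concrete, here is a minimal sketch of the sequencing lab rule from the list above. Everything named here is hypothetical (the `Approval` type, the `POLICY` layout, the tool name `sequencing_lab.submit`, the `evaluate` function), and the sketch assumes identity verification happens upstream. It is one possible shape, not a reference design.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Approval:
    approver_id: str
    role: str       # e.g. "clinician" or "biosafety_officer"
    verified: bool  # assumed to come from an upstream identity check


# Hypothetical policy object: each tool maps to the roles that must approve it.
POLICY = {
    "version": "2025-10-01",
    "rules": {"sequencing_lab.submit": {"clinician", "biosafety_officer"}},
}


def evaluate(policy, tool, approvals):
    """Return a compact proof recording why a tool call was allowed or denied."""
    required = policy["rules"].get(tool)
    verified_roles = {a.role for a in approvals if a.verified}
    if required is None:
        # Deny by default: a tool without a rule is not callable.
        missing, allowed = [], False
    else:
        missing = sorted(required - verified_roles)
        allowed = not missing
    proof = {
        "policy_version": policy["version"],
        "tool": tool,
        "approvers": sorted(a.approver_id for a in approvals if a.verified),
        "missing_roles": missing,
        "allowed": allowed,
    }
    # A digest over the canonical proof lets a replay be compared byte for byte.
    canonical = json.dumps(proof, sort_keys=True).encode()
    proof["digest"] = hashlib.sha256(canonical).hexdigest()
    return proof
```

Replaying `evaluate` with the same policy version and approvals yields the same digest, which is the replay property the proof bullet asks for. A production version would also need to check that the two roles are held by different people.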

These are product features, not slogans. A regulator can now ask for concrete answers on a timeline measured in days, not quarters.
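One common way to approximate an append only, cryptographically sealed trail is a hash chain, where each entry commits to the one before it. The sketch below is an illustration under that assumption; the `AuditLog` class and its methods are hypothetical names. A chain alone only makes tampering detectable. A real deployment would also sign or externally anchor the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """In-memory hash chain: each entry's hash covers the previous hash."""

    def __init__(self):
        self._entries = []

    def append(self, event: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = json.dumps(event, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode()).hexdigest()
        self._entries.append({"prev": prev, "event": body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self._entries:
            expected = hashlib.sha256((prev + entry["event"]).encode()).hexdigest()
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True
```

With a structure like this, a reviewer can check that the trail handed over after an incident matches the hash recorded at the time.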

The surface is fracturing: California codifies while Washington questions private standards

The same week California moved, Washington sent a different signal in health care. Senior officials criticized the Coalition for Health AI and questioned whether a private consortium should set clinical AI rules. Politico reported the administration’s move to distance itself from industry-led certification and to emphasize a more government-centered approach. See the summary in Politico on CHAI pushback.

This split matters. If states demand runtime evidence while federal actors question private standards, builders face a patchwork. The safest strategy is to make compliance a product capability you can tune per jurisdiction. That favors designs that treat policy as code.

Policy as prompt: governance at the same entry point as the task

Policy as prompt means governance enters at the same place the task does, inside the instruction the agent follows. If your agent accepts a user prompt, it should also accept a policy prompt that constrains its plan and tool use. This is not an output filter that scrubs after the fact. It is a contract that shapes the plan.

Consider a customer service copilot that can issue refunds. It should ingest a policy object that sets refund ceilings by geography, flags risky combinations like address changes plus expedited shipping, and requires a human countersignature above a threshold. The runtime compiles that policy into the planner so the action graph never proposes disallowed steps. Change the policy today, the agent changes today. No one waits for a quarterly audit.
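A rough sketch of what such a refund policy object could look like follows. The field names, the regions, and the dollar values are invented for illustration, and the sketch assumes that a flagged combination is routed to a human rather than blocked outright.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefundRequest:
    region: str
    amount: float
    address_changed: bool
    expedited_shipping: bool


# Hypothetical policy object; every value here is a made-up example.
REFUND_POLICY = {
    "version": "v1",
    "ceilings": {"US": 200.0, "EU": 150.0},  # maximum refund per region
    "countersign_above": 100.0,              # human countersignature threshold
}


def check_refund(policy, req):
    """Return (decision, reasons); decision is allow, needs_human, or deny."""
    ceiling = policy["ceilings"].get(req.region)
    if ceiling is None:
        return "deny", ["no refund ceiling defined for region"]
    if req.amount > ceiling:
        return "deny", ["amount exceeds regional ceiling"]
    reasons = []
    if req.address_changed and req.expedited_shipping:
        reasons.append("risky combination: address change plus expedited shipping")
    if req.amount > policy["countersign_above"]:
        reasons.append("amount above countersignature threshold")
    return ("needs_human", reasons) if reasons else ("allow", [])
```

A planner could call `check_refund` on each candidate step and drop or reroute any step that does not come back as `allow`. Editing `REFUND_POLICY` changes agent behavior on the next evaluation, with no model change involved.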

Policy as prompt also intersects with provenance. If you are going to prove what rules an agent followed, you must know what it learned and why it made a given choice. That is why provenance, which we discussed in provenance becomes the edge, belongs in the same control plane as policy.

Common building blocks for policy as prompt:

- Policy objects. Declarative rules with variables, provenance, versioning, and test fixtures. Treat them like tests for the agent’s plan generator. If a plan violates a rule, the test fails and the planner produces a new plan.

- Sandboxed tools. Every tool runs with scoped permissions, rate limits, and fully observable calls. The agent declares intent, the sandbox enforces scope, and both stream to telemetry.

- Risk aware planning. Planners score steps by expected risk and route to safer alternates, such as synthetic data previews before live data queries.

- Hard to bypass human in the loop. Callbacks require identity verified approvals and replayable justifications. Bypass is either impossible or recorded as an incident.
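The sandboxed tools item can be sketched as a small wrapper that grants scoped, time bound access and records every call. The `Sandbox` and `Grant` names and the telemetry fields are assumptions made for this example, and the injectable clock exists only to keep the sketch testable.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    tool: str
    scopes: frozenset
    expires_at: float  # epoch seconds


class Sandbox:
    """Agents start with no tools; every call is checked and logged."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._grants = {}
        self.telemetry = []

    def grant(self, tool, scopes, ttl_seconds):
        expires_at = self._clock() + ttl_seconds
        self._grants[tool] = Grant(tool, frozenset(scopes), expires_at)

    def call(self, tool, scope, intent, fn, *args):
        grant = self._grants.get(tool)
        now = self._clock()
        allowed = (
            grant is not None
            and scope in grant.scopes
            and now < grant.expires_at
        )
        # The declared intent and the decision are recorded either way.
        self.telemetry.append(
            {"tool": tool, "scope": scope, "intent": intent,
             "allowed": allowed, "at": now}
        )
        if not allowed:
            raise PermissionError(f"{tool}:{scope} is not granted or has expired")
        return fn(*args)
```

Denied calls still produce a telemetry record, so an attempted bypass shows up in the trace instead of disappearing.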

Runtime governance as an engineering discipline

Governance often evokes committees. Runtime governance is closer to site reliability engineering for decision systems. You measure uptime. You measure plan safety. You rehearse failure.

Key disciplines:

- Continuous evaluation. Safeguard tests run per build and per session. Red team suites include jailbreak attempts, prompt injection variants, tool abuse, and data exfiltration. Results flow into risk dashboards that product owners actually watch.

- Policy deployment pipelines. Policy updates move through staging, canary, and production. Rollbacks exist. Diff tools show what changed and which agents are impacted.

- Drift response. If monitors detect a shift, agents automatically degrade to safer modes. A sales agent might lose the ability to send external email and switch to draft only until a human clears the drift.

- Evidence by design. Every governance mechanism writes a compact, standardized trace. That trace is the raw input for disclosures, incident forms, and regulator questions. If you structure the trace well, you can answer most questions with a query, not a task force.
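The drift response item above, where a sales agent drops to draft only mode, might look roughly like this. The `SalesAgentGate` class, the numeric drift score, and the threshold are all assumptions for the sketch. How the score is produced is left to whatever monitor you run.

```python
from enum import Enum


class Mode(Enum):
    FULL = "full"
    DRAFT_ONLY = "draft_only"


class SalesAgentGate:
    """Degrades outbound email to drafts until a human clears the drift."""

    def __init__(self, drift_threshold):
        self.drift_threshold = drift_threshold
        self.mode = Mode.FULL

    def observe_drift(self, score):
        # Degradation is sticky: a later low score does not restore FULL mode.
        if score >= self.drift_threshold:
            self.mode = Mode.DRAFT_ONLY

    def clear(self, reviewer_id):
        if not reviewer_id:
            raise ValueError("a named reviewer is required to clear drift")
        self.mode = Mode.FULL

    def send_email(self, to, body):
        status = "draft" if self.mode is Mode.DRAFT_ONLY else "sent"
        return {"status": status, "to": to, "body": body}
```

The design choice worth noting is that only `clear`, called with a reviewer identity, restores full capability. The monitor can take power away but cannot give it back.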

A new metric: compliance latency

If policy is code, one question becomes existential. How fast can you align a running agent to a new rule without breaking the customer experience?

Call that compliance latency. It is the time from a policy change to full, verifiably enforced behavior across your agents. California’s law does not use that phrase, but buyers and regulators will.

What to measure:

- Policy propagation time. Seconds from merge to agents honoring the new rule.

- Enforcement coverage. Percentage of active sessions protected by the updated rule within the first hour and first day.

- Evidence completeness. Share of actions above a risk threshold that produce proofs with all required fields.

- Human approval round trip. Median time to obtain necessary approvals for escalations without dropping tasks.

- Plan repair rate. Fraction of disallowed plans that are successfully replanned to safe alternatives rather than blocked.
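The five measurements above reduce to simple ratios and differences once the underlying events are recorded. The sketch below assumes hypothetical record shapes (plain dicts with fields such as `active`, `policy_version`, `risk_score`, `proof`, `requested_at`, `outcome`). The required proof fields mirror the ones listed earlier for proof carrying actions.

```python
from statistics import median

# Proof fields as described for proof carrying actions in this article.
REQUIRED_PROOF_FIELDS = {
    "policy_version", "inputs", "risk_score", "approvals", "postconditions",
}


def propagation_seconds(merged_at, agent_ack_times):
    """Seconds from merge until the slowest agent honors the new rule."""
    return max(agent_ack_times) - merged_at


def enforcement_coverage(sessions, policy_version):
    """Share of active sessions running the updated policy version."""
    active = [s for s in sessions if s["active"]]
    if not active:
        return None
    covered = sum(1 for s in active if s["policy_version"] == policy_version)
    return covered / len(active)


def evidence_completeness(actions, risk_threshold):
    """Share of actions above the threshold whose proof has every field."""
    risky = [a for a in actions if a["risk_score"] > risk_threshold]
    if not risky:
        return None
    complete = sum(
        1 for a in risky if REQUIRED_PROOF_FIELDS <= set(a.get("proof", {}))
    )
    return complete / len(risky)


def approval_round_trip(approvals):
    """Median seconds between an approval request and its sign off."""
    return median(a["approved_at"] - a["requested_at"] for a in approvals)


def plan_repair_rate(plans):
    """Fraction of disallowed plans that were replanned rather than blocked."""
    disallowed = [p for p in plans if p["disallowed"]]
    if not disallowed:
        return None
    repaired = sum(1 for p in disallowed if p["outcome"] == "replanned")
    return repaired / len(disallowed)
```

Each function returns `None` when there is nothing to measure, so a quiet hour does not read as perfect or as zero coverage on a dashboard.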

The companies that win will reduce compliance latency while improving user satisfaction. They will treat compliance not as a tax but as a competitive feature.

Market outlook: the guardrails stack professionalizes

A market is forming around these needs, and it will not look like a single monolith. Expect layers.

- Policy authoring and verification. Tools for writing machine readable rules, testing them against representative tasks, and simulating impact. Think linter plus model checker for governance objects.

- Runtime enforcement. Sidecars, gateways, and orchestrators that sit between agents and tools. They intercept actions, evaluate context, and allow, modify, or block.

- Telemetry and traces. Systems that collect high cardinality events from plans, prompts, tool calls, and approvals, then produce queryable proofs. The goal is audit ready traces on demand.

- Model risk and drift monitoring. Specialized analytics for distribution shifts, hallucination risk, and injection signatures. They connect to enforcement to trigger safe mode behavior.

- Red team and evaluation labs. Continuous adversarial testing with shared corpora and community benchmarks. Some efforts live in open source and vendor labs, but customers will pay for independent attestations.

The winners will connect this stack to identity, secrets, and ticketing. They will also rethink the business model of assistants, because the runtime must understand incentives and tool access. For a broader view of how assistants mediate supply and demand, see why assistants are marketplaces.

Practical playbook: ship runtime governance in three quarters

You can ship this in three quarters without trying to boil the ocean. Start with a control plane, then build evidence and resilience, then reduce compliance latency.

Quarter 1: establish the control plane

- Inventory agents, tools, data stores, and external models. Map risk zones by action type and data sensitivity.

- Choose a policy representation. It should support variables, tests, and versioning. Treat policies like code with reviews and continuous integration.

- Insert a sidecar between agents and tools. Start by enforcing authentication, time bound tokens, and scoped permissions for each tool.

- Build a minimal telemetry schema. Capture prompts, plans, tool intents, tool results, approvals, and errors. Do not wait for a perfect schema. Version it.
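For the telemetry step, a minimal versioned event type could start as small as the sketch below. The `TraceEvent` fields and the JSON lines output are assumptions made for illustration. The event kinds are the six categories named in the list above.

```python
import json
from dataclasses import asdict, dataclass

SCHEMA_VERSION = 1  # bump when fields change; old traces keep their version

EVENT_KINDS = {
    "prompt", "plan", "tool_intent", "tool_result", "approval", "error",
}


@dataclass(frozen=True)
class TraceEvent:
    session_id: str
    kind: str
    payload: dict
    timestamp: float
    schema_version: int = SCHEMA_VERSION

    def __post_init__(self):
        # Reject unknown kinds early so the trace stays queryable.
        if self.kind not in EVENT_KINDS:
            raise ValueError(f"unknown event kind: {self.kind}")


def to_json_line(event):
    """Serialize one event as a single JSON line with stable key order."""
    return json.dumps(asdict(event), sort_keys=True)
```

Stamping every event with `schema_version` is what lets the schema evolve later without making earlier traces unreadable.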

Quarter 2: build evidence and resilience

- Implement proof carrying actions for the top ten risky operations. Agree on a proof schema and make proofs visible in support tools.

- Add drift monitors. Start with simple checks such as input distribution deltas, refusal phrases in responses, or anomalous tool call patterns. Auto degrade to safer modes when thresholds are crossed.

- Stand up a red team harness. Run it per release and per week. Wire alerts to the same on call rotation that handles production incidents.

- Create a basic incident form that can be populated from traces. Practice with internal drills.
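One simple way to start on the drift monitors in this quarter is to compare the mix of tool calls in a recent window against a baseline. The sketch below uses total variation distance for that comparison, which is a choice made for this example and not something the article prescribes. The function names and the threshold are hypothetical.

```python
from collections import Counter


def distribution(calls):
    """Turn a list of tool names into relative frequencies."""
    counts = Counter(calls)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {tool: n / total for tool, n in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 to 1)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drifted(baseline_calls, window_calls, threshold):
    """True when the recent tool mix has moved past the threshold."""
    distance = total_variation(
        distribution(baseline_calls), distribution(window_calls)
    )
    return distance > threshold
```

A check this crude will miss subtle attacks, but it is cheap enough to run per session and gives the auto degrade path something to trigger on.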

Quarter 3: reduce compliance latency

- Automate policy deployment. Canary new rules to a small agent cohort. Measure plan repair rates and user impact before full rollout.

- Integrate identity and approvals. Use verified sign offs for escalations. Track the human approval round trip as a user experience metric.

- Prebuild regulator templates. If the state asks for an incident report, aim to populate most of it from traces.
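Canarying a rule to a small agent cohort needs a stable way to decide which agents are in the cohort. A common approach, assumed here, is to hash the agent identifier into a bucket. The function names and the version strings are hypothetical.

```python
import hashlib


def in_canary(agent_id, policy_version, percent):
    """Deterministically place roughly `percent` percent of agents in the canary.

    Hashing the version together with the agent id means each new policy
    version draws a different cohort.
    """
    digest = hashlib.sha256(f"{policy_version}:{agent_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def policy_for(agent_id, stable_version, candidate_version, percent):
    """Pick which policy version a given agent should load."""
    if in_canary(agent_id, candidate_version, percent):
        return candidate_version
    return stable_version
```

Raising `percent` from 5 to 100 completes the rollout, and setting it to 0 acts as the rollback. Because assignment is deterministic, an agent does not flip between versions from one session to the next.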

The outcome is not perfection. It is muscle memory. Your organization learns to change agent behavior by editing policy, not by shipping a risky model hotfix.

Edge cases and open questions

- Proof without revealing secrets. How do you publish evidence of safety without leaking prompts, user data, or proprietary methods? Expect more redaction, differential privacy, and third party attestation.

- Cross jurisdiction tuning. A sales agent that operates in California and Texas may need different enforcement. The runtime should load the right policy bundle based on geography, customer type, and task.

- Open models and fine tunes. When customers bring their own models or tools, who carries the duty to maintain guardrails and proofs? Contracts will trend toward shared responsibility matrices with explicit telemetry obligations.

- Benchmark validity. Many red team suites resemble puzzles. Regulators will push for tests tied to real harms and for statistics that hold up. The community will need stronger, standardized evaluation methods and objective datasets.

- Cost of safety. Real time checks and evidence capture add latency and compute. The engineering work is to minimize overhead while maintaining guarantees. That is why compliance latency matters as a product metric.
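The cross jurisdiction item above implies some lookup from context to policy bundle. A minimal sketch, assuming bundles are keyed by region and customer type with the most specific match winning, could look like this. The bundle names and keys are invented, and task type is left out to keep the example short.

```python
# Hypothetical bundle table; None acts as a wildcard for that position.
BUNDLES = {
    ("US-CA", "enterprise"): "ca-enterprise-v3",
    ("US-CA", None): "ca-default-v3",
    (None, None): "global-default-v1",
}


def select_bundle(bundles, region, customer_type):
    """Most specific match wins: exact, then region default, then global."""
    for key in ((region, customer_type), (region, None), (None, None)):
        if key in bundles:
            return bundles[key]
    raise LookupError("no policy bundle configured")
```

Keeping a global default in the table means an agent in an unmapped region still runs under some policy instead of none.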

Acceleration inside the loop

California did not invent safety. It forced a choice. Keep treating governance as a document, or bring the state into the runtime where agents plan and act. The federal debate over industry-led standards shows that national policy will zig and zag. That makes runtime governance more valuable. It lets you adapt to policy variation by updating code, not rewriting handbooks.

The companies that thrive will treat policy as prompt, compliance as a latency to beat, and audit evidence as a first class output. They will build agents that can negotiate constraints in real time, surface proofs as naturally as they show results, and recover gracefully when rules change midday. That is not red tape. It is product quality for a world where law sits inside the loop.

If you want a systems level view of how diverse models help with graceful degradation and safe fallback, see why model pluralism wins. Pair that with the provenance lens above and you have a workable blueprint for runtime governance that can survive new rules without derailing the roadmap.