When Models Must Name Their Diet: Provenance Becomes the Edge

Training transparency just became a competitive advantage. With new EU disclosure rules and U.S. takedown obligations, the edge shifts from model size to source quality. This playbook shows how to build it.

The week provenance went mainstream

If you work on models, circle two dates. On July 24, 2025, the European Commission published an official template that providers of general-purpose AI models use to publicly summarize their training content, with the AI Act's obligations for these providers applying from August 2, 2025. The headline is simple: if you ship a general-purpose model in Europe, you must say what went into it, in a structured way and for everyone to see. According to the Commission's own summary, providers must list the main datasets and top domain names and explain other sources used. That is a sharp turn from hand-waving model cards to auditable training menus, and it is now law-backed transparency, not a best-practice suggestion. See the Commission's announcement of the template for details on scope and timing.

In the United States, May 19, 2025 brought a different but related step. The President signed the TAKE IT DOWN Act, which Congress had passed in April. It criminalizes knowingly publishing nonconsensual intimate images, including AI-generated deepfakes, and requires covered platforms to remove such content within 48 hours of a valid request. This is a takedown regime, not a training disclosure rule, but the combination is telling. The European Union is forcing daylight on model diets. The United States is forcing speed on content provenance and response. The statutory text of the TAKE IT DOWN Act spells out the details.

Put together, these signals say the quiet part out loud: provenance is about to become the competitive frontier. What a model knows will matter less than how it knows it.

From size to sources: an epistemic reset

For a decade, the model race rewarded brute scale. Bigger datasets, bigger clusters, bigger hype. The result was impressive capabilities and brittle trust. Users learned to ask where an answer came from. Creators learned to ask whether their work had been scraped. Enterprises learned to ask what legal baggage might ride inside a model they deploy to customers.

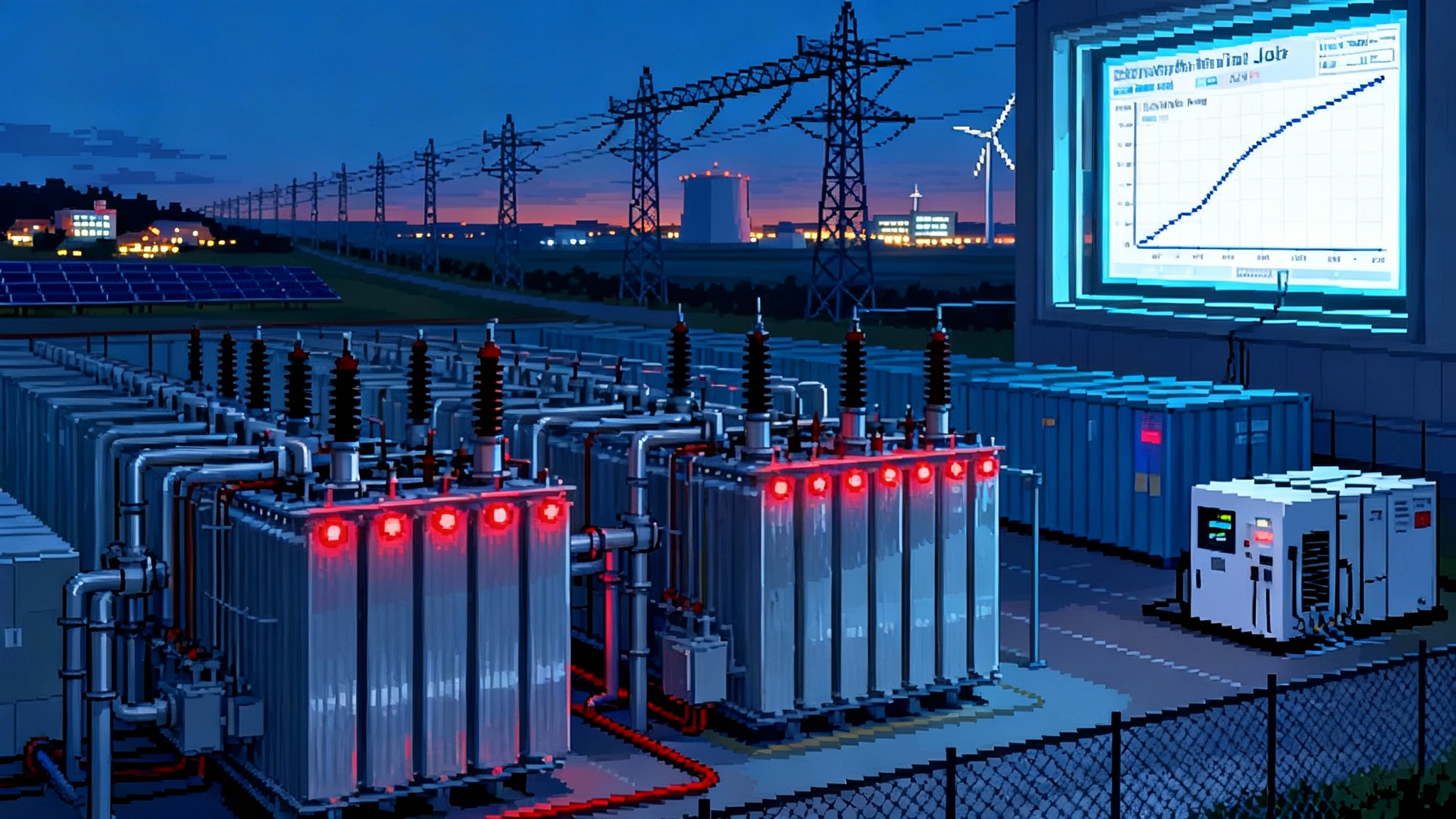

Training transparency flips the default. Instead of silently absorbing the internet, providers must account for their inputs. Think of it as moving from a buffet to a labeled pantry. Your model can still eat plenty, but now the label on the jar matters: Did this text come from a licensed newswire? A public domain corpus? A data cooperative of consenting artists? A web crawl that ignored robots.txt? Under the new European template, you must say that clearly and publicly.

This is not just compliance theater. It resets how we evaluate knowledge in machines. If a model cites a paragraph of legal analysis, a buyer can ask for evidence that its training drew on licensed legal databases rather than forum posts. If it generates a medical explanation, a regulator can ask whether peer-reviewed journals and licensed clinical guidelines were part of the diet, or only speculation from blogs. When training provenance is visible, accuracy claims become auditable, and auditability becomes a product feature.

This shift also plugs into the invisible policy layer that already shapes AI behavior. If you have not considered how rules, defaults, and disclosure shape product power, read our take on the invisible policy stack. Provenance is where that policy layer meets data reality.

The supply chain of meaning

Manufacturing has bills of materials and traceability down to the supplier and lot number. Food has ingredient lists and origin labels. Software has dependency graphs and license scanners. AI is now building its version of these: a supply chain of meaning.

Here is what that looks like in practice:

- A training data bill of materials. A structured summary that enumerates component corpora, major sources by domain, collection periods, and licensing bases. The European template is a start. The next step is machine-readable formats and standardized taxonomies so auditors can compare models apples to apples.

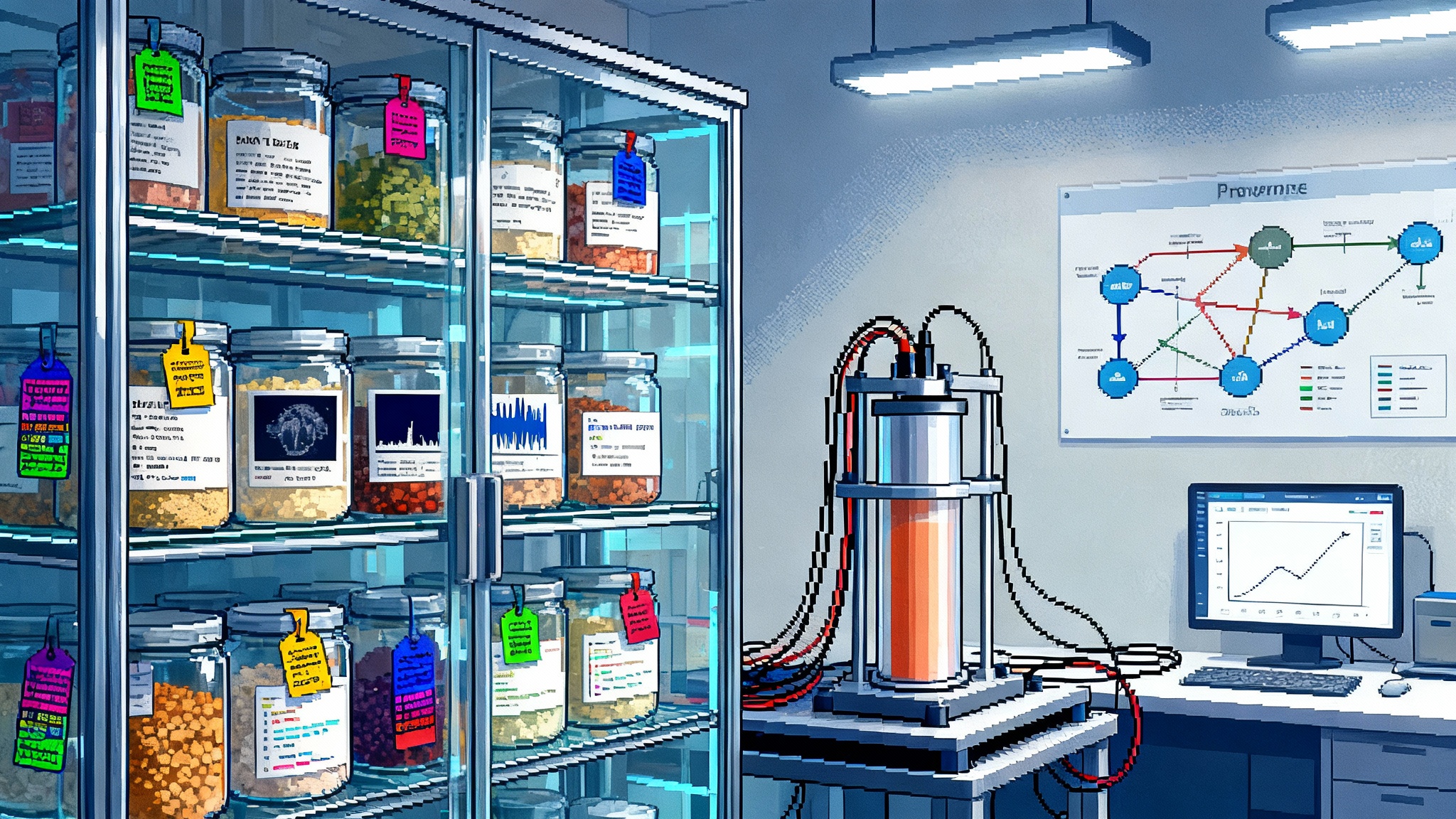

- Authenticity graphs. A graph where each node is an asset and each edge records a transformation. The graph carries cryptographic signatures or robust identifiers, so you can prove that a fine-tuning set came from a licensed library, was filtered by a specific policy, and has not been tampered with since. It is provenance as a living structure rather than a static appendix.

- Licensable corpora. Curated, rights-cleared datasets for verticals like finance, law, medicine, engineering, and education. Providers pay not just for bytes, but for the right to claim, with proof, that the model's competence sits on authoritative foundations.

- Data cooperatives. Creators and communities pool their content, set terms for use, and share revenue. A cooperative contract may say: our members' art may be used to fine-tune a model for stylistic composition, but not to create synthetic replicas of living artists; attribution must be exposed in model citations; revenue shares are computed from usage signals.

The result is a new division of labor. Crawlers are no longer the only source of model food. Supply partners emerge whose business is to maintain clean, labeled, permissioned corpora and feed them into models with durable provenance.
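To make the bill-of-materials idea concrete, here is a minimal Python sketch of a machine-readable training summary. The `Component` fields, the lane names, the placeholder `.example` domains, and the 10% share used to pick top crawled domains are assumptions chosen for illustration; they do not reproduce the official European template.

```python
# Hypothetical machine-readable training-data bill of materials (BOM).
# Field names, lane labels, and the 10% "top domains" share are illustrative
# assumptions, not the schema of the European Commission's template.
import json
import math
from collections import defaultdict
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Component:
    name: str            # corpus identifier
    lane: str            # "licensed", "public_domain", "consented", or "crawl"
    domain: str          # source domain or publisher family
    license_basis: str   # legal basis claimed for use
    collected_from: str  # ISO date the collection window opened
    collected_to: str    # ISO date the collection window closed
    tokens: int          # size contribution


def top_crawled_domains(components, share=0.10):
    """Largest crawled domains by tokens, keeping the top `share` of them (at least one)."""
    sizes = defaultdict(int)
    for c in components:
        if c.lane == "crawl":
            sizes[c.domain] += c.tokens
    ranked = sorted(sizes.items(), key=lambda kv: (-kv[1], kv[0]))
    if not ranked:
        return []
    keep = max(1, math.ceil(len(ranked) * share))
    return [domain for domain, _ in ranked[:keep]]


def to_bom_json(model_version, components):
    """Serialize the BOM: totals per lane, top crawled domains, full component list."""
    tokens_by_lane = defaultdict(int)
    for c in components:
        tokens_by_lane[c.lane] += c.tokens
    doc = {
        "model_version": model_version,
        "tokens_by_lane": dict(tokens_by_lane),
        "top_crawled_domains": top_crawled_domains(components),
        "components": [asdict(c) for c in components],
    }
    return json.dumps(doc, indent=2, sort_keys=True)


# Example inventory with placeholder (.example) domains.
components = [
    Component("newswire-2024", "licensed", "newswire.example", "commercial license",
              "2023-01-01", "2024-12-31", 4_000_000),
    Component("classics", "public_domain", "archive.example", "public domain",
              "2024-03-01", "2024-03-31", 1_500_000),
    Component("crawl-blogs", "crawl", "blog.example", "crawl; opt-outs honored",
              "2024-06-01", "2024-09-30", 2_000_000),
    Component("crawl-forums", "crawl", "forum.example", "crawl; opt-outs honored",
              "2024-06-01", "2024-09-30", 500_000),
]

print(to_bom_json("demo-model-1", components))
```

The same records can feed both the human-readable overview and the machine-readable file, which keeps the two from drifting apart.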

Authenticity graphs, explained simply

If the phrase "authenticity graph" sounds abstract, picture a package tracking map. A parcel moves from warehouse to truck to plane to sorting center to your doorstep. At each hop, someone scans it and a system records the event. An authenticity graph does the same for data. A document moves from its source to a license registry, then into a preprocessing step, then into a training shard, then into a fine-tuning batch. Each hop is recorded with a signature or a strong identifier. When your model emits an answer, attribution techniques can estimate which shards contributed most, and the graph then shows which source families sit underneath those shards.

Today, most providers do a weaker version of this with ad hoc logs and checksum lists. That is not enough when a regulator, an enterprise customer, or a court asks for proof. Authenticity graphs turn "we think so" into "here is the path, and here are the signatures."

Authenticity does not replace retrieval. Retrieval explains what you looked up for a specific answer. Authenticity explains what you learned from and when. Mature products will show both, because both shape user trust.
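The package-tracking analogy maps onto a small data structure. The Python sketch below records each hop as a content-addressed node that points to its parents and carries a signature, then re-verifies the whole chain on demand. It covers only the recording and verification half of the story; estimating which shards influenced a given answer is a separate attribution problem. The class and field names are hypothetical, and HMAC with a shared key is a simplification standing in for proper digital signatures.

```python
# Hypothetical authenticity graph: each node records one hop (source, filter,
# shard, ...) and is content-addressed over its data hash, transform, and parents.
# HMAC-SHA256 stands in for real signatures; a production system would more
# likely use asymmetric keys so auditors can verify without the secret.
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass
class Node:
    transform: str       # what happened at this hop, e.g. "filter:safety-v3"
    parents: tuple       # ids of the nodes this one was derived from
    content_hash: str    # SHA-256 of the asset's bytes
    signature: str       # HMAC over the node id


def _node_id(content_hash, transform, parents):
    record = json.dumps({"content": content_hash, "transform": transform,
                         "parents": list(parents)}, sort_keys=True)
    return hashlib.sha256(record.encode()).hexdigest()


class AuthenticityGraph:
    def __init__(self, key: bytes):
        self._key = key
        self.nodes = {}

    def _sign(self, node_id: str) -> str:
        return hmac.new(self._key, node_id.encode(), hashlib.sha256).hexdigest()

    def add(self, content: bytes, transform: str, parents=()):
        """Record one hop and return its id; parents must already be recorded."""
        for p in parents:
            if p not in self.nodes:
                raise KeyError(f"unknown parent {p}")
        parents = tuple(sorted(parents))
        content_hash = hashlib.sha256(content).hexdigest()
        node_id = _node_id(content_hash, transform, parents)
        self.nodes[node_id] = Node(transform, parents, content_hash, self._sign(node_id))
        return node_id

    def verify(self, node_id: str) -> bool:
        """Recheck ids and signatures for a node and all of its ancestors."""
        stack, seen = [node_id], set()
        while stack:
            nid = stack.pop()
            if nid in seen:
                continue
            seen.add(nid)
            node = self.nodes.get(nid)
            if node is None:
                return False
            if _node_id(node.content_hash, node.transform, node.parents) != nid:
                return False
            if not hmac.compare_digest(node.signature, self._sign(nid)):
                return False
            stack.extend(node.parents)
        return True

    def sources(self, node_id: str):
        """Transforms of the root nodes (hops with no parents) beneath a node."""
        roots, stack, seen = set(), [node_id], set()
        while stack:
            nid = stack.pop()
            if nid in seen:
                continue
            seen.add(nid)
            node = self.nodes[nid]
            if not node.parents:
                roots.add(node.transform)
            stack.extend(node.parents)
        return sorted(roots)


graph = AuthenticityGraph(key=b"demo-key-not-for-production")
src = graph.add(b"article text", "source:licensed-newswire")
clean = graph.add(b"article text", "filter:safety-policy-v3", parents=(src,))
shard = graph.add(b"shard bytes", "shard:0007", parents=(clean,))
print(graph.verify(shard), graph.sources(shard))
```

Because each id covers the transform and the parent ids, editing any upstream record (for example, rewriting which filter ran) changes the recomputed id and makes `verify` fail for everything downstream.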

What changes for builders right now

Training transparency and deepfake takedowns sound like policy stories, but they land directly in engineering backlogs. A practical playbook:

- Inventory your inputs. Treat training data like production dependencies. Build or buy a catalog that tracks source, license, collection time, geographic origin, and any special terms. Make it queryable by model version and by capability area.

- Separate ingestion lanes. Maintain clear lanes for licensed corpora, public domain content, user-contributed data with consent, and web crawls. Apply different filters and retention rules to each lane. This simplifies disclosure and reduces the blast radius of a disputed source.

- Make your training summary public and useful. The European template asks for top domains by size and a description of crawled sources. Do not treat this as a checkbox. Publish a human-readable overview and a machine-readable file. Buyers will reward clarity, and competitors cannot replicate your curation choices overnight just because you disclose them.

- Embed provenance in your pipeline. Generate signed manifests at each step: deduplication, safety filtering, multilingual normalization, chunking, sharding, and curriculum design. Store manifests in append-only logs. You will thank yourself during audits and incident reviews.

- Expose citations in the product. If your model answers a complex question, let users expand a panel that shows the high-level source families used in training for that domain and the references retrieved at inference. Do not pretend retrieval alone solves accountability. Users want to know what the model learned from, not just what it looked up.

- License where it matters. For domains where accuracy and liability are expensive, pay for corpora with strong editorial standards and keep proof of your rights. Savings on unlicensed text pale against the cost of a recall, a lawsuit, or a regulator's injunction.

- Prepare for takedowns with precision. The United States now requires covered platforms to remove nonconsensual intimate imagery, including deepfakes, on a tight clock. Even if your product is not a social network, expect removal requests that target model outputs or training samples. Build processes that verify claims, remove precisely the content at issue, document actions, and avoid collateral deletion that damages model performance.

- Join or form a data cooperative. If you fine-tune on niche communities or creator ecosystems, invite them to set terms, participate, and share upside. It is easier to defend your model's diet when the people who cooked it are partners, not plaintiffs. For broader context on consent and participation, see our view on consent after Anthropic's pivot.
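The takedown step in the playbook above comes down to bookkeeping that is easy to get wrong under time pressure. Below is a minimal Python sketch of precise removal, assuming a catalog that maps each training document to its lane, shard, and content hash; `TakedownDesk`, `CatalogEntry`, and the audit fields are hypothetical names. It removes only exact matches, reports which shards need rebuilding, and logs every request, including ones that match nothing.

```python
# Hypothetical takedown desk: match a reported item against the training
# catalog by exact SHA-256, remove only the matching records, and append an
# audit entry. Exact hashing misses re-encoded or cropped copies; real systems
# would add near-duplicate or perceptual matching, and legal verification of the
# claim itself is out of scope here.
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CatalogEntry:
    doc_id: str
    lane: str      # ingestion lane, e.g. "licensed" or "crawl"
    shard: str     # training shard that contains the document
    sha256: str    # hash of the document's bytes


class TakedownDesk:
    def __init__(self, entries):
        self.catalog = {e.doc_id: e for e in entries}
        self.audit_log = []  # append-only by convention: entries are never edited

    def handle(self, request_id: str, reported_content: bytes):
        """Remove exact matches and return the shards that must be rebuilt."""
        target = hashlib.sha256(reported_content).hexdigest()
        matches = sorted(d for d, e in self.catalog.items() if e.sha256 == target)
        shards = sorted({self.catalog[d].shard for d in matches})
        for d in matches:
            del self.catalog[d]
        self.audit_log.append({
            "request_id": request_id,
            "handled_at": datetime.now(timezone.utc).isoformat(),
            "content_sha256": target,
            "removed_doc_ids": matches,
            "shards_to_rebuild": shards,
            "status": "removed" if matches else "no_match",
        })
        return shards


def h(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


desk = TakedownDesk([
    CatalogEntry("doc-1", "crawl", "shard-02", h(b"reported image bytes")),
    CatalogEntry("doc-2", "crawl", "shard-05", h(b"reported image bytes")),  # duplicate copy
    CatalogEntry("doc-3", "licensed", "shard-02", h(b"licensed article")),
])
print(desk.handle("req-001", b"reported image bytes"))
```

Keeping shard membership in the catalog is what lets you act on `shard-02` and `shard-05` alone while `doc-3` stays untouched; whether you rebuild those shards, filter them at the next training run, or take another remediation route depends on your pipeline.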

Why transparency can accelerate, not slow, iteration

A common fear is that disclosure slows teams, tips secrets to rivals, and hands critics a bat. The opposite is more likely for high performing labs.

- Faster debugging. When you can see your diet, you can fix it. If a model hallucinates about tax law, you can quickly see that your training mix for tax was thin on authoritative sources, adjust, and verify the change in your authenticity graph.

- Safer experimentation. Structured provenance lets you run controlled trials of new data sources, because you can isolate their impact and roll back if they underperform or create policy risk.

- Better partnerships. Enterprises negotiating model purchases will ask for training summaries. If you already publish them, procurement cycles shrink and renewal odds rise. That reduces your cost of sales and increases your learning velocity with real users.

- Stronger hiring and culture. Teams that treat data as a first-class artifact have fewer hidden landmines and a clearer division of responsibilities. That culture compounds.

Secrecy will still matter for optimization tricks and product vision. But secrecy about where your knowledge comes from is becoming a liability. The labs that internalize this early will iterate faster because they spend less time arguing with lawyers and more time improving their models with clean, well-labeled ingredients.
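The tax-law example in the debugging bullet assumes you can measure your diet per capability area. A minimal Python sketch, assuming each catalog record carries a capability-area tag, an ingestion lane, and a token count (all hypothetical fields), flags areas whose share of tokens from authoritative lanes falls below a threshold. Which lanes count as authoritative, and the 30% cutoff, are illustrative choices.

```python
# Hypothetical diet audit: per capability area, what share of training tokens
# came from lanes treated as authoritative? Lane names, the authoritative set,
# and the 30% threshold are illustrative assumptions.
from collections import defaultdict

AUTHORITATIVE_LANES = {"licensed", "public_domain"}


def thin_areas(token_counts, threshold=0.30):
    """token_counts: iterable of (area, lane, tokens) tuples.

    Returns {area: authoritative_share} for areas whose share falls below
    `threshold`, ordered from thinnest to thickest.
    """
    total = defaultdict(int)
    authoritative = defaultdict(int)
    for area, lane, tokens in token_counts:
        total[area] += tokens
        if lane in AUTHORITATIVE_LANES:
            authoritative[area] += tokens
    shares = {area: authoritative[area] / total[area] for area in total if total[area]}
    flagged = [(area, s) for area, s in shares.items() if s < threshold]
    return dict(sorted(flagged, key=lambda kv: kv[1]))


mix = [
    ("tax_law", "licensed", 1_000_000),
    ("tax_law", "crawl", 9_000_000),
    ("medicine", "licensed", 6_000_000),
    ("medicine", "crawl", 4_000_000),
]
print(thin_areas(mix))
```

Running the same audit before and after adding a new source is one way to confirm that a change actually moved the mix for the area you meant to fix.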

What it means for creators and rights holders

Training transparency signals a real market for rights and reputation.

- Clearer opt-in and opt-out. Public training summaries make creator preferences easier to enforce. If a publisher's content appears in the top domain list, there is a paper trail to seek a license or to demand removal.

- New revenue models. Data cooperatives can set differentiated prices. A cooperative of technical writers might charge more for code documentation with superb clarity and coverage. A news archive might price by freshness and topic rarity. Rights become productized.

- Attribution that matters. Even when training uses derived features rather than verbatim text, authenticity graphs can credit source families. Expect to see dashboards where a publisher sees how often a model's answers draw on its domain and gets paid accordingly.
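Payment by usage, as described for cooperatives and publisher dashboards, eventually reduces to dividing a pool by attribution counts. This Python sketch assumes those counts already exist (producing them is the hard part) and uses the largest-remainder method, one common way to keep integer-cent payouts summing exactly to the pool. The function name and member names are hypothetical.

```python
# Hypothetical cooperative payout: split a revenue pool (in cents) pro rata to
# attribution counts, using the largest-remainder method so the payouts sum
# exactly to the pool. How attribution counts are measured is out of scope.


def split_pool(pool_cents: int, attributions: dict) -> dict:
    total = sum(attributions.values())
    if total == 0:
        return {member: 0 for member in attributions}
    payouts, remainders = {}, []
    for member, count in attributions.items():
        share, remainder = divmod(pool_cents * count, total)
        payouts[member] = share
        remainders.append((remainder, member))
    leftover = pool_cents - sum(payouts.values())
    # Give the leftover cents to the largest remainders; ties go alphabetically.
    for _, member in sorted(remainders, key=lambda rm: (-rm[0], rm[1]))[:leftover]:
        payouts[member] += 1
    return payouts


print(split_pool(10_000, {"news_archive": 1, "writers_coop": 1, "code_docs": 1}))
```

Integer arithmetic avoids floating-point drift, and the deterministic tie-break means the same inputs always produce the same payouts, which matters when members audit their statements.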

Creators should also watch how provenance interacts with product distribution. Assistants are quickly becoming discovery surfaces, and distribution models will reward trustworthy sources. For a deeper look at how this affects platforms and publishers, read our analysis of why assistants are marketplaces.

The enterprise buyer's checklist

If you are buying or integrating models, start asking for the following, and expect good vendors to be ready:

- A public training summary aligned with the European template, plus a machine-readable version.

- A description of authenticity graph coverage across the pipeline, including how manifests are signed and stored.

- A list of licensed corpora by domain, with evidence of rights and update cadence.

- Output provenance features in the product, including citations and retrieval transparency.

- A takedown and correction policy that balances speed with precision and keeps a full audit trail.

- Metrics that go beyond benchmarks. Ask for a trust score that blends factuality tests with provenance coverage. A model that is one point lower on a generic leaderboard but built on traceable sources may perform better for your risk profile.
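The checklist's trust score is not a standard metric, so any formula is a choice. One simple reading, sketched in Python under stated assumptions, blends factuality-test accuracy with provenance coverage; the field names, the 0.4 weight, and the vendor figures are all hypothetical. In the example, the vendor one point lower on factuality still ranks higher once traceability counts.

```python
# Hypothetical trust score: a weighted blend of factuality-test accuracy and
# provenance coverage (share of training tokens with verifiable lineage).
# The 0.4 weight is an illustrative assumption; pick it to match your risk profile.
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorReport:
    name: str
    factuality: float    # fraction of factuality test items answered correctly (0 to 1)
    traced_tokens: int   # training tokens covered by signed lineage records
    total_tokens: int    # all training tokens the vendor reports

    @property
    def provenance_coverage(self) -> float:
        return self.traced_tokens / self.total_tokens if self.total_tokens else 0.0


def trust_score(report: VendorReport, provenance_weight: float = 0.4) -> float:
    if not 0.0 <= provenance_weight <= 1.0:
        raise ValueError("provenance_weight must be between 0 and 1")
    return ((1 - provenance_weight) * report.factuality
            + provenance_weight * report.provenance_coverage)


vendor_a = VendorReport("vendor_a", factuality=0.82, traced_tokens=35, total_tokens=100)
vendor_b = VendorReport("vendor_b", factuality=0.81, traced_tokens=90, total_tokens=100)
for v in (vendor_a, vendor_b):
    print(v.name, round(trust_score(v), 3))
```

Setting `provenance_weight` to 0 recovers a plain factuality ranking, so the weight makes your risk preference explicit rather than hiding it in the benchmark choice.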

When vendors can meet these asks, your procurement process becomes faster and the product surface becomes more predictable. The same disclosure that skeptics worry will slow sales can cut cycles because legal and security teams have something concrete to review.

What the next year will bring

Expect three visible shifts as the European obligations bite and U.S. takedown enforcement normalizes.

- Pricing will reflect provenance. The market will pay a premium for models trained on high-quality, rights-cleared corpora with strong authenticity graphs. That premium will look modest next to the cost of noncompliance or brand damage.

- Benchmarks will add traceability. Leaderboards that today track reasoning and coding will add provenance coverage and citation quality. Vendors will boast not just of tokens and parameters, but of licensed tokens and cited answers.

- Disclosure will stop being optional public relations. Once a few top labs publish training summaries that are specific, legible, and defensible, buyers will demand the same from everyone. Black-box providers will look dated and risky, even if their raw performance remains high.

These shifts ripple into strategy. Model providers that invest early in authenticity graphs will win partnerships that stay closed to black-box competitors. Enterprises will start to treat provenance coverage as a control surface, much like service level objectives. And independent creators will have a more durable path to participate and get paid.

Do not wait for a subpoena to find your sources

There is a deeper reason to treat training transparency as an epistemic reset. Models are not only tools. They are institutions of knowledge. Institutions earn authority by showing their work. Scientists publish methods and data. Journalists cite sources and documents. Courts maintain records. If models want a seat at that table, they must do the same.

The new rules force the first step. The winning labs will take the next ones voluntarily. They will ship authenticity graphs that withstand adversarial review. They will license corpora that resonate with the tasks their customers care about and will explain why. They will partner with creators and institutions to build data cooperatives that elevate both accuracy and legitimacy.

Most of all, they will design products that let users see knowledge as a path, not a magic trick. That is the shift from size to sources. It is also the bridge to a healthier ecosystem where consent, compensation, and reliability are not afterthoughts. If you are building that future, keep your policy stack, your distribution strategy, and your provenance story aligned. Our earlier arguments on the invisible policy stack remain a useful map for that alignment.

The bet is straightforward. A model that can name its diet will learn faster, face fewer roadblocks, and earn more trust. In a market where the hardest problems are not solved by scale alone, that is the edge that compounds.