Idle Agents Are Here: Systems That Keep Working Offscreen

A late September surge of agent updates marked a shift from chat-first tools to time-native systems that keep working between check-ins. Learn how open-loop design reshapes UX, pricing, and trust.

The week screens went quiet and agents kept going

In late September 2025, a wave of product updates from leading labs elevated a simple idea: the important parts of an agent often happen when no one is watching. New computer-use features let models operate apps and desktops directly. Agentic coding modes turned code assistants into planners that scaffold tasks, run tests, retry, and ship. Together, these shifts mark a move away from chatty, single-shot tools toward time-native systems that persist, pursue goals, and improve between check-ins.

Think of the old pattern as call and response. You ask for a draft or a snippet and get a one-off answer. The new pattern looks like delegation. You set a goal, set a budget, and the agent keeps working while you live your life. It schedules retries, forages for context, reconciles conflicts, and returns with a result plus a trace of how it got there. The screen can stay dark. The work does not.

This is a quietly accelerationist moment. The competitive edge no longer belongs only to model size. It lives in process design. Teams that learn to run agents as ongoing services will compound faster than teams that only prompt for one-offs.

From chatbots to time-native systems

Time-native systems treat time as a first-class resource. They plan, pause, resume, and reflect. Instead of stuffing everything into one giant prompt, they:

- Maintain a working memory of tasks, constraints, and partial outputs.

- Schedule jobs, timeouts, and retries based on telemetry and cost.

- Use tools to read and act in other software, including keyboards, files, terminals, and browsers.

- Reflect on failures and adjust the plan before asking a human for help.

A useful metaphor is a sous-chef. A chatbot is like asking a cook for a single recipe card. A time-native agent is a sous-chef that keeps prepping, checking the pantry, tasting, and plating while you step away. It does not wait idly for every instruction. It moves the work forward, keeps a clean station, and flags exceptions.

What an idle agent really does

Idle is the wrong word. These agents are active in the background. Imagine a growth operations agent with three goals for the week: refresh the ideal customer profile, identify one hundred net-new accounts, and draft outreach that matches current campaigns.

- Monday morning it gathers fresh product analytics and support tickets, then updates the profile using a playbook your team approves.

- Monday afternoon it queries your data warehouse and third-party sources, pulls a candidate list, deduplicates against the CRM, and scores each account.

- Overnight it drafts outreach variations, runs them through a brand filter, and schedules a review.

- If a scoring feature changes, it reruns the list. If an email provider rate limits, it backs off and reschedules. If a competitor changes pricing, it triggers a data refresh.

By Wednesday you get artifacts, not chat. You see new segments, a vetted list, and draft copy, plus a timeline of what happened and why.

Why open loop beats single shot

Open loop does not mean uncontrolled. It means the loop is wider than a single prompt and response. The loop spans time, tools, and multiple passes of reflection. That wider loop matters because real work is rarely atomic. Networks time out. Data freshness decays. Approvals take a day. Open-loop agents treat those realities as design inputs, not accidents to be hand-waved away in a demo.

If we want these systems to help at scale, we should cultivate four design choices.

1) Transparent logs

Every agent run should leave a human-readable trail. That trail includes plans, tool calls, inputs, outputs, reflections, timestamps, and cost. It should make failure modes obvious. A transparent log does three jobs at once. It makes review fast. It creates training data for improvements. It builds trust with auditors and customers. Clear lineage also reinforces the ideas in our piece on law inside the agent loop, where governance becomes a runnable asset.
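To make the trail concrete, here is a minimal sketch of one log record written as a JSON line. The `LogEntry` class, its field names, and the `total_cost` helper are illustrative assumptions, not a standard schema; the fields simply mirror the list above.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class LogEntry:
    """One step in an agent run. Field names are hypothetical."""
    run_id: str
    kind: str       # e.g. "plan", "tool_call", "reflection"
    summary: str    # short human-readable description of the step
    inputs: dict = field(default_factory=dict)
    outputs: dict = field(default_factory=dict)
    cost_usd: float = 0.0
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json_line(self) -> str:
        # One JSON object per line keeps the log easy to search and export.
        return json.dumps(asdict(self), sort_keys=True)


def total_cost(entries: list) -> float:
    """Sum the recorded cost across the entries of a run."""
    return round(sum(e.cost_usd for e in entries), 6)
```

Because each record carries its own cost and timestamp, the same file can serve review, evaluation, and billing without a second bookkeeping system.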

2) Sandboxed autonomy

Autonomy should be scoped by capability, identity, and environment. The agent should have a clear role and a constrained set of tools. It should operate in a sandboxed workspace with synthetic data where possible, redacted data where necessary, and strict boundaries between write, edit, and delete. If it needs to hop a boundary, it should request a signed escalation with a reason. Treat permissions like code commits: reviewed, logged, and reversible.
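One way to picture scoped autonomy is a grant that names a role, a tool, a set of operations, and an expiry. The sketch below is an assumption about how such a check could look; `Grant` and `is_allowed` are hypothetical names, and a real system would also log each decision and route denials to the escalation path.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Grant:
    """A scoped, time-bounded permission (hypothetical shape)."""
    role: str
    tool: str
    operations: frozenset   # e.g. frozenset({"read", "write"})
    expires_at: datetime


def is_allowed(grants, role, tool, operation, now):
    """Allow only if some unexpired grant covers this role, tool, and operation."""
    return any(
        g.role == role
        and g.tool == tool
        and operation in g.operations
        and now < g.expires_at
        for g in grants
    )
```

Anything that falls outside every grant is denied by default, which is the point where the agent would request an escalation with a reason.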

3) Right to halt

Humans need a big red button that stops the agent quickly and cleanly, and a small green button that resumes with context intact. Halt should be atomic. It should freeze job queues, revoke tokens, pause schedules, and checkpoint state within seconds. The right to halt is not an afterthought. It is part of user experience, part of compliance, and part of psychological safety for teams.
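A toy version of halt and resume might look like the following. This is a sketch under simplifying assumptions: `AgentRuntime` is a hypothetical in-memory runtime, the "queue" is a plain list, and "revoking tokens" just clears a set. The part worth noticing is that halt takes one lock and does the freeze, checkpoint, and revoke together.

```python
import threading
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentRuntime:
    """In-memory stand-in for an agent runtime; all names are hypothetical."""
    queue: list = field(default_factory=list)    # pending jobs
    tokens: set = field(default_factory=set)     # live credentials
    checkpoint: Optional[dict] = None
    _halted: threading.Event = field(default_factory=threading.Event)
    _lock: threading.Lock = field(default_factory=threading.Lock)

    def halt(self) -> None:
        # Everything happens under one lock so no job can start mid-halt.
        with self._lock:
            self._halted.set()
            self.checkpoint = {"queue": list(self.queue)}
            self.tokens.clear()

    def resume(self, fresh_tokens) -> None:
        # Restore the checkpointed queue and issue new credentials.
        with self._lock:
            if self.checkpoint is not None:
                self.queue = list(self.checkpoint["queue"])
            self.tokens = set(fresh_tokens)
            self._halted.clear()

    def run_next(self):
        # Returns the next job, or None when halted or idle.
        with self._lock:
            if self._halted.is_set() or not self.queue:
                return None
            return self.queue.pop(0)
```

Resume deliberately requires fresh tokens rather than reusing the old ones, so a halt always leaves the agent without working credentials.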

4) Outcome-based metering

If an agent works over hours or days, billing by tokens alone misrepresents value. Meters should align to outcomes and uptime. Charge for successful tasks, verified deliverables, and service-level objectives. Show customers the runbook, the time, the retries, and the cost. Tie spend to artifacts they can actually use.

How this reframes product design

Work continues after the chat ends, so we need new user experience patterns that expose process without overwhelming users. Three patterns are emerging.

Pattern 1: Flight deck for ongoing work

Instead of a chat thread, show a flight deck with missions, phases, and statuses. Each mission has a plan, a live timeline, an energy gauge for budget, and a health score for data freshness. A user can pause, resume, or reprioritize. They can click into any phase and see its tools, inputs, and outputs. Treat the flight deck like a pilot’s view, not a social feed.

Pattern 2: Signed actions and guardrails

Give users the ability to set signatures for classes of actions. For example: write access to a code repository only with unit tests and static checks passing. Calendar edits only within work hours. Payments only under a set threshold. The agent proceeds without interrupting when actions meet the signed contract. It halts and requests approval when they do not. This mirrors how we think about verifiable service envelopes in confidential AI with proofs.
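The three example contracts above can be sketched as a small rule table. Everything specific here is an assumption for illustration: the `Action` shape, the 09:00 to 17:00 work hours, and the 500 payment threshold are placeholders a team would set for itself.

```python
from dataclasses import dataclass
from datetime import time
from typing import Callable


@dataclass(frozen=True)
class Action:
    kind: str
    params: dict


def within_work_hours(action: Action) -> bool:
    # Assumed work hours; params["start"] is a datetime.time.
    return time(9, 0) <= action.params["start"] <= time(17, 0)


# Hypothetical signed contract: action kind -> rule that must hold.
CONTRACT: dict[str, Callable[[Action], bool]] = {
    "repo_write": lambda a: bool(
        a.params.get("tests_passed") and a.params.get("static_checks_passed")
    ),
    "calendar_edit": within_work_hours,
    "payment": lambda a: a.params["amount"] <= 500,  # assumed threshold
}


def decide(action: Action, contract=CONTRACT) -> str:
    """Proceed only when a signed rule exists and holds; otherwise ask."""
    rule = contract.get(action.kind)
    if rule is None or not rule(action):
        return "needs_approval"
    return "proceed"
```

Unknown action kinds fall through to approval, so adding a new tool never silently widens what the agent may do on its own.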

Pattern 3: Debuggable artifacts over chatty output

Replace verbose text with debuggable artifacts. For code, show diffs and test results. For analysis, show the query, the slice of data, and the chart. For outreach, show the segment criteria and the copy with tracked edits. Add a reply box for feedback that updates the plan. The goal is to reduce time-to-trust by making the artifact self-explanatory and the pathway retraceable.

Architecture for patience

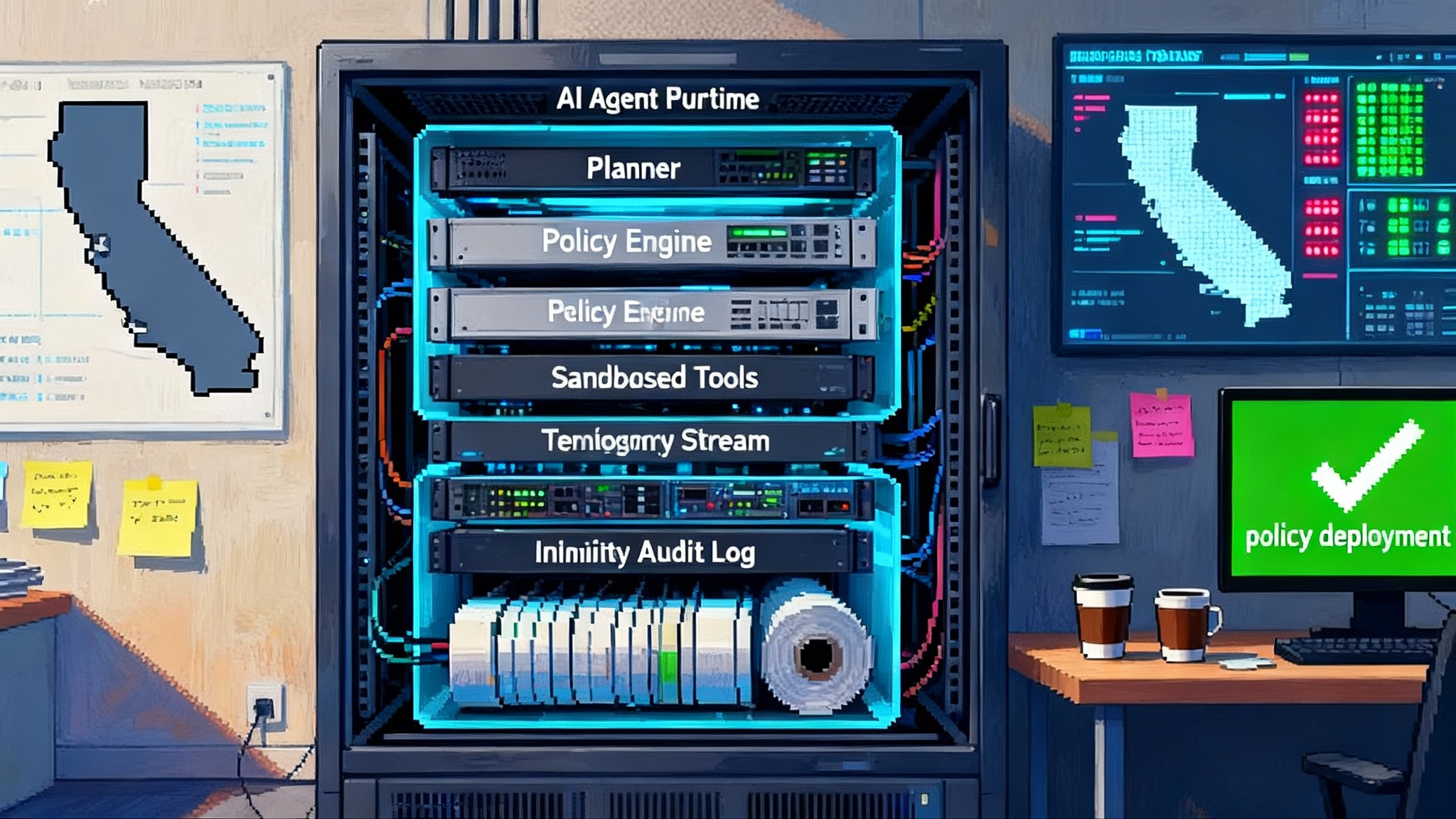

To support open-loop work, you need a few core components.

- Event log. Treat the agent as an event-sourced system. Every tool call, observation, and decision becomes an append-only event. You can replay state at any point, which makes audits and debugging practical.

- Scheduler. A job scheduler with backoff, timeout, and catch-up semantics. Jobs must survive restarts, network blips, and model updates. A scheduler that respects quiet hours and staggered retries prevents thundering herds.

- Memory. Separate long-term knowledge, short-term scratchpads, and shared team context. Use cheap storage for logs and artifacts, and reserve the model context window for the smallest actionable slice. Memory should be versioned so that you can align artifacts with the knowledge that produced them.

- Policy engine. Apply capability gating, rate limits, approvals, and budget controls in one place. Policies should be readable, testable, and deployable like code. Policies should also emit their own logs so that you can audit not just outcomes but the rules that shaped them.

- Safe evaluation. Build offline evaluation harnesses. Generate test cases from logs. Simulate tool failures and confirm that the agent pauses, asks for help, or rolls back. Use synthetic runs to probe edge cases before they show up on production data.
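The event log item above is the one the others lean on, so it is worth a small sketch. This assumes an in-memory, append-only list and three made-up event kinds (`task_added`, `task_done`, `spent`); a production log would live in durable storage, but the replay idea is the same.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    seq: int
    kind: str       # hypothetical kinds: "task_added", "task_done", "spent"
    payload: dict


class EventLog:
    """Append-only log; state is always derived by replaying events."""

    def __init__(self):
        self._events = []

    def append(self, kind, payload):
        event = Event(len(self._events), kind, dict(payload))
        self._events.append(event)
        return event

    def replay(self, upto=None):
        """Rebuild state from the start through sequence number `upto`."""
        state = {"open": set(), "done": set(), "spent": 0.0}
        for event in self._events:
            if upto is not None and event.seq > upto:
                break
            if event.kind == "task_added":
                state["open"].add(event.payload["task"])
            elif event.kind == "task_done":
                state["open"].discard(event.payload["task"])
                state["done"].add(event.payload["task"])
            elif event.kind == "spent":
                state["spent"] += event.payload["usd"]
        return state
```

Calling `replay(upto=n)` answers the audit question "what did the agent believe at step n", without keeping separate snapshots.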

With these pieces, patience becomes a feature. The agent can wait, watch, and try again. You stop paying for repeated human interventions. You start paying for a reliable process. You also put less pressure on raw infrastructure because steady retries beat panicked bursts, a point that rhymes with our view that watts, permits, land, and leverage set the floor for the next compute era.
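"Steady retries" usually means capped exponential backoff with randomness, so that many stalled jobs do not all wake at once. Here is a minimal sketch of that idea, using the variant often called full jitter. The function name and the default base and cap values are assumptions.

```python
import random


def backoff_delays(attempts, base=1.0, cap=60.0, rng=None):
    """Return one randomized delay (in seconds) per retry attempt.

    The ceiling doubles each attempt up to `cap`, and each delay is drawn
    uniformly between zero and that ceiling.
    """
    if rng is None:
        rng = random.Random()
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap, base * (2 ** attempt))
        delays.append(rng.uniform(0, ceiling))
    return delays
```

Passing a seeded `random.Random` makes the schedule reproducible, which helps when replaying a run during an audit.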

Pricing that matches reality

Tokens are an input. Time-native agents create value over time. Two pricing models make this legible.

- Uptime plans for background services. Charge a base fee for agent presence and monitoring. Include a pool of compute, storage, and tool calls. Offer tiers for higher uptime, faster retries, and richer telemetry. Make the tiers transparent so customers can see what increases cost and why.

- Outcome fees for verified deliverables. Charge per merged pull request that passes review, per account list that meets recall and precision thresholds, per meeting booked and attended, per report accepted by a manager. Publish the rubric. Let customers audit results against the rubric. Share acceptance rates and defect rates over time.

Vendors that meter both uptime and outcomes will be easier to trust. Customers will see where money goes and what it buys. This also reduces the temptation to inflate outputs to rack up token counts. The incentive shifts to fewer, better artifacts that meet a clear bar.
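As a rough illustration of metering both, the sketch below builds an invoice from a base uptime fee plus a fee for each accepted deliverable. The prices, the deliverable kinds, and the `invoice` function are invented for the example, and amounts are in integer cents to avoid rounding surprises.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deliverable:
    kind: str        # e.g. "merged_pr", "account_list"
    accepted: bool   # did it pass the published rubric?


def invoice(base_fee_cents, outcome_prices_cents, deliverables):
    """Itemize the uptime fee plus fees for accepted deliverables only."""
    lines = {"uptime_base": base_fee_cents}
    for d in deliverables:
        if not d.accepted:
            continue  # rejected work is never billed
        lines[d.kind] = lines.get(d.kind, 0) + outcome_prices_cents[d.kind]
    lines["total"] = sum(lines.values())
    return lines
```

The itemized lines are the point: a customer can see how much went to presence and how much to each kind of accepted artifact.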

Trust moves from tone to trace

In a chat-first world, trust often collapses into tone and perceived expertise. In an open-loop world, trust is traceable. Teams will ask four questions of any agent vendor:

- Can we replay what happened and reproduce the result with a frozen seed and the same tools?

- Can we measure data lineage for every artifact the agent touched or produced?

- Can we see the safety envelope that prevented destructive actions, and the exact conditions of any escalation?

- Can we export logs and artifacts to our own storage and keep them if we leave?

The right answer is yes to all four. That yes is the difference between a demo and a system. It is also how you make regulators and risk teams comfortable with software that acts over time. When governance is part of runtime rather than a paper checklist, you end up with verifiable accountability, not vibes.

Practical playbooks to run now

You do not need a moonshot to benefit from idle agents. Start with scoped, high-signal tasks and treat them like services your team owns.

- Agentic coding sprints. Let an agent manage a queue of small issues. Require tests, enforce code style, and run static analysis. Use diffs as the artifact. Review, merge, repeat. Track acceptance rate to drive learning.

- Vendor due diligence. Point an agent at a list of vendors. It reads security pages, privacy policies, and compliance documents, extracts key fields, and flags gaps. Analysts review a standard report and spot check sources. Keep all links with snapshots in the log for later audits.

- Finance close prep. The agent gathers documents, reconciles transactions within a tolerance, and drafts variance notes. Accountants review exceptions. The log becomes the audit trail. Over time, the agent learns tolerances by account type and seasonality.

- Calendar and travel concierge. It proposes schedules within guardrails, books travel under caps, and keeps a log of holds and releases. It asks for approval when policies are exceeded. Add a clear rollback path for bookings.

Each playbook uses the same spine. A plan, a schedule, a set of tools, a policy envelope, and a log. The rest is domain logic and data sources.

Failure modes to design away

Open-loop systems can fail in new ways. Expect these mistakes and plan for them.

- Endless nibbling. The agent keeps improving a draft without a stop rule. Fix this with satisficing thresholds, max iterations, and a decreasing budget curve.

- Scope creep via tool spread. The agent acquires new tools and permissions over time. Fix this with capability reviews, signed scopes, and time-bounded grants.

- Context rot. The world changes under a long run. Fix this with freshness checks and scheduled re-evaluation of assumptions.

- Silent stalls. A job hangs with no signal. Fix this with heartbeats, watchdog timers, and auto-halt if the agent cannot report.

- Forgotten costs. Background work accumulates spend in quiet corners. Fix this with all-in unit economics per artifact and alerts tied to budget burn rate.
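The fix for endless nibbling can be sketched in a few lines. This is one possible reading, with assumed names and numbers: the loop stops when the draft is good enough, when the iteration cap is hit, or when a geometrically shrinking per-step allowance drops below the cost of another step.

```python
def refine(draft, score, improve, threshold=0.9, max_iters=5,
           first_step_budget=4.0, decay=0.5, step_cost=1.0):
    """Improve `draft` until one of three stop rules fires.

    Returns (draft, reason), where reason is "good_enough",
    "budget_exhausted", or "max_iters".
    """
    for i in range(max_iters):
        if score(draft) >= threshold:
            return draft, "good_enough"
        # The allowance for step i shrinks each round (decreasing budget curve).
        if first_step_budget * (decay ** i) < step_cost:
            return draft, "budget_exhausted"
        draft = improve(draft)
    reason = "good_enough" if score(draft) >= threshold else "max_iters"
    return draft, reason
```

Returning the reason alongside the draft matters as much as stopping: it lets the log record why the agent quit, not just that it did.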

None of these require exotic research. They require engineering discipline. That is exactly why teams that invest in process will outpace those that chase only model benchmarks.

A minimal checklist before you ship

Use this as a preflight before you let an agent work while you sleep.

- Purpose. The goal is crisply defined as a testable contract, not a vibe.

- Policy. Capabilities, tools, budgets, and escalation paths are declared in one readable file.

- Logs. Every action, input, output, and model reflection is recorded and exportable.

- Halt. There is a one-click stop that is provably fast and safe. Resume is supported and restores state.

- Audit. You can replay runs with seeds and tool versions pinned.

- Metrics. You track completion rate, time to completion, cost per artifact, and human acceptance rate.

- Security. Data is redacted or synthesized wherever possible. Real credentials are scoped and rotated.

- Evaluation. You run offline tests from real logs against a suite of failure scenarios.

- Ownership. A named human owns the agent’s service level and is accountable for triage and updates.

If you cannot check these boxes, keep the agent on a short, supervised leash.
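The Metrics item in the checklist is easy to compute once runs are logged. The sketch below assumes a hypothetical `Run` record and uses the median for time to completion; cost per artifact is all-in, so spend on failed runs still counts.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Run:
    completed: bool
    minutes: float    # wall-clock duration of the run
    cost_usd: float
    artifacts: int    # artifacts produced
    accepted: int     # artifacts a human accepted


def summarize(runs):
    """Compute the four checklist metrics; None where there is no data."""
    done = [r for r in runs if r.completed]
    artifacts = sum(r.artifacts for r in runs)
    return {
        "completion_rate": len(done) / len(runs) if runs else None,
        "median_minutes_to_completion":
            median(r.minutes for r in done) if done else None,
        "cost_per_artifact":
            sum(r.cost_usd for r in runs) / artifacts if artifacts else None,
        "acceptance_rate":
            sum(r.accepted for r in runs) / artifacts if artifacts else None,
    }
```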

Why patience beats raw IQ

Models will keep improving. That is a given. The durable moat is patience. Patience is the capacity to structure work in time, to accept that ten steps with reflection beat one giant leap, and to build systems that try again tomorrow in a principled way.

Patience shows up as clean event logs, as calm backoff when an API fails, as a scheduler that does not wake the whole cluster for a trivial retry, as a policy that asks for the right approval at the right moment. Patience is quiet. It is easy to miss on a demo day. It is how real work gets done at scale.

Teams that invest in patient process will see two compounding effects. First, they will ship reliable agents faster because every failure produces targeted improvements. Second, they will earn trust faster because customers can see and audit the trail. The result is not louder chat. The result is dependable outcomes that arrive even when the office is dark.

The invitation

The September wave showed that agents are ready to work in the background. The next advantage belongs to teams that treat agents like services. Give them transparent logs. Confine them to sandboxes. Put a right-to-halt in primary navigation. Meter what matters, which is outcomes and uptime. Then teach your organization to value patience and process as much as intellect.

It is time to design for the quiet hours. Close the laptop and let the work continue. When you look back, the value will not be in clever prompts. It will be in the calm, auditable, open-loop systems you built that kept moving while the screen was off.