When Trust Becomes Compute: Confidential AI with Proofs

AI privacy is shifting from policy to runtime. Attestation now gates execution and models return proof-carrying outputs with every result. That turns trust into compute and unlocks private assistants, verifiable agents, and capital-safe automation.

Trust moves into the compute path

For a decade, privacy in AI was mostly paperwork. We drafted policies, signed data processing agreements, and relied on trust in systems we could not inspect. In 2025 the center of gravity shifts. Trust is becoming part of the runtime. The environment proves itself before code starts, and the program proves what it did before anyone acts on the result.

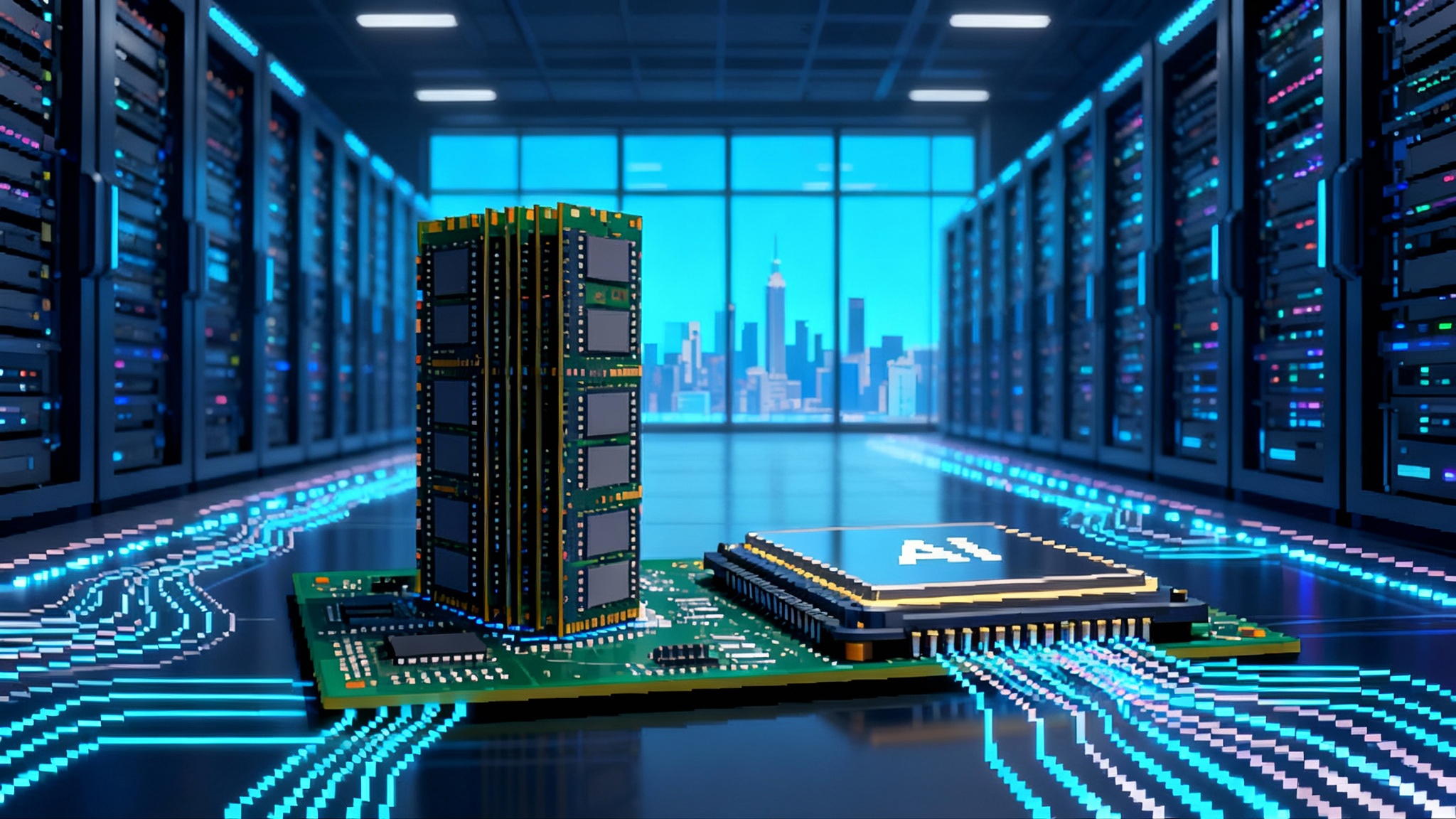

Two forces make this possible. First, confidential computing has arrived on accelerators. Nvidia’s Hopper H100 introduced the first widely available GPU trusted execution environment (TEE) with device identity, measured boot, and remote attestation. You can inspect the details in Nvidia’s engineering note on confidential computing on H100 GPUs. Second, zero-knowledge proof stacks matured from research projects into usable software. zkVMs and zkML toolchains can now produce proofs that are small and quick to verify, showing that a computation was carried out correctly without revealing the inputs.

The result is a practical change in how we run AI. Instead of trusting the operator, you verify the machine and you verify the math. Privacy stops being a promise and becomes a measurable property of the system.

Why 2025 is the turning point

Cloud platforms crossed the gap from pilot to platform. Confidential virtual machines that attach H100s are now offered by major clouds, with attestation increasingly scripted instead of bespoke. Microsoft moved this forward with Azure confidential VMs with H100 GPUs reaching general availability in September 2024, a clear signal that production workloads are in scope.

At the same time, the next generation of accelerators is being designed with security in the data path. Vendors are prioritizing secure I/O and near-parity performance between confidential and normal modes. The GPU is no longer only a math engine. It is also a root of trust.

Proof tooling followed a similar arc. Teams building verifiable compute released faster provers, added GPU acceleration to proof generation, and improved compatibility with mainstream ML graphs. Instead of rewriting models in a domain-specific language, developers can compile standard programs to a proof-friendly representation and aggregate proofs across a pipeline.

Put together, these shifts create a new baseline. You can make the runtime prove its identity, and you can make the result prove its provenance.

From policy to a runtime contract

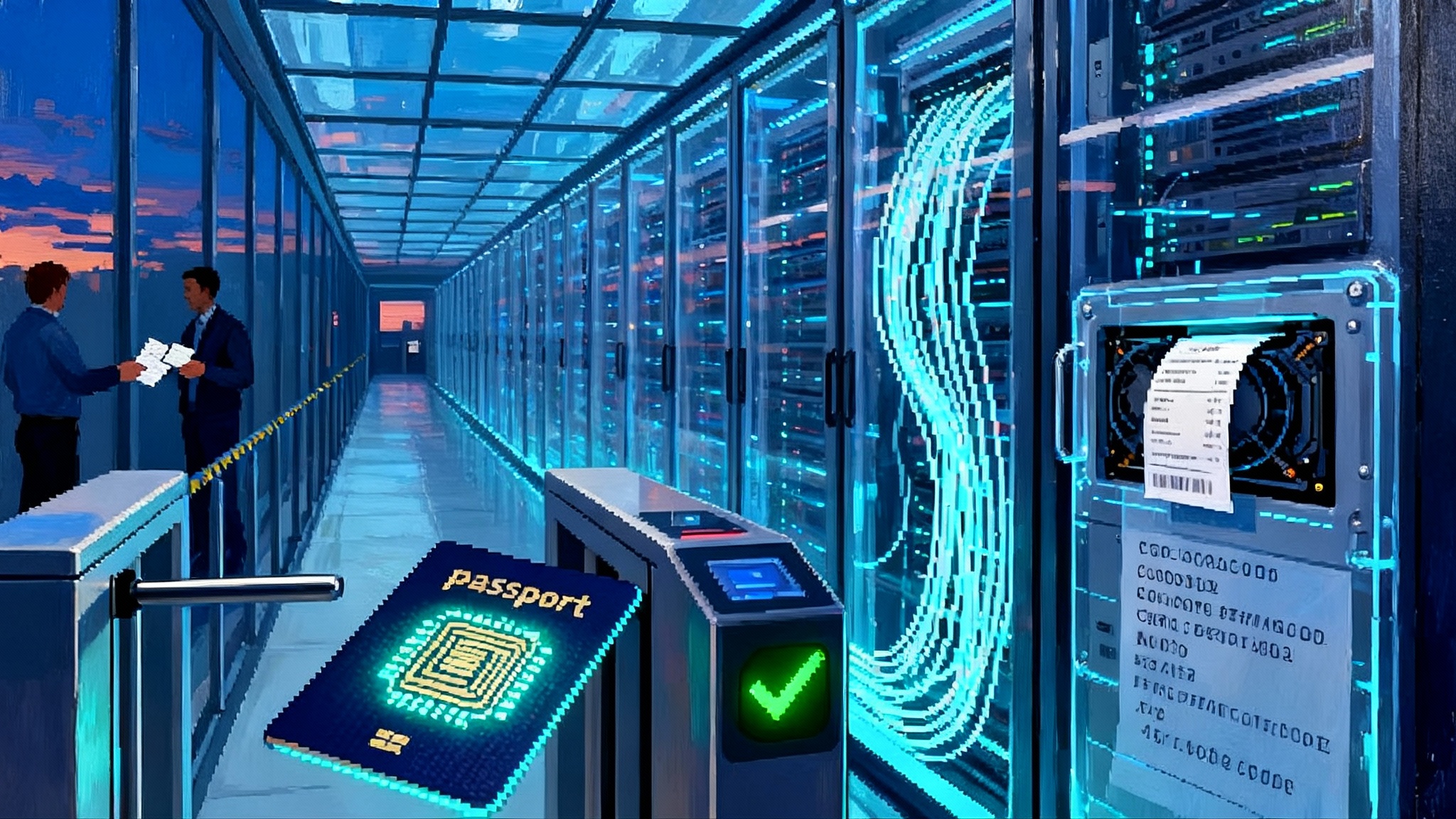

Think of this moment as moving from privacy by policy to privacy by contract. The contract is enforced at two gates.

- Gate one is the attestation gate. Nothing runs until the environment proves what it is.

- Gate two is the proof gate. Nothing leaves until the computation proves what it did.

That simple structure changes incentives. Operators cannot skip security hardening without getting caught because secrets never enter an unproven environment. Developers cannot ship a broken or misconfigured model without the proof failing downstream.

If you are tracking how laws embed into systems, this shift rhymes with the idea that law moves inside the loop. Policy is still essential, but enforcement travels from binders and audits into schedulers and services.

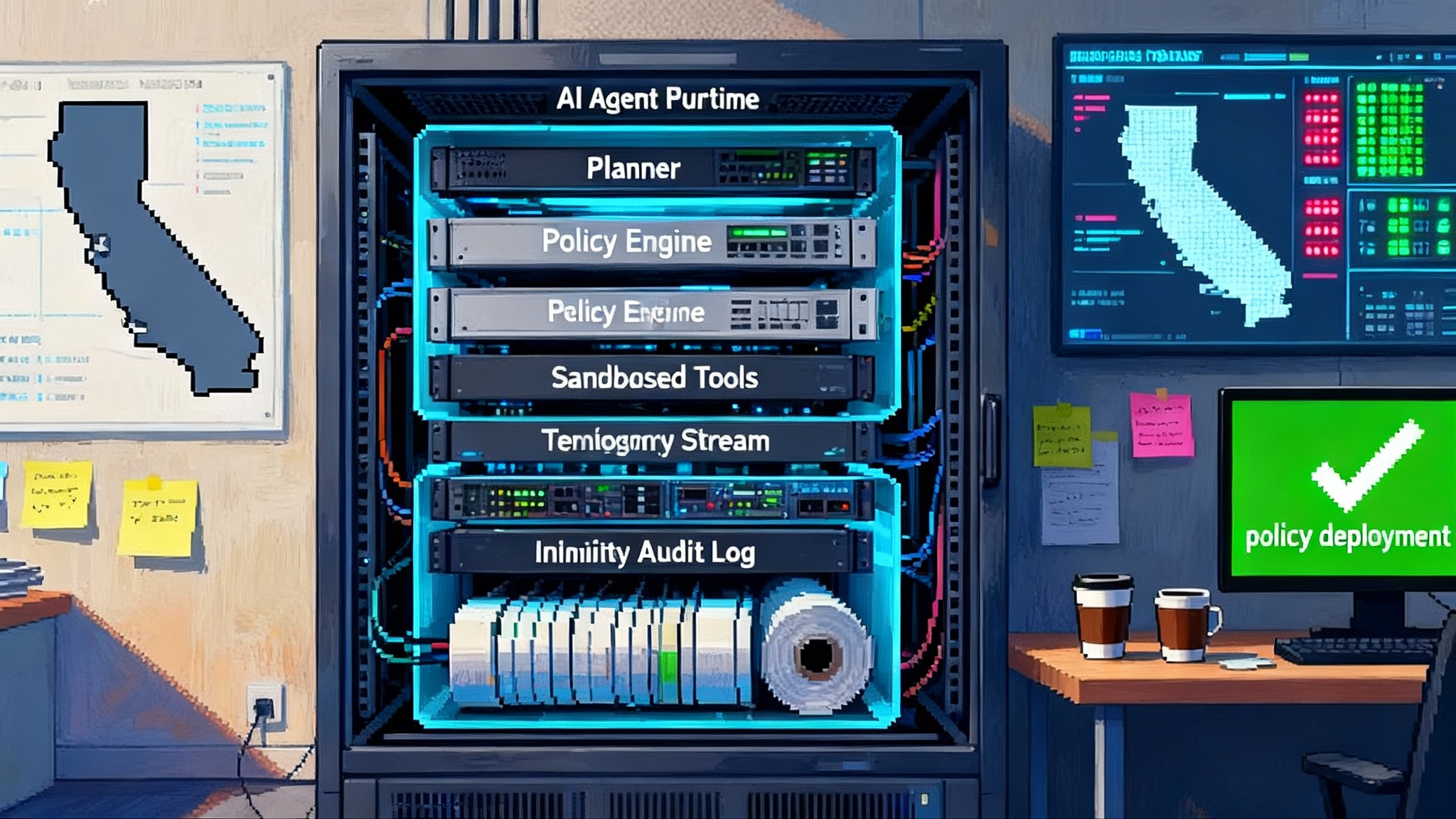

The two gate architecture, step by step

Here is a concrete flow you can implement today.

- Request attestation from the runtime

  - Your scheduler asks the target machine for a signed report. For CPU, that is a confidential VM measurement. For GPU, that is the device identity, firmware measurements, and a confidential mode flag.

  - The report is verified against the vendor certificate chain and your allowlist of firmware and driver versions.

  - Only if the report passes does the orchestrator inject secrets and open access to encrypted datasets.

- Run the job inside the TEE

  - Data is decrypted inside memory regions protected by the TEE. The driver talks to the GPU over a secure channel. In confidential mode, memory payloads are fenced from the host and other tenants.

  - Inside the enclave, the program logs its configuration and model hash. These become public inputs that will be referenced later in a proof.

- Produce a proof-carrying output

  - Alongside the normal result, the job emits a succinct cryptographic proof that the declared program, parameters, and constraints produced this specific output. For ML inference, the proof binds a model hash and input commitments to a particular output vector.

  - The proof is signed by a workload key scoped to this enclave session, which is itself tied to the attestation report. That creates a chain: device identity to enclave to program to output.

- Verify before acting

  - Downstream services accept the result only if the proof verifies under the expected policy. A bank API, a health records system, or a partner agent can validate that the output came from an allowed model, ran in a confidential environment, and respected data constraints.

  - Because the proof is succinct, verification fits inside request-response paths or on-chain checks.
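The four steps above can be sketched as two small gate functions. This is a minimal illustration of the control flow only, not a real attestation or zero-knowledge stack: the allowlist values, the report field names, and the functions `attestation_gate`, `run_job`, and `proof_gate` are all hypothetical, and an HMAC tag stands in for both the succinct proof and the workload-key signature. A real verifier would check a vendor-signed report and a public-key signature, and would never hold the workload key itself.

```python
import hashlib
import hmac
import json

# Hypothetical allowlist of firmware and driver versions.
ALLOWLIST = {"gpu_firmware": {"fw-1.2.3"}, "driver": {"drv-550"}}


def attestation_gate(report: dict) -> bool:
    """Gate one: admit only environments whose claims match the allowlist.

    Assumes the report signature was already checked against the vendor
    certificate chain; that step is out of scope for this sketch.
    """
    return (
        report.get("confidential_mode") is True
        and report.get("gpu_firmware") in ALLOWLIST["gpu_firmware"]
        and report.get("driver") in ALLOWLIST["driver"]
    )


def commit(data: bytes) -> str:
    """Plain hash commitment to the input (no blinding; illustration only)."""
    return hashlib.sha256(data).hexdigest()


def _tag(body: dict, workload_key: bytes) -> str:
    """Stand-in for a succinct proof plus workload-key signature."""
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(workload_key, payload, hashlib.sha256).hexdigest()


def run_job(report, model_hash, input_bytes, infer, workload_key):
    """Run inference only after gate one passes, then emit a manifest."""
    if not attestation_gate(report):
        raise PermissionError("attestation failed: secrets not released")
    body = {
        "model_hash": model_hash,
        "input_commitment": commit(input_bytes),
        "output": infer(input_bytes),
    }
    return {"body": body, "tag": _tag(body, workload_key)}


def proof_gate(manifest, expected_model_hash, workload_key) -> bool:
    """Gate two: accept the output only if the binding checks out."""
    body = manifest["body"]
    return (
        body["model_hash"] == expected_model_hash
        and hmac.compare_digest(_tag(body, workload_key), manifest["tag"])
    )
```

A downstream service would call `proof_gate` before acting on `manifest["body"]["output"]`, and treat any failure as a refusal rather than a warning.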

Attestation is the bouncer at the door checking IDs. Proof-carrying outputs are the itemized receipt stapled to every cocktail. You do not argue about what happened. You read the receipt.

What this unlocks

- PII-grade assistants that stay private

  - A tax or medical assistant can ingest unredacted records. The assistant runs only on attested hardware and cannot exfiltrate to untrusted processes. Every answer carries a receipt that names the exact model and data policy. Auditors can verify that no disallowed endpoint touched the data, and regulators can test receipts without viewing the sensitive content.

- Cross-organization agents without a trust vacuum

  - Data collaborations today rely on contracts and static audits. With attestation and proofs, a procurement agent can query supplier systems with confidential bid data, prove that the scoring function ran as agreed, and reveal only the final ranking. The supplier sees validation without seeing competitor inputs. Disputes shrink because every step becomes verifiable evidence.

- AI that can hold value

  - Custodial services can require proof-carrying outputs before funds move. A trading bot’s order flow can be gated on a policy that verifies the strategy code hash, the model version, and a risk constraint proof. This makes it possible to allocate real capital to autonomous agents while bounding behavior with math instead of post hoc promises.

- Compliance that is automatic, not performative

  - Instead of screenshots of settings pages, you maintain machine-readable proofs: which version of which model ran, under which security posture, on which attested devices. Privacy officers can spot check receipts and enforce denial by default, where the absence of valid attestation or a proof means the pipeline does not run.

If model lineage matters in your stack, connect this practice with the idea that model provenance becomes the edge. Receipts are the missing audit trail between policy and production.

Designing a proof-first pipeline

You can add this to real systems with a few disciplined choices.

- Define the security contract at build time. Treat the environment and the program as artifacts. Assign identifiers for the CPU TEE version, GPU firmware, driver, model hash, and code commit. Store them with your release metadata.

- Introduce an attestation gate in the scheduler. Before Kubernetes starts a job, call the attestation client in an init container. Cache allowlists and revocation lists. Refuse to mount secrets or data volumes unless the report passes.

- Bind keys to attestation. Generate ephemeral workload keys inside the enclave after attestation, not before. Use those keys to sign output manifests. Rotating the workload key should require re-attestation to avoid replay.

- Add proof generation alongside inference. Use a zkVM or zkML library that compiles your computation to a proof-friendly circuit. Start with narrow proofs that bind the model hash, input commitment, and output. Expand to constraints, for example that certain thresholds or business rules were respected.

- Aggregate proofs across steps. If the pipeline spans microservices, nest proofs or aggregate them into one receipt attached to the final output so verifiers do not chase fragments.

- Log receipts as first-class telemetry. Store the attestation report, the proof, and the manifest in an append-only log. Make verifiers stateless by giving them a way to fetch or reference the receipt bundle.
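One way to approximate the append-only receipt log from the last item is a hash chain, where each entry commits to the one before it. The sketch below is an in-memory illustration under that assumption; the `ReceiptLog` class, its method names, and the receipt fields are hypothetical, and a production log would add durable storage and an external anchor for the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash: str, receipt_json: str) -> str:
    """Hash the previous entry hash together with the canonical receipt."""
    return hashlib.sha256((prev_hash + receipt_json).encode()).hexdigest()


class ReceiptLog:
    """In-memory, hash-chained log: editing any entry breaks the chain."""

    def __init__(self):
        self._entries = []

    def append(self, receipt: dict) -> str:
        """Store a canonical copy of the receipt and return its entry hash."""
        receipt_json = json.dumps(receipt, sort_keys=True)
        prev = self._entries[-1]["entry_hash"] if self._entries else GENESIS
        entry_hash = _entry_hash(prev, receipt_json)
        self._entries.append(
            {"prev_hash": prev, "receipt_json": receipt_json, "entry_hash": entry_hash}
        )
        return entry_hash

    def get(self, entry_hash: str) -> dict:
        """Let a stateless verifier fetch a receipt by reference."""
        for entry in self._entries:
            if entry["entry_hash"] == entry_hash:
                return json.loads(entry["receipt_json"])
        raise KeyError(entry_hash)

    def verify_chain(self) -> bool:
        """Recompute every link; any edit or reordering returns False."""
        prev = GENESIS
        for entry in self._entries:
            expected = _entry_hash(prev, entry["receipt_json"])
            if entry["prev_hash"] != prev or entry["entry_hash"] != expected:
                return False
            prev = entry["entry_hash"]
        return True
```

Verifiers only need the entry hash returned by `append` to fetch a receipt, which keeps them stateless as the list above suggests.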

Performance and cost realities

- Side channels still exist. Confidential computing blocks direct reads of memory, but timing and resource usage can leak information. Rate limit, pad, and batch to normalize observables. For sensitive workloads, pick constant-time kernels and schedule noisy neighbors to mask patterns.

- Attestation freshness matters. A week-old report is not equivalent to a just-issued one. Set explicit lifetimes and implement retry on transient verifier outages. If a vendor revokes a certificate or updates a reference integrity manifest, stale reports should fail closed.

- Proofs are not free. Generating evidence costs compute and latency. Budget for it like you budget for tests. Start with low-degree circuits and smaller models, then use recursive aggregation or batched proving as volume grows. Track a proof budget per request type and allocate capacity accordingly.

- Do not strand your keys. If the workload needs to decrypt customer data, it must obtain the key only after attestation. Use a secrets service that releases material based on report claims. Keys should never be derivable outside the enclave.

- Watch the performance cliffs. Confidential modes can add overhead when swapping large models or doing large host-to-device transfers. Schedule for locality, prefer larger batches, and amortize proof generation when possible.
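The freshness point in the list above translates into two small checks: an explicit report lifetime, and a bounded retry that fails closed when the verifier stays unreachable. The sketch below assumes a ten-minute lifetime and a verifier callable that raises `ConnectionError` on transient outages; both are illustrative choices, not values from any vendor.

```python
import time
from datetime import datetime, timedelta, timezone

MAX_REPORT_AGE = timedelta(minutes=10)  # hypothetical lifetime; tune per workload


def is_fresh(issued_at: datetime, now: datetime, max_age=MAX_REPORT_AGE) -> bool:
    """Accept a report only inside its lifetime; future-dated reports fail."""
    age = now - issued_at
    return timedelta(0) <= age <= max_age


def verify_with_retry(verify, report, attempts=3, delay_s=0.0) -> bool:
    """Retry transient verifier outages, then fail closed.

    `verify` is a hypothetical callable that returns a truthy value for a
    valid report and raises ConnectionError when the verifier is unreachable.
    """
    for attempt in range(attempts):
        try:
            return bool(verify(report))
        except ConnectionError:
            if attempt < attempts - 1:
                time.sleep(delay_s)
    return False  # outage persisted: deny rather than assume validity


if __name__ == "__main__":
    issued = datetime.now(timezone.utc) - timedelta(days=7)
    print("week-old report fresh?", is_fresh(issued, datetime.now(timezone.utc)))
```

Returning `False` after the last retry is the design choice that matters here: an unreachable verifier is treated the same as a failed verification.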

If your roadmap includes large training clusters, note how compute supply interacts with confidentiality features. Capacity, siting, and networking will shape adoption. For a deeper dive into those constraints, see our take on the next AI race dynamics.

Operational playbook: day one to day ninety

Day one: choose a single high-stakes workflow

- Pick the pipeline where privacy failure is intolerable. Good candidates include fine-tuning on customer data or answering support tickets that include account numbers. Add the attestation gate and a minimal output proof.

- Define an allowlist. Name the acceptable CPU TEEs, GPU firmware versions, drivers, and model hashes. Put the list under code review like any other configuration change.

Day thirty: wire proofs into decisions

- Add a proof verifier to the critical decision path. For example, require a valid proof before a refund is issued, an order is placed, or a record is written to a partner system.

- Provide a receipt mailbox. Create a receipt bundle that includes the attestation report, the output proof, the model hash, and policy identifiers. Store it in append-only storage with short retention for hot reads and longer retention for audit.
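A receipt bundle can be as simple as an immutable record with a content-derived identifier. The sketch below is one possible shape, assuming the attestation report and proof are carried as opaque strings; the `ReceiptBundle` class, its field names, and the `store_receipt` helper are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class ReceiptBundle:
    attestation_report: str       # opaque encoded report (format assumed)
    output_proof: str             # opaque encoded proof (format assumed)
    model_hash: str
    policy_ids: Tuple[str, ...]

    def canonical_bytes(self) -> bytes:
        """Serialize deterministically so equal bundles hash identically."""
        return json.dumps(
            asdict(self), sort_keys=True, separators=(",", ":")
        ).encode()

    def bundle_id(self) -> str:
        """Content-derived identifier that partners can reference."""
        return hashlib.sha256(self.canonical_bytes()).hexdigest()


def store_receipt(store: Dict[str, bytes], bundle: ReceiptBundle) -> str:
    """Write once under the content ID; existing entries are never replaced."""
    bundle_id = bundle.bundle_id()
    store.setdefault(bundle_id, bundle.canonical_bytes())
    return bundle_id
```

Because the identifier is derived from the content, the same bundle can live in both the hot store and the audit store under one key, and any edit to a stored bundle changes its ID.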

Day sixty: open the door to partners

- Publish a small verifier library or service that checks your receipts and returns a yes or no. Make it easy for others to trust your outputs without calling you.

- Add dashboards that expose receipt rates, proof latency, and attestation freshness. Operations teams need real-time visibility into where trust is succeeding and where it is failing closed.

Day ninety: practice the chaos drill

- Simulate a firmware revocation and a key rotation. Your pipeline should fail closed, surface a clear diagnosis, and recover quickly.
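A drill like this can start as a plain script that flips policy state and records what the gate decides. The sketch below is a toy model under stated assumptions: the `Policy` fields, the report keys, and the firmware and key identifiers are all invented for illustration, and the check returns a reason string so failures surface a diagnosis.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    allowed_firmware: set = field(default_factory=set)
    revoked_firmware: set = field(default_factory=set)
    current_key_id: str = "key-1"


def check(report: dict, policy: Policy):
    """Return (admitted, reason); every failure path denies with a diagnosis."""
    firmware = report.get("gpu_firmware")
    if firmware in policy.revoked_firmware:
        return False, f"firmware {firmware} is revoked"
    if firmware not in policy.allowed_firmware:
        return False, f"firmware {firmware} is not allowlisted"
    if report.get("key_id") != policy.current_key_id:
        return False, "workload key is stale; re-attestation required"
    return True, "ok"


def run_drill() -> dict:
    """Simulate a firmware revocation, then a key rotation, then recovery."""
    policy = Policy(allowed_firmware={"fw-1", "fw-2"})
    results = {}
    report = {"gpu_firmware": "fw-1", "key_id": "key-1"}
    results["baseline"] = check(report, policy)

    policy.revoked_firmware.add("fw-1")  # vendor revokes fw-1
    results["after_revocation"] = check(report, policy)

    policy.current_key_id = "key-2"      # operator rotates the workload key
    stale = {"gpu_firmware": "fw-2", "key_id": "key-1"}
    results["after_rotation"] = check(stale, policy)

    recovered = {"gpu_firmware": "fw-2", "key_id": "key-2"}
    results["recovered"] = check(recovered, policy)
    return results


if __name__ == "__main__":
    for stage, (admitted, reason) in run_drill().items():
        print(f"{stage}: admitted={admitted} ({reason})")
```

The useful signal from a real drill is the same as in this toy: both injected faults deny, each denial names its cause, and admission returns once the node runs allowed firmware with the current key.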

- Review cost and latency budgets. Move to recursive proof aggregation where it saves money. Precompute proofs for batch workflows. Tighten policies where you saw confusion.

Business models where trust is measurable

- Compliance as a query, not a PDF. Auditors can query a timeline of receipts that show exactly which model touched which records under which security posture. Audit cycles can shrink from weeks to minutes.

- Data marketplaces with selective disclosure. Providers can sell access to models that accept private inputs, reveal only allowed outputs, and emit proofs that the policy was enforced. Buyers do not receive model weights, yet they gain high-trust results.

- Insurance and indemnification around math. If outputs are verifiably bound to code and constraints, insurers can underwrite specific behaviors rather than generic operations. Premiums become tied to proof coverage and attestation posture, not only questionnaires.

Frequently asked questions

What about training, not just inference?

- The same pattern applies, though the cost profile is different. You can attest the training environment, bind datasets to that attestation, and emit periodic proofs about constraint checks. You can also produce compact proofs about data policy adherence rather than the entire gradient path.

Does this break usability or speed?

- For many inference workloads, the overhead is manageable. Verification typically takes milliseconds. Proving costs are real, so start where it matters most and expand as libraries mature. Design for batching and use aggregation to keep the hot path responsive.

What about open source models and community weights?

- Provenance becomes a first-class concern. Bind the exact commit or weight hash in your receipts. If you rely on community artifacts, require a higher bar for attestation and restrict use to lower risk workflows until your own evaluation is complete.

How do we convince partners to adopt this?

- Give them a verifier, not a slide deck. Make verification one function call. Offer a sandbox with synthetic receipts and clear failure modes. When a partner can check the math on their own infrastructure, the conversation changes from belief to validation.

A short field guide to terms

- Trusted execution environment. A protected region of memory with a measured boot sequence that can produce a signed report about its state. For GPUs this includes device identity, firmware, confidential mode, and driver linkage.

- Remote attestation. The process of a device proving its state to a verifier using signed measurements checked against a vendor authority and your policy.

- Proof-carrying outputs. Results that include a succinct cryptographic proof that a specific program, with specific inputs or constraints, produced this exact output.

- zkML and zkVM. Toolchains that compile programs, including ML graphs, into proof-friendly forms so that a short proof can be verified quickly by any party.

Conclusion: make trust a default setting

For most of computing history, trust lived in people and processes. We bought reputation, brand, and insurance, then hoped the machine did what it was asked. Confidential AI with proof-carrying outputs moves trust into code paths and hardware. It turns trust into something you can meter, escrow, and price.

This is not an abstract ideal. When trust is compute, you can enforce it at runtime and settle it after the fact with receipts. You can trade it across organizations by verifying the same short proof. You can bake it into schedulers and service meshes so that privacy is a property of the system, not a memo on a wiki.

The next twelve months will separate teams that talk about privacy from teams that can prove it. The playbook is simple enough to start today: attest the machine, prove the result, log the receipt, and verify before you act. If your AI is going to touch real data or real money, that is not paranoia. It is the new minimum.