Chip-literate AI: Models That Learn to Speak Silicon

AI’s edge is shifting from bigger models to better fit. Late September brought real progress on non-CUDA stacks, compilers, and runtimes, turning portability into a competitive moat and making routing part of cognition.

The week the model learned the hardware

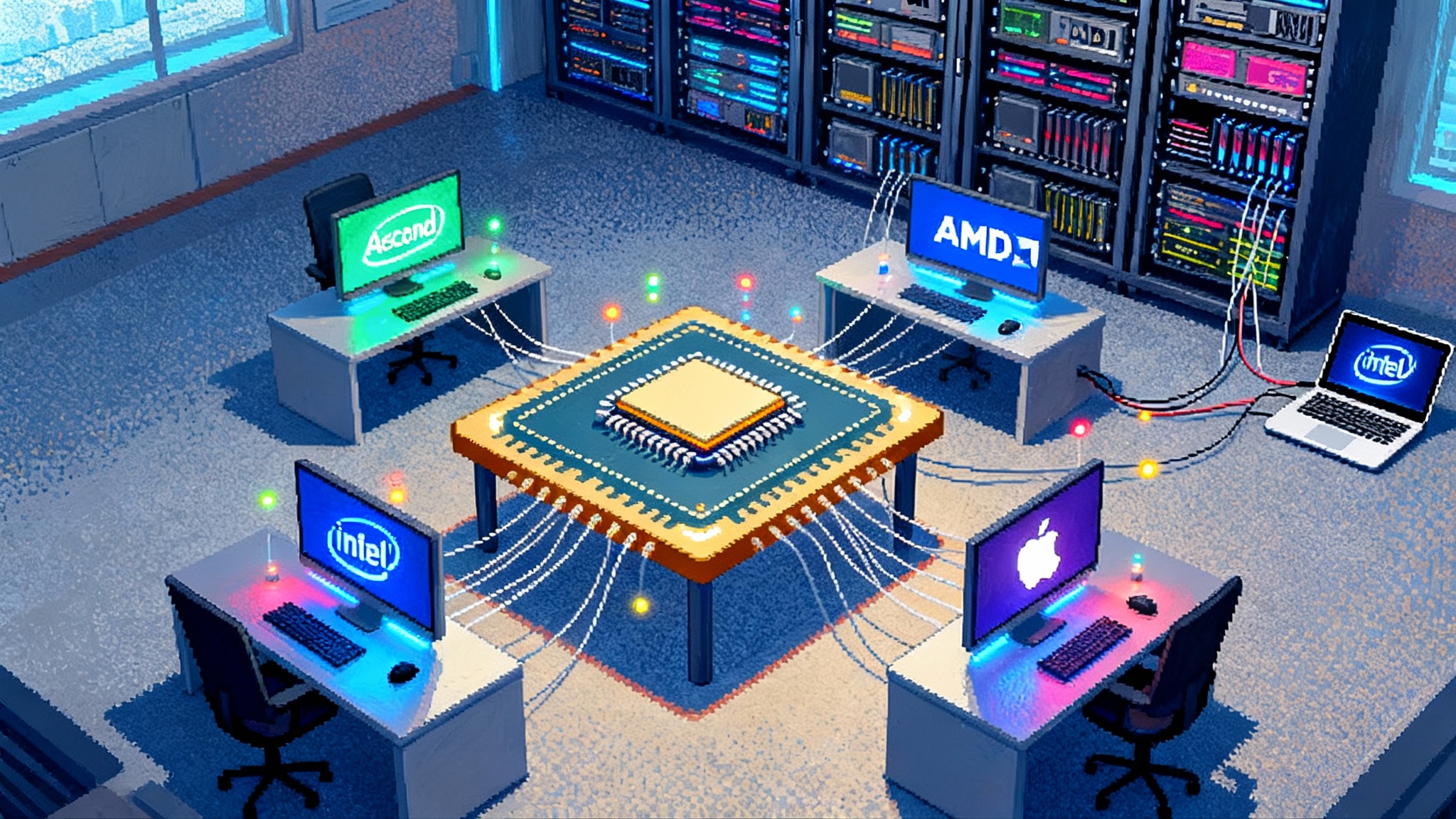

A small shift in late September did not grab front-page headlines, but it will change how artificial intelligence is built and bought. In Shanghai, Huawei said its Ascend team would open source CANN operators and deepen direct integrations with popular frameworks so that large models run natively on Ascend Neural Processing Units rather than relying on compatibility layers. Days later, AMD shipped updates that broadened ROCm coverage on Linux and Windows, tightening the loop between frameworks and non-CUDA hardware. Put simply, the model and the machine started speaking the same language in more places, and not only in Nvidia’s dialect. See Huawei’s announcement in Ascend opens CANN operators and AMD’s details in the ROCm 6.4.4 release notes.

This matters because it marks a transfer of gravity. For two years, teams chased size. The world optimized for bigger parameter counts and longer context windows. Now the bottleneck is not only how big a model is, but how well that model fits the silicon you actually have. A model that adapts to many chips can land closer to the user, waste fewer watts, and meet more service-level objectives at lower cost.

From one big brain to many dialects

Think of a model as a multilingual speaker and chips as regional accents. Until recently, most systems spoke one accent fluently: CUDA on Nvidia GPUs. Everything else required an interpreter, which added latency and bugs. The new releases change that. Ascend’s CANN is being opened up, AMD’s ROCm installs like a first-class citizen on client and cloud machines, and Intel’s Gaudi software keeps folding mainstream inference engines into its supported stacks. The vocabulary of compute is getting richer, and models are learning it.

This is not an abstract trend. The cadence of non-CUDA updates has accelerated, which means features like paged attention, graph execution, quantization-aware kernels, and expert parallelism can arrive on alternative accelerators soon after they land on CUDA. Meanwhile, new model launches are increasingly announced with day-one support for domestic and regional accelerators, not as an afterthought. The center of effort is moving from backporting to co-design.

The compiler is now part of cognition

When you ship a frontier language model, you are no longer shipping only weights. You are shipping a runtime, a compiler, and a library of fused kernels that decide how computation flows through the chip. That stack decides whether a 128,000-token prompt runs in seconds or stalls, whether a mixture-of-experts router burns bandwidth or sips it, and whether a local device can offload vision features without melting the battery.

Here is a concrete way to think about it. The same model can be compiled to use different attention kernels, memory layouts, and precision formats. On AMD hardware, a runtime can take a ROCm/HIP path or a Vulkan path, each with its own prefill and decode strategies and its own tradeoffs between throughput and latency. On Ascend, CANN graph execution can fuse operators so the model makes fewer round trips to off-chip memory. On Gaudi, an inference engine can lean on custom collectives and tensor slicing tuned for the chip’s integrated Ethernet (RoCE) interconnect. The model’s thinking style changes with the compiler’s plan.
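A back-of-envelope calculation shows why the precision format in a compiler plan matters for a 128,000-token prompt. The sketch below estimates how much key-value (KV) cache memory a single sequence needs. The model dimensions are assumptions, roughly matching an 8B-class decoder with grouped-query attention. Real runtimes also add paging and alignment overhead on top.

```python
# Back-of-envelope KV cache sizing. The dimensions below are assumed for
# illustration (roughly an 8B-class decoder with grouped-query attention).
NUM_LAYERS = 32
NUM_KV_HEADS = 8
HEAD_DIM = 128
CONTEXT_TOKENS = 128_000


def kv_cache_bytes(tokens: int, bytes_per_element: int) -> int:
    """Keys plus values (the factor 2) for every layer, KV head, and position."""
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * tokens * bytes_per_element


for label, width in [("bf16", 2), ("fp8", 1)]:
    gb = kv_cache_bytes(CONTEXT_TOKENS, width) / 1e9
    print(f"{label}: {gb:.1f} GB of KV cache for one {CONTEXT_TOKENS:,}-token sequence")
```

Under these assumptions, the bf16 cache alone is about 16.8 GB for one sequence, and an fp8 KV cache halves that. This is why the same weights can fit comfortably on one accelerator under one plan and spill or stall under another.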

This shift mirrors how databases evolved. At first, the query was the star. Then query planners became as important as syntax. Today the AI compiler and runtime act like a planner for reasoning steps, scheduling how and where each token is born.

Portability as a moat

For years, competitive advantage in AI came from data, model scale, and a strong research pipeline. Those still matter. But as supply chains stay lumpy and regulations push for locality, the new moat is deployment portability. Portability is not a bumper sticker. It is a checklist you can verify:

- Does the model package include kernels for Ascend, ROCm, Gaudi, and modern CPU paths such as OpenVINO, with clear fallbacks and outputs that match across backends within a defined tolerance?

- Can the runtime scale from a single mobile SoC to a rack of accelerators without changing application code?

- Are quantization recipes, attention variants, and scheduling policies reproducible across backends so debugging is sane?

- Do you have a test matrix that exercises long context, streaming, function calling, and tool use on each backend with the same prompts and assertions?

Teams that can answer yes will win deals, because they can land workloads on whatever silicon is available or compliant that week, and they can switch without a rewrite.

For a deeper look at the physical constraints that make portability valuable, see our discussion of power and siting in [The Next AI Race: Watts, Permits, Land, and Leverage]. And for the hardware bottlenecks that shape kernel choices, see [The New AI Arms Race Is Bandwidth: Memory, Packaging, Fiber].

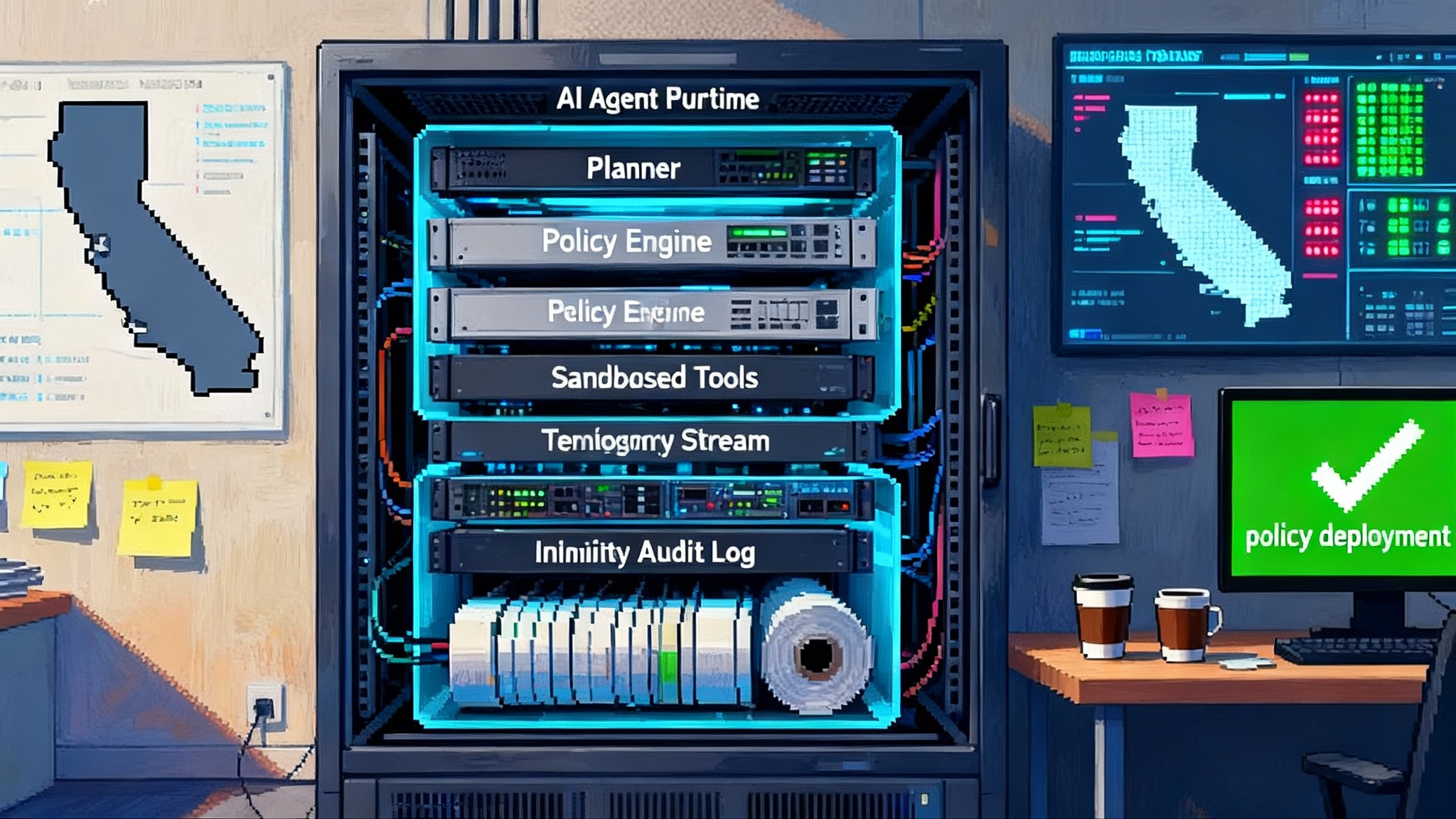

Real-time routing is the new inference

Imagine an enterprise agent serving thousands of concurrent users. It needs to trade off latency, cost, and locality in real time. A user in New York asks a question that triggers tool use. Another in Frankfurt requests a long document summary that must stay in region. A third on a laptop asks for a quick image caption offline.

- The router chooses Ascend in a regional data center for the long-context summarization job, because the job stays in region and graph mode with fused operators cuts memory traffic on long prompts.

- It picks a Gaudi cluster for a chat burst with many small conversations, because that stack batches short requests well and keeps tail latency predictable.

- It keeps a short reasoning task on a Mac with Apple Silicon through an MLX or Metal backend, because local is faster than the network and the privacy policy says do not upload.

- It flags a cost spike and moves nightly retrieval jobs to AMD Instinct nodes, because ROCm with a tuned prefill offers the best value per token this hour.

The agent is not simply selecting a model. It is selecting a plan across heterogeneous accelerators, and that plan is part of the cognition loop.
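A production router weighs live prices and telemetry. Even a static rule table, though, makes the point that the router's output is a plan rather than a model name. The sketch below is hypothetical throughout: the request fields, the backend labels, and the 32,000-token threshold are all placeholders. Rule order encodes priority, with privacy checked first and residency second.

```python
from dataclasses import dataclass

# Hypothetical threshold; in practice you would derive it from latency data.
LONG_CONTEXT_TOKENS = 32_000


@dataclass
class Request:
    prompt_tokens: int
    must_stay_in_region: bool = False
    offline_only: bool = False  # privacy policy says do not upload
    interactive: bool = True    # False for scheduled batch work


@dataclass
class Plan:
    backend: str  # placeholder labels, not real product or cluster names
    reason: str


def choose_plan(req: Request) -> Plan:
    """Static rules that mirror the routing decisions in the scenario above."""
    if req.offline_only:
        return Plan("local-apple-silicon", "policy forbids upload; run via MLX or Metal")
    if req.must_stay_in_region or req.prompt_tokens >= LONG_CONTEXT_TOKENS:
        return Plan("ascend-regional", "residency or long context; graph mode fuses ops")
    if not req.interactive:
        return Plan("amd-instinct", "batch work goes where tokens are cheapest this hour")
    return Plan("gaudi-cluster", "short chat turns batch well with stable tail latency")


print(choose_plan(Request(prompt_tokens=90_000, must_stay_in_region=True)))
```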

Why late September mattered

Two timely signals grounded this shift. First, Huawei’s commitment to open up CANN operators and plug into developer-favorite projects like PyTorch and vLLM puts a non-CUDA stack on a faster innovation clock. The public statement is captured in Ascend opens CANN operators. Second, AMD’s late September updates brought ROCm further into mainstream developer flows on Linux and Windows, which reduces friction for teams that want to ship one binary across workstations and servers. The specifics are in the ROCm 6.4.4 release notes.

The ripple effects showed up immediately. Vendors published how-to guides that deploy new mixture-of-experts models on Gaudi, signaling that model authors and hardware teams are coordinating earlier in the launch cycle. The PyTorch Foundation’s support for a multi-backend serving ecosystem formalized a path where one engine can target many accelerators under shared governance. At the edge and on client devices, CPU and NPU stacks added longer context and dynamic batching, improving small and mid-sized model behavior without code changes. The practical takeaway is that a portable model can reach deep into fleets of laptops and desktops that were invisible to CUDA.

Regulatory and locality pressures amplify these technical shifts. As we argued in When Regulation Becomes Runtime: Law Inside the Agent Loop, compliance is increasingly enforced by the execution path itself. If models can target multiple backends, the policy engine can bind requests to hardware that matches jurisdiction and privacy constraints.

What this means for your stack

If you run models in production, the next 12 months will be less about adding parameters and more about adding places to run. Here is a pragmatic plan.

1) Treat the model as a platform

- Package weights with a runtime and a compiler profile per backend. Include attention kernels, fused ops, and precision variants that you have tested together. Ship it like a database binary, not a loose folder of checkpoints.

- Define a golden prompt suite that asserts bit-wise or tolerance-bounded equivalence across backends. If two backends disagree, block the release and open a regression tied to that backend.

- Publish a manifest that lists supported kernels, precision modes, attention variants, and known caveats per backend. Keep it versioned and signed.
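The golden-suite gate and the signed manifest can be sketched together. This is a minimal illustration under stated assumptions. The logits are made-up placeholder numbers and the tolerances are arbitrary. HMAC-SHA256 from Python's standard library stands in for whatever signing scheme your release process actually uses, for example asymmetric signatures.

```python
import hashlib
import hmac
import json
import math

# Placeholder golden-suite output: a few next-token logits per prompt ID,
# from a reference backend and from a candidate backend.
REFERENCE = {"p1": [2.31, -0.42, 0.97], "p2": [1.05, 0.00, -3.20]}
CANDIDATE = {"p1": [2.31, -0.41, 0.97], "p2": [1.05, 0.02, -3.20]}


def within_tolerance(ref, cand, rel_tol=1e-2, abs_tol=5e-2):
    """Tolerance-bounded equivalence; pass 0.0 for both tolerances for an exact check."""
    return len(ref) == len(cand) and all(
        math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol) for r, c in zip(ref, cand)
    )


def release_gate(reference, candidate):
    """Return prompt IDs where the backends disagree; any entry blocks the release."""
    return [
        pid for pid, ref in reference.items()
        if pid not in candidate or not within_tolerance(ref, candidate[pid])
    ]


def signed_manifest(entry, key: bytes):
    """Serialize deterministically, then attach an HMAC-SHA256 tag over the bytes."""
    body = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"manifest": entry, "hmac_sha256": tag}


failures = release_gate(REFERENCE, CANDIDATE)
manifest = None
if not failures:
    # Every field value here is a hypothetical example.
    manifest = signed_manifest(
        {
            "model_version": "1.4.0",
            "backend": "rocm",
            "kernels": ["paged-attention", "fused-rmsnorm"],
            "precision": ["bf16", "fp8"],
            "caveats": [],
        },
        key=b"replace-with-a-managed-secret",
    )
print(failures, manifest is not None)
```

Sorting keys before serializing matters. Without it, two logically identical manifests could produce different tags.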

2) Make routing explicit

- Build a two-layer scheduler: a cluster-level router that reasons about locality, cost, and capacity, and an engine-level scheduler that controls prefill, decode, and batching.

- Share signals between the layers so decisions are predictive. Feed the engine’s queue depth, KV cache hit rate, and tail latency back to the cluster router.

- Use policy to enforce non-negotiables. For example: do not leave the region, cap egress per request, and set maximum first-token latency.
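Here is a minimal sketch of the two layers talking to each other. The engine reports queue depth, KV cache hit rate, and tail time-to-first-token (TTFT). The cluster router turns those signals into a predicted TTFT and applies the non-negotiable policy filters. Only then does it optimize for cost. The field names, the linear predictor, and the 40 ms per-request prefill constant are assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EngineSignals:
    """What the engine-level scheduler reports upward (hypothetical schema)."""
    backend: str
    region: str
    queue_depth: int           # requests waiting for prefill
    kv_cache_hit_rate: float   # 0..1, share of prefill served from cached prefixes
    p99_ttft_ms: float         # recent tail time-to-first-token
    cost_per_1k_tokens: float  # dollars
    egress_usd_per_request: float


@dataclass
class Policy:
    region: str
    max_ttft_ms: float
    max_egress_usd: float


def predicted_ttft_ms(s: EngineSignals, prefill_ms_per_request: float = 40.0) -> float:
    """Toy predictor: current tail plus queued prefill work, discounted by cache hits."""
    return s.p99_ttft_ms + s.queue_depth * prefill_ms_per_request * (1 - s.kv_cache_hit_rate)


def route(policy: Policy, fleet: list[EngineSignals]) -> str | None:
    """Cluster-level decision: hard policy filters first, then the cheapest survivor."""
    eligible = [
        s for s in fleet
        if s.region == policy.region
        and s.egress_usd_per_request <= policy.max_egress_usd
        and predicted_ttft_ms(s) <= policy.max_ttft_ms
    ]
    if not eligible:
        return None  # queue, degrade, or reject; never silently break policy
    return min(eligible, key=lambda s: s.cost_per_1k_tokens).backend


fleet = [
    EngineSignals("ascend-eu", "eu", 4, 0.5, 220.0, 0.09, 0.0),
    EngineSignals("gaudi-eu", "eu", 12, 0.2, 250.0, 0.07, 0.0),
    EngineSignals("rocm-us", "us", 0, 0.0, 150.0, 0.05, 0.0),
]
print(route(Policy(region="eu", max_ttft_ms=400.0, max_egress_usd=0.001), fleet))
```

With these placeholder numbers, the router skips the cheaper Gaudi pool because its queue pushes predicted TTFT to about 634 ms. The request lands on the Ascend pool instead, at about 300 ms. That is the point of feeding engine signals upward: price alone would have picked a backend that breaks the latency budget.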

3) Price by token, watt, and meter

- Extend FinOps beyond cloud invoices. Track cost per thousand tokens by backend and include energy and egress.

- Compare alternative kernel plans, not just hardware tiers. A slower prefill with lower power can beat a faster one on total cost when power is constrained.

- Prefer local runs on client devices when they satisfy policy. You gain latency and avoid egress risk.
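A small calculator makes the plan-versus-plan comparison concrete. It is a sketch with invented numbers: two kernel plans on the same node, one faster and hotter, the other slower and leaner. Constrained power is modeled simply as a higher price per kilowatt-hour, which is one assumption among several reasonable ways to model a power cap.

```python
from dataclasses import dataclass


@dataclass
class KernelPlan:
    name: str
    tokens_per_second: float   # sustained throughput of one node under this plan
    node_power_kw: float       # measured wall power under this plan
    node_cost_per_hour: float  # amortized hardware or rental, excluding energy


def cost_per_1k_tokens(plan: KernelPlan, usd_per_kwh: float,
                       egress_usd_per_1k: float = 0.0) -> float:
    """Dollars per 1,000 tokens = node time + energy + egress."""
    hours = 1000 / plan.tokens_per_second / 3600
    return (plan.node_cost_per_hour * hours
            + plan.node_power_kw * hours * usd_per_kwh
            + egress_usd_per_1k)


# Hypothetical numbers: same node, two kernel plans for prefill.
fast = KernelPlan("fast-prefill", tokens_per_second=5000, node_power_kw=10.0, node_cost_per_hour=4.0)
lean = KernelPlan("low-power-prefill", tokens_per_second=4000, node_power_kw=5.0, node_cost_per_hour=4.0)

for price in (0.08, 0.40):  # cheap power vs. constrained, expensive power
    best = min((fast, lean), key=lambda p: cost_per_1k_tokens(p, price))
    print(f"${price:.2f}/kWh -> {best.name}")
```

Under these assumptions, the fast plan wins at $0.08/kWh and the lean plan wins at $0.40/kWh, with break-even near $0.27/kWh. Your own crossover depends entirely on measured throughput and wall power.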

4) Standardize your portability tests

For every supported backend, run the same scenarios:

- Long-context streaming with strict time-to-first-token targets

- Function calling with latency budgets and structured output verification

- Tool use with synthetic network jitter and rate limits

- Cold start and warm start comparisons for prefill and decode

Keep a public badge in your docs that shows the last passing versions per backend. This turns portability from marketing into governance.
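Here is a minimal sketch of the matrix and the badge it feeds. It assumes a harness function `run(backend, scenario)` that returns pass or fail. The backend labels, scenario names, and version strings are hypothetical. The design choice worth copying is that a backend's badge advances only when every scenario passes, so the docs never overstate coverage.

```python
import itertools
from typing import Callable, Dict, Tuple

# Placeholder backend labels and scenario names mirroring the list above.
BACKENDS = ["ascend-cann", "rocm", "gaudi", "openvino-cpu"]
SCENARIOS = [
    "long_context_streaming_ttft",
    "function_calling_structured_output",
    "tool_use_with_jitter",
    "cold_vs_warm_start",
]

Results = Dict[Tuple[str, str], bool]


def run_matrix(run: Callable[[str, str], bool]) -> Results:
    """Run every scenario on every backend; `run` must use identical prompts and asserts."""
    return {(b, s): run(b, s) for b, s in itertools.product(BACKENDS, SCENARIOS)}


def update_badge(results: Results, version: str, last_passing: Dict[str, str]) -> Dict[str, str]:
    """Advance a backend's badge only when it passed every scenario for `version`."""
    badge = dict(last_passing)
    for backend in BACKENDS:
        if all(results[(backend, s)] for s in SCENARIOS):
            badge[backend] = version
    return badge


def fake_run(backend: str, scenario: str) -> bool:
    # Stand-in for a real harness: pretend Gaudi fails the jitter scenario.
    return not (backend == "gaudi" and scenario == "tool_use_with_jitter")


badge = update_badge(run_matrix(fake_run), "1.5.0", {"gaudi": "1.4.2"})
for backend, version in sorted(badge.items()):
    print(f"{backend}: last passing {version}")
```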

5) Build a kernel feedback loop

- Capture runtime telemetry that points to hot kernels and memory stalls per backend.

- Feed that into your compiler choice. On Ascend, this may trigger graph fusions. On ROCm, it may flip a path between Vulkan and HIP. On Gaudi, it may change collective sizes and tensor shard layouts.

- Close the loop with automated experiments. Nightly jobs should test alternate kernel graphs against your golden prompt suite and update the manifest when wins are stable.
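"Update the manifest when wins are stable" needs a concrete definition of stable. The sketch below uses one hypothetical rule. An alternate kernel plan must pass the golden prompt suite on three consecutive nightly runs. On each of those nights, it must also beat the baseline's median latency by at least 5%. A failed golden suite resets the streak. The thresholds and plan names are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class NightlyResult:
    plan: str                  # e.g. a hypothetical "hip-fused-attn" variant
    golden_suite_passed: bool
    p50_latency_ms: float


@dataclass
class PlanTracker:
    baseline_plan: str
    baseline_latency_ms: float
    required_streak: int = 3       # assumption: three nights in a row counts as stable
    min_improvement: float = 0.05  # assumption: at least 5% faster than baseline
    streaks: Dict[str, int] = field(default_factory=dict)

    def record(self, result: NightlyResult) -> bool:
        """Return True when `result.plan` should replace the baseline in the manifest."""
        threshold = self.baseline_latency_ms * (1 - self.min_improvement)
        wins = result.golden_suite_passed and result.p50_latency_ms <= threshold
        self.streaks[result.plan] = self.streaks.get(result.plan, 0) + 1 if wins else 0
        if self.streaks[result.plan] >= self.required_streak:
            self.baseline_plan = result.plan
            self.baseline_latency_ms = result.p50_latency_ms
            self.streaks.clear()
            return True
        return False


tracker = PlanTracker(baseline_plan="hip-default", baseline_latency_ms=120.0)
for ms in (110.0, 112.0, 109.0):
    promoted = tracker.record(NightlyResult("hip-fused-attn", True, ms))
print(tracker.baseline_plan, promoted)
```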

How the pieces fit together

Follow a single user query to see how compilers and runtimes effectively join the model.

- The router inspects a prompt, estimates prefill tokens and decode length, then checks policy: do not leave the region, keep cost under $0.15 per 1,000 tokens, and keep first-token tail latency under 400 milliseconds.

- The router asks a cost model for the best backend, and the answer depends on the workload: Ascend in region for long prompts because of graph fusion and memory pooling, Gaudi for short bursts with stable batching, and AMD for scheduled overnight batch jobs.

- The engine-level scheduler adjusts batch size and prefill parallelism. It triggers a kernel plan compiled for that backend, using the model’s packaged kernels and precision. KV cache placement and attention kernels differ per chip, but the application code does not change.

- Telemetry confirms tail latency is inside budget. If it drifts, the cluster router updates its plan and drains requests to the next best backend.
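The last step, noticing drift and draining to the next best backend, is small enough to sketch. This sketch assumes a nearest-rank p99 over a rolling window of 200 samples. It reuses the walkthrough's policy numbers, while the backend names and sample values are invented. A real system would add hysteresis so traffic does not flap between backends.

```python
import math
from collections import deque
from typing import List, Optional

TTFT_BUDGET_MS = 400.0      # first-token tail latency budget from the walkthrough
COST_CEILING_PER_1K = 0.15  # dollars per 1,000 tokens, also from the walkthrough


class BackendMonitor:
    """Rolling window of time-to-first-token samples for one backend."""

    def __init__(self, name: str, cost_per_1k: float, window: int = 200):
        self.name = name
        self.cost_per_1k = cost_per_1k
        self.samples = deque(maxlen=window)  # old samples fall off automatically

    def observe(self, ttft_ms: float) -> None:
        self.samples.append(ttft_ms)

    def p99_ms(self) -> float:
        """Nearest-rank 99th percentile of the current window."""
        if not self.samples:
            return 0.0  # no data yet: optimistically treated as healthy (assumption)
        ordered = sorted(self.samples)
        return ordered[math.ceil(0.99 * len(ordered)) - 1]

    def healthy(self) -> bool:
        return self.p99_ms() <= TTFT_BUDGET_MS and self.cost_per_1k <= COST_CEILING_PER_1K


def drain_target(current: str, ranked: List[BackendMonitor]) -> Optional[str]:
    """Walk the router's ranking and return the next healthy backend, if any."""
    for monitor in ranked:
        if monitor.name != current and monitor.healthy():
            return monitor.name
    return None


ascend = BackendMonitor("ascend-region", cost_per_1k=0.12)
gaudi = BackendMonitor("gaudi-region", cost_per_1k=0.10)
amd = BackendMonitor("amd-batch", cost_per_1k=0.20)  # over the cost ceiling
for _ in range(100):
    ascend.observe(300.0)
    gaudi.observe(350.0)
    amd.observe(200.0)
for _ in range(100):
    ascend.observe(600.0)  # simulated drift on the current backend
if not ascend.healthy():
    print(drain_target("ascend-region", [ascend, amd, gaudi]))
```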

The important part is not which chip won. It is that the model shipped with the knowledge to run well on all of them, and your system knew how to use that knowledge.

New risks, but better knobs

Heterogeneous backends mean more things to break. Fragmented kernel sets increase maintenance. Debugging cross-backend discrepancies is real work. But the knobs you gain are powerful:

- If your supply chain is choppy, you can keep serving by shifting between accelerators with minimal accuracy drift.

- If privacy rules tighten, you can keep data local without rewriting the app layer.

- If budgets wobble, you can change the price curve by routing to the cheapest viable backend for that hour or region.

Governance also gets clearer. With a portability test suite and per-backend policies, you can draw bright lines: what models are allowed to run where, under which price and privacy constraints, and with what performance guarantees.

What to build next

- A broker that speaks many runtimes. Build a small service that speaks CANN, ROCm, Gaudi, and CPU or NPU paths, normalizes telemetry, and exposes one gRPC interface to your apps.

- A profile exchange for backends. Define a simple file that lists supported kernels, precision, attention variants, and caveats for each backend of a release. Treat it like a driver manifest for models.

- Silicon-aware agents. Let the agent ask the platform: what can you do locally in 100 milliseconds and under 10 watts, and what must go to the rack? Teach the agent to adapt its reasoning depth to hardware limits.
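A silicon-aware agent can start with a very small negotiation. In the hypothetical sketch below, the device reports the latency and power cost of one reasoning step. The agent then picks the deepest local plan that fits within 100 milliseconds and 10 watts, or hands the task to the rack. The `LocalCapability` fields and the idea of a fixed per-step cost are simplifying assumptions.

```python
from dataclasses import dataclass
from typing import Optional

LATENCY_BUDGET_MS = 100.0
POWER_BUDGET_W = 10.0


@dataclass
class LocalCapability:
    """What the platform reports when the agent asks (hypothetical schema)."""
    ms_per_reasoning_step: float
    watts_while_running: float


def local_depth(cap: LocalCapability, wanted_steps: int) -> Optional[int]:
    """Deepest local reasoning plan within both budgets; None means send it to the rack."""
    if cap.watts_while_running > POWER_BUDGET_W:
        return None
    affordable = int(LATENCY_BUDGET_MS // cap.ms_per_reasoning_step)
    if affordable < 1:
        return None
    return min(wanted_steps, affordable)


laptop_npu = LocalCapability(ms_per_reasoning_step=18.0, watts_while_running=7.5)
print(local_depth(laptop_npu, wanted_steps=8))
```

Here the agent would run five steps locally instead of the eight it wanted. Whether a shallower local answer beats a deeper remote one is a policy decision, and the sketch does not settle it.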

The takeaway

Bigger models will keep arriving, but the competitive edge is shifting to better fit. Late September showed a clear turn: non-CUDA stacks moved from second class to first class, and model authors started shipping compilers and runtimes as part of the product. That makes portability and deployment pathways the new moat. In the next cycle, the best systems will not only understand language. They will understand silicon, and they will choose it in real time.