When Models Buy the Means of Compute: AI Becomes Capital

A new class of AI deals is reshaping the industry. When labs underwrite long-term GPU supply with equity and prepayments, compute stops being a bill and becomes capital. Here is how contracts, accounting, and agents are likely to evolve next.

The moment the category changed

On October 6, 2025, OpenAI and AMD announced a supply partnership that will place six gigawatts of AMD Instinct GPUs into OpenAI infrastructure, with an initial one gigawatt scheduled for the second half of 2026. The headline matters, but the structure matters more. This was not a routine capacity reservation. It paired long-dated chip deliveries with equity-linked incentives. In other words, the model buyer did not just rent the tools. It helped underwrite the factory line that produces them. The 6 gigawatt agreement reads as much like a project finance term sheet as it does a technology agreement.

The simple but profound shift is this: models are beginning to buy, or at least underwrite, the means of compute. Once models become partial owners of their upstream, the rest of the stack realigns. Chip road maps, data center construction, power procurement, and financing no longer move in parallel lanes. They close into a vertically coupled loop where delivery schedules, performance per watt, and deployment plans co-determine one another.

From software playbook to industrial physics

For a decade, the dominant mental model said AI is software. Ship a model, pay a cloud bill, and scale the product. That story breaks at gigawatt scale. Training and inference at the frontier hit constraints that look like power generation and semiconductor fabrication, not like web servers.

Inputs and outputs that feel like heavy industry

The inputs now include time, electricity, cooling water, logistics, and advanced packaging capacity, plus the silicon itself. The outputs are model checkpoints, tokens served, and service levels defined by predictable throughput. The friction is physical. If a substation permit slips by three months, a training plan slips by three months. If memory bandwidth per package lags, batch sizes and schedules must be rewritten. Anyone who has followed the race for watts, permits, land, and leverage knows this was inevitable.

Compute treated as an asset, not an expense

The OpenAI and AMD announcement surfaced a new baseline. At this scale, labs cannot treat compute as a monthly line item. They must treat it as an asset with a build schedule, warranty terms, and depreciation. The right to future compute becomes as valuable as what you can run today. Delivery risk and upgrade paths are no longer anecdotes. They are variables on the balance sheet.

Warrants, prepayments, and the balance sheet shift

Buried in the announcement is the playbook. AMD issued OpenAI a warrant to purchase up to 160 million AMD shares at a nominal exercise price, with vesting tied to delivery milestones and stock price thresholds. AMD filed an 8-K detailing the warrant, including a first tranche keyed to the initial one gigawatt and full vesting at six. This mechanism aligns chip supply, road map execution, and model deployment with long-dated financial upside.

Accounting follows incentives. Historically, a lab might expense cloud compute as consumed. In a warrant-plus-prepayment world, the right to delivery becomes an economic asset. Think of it as a long-dated capacity right, similar to offtake contracts in energy. Expect labs to carry these rights as some mix of prepaid deposits, intangible assets, and contract assets, with the warrants themselves marked to fair value as deliveries crystallize. Investor updates will add new disclosures such as reserved compute alongside cash, cash equivalents, and committed capital. Just as oil companies publish proved reserves, labs will publish reserved compute with delivery curves by generation.

How it works in practice:

- The lab prepays or commits to a multi year schedule. Cash and warrants reduce supplier risk, which pulls capacity forward in foundry and packaging queues.

- The supplier ties vesting to production milestones and stock price thresholds. Incentives line up across technology, operations, and capital markets.

- The lab books the right to compute deliveries as a contract asset. As chips arrive and enter service, it reclassifies each tranche to property and equipment and depreciates it into cost of revenue. Depreciation now attaches to fleets of accelerators just as it attaches to turbines and servers.
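The third step can be made concrete with a small schedule. This is a minimal sketch under hypothetical assumptions, not a description of any lab's actual books. The prepayment is allocated to delivery tranches, and each tranche leaves the contract-asset balance in the month it enters service. The hardware is then depreciated straight-line over an assumed 60-month useful life. `Tranche`, `contract_asset_balance`, and `depreciation_expense` are illustrative names, and the dollar figures are invented.

```python
from dataclasses import dataclass


@dataclass
class Tranche:
    service_month: int  # month the delivered chips enter service (0-indexed)
    cost: float         # share of the prepayment allocated to this tranche


def contract_asset_balance(prepayment, tranches, horizon):
    """Undelivered prepayment still carried as a contract asset at each month end."""
    balances, remaining = [], prepayment
    for month in range(horizon):
        remaining -= sum(t.cost for t in tranches if t.service_month == month)
        balances.append(remaining)
    return balances


def depreciation_expense(tranches, useful_life_months, horizon):
    """Straight-line depreciation per month for every tranche already in service."""
    expense = [0.0] * horizon
    for t in tranches:
        monthly = t.cost / useful_life_months
        last = min(t.service_month + useful_life_months, horizon)
        for month in range(t.service_month, last):
            expense[month] += monthly
    return expense


# Hypothetical figures in $ millions: a 1,000 prepayment delivered in two tranches.
tranches = [Tranche(service_month=0, cost=600.0), Tranche(service_month=6, cost=400.0)]
print(contract_asset_balance(1_000.0, tranches, horizon=12))
print(depreciation_expense(tranches, useful_life_months=60, horizon=12))
```

Real treatments depend on the applicable accounting standards and contract terms. The point is only that expense now follows delivery and service life rather than monthly consumption.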

This is the point where compute turns from operating expense into owned capital.

Compute denominated contracts are next

If you can denominate capacity, you can contract, hedge, and trade it. The units will match how builders think:

- Gigawatt months for power-backed capacity at specific facilities

- PetaFLOP days for training throughput on a named architecture

- Tokens per second for served inference with service level guarantees

- Rack month credits tied to memory footprints and interconnect topologies
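Translating between these units is mostly arithmetic. The sketch below assumes that a petaFLOP day means 10^15 FLOP per second sustained for 86,400 seconds, which is 8.64 × 10^19 FLOP of achieved (not peak) throughput. It also assumes a 30-day month and a constant load. The function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400
PETA = 1e15


def petaflop_days(total_flop: float) -> float:
    """Sustained 1e15 FLOP/s for one day is 8.64e19 FLOP."""
    return total_flop / (PETA * SECONDS_PER_DAY)


def gigawatt_month_mwh(gigawatts: float, days: int = 30) -> float:
    """Energy drawn by a constant load over a month (30-day month assumed)."""
    return gigawatts * 1_000 * 24 * days


def tokens_per_month(tokens_per_second: float, days: int = 30) -> float:
    """Served tokens if a throughput guarantee is met around the clock."""
    return tokens_per_second * SECONDS_PER_DAY * days


# A hypothetical 1e24 FLOP training run, expressed in petaFLOP days.
print(round(petaflop_days(1e24)))
print(gigawatt_month_mwh(1.0))
```

Under those assumptions, a hypothetical 10^24 FLOP training run works out to about 11,574 petaFLOP days. One gigawatt held for a 30-day month is 720,000 MWh. Quoting capacity from peak datasheet FLOPS instead of delivered throughput would overstate what a contract actually buys.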

A representative term could read like this: the buyer receives 80 petaFLOP days per month of MI450-class training capacity in a defined region, with a step-up option to MI470-class capacity upon release. Pricing resets quarterly against a published index of FLOP efficiency and energy cost. Delivery shortfalls convert to cash or additional credits at a penalty factor. This closely resembles power purchase agreements, airline capacity leases, and cloud reserved instances, adapted to the physics of modern acceleration.
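The mechanics of such a term can be sketched in a few lines. Everything beyond the 80 petaFLOP day quantity is a hypothetical assumption: the $1,000 base price, the 1.5 penalty factor, and the form of the reset. The reset here scales price up with an energy-cost index and down with a FLOP-efficiency index, both normalized to 1.0 at signing.

```python
from dataclasses import dataclass


@dataclass
class ComputeTerm:
    contracted_pf_days: float = 80.0  # per month, as in the example term
    base_price: float = 1_000.0       # hypothetical $ per petaFLOP day at signing
    penalty_factor: float = 1.5       # hypothetical multiplier applied to shortfalls


def reset_price(term: ComputeTerm, energy_index: float, efficiency_index: float) -> float:
    """Quarterly reset. Both indices are hypothetical and equal 1.0 at signing:
    price rises with energy cost and falls as FLOP efficiency improves."""
    return term.base_price * energy_index / efficiency_index


def settle_month(term: ComputeTerm, delivered_pf_days: float, price: float,
                 as_credits: bool = False) -> dict:
    """Convert any delivery shortfall into cash or extra credits at the penalty factor."""
    shortfall = max(0.0, term.contracted_pf_days - delivered_pf_days)
    compensation = shortfall * term.penalty_factor
    if as_credits:
        return {"shortfall": shortfall, "cash": 0.0, "credits": compensation}
    return {"shortfall": shortfall, "cash": compensation * price, "credits": 0.0}


term = ComputeTerm()
price = reset_price(term, energy_index=1.2, efficiency_index=1.5)
print(price, settle_month(term, delivered_pf_days=70.0, price=price))
```

In the example, a 20 percent rise in energy cost combined with a 50 percent efficiency gain resets the price to $800 per petaFLOP day. A 10 petaFLOP day shortfall then settles as $12,000 in cash or 15 extra petaFLOP days of credit.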

Two enabling accounting and market features will make these contracts usable at scale:

- Reserve compute accounting. Labs disclose forward compute on a delivery curve, similar to backlog, with sensitivity to yields and ramp schedules. Auditors can tie these disclosures to supplier reports.

- Compute indices. Benchmarks publish standard indices for capacity, for example, an MI450 petaFLOP day in U.S. West at 99.9 percent availability. Auditable indices create hedging instruments and unlock bankability.
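A reserve compute disclosure could present the same delivery schedule under several scenarios, much as backlog is shown with sensitivities. This minimal sketch assumes a hypothetical plan of 250 MW of accelerators per quarter. It models only two sensitivities, a yield haircut and a whole-schedule slip. `delivery_curve` and `sensitivity_table` are illustrative names, not a reporting standard.

```python
from itertools import accumulate


def delivery_curve(scheduled, yield_factor=1.0, slip_quarters=0):
    """Cumulative expected deliveries per quarter over a fixed disclosure horizon.

    scheduled: planned deliveries per quarter (hypothetical units, e.g. MW of accelerators)
    yield_factor: fraction of planned units expected to arrive
    slip_quarters: ramp delay that pushes the whole schedule later
    """
    shifted = [0.0] * slip_quarters + [q * yield_factor for q in scheduled]
    return list(accumulate(shifted[: len(scheduled)]))


def sensitivity_table(scheduled, scenarios):
    """One cumulative curve per named scenario, ready to sit beside a backlog disclosure."""
    return {name: delivery_curve(scheduled, **params) for name, params in scenarios.items()}


plan = [250, 250, 250, 250]  # hypothetical MW per quarter
table = sensitivity_table(plan, {
    "base": {},
    "yield_80pct": {"yield_factor": 0.8},
    "one_quarter_slip": {"slip_quarters": 1},
})
for name, curve in table.items():
    print(name, curve)
```

Keeping the horizon fixed means a slip shows up as capacity pushed out of the disclosure window. That is exactly the risk an auditor would want to tie back to supplier reports.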

Vendor financed AI factories

Once contracts standardize, the capital stack opens, and the economics begin to look like those of energy, shipping, and aviation. Vendors will finance part of the build as long as offtake is locked.

Expect three layers:

- Senior project debt from banks and export credit agencies, secured by the facility, its offtake contracts, and a first claim on hardware

- Vendor financing from chip suppliers and integrators who take warrants, revenue share, or preferred equity in exchange for delivery priority

- Customer prepayments and take-or-pay agreements that secure capacity at a known price
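The ordering of those layers matters most when cash is short. Below is a minimal sketch of a seniority waterfall. It assumes hypothetical claim amounts and treats customer prepayments and take-or-pay receipts as part of the cash available rather than as a claim. The function name and tier labels are illustrative.

```python
def waterfall(cash_available, tiers):
    """Pay claims strictly by seniority; return what each tier received and the residual.

    tiers: (name, amount_due) pairs ordered senior-first (hypothetical figures)
    """
    paid, remaining = {}, cash_available
    for name, due in tiers:
        amount = min(due, remaining)
        paid[name] = amount
        remaining -= amount
    return paid, remaining


# Hypothetical quarter: cash from offtake and customer prepayments against
# senior debt service, then a vendor preferred return.
tiers = [("senior_debt_service", 60.0), ("vendor_preferred_return", 30.0)]
print(waterfall(75.0, tiers))   # vendor tier is only partly paid
print(waterfall(120.0, tiers))  # 30.0 is left over for the project sponsor
```

Real project finance documents add reserve accounts, covenants, and cure periods. This sketch shows only why senior lenders insist on a first claim.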

The structure rhymes with power plant and aircraft finance, except that the asset soaks up electricity rather than producing it. Chipmakers pull demand forward by offering financing and equity-linked upside. Data center developers stitch together land, power, and cooling. Labs lock in rights to future compute the way miners lock in future rigs. The shared objective is throughput.

The vertically coupled loop

The new loop has four gears that turn together, and none can spin alone:

- Chip road maps. Packaging breakthroughs and memory bandwidth set the pace.

- Power and real estate. Site acquisition, grid interconnects, and water rights determine where compute can plausibly live.

- Financing. Warrants, prepayments, and offtake contracts make capacity bankable.

- Software and models. Agent workloads decide whether the capacity earns its keep.

Accelerate one gear and the others must follow. That is why a single supply deal can move a stock price, why a substation permit can change a training plan, and why compiler choices at a lab influence a foundry mask set two years out. As models become more chip-literate and learn to speak silicon, this coupling will only tighten.

Competition and antitrust in a world of capacity rights

If a lab can earn equity for pledging scale, regulators will ask whether capacity is being foreclosed to rivals. Useful tests will look beyond slogans and into schedules and terms:

- Share of forward capacity. Not just installed base today, but the share of next generation silicon presold to the top buyers.

- Exclusivity in disguise. Milestones that favor one lab so strongly that others cannot realistically qualify.

- Discrimination in interconnects and software. Whether vendors ship optimized stacks to select buyers months ahead of others.
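The first test, share of forward capacity, reduces to a concentration measure over the presale book rather than over the installed base. This sketch uses the Herfindahl-Hirschman Index, a standard concentration metric that U.S. antitrust agencies apply in merger review. It runs on a hypothetical book for one accelerator generation, and the buyer names and unit counts are invented.

```python
def forward_shares(presold):
    """Each buyer's share of next-generation units already presold."""
    total = sum(presold.values())
    return {buyer: units / total for buyer, units in presold.items()}


def top_n_share(presold, n):
    """Combined forward share held by the n largest buyers."""
    return sum(sorted(forward_shares(presold).values(), reverse=True)[:n])


def hhi(presold):
    """Herfindahl-Hirschman Index: sum of squared percentage shares (0 to 10,000)."""
    return sum((share * 100) ** 2 for share in forward_shares(presold).values())


# Hypothetical presale book for one accelerator generation, in units.
book = {"lab_a": 500_000, "lab_b": 300_000, "lab_c": 200_000}
print(top_n_share(book, 1), round(hhi(book)))
```

With one buyer holding half of next-generation units, the index reaches 3,800 even though three buyers appear in the book.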

Remedies will likely focus on disclosure and standardized access rather than structural separation. Think of requirements to publish fair queue positions, to offer standard tranches without tying clauses, or to provide non-discriminatory access to interconnect firmware and compilers after a fixed window. This is regulation that behaves like runtime policy, the same mindset that puts law inside the agent loop.

Geopolitics: silicon is strategy

Every gigawatt of compute carries a passport. Front-end wafers might be fabricated in Taiwan, Korea, or Arizona. Advanced packaging may live in Malaysia, Singapore, or the United States. Power comes from a local grid with its own climate and reliability profile. Export controls define where certain accelerators can ship and at what performance thresholds. The more labs own their forward supply, the more they inherit these constraints.

Three knock-on effects follow:

- Governments will treat compute capacity like a strategic stockpile. As with petroleum reserves, they will encourage secured local compute for critical industries and public sector workloads.

- Subsidy programs will shift from broad grants to matched financing against capacity contracts that directly unlock private project debt.

- Cross border arrangements will include reciprocal recognition of compute reserves, similar in spirit to mutual recognition in aviation or medical certifications.

Agents and databases will price compute like currency

If compute becomes an asset, someone must keep its ledger. The next generation of agent systems and databases will do more than store embeddings and routes. They will price, allocate, and trade compute in real time.

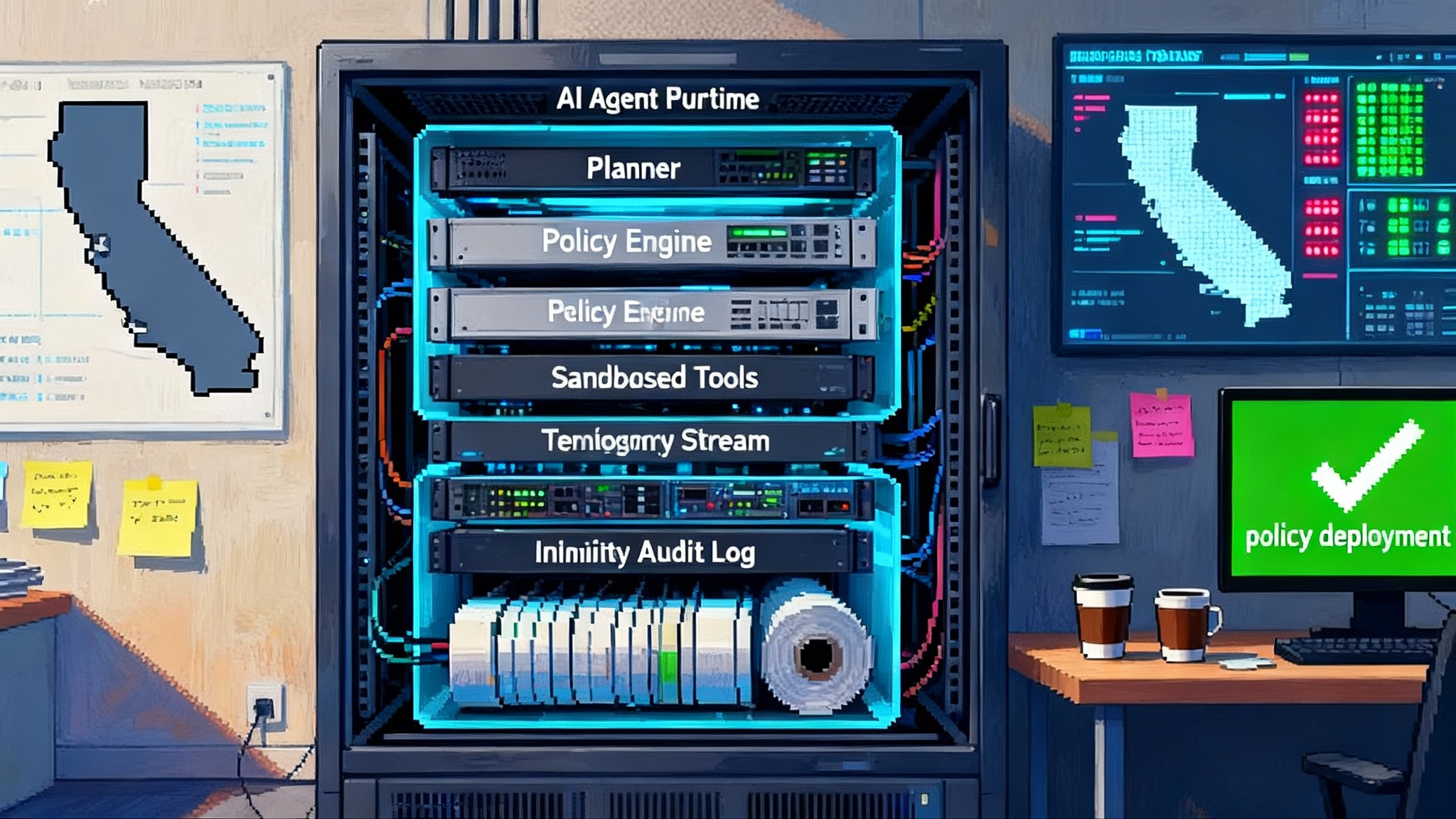

Imagine an enterprise model lab. One agent watches the capacity index. Another monitors the training backlog. A third negotiates top-up blocks of petaFLOP days across vendors. A fourth re-optimizes the graph and batch sizes to match what actually arrives this week. A reserve compute database emerges as the single source of truth for the firm’s most expensive input. It stores rights, encumbrances, vesting schedules, regional constraints, and service commitments. It exposes a policy engine that decides which job runs where under budget and latency constraints.
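The policy engine's placement decision can be sketched against such a ledger. This is a minimal, hypothetical model, not an existing product's schema. Each `CapacityRight` records a region, an architecture, the remaining petaFLOP days, a vesting month, a price, and any encumbrances. A job's latency constraint is approximated by a set of allowed regions. The engine picks the cheapest vested right that fits both the job's capacity and its budget.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CapacityRight:
    right_id: str
    region: str
    architecture: str
    pf_days: float           # unencumbered petaFLOP days remaining
    vest_month: int          # first month the right can be used
    price_per_pf_day: float
    encumbrances: dict = field(default_factory=dict)  # job_id -> petaFLOP days pledged


@dataclass
class Job:
    job_id: str
    pf_days: float
    allowed_regions: set     # stand-in for latency and residency constraints
    budget: float


def place(job: Job, rights: list, month: int) -> Optional[CapacityRight]:
    """Encumber the cheapest vested right that fits the job's regions, size, and budget."""
    candidates = [
        r for r in rights
        if r.vest_month <= month
        and r.region in job.allowed_regions
        and r.pf_days >= job.pf_days
        and r.price_per_pf_day * job.pf_days <= job.budget
    ]
    if not candidates:
        return None
    best = min(candidates, key=lambda r: r.price_per_pf_day)
    best.pf_days -= job.pf_days
    best.encumbrances[job.job_id] = job.pf_days
    return best


rights = [
    CapacityRight("r1", "us-west", "MI450-class", 100.0, 0, 900.0),
    CapacityRight("r2", "us-west", "MI450-class", 100.0, 3, 700.0),
    CapacityRight("r3", "eu-central", "MI450-class", 100.0, 0, 600.0),
]
chosen = place(Job("train-7", 50.0, {"us-west"}, 50_000.0), rights, month=1)
print(chosen.right_id if chosen else "no capacity")
```

A production system would persist rights transactionally and weigh more than price. The shape stays the same, though: placement is a query over rights, not over machines.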

In that world, enterprises carry compute credit balances the way they carry working capital. Finance teams hedge with simple futures on compute indices. Treasury allocates compute across business lines through internal transfer pricing. Procurement blends long-term parcels of reserved capacity with spot and on-demand cloud, governed by rules that autonomous systems enforce without waiting for human approval. This naturally complements an era in which web pages become workers and services compose themselves.
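Two of those finance functions are simple enough to show directly. The sketch assumes a cash-settled futures contract on a hypothetical compute index quoted in dollars per petaFLOP day. It ignores basis risk, fees, and margin, and it uses a single internal transfer rate. All figures are invented.

```python
def hedged_cost(quantity_pf_days, futures_price, settlement_index):
    """Net cost of buying compute at the index while long a cash-settled future.

    The spot purchase at the settlement index, offset by the futures gain or loss,
    nets to the locked futures price (no basis risk, fees, or margin assumed).
    """
    spot_cost = quantity_pf_days * settlement_index
    futures_pnl = quantity_pf_days * (settlement_index - futures_price)
    return spot_cost - futures_pnl


def transfer_charges(usage_pf_days, internal_rate):
    """Charge each business line its usage at one internal transfer price."""
    return {unit: used * internal_rate for unit, used in usage_pf_days.items()}


# Hypothetical: 100 petaFLOP days hedged at $800 per petaFLOP day.
for index_at_settlement in (600.0, 1_000.0):
    print(hedged_cost(100, 800.0, index_at_settlement))
print(transfer_charges({"search": 60, "assistant": 40}, internal_rate=800.0))
```

Whatever the index does, the hedged buyer's net cost stays at the locked price. That stability is what lets treasury quote a steady internal rate to business lines.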

Two design patterns will appear quickly:

- Credit-backed schedulers. A training run does not start unless the scheduler can immutably assign enough credits from the reserve compute database to see the job through. Mid-run preemption for lack of budget disappears, and costs become legible.

- Compute bill of materials. Every product feature ships with a bill that includes compute hours, memory bandwidth, and network ingress assumptions. Product management and finance reference the same numbers.
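Both patterns map onto small data structures. This sketch uses a hypothetical in-memory ledger as a stand-in for the reserve compute database. `admit` reserves a job's full estimate before the job starts, or rejects the job outright. `settle` returns unused credits or charges an overrun, and every step is appended to a log. `ComputeBOM` is an equally hypothetical per-feature record of compute time, memory bandwidth, and network ingress assumptions.

```python
import threading
from dataclasses import dataclass


class CreditLedger:
    """Hypothetical in-memory stand-in for a reserve compute database."""

    def __init__(self, balance_pf_days: float):
        self.balance = balance_pf_days
        self.log = []  # append-only history: (event, job_id, amount)
        self._lock = threading.Lock()

    def admit(self, job_id: str, estimate_pf_days: float) -> bool:
        """Reserve the full estimate up front, or refuse to start the job."""
        with self._lock:
            if estimate_pf_days > self.balance:
                self.log.append(("rejected", job_id, estimate_pf_days))
                return False
            self.balance -= estimate_pf_days
            self.log.append(("reserved", job_id, estimate_pf_days))
            return True

    def settle(self, job_id: str, reserved: float, used: float) -> None:
        """Return unused credits, or charge an overrun, when the job finishes."""
        with self._lock:
            self.balance += reserved - used
            self.log.append(("settled", job_id, reserved - used))


@dataclass
class ComputeBOM:
    """Per-request resource assumptions shipped with a product feature."""
    feature: str
    gpu_seconds_per_request: float
    memory_bandwidth_gb_s: float   # assumed sustained bandwidth while serving
    ingress_mb_per_request: float

    def monthly_gpu_hours(self, requests_per_month: float) -> float:
        return self.gpu_seconds_per_request * requests_per_month / 3_600


ledger = CreditLedger(100.0)
print(ledger.admit("pretrain-1", 60.0), ledger.admit("finetune-2", 50.0))
```

A real implementation would need durable, transactional storage. The lock here only keeps concurrent admissions within one process from double-spending credits.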

What builders and investors should do now

- If you build models. Model your pipeline against reserve compute, not hoped-for purchases. Ask every supplier for standard, auditable units of capacity and for penalties on delivery shortfalls. Push for warranties on performance per watt and for clear upgrade paths within a generation.

- If you run infrastructure. Publish a standard capacity schedule with quarterly tranches and service level commitments. Offer customers conversion rights from one accelerator generation to the next at a stated ratio so they can hedge technology risk without renegotiation.

- If you allocate capital. Underwrite compute projects as you would a power plant. Demand offtake contracts, take-or-pay provisions, and priority rights on replacement hardware. Treat warrants and revenue shares as the price of guaranteed delivery.

- If you build agent and database software. Design for compute as a first class asset. Build primitives for rights, vesting, and allocation. Expose auditable histories of every job’s credit use. Unify the scheduler and the ledger in one system.
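The conversion rights suggested for infrastructure operators can be expressed as a small contract object. In this sketch the ratio, the exercise window, and the generation labels are hypothetical; MI470-class echoes the illustrative term earlier in this piece. The exercise rule is a simple break-even: convert only if the ratio times next-generation throughput per unit exceeds current throughput per unit.

```python
from dataclasses import dataclass


@dataclass
class ConversionRight:
    from_gen: str
    to_gen: str
    ratio: float   # next-generation units granted per current-generation unit (hypothetical)
    window: tuple  # (first_month, last_month) during which the right can be exercised

    def exercise(self, units: float, month: int) -> float:
        """Return next-generation units received, if the window is open."""
        start, end = self.window
        if not start <= month <= end:
            raise ValueError("conversion window is closed")
        return units * self.ratio


def worth_converting(right: ConversionRight, perf_from: float, perf_to: float) -> bool:
    """Convert only if delivered throughput rises: ratio * new perf > old perf."""
    return right.ratio * perf_to > perf_from


right = ConversionRight("MI450-class", "MI470-class", ratio=0.5, window=(0, 12))
print(right.exercise(100, month=6), worth_converting(right, perf_from=1.0, perf_to=2.5))
```

A stated ratio lets both sides price technology risk once, at signing, instead of renegotiating each time a generation ships.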

The road ahead

The OpenAI and AMD partnership sets a pattern. Multiyear supply. Equity linked alignment. Delivery schedules expressed in gigawatts rather than vague availability windows. The more this pattern repeats, the more AI becomes a capital industry rather than a software category.

There is a deeper implication. When models own a slice of their upstream, their learning curve couples to the world’s ability to build the next generation of silicon and the power to run it. That tight coupling forces discipline into every assumption. There is no hand-waving when chips are vesting and project debt is accruing. Timelines, yields, and thermals become board-level topics for software companies. Suppliers, in turn, learn to price the output of their factories in the units their customers manage most closely: tokens per second, petaFLOP days, gigawatt months.

The result will look familiar to anyone who has financed ships, planes, or power plants. Contracts will standardize. Risk will migrate to the parties best able to hold it. Equity will align incentives where cash alone cannot. A new class of infrastructure will emerge that is priced, traded, and scheduled as a living market.

This is the line crossed on October 6, 2025. Models are not just paying for compute. They are underwriting it. The labs that master that discipline will not simply have more capacity. They will have better capacity, booked earlier, financed cheaper, and tuned to their workloads. That is how you turn compute from a bill into a balance sheet. That is also how the next wave of AI will be built: by models that buy the means of compute and by builders who treat compute like capital.