Discovery Becomes Runtime: AI Drugs Move From Code to Clinic

AI drug discovery is shifting from slow handoffs to a live runtime that compiles hypotheses, runs experiments on robots, and streams evidence to regulators. Funding, human data, and agentic tools point to a stack that is already deploying.

Breaking: the scientific method just went live

On March 31, 2025, Isomorphic Labs announced a $600 million funding round to advance its AI drug design engine and move programs into the clinic. That moment did not just fuel another hype cycle. It marked a phase change in how medicine gets made. When a company builds a pipeline that can design molecules, simulate their behavior, and plan trials as a cohesive stack, discovery starts to look like a running system you can instrument, update, and deploy. See the Isomorphic Labs $600 million investment round.

This year’s other data point came from the clinic. In June 2025, a randomized Phase IIa study of rentosertib for idiopathic pulmonary fibrosis reported dose-dependent gains in lung function with a manageable safety profile. The candidate emerged from a generative workflow that identified both the target and the small molecule. That is not the finish line, but it is a concrete public signal that AI-designed drugs are crossing from paper to patients. Read the Nature Medicine rentosertib results.

Put those two events together, add a wave of agentic and biology-tuned language model releases, and the conclusion becomes hard to ignore. Discovery is turning into a runtime: a live software stack that compiles hypotheses into protocols, executes them on robots, packages results for regulators, and ships evidence to peers in near real time.

Hypotheses as compile targets

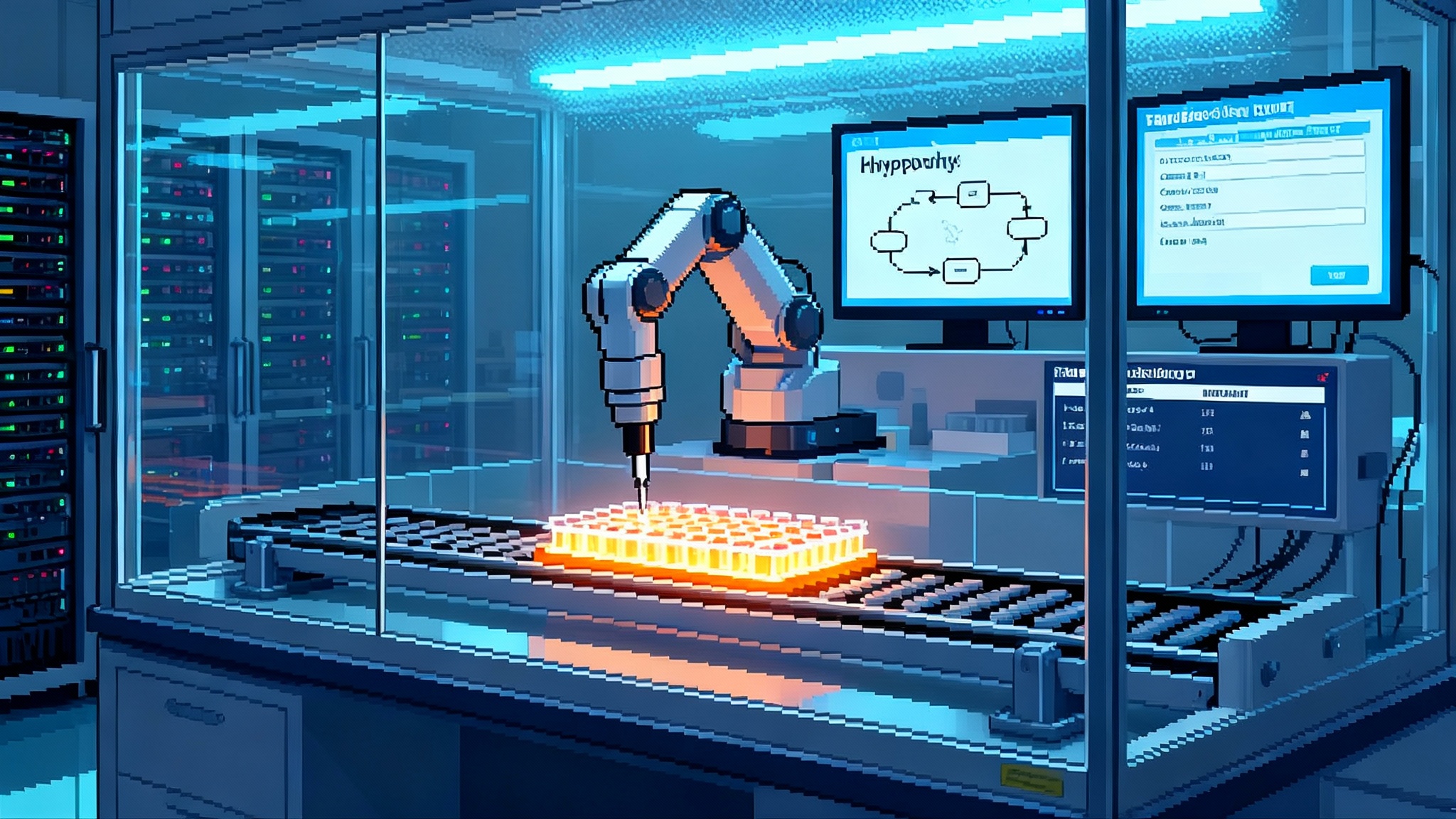

In traditional science, a hypothesis is a sentence in a paper. In the new stack, a hypothesis becomes a compile target. You specify the biological claim, the measurable effect size, and acceptable risks. The system generates assays, selects reagents, plans synthesis, and schedules runs. If the claim fails tests, the pipeline throws a build error, points to logs, and proposes a minimal change set to fix it.
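A minimal Python sketch of the compile-target idea follows. Every name in it (`Hypothesis`, `BuildError`, `compile_hypothesis`) and every validation rule is a hypothetical illustration, not any platform's actual API. It shows only the shape of the idea: a claim with an effect size and a risk budget either compiles into scheduled steps or fails with a list of problems that a scientist or an agent can fix.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    claim: str
    min_effect_size: float  # smallest effect worth detecting (illustrative units)
    max_risk: float         # acceptable false-positive rate (alpha)
    assays: list = field(default_factory=list)


class BuildError(Exception):
    """Raised when a hypothesis cannot be compiled into a runnable plan."""

    def __init__(self, problems):
        super().__init__("; ".join(problems))
        self.problems = problems  # each entry doubles as a suggested fix


def compile_hypothesis(h: Hypothesis) -> list:
    problems = []
    if h.min_effect_size <= 0:
        problems.append("min_effect_size must be positive")
    if not 0 < h.max_risk < 0.5:
        problems.append("max_risk should be in (0, 0.5)")
    if not h.assays:
        problems.append("no assay can measure the claim; add one")
    if problems:
        raise BuildError(problems)
    # Each assay becomes a scheduled step; real ordering and resourcing are omitted.
    return [
        {"step": i, "assay": a, "alpha": h.max_risk, "target_effect": h.min_effect_size}
        for i, a in enumerate(h.assays, start=1)
    ]


# A claim with no way to measure it fails the "build".
try:
    compile_hypothesis(Hypothesis("Compound X inhibits kinase K", 1.5, 0.05))
except BuildError as err:
    print(err.problems)  # ['no assay can measure the claim; add one']
```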

Think of the design-make-test-learn cycle as continuous integration for biology. Models propose structures and mechanisms. Automated labs build and measure. Analysis services validate and version the results. Then the next commit starts. When this works, iteration speed is no longer bottlenecked by human calendar time. Weeklong waits for plate availability turn into queued jobs, and experimental flakiness becomes a monitoring problem rather than a mystery.

A concrete example helps. A kinase hypothesis triggers three branches. Branch one explores scaffold A using a retrosynthesis planner. Branch two explores scaffold B with explicit constraints on solubility and permeability. Branch three probes an unrelated allosteric pocket predicted by a structure model. The runtime splits the work, assigns it to robots, and merges the data back into a shared evidence graph. Medicinal chemists do not disappear. They review diffs, override defaults, and place bold bets when models get conservative.
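The branching example can be sketched as a concurrent fan-out followed by a merge. The three branch functions are placeholders returning made-up records, and the solubility (logS) and permeability (Papp) thresholds are illustrative assumptions. A real runtime would call a retrosynthesis planner, property predictors, and a structure model, and would dispatch work to robots rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical branch workers. Real ones would call a retrosynthesis planner,
# a property predictor, and a structure model; these return placeholder records.
def scaffold_a():
    return [{"id": "A-1", "route_steps": 4}, {"id": "A-2", "route_steps": 7}]


def scaffold_b(min_log_s=-4.0, min_papp=5.0):
    # Explicit solubility (logS) and permeability (Papp) constraints; values are illustrative.
    candidates = [
        {"id": "B-1", "log_s": -3.2, "papp": 12.0},
        {"id": "B-2", "log_s": -5.1, "papp": 20.0},  # fails solubility
        {"id": "B-3", "log_s": -3.9, "papp": 2.0},   # fails permeability
    ]
    return [c for c in candidates if c["log_s"] >= min_log_s and c["papp"] >= min_papp]


def allosteric_pocket():
    return [{"id": "P-1", "site": "predicted allosteric pocket"}]


BRANCHES = {"scaffold_A": scaffold_a, "scaffold_B": scaffold_b, "allosteric": allosteric_pocket}


def fan_out_and_merge(hypothesis_id, branches=BRANCHES):
    """Run branches concurrently, then merge results as edges in a shared evidence graph."""
    graph = {hypothesis_id: []}
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = {name: pool.submit(fn) for name, fn in branches.items()}
        for name, future in futures.items():
            for candidate in future.result():
                graph[hypothesis_id].append({"branch": name, **candidate})
    return graph


graph = fan_out_and_merge("kinase-hyp-001")
print([edge["id"] for edge in graph["kinase-hyp-001"]])  # ['A-1', 'A-2', 'B-1', 'P-1']
```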

The clinical gate is opening

It is one thing to dock a ligand or predict a conformation. It is another to show patient benefit. That is why the rentosertib signal matters. A randomized, controlled human study with dose-dependent functional gains is not an approval, but it is public evidence that the front half of the stack can produce molecules and mechanisms that survive real-world constraints.

Across oncology and fibrosis programs, the pattern is similar. Teams are running smaller, faster Phase I or IIa trials that probe mechanism, dose, and biomarkers with tight, model-informed designs. Two shifts follow.

- Trial designs become more like software experiments. Instead of one monolithic protocol, you see a series of short cycles that push and pull on the same mechanism under different boundary conditions. Each cycle feeds data back into the models that set up the next one.

- The unit of progress moves from a publication to a stream of validated measurements, prespecified analyses, and updated priors. Peer review does not end. It changes shape.
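One simple way to picture "updated priors" across short cycles is a conjugate Beta-binomial update per dose arm, assuming a binary responder endpoint. The counts below are invented for illustration, and a real adaptive design would also need prespecified decision rules and error-rate control. The sketch only shows how each cycle's data sets up the next one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPrior:
    alpha: float = 1.0  # pseudo-count of responders
    beta: float = 1.0   # pseudo-count of non-responders

    def update(self, responders: int, n: int) -> "BetaPrior":
        """Conjugate Beta-binomial update after observing `responders` out of `n`."""
        return BetaPrior(self.alpha + responders, self.beta + n - responders)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


def run_cycles(cycle_results, arms=("low", "mid", "high")):
    """Each cycle maps arm -> (responders, patients); posteriors carry forward."""
    posteriors = {arm: BetaPrior() for arm in arms}
    for cycle in cycle_results:
        for arm, (responders, n) in cycle.items():
            posteriors[arm] = posteriors[arm].update(responders, n)
    return posteriors


# Invented counts for two short cycles, for illustration only.
cycles = [
    {"low": (1, 6), "mid": (2, 6), "high": (3, 6)},
    {"low": (1, 6), "mid": (3, 6), "high": (4, 6)},
]
posteriors = run_cycles(cycles)
focus = max(posteriors, key=lambda arm: posteriors[arm].mean)
print(focus, round(posteriors[focus].mean, 3))  # high 0.571
```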

Peer review as telemetry

Peer review used to be a gate before publication. In a runtime, it looks like an observability layer. Preprints, registered protocols, and code are not just artifacts. They are telemetry. Reviewers become the site reliability engineers (SREs) of evidence quality, checking data lineage, statistical alarms, and model version drift.
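As a sketch of what a lineage check might look like, the snippet below ties each data point to its robot, reagent lot, batch, and a hash of its analysis code, then asks whether a rerun on a new batch reproduced it. The `DataPoint` fields, the identifiers, and the 20 percent tolerance are hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPoint:
    value: float
    robot_id: str
    reagent_lot: str
    analysis_code: str  # source of the analysis step that produced `value`
    batch: str

    @property
    def code_hash(self) -> str:
        # Short content hash so two points can be tied to identical analysis code
        return hashlib.sha256(self.analysis_code.encode()).hexdigest()[:12]


def trace(point: DataPoint) -> dict:
    """Answer 'which robot, which lot, which code' for a single plotted point."""
    return {"robot": point.robot_id, "lot": point.reagent_lot,
            "analysis": point.code_hash, "batch": point.batch}


def reproduced(original: DataPoint, rerun: DataPoint, rel_tol: float = 0.2) -> bool:
    """Same pipeline, new batch, value within a relative tolerance."""
    same_pipeline = original.code_hash == rerun.code_hash
    new_batch = original.batch != rerun.batch
    close = abs(rerun.value - original.value) <= rel_tol * abs(original.value)
    return same_pipeline and new_batch and close


CODE = "ic50 = fit_four_parameter_logistic(curve)"  # placeholder analysis source
p1 = DataPoint(120.0, "robot-07", "LOT-A12", CODE, "batch-1")
p2 = DataPoint(131.0, "robot-03", "LOT-B04", CODE, "batch-2")
print(trace(p1)["robot"], reproduced(p1, p2))  # robot-07 True
```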

When a lab posts a figure, the community should be able to trace which robot, which lot, and which analysis code produced each point, and whether the same pipeline reproduced the finding on a new batch last Tuesday. This is not science fiction. It is a set of process upgrades.

- Version everything, from compound libraries to plate layouts.

- Publish structured methods in machine-readable form.

- Use continuous benchmarks such as blinded control tasks and known positive controls sprinkled through the queue.

Think of it as unit tests for wet work. The reward is fewer irreproducible blips and faster convergence on what is true. If you want a broader view of how governance becomes part of execution, see how regulation becomes runtime.
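One established example of a unit test for wet work is the Z'-factor, a standard screening-assay quality metric computed from positive and negative control wells; a common rule of thumb treats a Z' of at least 0.5 as an excellent assay. The sketch below assumes controls are laid out on every plate and quarantines plates that fall below the threshold. The control values are made up.

```python
from statistics import mean, stdev


def z_prime(positive, negative):
    """Z'-factor from positive- and negative-control wells (Zhang et al., 1999)."""
    spread = 3 * (stdev(positive) + stdev(negative))
    window = abs(mean(positive) - mean(negative))
    return 1 - spread / window


def check_plate(plate_id, positive, negative, threshold=0.5):
    """A unit test for a plate: fail loudly if controls do not separate cleanly."""
    z = z_prime(positive, negative)
    if z < threshold:
        raise AssertionError(f"{plate_id}: Z'={z:.2f} is below {threshold}; quarantine the plate")
    return z


clean = check_plate("P-001", [100, 102, 98, 101, 99], [10, 11, 9, 10, 10])
print(round(clean, 2))  # 0.92
```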

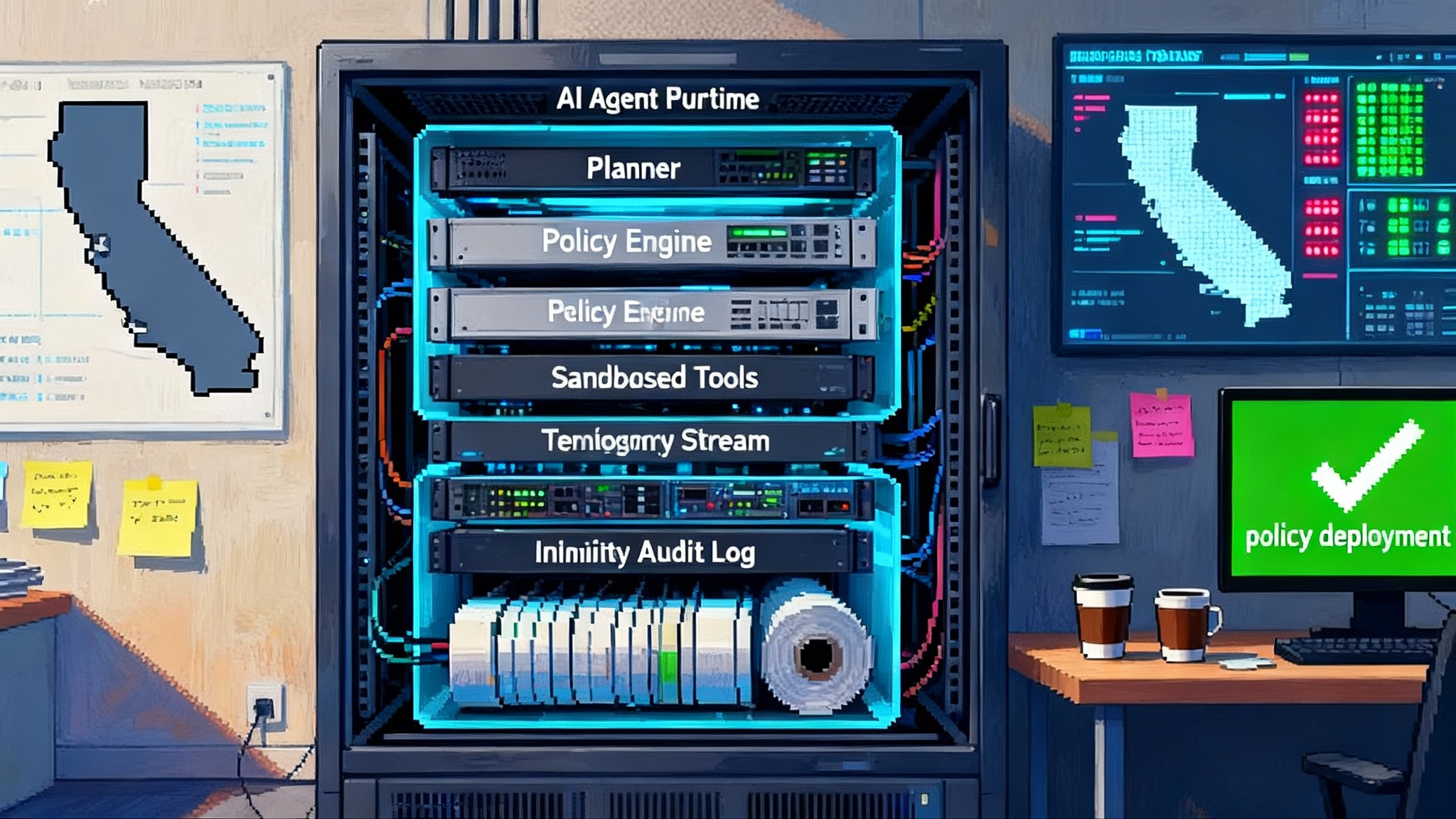

The stack: models, robots, and regulatory APIs

A runtime implies components. Three matter most today.

Models

Structure prediction and interaction models have matured. New tools can reason across proteins, nucleic acids, ligands, and their complexes. On top of them sit biology-specialized language models and agents that plan, call tools, and cite sources. In 2025 alone, we saw releases like TxAgent, CLADD, DrugPilot, and ChemDual. They are not perfect, but the direction is clear. Models are becoming operators, not just oracles.

General-purpose models have stepped forward too, with longer context windows, better tool use, and instruction following that makes them credible lab copilots. That change matters for smaller teams. A graduate student can stitch together retrieval, planning, and lab control without spending a year on infrastructure.
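The stitching a graduate student might do can be sketched in a few functions. This is a hypothetical illustration: the retrieval table, the rule-based `plan` (standing in for a language model), and `fake_lab_submit` (standing in for a remote lab's job API) are all assumptions, and no real model or lab service is called.

```python
from typing import Callable


def retrieve(query: str) -> list:
    # Hypothetical stand-in for a literature or database search.
    notes = {"kinase K": ["K phosphorylates substrate S", "K knockdown lowers marker M"]}
    return notes.get(query, [])


def plan(findings: list) -> list:
    # Hypothetical rule-based stand-in for an LLM planner: one assay per finding.
    return [{"assay": f"confirm: {fact}", "replicates": 3} for fact in findings]


def run_agent(query: str, submit: Callable[[dict], str]) -> list:
    """Chain retrieval -> planning -> lab submission; return the lab's job ids."""
    findings = retrieve(query)
    if not findings:
        return []  # nothing to act on; a real agent might broaden the search
    return [submit(step) for step in plan(findings)]


submitted = []


def fake_lab_submit(step: dict) -> str:
    # Stand-in for a remote lab's job API (assumed): records the step, returns an id.
    submitted.append(step)
    return f"job-{len(submitted)}"


print(run_agent("kinase K", fake_lab_submit))  # ['job-1', 'job-2']
```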

Robots

The lab is turning into a cloud resource. Platforms like Arctoris’s Ulysses, and others with similar capabilities, execute biochemistry and cell biology cascades at scale with precise timing, standardized workflows, and rich metadata. The closed loop becomes real when a model’s request shows up as a queued job, a robotic arm moves a plate, and results stream into analysis without manual copying and pasting.

The benefit is not only speed. It is data quality, fewer silent deviations, and the ability to replay a run exactly as it happened months later. That is the kind of foundation that supports adaptive trials and model-informed dosing without hand-waving.
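A minimal sketch of the queue-and-replay idea, assuming an append-only event log: a model's request becomes a queued job, the (here simulated) robot produces a reading, and every step is logged so the results table can be rebuilt later from the log alone. `LabRuntime` and the event fields are hypothetical. Note that replaying a log reconstructs the record of a run, not the experiment itself.

```python
import json
from collections import deque


class LabRuntime:
    """Minimal job queue plus an append-only event log.

    `robot` is a hypothetical callable standing in for an instrument driver.
    Replaying the log rebuilds what happened; it does not rerun the chemistry.
    """

    def __init__(self, robot):
        self.robot = robot
        self.queue = deque()
        self.events = []  # JSON lines, appended and never edited

    def _emit(self, kind, **payload):
        self.events.append(json.dumps({"seq": len(self.events), "kind": kind, **payload}))

    def submit(self, job):
        # A model's request becomes a queued job.
        self.queue.append(job)
        self._emit("queued", job=job)

    def drain(self):
        while self.queue:
            job = self.queue.popleft()
            self._emit("started", job_id=job["id"])
            self._emit("read", job_id=job["id"], value=self.robot(job))
        return replay(self.events)


def replay(events):
    """Rebuild the results table from the log alone, without touching hardware."""
    results = {}
    for line in events:
        event = json.loads(line)
        if event["kind"] == "read":
            results[event["job_id"]] = event["value"]
    return results


runtime = LabRuntime(robot=lambda job: job["dose"] * 10.0)  # fake linear readout
runtime.submit({"id": "j1", "dose": 0.5})
runtime.submit({"id": "j2", "dose": 2.0})
live = runtime.drain()
archived = list(runtime.events)  # e.g. what you would read back months later
print(live, replay(archived) == live)  # {'j1': 5.0, 'j2': 20.0} True
```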

Regulatory APIs

The submission gate is modernizing. In 2025, the U.S. Food and Drug Administration advanced a next-generation Electronic Submissions Gateway built on modern cloud architecture with programmatic interfaces. European agencies continued rolling out structured data services for product and substance master data. Together, these changes mean a runtime can not only generate evidence but also package and transmit it in the formats regulators expect, continuously rather than in lumpy quarterly drops.
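Packaging evidence for continuous submission largely comes down to bundling files with verifiable integrity. The sketch below builds a checksum manifest with SHA-256 and verifies it on receipt. The manifest layout, the program identifier, and the sequence numbering are hypothetical and deliberately do not model eCTD or any agency gateway's actual interface.

```python
import hashlib
import json


def build_packet(files: dict, program_id: str, sequence: int) -> dict:
    """Bundle evidence files with a checksum manifest.

    `files` maps a relative path to its bytes. The manifest layout is a
    hypothetical illustration, not eCTD or any agency's actual format.
    """
    manifest = {
        "program": program_id,
        "sequence": sequence,  # incremental packets are numbered in order
        "files": {path: hashlib.sha256(data).hexdigest() for path, data in sorted(files.items())},
    }
    return {"manifest": json.dumps(manifest, sort_keys=True), "files": dict(files)}


def verify_packet(packet: dict) -> bool:
    """Receiver-side check: every listed file is present and unmodified."""
    manifest = json.loads(packet["manifest"])
    files = packet["files"]
    if set(manifest["files"]) != set(files):
        return False
    return all(hashlib.sha256(files[p]).hexdigest() == digest
               for p, digest in manifest["files"].items())


packet = build_packet(
    {"protocol.json": b'{"endpoint": "primary"}', "analysis/primary.csv": b"arm,responders\nhigh,4\n"},
    program_id="PRG-001",
    sequence=3,
)
print(verify_packet(packet))  # True
```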

Ownership and credit in machine generated science

Credit and control are not footnotes. If a model proposes a mechanism and a robot validates it, who is the inventor? Current practice in many jurisdictions still requires a human inventor on patents, so teams document human contributions with unusual care. Beyond inventorship, there are three layers of ownership to settle up front.

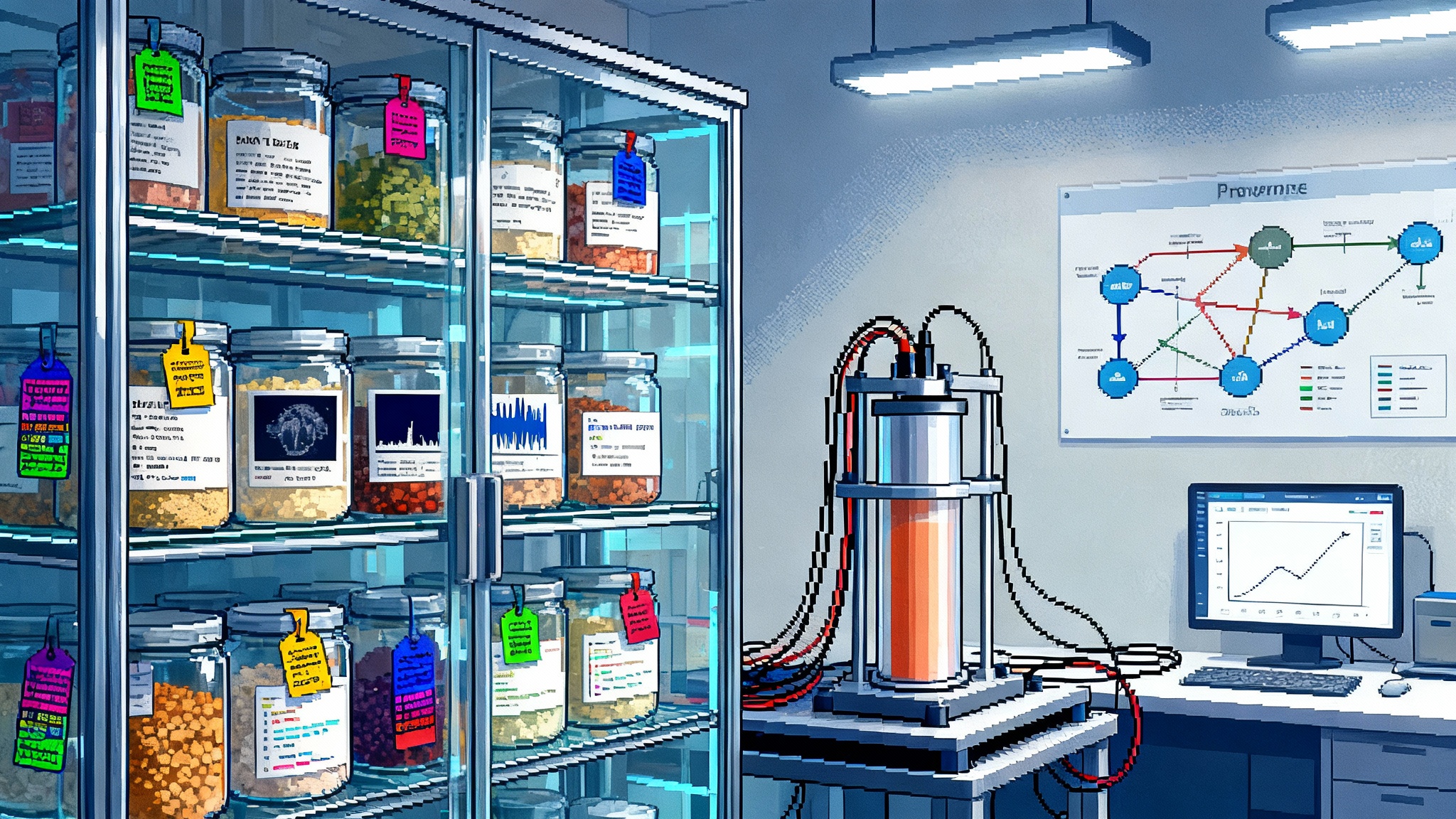

Data rights

High-quality experimental datasets have become training assets. Contracts need clear rules for reuse, withholding, and anonymization. If a service provider generated a plate for you, can they retrain on it? If they can, do you get a discount or a license back? Spell this out in master service agreements and collaboration templates.

Model lineage

A model fine-tuned on your proprietary assays can leak signal in surprising ways, especially through adapters and prompts. Treat models like supply-chain components. Track hashes, training data classes, and deployment scopes. Rotate and retire models the way you retire keys. For more on why provenance becomes a competitive edge, see provenance becomes the edge.
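Treating models like keys might look like the registry below: each record pins a weights hash, training data classes, deployment scopes, and a retirement date. The field names and the example policy (models trained on proprietary assays stay out of partner-facing scopes) are assumptions for illustration.

```python
import hashlib
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModelRecord:
    name: str
    weights_sha256: str
    training_data_classes: frozenset  # e.g. {"public", "proprietary-assay"}
    deployment_scopes: frozenset      # where this model may run
    retire_after: date                # rotation deadline, like a key expiry


def register(name, weights: bytes, data_classes, scopes, retire_after) -> ModelRecord:
    return ModelRecord(name, hashlib.sha256(weights).hexdigest(),
                       frozenset(data_classes), frozenset(scopes), retire_after)


def verify_weights(record: ModelRecord, weights: bytes) -> bool:
    """Refuse to load weights whose hash differs from the registered one."""
    return hashlib.sha256(weights).hexdigest() == record.weights_sha256


def may_deploy(record: ModelRecord, scope: str, today: date) -> bool:
    if today > record.retire_after:
        return False  # expired: rotate to a newly registered model
    if "proprietary-assay" in record.training_data_classes and scope == "external-partner":
        return False  # example policy: proprietary signal stays inside the company
    return scope in record.deployment_scopes


rec = register("potency-adapter-v3", b"weights-bytes", {"public", "proprietary-assay"},
               {"internal", "external-partner"}, date(2026, 1, 31))
print(may_deploy(rec, "internal", date(2025, 9, 1)))          # True
print(may_deploy(rec, "external-partner", date(2025, 9, 1)))  # False
```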

Mechanism claims

Mechanistic findings often sit on top of public biology and known targets. The novelty may be in the combination, the dose timing, or the patient stratification rule. Claim only what you can instrument. If your runtime discovered a dosing window that avoids a toxicity pathway, write the claim around the measurable switch your telemetry can confirm.

Open models and automated labs change the speed limit

Open-source models and open datasets have been seeping into biology for years. The difference in 2025 is not just more models. It is how easily they plug into a control loop. A small team can take an open protein language model or a domain-tuned large language model, write a short agent that chains retrieval, planning, and tool calls, and schedule runs on a remote lab within a week.

That cuts the cost of trying ideas, and it moves the creative bottleneck to the edges of the network. The upside is obvious: more shots on goal, wider participation, and faster iteration on neglected diseases.

The downside is also obvious. Lower friction means more ways to make a confident mistake. That is why norms need to evolve with the stack.

- Evidence. Preregistration is not bureaucratic overhead. It is a guardrail against cherry-picking. Require a preregistered analysis plan for any experiment that can change a program decision. Tie budgets and access to adherence. Build dashboards that flag deviations automatically.

- Consent. When the runtime touches patient-level data, treat consent as a programmable policy. Every data access should carry a machine-readable consent scope that models and agents must check. Make exceptions visible. If a team overrides a consent policy to hit a deadline, the system should page the right people and leave an immutable log.

- Safety. Safety review should be continuous. Build a safety board that behaves like a security incident response team. Define severity levels, notification trees, and time-to-mitigation targets. Run game days: simulate lab failures, model hallucinations, and data leaks, then practice containment.
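The consent norm translates naturally into code. In this hypothetical sketch, every access is checked against a machine-readable consent scope, every attempt is written to a hash-chained audit log (tamper-evident rather than strictly immutable), and overrides trigger a page. The dataset, the scope labels, and the `pager` callable are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    consent_scopes: frozenset  # purposes participants agreed to (illustrative labels)


class ConsentError(PermissionError):
    pass


class AuditLog:
    """Hash-chained log: each entry commits to the previous one, so edits are detectable."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        return hashlib.sha256((prev + json.dumps(record, sort_keys=True)).encode()).hexdigest()

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        self.entries.append({"record": record, "prev": prev, "hash": self._digest(prev, record)})

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def access(dataset, purpose, actor, log, pager, override_reason=None) -> bool:
    """Check consent scope before any read; log every attempt; page on overrides."""
    allowed = purpose in dataset.consent_scopes
    log.append({"actor": actor, "dataset": dataset.name, "purpose": purpose,
                "allowed": allowed, "override": override_reason})
    if allowed:
        return True
    if override_reason:
        pager(f"consent override by {actor} on {dataset.name}: {override_reason}")
        return True
    raise ConsentError(f"{purpose!r} is outside the consent scope of {dataset.name}")


pages, log = [], AuditLog()
cohort = Dataset("cohort-a", frozenset({"primary-analysis"}))
access(cohort, "primary-analysis", "agent-1", log, pages.append)
try:
    access(cohort, "model-training", "agent-1", log, pages.append)
except ConsentError as err:
    print(err)
access(cohort, "model-training", "lead-2", log, pages.append, override_reason="deadline")
print(len(pages), log.verify())  # 1 True
```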

If you want a broader picture of agentic systems that keep working while you sleep, read about idle agents that work offscreen.

Three workflows you can implement in six months

End-to-end workflows sound abstract until you see them. Here are three short, concrete workflows that many teams could implement within six months.

Mechanism sprint

For a known target with an unclear pathway, run a two-week mechanism sprint. On day 1, an agent proposes five plausible mechanisms and the assays to probe them, ranked by expected information gain per dollar. On days 2 to 10, robots execute the assays while the agent updates posteriors every evening. On day 12, a human panel reviews the ranked mechanisms, greenlights one to advance, and documents why the others lost. On day 14, the runtime emits a preregistered protocol for the next cycle.

What this buys you is not just speed. It gives you a clear audit trail from hypothesis to decision that can be reused in filings and partner reviews.
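The sprint's day 1 ranking, expected information gain per dollar, has a standard Bayesian form: prior entropy over the candidate mechanisms minus the expected entropy after the assay, divided by cost. The sketch assumes binary assay readouts with known likelihoods per mechanism; the likelihoods and costs are invented. The same `posterior` function serves as the evening update.

```python
from math import log2


def entropy(p):
    return -sum(x * log2(x) for x in p if x > 0)


def posterior(prior, p_pos, positive):
    """Bayes update over mechanisms after one binary assay readout."""
    like = p_pos if positive else [1 - lik for lik in p_pos]
    joint = [p * lik for p, lik in zip(prior, like)]
    total = sum(joint)
    return [j / total for j in joint]


def expected_info_gain(prior, p_pos):
    """Prior entropy minus expected posterior entropy, in bits."""
    prob_positive = sum(p * lik for p, lik in zip(prior, p_pos))
    gain = entropy(prior)
    for outcome, prob in ((True, prob_positive), (False, 1 - prob_positive)):
        if prob > 0:
            gain -= prob * entropy(posterior(prior, p_pos, outcome))
    return gain


def rank_assays(prior, assays):
    """assays: name -> (P(positive | mechanism i) for each i, cost in dollars)."""
    per_dollar = {name: expected_info_gain(prior, p_pos) / cost
                  for name, (p_pos, cost) in assays.items()}
    return sorted(per_dollar, key=per_dollar.get, reverse=True)


prior = [0.2] * 5  # five candidate mechanisms, equally plausible on day 1
assays = {  # illustrative likelihoods and costs, not real assay data
    "pathway_reporter": ([0.9, 0.9, 0.1, 0.1, 0.1], 500),
    "phospho_panel": ([0.9, 0.1, 0.9, 0.1, 0.1], 2000),
    "generic_viability": ([0.5] * 5, 100),
}
print(rank_assays(prior, assays))  # ['pathway_reporter', 'phospho_panel', 'generic_viability']
# Evening update after a positive reporter readout:
prior = posterior(prior, assays["pathway_reporter"][0], positive=True)
```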

Biomarker backfill

Take a Phase I dataset with sparse biomarker coverage. An agent scans the literature, proposes a panel of five low-cost biomarkers linked to the mechanism, and deploys a protocol to collect them in an extension cohort. If two biomarkers add predictive power in a held-out set, the runtime updates the next trial’s inclusion criteria.

This pattern can surface cheap signals that make the next study tighter, and it trains your models on your own data rather than only on public corpora.
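A rough sketch of the held-out test in the biomarker backfill, on synthetic data only: fit a score with and without each candidate biomarker on a training split and compare AUC on the held-out split. The least-squares score, the 0.02 AUC gain threshold, and the data generator are assumptions for illustration; a real analysis would prespecify its model and account for testing several biomarkers at once. The sketch uses NumPy.

```python
import numpy as np


def auc(scores, labels):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos, neg = scores[labels == 1], scores[labels == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


def fit_score(x_train, y_train, x_test):
    """Least-squares linear score (a linear probability model), chosen for brevity."""
    design = np.column_stack([np.ones(len(x_train)), x_train])
    coef, *_ = np.linalg.lstsq(design, y_train, rcond=None)
    return np.column_stack([np.ones(len(x_test)), x_test]) @ coef


def backfill(baseline, biomarkers, y, names, min_gain=0.02, seed=0):
    """Held-out AUC gain from adding each candidate biomarker to the baseline covariates."""
    idx = np.random.default_rng(seed).permutation(len(y))
    train, test = idx[: len(y) // 2], idx[len(y) // 2:]
    base_auc = auc(fit_score(baseline[train], y[train], baseline[test]), y[test])
    gains = {}
    for j, name in enumerate(names):
        x = np.column_stack([baseline, biomarkers[:, j]])
        gains[name] = auc(fit_score(x[train], y[train], x[test]), y[test]) - base_auc
    selected = [n for n in sorted(gains, key=gains.get, reverse=True) if gains[n] >= min_gain]
    return base_auc, gains, selected


# Synthetic data: only b2 and b4 carry real signal about the outcome.
rng = np.random.default_rng(42)
n = 2000
baseline = rng.normal(size=(n, 1))
biomarkers = rng.normal(size=(n, 5))
latent = baseline[:, 0] + 1.5 * biomarkers[:, 1] + 1.0 * biomarkers[:, 3] + rng.normal(scale=0.5, size=n)
y = (latent > 0).astype(int)
base_auc, gains, selected = backfill(baseline, biomarkers, y, ["b1", "b2", "b3", "b4", "b5"])
if len(selected) >= 2:
    print("update inclusion criteria using", selected)
```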

Protocol compiler

Treat a protocol like code. A scientist writes a high-level plan in a controlled, machine-readable language. The compiler expands it into exact steps with vendor-specific details and timing. A diff shows human reviewers what changed from last time, down to plate positions and wash cycles. The compiled protocol ships to robots and comes back with a bundle of logs, plots, and a human-readable summary.

This is the bridge between a whiteboard and a robot. It cuts manual errors, speeds onboarding for new instruments, and makes comparative review straightforward.
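A toy protocol compiler and diff make the idea concrete. The plan format, the `vendor_x` profile, and the step wording are hypothetical; the diff uses Python's standard `difflib.unified_diff`, which is one reasonable way to show reviewers exactly what changed between runs.

```python
import difflib

# Hypothetical vendor profile: how abstract actions expand into instrument steps.
VENDOR_PROFILES = {
    "vendor_x": {"wash": "aspirate 50 uL, dispense 50 uL buffer", "settle_s": 30},
}


def compile_protocol(plan: dict, vendor: str) -> list:
    """Expand a high-level plan into exact, vendor-specific steps."""
    profile = VENDOR_PROFILES[vendor]
    steps = [f"{well}: dispense {plan['compound']} at {plan['conc_uM']} uM" for well in plan["wells"]]
    steps += [f"all: wash cycle {i} ({profile['wash']})" for i in range(1, plan["wash_cycles"] + 1)]
    steps.append(f"all: wait {profile['settle_s']} s")
    steps.append(f"all: read {plan['readout']}")
    return steps


def review_diff(previous: list, current: list) -> str:
    """Unified diff of compiled steps, for human review before anything ships."""
    return "\n".join(difflib.unified_diff(previous, current, "last_run", "this_run", lineterm=""))


v1 = {"compound": "CMPD-1", "conc_uM": 10, "wells": ["A1", "A2"], "wash_cycles": 2,
      "readout": "luminescence"}
v2 = {**v1, "wells": ["A1", "B2"], "wash_cycles": 3}
print(review_diff(compile_protocol(v1, "vendor_x"), compile_protocol(v2, "vendor_x")))
```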

What leaders should do next

Executives in pharma and biotech can move now, regardless of company size.

- Create a Runtime Lead role. Give someone with scientific and software credibility the mandate to integrate models, lab automation, and regulatory submission tooling into one budget and one roadmap. Measure them on cycle time, reproducibility, and regulatory acceptance.

- Stand up an evidence graph. Build or buy a system that links hypotheses, experiments, datasets, analyses, and decisions. Require every program to register its claims and how they will be tested. Make the default permissions read access across the company and write access for program owners.

- Pre-negotiate data and model contracts. Update master service agreements and collaboration templates to cover data reuse, model fine-tuning, and audit rights. If a partner can retrain on your data, require a license back and a disclosure of derivative models that touch your assets.

- Invest in protocol compilers. Standardize how protocols are written and expanded. The payoff is fewer manual errors, faster onboarding of new instruments, and easier comparative review of what changed between runs.

- Modernize trial operations. Bring program managers, statisticians, and clinical operations into the runtime. Use model-informed trial designs, adaptive arms where appropriate, and preregistered endpoints that are testable month by month.
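A minimal in-memory sketch of the evidence graph follows, assuming the permission defaults recommended for it above (read for everyone in the company, write for program owners). Node identifiers, the program name, and the class itself are hypothetical; a production system would sit on a database with real identity management.

```python
from collections import defaultdict


class EvidenceGraph:
    """Nodes are hypotheses, experiments, datasets, analyses, and decisions;
    each node records what it was derived from."""

    KINDS = {"hypothesis", "experiment", "dataset", "analysis", "decision"}

    def __init__(self, employees):
        self.employees = set(employees)   # read access: anyone inside the company
        self.owners = defaultdict(set)    # program -> users with write access
        self.nodes = {}                   # node id -> (kind, program)
        self.parents = defaultdict(list)  # node id -> ids it depends on

    def add(self, user, node_id, kind, program, derived_from=()):
        if kind not in self.KINDS:
            raise ValueError(f"unknown node kind {kind!r}")
        if user not in self.owners[program]:
            raise PermissionError(f"{user} cannot write to program {program}")
        self.nodes[node_id] = (kind, program)
        self.parents[node_id].extend(derived_from)

    def read(self, user, node_id):
        if user not in self.employees:
            raise PermissionError(f"{user} is not allowed to read the graph")
        return self.nodes[node_id]

    def lineage(self, node_id):
        """Walk back from a node (e.g. a decision) to everything it rests on."""
        seen, stack = [], [node_id]
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.append(current)
            stack.extend(self.parents[current])
        return seen


g = EvidenceGraph(employees={"ana", "ben"})
g.owners["fibrosis-01"].add("ana")
g.add("ana", "H1", "hypothesis", "fibrosis-01")
g.add("ana", "E1", "experiment", "fibrosis-01", derived_from=["H1"])
g.add("ana", "D1", "dataset", "fibrosis-01", derived_from=["E1"])
g.add("ana", "A1", "analysis", "fibrosis-01", derived_from=["D1"])
g.add("ana", "DEC1", "decision", "fibrosis-01", derived_from=["A1", "H1"])
print(sorted(g.lineage("DEC1")))  # ['A1', 'D1', 'DEC1', 'E1', 'H1']
```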

Regulators can act too.

- Expand continuous submission lanes. Accept incremental evidence packets tied to a registered development plan and a model card. The reward is fewer last minute data dumps and easier review.

- Publish reference protocol schemas. If agencies define and validate machine-readable protocol schemas, tool makers and labs will converge on a common compiler target, which speeds review and reduces redlines.

- Build public test suites. Release blinded datasets and reference analyses for common mechanisms, then certify toolchains that can reproduce them. This is the statistical equivalent of a road test.
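Certification against a public test suite could be as simple as rerunning each blinded reference dataset through a toolchain and comparing the output with the reference analysis within a tolerance. The suite, the tolerance, and both toolchains below are hypothetical.

```python
from math import isclose
from statistics import mean


def certify(toolchain, reference_suite, rel_tol=1e-6):
    """Run a toolchain on each blinded reference dataset and compare with the
    reference analysis. Returns (passed, failures)."""
    failures = []
    for name, dataset, expected in reference_suite:
        got = toolchain(dataset)
        if not isclose(got, expected, rel_tol=rel_tol):
            failures.append((name, expected, got))
    return not failures, failures


# Hypothetical one-item suite: the reference analysis is a difference in means.
suite = [("mean_difference", {"treated": [3.0, 4.0, 5.0], "control": [1.0, 2.0, 3.0]}, 2.0)]


def good_toolchain(d):
    return mean(d["treated"]) - mean(d["control"])


def buggy_toolchain(d):
    return mean(d["treated"])  # forgot to subtract the control arm


print(certify(good_toolchain, suite))      # (True, [])
print(certify(buggy_toolchain, suite)[0])  # False
```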

Investors and boards should change how they judge risk.

- Underwrite stack maturity, not slides. Ask for end-to-end demos that begin with a hypothesis and end with a compiled, executed, and analyzed experiment. Reward teams that can rerun a study with one click and can show regression tests for their models and labs.

- Fund shared infrastructure. The value of interoperable protocol schemas, evidence graphs, and model cards compounds across portfolios. Put money into the plumbing, not just the programs.

The philosophical shift

If discovery is a runtime, what is science? It is still a human practice of asking questions about the world, but the medium is changing. We used to write our ideas in papers and then argue. We will still write and we will still argue, but now the argument can be instrumented, replayed, and improved like code.

Hypotheses compile. Peer review listens to telemetry. Ownership flows through data provenance and model lineage. Most importantly, the distance from idea to measurement to patient shrinks, not because we found a shortcut, but because we turned the path into a well-lit corridor we can walk every day.

The early signs of 2025 suggest that this corridor is open: a mega-round for a design engine with clinical ambitions, peer-reviewed human data for an AI-discovered mechanism, and agents that can plan and reason across biology and code. Taken together, they are not a prediction. They are a deployment. The stack is up. The pager is on. The next commit is yours.