ChatGPT Becomes the New OS: The Platform Wars Ahead

OpenAI is turning ChatGPT into more than a chatbot. With apps that run inside conversations, enterprise controls, and real pilots like Sora 2 at Mattel, a new platform layer is forming, and the stakes are rising.

The day ChatGPT started to look like an operating system

OpenAI’s October 6, 2025 Dev Day was billed as a developer event. The subtext was larger. The company positioned ChatGPT not only as a product but as a place where work happens. Two announcements made the point clear. First, real enterprise pilots showed generative models moving from demos to daily tools. Second, OpenAI introduced apps that run inside ChatGPT, complete with a preview SDK and a directory in the works. Put those together and the chat window begins to resemble an operating system for intent-driven computing.

On stage and in briefings, OpenAI highlighted Sora 2 pilots. One headline partnership put the model in the hands of Mattel designers, who turn rough sketches into shareable video concepts. This is not a research sizzle reel. It is a workflow tool inside a production process, as Reuters reported in its coverage of the Mattel Sora 2 pilot. The second thread was structural. OpenAI previewed an apps SDK that lets third-party apps run directly inside ChatGPT, showcased alongside updates across text, image, voice, and video in its Dev Day announcements.

Taken together, these moves signal a shift from a single assistant to a host environment. Users start a conversation, connect an app with explicit permissions, and proceed without hopping between windows or brittle scripts. The assistant becomes a runtime that plans, invokes tools, and enforces policy.

From apps to intents

For decades, we attached actions to applications. You opened Photoshop to edit a photo and Excel to model revenue. With a conversational interface and a growing roster of connectors, the sequence flips. The user leads with an intent. The runtime chooses the tools, gathers the right permissions, and executes a plan.

Picture a concierge in a building full of specialists. You say you want a pitch deck by 4 p.m. The concierge lines up design, data, and copy, asks for the minimum permissions, then returns a draft with sources. No manual orchestration. No tab juggling. The orchestration shifts from the user to the runtime.

Why that matters:

- Shared context reduces friction. Identity, payments, and compliance live in one place and can be reused across tasks.

- Plans become inspectable. You can audit why a step ran and which permissions it used.

- Substitution becomes feasible. If one tool is down, the runtime can pick a near equivalent without breaking the flow.

This intent-first approach also reframes how we design systems. We stop thinking in monolithic apps and start thinking in composable capabilities that slot into plans. If that sounds like operating system territory, it is.

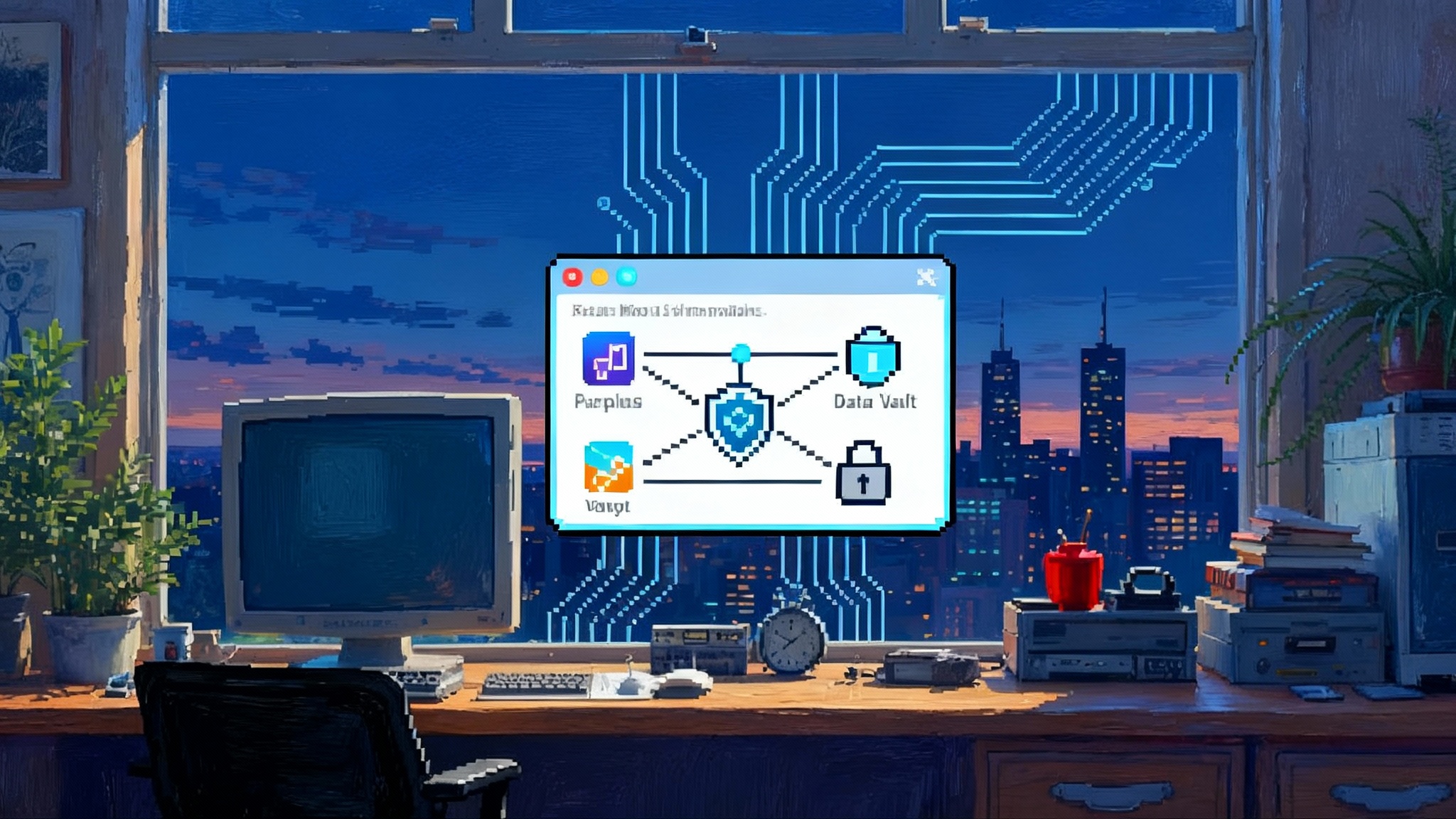

The model runtime as the new platform layer

In the classic stack, the operating system sat between applications and hardware. In the new stack, the model runtime sits between intents and the world. It resolves language into plans, brokers permissions, calls tools, tracks state, and enforces policy. A helpful mental model looks like this:

- Models act as user space processes that interpret goals and propose steps.

- Tools are system calls that touch files, services, and devices.

- Connectors behave like drivers into third party capabilities.

- Memory is the file system that persists context across sessions.

- Policy plays the role of a kernel that constrains what runs and how data moves.

The vocabulary of an app store inside ChatGPT makes the comparison explicit. When a platform controls distribution, payments, and policy for the agents that live within it, it begins to behave like an operating system.

Where power shifts next

The migration from apps to intents does not just rearrange interfaces. It rearranges leverage.

- Model vendors. If experiences begin inside assistants rather than app icons, model vendors gain distribution power. They set default tools, run the directory, and control permission prompts. That is distribution and policy combined.

- Device operating systems. Apple and Google built mobile power on identity, payments, and secure hardware. If conversational runtimes become the first tap, device platforms risk shrinking to high-quality drivers. Expect a response that fuses assistants with notifications, keyboards, and radios, with an emphasis on on-device trust guarantees.

- Sovereign and enterprise stacks. Governments and large organizations want control over model choice, data location, and audit trails. Intent routing gives them a lever. They can pin policies and connectors to their own runtimes while still exposing a safe surface to employees or citizens. The new contest is who defines the policy language and who certifies connectors.

In the short term, expect competition on composability. The runtime that routes across models, respects local policy, and delivers predictable cost will win enterprise accounts. In the medium term, expect leverage over discovery. The runtime that owns the directory can tax traffic and promote house brands.

The three layers an AI OS must nail

Today’s demos hint at the destination. To earn the operating system label, an AI runtime must harden three core layers that survive beyond keynotes.

1) A capability graph

Instead of a static app list, the runtime should maintain a graph of actions, tools, and constraints. Each node represents a capability, such as "generate a three-minute product video" or "schedule a vendor payment." Edges encode prerequisites and policies, such as "requires access to the finance system" or "allowed only during business hours." When a user expresses an intent, the planner walks the graph to assemble a plan.
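To make the walk concrete, here is a minimal Python sketch. It assumes capabilities are plain string names and that each edge can carry a policy predicate. `CapabilityGraph`, `Edge`, and the `business_hours` rule are hypothetical names for illustration and are not part of ChatGPT or the Apps SDK. The planner resolves prerequisites depth first, so each one appears in the plan before the step that needs it, and it refuses any edge whose policy fails.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, Dict, List, Set

# Hypothetical context handed to edge policies (here, just the current time).
Context = Dict[str, datetime]


def always(ctx: Context) -> bool:
    return True


@dataclass
class Edge:
    prerequisite: str                            # capability that must run first
    policy: Callable[[Context], bool] = always   # guard such as "business hours only"


@dataclass
class CapabilityGraph:
    edges: Dict[str, List[Edge]] = field(default_factory=dict)

    def add(self, capability: str, *requires: Edge) -> None:
        self.edges.setdefault(capability, []).extend(requires)
        for edge in requires:
            self.edges.setdefault(edge.prerequisite, [])

    def plan(self, goal: str, ctx: Context) -> List[str]:
        """Depth-first walk that emits prerequisites before the step that needs them."""
        ordered: List[str] = []
        in_progress: Set[str] = set()

        def visit(node: str) -> None:
            if node in ordered:
                return
            if node in in_progress:
                raise ValueError(f"cycle detected at {node}")
            in_progress.add(node)
            for edge in self.edges.get(node, []):
                if not edge.policy(ctx):
                    raise PermissionError(f"policy blocks {edge.prerequisite} -> {node}")
                visit(edge.prerequisite)
            in_progress.discard(node)
            ordered.append(node)

        visit(goal)
        return ordered


def business_hours(ctx: Context) -> bool:
    return 9 <= ctx["now"].hour < 17


graph = CapabilityGraph()
graph.add("schedule_vendor_payment", Edge("access_finance_system", business_hours))
print(graph.plan("schedule_vendor_payment", {"now": datetime(2025, 10, 6, 10, 0)}))
# ['access_finance_system', 'schedule_vendor_payment']
```

Run at 8 p.m. instead, the same request raises `PermissionError`. That is the fail-fast behavior a policy edge is meant to provide.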

What this solves:

- Precise permissions. The runtime requests only what the plan needs rather than broad scopes.

- Substitution. If one tool is unavailable, the graph suggests a near equivalent based on inputs and outputs.

- Auditable plans. The graph yields a human readable trace of why each step was taken.
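The first two benefits can be sketched in a few lines. The sketch assumes each tool declares its input types, a single output type, and the scopes it needs. The `Tool` type and the scope strings are hypothetical. The plan's permission request is the union of its steps' scopes. A substitute must produce the same output from no more inputs than the original tool had.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Sequence, Set


@dataclass(frozen=True)
class Tool:
    name: str
    inputs: FrozenSet[str]   # hypothetical input type names
    output: str              # hypothetical output type name
    scopes: FrozenSet[str]   # permissions the tool needs to run


def required_scopes(plan: Sequence[Tool]) -> Set[str]:
    """Precise permissions: the union of what the plan's steps need, nothing broader."""
    needed: Set[str] = set()
    for step in plan:
        needed |= step.scopes
    return needed


def substitute(missing: Tool, catalog: Sequence[Tool], down: Set[str]) -> Optional[Tool]:
    """Substitution: an available tool with the same output that needs no extra inputs."""
    candidates = [
        t for t in catalog
        if t.name != missing.name
        and t.name not in down
        and t.output == missing.output
        and t.inputs <= missing.inputs
    ]
    # Prefer the candidate that asks for the fewest permissions.
    return min(candidates, key=lambda t: len(t.scopes), default=None)


render_a = Tool("render_a", frozenset({"script"}), "video", frozenset({"storage.write"}))
render_b = Tool("render_b", frozenset({"script"}), "video", frozenset({"storage.write", "gpu"}))
upload = Tool("upload", frozenset({"video"}), "link", frozenset({"drive.write"}))

print(sorted(required_scopes([render_a, upload])))  # ['drive.write', 'storage.write']
fallback = substitute(render_a, [render_a, render_b, upload], down={"render_a"})
print(fallback.name if fallback else None)  # render_b
```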

How to implement now:

- Define tools with typed inputs and outputs plus policy tags.

- Expose a graph query interface so planners can search for legal paths.

- Log each path so security teams can review patterns and refine policy.
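Here is one way those three steps could fit together. The sketch assumes each tool maps one input type to one output type and carries string policy tags; all names are hypothetical. The query filters out tools whose tags the active policy forbids, runs a breadth-first search over types, and writes every result, including failures, to a log.

```python
import json
from collections import deque
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ToolSpec:
    name: str
    input_type: str
    output_type: str
    policy_tags: FrozenSet[str]  # hypothetical tags such as "pii" or "leaves_region"


def legal_path(tools: List[ToolSpec], start: str, goal: str,
               forbidden: FrozenSet[str], log: List[str]) -> Optional[List[str]]:
    """Breadth-first search from start type to goal type, skipping forbidden tools."""
    allowed = [t for t in tools if not (t.policy_tags & forbidden)]
    queue = deque([(start, [])])
    seen = {start}
    path: Optional[List[str]] = None
    while queue:
        current, steps = queue.popleft()
        if current == goal:
            path = steps
            break
        for tool in allowed:
            if tool.input_type == current and tool.output_type not in seen:
                seen.add(tool.output_type)
                queue.append((tool.output_type, steps + [tool.name]))
    # Log every query, including failures, so security teams can review patterns.
    log.append(json.dumps({"start": start, "goal": goal, "path": path}))
    return path


tools = [
    ToolSpec("transcribe", "audio", "text", frozenset()),
    ToolSpec("offshore_summarize", "text", "summary", frozenset({"leaves_region"})),
    ToolSpec("local_summarize", "text", "summary", frozenset()),
]
audit: List[str] = []
print(legal_path(tools, "audio", "summary", frozenset({"leaves_region"}), audit))
# ['transcribe', 'local_summarize']
```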

2) Durable memory

Agents do not feel reliable if they forget. The runtime needs durable memory that is permissioned, inspectable, and scoped. A simple schema helps:

- Profile memory. Stable facts like name, roles, preferences, and allowed vendors.

- Working memory. Short lived context for ongoing tasks with explicit expiration.

- Organizational memory. Shared facts and documents with owners, retention policies, and lineage.
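Here is a minimal sketch of that schema in Python. It assumes a single record type with a `kind` field; `MemoryRecord` and its fields are hypothetical. The constructor enforces the rule that working memory must carry an explicit expiration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum
from typing import Optional, Tuple


class MemoryKind(Enum):
    PROFILE = "profile"                # stable facts: name, roles, preferences
    WORKING = "working"                # short-lived context for an ongoing task
    ORGANIZATIONAL = "organizational"  # shared facts with owners and retention rules


@dataclass
class MemoryRecord:
    kind: MemoryKind
    key: str
    value: str
    owner: str
    expires_at: Optional[datetime] = None  # mandatory for WORKING memory
    lineage: Tuple[str, ...] = ()          # where an organizational fact came from

    def __post_init__(self) -> None:
        if self.kind is MemoryKind.WORKING and self.expires_at is None:
            raise ValueError("working memory needs an explicit expiration")

    def is_live(self, now: datetime) -> bool:
        return self.expires_at is None or now < self.expires_at


now = datetime(2025, 10, 6, 12, 0, tzinfo=timezone.utc)
outline = MemoryRecord(MemoryKind.WORKING, "deck_outline", "three sections", "alice",
                       expires_at=now + timedelta(hours=4))
print(outline.is_live(now), outline.is_live(now + timedelta(days=1)))  # True False
```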

What this solves:

- Fewer repetitive prompts and smoother handoffs between agents.

- Compliance friendly retention with the ability to purge or freeze on schedule.

- Safer retrieval since memory access can be bound to the plan and its policy tags.
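Scheduled purging with a freeze can be sketched as a sweep over stored records. The sketch assumes each record carries its own retention period and a `frozen` flag, which stands in for something like a legal hold. The names are hypothetical, and a production store would also log what it purged.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class StoredMemory:
    key: str
    created_at: datetime
    retention: timedelta   # hypothetical per-record retention period
    frozen: bool = False   # e.g. a legal hold that suspends deletion


def retention_sweep(store: List[StoredMemory],
                    now: datetime) -> Tuple[List[StoredMemory], List[str]]:
    """Return (records to keep, keys purged); frozen records survive past retention."""
    kept: List[StoredMemory] = []
    purged: List[str] = []
    for record in store:
        expired = now >= record.created_at + record.retention
        if expired and not record.frozen:
            purged.append(record.key)
        else:
            kept.append(record)
    return kept, purged


t0 = datetime(2025, 1, 1)
store = [
    StoredMemory("old_chat", t0, timedelta(days=30)),
    StoredMemory("contract_notes", t0, timedelta(days=30), frozen=True),
    StoredMemory("recent_task", t0 + timedelta(days=40), timedelta(days=30)),
]
kept, purged = retention_sweep(store, t0 + timedelta(days=45))
print(purged, [r.key for r in kept])  # ['old_chat'] ['contract_notes', 'recent_task']
```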

How to implement now:

- Store memories as structured records with provenance and consent fields.

- Provide a user and admin memory browser for inspection and deletion.

- Bind memory fetches to the capability graph so agents retrieve only what the plan allows.
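The third step might look like this. The sketch assumes each memory record carries provenance, a consent flag, and policy tags, and that the plan exposes the set of tags it is cleared for. `Memory` and `fetch_for_plan` are hypothetical. A record is returned only if the user consented and every one of its tags is covered by the plan.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Memory:
    key: str
    value: str
    provenance: str               # where the fact came from
    consent: bool                 # whether the user agreed to its reuse
    policy_tags: FrozenSet[str]   # e.g. "pii", "hr"


def fetch_for_plan(memories: List[Memory], plan_tags: FrozenSet[str]) -> List[Memory]:
    """Return consented memories whose tags are all covered by the plan's clearance."""
    return [m for m in memories if m.consent and m.policy_tags <= plan_tags]


memories = [
    Memory("preferred_vendor", "Acme", "user_profile", True, frozenset({"procurement"})),
    Memory("salary_band", "L5", "hr_import", True, frozenset({"hr", "pii"})),
    Memory("old_address", "redacted", "chat_2024", False, frozenset()),
]
print([m.key for m in fetch_for_plan(memories, frozenset({"procurement"}))])
# ['preferred_vendor']
```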

For a deeper dive on why memory becomes a strategic asset, see our analysis of why memory becomes capital.

3) Policy and compliance as first class

In a traditional OS, policy lives near the kernel. In an AI runtime, policy should be expressed in a single language that governs planning, tool use, data movement, and output. It should be testable, explainable, and enforceable.

Useful policy categories:

- Data residency. Sensitive data must never leave a specific geography or cloud boundary.

- Duty segregation. The agent that proposes a payment cannot be the agent that approves it.

- Harm and safety constraints. Content and actions that are blocked in specific contexts.
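Each category can be expressed as a check that runs over a proposed plan. The sketch below assumes a plan is a list of steps that record the acting agent, the action, and where the data ends up. `Step` and the `check_*` functions are hypothetical names; a real runtime would express these rules in its own policy language.

```python
from dataclasses import dataclass
from typing import List, Sequence, Set


@dataclass(frozen=True)
class Step:
    agent: str
    action: str             # e.g. "propose_payment", "approve_payment", "export"
    data_region: str        # where the step's data ends up (hypothetical field)
    sensitive: bool = False


def check_residency(plan: Sequence[Step], home_region: str) -> List[str]:
    return [f"{s.action}: sensitive data leaves {home_region}"
            for s in plan if s.sensitive and s.data_region != home_region]


def check_segregation(plan: Sequence[Step]) -> List[str]:
    proposers = {s.agent for s in plan if s.action == "propose_payment"}
    return [f"{s.agent} both proposes and approves a payment"
            for s in plan if s.action == "approve_payment" and s.agent in proposers]


def check_blocked(plan: Sequence[Step], blocked: Set[str]) -> List[str]:
    return [f"{s.action} is blocked in this context" for s in plan if s.action in blocked]


plan = [
    Step("agent_a", "propose_payment", "eu"),
    Step("agent_a", "approve_payment", "eu"),
    Step("agent_b", "export", "us", sensitive=True),
]
violations = (check_residency(plan, "eu") + check_segregation(plan)
              + check_blocked(plan, {"send_bulk_email"}))
print(violations)
# ['export: sensitive data leaves eu', 'agent_a both proposes and approves a payment']
```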

What this solves:

- Predictable behavior. Plans fail fast when a path violates policy.

- Faster vendor reviews. Security teams can evaluate a connector against a known policy set.

- Better forensics. When an incident occurs, auditors can replay the plan, inputs, outputs, and decisions.

How to implement now:

- Standardize policy tags on every tool and memory type.

- Build a policy linter that runs on plans before execution.

- Provide simulators so teams can test intents against policy without side effects.
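A linter and a simulator can share one mechanism. The sketch assumes a plan is just a list of step names and a rule is any function that returns violation messages; both are hypothetical simplifications. The simulator lints first and fails fast. If the plan is clean, it records what would be called rather than calling anything.

```python
from typing import Callable, List, Sequence, Tuple

# A rule inspects a plan (a list of step names here) and returns violation messages.
Rule = Callable[[Sequence[str]], List[str]]


def lint(plan: Sequence[str], rules: Sequence[Rule]) -> List[str]:
    """Run every rule before execution; an empty list means the plan may proceed."""
    return [msg for rule in rules for msg in rule(plan)]


def simulate(plan: Sequence[str], rules: Sequence[Rule]) -> Tuple[List[str], List[str]]:
    """Dry run: lint first, then record what would be called instead of calling it."""
    violations = lint(plan, rules)
    if violations:
        return violations, []  # fail fast: nothing would have executed
    return [], [f"would call {step}" for step in plan]


def no_bulk_export(plan: Sequence[str]) -> List[str]:
    return [f"{step} blocked" for step in plan if step.startswith("export_")]


print(simulate(["draft_email", "send_email"], [no_bulk_export]))
# ([], ['would call draft_email', 'would call send_email'])
print(simulate(["draft_email", "export_contacts"], [no_bulk_export]))
# (['export_contacts blocked'], [])
```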

Why enterprises will accelerate the shift

Enterprises do not switch stacks for novelty. They switch for control, cost, and compliance. OpenAI framed its Dev Day with an emphasis on reliability, agent tooling, and enterprise controls across identity and data governance. That is the difference between a viral chatbot and a platform that survives a vendor security review.

A proper runtime centralizes what scattered proof of concept bots struggled to deliver:

- Clear, minimal permission prompts for each step in a plan.

- Isolation between tools so one compromised connector cannot exfiltrate everything.

- Cost controls that track spend per intent, not just per token.

- A consistent audit trail across voice, text, image, and video actions.
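Tracking spend per intent mostly means tagging every model and tool call with the intent that caused it. Here is a minimal rollup. It assumes a hypothetical `intent_id` on each usage record and a flat, purely illustrative token price.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Usage:
    intent_id: str   # hypothetical id assigned when the user states an intent
    step: str
    tokens: int
    tool_fee: float  # non-token cost, e.g. a paid connector call


def spend_per_intent(usage: List[Usage], price_per_1k_tokens: float) -> Dict[str, float]:
    """Roll token and tool costs up to the intent that caused them."""
    totals: Dict[str, float] = defaultdict(float)
    for u in usage:
        totals[u.intent_id] += u.tokens / 1000 * price_per_1k_tokens + u.tool_fee
    return dict(totals)


usage = [
    Usage("deck-42", "outline", 2000, 0.0),
    Usage("deck-42", "render_chart", 500, 0.05),
    Usage("invoice-7", "extract_fields", 1000, 0.0),
]
print({k: round(v, 3) for k, v in spend_per_intent(usage, 0.01).items()})
# {'deck-42': 0.075, 'invoice-7': 0.01}
```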

When those pieces are in place, pilots like the Sora 2 work at Mattel move from novelty to normal. Teams bring their own connectors, wrap them in policy, and let designers or analysts make requests in natural language without breaching governance. This also connects to a broader trend we explored in our piece on a domestic operating system, where shared context, permissions, and memory make intelligent environments usable rather than uncanny.

What 2026 agent ecosystems could look like

The step change is not that agents exist. It is that runtimes begin to standardize plans, permissions, and payment. Standardization creates predictable markets. By early 2026, three patterns seem likely to coexist:

- In-assistant ecosystems. Agents live entirely inside assistants like ChatGPT. Discovery and transactions happen in the chat surface, where identity and payments are already trusted.

- Customer hosted ecosystems. Enterprises run private runtimes with curated connectors and a policy catalog, then allow employees to install approved agents.

- Sovereign runtimes. National or sector specific runtimes certify connectors for healthcare, education, or finance, and allow multiple language models to compete inside the same policy perimeter.

This is distinct from browser centric narratives that emphasize navigation and retrieval. It is also distinct from grid centric narratives that treat models as just another workload. The operating system view is about standardized planning, durable memory, and enforceable policy so intents can be executed with less friction and more control. For the web angle, see how pages themselves become active participants in the agentic browser era.

What to build now

These are concrete steps teams can take while the ecosystem consolidates.

For developers

- Design tools like system calls. Give each tool typed inputs and outputs with explicit policy tags. Avoid broad scopes so the runtime can substitute a competitor without breaking your integration.

- Add testable constraints. Include rate limits, guardrails, and sample plans your connector supports. Publish a machine-readable manifest so planners can reason about your capabilities.

- Build cost awareness in. Expose a cost function per call. Return hints so planners can optimize for speed or price.
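Here is a sketch of what such a manifest could carry. It uses a hypothetical `ConnectorManifest` shape that is not any specific SDK's format. The cost and latency fields are the hints a planner could use, and `cheapest_within` shows one way a planner might trade speed against price.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ConnectorManifest:
    # Hypothetical manifest shape for illustration; not any specific SDK's format.
    name: str
    inputs: Dict[str, str]              # parameter name -> type
    outputs: Dict[str, str]
    policy_tags: List[str]
    rate_limit_per_minute: int
    sample_plans: List[List[str]] = field(default_factory=list)
    cost_per_call_usd: float = 0.0      # cost hint
    latency_ms_p50: int = 0             # speed hint


def cheapest_within(manifests: List[ConnectorManifest], max_latency_ms: int) -> ConnectorManifest:
    """Planner side: use the hints to pick the cheapest connector that is fast enough."""
    eligible = [m for m in manifests if m.latency_ms_p50 <= max_latency_ms]
    if not eligible:
        raise LookupError("no connector meets the latency budget")
    return min(eligible, key=lambda m: m.cost_per_call_usd)


fast = ConnectorManifest("fast_charts", {"table": "csv"}, {"chart": "png"}, ["no_pii"], 60,
                         sample_plans=[["load_table", "fast_charts"]],
                         cost_per_call_usd=0.02, latency_ms_p50=300)
cheap = ConnectorManifest("cheap_charts", {"table": "csv"}, {"chart": "png"}, ["no_pii"], 10,
                          cost_per_call_usd=0.005, latency_ms_p50=2000)

published = json.dumps(asdict(fast), indent=2)  # what the connector would publish
print(cheapest_within([fast, cheap], max_latency_ms=500).name)   # fast_charts
print(cheapest_within([fast, cheap], max_latency_ms=5000).name)  # cheap_charts
```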

For product leaders

- Ship a memory browser. If users cannot see or delete what the agent knows, they will not trust it.

- Invest in plan explainability. A one-line summary of why a step ran reduces fear and can cut support tickets.

- Treat permissions like onboarding. Ask for the minimum, explain why, and revisit scopes only when the agent needs more capability.
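Two of these practices reduce to small, testable functions. The sketch below uses hypothetical names. It requests only the scopes the agent does not already hold, each with a reason, and it renders a one-line explanation for every step that runs.

```python
from typing import Dict, Set


def scopes_to_request(granted: Set[str], needed: Dict[str, str]) -> Dict[str, str]:
    """Ask only for scopes not yet granted, each paired with a one-line reason."""
    return {scope: why for scope, why in needed.items() if scope not in granted}


def explain_step(step: str, reason: str, scopes: Set[str]) -> str:
    """One-line summary of why a step ran and which permissions it used."""
    used = ", ".join(sorted(scopes)) or "no special permissions"
    return f"{step}: {reason} (uses {used})"


granted = {"calendar.read"}
needed = {"calendar.read": "find a free slot", "calendar.write": "book the meeting"}
print(scopes_to_request(granted, needed))  # {'calendar.write': 'book the meeting'}
print(explain_step("book_meeting", "you asked for a slot before 4 p.m.", {"calendar.write"}))
# book_meeting: you asked for a slot before 4 p.m. (uses calendar.write)
```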

For legal and security teams

- Define a policy baseline once and enforce it everywhere. Express residency, retention, role based access, and safety in a single language the runtime can interpret.

- Demand a full audit trail. Plans, inputs, outputs, tool responses, and decisions should be captured with timestamps and identifiers.

- Push for connector certification. Require third party tools to publish manifests, undergo tests, and accept revocation if they violate policy.
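Here is a minimal append-only audit trail. The sketch assumes events are grouped by a plan identifier; `AuditTrail` and the event kinds are hypothetical. Each event gets a random UUID and a UTC timestamp. `replay` returns one plan's events in the order they were recorded, which is what an auditor would step through.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, List


class AuditTrail:
    """Append-only event log keyed by plan, with an id and UTC timestamp per event."""

    KINDS = {"plan", "input", "tool_response", "output", "decision"}

    def __init__(self) -> None:
        self._events: List[Dict[str, Any]] = []

    def record(self, plan_id: str, kind: str, payload: Dict[str, Any]) -> str:
        if kind not in self.KINDS:
            raise ValueError(f"unknown event kind: {kind}")
        event_id = str(uuid.uuid4())
        self._events.append({
            "event_id": event_id,
            "plan_id": plan_id,
            "kind": kind,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "payload": payload,
        })
        return event_id

    def replay(self, plan_id: str) -> List[Dict[str, Any]]:
        """Events for one plan, in the order they were recorded."""
        return [e for e in self._events if e["plan_id"] == plan_id]

    def to_jsonl(self) -> str:
        """Export as JSON Lines for a log store or auditor."""
        return "\n".join(json.dumps(e) for e in self._events)


trail = AuditTrail()
trail.record("plan-1", "plan", {"steps": ["fetch_invoice", "propose_payment"]})
trail.record("plan-1", "tool_response", {"tool": "fetch_invoice", "status": "ok"})
trail.record("plan-1", "decision", {"approved": False, "reason": "needs second approver"})
print([e["kind"] for e in trail.replay("plan-1")])  # ['plan', 'tool_response', 'decision']
```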

For infrastructure teams

- Separate compute, storage, and policy. Run models where it is efficient, but keep memory and policy close to your trust boundary.

- Pilot multi model routing. Allow the runtime to pick the right model for each step. Record choices so you can tune for cost and quality over time.

- Prepare for voice and video at scale. Plan for streaming permissions, storage, and redaction to handle conversational and media heavy tasks.
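Multi-model routing with recorded choices could start as simply as the sketch below. It assumes each model has a quality score from your own evaluations and a per-call cost. Model names, scores, and prices are placeholders. The rule picks the cheapest model that clears the step's quality bar and logs the decision for later tuning.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class ModelOption:
    name: str             # placeholder model identifier
    quality: float        # score from your own evaluations, 0 to 1
    cost_per_call: float  # placeholder price


def route(step: str, min_quality: float, options: Sequence[ModelOption],
          decisions: List[Dict[str, object]]) -> ModelOption:
    """Pick the cheapest model that clears the step's quality bar, and record why."""
    eligible = [m for m in options if m.quality >= min_quality]
    if not eligible:
        raise LookupError(f"no model meets quality {min_quality} for {step}")
    choice = min(eligible, key=lambda m: m.cost_per_call)
    decisions.append({"step": step, "model": choice.name,
                      "min_quality": min_quality, "cost": choice.cost_per_call})
    return choice


options = [ModelOption("small-model", 0.70, 0.001), ModelOption("large-model", 0.95, 0.02)]
decisions: List[Dict[str, object]] = []
print(route("classify_ticket", 0.6, options, decisions).name)  # small-model
print(route("draft_contract", 0.9, options, decisions).name)   # large-model
```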

Open questions to watch

- Portability. Can an agent built for one runtime move to another with a predictable manifest and policy file?

- Ranking power. How will directories rank connectors, and what conflicts arise when platform-owned tools compete with partners?

- Pricing clarity. Will runtimes price by intent, by plan step, or by raw tokens, and which model is easiest for customers to predict?

- Safety liability. When an agent makes a harmful decision, where does responsibility sit across the runtime, the connector, and the model?

The bottom line

When a platform reframes computing around intents, permissions, and model runtimes, it grabs the levers that shaped prior operating system shifts. Distribution, defaults, and policy move to the center. OpenAI used Dev Day to signal that ChatGPT is becoming such a platform. The evidence is not any single demo. It is the presence of an apps model, an agent toolkit, enterprise controls, and a plan to curate discovery. If those pieces mature, the assistant becomes the new home screen. The next platform contest will be decided by who turns that home screen into an operating system that teams trust with real work.