Interface Is the New API: Browser-Native Agents Arrive

Browser-native agents use pixels and forms to run apps without private APIs, closing the last mile of SaaS integration. Expect an Affordance War, formal agent agreements, and a shift from UI to protocol.

Breaking: the cursor learned to think

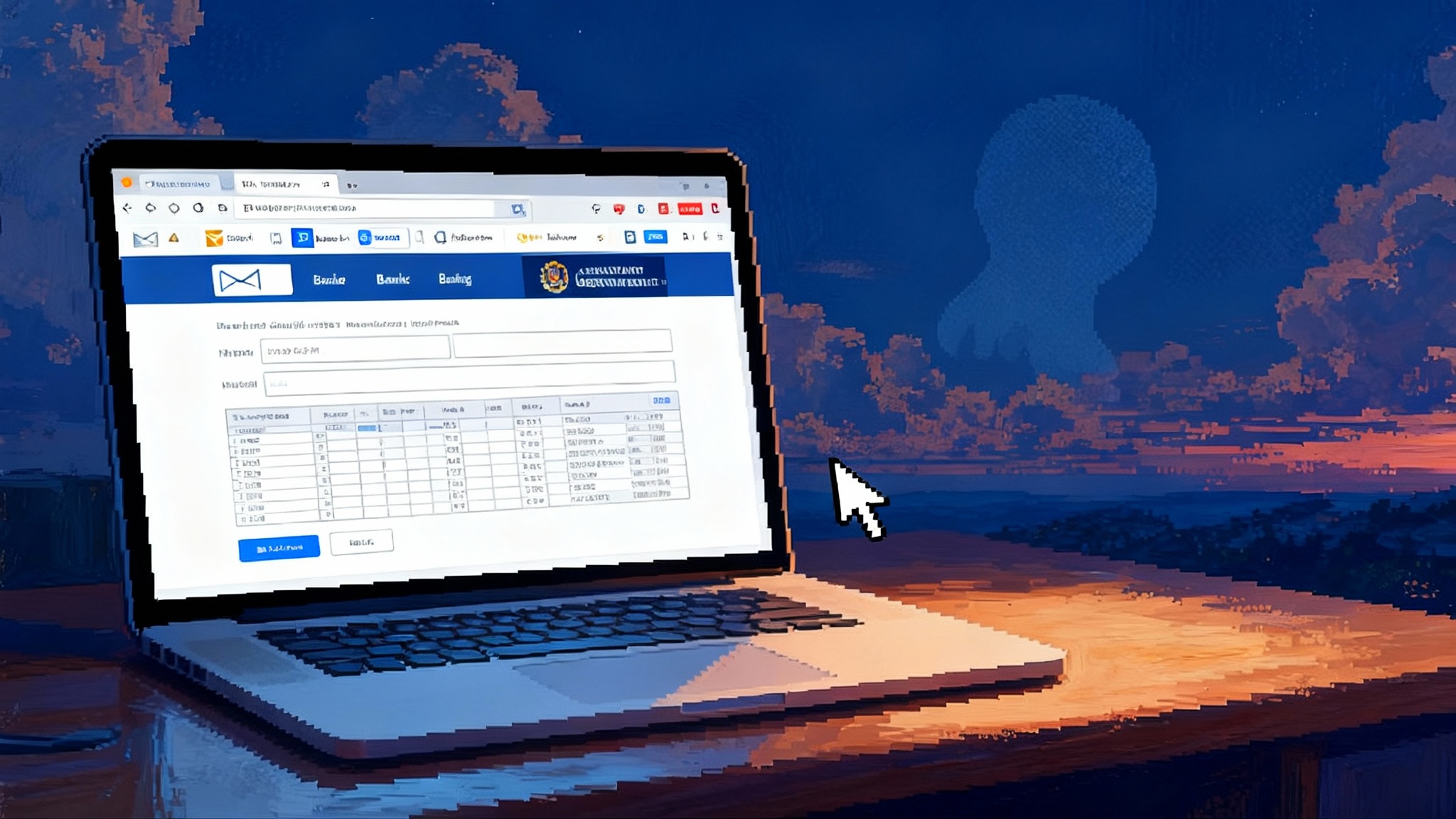

You open a laptop and watch a cursor sign in, open tabs, fill forms, and reconcile invoices faster than a seasoned operator. It hovers, scrolls, copies, pastes, and even corrects itself when a field rejects a value. There is no private application programming interface and no prebuilt connector. The agent is using software the way you do. It is looking at the screen and acting.

That simple change is the headline. The interface is the new application programming interface. Instead of waiting for vendors to expose a clean endpoint, these agents see elements, understand intent, and take action at the surface where work actually happens. For teams trapped between brittle integrations and human clickwork, this is not a minor upgrade. It is a new execution layer for the internet.

What changed: computer-using agents grew up

For years there were two unglamorous options for automation. You could wire systems together with official application programming interfaces and hope the vendor exposed the method you needed. Or you could script the user interface with test frameworks and robotic process automation and hope the page did not change. The first path was clean but incomplete. The second was complete but fragile.

Browser-native agents blend the strengths of both paths. They bring perception and planning to the surface. They read what is on the page, reason about it, decide which control to use, and then act while monitoring the outcome. When a date picker opens instead of a free text field, they adjust. When an error banner appears in red, they read it and try again.

The loop inside the browser

- Perception: parse layout, labels, tables, colors, and error states.

- Planning: decompose the goal into steps compatible with what the page affords.

- Action: click, type, upload, and confirm with humanlike pacing and checks.

- Feedback: re-read the page to detect success or failure and adapt the plan.
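
The four stages above can be sketched as a small loop. This is a minimal illustration, not how any particular agent product works: `FakePage`, `perceive`, `plan`, and `run_agent` are hypothetical names, and the page is an in-memory stand-in rather than a real browser. It shows an agent reading a rejection message and adjusting its next attempt.

```python
from dataclasses import dataclass, field


@dataclass
class FakePage:
    """In-memory stand-in for a browser page (hypothetical, for illustration)."""
    fields: dict = field(default_factory=dict)
    error: str = ""
    submitted: bool = False

    def type_into(self, name: str, value: str) -> None:
        self.fields[name] = value

    def submit(self) -> None:
        # This page only accepts dates shaped like YYYY-MM-DD.
        date = self.fields.get("date", "")
        if len(date) == 10 and date[4] == "-" and date[7] == "-":
            self.error, self.submitted = "", True
        else:
            self.error = "date must be YYYY-MM-DD"


def perceive(page: FakePage) -> dict:
    """Perception: read the state the page currently shows."""
    return {"error": page.error, "submitted": page.submitted}


def plan(goal: dict, observation: dict) -> dict:
    """Planning: choose the values to enter, adapting to the last error."""
    values = dict(goal)
    if "YYYY-MM-DD" in observation["error"]:
        day, month, year = goal["date"].split("/")
        values["date"] = f"{year}-{month}-{day}"
    return values


def run_agent(page: FakePage, goal: dict, max_steps: int = 3) -> int:
    """Loop until the page reports success; return the attempts used."""
    for attempt in range(1, max_steps + 1):
        observation = perceive(page)          # perception
        values = plan(goal, observation)      # planning
        for name, value in values.items():    # action
            page.type_into(name, value)
        page.submit()
        if perceive(page)["submitted"]:       # feedback
            return attempt
    raise RuntimeError(f"gave up after {max_steps} attempts: {page.error}")
```

A real agent would replace `perceive` with screen or DOM parsing and `plan` with a model call, but the control flow would plausibly keep this shape: observe, decide, act, re-read.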

This loop has existed in research for years. The change is that it is now productized, documented, and moving toward common patterns. That raises a practical question for every team that treated the browser as a view-only layer. What happens when your view becomes the programmable interface by default?

The last mile of SaaS integration collapses

Think about the ugliest edges in your stack. A claims portal with no export. A procurement site that only accepts manual uploads. A supplier directory behind a login. A bank reconciliation that takes twenty clicks. A government form that refuses to die. All of that is now reachable by an agent that can see and act.

Consider a midsize manufacturer that receives invoices from hundreds of small vendors. Some send emails. Some upload PDFs to a portal. Some have unusual templates. Historically the company tried to normalize this with an enterprise resource planning integration that covered only the top twenty suppliers. Everyone else became manual labor. A browser-native agent can read the vendor portal, fetch the invoice, open the accounting system, and post the entry with supporting documents. It does everything a human would do, and it does it the same way for the long tail that never got an integration.

Or consider customer support. An agent can triage an inbound ticket, search the knowledge base, check the customer’s entitlements in a billing page, and issue a refund by navigating a payment dashboard. The company no longer waits for a partner to expose a private refund endpoint. The work happens where it already happens, at the surface.

The consequence is profound. Integration work shifts from building and maintaining brittle connectors to describing tasks as goals, constraints, and acceptance checks that an agent carries out on any page that exposes the right affordances.

Before and after, in practice

- Before: write a connector, wait on partner roadmaps, and rebuild when a field changes.

- After: specify the goal, enumerate constraints, and let the agent adapt to the page.
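
One way to picture the "after" state is a task written as data rather than as connector code. The sketch below is an assumption about how such a spec might look: `TaskSpec`, `evaluate`, the host names, and the limits are all hypothetical. The point is that acceptance checks are plain predicates over the outcome, so they survive a page redesign.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class TaskSpec:
    """A task described as goal, constraints, and acceptance checks."""
    goal: str
    constraints: dict
    acceptance_checks: tuple[tuple[str, Callable[[dict], bool]], ...]


def evaluate(spec: TaskSpec, outcome: dict) -> list:
    """Return the names of acceptance checks that failed."""
    return [name for name, check in spec.acceptance_checks if not check(outcome)]


# Hypothetical example task; hosts and limits are illustrative only.
post_invoice = TaskSpec(
    goal="Post the vendor invoice to the accounting system",
    constraints={
        "max_amount": 5000.00,
        "allowed_hosts": ("portal.example", "books.example"),
    },
    acceptance_checks=(
        ("entry_posted", lambda o: o.get("entry_id") is not None),
        ("pdf_attached", lambda o: o.get("attachments", 0) >= 1),
        ("amount_within_limit", lambda o: o.get("amount", 0) <= 5000.00),
    ),
)
```

An empty list from `evaluate` means the run met every check; anything else names exactly what to review.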

Product teams who embrace this shift will treat surface automation as a first-class integration layer, not a hack.

The Affordance War begins

Affordances are the signals a product sends about what can be done. A button with a label is an affordance. A drag handle is an affordance. For humans, we tune design to make affordances clear and efficient. For agents, the same signals determine whether a task is feasible and reliable.

As agents become capable and common, websites will harden their affordances against uncontrolled automation. Expect measures that go well beyond simple bot detection. Sites will randomize element structure, alter field names, insert timing constraints, and hide key operations behind account reputation or cryptographic challenges. Some will require hardware-backed attestation from the agent’s runtime. Others will offer agent-specific modes that reduce ambiguity but enforce rules, quotas, and audit trails.

Likely defensive tactics

- Obfuscate or rotate element identifiers to break brittle scripts.

- Introduce proof-of-person or device attestation for sensitive flows.

- Gate critical actions behind risk scoring, account age, or step-up checks.

- Require higher-fidelity logs and session proofs for refunds and transfers.

Why blocking everything will fail

Blocking all automation will not hold. Competitors will publish safe lanes for accountable agents and gain throughput. Users will demand automation to remove repetitive clickwork. The pressure will push teams to channel automation into controlled pathways rather than attempt a blanket ban.

Agent Interaction Agreements

The early web used a simple social contract for crawlers. The Robots Exclusion Protocol told bots what not to fetch and relied on goodwill because reading did not execute business logic. Automation in the age of browser-native agents needs something stronger.

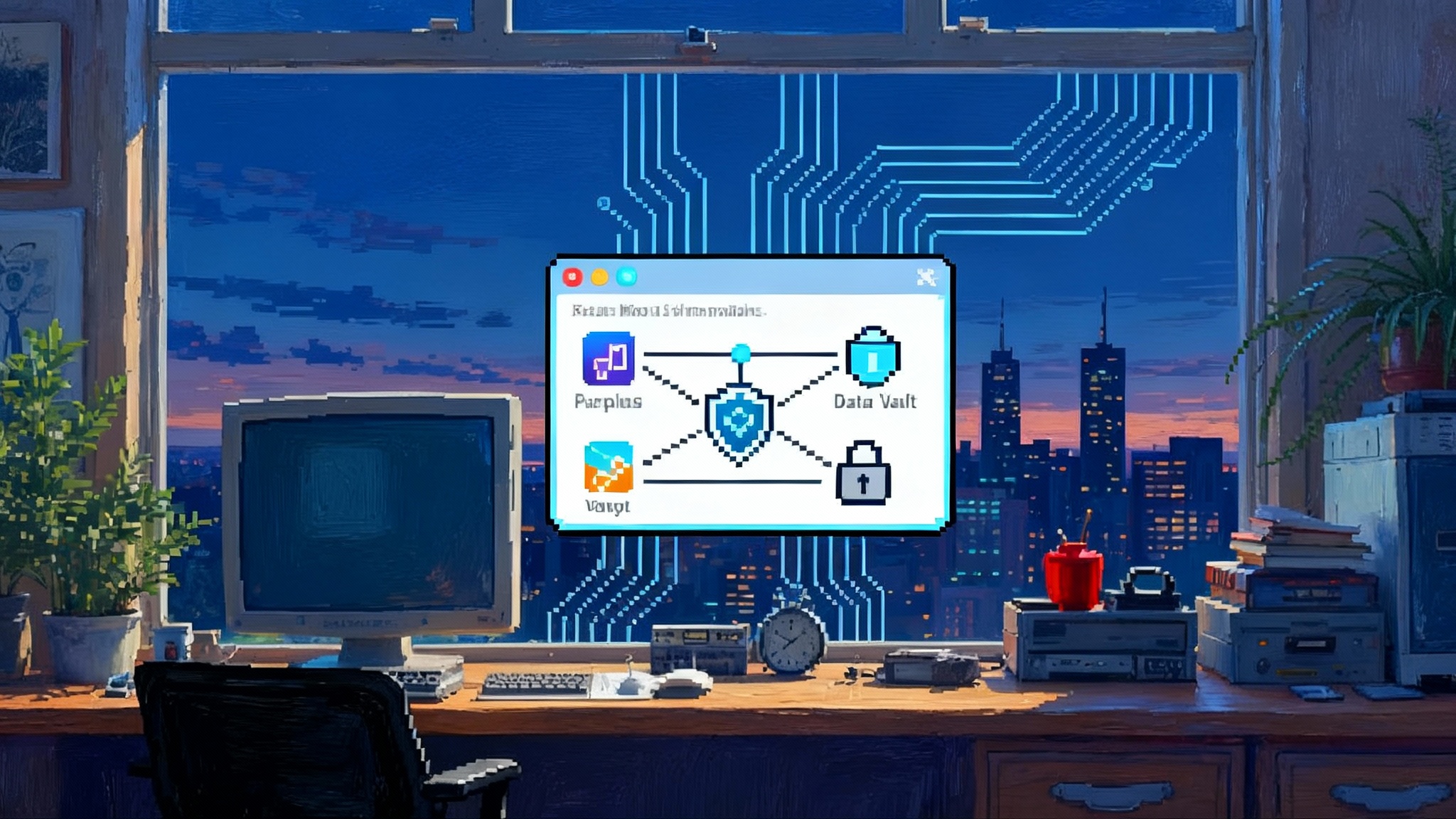

Agent Interaction Agreements are that next step. They are terms, schemas, and limits that define what an authenticated agent may do within a user interface. Think of them as a compact between the runtime and the site.

Three essential parts:

- Identity and attestation. Who is the principal, what runtime is acting, and what proofs are attached to the session. Use hardware-backed keys, signed audit logs, and risk signals that travel with every click.

- Capability and scope. A small set of named actions that are allowed, with parameter schemas and preconditions. For example, issue refund up to a specific amount, create user with defined fields, or export a dataset with specific filters.

- Rate and oversight. How often each action may occur, what approvals are required, and where the logs are stored for review.
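
To make the three parts concrete, here is a minimal sketch of an agreement check. No standard for such agreements exists in this article, so every name here (`Capability`, `Agreement`, `authorize`, the reason strings) is hypothetical, and a plain string comparison stands in for real attestation, which would verify a cryptographic proof.

```python
from dataclasses import dataclass, field


@dataclass
class Capability:
    name: str
    max_amount: float         # scope: ceiling per call
    max_calls_per_hour: int   # rate limit


@dataclass
class Agreement:
    principal: str
    attested_runtime: str     # placeholder for a verified attestation
    capabilities: dict        # action name -> Capability
    calls: dict = field(default_factory=dict)  # action name -> timestamps

    def authorize(self, runtime: str, action: str, amount: float, now: float):
        """Return (allowed, reason) for one requested action."""
        if runtime != self.attested_runtime:            # identity and attestation
            return False, "runtime_not_attested"
        cap = self.capabilities.get(action)             # capability and scope
        if cap is None:
            return False, "action_not_in_scope"
        if amount > cap.max_amount:
            return False, "amount_exceeds_scope"
        recent = [t for t in self.calls.get(action, []) if now - t < 3600]
        if len(recent) >= cap.max_calls_per_hour:       # rate and oversight
            return False, "rate_limit_exceeded"
        self.calls[action] = recent + [now]
        return True, "ok"
```

Denied requests are not counted against the quota in this sketch; a stricter site might count them to slow down probing.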

What Agent Mode looks like

With an agreement, a page can flip into Agent Mode.

- Buttons expose stable identifiers and consistent roles.

- Confirmation steps present machine-readable summaries.

- Error banners carry structured codes with actionable hints.

- The human supervisor gets an overlay to approve, pause, or take over.
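
Structured error codes are the piece of Agent Mode that is easiest to illustrate. Assuming a site exposed its error banner as JSON with `code`, `field`, and `hint` keys (an assumed shape, not a published format), an agent could pick its next move from a lookup table instead of guessing from red text. The codes and recovery names below are hypothetical.

```python
import json

# Hypothetical error codes and the recovery each one suggests.
RECOVERY = {
    "FIELD_FORMAT": "reformat_and_retry",
    "RATE_LIMITED": "wait_and_retry",
    "APPROVAL_REQUIRED": "hand_off_to_human",
}


def next_step(banner_json: str) -> dict:
    """Map a structured error banner to the agent's next move."""
    banner = json.loads(banner_json)
    # Unknown codes fall back to the human supervisor.
    step = RECOVERY.get(banner.get("code"), "hand_off_to_human")
    return {"step": step, "field": banner.get("field"), "hint": banner.get("hint")}
```

Defaulting unknown codes to a human handoff keeps the agent conservative when the site adds a code it has not seen before.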

Agreements are not altruism. They are a trade. Sites grant capability in exchange for accountability. Enterprises get automations they can certify, monitor, and roll back. Vendors keep control while enabling real throughput. For the trust layer, see how content provenance and proofs shape behavior in "Content Credentials Win the Web."

From user interface to protocol

Once an action is consistently performed by agents, the interaction stops looking like a conversation with pixels and starts looking like a protocol. It is a short step from a predictable Agent Mode to a compact specification.

You can see the outlines already.

- A "pay invoice" task becomes a message with a schema and a state transition.

- A "submit know-your-customer form" task becomes a staged handshake with clear failure codes.

- A "book shipment" task becomes a negotiation over options and constraints instead of a cascade of dialog boxes.
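
As a rough sketch of the first item, a "pay invoice" interaction reduced to a message schema and a state table might look like this. The field names, states, and events are assumptions made for illustration; no vendor publishes this exact protocol.

```python
# Hypothetical schema: field name -> required Python type.
REQUIRED_FIELDS = {"invoice_id": str, "amount_cents": int, "currency": str}

# Hypothetical state table: (current state, event) -> next state.
TRANSITIONS = {
    ("draft", "submit"): "pending_approval",
    ("pending_approval", "approve"): "paid",
    ("pending_approval", "reject"): "rejected",
}


def validate(message: dict) -> list:
    """Return schema problems; an empty list means the message is valid."""
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in message:
            problems.append(f"missing:{name}")
        elif not isinstance(message[name], expected):
            problems.append(f"wrong_type:{name}")
    return problems


def apply(state: str, event: str) -> str:
    """Advance the invoice state, rejecting transitions the table omits."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"illegal transition: {event} from {state}") from None
```

Everything the page once expressed through dialog boxes is now either a schema problem or an illegal transition, which is what makes the exchange auditable.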

This drift does not eliminate the user interface. Humans still supervise, intervene, and audit. But the center of gravity moves from pixels to messages. When enough sites publish agreements and enough agents can negotiate them, the browser becomes a protocol router. Repetitive clickwork becomes orchestration among semi-formal endpoints discovered at the surface and stabilized by consent.

The discovery to standardization path

- Agents learn a task by observing the surface and succeeding repeatedly.

- Teams codify the task as a named action with parameters and outcomes.

- Vendors publish a formal description and quotas as part of an agreement.

- The action graduates to a protocol-like exchange, while the page remains a console.

This is similar to past transitions. Spreadsheets turned into standardized imports and exports, which then turned into application programming interfaces. Agents compress that arc because they can learn from the surface and nudge it toward a protocol without a full rewrite.

Governance: who is allowed to click

If agents can do anything a human can do in a browser, we need rules about who is allowed to do what.

- Terms and enforcement. Terms of service will evolve to distinguish prohibited scraping from permitted automation bound by an agreement. Violations will be met with identity-level blocks, not just network blocks.

- Consent and delegation. Organizations will track which agents are allowed to act for which users and on which systems. Delegation will look like action-level access control, not just page-level access.

- Auditing and evidence. Every agent run should produce a signed, immutable log of intent, steps, and outcomes. Screenshots alone are not enough. Sites should publish error codes and state transitions that make audits meaningful. The arguments for cryptographic provenance echo the case in "Content Credentials Win the Web."

- Safety and redress. When an agent makes a mistake, there must be a way to halt, roll back, and compensate. Agreements should include a fast path for remediation and caps on exposure.
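
The signed, tamper-evident log from the auditing point can be approximated with a hash chain. The sketch below uses Python's standard `hmac` and `hashlib` modules; `append_entry` and `verify` are hypothetical helper names. Note the simplification: an HMAC with a shared key makes tampering detectable to anyone holding the key, whereas a production system would more likely use asymmetric signatures from a hardware-backed key.

```python
import hashlib
import hmac
import json


def _mac(key: bytes, prev: str, entry: dict) -> str:
    """MAC over the entry plus the previous record's MAC (the chain link)."""
    payload = json.dumps({"prev": prev, "entry": entry}, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def append_entry(log: list, key: bytes, entry: dict) -> None:
    """Append one step, chaining it to the record before it."""
    prev = log[-1]["mac"] if log else ""
    log.append({"prev": prev, "entry": entry, "mac": _mac(key, prev, entry)})


def verify(log: list, key: bytes) -> bool:
    """Recompute the chain; any edit, removal, or reorder breaks it."""
    prev = ""
    for record in log:
        expected = _mac(key, prev, record["entry"])
        if record["prev"] != prev or not hmac.compare_digest(expected, record["mac"]):
            return False
        prev = record["mac"]
    return True
```

Because each record commits to the one before it, an auditor can detect a changed amount or a deleted step without trusting the party that stored the log.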

What changes for product teams

If you build software that runs in a browser, you will feel this quickly. Here is a focused playbook.

- Ship an Agent Mode. Keep the human experience but add a mode that stabilizes identifiers, provides structured error codes, and compresses multi-step flows. Publish a machine-readable description of allowed actions.

- Offer an Agent Interaction Agreement. Start with conservative limits. Tie every session to an attested runtime. Sign every step. Provide a sandbox. Require opt-in at the account level.

- Harden against unaccountable automation. Detect suspicious execution patterns, add proof-of-person where needed, and rate limit actions with monetary impact. Channel automation into safe lanes instead of blocking all automation.

- Instrument for supervision. Build a console that shows pending, running, and completed agent tasks with full context. Allow a human to take over in one click. Make that takeover an explicit event in the log.

- Design for intent and outcome. Rewrite flows around the goal, not the incidental steps. Provide a way to declare a goal and a way to confirm success without pixel-level inspection. For platform context, see "ChatGPT Becomes the New OS."

What changes for teams adopting agents

If you plan to let agents operate your stack, treat them as a new integration layer.

- Start with a task catalog. Describe the goal, systems touched, acceptance criteria, and failure modes. Only then choose a model and a runtime.

- Separate duties clearly. Let the agent propose a plan. Require approvals where stakes are high. Enforce budget and scope limits per run.

- Build a safety wrapper. Log every action, capture structured errors, and attach artifacts like screenshots and downloaded files. If a run fails, retry with a smaller scope or hand off to a human with a precise diff of what changed.

- Budget for maintenance. Sites change often and rarely announce it. Monitor key affordances and alert when a page update breaks a task. The cost is lower than hand clicking but not zero.

- Evaluate with live tasks. Static benchmarks undershoot real complexity. Favor scenario-driven evaluations that reflect your actual workflows, a theme explored in "The End of Static AI Leaderboards."
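
The maintenance point lends itself to a small check. Assuming you snapshot each page's key controls as a mapping from accessible name to role (an assumed format; `diff_affordances` and `should_alert` are hypothetical names), a comparison between the expected and observed snapshots can flag a breaking page update before a task fails in production.

```python
def diff_affordances(expected: dict, observed: dict) -> dict:
    """Compare snapshots that map accessible name -> role."""
    missing = sorted(name for name in expected if name not in observed)
    changed = sorted(
        name for name in expected
        if name in observed and observed[name] != expected[name]
    )
    added = sorted(name for name in observed if name not in expected)
    return {"missing": missing, "changed": changed, "added": added}


def should_alert(diff: dict) -> bool:
    """New controls are usually harmless; missing or changed ones can break a task."""
    return bool(diff["missing"] or diff["changed"])
```

Treating added controls as non-alerting is a design choice that keeps noise down; a team with stricter change control might alert on those too.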

Accessibility becomes an operational advantage

Accessibility is no longer only a moral imperative. It is an operational advantage in an agent era. Semantic markup, clear labels, and predictable flows help both humans and agents. Teams that align with the Web Content Accessibility Guidelines will see higher automation reliability with fewer hacks.

Practical steps include using proper roles and landmarks, ensuring focus order matches visual order, surfacing machine-readable status messages, and avoiding magic text that only a sighted user could decode. Many of these improvements pay off twice by improving human usability and agent comprehension at the same time.
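
A quick audit shows how directly labels translate into agent reliability. The sketch below uses Python's standard `html.parser` to list inputs that have neither a `<label for=...>` nor an `aria-label`. It is deliberately simplified and is not a WCAG conformance check: it ignores wrapped labels, `aria-labelledby`, and other control types. `LabelAudit` and `audit` are hypothetical names.

```python
from html.parser import HTMLParser


class LabelAudit(HTMLParser):
    """Collect visible inputs that lack a programmatic label."""

    def __init__(self):
        super().__init__()
        self.labelled_ids = set()
        self.inputs = []  # (id, has_aria_label) pairs

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "label" and attributes.get("for"):
            self.labelled_ids.add(attributes["for"])
        elif tag == "input" and attributes.get("type") != "hidden":
            self.inputs.append(
                (attributes.get("id"), bool(attributes.get("aria-label")))
            )

    def unlabelled(self) -> list:
        return [
            input_id for input_id, has_aria in self.inputs
            if not has_aria and input_id not in self.labelled_ids
        ]


def audit(html: str) -> list:
    """Return the ids of inputs an agent could only identify by guessing."""
    parser = LabelAudit()
    parser.feed(html)
    parser.close()
    return parser.unlabelled()
```

An input identified only by placeholder text is the kind of "magic text" problem described above: a sighted user copes, while a screen reader or an agent has nothing stable to hold on to.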

A five-stage forecast

- Keyboard mimicry. Early agents replay human patterns with better perception and planning. Value comes from replacing rote tasks that never got proper integrations.

- Agent modes appear. Major sites add an Agent Mode with stabilized identifiers and structured errors, gated by agreements and quotas.

- Agreements become the norm. Enterprises demand Agent Interaction Agreements from critical vendors so they can certify and audit automation.

- Protocolization. Common tasks graduate from page flows to compact protocols. Pages remain as supervisory consoles with embedded proofs.

- Orchestrated ecosystems. Agents coordinate across many sites using a mesh of agreements. Marketplaces emerge for certified task packs and verified runtimes.

What to build next

- A contract-first layer for Agent Interaction Agreements, including schemas, attestation, and logging primitives.

- Observability for agents, with run timelines, structured error catalogs, and automatic diffs when a page update breaks an affordance.

- Semantic overlays for existing apps that expose stable identifiers and hints without a full redesign.

- A safe handoff pattern that lets a human supervisor take over live cursor control with a single shortcut and return control with a signed note.

- Shared corpora of page archetypes so models can generalize from invoices and calendars to the long tail of custom forms.

Conclusion: the browser becomes a universal robot arm

We have spent two decades treating the browser as a place to look and click. Browser-native agents treat it as a place to plan and act. When the interface becomes the application programming interface, the long tail of unintegrated work begins to shrink. There will be friction as sites harden and as agreements emerge. There will be design debates as we learn to serve humans and nonhumans at once. But the direction is clear. The cursor has learned to think, and the web is rearranging itself around that fact.