Civic GPUs: Governments Are Building an AI Commons

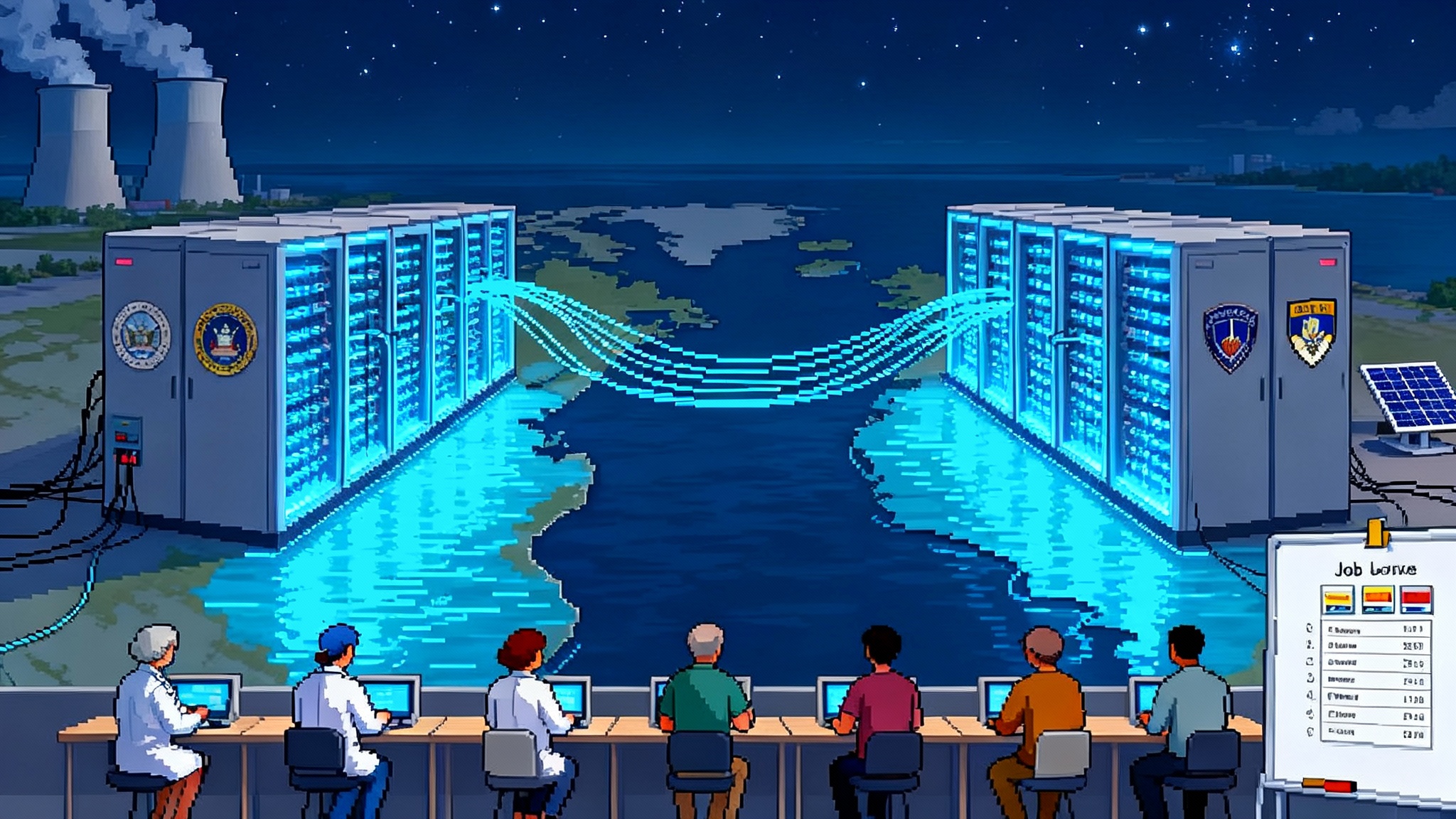

A public compute turn is reshaping AI. From NAIRR in the United States to Isambard-AI in the U.K. and EuroHPC AI Factories in Europe, governments are wiring access rules that encode democratic values into the stack.

The quarter when rhetoric met the rack

The most important AI stories of the past quarter were not about smarter chatbots. They were about plug sockets, queue schedulers, and who gets a login. The United States moved to turn the National AI Research Resource (NAIRR) from a pilot into an operational capability that can reach far more researchers. The United Kingdom switched on Isambard-AI, a national system in Bristol that puts thousands of cutting-edge accelerators under public stewardship. And the European Union funded a pan-European network of AI Factories and confirmed that the first obligations for general-purpose model providers took effect on August 2, 2025. Taken together, these moves signal a public compute turn. Governments are not just writing strategies. They are pouring concrete, negotiating access rules, and encoding a public philosophy of AI into the substrate itself.

In July, the University of Bristol and its partners announced that Isambard-AI, the U.K.’s most powerful AI supercomputer, was live for researchers and small companies. The Secretary of State for Science, Innovation and Technology switched the system on, and it began accepting applications from academic teams and startups. The university’s announcement captures the launch details and shows how a country can build sovereign capacity without severing global supply chains: U.K. supercomputer Isambard-AI launches.

Across the Atlantic, the National Science Foundation took steps to move the National AI Research Resource from a proof of concept to sustained operations. The pilot has already connected hundreds of U.S. research teams to pooled compute, curated datasets, and training resources. Over the summer, the agency broadened support and prepared an operations center solicitation that will formalize the work of onboarding, scheduling, and security. The signal is simple. NAIRR is shifting from a helpful experiment to the country’s default public option for AI discovery and education.

Meanwhile, in Brussels and national capitals, the European Union advanced two legs of its strategy. First, it has been seeding an interconnected network of EuroHPC AI Factories next to national supercomputers, creating places that combine accelerators, data curation, and hands-on support. Second, it clarified obligations for providers of general-purpose models, with the initial set taking effect on August 2, 2025, and further timelines for models already on the market and for models deemed to present systemic risk. The European Commission’s policy explainer is a useful reference for the months ahead: Guidelines for general purpose model providers.

Put these developments together and something important changes. The moral and economic center of modern intelligence tilts away from purely private platforms toward public institutions that run the queues, define access tiers, and shape the disclosure record that follows models into the world.

Why compute is the new policy

It is tempting to treat compute as neutral infrastructure. It is not. The way public systems accept users, provision jobs, and log activity becomes a de facto bill of rights for the next decade of AI research and deployment. What researchers can try, which models are favored, how safety is measured, and even which sectors get time first are governance choices expressed as cluster policy.

Think of a civic GPU cluster as a public library for intelligence. The library decides opening hours, lending limits, and what goes on the front table. Those choices nudge an entire community of readers. In the same way, NAIRR, Isambard-AI, and the EuroHPC AI Factories will nudge entire national and continental AI communities. If training runs that include transparent data provenance get priority, transparency will spread. If safety evaluations and red-team artifacts are required for larger allocations, safety will become routine craft instead of emergency theater. If energy accounting is part of every job submission, efficient methods will move from papers to practice.

For readers tracking the physical constraints that come with this shift, see how energy and siting pressures are already reshaping the stack in Gigawatt Intelligence on the grid bottleneck. Policy is now as much about power usage effectiveness and queue fairness as it is about abstract rights.
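Energy accounting at job submission can be simple arithmetic. The sketch below assumes the operator can supply per-accelerator average power, runtime, the facility's PUE (total facility energy divided by IT equipment energy), and a grid carbon intensity figure. The class and field names, and the numbers in the usage line, are hypothetical and are not drawn from NAIRR, Isambard-AI, or any EuroHPC system.

```python
from dataclasses import dataclass


@dataclass
class JobEnergyReport:
    """Per-job energy estimate attached to a submission (hypothetical schema)."""

    accelerators: int         # accelerators allocated to the job
    avg_power_kw: float       # assumed average draw per accelerator, in kW
    runtime_hours: float      # wall-clock runtime of the job
    pue: float                # facility power usage effectiveness
    grid_gco2_per_kwh: float  # assumed grid carbon intensity, gCO2e per kWh

    def __post_init__(self) -> None:
        # PUE is total facility energy divided by IT energy, so it cannot be below 1.
        if self.pue < 1.0:
            raise ValueError("PUE must be >= 1.0")

    def it_energy_kwh(self) -> float:
        # Energy drawn by the accelerators alone.
        return self.accelerators * self.avg_power_kw * self.runtime_hours

    def facility_energy_kwh(self) -> float:
        # Scale IT energy up by PUE to include cooling and other overheads.
        return self.it_energy_kwh() * self.pue

    def emissions_kg(self) -> float:
        # Grams of CO2e per kWh times kWh, converted to kilograms.
        return self.facility_energy_kwh() * self.grid_gco2_per_kwh / 1000.0


report = JobEnergyReport(accelerators=64, avg_power_kw=0.5, runtime_hours=10.0,
                         pue=1.25, grid_gco2_per_kwh=200.0)
print(report.it_energy_kwh(), report.facility_energy_kwh(), report.emissions_kg())
# 320.0 400.0 80.0
```

Folding PUE into every job report means two otherwise identical runs on sites with different cooling efficiency show different energy costs. That is the signal that nudges users toward efficient sites and methods.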

The thesis: access rules are philosophy, made executable

There is a real opportunity in the next 18 months. New obligations for general-purpose models in the EU have begun to apply, NAIRR is evolving into a standing capability, and the U.K.’s national system is maturing. The entities that define these systems’ access rules, disclosure templates, and procurement terms will write the practical ethics of AI for universities, hospitals, and startups. This is not a debate about slogans. It is a set of forms and queues that will define how science, health, and education use machine learning day to day.

To make the most of this public compute turn, a slightly accelerationist program is in order. Build capacity fast, but structure it so it produces more open knowledge and less dependency. Here is a concrete three-part plan.

Program part 1: stand up public-option foundation models

Public systems should offer a baseline family of foundation models with open weights for language, vision, and multimodal tasks. The goal is not to replace private labs. It is to guarantee that researchers and public interest developers can build on a stable, transparent substrate without negotiation overhead.

What to do

- Commission at least one language and one vision model per jurisdiction with open weights, trained on documented mixtures of licensed, public-domain, and permissioned datasets. Make these models the default first-class citizens of the national clusters. The default matters. If a model is pre-positioned next to storage, optimized for the scheduler, and continuously evaluated, it will be used.

- Require transparent training summaries as a condition of public funding. Summaries should list dataset classes, major sources, curation steps, deduplication rates, and the distribution of languages and domains. Add compute reporting that includes accelerator counts, estimated floating-point operations, energy use, cooling approach, and grid mix.

- Reserve capacity for sectoral fine-tunes. Hospitals, climate labs, and education ministries should have named allocations to adapt the public models under strict privacy and provenance controls, with the expectation that weights are released when data rights allow.
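A compute report of the kind described above could be a small structured record that the national portal validates on upload. This is a minimal sketch under stated assumptions. The schema is hypothetical. The FLOP estimate uses the widely cited 6 * N * D rule of thumb for dense transformer training, where N is the parameter count and D the number of training tokens; it is an approximation, not a measurement. The figures in the usage lines are placeholders, not data about any real model.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class ComputeReport:
    """Compute and energy fields for a publicly funded training run (hypothetical schema)."""

    accelerator_count: int
    parameters: float        # model parameter count, N
    training_tokens: float   # tokens seen during training, D
    energy_kwh: float        # metered or estimated facility energy
    cooling: str             # e.g. "direct liquid" or "air"
    grid_mix: dict[str, float] = field(default_factory=dict)  # source -> share of supply

    def estimated_flops(self) -> float:
        # Rule of thumb for dense transformer training: about 6 * N * D FLOPs.
        return 6.0 * self.parameters * self.training_tokens

    def problems(self) -> list[str]:
        """Return human-readable reasons the report is incomplete or inconsistent."""
        issues = []
        if self.accelerator_count <= 0:
            issues.append("accelerator_count must be positive")
        if self.energy_kwh <= 0:
            issues.append("energy_kwh must be positive")
        if not self.cooling:
            issues.append("cooling approach is missing")
        if not self.grid_mix:
            issues.append("grid mix is missing")
        elif not math.isclose(sum(self.grid_mix.values()), 1.0, abs_tol=1e-6):
            issues.append("grid mix shares must sum to 1")
        return issues


report = ComputeReport(1024, 7e9, 2e12, 1.5e6, "direct liquid",
                       {"wind": 0.5, "nuclear": 0.3, "gas": 0.2})
print(f"{report.estimated_flops():.2e}")  # 8.40e+22
print(report.problems())                  # []
```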

Why it works

- Open weights reduce switching costs for public institutions and small firms. They make audits and domain adaptation feasible. They enable reproducibility in science that depends on model behavior, not just code.

- Transparent training summaries create a common floor for disclosure before legal obligations force it. They also make it possible to compare like with like when policymakers or procurement officers ask which model is appropriate for a given setting.

This focus on evaluation and transparency also aligns with the end of static leaderboards. As we argued in Benchmarks Break Back, dynamic and domain specific tests reveal more than a single aggregate score. Public systems can turn that insight into routine practice.

Program part 2: adopt a civic license for downstream sharing of safety work

Public money should purchase more than just a model checkpoint or a service. It should purchase a feedback loop. A civic license is a simple way to do this. It allows wide use of public-option models but requires anyone who fine-tunes or substantially modifies the model to share back the following artifacts when they publish or deploy at scale:

- Evaluation suites used during development, including task lists and metrics for bias, robustness, and potentially dangerous capabilities

- Red-team prompts and results for the relevant domain, with enough detail to reproduce risk-relevant outcomes

- A brief deployment report that describes the domain, intended users, safeguards, and any domain specific reinforcement or filtering

How to implement

- Publish a standard license addendum that attaches to publicly funded model releases. Keep the obligations short and concrete. Tie the duty to share to thresholds, such as monthly active users or revenue.

- Provide a compliance portal at the national cluster. Make it easy to upload evals, red team logs, and deployment notes. Make submissions discoverable to auditors and researchers.

- Offer a safe harbor. If a downstream deployer shares artifacts within a set window and fixes any issues found by public audits, limit penalties to preserve incentives to participate.
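Once the numbers are fixed, both the threshold-based duty to share and the safe harbor reduce to simple predicates. In this sketch, the thresholds, the 90-day window, and the artifact names are hypothetical placeholders. A real addendum would publish its own.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical values; a real license addendum would set its own.
MAU_THRESHOLD = 100_000
REVENUE_THRESHOLD_EUR = 1_000_000
SAFE_HARBOR_WINDOW = timedelta(days=90)
REQUIRED_ARTIFACTS = {"evaluation_suite", "red_team_results", "deployment_report"}


@dataclass
class Deployment:
    monthly_active_users: int
    annual_revenue_eur: float
    deployed_on: date
    shared_on: date | None = None                    # when artifacts reached the portal
    artifacts: set[str] = field(default_factory=set)
    open_audit_findings: int = 0                     # audit issues not yet fixed


def duty_to_share(d: Deployment) -> bool:
    # The share-back obligation triggers only above a usage or revenue threshold.
    return (d.monthly_active_users >= MAU_THRESHOLD
            or d.annual_revenue_eur >= REVENUE_THRESHOLD_EUR)


def penalties_limited(d: Deployment) -> bool:
    """True if the deployer is outside the obligation or inside the safe harbor."""
    if not duty_to_share(d):
        return True  # no obligation triggered, so nothing to penalize
    if d.shared_on is None:
        return False
    on_time = d.shared_on - d.deployed_on <= SAFE_HARBOR_WINDOW
    complete = REQUIRED_ARTIFACTS <= d.artifacts     # subset check
    return on_time and complete and d.open_audit_findings == 0


example = Deployment(monthly_active_users=150_000, annual_revenue_eur=0.0,
                     deployed_on=date(2026, 1, 1), shared_on=date(2026, 2, 1),
                     artifacts=set(REQUIRED_ARTIFACTS))
print(duty_to_share(example), penalties_limited(example))  # True True
```

Writing the rule as code keeps it short and concrete, and anyone can check whether a given deployment is inside or outside the safe harbor.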

What it changes

- Evals and red teaming become public goods rather than private security costs. Over time, this compounding knowledge base raises the floor on model safety and helps regulators focus on real patterns instead of case-by-case headlines.

Program part 3: interconnect national resources into a democratic compute commons by 2027

The EU timeline gives a clear horizon. By August 2027, models already on the market before the 2025 start date must meet their obligations. That is also a reasonable target for the first iteration of an interconnected democratic compute commons spanning U.S., U.K., and EU public systems.

What to build

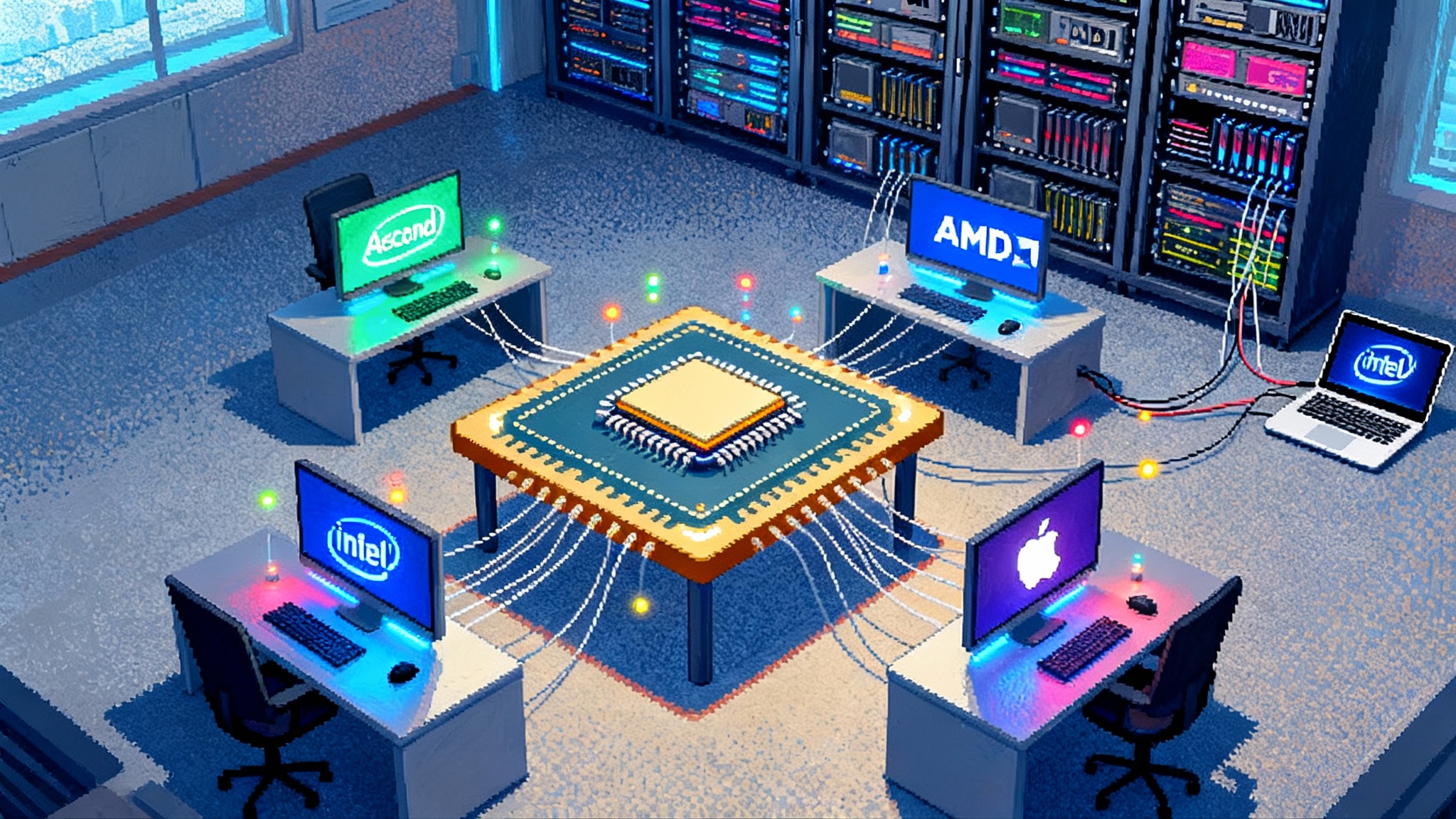

- A shared identity and allocation federation. Researchers with a verified identity at a university or hospital should be able to submit jobs across NAIRR, Isambard-AI, and EuroHPC AI Factories with a single token. Start with bilateral pilots, then add members.

- A portability layer for data rooms. Sensitive health or education datasets should not travel, but workloads can. Provide templates for virtual data rooms where models go to the data under standardized controls, with audit logs that meet sectoral rules.

- A common disclosure kit. Agree on a minimum package that travels with any model trained or significantly updated on public resources: training summary, compute and energy report, evaluation pack, and a short license compliance statement.
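For the audit logs in virtual data rooms, one standard technique is a hash chain. It is swapped in here; the article does not prescribe a specific mechanism. Each entry stores the SHA-256 hash of the previous entry, so editing any past entry breaks verification. Here is a minimal sketch with hypothetical field names:

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, actor: str, action: str, dataset: str) -> dict:
        entry = {
            "actor": actor,
            "action": action,
            "dataset": dataset,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check that each entry points at its predecessor.
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("analyst@example-university", "run_job", "icu_notes")
log.append("auditor@example-regulator", "read_log", "icu_notes")
print(log.verify())  # True
```

A hash chain is tamper-evident, not tamper-proof. Someone who can rewrite the whole log can recompute every hash. A real deployment would therefore also publish or countersign the latest hash somewhere outside the data room.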

Why 2027

- The date aligns with EU compliance milestones for general purpose models already on the market and gives time for procurement cycles and interconnect work to complete. It is ambitious but achievable if the pilots start now.

Templates that make values executable

Rules only matter when they show up as forms and defaults. Here are three concrete templates national operators can publish this year.

- Access tiers with service-level clarity

  - Education tier for instructors and students, with interactive notebooks and low-friction quotas

  - Research tier for peer-reviewed or ethically approved projects, with scheduled batch jobs and priority access during off-peak hours

  - Public interest tier for nonprofit and civic tech groups working on health, climate, and rights, with advisory support and sandboxed data rooms

  - Small business tier with cost-recovery pricing and a path to scale on commercial clouds for production

Each tier should specify default quotas, support levels, and the disclosure obligations that trade off against higher priority or larger allocations.
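One way to make a tier table legible is to publish it as data that both the scheduler and the allocation portal read. The sketch below is hypothetical throughout. The quotas, the priority values, and the rule that exceeding the default quota triggers the full disclosure kit are illustrative assumptions, not published policy from NAIRR, Isambard-AI, or EuroHPC.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Support(Enum):
    SELF_SERVE = "self-serve"
    ADVISORY = "advisory"


@dataclass(frozen=True)
class AccessTier:
    name: str
    default_gpu_hours: int        # default quota per allocation period
    priority: int                 # higher values are scheduled first
    off_peak_boost: bool          # extra priority when the cluster is lightly loaded
    support: Support
    disclosures: tuple[str, ...]  # artifacts owed at the default quota


# Hypothetical numbers, chosen only to show the shape of a published tier table.
TIERS = {
    "education": AccessTier("education", 500, 1, False, Support.SELF_SERVE, ()),
    "research": AccessTier("research", 20_000, 2, True, Support.SELF_SERVE,
                           ("training_summary",)),
    "public_interest": AccessTier("public_interest", 10_000, 2, False, Support.ADVISORY,
                                  ("training_summary", "evaluation_pack")),
    "small_business": AccessTier("small_business", 5_000, 1, False, Support.ADVISORY,
                                 ("training_summary",)),
}

FULL_KIT = ("training_summary", "compute_energy_sheet", "evaluation_pack")


def required_disclosures(tier_name: str, requested_gpu_hours: int) -> tuple[str, ...]:
    """Disclosures owed for a request; going above the default quota requires the full kit."""
    tier = TIERS[tier_name]
    if requested_gpu_hours <= tier.default_gpu_hours:
        return tier.disclosures
    return tier.disclosures + tuple(a for a in FULL_KIT if a not in tier.disclosures)


print(required_disclosures("research", 50_000))
# ('training_summary', 'compute_energy_sheet', 'evaluation_pack')
```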

- A disclosure kit as a first-class artifact

  - A four-page training summary with documented dataset classes, major sources, de-identification steps where relevant, language and domain distribution, and notable exclusions

  - A compute and energy sheet with accelerators, total estimated floating-point operations, runtime, power draw, cooling approach, and grid mix

  - An evaluation pack with core benchmarks, domain-specific tests, and adversarial probes, including instructions to reproduce

Publish these artifacts with model checkpoints and make them searchable in the national portal. Tie larger allocations to timely completion.
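Tying larger allocations to timely completion can be expressed as a gate in the allocation workflow. This is a minimal sketch. It assumes a single due date per kit and a hypothetical rule that teams with a missing or late kit are held to their base quota. The item names mirror the three kit components above.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date

KIT_ITEMS = ("training_summary", "compute_energy_sheet", "evaluation_pack")


@dataclass
class DisclosureKit:
    due: date
    submitted: dict[str, date] = field(default_factory=dict)  # item -> date received

    def missing(self) -> list[str]:
        return [item for item in KIT_ITEMS if item not in self.submitted]

    def on_time(self) -> bool:
        # Every required item is present and none arrived after the due date.
        return not self.missing() and all(
            self.submitted[item] <= self.due for item in KIT_ITEMS)


def next_allocation(requested_gpu_hours: int, base_quota: int, kit: DisclosureKit) -> int:
    """Hypothetical rule: only a complete, on-time kit unlocks more than the base quota."""
    if kit.on_time():
        return requested_gpu_hours
    return min(requested_gpu_hours, base_quota)
```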

- Procurement that creates competition on transparency, not just speed

  - Score vendors on three axes: capability per watt, disclosure quality, and openness of the resulting weights and adapters

  - Require that vendors who train on public systems commit to using the civic license for downstream safety sharing when the resulting models are deployed in public services

  - Reserve a portion of awards for open-weight model families that meet performance thresholds in education, health, and science
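The three scoring axes can be combined into a published, reproducible score. This sketch assumes capability per watt is normalized against the most efficient bid, and that disclosure quality and openness are already rubric scores between 0 and 1. The weights and the vendor figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights; each procurement would set and publish its own.
WEIGHTS = {"efficiency": 0.4, "disclosure": 0.3, "openness": 0.3}


@dataclass
class Bid:
    vendor: str
    capability_per_watt: float  # buyer-measured benchmark throughput per watt
    disclosure_quality: float   # rubric score in [0, 1]
    openness: float             # rubric score in [0, 1] for released weights and adapters


def score_bids(bids: list[Bid]) -> list[tuple[str, float]]:
    """Rank bids by a weighted sum of the three axes, highest first."""
    best = max(b.capability_per_watt for b in bids)
    ranked = []
    for b in bids:
        # Normalize efficiency so the most efficient bid scores 1.0.
        efficiency = b.capability_per_watt / best if best > 0 else 0.0
        total = (WEIGHTS["efficiency"] * efficiency
                 + WEIGHTS["disclosure"] * b.disclosure_quality
                 + WEIGHTS["openness"] * b.openness)
        ranked.append((b.vendor, round(total, 4)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


print(score_bids([Bid("A", 10.0, 0.5, 0.2), Bid("B", 8.0, 0.9, 1.0)]))
# [('B', 0.89), ('A', 0.61)]
```

With these illustrative inputs, vendor B ranks first despite lower efficiency. That is the kind of trade-off a transparency-weighted procurement rule is meant to surface.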

Avoiding regulatory capture while moving quickly

Public compute needs guardrails to prevent the soft capture that can accompany big partnerships.

- Cap the share of any single vendor’s hardware or managed service in a given cluster for each procurement cycle. This preserves negotiating leverage and reduces single-point-of-failure risk.

- Publish monthly allocation dashboards and queue logs in aggregate. Show who used the system, for what sectors, and with what disclosure packs. Open the data unless privacy rules forbid it.

- Seat an independent allocations board with rotating members from science, civil society, small business, and unions. Give it the power to audit large training runs and to pause allocations that ignore disclosure or safety duties.

These are not heroic measures. They are ordinary institutional designs in other utilities, and they work here for the same reason: when users know the rules, and the rules reward openness and efficient methods, the ecosystem compounds in useful directions.
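The monthly allocation dashboards described above can publish aggregates without exposing individual projects. A common approach is small-cell suppression. The sketch below groups hypothetical job records by sector and withholds any sector with fewer than a minimum number of distinct projects. The threshold of 5 and the record fields are assumptions. Real disclosure-control rules vary by jurisdiction and sector.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

MIN_PROJECTS_PER_CELL = 5  # hypothetical small-cell threshold


@dataclass
class JobRecord:
    project_id: str
    sector: str
    gpu_hours: float
    has_disclosure_pack: bool


def monthly_dashboard(jobs: list[JobRecord]) -> dict[str, dict[str, float] | str]:
    projects: dict[str, set[str]] = defaultdict(set)
    hours: dict[str, float] = defaultdict(float)
    disclosed: dict[str, set[str]] = defaultdict(set)
    for job in jobs:
        projects[job.sector].add(job.project_id)
        hours[job.sector] += job.gpu_hours
        if job.has_disclosure_pack:
            # A project counts as disclosed if any of its jobs shipped a pack.
            disclosed[job.sector].add(job.project_id)

    report: dict[str, dict[str, float] | str] = {}
    for sector, ids in projects.items():
        if len(ids) < MIN_PROJECTS_PER_CELL:
            # Too few projects to publish without re-identification risk.
            report[sector] = "suppressed"
        else:
            report[sector] = {
                "projects": len(ids),
                "gpu_hours": hours[sector],
                "share_with_disclosure": len(disclosed[sector]) / len(ids),
            }
    return report
```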

What a democratic compute commons unlocks

Science

- Molecular discovery and materials science benefit from open-weight generative models with transparent provenance. Researchers can iterate without waiting for private terms, share safety findings about emergent capabilities in protein design, and reproduce one another’s results.

Health

- Public models fine-tuned in privacy-preserving data rooms help radiologists and pathologists with decision support while keeping audit trails for regulators. Hospitals do not need to ship data across borders or rewrite incompatible contracts.

Education

- Teacher-led tools based on open weights let districts design tutoring and assessment that match curricula and languages. Transparent training summaries help educators defend choices to parents and boards.

Small firms and regions

- A common substrate reduces the time to proof of concept for startups outside tech hubs. It also helps regional governments coordinate on workforce programs that map directly to the models their institutions can actually run.

For a deeper dive on how memory and context shape performance in these applied settings, see why context becomes capital. Public systems that standardize context windows, retrieval practices, and logging will make it easier to compare approaches across domains.

The near term horizon

Over the next year, two clocks will tick together. Public systems will add capacity and users. At the same time, rules for general purpose models will become real in everyday practice as compliance templates and audits move from PDFs to portals. If governments treat these clocks as one project, they can shift practical control over the trajectory of AI from a handful of private platforms to a network of public institutions that reflect democratic priorities.

Here is what to do this quarter

- Publish the access tiers and disclosure kit for national systems. Make the forms as legible as a passport application.

- Fund at least one open-weight foundation model per jurisdiction, with a training summary due by a fixed date and an evaluation pack.

- Draft and adopt a civic license addendum for publicly trained models so that downstream deployers share safety and evaluation artifacts by default.

- Launch bilateral pilots for identity federation and workload portability between national systems. Pick one health and one education use case to prove the model.

A pragmatic path to public value

The public compute turn is not a slogan. It is the practical work of standing up a few large systems, defining queues that match public values, and making openness easier than secrecy. The result is not only more equitable access to compute. It is a more legible and auditable AI ecosystem where incentives line up with the public interest.

If these institutions follow through, by 2027 we will have more than new machines. We will have a democratic compute commons that speeds up real progress in science, health, and education, while building institutions that the public can trust.