AI’s Thermodynamic Turn: The Grid Is the Platform Now

Record U.S. load forecasts, pre-leased hyperscale capacity, and gigawatt campuses signal a new reality. The bottleneck for AI is shifting from algorithms to electrons as the grid becomes the platform for training and scale.

The breakthrough nobody optimizes: electrons

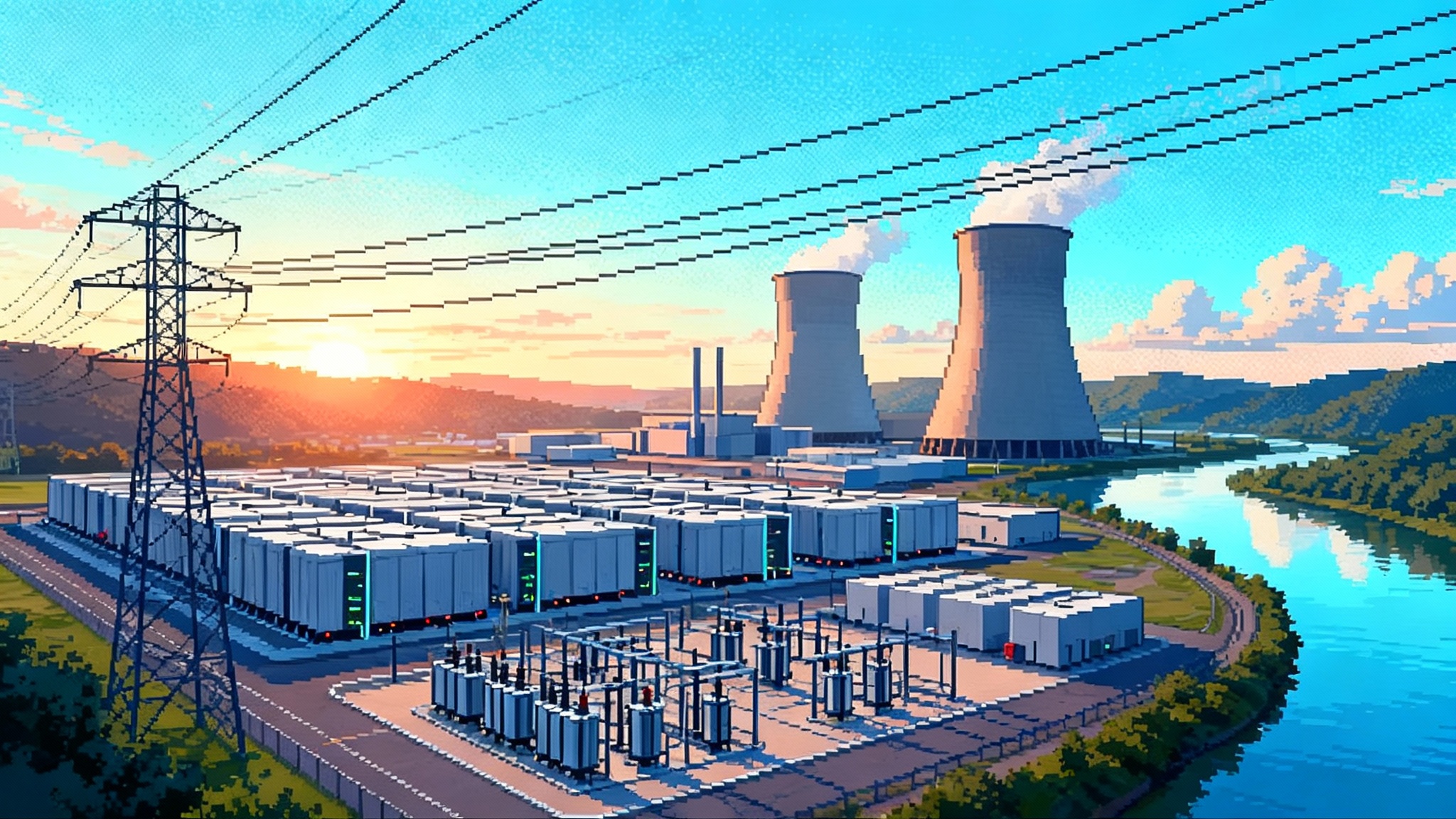

For a decade, artificial intelligence raced ahead by squeezing more performance from models, silicon, and clever software. Then 2025 arrived with a simple lesson from physics. Utilities raised their long-range load forecasts to levels not seen in a generation. Hyperscale data center operators began reserving capacity years before the transformers that will serve it leave the factory. Campuses are now sized in gigawatts, not megawatts. Leading cloud and model companies are signing power deals for nuclear and even fusion pilots. The frontier of intelligence is no longer just about parameters or process nodes. It is about power.

The takeaway is uncomfortable and clarifying. The bottleneck for artificial intelligence has shifted from algorithms to electrons. If that sounds abstract, translate it into execution. The teams that can guarantee clean, always-on megawatts closest to their compute will ship more capable systems sooner and at lower cost. That is a new competitive axis that cuts across machine learning research, chip design, and data center development.

Signals from 2025 that changed the conversation

Several signals converged this year and pushed energy to the top of every planning document.

- Utilities revised load growth upward and called out structural drivers. Data center demand for AI, electrified industry, and an onshoring wave for manufacturing now show up as named verticals rather than residual categories.

- In the top regions, hyperscale capacity is pre-leased before foundations are poured. Vacancy remains tight. Land near strong transmission nodes is now priced for interconnection speed more than for acreage.

- Developers announced gigawatt-scale campuses with dedicated substations, on-site storage yards for high-voltage equipment, and purpose-built water or dry cooling.

- Major technology companies inked long-term power purchase agreements for nuclear generation and placed early markers on advanced nuclear and fusion. These are procurement strategies to secure round-the-clock power with verifiable attributes, not marketing stunts.

Each datapoint alone might look like exuberance. Together they map a structural turn. The grid is becoming the platform.

From FLOPs to watts: the new governing constraint

The classic scaling story relied on floating-point operations and specialized accelerators. That story still matters, but the governing constraint has moved to power delivered at the rack, the building, and the campus. Three forces drive the shift.

- Density. Direct-to-chip liquid cooling and immersion make 100-kilowatt racks normal and 200-kilowatt racks plausible. At these densities, distribution losses, pump energy, and coolant temperatures become first-class design variables. Every kilowatt saved in thermal overhead buys usable compute.

- Duty cycle. Frontier training runs tie up clusters for weeks. Interruptions and variability in power availability translate into schedule risk and stranded capital. Backup generators cover minutes or hours, not multi-day resource adequacy events.

- Carbon accounting. Investors and regulators increasingly expect hourly or sub-hourly emissions data. Annual renewable energy certificates are no longer sufficient. The new bar is verifiable, always-on, low-carbon power that matches consumption in time and place.
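The density point can be made concrete with a back-of-the-envelope sketch. It assumes a campus capped at a fixed grid allocation and folds all non-IT load (cooling, pumps, distribution losses) into power usage effectiveness (PUE, total facility power divided by IT power). The 100 MW cap and the PUE values are illustrative assumptions, not figures from this article.

```python
def usable_it_power_mw(facility_cap_mw: float, pue: float) -> float:
    """IT (compute) power available under a fixed facility power cap.

    PUE = total facility power / IT power, so IT power = cap / PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return facility_cap_mw / pue


def racks_supported(facility_cap_mw: float, pue: float, rack_kw: float) -> int:
    """Whole racks that fit in the IT power budget at a given rack density."""
    it_kw = usable_it_power_mw(facility_cap_mw, pue) * 1000.0
    return int(it_kw // rack_kw)


if __name__ == "__main__":
    cap = 100.0  # hypothetical 100 MW interconnection limit
    for pue in (1.5, 1.3, 1.15):
        it = usable_it_power_mw(cap, pue)
        print(f"PUE {pue:.2f}: {it:.1f} MW of IT power, "
              f"{racks_supported(cap, pue, 100.0)} racks at 100 kW")
```

Under the same hypothetical 100 MW cap, moving from a PUE of 1.5 to 1.15 frees roughly 20 MW for compute, or about 200 more 100 kW racks.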

You can optimize kernels and compilers. You cannot push a training schedule through an interconnection queue.

The new competitive axis: guaranteed megawatts and proximity to power

In the world now forming, advantage looks like this:

- Guaranteed megawatts. Firm contracts and physical assets that deliver power at the meter. That can mean on-site generation, behind-the-meter storage, and long-term rights to transmission capacity.

- Proximity to power. Siting compute where the grid can deliver with minimal congestion, minimal curtailment risk, and short interconnection lead times. The old mantra was latency to users. The new one adds latency to power.

This does not erase the value of low network latency to customers and partners. It adds a second dimension. The winning map is a two-layer topology: keep user-proximate inference nodes close to markets, embed power-proximate training clusters where firm supply is abundant, and stitch them together with guaranteed bandwidth and predictable power. For a deeper dive on how inference strategy shifts under cost and latency pressure, see inference for sale and test time capital.

Behind-the-meter generation moves to the center

Behind-the-meter generation is power produced on the customer’s premises and consumed on site. In the AI era, it becomes a core design pattern for three reasons: speed, firmness, and control.

- Speed. Utility-scale interconnection queues can stretch for years. A campus that pairs a grid tie with on-site generation can move faster by sizing for incremental energization milestones. Modular assets like reciprocating engines, fuel cells, and battery blocks can be added in quarters, not years.

- Firmness. On-site plants can run during grid stress and price spikes, reducing exposure to curtailments and demand response events that would otherwise pause training.

- Control. Behind-the-meter systems can be tuned to the workload. If a cluster benefits from a slightly higher hot-water temperature for direct-to-chip cooling, the plant can co-optimize thermal and electric output.

What fits behind the meter for a large campus today:

- Gas engines or turbines designed for high efficiency and low nitrogen oxide (NOx) emissions, paired with carbon capture where policy or corporate commitments require it. These are proven, fast to deploy, and can shift toward hydrogen blends over time.

- Solid oxide or proton exchange membrane fuel cells. Solid oxide units offer high-grade waste heat, while proton exchange membrane units offer good part-load performance. High-grade waste heat pairs well with absorption chillers for cooling.

- Advanced geothermal where geology supports it, delivering high capacity factors and stable thermal output that integrate with liquid loops.

- Solar plus storage as a daytime contributor. With enough acreage and trackers, solar can cut marginal energy costs and charge batteries for peak shaving, even if it cannot carry a 24-hour baseload alone.
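Why solar plus storage cannot carry a 24-hour baseload alone follows from simple energy arithmetic. The sketch below uses a deliberately crude block model, assuming solar delivers a constant output for a fixed number of sun hours and nothing otherwise, and an 85% battery round-trip efficiency. Both are illustrative assumptions, not project data.

```python
from dataclasses import dataclass


@dataclass
class SolarStorageSizing:
    solar_output_mw: float  # array output needed during sun hours (nameplate would be higher)
    storage_mwh: float      # usable energy the batteries must deliver overnight
    daily_solar_mwh: float  # total solar energy produced per day


def size_for_flat_load(load_mw: float, sun_hours: float,
                       round_trip_eff: float = 0.85) -> SolarStorageSizing:
    """Crude block model for serving a flat load with solar plus storage.

    Solar serves the load directly during sun hours and charges the batteries,
    which then cover every remaining hour. Ignores clouds, seasons, and
    multi-day weather events.
    """
    if not 0 < sun_hours < 24:
        raise ValueError("sun_hours must be between 0 and 24")
    dark_hours = 24.0 - sun_hours
    storage_mwh = load_mw * dark_hours
    charge_mwh = storage_mwh / round_trip_eff  # extra energy lost in charge/discharge
    solar_output_mw = load_mw + charge_mwh / sun_hours
    return SolarStorageSizing(solar_output_mw, storage_mwh, solar_output_mw * sun_hours)


if __name__ == "__main__":
    s = size_for_flat_load(load_mw=100.0, sun_hours=8.0)
    print(f"{s.solar_output_mw:.0f} MW of solar output, {s.storage_mwh:.0f} MWh of storage")
```

For a 100 MW flat load and eight sun hours, the model asks for about 335 MW of solar output during the day and 1,600 MWh of storage every night, before accounting for clouds, winter, or a multi-day weather event.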

The hard part is not just choosing a technology. It is packaging the plant as part of the cluster. Think shared heat exchangers, harmonized ramp rates, and a common control plane.

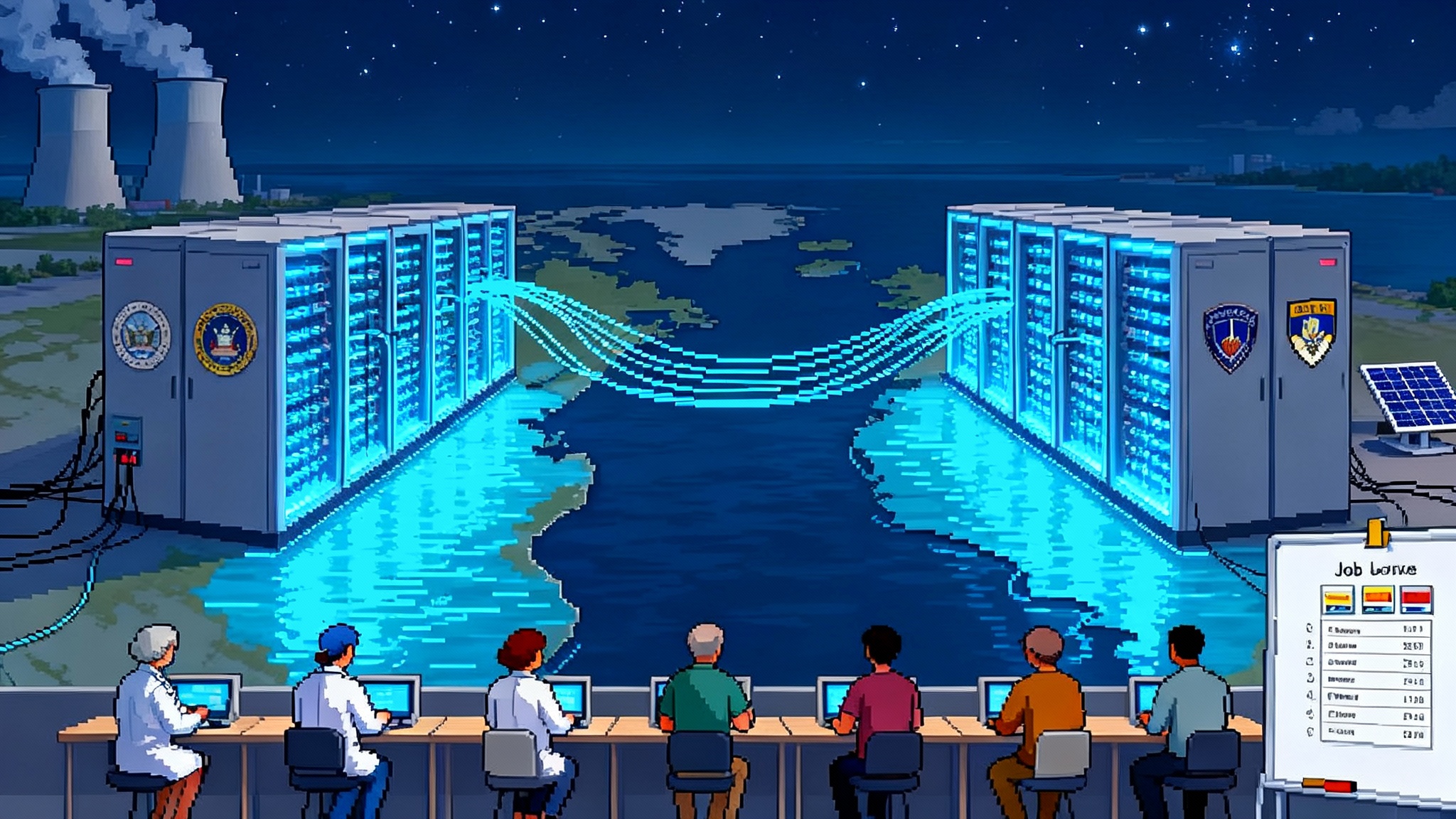

Modular nuclear and the return of always-on compute

The most decisive change is the return of always-on power as a first principle. Nuclear energy offers a high capacity factor, low marginal cost, and minimal land use. Three strands matter for AI builders.

- Uprates and life extensions of existing large reactors, often paired with next-generation grid services. These can back new campuses that anchor a regional clean power profile.

- Small modular reactors, factory-built units designed for faster deployment and standardized parts. Designs like the BWRX-300 small modular reactor aim to fit behind industrial meters and operate with passive safety features.

- Long-dated deals for advanced concepts, from reactors with integrated energy storage to early fusion purchase agreements that buy optionality on future supply.

If always-on clean power is the scarcest input for future intelligence, then long-term rights to that input become the deepest moat. A company with twenty years of contracted nuclear supply has a clearer path to predictable training schedules and defensible unit economics. Public sector efforts to create shared compute and energy footprints will also matter. See how governments are approaching this in governments building an AI commons.

Cooling becomes a thermodynamic co-processor

At 100-kilowatt racks and above, cooling stops being just a line on the utility bill and starts acting like a co-processor. Choices made in the thermal loop can add or lose meaningful percentage points of usable power.

- Direct-to-chip liquid cooling reduces parasitic fan energy and allows higher inlet temperatures. Higher temperatures enable free cooling for more hours of the year and even useful heat recovery in some climates.

- Two-phase immersion systems simplify hot-spot management and reduce maintenance at the server level. They also raise practical questions about fluid supply chains and long-term materials compatibility.

- Waste heat reuse moves from public relations to economics. District heat sales, greenhouse integration, or industrial process heat can turn a liability into a small revenue stream, especially in cold regions.
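How higher supply temperatures widen the free-cooling window can be checked with a short sketch. It assumes a dry cooler that can bring the loop down to about ambient plus a fixed 5 °C approach (a placeholder value) and uses a synthetic sinusoidal temperature year rather than real weather data.

```python
import math
from typing import Iterable


def free_cooling_hours(ambient_c: Iterable[float], supply_c: float,
                       approach_c: float = 5.0) -> int:
    """Count hours in which a dry cooler alone can hold the coolant supply setpoint.

    Free cooling is available whenever ambient + approach <= supply setpoint.
    """
    return sum(1 for t in ambient_c if t + approach_c <= supply_c)


def synthetic_year() -> list[float]:
    """Illustrative hourly temperatures: 14 C mean, +/-12 C seasonal, +/-5 C daily swing."""
    return [14 + 12 * math.sin(2 * math.pi * h / 8760) + 5 * math.sin(2 * math.pi * h / 24)
            for h in range(8760)]


if __name__ == "__main__":
    year = synthetic_year()
    for supply in (18.0, 27.0, 35.0):
        print(f"supply {supply:.0f} C: {free_cooling_hours(year, supply)} of 8760 hours")
```

Raising the supply setpoint is the lever direct-to-chip cooling unlocks; the count of qualifying hours can only grow as the setpoint rises.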

Thermal decisions must happen in the same room as workload scheduling and power procurement. Otherwise, the cheapest kilowatt gets squandered at the pump or rejected through a poorly tuned heat exchanger.

The energy operating system for AI

Treat energy as software, not just a bill. Call it an energy operating system for AI. It coordinates compute, cooling, and clean power in one control loop. A minimum viable version has six layers.

- Resource graph and contracts. Build a live model of everything that produces or consumes power, including grid interties, on-site plants, battery state of charge, transformers, chillers, and pumps. Bind the model to contracts: interconnection limits, power purchase agreements, tolling arrangements for on-site plants, and fuel supply all belong in the graph. The model must know what is firm and what is interruptible.

- Workload and thermal scheduler. Tie job submission to power availability and heat rejection capacity. A training run that needs three weeks of uninterrupted power should not start until the system can guarantee that window. The scheduler should understand both megawatts and megawatt-hours, along with the thermal headroom in the liquid loop.

- Market interface and dispatch. Expose bids and offers to wholesale markets where policy allows. Batteries may provide frequency response. Fuel for on-site plants can be hedged through forward purchases. During rare price spikes, the system can pause non-critical inference to preserve training schedules, or sell power back if contracts permit.

- Carbon and provenance ledger. Track emissions at hourly granularity and tie them to specific jobs. Use time-matched energy certificates where available. The ledger should be auditable and simple enough for a third party to confirm that a model was trained on clean power within defined tolerances.

- Risk and reliability engine. Model single points of failure and regional events. Keep spare transformers on site. Plan for cooling loop maintenance windows. Simulate what happens if the grid requests curtailment during a heat wave. Reliability becomes a property of the whole stack.

- Developer-facing abstractions. Expose energy-aware primitives in the platform. Examples include submit job with power guarantee, reserve megawatts, and request verifiable clean supply. Make energy constraints as explicit as storage or memory requests.
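The resource graph, the workload scheduler, and a reserve-megawatts primitive from the layers above can be sketched together. This is a minimal, hypothetical model, assuming each power source has a single capacity figure tagged firm or interruptible, that heat to reject roughly equals IT power drawn, and that a training run is admitted only if firm supply and cooling cover its load for every hour of its window. `PowerSource`, `Campus`, and `reserve_megawatts` are illustrative names, not an existing API.

```python
from dataclasses import dataclass, field


@dataclass
class PowerSource:
    name: str
    capacity_mw: float
    firm: bool  # True if contracted as firm; interruptible supply is never counted


@dataclass
class Campus:
    sources: list[PowerSource]
    heat_rejection_mw: float  # liquid-loop capacity; assumes heat out ~= IT power in
    reservations: dict[int, float] = field(default_factory=dict)  # hour index -> MW reserved
    jobs: dict[str, tuple[float, int, int]] = field(default_factory=dict)

    def firm_mw(self) -> float:
        """Sum of firm capacity only; interruptible sources are excluded."""
        return sum(s.capacity_mw for s in self.sources if s.firm)

    def headroom_mw(self, hour: int) -> float:
        """Unreserved capacity at `hour`, capped by firm power and heat rejection."""
        limit = min(self.firm_mw(), self.heat_rejection_mw)
        return limit - self.reservations.get(hour, 0.0)

    def reserve_megawatts(self, job: str, mw: float, start_hour: int, hours: int) -> bool:
        """All-or-nothing: reserve `mw` for every hour of the window, or reserve nothing."""
        window = range(start_hour, start_hour + hours)
        if any(self.headroom_mw(h) < mw for h in window):
            return False
        for h in window:
            self.reservations[h] = self.reservations.get(h, 0.0) + mw
        self.jobs[job] = (mw, start_hour, hours)
        return True


if __name__ == "__main__":
    campus = Campus(
        sources=[
            PowerSource("grid_firm", 80.0, firm=True),
            PowerSource("onsite_gas", 40.0, firm=True),
            PowerSource("solar", 30.0, firm=False),
        ],
        heat_rejection_mw=110.0,
    )
    three_weeks = 21 * 24
    print(campus.reserve_megawatts("train-a", 70.0, start_hour=0, hours=three_weeks))    # True
    print(campus.reserve_megawatts("train-b", 50.0, start_hour=100, hours=three_weeks))  # False
```

The second request fails because the first run already holds 70 of the 110 MW the cooling loop can reject, and the 30 MW of solar is never counted because it is interruptible. A production scheduler would also need sub-hour granularity, maintenance windows, and contract-specific curtailment rules.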

None of this needs exotic AI to begin. It needs strong telemetry, clear contracts, and authority to orchestrate across facilities, trading, and compute operations.
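In that spirit, the carbon and provenance ledger needs nothing more exotic than metered energy per job per hour and an hourly carbon intensity for the supply that served it. A minimal sketch, assuming those two inputs already exist in telemetry; `LedgerEntry` and `within_tolerance` are hypothetical names, and the sample values are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LedgerEntry:
    job: str
    hour: int                   # hour index, e.g. hours since an agreed UTC epoch
    energy_mwh: float           # metered energy attributed to the job in that hour
    intensity_g_per_kwh: float  # carbon intensity of the supply that served that hour


def tonnes(entry: LedgerEntry) -> float:
    # MWh * 1,000 kWh/MWh * g/kWh / 1,000,000 g/t  ==  MWh * g/kWh / 1,000
    return entry.energy_mwh * entry.intensity_g_per_kwh / 1000.0


def emissions_by_job(entries: list[LedgerEntry]) -> dict[str, float]:
    """Total tonnes of CO2e per job, summed hour by hour."""
    totals: defaultdict[str, float] = defaultdict(float)
    for e in entries:
        totals[e.job] += tonnes(e)
    return dict(totals)


def within_tolerance(entries: list[LedgerEntry], job: str, max_g_per_kwh: float) -> bool:
    """True if the job's energy-weighted average intensity is at or below the limit."""
    rows = [e for e in entries if e.job == job]
    total_mwh = sum(e.energy_mwh for e in rows)
    if total_mwh == 0:
        raise ValueError(f"no metered energy for job {job!r}")
    avg_g_per_kwh = sum(tonnes(e) for e in rows) * 1000.0 / total_mwh
    return avg_g_per_kwh <= max_g_per_kwh


if __name__ == "__main__":
    ledger = [
        LedgerEntry("train-a", 0, 50.0, 20.0),   # illustrative low-carbon hour
        LedgerEntry("train-a", 1, 50.0, 400.0),  # illustrative high-carbon hour
    ]
    print(emissions_by_job(ledger))                    # {'train-a': 21.0}
    print(within_tolerance(ledger, "train-a", 250.0))  # True (average is 210 g/kWh)
```

Because every entry is per job and per hour, a third party can recompute the totals from the same rows, which is the auditability property the ledger layer asks for.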

Measuring the new moat

If power becomes the moat, leaders will disclose new metrics. Expect to see these in investor decks and technical blogs.

- Megawatts reserved and energization dates by campus. The important number is not theoretical power. It is the energization date on the utility’s calendar.

- Hours of verifiably clean supply per year, with the certificate system used and the geography of matching. Annual averages hide the story. Hourly matching shows real commitment and resilience.

- Kilowatts per rack and power usage effectiveness (PUE) under load. PUE should be measured at realistic utilization, not in empty halls. For context, see power usage effectiveness explained.

- Thermal recovery factor, a measure of how much waste heat is put to productive use.

- Carbon intensity guarantees in grams of carbon dioxide equivalent per kilowatt hour, stated as a service level agreement where appropriate.
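Two of these metrics reduce to simple formulas. The sketch below assumes hourly metered series for load and contracted clean supply, and metered facility and IT energy taken at realistic utilization; the one-day example is illustrative, not data. It shows why annual averages hide the story: the same clean energy total scores 100% when netted over the period but only about a third when matched hour by hour.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness over a window: total facility energy / IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def annual_match(load_mwh: list[float], clean_mwh: list[float]) -> float:
    """Share of load 'covered' when clean supply is netted over the whole period."""
    return min(sum(clean_mwh), sum(load_mwh)) / sum(load_mwh)


def hourly_match(load_mwh: list[float], clean_mwh: list[float]) -> float:
    """Share of load met by clean supply in the same hour; surpluses do not carry over."""
    if len(load_mwh) != len(clean_mwh):
        raise ValueError("series must cover the same hours")
    matched = sum(min(load, clean) for load, clean in zip(load_mwh, clean_mwh))
    return matched / sum(load_mwh)


if __name__ == "__main__":
    load = [100.0] * 24                          # flat 100 MW load for one day
    clean = [0.0] * 8 + [300.0] * 8 + [0.0] * 8  # midday-only clean supply, same daily total
    print(f"period-netted match: {annual_match(load, clean):.0%}")  # 100%
    print(f"hourly match:        {hourly_match(load, clean):.0%}")  # 33%
    print(f"PUE: {pue(1_300.0, 1_000.0):.2f}")                      # 1.30
```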

With consistent metrics, buyers can compare energy platforms the way they compare storage tiers or network latency today.

Siting playbooks built around power, not just fiber

The classic checklist for a data center site looked at fiber routes, tax policy, and workforce. The new playbooks start with power physics.

- Interconnection posture. How long is the queue at the nearest substation, and what upgrades are needed? A good site has a clear path to initial energization and a plan for staged growth.

- Capacity factor of nearby generation. Siting near nuclear or high-capacity-factor hydro adds firmness. Wind and solar help on energy cost but require storage or on-site firm assets to support multi-week training.

- Water and heat rejection. If water is scarce, plan for dry coolers or hybrid towers and budget the land. Consider whether waste heat has a local buyer.

- Fuel logistics. For on-site combustion assets, secure fuel redundancy. Dual-fuel capability, local storage, and contracts with multiple suppliers mitigate interruptions.

- Community license. Long lived power assets require community trust. Engage early on noise, cooling tower plume visibility, traffic, and local hiring. Permitting speed and stability are part of the real levelized cost of electricity.

Evaluate sites as energy systems, not just land parcels. Bring transmission planners, thermal engineers, and market experts to the first visit, not the last.
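Part of this checklist can be encoded as a first-pass screen. A minimal sketch, assuming hypothetical per-site fields, a placeholder 50% firm-supply threshold, and a two-supplier minimum for combustion fuel; sites that pass are ordered by energization date, the number the checklist treats as decisive. Community license and permitting are left to human judgment here.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    months_to_energization: int  # from the utility's interconnection study
    firm_supply_share: float     # share of nearby generation that is firm (nuclear, hydro, ...)
    water_scarce: bool
    dry_cooling_budgeted: bool   # dry coolers or hybrid towers, including the land they need
    onsite_combustion: bool
    fuel_suppliers: int          # contracted fuel suppliers for on-site combustion assets


def passes_screen(site: Site, min_firm_share: float = 0.5) -> bool:
    """Hard gates drawn from the checklist; the thresholds are placeholders."""
    if site.water_scarce and not site.dry_cooling_budgeted:
        return False
    if site.onsite_combustion and site.fuel_suppliers < 2:
        return False
    return site.firm_supply_share >= min_firm_share


def rank_sites(sites: list[Site]) -> list[str]:
    """Drop sites that fail the gates, then order survivors by energization date."""
    survivors = [s for s in sites if passes_screen(s)]
    return [s.name for s in sorted(survivors, key=lambda s: s.months_to_energization)]
```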

Procurement that treats power like a product roadmap

Procurement teams can borrow from chip and capacity planning.

- Stage power the way you stage accelerators. Lock in the first 100 megawatts with firm delivery dates and build options for the next 400 megawatts. Tie option exercise to model milestones and demand.

- Blend contracts. Mix physical on-site generation with long-term power purchase agreements for nuclear or geothermal, and short-term hedges for price exposure. A portfolio approach smooths shocks and allows opportunistic growth.

- Prepay long-lead items. Transformers and high-voltage breakers are now strategic components. Hold safety stock the same way you hold networking gear.

- Write carbon and reliability into contracts. Ask for hourly matching, penalty clauses for missed delivery, and specific language for curtailment and outage handling.
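Staging power like accelerators can be expressed as a tranche plan: a firm first block plus options that convert to firm only when a model milestone is met and forecast demand still exceeds contracted capacity. A minimal sketch, assuming the 100 MW firm plus 400 MW of options structure described above, split into two hypothetical 200 MW options with made-up milestone names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tranche:
    mw: float
    firm: bool                       # True once delivery is locked in
    milestone: Optional[str] = None  # hypothetical model milestone that unlocks the option


def exercise_options(tranches: list[Tranche], milestones_met: set[str],
                     forecast_demand_mw: float) -> float:
    """Exercise options in order while demand exceeds contracted capacity.

    Mutates `tranches` (exercised options become firm) and returns contracted MW.
    """
    contracted = sum(t.mw for t in tranches if t.firm)
    for t in tranches:
        if contracted >= forecast_demand_mw:
            break
        if not t.firm and t.milestone in milestones_met:
            t.firm = True
            contracted += t.mw
    return contracted


if __name__ == "__main__":
    plan = [
        Tranche(100.0, firm=True),  # first 100 MW with a firm delivery date
        Tranche(200.0, firm=False, milestone="model-v2"),
        Tranche(200.0, firm=False, milestone="model-v3"),
    ]
    print(exercise_options(plan, {"model-v2"}, forecast_demand_mw=250.0))  # 300.0
```

The third tranche stays an option because the second already covers forecast demand, which is the point of tying exercise to milestones and demand rather than to the calendar.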

Power is no longer an operating expense managed quarter to quarter. It is a capital strategy that shapes the product roadmap for years. The macro implications of scarce compute and scarce power will also flow into finance and policy. For that lens, see how compute pushes up the neutral rate.

What to build next

There is an obvious opportunity for builders and founders to create the enabling layer for this era.

- Energy-native data center developers that deliver guaranteed megawatts alongside guaranteed latency to specific training clusters. Think of them as power-first cloud zones.

- Verification networks that issue cryptographic attestations tying compute jobs to clean power in time and location. Treat energy provenance the way we treat software supply chain security.

- Market facing schedulers that arbitrage workloads across campuses based on power and thermal headroom. If a heat wave stresses one region, shift jobs to a cooler site with firm nuclear supply.

- Cooling vendors that sell integrated thermal packages and expose simple programming interfaces for power aware scheduling.
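An attestation tying a compute job to clean power in time and location can be sketched with the Python standard library. This uses HMAC-SHA256 over a canonical JSON encoding of the claim as a stand-in; a real verification network would more likely use public-key signatures so third parties can verify without holding a shared secret. The claim fields, region name, and certificate IDs are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(claim: dict) -> bytes:
    """Stable byte encoding: sorted keys, no whitespace, so signer and verifier agree."""
    return json.dumps(claim, sort_keys=True, separators=(",", ":")).encode()


def attest(claim: dict, key: bytes) -> dict:
    """Return a copy of the claim plus an HMAC-SHA256 tag over its canonical encoding."""
    tag = hmac.new(key, _canonical(claim), hashlib.sha256).hexdigest()
    return {"claim": dict(claim), "tag": tag}


def verify(attestation: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, _canonical(attestation["claim"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, attestation["tag"])


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    claim = {
        "job": "train-a",
        "region": "example-region",        # hypothetical grid region identifier
        "hour_start": "2025-06-01T00:00Z",
        "hours": 24,
        "energy_mwh": 1680.0,
        "certificates": ["CERT-0001"],     # hypothetical time-matched certificate IDs
    }
    att = attest(claim, key)
    print(verify(att, key))              # True
    att["claim"]["energy_mwh"] = 100.0   # tampering with the claim...
    print(verify(att, key))              # ...breaks verification: False
```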

The buyers are clear. Cloud providers, model labs, and large enterprises building sovereign AI will all value guaranteed megawatts with traceable carbon attributes.
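The market-facing scheduler idea from the list above, shifting jobs away from a region under heat-wave stress, can be sketched as a placement rule. A minimal version, assuming each campus reports firm power headroom, thermal headroom that shrinks in hot weather, and a grid-stress flag; `CampusState` and `place_job` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CampusState:
    name: str
    firm_headroom_mw: float
    thermal_headroom_mw: float  # shrinks when hot ambient air limits heat rejection
    grid_stress: bool           # e.g. the utility has signalled curtailment risk


def usable_mw(c: CampusState) -> float:
    """A job can only use the tighter of the power and cooling limits."""
    return min(c.firm_headroom_mw, c.thermal_headroom_mw)


def place_job(job_mw: float, campuses: list[CampusState]) -> Optional[str]:
    """Pick the unstressed campus with the most usable headroom that fits the job."""
    candidates = [c for c in campuses if not c.grid_stress and usable_mw(c) >= job_mw]
    if not candidates:
        return None
    return max(candidates, key=usable_mw).name


if __name__ == "__main__":
    campuses = [
        CampusState("hot-region", 120.0, 30.0, grid_stress=True),
        CampusState("cool-nuclear", 60.0, 80.0, grid_stress=False),
        CampusState("mid-region", 50.0, 45.0, grid_stress=False),
    ]
    print(place_job(40.0, campuses))   # cool-nuclear
    print(place_job(100.0, campuses))  # None
```

The stressed region is skipped despite having the most firm headroom, because its cooling and grid signals say the capacity cannot be relied on.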

Risks and how to handle them

This turn is not free of friction. A few risks stand out, each with practical mitigations.

- Supply chain for big iron. Transformers, switchgear, and chillers remain long-lead items. Mitigation. Standardize on a small set of interchangeable models, prequalify multiple vendors, and hold buffer stock at regional depots.

- Regulatory uncertainty for advanced nuclear. Licensing paths are improving but still uncertain. Mitigation. Pair long-dated advanced deals with near-term firmness from uprates, life-extended reactors, and behind-the-meter generation.

- Water stress and community concerns. Large thermal systems can strain local resources. Mitigation. Prioritize dry or hybrid cooling where water is scarce, fund water saving projects locally, and design for heat reuse.

- Fuel price volatility. Gas and hydrogen markets can move. Mitigation. Mix fuel types, use long-term hedges, and maintain flexible plant configurations that can throttle without large efficiency penalties.

The goal is not to pretend these risks vanish. It is to make them explicit in the energy operating system and treat them like any other production constraint.

A new mental model for builders

If the grid is the platform, then AI builders are also energy developers. That does not mean everyone must own a power plant. It means every serious program needs energy operators at the table with the authority to shape model timelines, siting, and procurement. In many programs, energy, more often than code, will set the schedule and the budget.

The prize is large. A cluster with guaranteed megawatts, verifiably clean attributes, and a thermal loop tuned to its workloads will run more jobs, sooner, and at lower effective cost per unit of intelligence. It will turn power into a strategic asset rather than an invoice. It can do so in a way that neighboring communities can trust and verify.

The endgame

We used to say the internet was the computer. In the AI era, the grid is the platform. Teams that internalize that sentence will plan power like they plan silicon, treat cooling as a co-processor, and build a clean-power moat that compounds for decades. The next breakthroughs in intelligence will be written in code, but they will be paid for in electrons. The sooner we design around that truth, the faster we can build an abundant, reliable, and clean platform for thinking machines and for everyone who depends on them.