The Memory Threshold: After the Million Token Context

AI just crossed the memory threshold. With million token windows and hardware built for context, entire codebases, videos, and institutional archives now fit in working memory. Here is how to design for it.

The day the context window stopped being a window

On September 9, 2025, NVIDIA signaled a turning point when it announced Rubin CPX, a processor designed for massive context inference. The company described a system that targets million token workloads and long form video, packaged into the Vera Rubin NVL144 CPX rack with eye-catching memory bandwidth and scale. The specifics matter, but the strategic direction is even clearer. By designing a chip around context, not just generation, NVIDIA acknowledged that the bottleneck in artificial intelligence is shifting from model size to memory shape. You can read NVIDIA’s framing in its announcement of Rubin CPX for massive context inference.

Model providers made the same story impossible to ignore this year. On April 14, 2025, OpenAI released GPT-4.1 in its API with a context window of up to one million tokens and reported improvements in long context comprehension and multi-hop reasoning. For practical work, a million tokens means entire codebases, thick legal binders, and feature length video can sit in a single session. See how the company positioned it in its GPT-4.1 announcement. Other labs offer long context models of their own. The debate is no longer whether more context is useful. The question is how to make it routine.

From prediction to context native cognition

Large language models began as engines of prediction. Feed a short prompt, predict the next token, repeat. Retrieval augmented generation emerged as a practical patch. It was a way to stuff extra facts into the prompt, usually by pulling top ranked snippets from a vector database. That worked, but it was brittle. Each hop from query to chunk to paste introduced errors and latency.

When models can carry entire projects in working memory, the balance shifts. Systems move from model centric predictions to context native cognition, where the model holds enough of the world to reason inside it. The difference is like answering a question about a novel from a single quoted paragraph versus bringing the whole book into the exam hall. With million token memory, the system can keep the full codebase, the entire policy manual, or the full video in view, so cross references, rare edge cases, and long range dependencies stay within reach. The goal is not just recall. It is reasoning over the total context, like a lawyer with the entire discovery archive on the table.

The quiet collapse of retrieval and reasoning

When context is scarce, retrieval is a separate step. You chunk, you embed, you search, you rank, then you paste. Each step is an opportunity for drift. When context is abundant, retrieval becomes a memory organization problem inside the session. The model can attend across many files at once, hop between frames of a video, and maintain a mental map of a codebase. Tooling will still surface the right material, but the boundary blurs. Instead of choosing five chunks to paste, the question becomes how to stage a working set of hundreds of thousands of tokens, keep it warm, and route attention efficiently.
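For contrast, here is a deliberately minimal sketch of the scarce-context pipeline, under stated assumptions: a toy bag-of-words vector stands in for a learned embedding model, fixed word windows stand in for a real chunker, and the function names are hypothetical. It makes the fragile hop visible: anything that falls outside the top `k` chunks never reaches the model.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 50) -> list[str]:
    """Split text into fixed-size word windows (a common but lossy chunking strategy)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase term counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_and_paste(query: str, documents: list[str], k: int = 5, size: int = 50) -> str:
    """Chunk, embed, rank by similarity, then paste only the top-k chunks into a prompt."""
    chunks = [c for doc in documents for c in chunk(doc, size)]
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    context = "\n---\n".join(ranked[:k])
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "def charge(card): return gateway.pay(card)",
    "Refund policy: refunds are issued within 30 days.",
]
prompt = retrieve_and_paste("how does charge work", docs, k=1)
```

With `k=1` the refund policy is silently excluded; if a question had depended on both documents, the model would never have seen the second one.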

This is the architectural significance of Rubin CPX. NVIDIA splits inference into a context phase and a generation phase. The context phase is compute bound: the model ingests the full input and builds the attention state it needs to produce the first token, and intelligent caching and streaming decide what stays live. The generation phase is more sequential and bound by memory bandwidth, turning that understanding into tokens one at a time. At runtime this looks like a system that treats memory as a first class resource. In practice it means builders will plan around the cost of loading and retaining context, not only the size of model weights.
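A back-of-the-envelope calculation shows why retained context, not just weights, becomes the budget line. The sketch below uses the common size estimate for an uncompressed key/value attention cache; the model shape is hypothetical, and the estimate ignores cache quantization, paging, and sharing across requests.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Size of an uncompressed key/value cache for one sequence.

    The leading 2 counts keys and values; bytes_per_value=2 corresponds to fp16/bf16.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


# Hypothetical model shape: 80 layers, 8 KV heads (grouped-query attention), head_dim 128.
shape = dict(layers=80, kv_heads=8, head_dim=128)
per_token = kv_cache_bytes(1, **shape)
million = kv_cache_bytes(1_000_000, **shape)
print(per_token)                   # 327680 bytes, i.e. 320 KiB per token
print(f"{million / 1e9:.1f} GB")   # 327.7 GB for a single million-token sequence
```

At roughly a third of a megabyte per token, one million-token session in this configuration needs hundreds of gigabytes of attention state, which is why deciding what stays live matters as much as raw compute.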

Interfaces become archives

Product design will change even faster than the chips. The interface is no longer only a chat box or a code editor. It becomes an archive, a live memory graph. A few patterns will feel natural:

- Whole codebase copilots. The assistant does not ask you to open files. It already has them, including tests, historical diffs, and performance dashboards. It proposes a refactor plan that spans many services, links risks to prior incidents, and opens coordinated pull requests.

- Long form video workstations. An analyst loads a two hour earnings call along with slides and filings. The system flags contradictory claims with timestamps, aligns narration with on screen numbers, and answers follow up questions that depend on callbacks between the first and last thirty minutes.

- Institutional copilots for operations. A hospital agent ingests policies, protocols, and de-identified case histories. When a new case arrives, the agent traces recommendations to patient safety records, cites the relevant procedure, and flags conflicts with local law. Instead of reacting per request, it maintains a durable memory of how the institution actually operates.

Across these examples the product is not a thin wrapper over a model. It is a memory system with a conversation layer. Design shifts from prompts that say “do X with Y” to canvases that say “here is the living archive; now think and act.”

The engineering shift: treat memory as a budget

Engineers will start to track memory spend like they track compute spend. With million token prompts, the difference between a clean working set and a messy one is the difference between a minute of latency and a usable experience. Three practices are worth adopting now:

- Stage context, then stream. Pre-stage stable assets like code and policies. Stream volatile assets like logs and new user inputs. Use prompt caching to avoid paying for repeated prefix tokens. Treat cache invalidation as a first class event with tests.

- Build memory views, not monolithic prompts. Compose contexts from named views such as codebase core, dependency graph, incidents, and specification. Let the model switch views based on task. This yields more predictable behavior than concatenating arbitrary chunks.

- Make forgetting a feature. Give users an explicit control to evict or quarantine context at the object level. Push a memory diff that shows what was retained, what was dropped, and why. Persist this diff in an audit log.
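The first two practices can be combined in a small context assembler. This is a sketch under assumptions: `MemoryView`, `assemble`, and the view names are hypothetical, and the hash is for your own invalidation tracking and tests, not any provider's cache API. It places stable views first in a deterministic order, because provider prompt caches generally reuse only an identical prefix, and streams volatile views after them.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class MemoryView:
    name: str
    objects: dict[str, str]  # object id -> content
    stable: bool             # stable views are pre-staged; volatile views are streamed


def render(view: MemoryView) -> str:
    # Sort objects by id so identical content always renders to identical text.
    body = "\n".join(f"[{oid}]\n{text}" for oid, text in sorted(view.objects.items()))
    return f"## view: {view.name}\n{body}\n"


def assemble(views: list[MemoryView], task: str, selected: list[str]) -> tuple[str, str]:
    """Compose a prompt from named views: stable prefix first, volatile tail last.

    Returns (prompt, prefix_key). The key hashes only the stable prefix, so a changed
    key signals that any cached copy of the prefix is now invalid.
    """
    chosen = [v for v in views if v.name in selected]
    stable = sorted((v for v in chosen if v.stable), key=lambda v: v.name)
    volatile = [v for v in chosen if not v.stable]
    prefix = "".join(render(v) for v in stable)
    tail = "".join(render(v) for v in volatile)
    prefix_key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
    return prefix + tail + f"## task\n{task}\n", prefix_key


# Usage: a code-assistant task that needs the core code plus today's logs.
views = [
    MemoryView("codebase_core", {"app.py": "def main(): ..."}, stable=True),
    MemoryView("specification", {"spec.md": "Checkout must be idempotent."}, stable=True),
    MemoryView("logs", {"today.log": "ERROR timeout in checkout"}, stable=False),
]
prompt1, key1 = assemble(views, "Explain the timeout", ["codebase_core", "logs"])
views[2].objects["today.log"] = "ERROR retry storm in checkout"
prompt2, key2 = assemble(views, "Explain the retry storm", ["codebase_core", "logs"])
views[0].objects["app.py"] = "def main(): run_checkout()"
prompt3, key3 = assemble(views, "Explain the retry storm", ["codebase_core", "logs"])
```

Because the key covers only the stable prefix, new log lines leave it unchanged (`key1 == key2`), while editing a stable object produces a new key (`key3`). That change is the invalidation event worth asserting on in tests.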

The emergent discipline is memory engineering. It applies classic ideas like working sets and locality to modern tokens and attention.
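One classic way to apply working sets and locality to tokens is least-recently-used eviction under a token budget. The sketch below is an illustration, not a prescribed policy: `WorkingSet` is hypothetical, token counts are supplied by the caller, and LRU stands in for whatever eviction rule a real runtime uses. Each load, eviction, and block is recorded as a memory diff entry with a reason, ready to persist in an audit log, and user-initiated eviction can quarantine an object so it cannot silently return.

```python
from collections import OrderedDict


class WorkingSet:
    """Token-budgeted working set with least-recently-used (LRU) eviction.

    Every change is appended to `audit` as a memory diff entry (what happened, and why).
    """

    def __init__(self, budget_tokens: int):
        self.budget = budget_tokens
        self.items = OrderedDict()   # object id -> token count, least recently used first
        self.quarantined = set()
        self.audit = []

    def used(self) -> int:
        return sum(self.items.values())

    def touch(self, oid: str, tokens: int) -> None:
        """Load or reuse an object, then evict LRU objects until back within budget."""
        if oid in self.quarantined:
            self.audit.append({"op": "blocked", "id": oid, "why": "quarantined"})
            return
        if oid in self.items:
            self.items.move_to_end(oid)   # locality: recently used objects stay warm
        else:
            self.items[oid] = tokens
            self.audit.append({"op": "retained", "id": oid, "why": "loaded"})
        # The object just touched is last in the order, so it is never the victim.
        while self.used() > self.budget and len(self.items) > 1:
            victim, _ = self.items.popitem(last=False)
            self.audit.append({"op": "dropped", "id": victim, "why": "lru_over_budget"})

    def forget(self, oid: str, quarantine: bool = False) -> None:
        """User-initiated eviction; quarantine blocks the object from being reloaded."""
        if self.items.pop(oid, None) is not None:
            self.audit.append({"op": "dropped", "id": oid, "why": "user_evicted"})
        if quarantine:
            self.quarantined.add(oid)


ws = WorkingSet(budget_tokens=100)
ws.touch("policy.md", 60)
ws.touch("incident-42.md", 30)
ws.touch("policy.md", 60)        # reuse moves policy.md to the most recently used end
ws.touch("logs.txt", 40)         # 130 > 100 tokens, so incident-42.md is evicted
ws.forget("logs.txt", quarantine=True)
ws.touch("logs.txt", 40)         # blocked and logged
```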

Privacy grows teeth when memory persists

Many companies have tried to stay safe by prohibiting sensitive data in prompts. That will not scale when the value depends on keeping institutional archives warm. Long context turns privacy into an operational property, not only a policy. Consider three implications:

- Per object access control inside context. If an agent holds one million tokens, access must apply at the paragraph or file level, not just at the chat session level. Redaction should be structural, not a regular expression pass. A request for synthesis should include a verifiable list of which items were allowed.

- Context provenance by default. When an assistant gives an answer, it should provide a click through trail of the exact documents and code lines that shaped the reasoning. This is more than citing a source. It is a chain of custody that can be audited and disputed. Our piece on why content credentials win the web outlines the strategic payoff of trustworthy provenance.

- Rotation and quarantine for memory. Treat long lived context as a data store with retention classes. Some material should roll off after seven days. Some should be quarantined after a policy change. The runtime must enforce these rules in real time.
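Object-level filtering with a verifiable list of admitted items can be sketched in a few lines. Assumptions: `ContextObject`, group-based permissions, and the manifest layout are hypothetical, and a real system would resolve groups through its identity provider. The manifest records a content hash for every admitted object plus a digest over the whole list, so an auditor can later check exactly what a synthesis was allowed to see.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextObject:
    oid: str                       # object id at file or paragraph granularity
    text: str
    allowed_groups: frozenset      # groups permitted to read this object


def assemble_for_user(objects: list[ContextObject], user_groups: set) -> tuple[str, dict]:
    """Admit only objects the user may read; return the context and an auditable manifest."""
    allowed = [o for o in objects if o.allowed_groups & user_groups]
    context = "\n\n".join(f"[{o.oid}]\n{o.text}" for o in allowed)
    manifest = {
        "allowed": [
            {"id": o.oid, "sha256": hashlib.sha256(o.text.encode("utf-8")).hexdigest()}
            for o in allowed
        ],
        "denied_count": len(objects) - len(allowed),  # a count only, so denied ids do not leak
    }
    # Digest over the canonical manifest; store it server side so tampering is evident.
    manifest["digest"] = hashlib.sha256(
        json.dumps(manifest, sort_keys=True).encode("utf-8")
    ).hexdigest()
    return context, manifest


objects = [
    ContextObject("hr/salaries.csv", "name,salary\nalice,1", frozenset({"hr"})),
    ContextObject("eng/runbook.md#restart", "Restart the queue worker.", frozenset({"eng", "oncall"})),
]
context, manifest = assemble_for_user(objects, {"oncall"})
```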

Alignment that begins with the archive

Alignment debates often focus on model behavior. In a context native world, alignment begins with what the agent is allowed to remember. Changing the archive often changes behavior more predictably than changing the model. A practical playbook follows:

- Define admissible context. Specify the classes of documents and media the agent may ingest. Make the specification machine readable and enforceable. On violation, block ingestion and write a clear log event.

- Introduce memory unit tests. Before deployment, run suites that assert what the agent should never say given a defined context, and what it must always retrieve across long sessions. Fail fast when the working set violates expectations.

- Adopt explainable routing. When the agent selects which view to attend to, log the routing decision with a short natural language explanation. This makes troubleshooting and policy review tractable.
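A machine-readable admissibility spec and its enforcement point might look like the following. The spec fields, document classes, and source names are hypothetical examples; the point is that ingestion is blocked by code rather than by convention, and every block emits a structured log event.

```python
import json
import logging

log = logging.getLogger("ingestion")

# Hypothetical machine-readable spec (it could equally live in a versioned JSON file).
SPEC = json.loads("""
{
  "allowed_classes": ["policy", "procedure", "deidentified_case"],
  "allowed_sources": ["dms.internal", "git.internal"],
  "max_tokens_per_object": 50000
}
""")


def admit(obj: dict, spec: dict = SPEC) -> bool:
    """Return True if obj may be ingested; otherwise block it and log a structured event."""
    reasons = []
    if obj.get("class") not in spec["allowed_classes"]:
        reasons.append(f"class {obj.get('class')!r} is not admissible")
    if obj.get("source") not in spec["allowed_sources"]:
        reasons.append(f"source {obj.get('source')!r} is not admissible")
    if obj.get("tokens", 0) > spec["max_tokens_per_object"]:
        reasons.append("object exceeds the per-object token limit")
    if reasons:
        log.warning(json.dumps({"event": "ingestion_blocked", "id": obj.get("id"), "reasons": reasons}))
        return False
    return True


ok = admit({"id": "pol-7", "class": "policy", "source": "dms.internal", "tokens": 1_200})
blocked = admit({"id": "mail-3", "class": "raw_patient_record", "source": "email", "tokens": 90})
```

The same function doubles as a fixture for memory unit tests: a suite can assert that known-bad objects are rejected before any session starts.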

The context economy arrives

As memory becomes the product, moats, attacks, and norms shift.

New moats

- Proprietary archives. Licensing a model is table stakes. Owning the best archive for a vertical, such as a game studio’s assets or a bank’s procedures, becomes a defensible advantage. The barrier is less about parameter count and more about institutional memory.

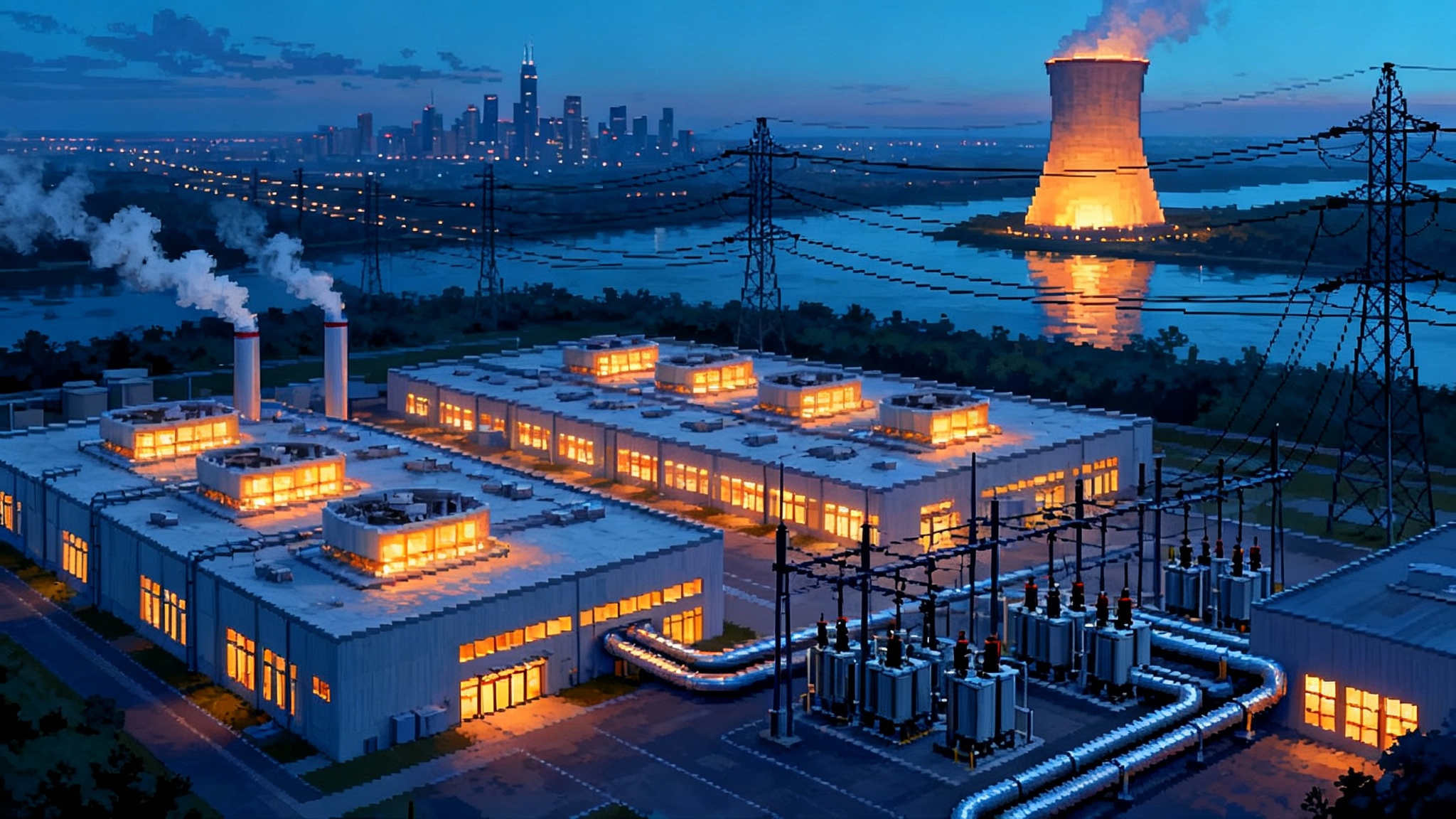

- Proximity to memory. Hosting where the archives live reduces bandwidth costs and cache miss penalties. Expect deals that tie inference to where the data sits, since cold starts are expensive at a million tokens.

- Memory orchestration stacks. Runtime layers that split context and generation, schedule caches, and enforce policy will accumulate know how, bug fixes, and partner integrations. These stacks will feel closer to modern databases than to libraries.

New attacks

- Context poisoning. Adversaries seed long lived archives with subtle mislabels or off by one instructions that only surface during cross file refactors or long form video edits.

- Cross session prompt injection. Malicious patterns hide in persistent memory, not only in a single web page. The agent carries the bait from an earlier conversation into a later task.

- Provenance spoofing. Attackers forge or replay provenance trails that look legitimate. Without cryptographic signing and server side checks, the agent may trust the wrong lineage.
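A minimal sketch of the server-side check, assuming a symmetric key held only by the server: each provenance trail is bound to its answer id and tagged with HMAC-SHA256 from Python's standard library, so a forged source list or a trail replayed onto a different answer fails verification. The key handling and field names are hypothetical; if third parties must verify trails without the key, an asymmetric signature scheme such as Ed25519 (from a separate library) is the usual substitute.

```python
import hashlib
import hmac
import json

# Hypothetical key; in practice load it from a secrets manager and rotate it.
SERVER_KEY = b"example-key-do-not-use-in-production"


def _canonical(payload: dict) -> bytes:
    # Stable encoding so the same trail always produces the same bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_trail(answer_id: str, sources: list[dict], key: bytes = SERVER_KEY) -> dict:
    """Bind the source list to one answer id and attach an HMAC-SHA256 tag."""
    payload = {"answer_id": answer_id, "sources": sources}
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {**payload, "tag": tag}


def verify_trail(trail: dict, key: bytes = SERVER_KEY) -> bool:
    """Server-side check: recompute the tag and compare in constant time."""
    payload = {k: v for k, v in trail.items() if k != "tag"}
    expected = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, str(trail.get("tag", "")))


trail = sign_trail("ans-001", [{"doc": "billing/service.py", "lines": "120-148"}])
forged = {**trail, "sources": [{"doc": "notes/attacker.md", "lines": "1-9"}]}
replayed = {**trail, "answer_id": "ans-002"}
```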

New norms

- Memory transparency. Users will expect a panel that shows what the agent currently holds, how old it is, and where it came from. This becomes the new settings page.

- Object level consent. Teams will grant consent at the repository, folder, and paragraph level. Blanket consent for a whole tenant will be seen as sloppy.

- Memory budgets in contracts. Service level agreements will include guaranteed warm context size and cache hit ratios, not just latency and uptime.

What Rubin CPX really signals

The Rubin CPX announcement matters for a simple reason: it validates context as a workload class. NVIDIA described hardware acceleration for attention and video IO inside the same chip, and a rack scale design in which the context phase can be scaled and orchestrated separately from generation. Availability is targeted for the end of 2026, which gives builders a little over a year to get their memory houses in order. The better your memory engineering is today, the more you can exploit specialized hardware tomorrow. For broader platform dynamics, see how ChatGPT becomes the new OS, a shift that shapes developer choices dependent on context locality.

There is also a strategic metric hiding in plain sight. If vendors start to price and market retained context, customers will benchmark not just tokens per second but useful tokens per dollar of retained context. That pushes product roadmaps toward reducing unnecessary memory churn and maximizing cache reuse across tasks. It also rewards architectures that run closer to the data. Our analysis of why the grid becomes AI's bottleneck explains why proximity and power planning will shape memory heavy deployments.

Builders’ checklist for the context native era

If you are building agents, tools, or platforms, you can act now. Here is a concrete checklist to adopt in the next quarter:

- Implement named memory views for your largest use case. For a code assistant, define core, dependencies, incidents, and specification. For a legal assistant, define filings, evidence, rulings, and commentary. Teach your runtime to switch views automatically.

- Add a memory panel to your interface. Show current working set size, age distribution, and provenance badges. Provide a one click purge per object and a quarantine toggle.

- Enforce object level access controls. Integrate with your identity provider so that context assembly respects document level permissions. Block answers that would require unauthorized objects, and log the block with a reason.

- Adopt prompt caching and prefix staging. Measure cache hit rates and time to first token for large contexts. Treat regressions as blockers for release.

- Ship a provenance trail for long context answers. Provide a compact, clickable set of sources the user can expand. Store this trail server side for audits and incident response.

- Write memory unit tests. Run them as part of continuous integration. Include tests that stress long range cross references, such as function calls across services or callbacks across video chapters.

- Prepare for poisoning. Build detectors for anomalous context insertions, such as commits or documents that introduce silent globals or inconsistent policy phrases. Gate high risk changes behind review, and rehearse incident playbooks.

- Plan retention by class. Decide what is hot, warm, or cold, and wire your runtime to rotate and evict accordingly. Document how eviction affects repeatability of results and searchability of past work.
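Retention by class reduces to a small, testable function. In this sketch the tier thresholds (including the seven-day warm window) and the `Entry` type are hypothetical. What a runtime does with each tier (keep in context, page to a cache, evict) is left to the caller, and quarantine always wins over recency.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention policy: maximum idle time allowed in each tier.
MAX_IDLE = {"hot": timedelta(hours=1), "warm": timedelta(days=7)}


@dataclass
class Entry:
    oid: str
    last_used: datetime
    quarantined: bool = False  # e.g. set after a policy change


def tier(entry: Entry, now: datetime) -> str:
    """Classify an entry as quarantined, hot, warm, or cold."""
    if entry.quarantined:
        return "quarantined"          # quarantine always wins over recency
    idle = now - entry.last_used
    if idle <= MAX_IDLE["hot"]:
        return "hot"
    if idle <= MAX_IDLE["warm"]:
        return "warm"
    return "cold"                     # idle longer than seven days: roll off


def rotate(entries: list[Entry], now: datetime) -> dict[str, list[str]]:
    """Group object ids by tier so the runtime can keep, demote, or evict them."""
    plan: dict[str, list[str]] = {"hot": [], "warm": [], "cold": [], "quarantined": []}
    for e in entries:
        plan[tier(e, now)].append(e.oid)
    return plan


now = datetime(2025, 10, 1, 12, 0, tzinfo=timezone.utc)
entries = [
    Entry("repo/core", now - timedelta(minutes=10)),
    Entry("incidents/q3", now - timedelta(days=2)),
    Entry("logs/august", now - timedelta(days=30)),
    Entry("policy/old-consent", now - timedelta(minutes=5), quarantined=True),
]
plan = rotate(entries, now)
```

Keeping the policy in one table makes eviction deterministic, which helps with the repeatability concern above: replaying the same entries at the same `now` always yields the same plan.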

Law and strategy will follow the memory

Regulators and courts will focus on archives because that is where value and risk concentrate.

- Data portability for memory. Expect pressure to let customers export their working sets and provenance trails, not just chat histories. Vendors that support clean export will gain trust and simplify procurement.

- Discovery and audits. In litigation or compliance reviews, the ability to reconstruct what the agent knew at a specific time will matter. Keep immutable snapshots of long running sessions for high stakes workflows.

- Sector specific norms. In finance and health, memory policies will become part of certification. Organizations will publish what their agents remember by default and how they forget by design.

For strategy teams, the central question is where you want your memory to live. Centralization simplifies security and orchestration. Federation respects local control and reduces blast radius. Hybrid patterns will emerge, but the principle is the same. Move slow data toward the runtime, or move the runtime toward slow data. Either way, context gravity rules.

A note on costs and latency

Long context changes user experience. Time to first token grows as context grows, which is acceptable for batch analysis and workable for many editing tasks, but challenging for tight interactive loops. Product teams should design progressive disclosure. Start with a quick skim over a small view, then expand to deep analysis as the user leans in. The model does not need the entire archive for every keystroke. It needs the right view for the current step, then a plan to escalate.

On the cost side, real gains come from cache discipline. Treat prompts like code. Factor out shared prefixes, measure reuse, and delete dead context. Many teams will find that the largest savings do not come from a cheaper model, but from better memory hygiene that avoids paying repeatedly to load the same assets.
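The arithmetic behind that claim is easy to check. The function below estimates input cost for a session that resends the same large prefix on every turn, with and without prefix caching. All prices are hypothetical placeholders, output tokens and any cache write surcharge are ignored, and it assumes every turn after the first hits the cache.

```python
def session_cost(prefix_tokens: int, turn_tokens: int, turns: int,
                 price_per_mtok: float, cached_price_per_mtok: float, cache: bool) -> float:
    """Input cost in dollars; prices are per million input tokens (hypothetical)."""
    if cache:
        # First turn pays full price for the prefix; later turns pay the cached rate.
        full_price_tokens = prefix_tokens + turn_tokens * turns
        cached_tokens = prefix_tokens * (turns - 1)
    else:
        # Every turn pays full price for the whole prefix again.
        full_price_tokens = (prefix_tokens + turn_tokens) * turns
        cached_tokens = 0
    return (full_price_tokens * price_per_mtok + cached_tokens * cached_price_per_mtok) / 1_000_000


args = dict(prefix_tokens=800_000, turn_tokens=2_000, turns=20,
            price_per_mtok=2.00, cached_price_per_mtok=0.50)
no_cache = session_cost(**args, cache=False)
with_cache = session_cost(**args, cache=True)
print(f"{no_cache:.2f} vs {with_cache:.2f}")  # 32.08 vs 9.28
```

Under these placeholder prices, caching cuts the session's input bill by roughly 70 percent without touching the model, which is the sense in which memory hygiene beats a cheaper model.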

The next default

The industry’s first default was model centric. Then came retrieval centric, where indexes and embeddings carried the load. The next default is context native. You will know you have crossed the memory threshold when your agent can sit down with a million tokens of your world and feel at home. At that point, the best products will not be those with the largest models. They will be the ones with the most disciplined memory.

Rubin CPX names the hardware bet, GPT-4.1 showed the window can stretch, and the rest is now in the hands of builders who treat memory like the product it has become. The boundary between retrieval and reasoning is collapsing into a single act: think on what you hold. That is a simplification for users and a responsibility for designers. The next advantage belongs to teams that curate the right archives, shape them into working sets, and make them safe to remember. The context economy has begun, and it will reward those who think in memory.