Sovereign Cognition and the New Cognitive Mercantilism

States are beginning to treat models, weights, and safety policies as tradable goods. See how sovereign cognition, model passports, and export grade evaluations will reshape AI governance, procurement, and cross border deployment.

The week foreign policy learned to speak in logits

Artificial intelligence has moved from a policy sidebar to the main stage of statecraft. In a matter of months, model providers and ministries began negotiating not just price and performance but where inference happens, who holds the keys, and how much visibility a regulator gets into logs and governor settings. Major platforms have reported takedowns of state linked misuse networks probing model boundaries at scale. In parallel, legislators and standards bodies are shaping compliance regimes for deployment. Put together, these are not isolated signals. They are the opening moves of cognitive mercantilism.

Cognitive mercantilism treats models, weights, safety policies, and evaluation artifacts as tradable and sanctionable goods. Think of it as a mental shipping manifest. Instead of steel coils and crude oil, the cargo is a 70 billion parameter checkpoint, a safety governor profile, a set of watermarking keys, and a signed red team scorecard. Foreign ministries are learning to care about hash values, reproducible builds, and inference logs because these artifacts define who controls the flow of reasoning capacity across borders.

This shift sits alongside the regulatory push already underway in many capitals, including the European Union’s evolving framework under the EU AI Act. The policy conversation is widening from training and data to deployment, transparency, and remedy. The question is no longer only how big a model is. It is where it runs, how it is governed, and whose laws it operationalizes by default.

From chips to cognition

For the last five years, AI geopolitics focused on compute supply, data access, and software talent. That logic remains sound. Whoever produces the most advanced chips, collects the richest datasets, and trains the most capable models influences the digital economy. What is changing is the policy lens. Governments are moving from inputs to outputs, from capability to control.

Energy is the closest analogy. Early policy builds power plants and pipelines. Mature policy prioritizes grid stability, demand response, and interconnection protocols. AI has reached that second phase. Nations are prioritizing placement of inference, runtime integrity, and interop standards that let workloads fail over across vendors without losing safety posture. Domestic investments in shared compute and public sector adoption are becoming part of industrial policy, echoing the thesis that governments are building an AI commons.

What sovereign cognition really means

Sovereign cognition is the idea that a country should be able to provision, direct, and verify the reasoning capacity that touches its core functions, even when that capacity comes from foreign firms. It is not isolationism and it is not an argument to halt progress. It is a design requirement for resilience and accountability.

There are three practical layers to sovereign cognition:

- Compute and runtime control. Placement of inference, failover options across clouds and on premises, enclave isolation, and deterministic build pipelines for the serving stack.

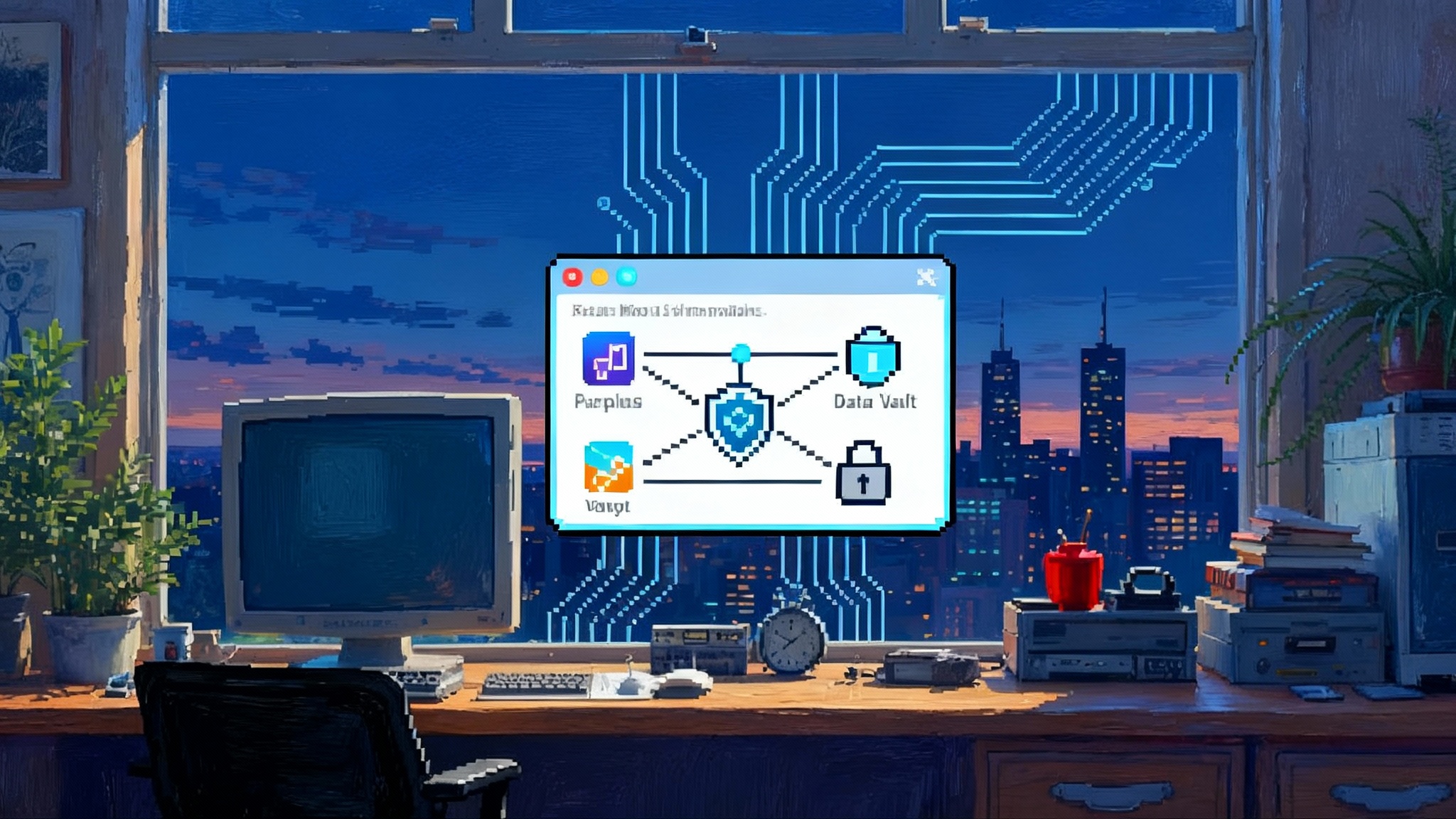

- Model and safety control. Access to weights or robust weight escrow, tunable safety governors, clear permissions for domain fine tuning, and documented override procedures under emergency law.

- Observability and recourse. Verifiable logs, bounded audit windows, and fast paths to remedy harmful behavior or contest outputs in regulated workflows.

If a ministry or a critical enterprise cannot answer who can modify the model, who can see the prompts, and how to prove what was run and why, then it does not possess sovereign cognition.

The new tradables: weights, governors, and guarantees

What exactly is being traded in cognitive mercantilism? Four classes of assets are moving from developer lore to foreign policy instruments.

- Weights and weight access. Model weights are the crystallized product of training. They can be open, licensed, escrowed, or kept proprietary. Expect export categories that differentiate between fully open weights, time delayed releases, and sealed models with supervised access only.

- Safety governors. Governors are policy modules that sit between users and models. They encode identity, context, and constraints. They decide whether a model can explain a chemical synthesis step, how it phrases a geopolitical claim, or whether it may call tools on behalf of a minor. Governors will be traded, audited, and sanctioned separately from base models.

- Evaluation suites and attestations. Third party evaluations are becoming passports. Attestations summarize test coverage and results, allowing a receiving regulator to understand what was measured, with what reproducibility, and under what red teaming.

- Deployment guarantees. Signed binaries, reproducible builds, runtime isolation, and content provenance settings are the shipping containers and seals that make models safe to move at scale.

When a government signs a memorandum of understanding to deploy a foreign model in public services, it is increasingly negotiating all four classes at once. Price matters, but so do knobs, logs, and keys.
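To make the governor idea concrete, here is a minimal sketch of a policy module that sits between a request and a model. Everything in it is an assumption made for illustration: the `RequestContext` fields, the topic labels, the placeholder jurisdiction codes `XA` and `XB`, and the rule order are hypothetical and do not describe any vendor's actual governor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    user_is_minor: bool
    jurisdiction: str       # placeholder code such as "XA" (hypothetical)
    topic: str              # hypothetical label from an upstream classifier
    wants_tool_call: bool


@dataclass(frozen=True)
class Decision:
    allow: bool
    reason: str


# Hypothetical shared safety floor: refused in every jurisdiction.
SAFETY_FLOOR_BLOCKED = {"explosives_synthesis", "pathogen_enhancement"}

# Hypothetical local restrictions layered on top of the floor.
LOCAL_BLOCKED = {
    "XA": {"gambling_promotion"},
    "XB": set(),
}


def govern(ctx: RequestContext) -> Decision:
    """Check the shared floor first, then local policy, then tool use."""
    if ctx.topic in SAFETY_FLOOR_BLOCKED:
        return Decision(False, "safety_floor")
    if ctx.topic in LOCAL_BLOCKED.get(ctx.jurisdiction, set()):
        return Decision(False, "local_policy")
    if ctx.wants_tool_call and ctx.user_is_minor:
        return Decision(False, "tool_call_for_minor")
    return Decision(True, "allowed")
```

Because the floor and the local rules live in separate tables, a regulator could audit or sanction one without touching the other, which is the property the governor bullet above depends on.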

A philosophical break with universalism

For a decade, alignment discourse leaned toward universal rules for safe and helpful AI. The instinct was noble. If everyone agrees that systems should be honest and harmless, the job is to formalize those definitions and train toward them. Reality is messier. Harmlessness in one country may include stringent filters on politically sensitive topics. Harmlessness in another may require robust support for protest guidance and investigative reporting. Truthfulness in a multilingual society demands nuance about contested history.

We are witnessing a pivot from universalist alignment to culturally scoped value systems. The question is not whether a model should help a teenager build explosives. The answer is no everywhere. The question is how the model should respond when asked about reproductive rights, a disputed territorial claim, or the bounds of satire. The practical path is plural governance with shared safety floors, not a monoculture. A model can honor a country’s speech laws and educational standards while maintaining a high baseline of misuse resistance.

Forecast: passports, export grade evaluations, and model diplomacy

Over the next year, three concrete mechanisms are likely to move from pilot to policy.

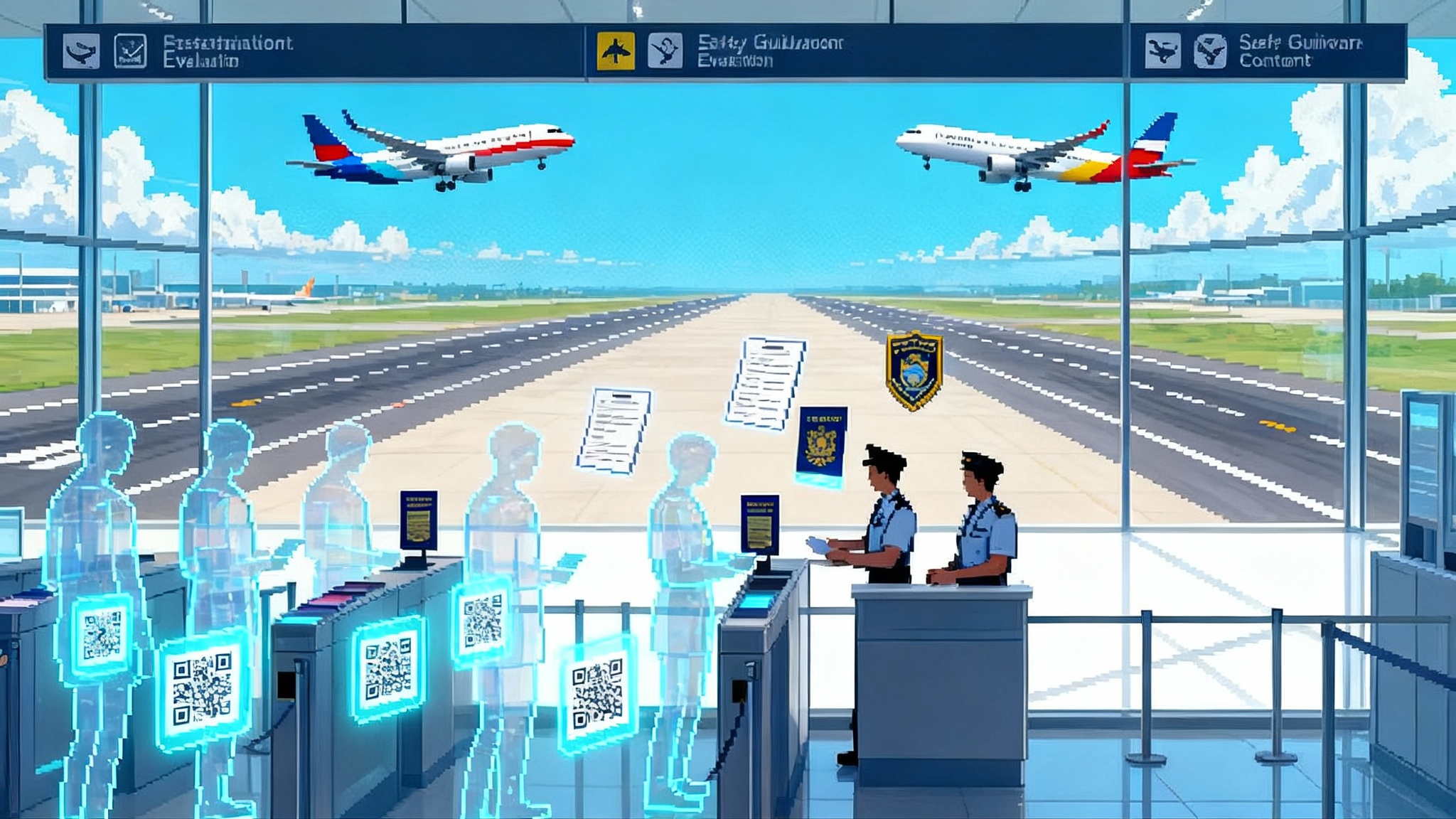

- Model passports. A passport is a cryptographically signed profile that travels with the model. It contains a versioned hash of the weights, a dependency manifest for the serving stack, a safety governor bill of materials, a privacy and logging policy, a training data provenance summary, and a red team scorecard. Passports make compliance legible and reduce the temptation to lean on marketing claims.

- Export grade evaluations. Like medical devices that face different test regimes for domestic sale versus export, frontier and sector models will face export grade evaluations. They will include stress tests for social targeting, critical infrastructure misuse, and biological or chemical decision support. Thresholds, reproducibility requirements, and sealed test sandboxes will become standard, influenced by guidance such as the NIST AI Risk Management Framework.

- Model diplomacy protocols. Countries will establish standing channels to negotiate model behavior for cross border deployments. Think hotline procedures for misbehavior, joint incident exercises, and protocols for emergency governor updates.

These are not hypotheticals. They are the natural next steps once models enter procurement, trade, and security portfolios.
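As a rough illustration of what a model passport could look like in practice, the sketch below hashes a weights blob, embeds the hash in a profile, and signs the canonical JSON form. The field names and helper functions are hypothetical. One loud assumption: it uses an HMAC with a shared key only to stay inside the Python standard library. A real passport would more plausibly use an asymmetric signature so that a receiving regulator can verify without holding the signing key.

```python
import hashlib
import hmac
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_passport(weights: bytes, fields: dict) -> dict:
    """Copy the descriptive fields and bind them to the weights by hash."""
    passport = dict(fields)
    passport["weights_sha256"] = sha256_hex(weights)
    return passport


def canonical(passport: dict) -> bytes:
    # Sorted keys and fixed separators give a stable byte string to sign.
    return json.dumps(passport, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_passport(passport: dict, key: bytes) -> str:
    # Assumption: shared-key HMAC stands in for a real public-key signature.
    return hmac.new(key, canonical(passport), hashlib.sha256).hexdigest()


def verify_passport(passport: dict, signature: str, key: bytes, weights: bytes) -> bool:
    """True only if the profile is unmodified and matches these weights."""
    sig_ok = hmac.compare_digest(sign_passport(passport, key), signature)
    weights_ok = hmac.compare_digest(
        passport.get("weights_sha256", ""), sha256_hex(weights)
    )
    return sig_ok and weights_ok
```

A receiving party would call `verify_passport` with the weights it was actually shipped. Changing a single field in the profile, or a single byte in the weights, makes verification fail.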

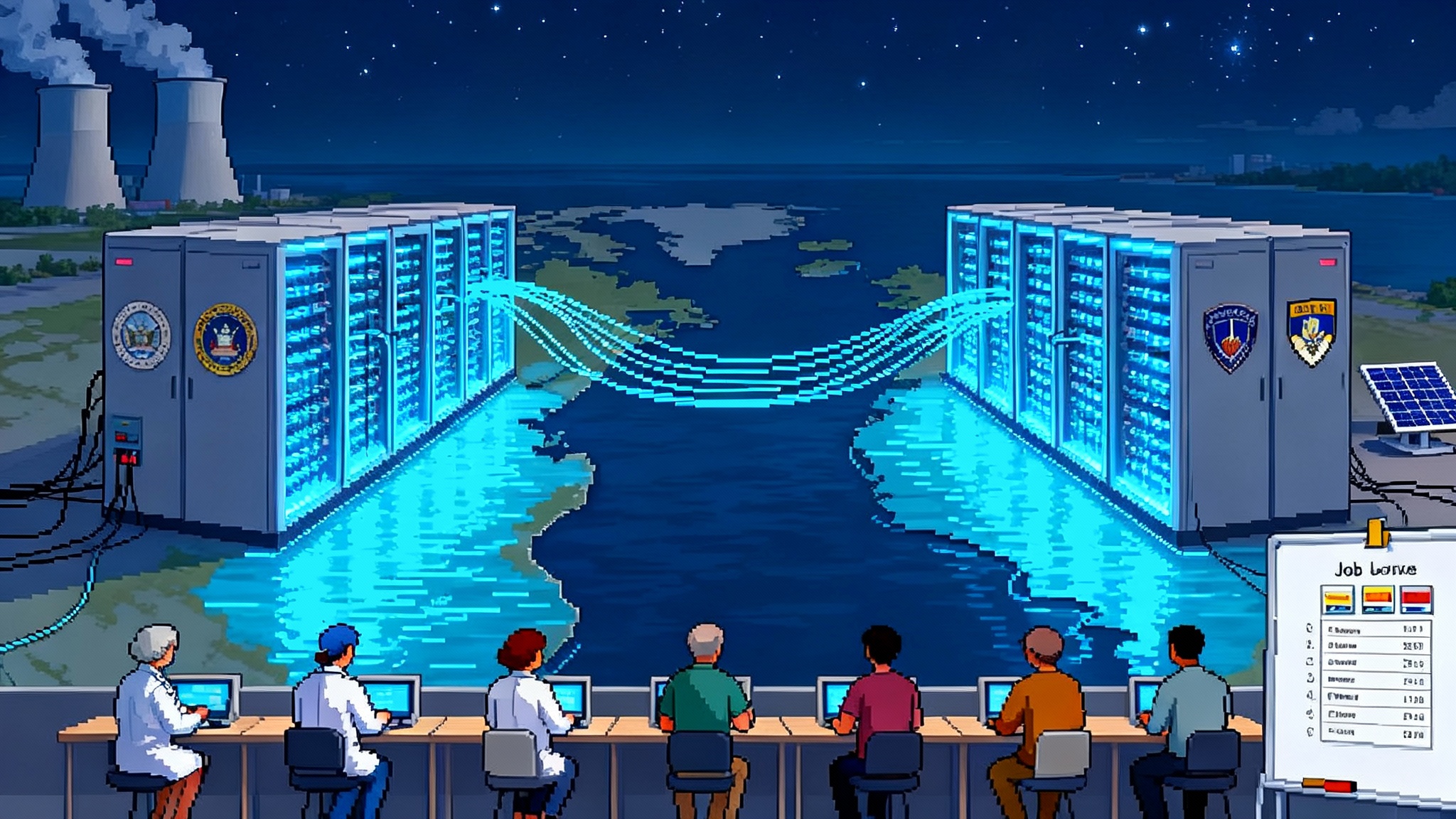

Interoperability or a fractured internet of minds

The world faced a similar choice when the internet left research labs. We could have built many incompatible networks. Instead, we set shared standards for routing and addressing. We need the same spirit for cognition. Without interoperability, we get a patchwork of model silos that punish multinational businesses, slow research collaboration, and erode public trust.

A practical interoperability stack would include:

- Identity and consent protocols for agents. Agents act with tools on a user’s behalf. They need portable identity, scoped permissions, and revocation. Identity should travel with the agent so it can interoperate across vendors without privilege creep. For an architectural deep dive on consent primitives, see how the consent layer is coming.

- Content provenance that survives translation and paraphrase. The point is not to label everything as AI generated. The point is to make it possible to validate origins when it matters, such as elections, medical advice, and emergency information. Watermarks and signatures must be robust to benign edits.

- Standardized safety governor interfaces. If a school district switches model providers, it should carry its policies along without a costly rewrite. A shared schema for prompts, rubrics, and override procedures would make safety portable.

- Verifiable runtime attestations. When a regulator or a customer asks what model was used, the provider should produce an attestation, not a guess. Attestations must be cheap to generate and difficult to forge.
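One way to picture a standardized governor interface is a provider neutral policy bundle plus thin adapters for each vendor. The sketch below assumes a hypothetical `PolicyBundle` schema and two invented vendor formats, `to_vendor_a` and `to_vendor_b`. No real provider is known to accept these shapes. The point is only that the customer's policy is written once and translated mechanically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyBundle:
    """Hypothetical provider-neutral policy a customer owns and carries."""
    name: str
    blocked_topics: tuple
    override_contacts: tuple

    def validate(self) -> None:
        if not self.name:
            raise ValueError("bundle needs a name")
        if not self.override_contacts:
            raise ValueError("at least one override contact is required")


def to_vendor_a(bundle: PolicyBundle) -> dict:
    # Invented format: flat deny list plus an escalation list.
    bundle.validate()
    return {
        "policy_name": bundle.name,
        "deny_topics": sorted(bundle.blocked_topics),
        "escalation": list(bundle.override_contacts),
    }


def to_vendor_b(bundle: PolicyBundle) -> dict:
    # Invented format: one rule object per topic.
    bundle.validate()
    return {
        "id": bundle.name,
        "rules": [
            {"topic": topic, "action": "block"}
            for topic in sorted(bundle.blocked_topics)
        ],
        "override": {"contacts": list(bundle.override_contacts)},
    }
```

In this arrangement a school district that switches providers regenerates its configuration from the same bundle instead of rewriting policy by hand.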

If we move quickly, we keep a single global conversation while honoring local law. If we wait, market power and political pressure will lock in incompatible defaults.

Compete hard, coordinate smart

An accelerationist stance does not mean closing our eyes to risk. It means building the rails for speed and safety at the same time. Here is a concrete playbook.

For governments

- Tie funding to adoption. Grants for domestic compute and model development should be paired with procurement targets for schools, clinics, and agencies. The goal is a flywheel where public demand shapes domestic supply.

- Require passports for public use models. Make model passports mandatory for any system used in public services. Start with a light profile, then tighten over time. Publish the templates so the private sector can align.

- Create bilateral model diplomacy tracks. Add model behavior and interoperability to existing trade and digital policy dialogues. Run joint incident response exercises twice a year.

- Calibrate export controls to access. Instead of blanket bans, differentiate between open weights, escrowed weights, and sealed inference. Align controls with misuse pathways demonstrated in tests, and document them against the NIST AI Risk Management Framework.

For companies

- Productize safety governors. Treat governors as first class, testable, auditable components. Offer customers migration tools that let them carry policies across products.

- Ship attestation by default. If your platform cannot tell a customer what ran, you are one incident away from a trust crisis. Invest in tamper evident logs and simple, human readable certificates.

- Publish red team scorecards. General claims about safety are not persuasive. Summarize top failure modes, the conditions under which they appear, and the fixes you shipped. Invite replication and make settings reproducible, building on lessons from how the observer effect hits AI.

- Build for dual deployment. Deliver every major capability in a sealed cloud configuration and a controlled on premises configuration. Design for feature parity across both to avoid lock in resentment.
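For the attestation item above, a hash chain is one common way to make a log tamper evident. The sketch below is a minimal illustration, not a production design. The record fields are hypothetical, and it assumes the latest hash is periodically published somewhere the operator cannot quietly rewrite, since a chain on its own only shows that history is internally consistent.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record."""
    payload = prev_hash + json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, record: dict) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log: list) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

Each record might carry the model version and governor profile that served a request, so that answering the question of what ran becomes a lookup rather than a reconstruction.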

For platforms and civil society

- Increase transparency cadence. Takedown reports for state linked abuse are valuable, but the latency is often months. Create a rolling feed that gives trusted researchers and election authorities early warnings without naming victims.

- Unbundle content policy and safety. Let communities and countries select policy bundles for legal and cultural content moderation while you maintain a consistent safety floor for misuse resistance.

- Fund independent evaluation communities. Evaluations cannot be a vendor sport. Underwrite diverse teams that probe models for propaganda amplification, social engineering, and sector harm. Make results portable and reproducible.

The risk map, without hand waving

- Balkanized defaults. If each country trains a domestic model with idiosyncratic interfaces, cross border services will slow to a crawl. Interoperability standards reduce this risk.

- Compliance theater. Vendors may game evaluations to win passports without meaningful change. Randomized audits and attestation spot checks are the antidote.

- Weaponized safety. Governments may demand governors that suppress lawful dissent. The countermeasure is procedural transparency and a public record of governor changes in public deployments.

- Supply chain attacks on weights. Theft or subtle tampering with weight files would undermine entire product lines. Weight signing, secure distribution channels, and deterministic serving pipelines are essential.

- Overreach in export controls. One size fits all bans could push innovation underground and deprive democratic allies of needed capacity. Graduated controls tied to access modes and misuse metrics are safer and more enforceable.

Naming these risks is not an argument to slow down. It is an argument to pick high leverage mitigations and measure whether they work.

Metrics that actually matter

The next phase needs crisp metrics that capture the health of a cognitive economy.

- Time to origin attestation. How long it takes a provider to show what model, version, and governor produced a contested output in a public service.

- Cross jurisdiction failover drills. Whether a ministry can move a workload across clouds or from cloud to domestic hardware in less than a weekend with preserved safety settings and audit trails.

- Reproducible evaluations. Whether independent teams can reproduce headline evaluation results within a defined tolerance. Reproducibility is the antidote to evaluation theater.

- Governor portability score. How many policy bundles a customer can migrate without custom engineering. Portability reveals lock in and market power.

- Misuse lag. The time between the emergence of a new misuse technique and its detection and mitigation in the wild. The goal is to drive this toward days, not months.

These numbers will become the performance indicators of sovereign cognition. They are hard to fake and easy to compare.
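Three of these metrics reduce to simple arithmetic, sketched below. The function names are hypothetical, and two choices are assumptions beyond the definitions above: the portability score is normalized to a fraction of bundles rather than a raw count, and reproducibility is treated as an absolute difference against a stated tolerance.

```python
from datetime import datetime


def misuse_lag_days(emerged: datetime, mitigated: datetime) -> float:
    """Days between a misuse technique emerging and its mitigation."""
    return (mitigated - emerged).total_seconds() / 86400


def portability_score(migrated_without_custom_work: int, total_bundles: int) -> float:
    """Fraction of policy bundles that moved with no custom engineering."""
    if total_bundles <= 0:
        raise ValueError("total_bundles must be positive")
    return migrated_without_custom_work / total_bundles


def reproduced(headline: float, replication: float, tolerance: float) -> bool:
    """True if an independent result lands within the agreed tolerance."""
    return abs(headline - replication) <= tolerance
```

The same time difference used for misuse lag would also serve for time to origin attestation, measured in hours rather than days.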

The emerging art of model diplomacy

Imagine neighboring countries that share a power grid and a broadcast market. One deploys a foreign model to help agencies write benefits letters in multiple languages. The other adopts a domestic model for emergency messaging. Both need to exchange content in real time during floods and wildfires. Now imagine that one model refuses to repeat a phrase the other considers civil rights language. The friction is not hypothetical. It is inevitable.

Model diplomacy offers a path through. Before deployment, the countries exchange passport templates and agree on a shared safety floor. They run joint drills where each side probes the other’s sealed instance under observation. They set up a hotline staffed by senior engineers and policy leads who can authorize temporary governor changes during emergencies. They agree to publish a post incident report whenever the hotline is used, with technical and policy details. Finally, they codify a right to audit, limited in scope and time, when model behavior in one country has cross border consequences in the other.

This is the difference between hoping for alignment and engineering for it.

Accelerate the rails, not the fog

We are entering a period where nations will buy, sell, license, and sanction cognition like they once did steel and ships. That is not a crisis. It is a recognition of reality. The choice is whether cognitive mercantilism fragments the public square or is channeled into healthy competition on capability, reliability, and accountability.

The accelerationist move is to build interoperability and verification layers now. Make model passports routine. Make export grade evaluations meaningful and reproducible. Stand up model diplomacy so that disagreements have procedures, not only press releases. Compete hard on performance and efficiency. Compete even harder on how verifiable, portable, and governable your systems are.

If we do, a teacher in Barcelona, a nurse in Milwaukee, and a city manager in Nairobi can benefit from distinct models that still talk to each other, respect local law, and reveal what they did when questioned. That is not a lowest common denominator. It is a higher standard for shared progress.

The internet taught us that compatibility beats uniformity. Sovereign cognition can teach us that pluralism beats monoculture when paired with verification. Build the rails, measure what matters, and let the best minds, human and machine, meet in the open.