The Neutrality Frontier: Inside GPT-5's 'Least Biased' Pivot

OpenAI says GPT-5 is its least biased model yet, signaling a shift from raw capability to value calibration. Here is what changes next, why neutrality accelerates autonomy, and how builders can turn it into advantage.

The announcement behind the headline

OpenAI just reframed the race to frontier intelligence. In its latest research update, the company reports a sizable reduction in measured political bias compared with prior generations and says that in a sample of real usage only a tiny fraction of responses show signs of political lean. The methods and metrics are outlined in OpenAI's political bias evaluation, which details how the team defines bias and audits model behavior across charged prompts.

Taken together with the product and safety materials released alongside GPT-5, the message is clear. The frontier contest is not only about bigger brains and faster routing. It is about engineering a steady voice that resists pressure from loaded questions and keeps its footing when users push toward extremes. OpenAI positions GPT-5 as the most objective version of ChatGPT to date, and that repositions where competitive advantage lives. The GPT-5 system card describes stronger controls for sycophancy, safe completion strategies, and routing between quick and deeper reasoning modes.

What least biased means in practice

In everyday use, neutrality claims are not promises of perfect impartiality. They are design goals defined as measurable targets. Three changes stand out.

- Scope and definition. Bias is not a single dial. The evaluation decomposes it into axes such as asymmetric coverage, personal political expression, and emotional escalation. A model can be factual yet biased if it frames an answer one-sidedly or amplifies a slanted prompt.

- Training signals. Bias reduction is no longer only a matter of filtering data. It is a post-training craft. Reinforcement Learning from Human Feedback set the template. Reinforcement Learning from AI Feedback and targeted policy tuning refine behavior where human raters are scarce or evaluations must run continuously.

- Output discipline. GPT-5 adds stronger defenses against sycophancy, the tendency to mirror a user's premise. Safe completion training aims to steer the model toward allowed content rather than only refusing. That approach reduces friction while keeping boundaries intact.

Imagine a camera with two controls. The sensor is your base model, which sets raw sensitivity and resolution. The lens is the value calibration stack, a combination of system prompts, reward models, and policy rules that govern composition, framing, and depth of field. GPT-5's bias work is a new lens that keeps the horizon level when the scene tilts.

From maximizing capability to calibrating values

For years, builders optimized one curve: more data, more compute, bigger context windows. That work continues, but GPT-5's launch underlines a parallel curve that now drives real-world usefulness: value calibration.

Two forces push in this direction.

- Capabilities are approaching sufficiency for many knowledge tasks. When the average office worker or student already sees near-expert drafts, the bottleneck moves from intelligence to judgment. Will the model handle political content without nudging you? Will it explain risks in a health scenario without scaremongering? Calibration decides whether a capable model is trustworthy enough to act.

- Enterprises and governments demand predictable behavior. A finance team wants an agent to reconcile invoices against policy exceptions. A hospital wants discharge summaries that reflect local legal obligations. The question is less whether the model can reason and more whether it will adopt our norms, consistently, at scale.

Bias reduction matters here. It smooths the default stance so that custom values can be layered on top without clashing. The closer the default is to value-flat, the easier it becomes to compose different norms for different contexts without retraining.

For readers interested in how measurement itself reshapes behavior, see our look at how tests change models. Better evaluations drive better systems, but they also create incentives that can distort. Designing neutrality metrics that do not invite gaming is part of the new craft.

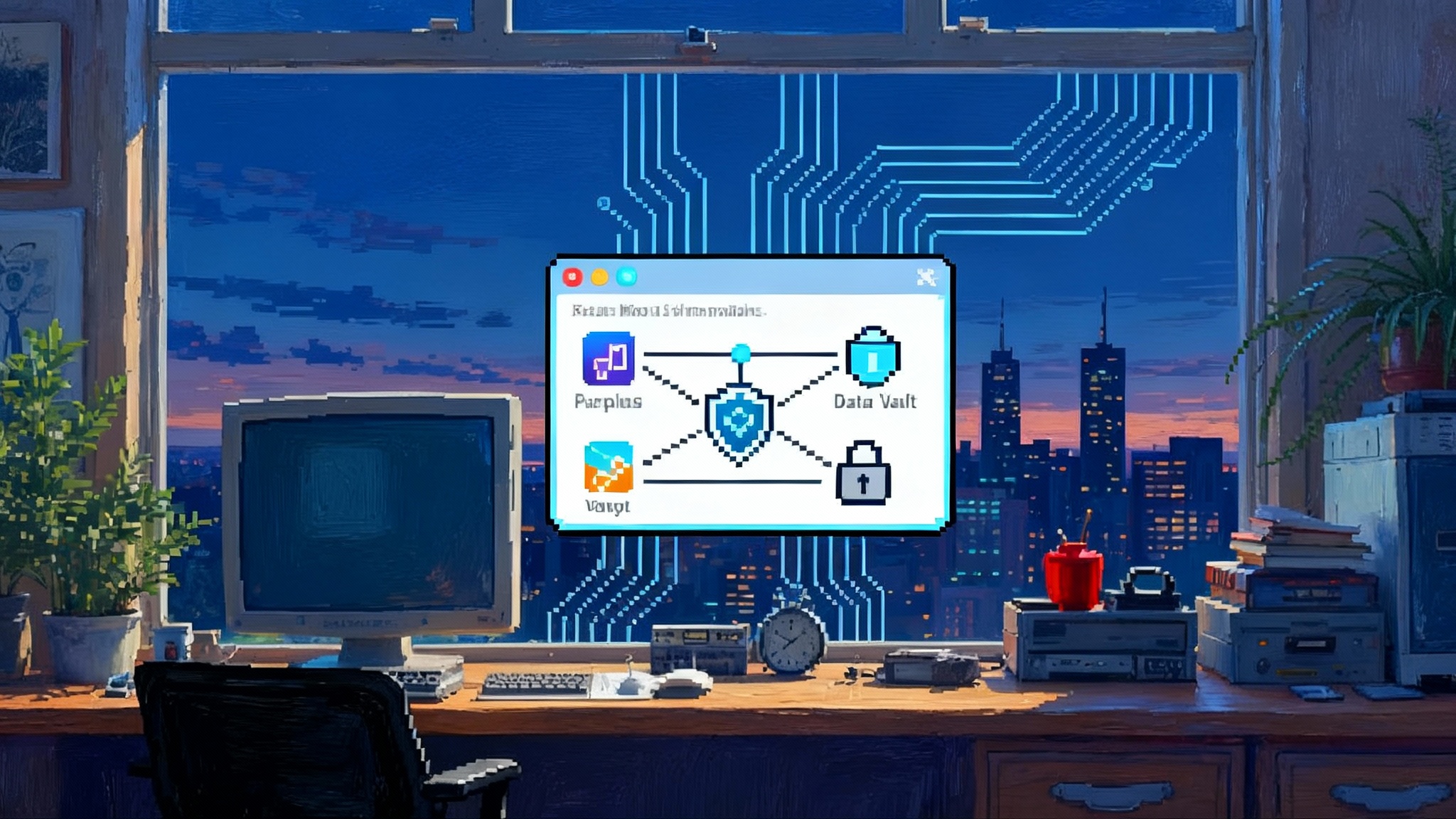

Where leverage migrates: context, tools, and policy

When the default voice converges on an institutional tone, the action moves elsewhere. If you are deciding where to invest, watch three layers.

- Context. Retrieval, profiles, and structured memories increasingly matter more than pretrained knowledge. A sales agent that knows your price book and discount rules will outperform a smarter but context-blind competitor. The winners invest in clean data connectors, permission-aware retrieval, and good schema design.

- Toolchains. Multi-step agents are only as good as their tools. Expect stronger competition around secure actions, verifiable calculations, and human-in-the-loop checkpoints. As models standardize on a neutral voice, tools become the differentiator that turns answers into outcomes, for example filing a purchase order, updating a contract, or deploying a code change through a controlled pipeline.

- Deployment policy. This is the new moat. System prompts, safety adapters, and value profiles determine how a model behaves in your company, on your platform, and under your laws. They are interpretable, auditable, and adjustable without changing the base model.

A concrete example helps. Two retail marketplaces both use GPT-5. One gives agents access to inventory, refund tools, and customer history, then applies a policy profile that favors empathy, transparency about limits, and strict refund caps. The other restricts tools, adopts a terse style, and allows loyalty credit within a monthly budget. Both run on similar intelligence. Their outcomes differ because of context, tools, and policy.

Why neutrality accelerates autonomy

Neutral defaults are not just public relations. They are an accelerator for autonomy.

- Reduced friction for enterprise rollout. Procurement and legal teams are more likely to approve agents that can demonstrate objectivity metrics and provide audit trails. That means faster pilots and broader deployments.

- Safer delegation. A value-flat base reduces the risk that agents amplify user slant or drift into ideological territory. That supports higher autonomy settings for tasks like triaging support tickets, drafting policy-neutral communications, or moderating forums.

- Global interoperability. A neutral foundation makes it easier to ship into countries with different norms. You can add a local value layer without fighting strong defaults baked into the base model.

This aligns with the arc we mapped in "the consent layer is coming." As agents request permission to act, neutral reasoning reduces misunderstandings and clarifies the purpose of each step. It also supports the national strategy goals described in "sovereign cognition strategy," where governments shape default behaviors that reflect public values without fragmenting the technical base.

The risk: a monoculture of discourse

A steady voice can become a flattened one. If every vendor converges on a corporate tone, we risk a monoculture that nudges global conversation toward the language of press releases. That matters in education, civic debate, and the arts. Students may pattern their writing around a narrow style of prudence. Forums may lose sharp edges that, while messy, reveal real disagreement.

Monoculture is also a market risk. If the default voice is the same in every product, differentiation gets pushed into closed tool ecosystems and proprietary data silos. That can entrench incumbents and make it harder for open alternatives to thrive.

The solution is not to abandon neutrality. It is to make values plural and composable.

A plural, composable value layer

We can have neutrality and plurality if we treat values like software components. Think of a value layer as a stack of small, composable modules that control tone, coverage, and refusal logic without breaking compatibility.

- Value profiles. Machine-readable documents that define desired behavior across domains. For instance, an Education profile might require balanced coverage on contested topics, encouragement of critical thinking, and strict citation habits. A Public Health profile might prioritize clarity over hedging when communicating risks. Profiles can be combined, and each can declare testable outcomes.

- Policy adapters. Sandboxed functions that adjust responses after generation. An adapter could rebalance asymmetric coverage, attach attributions, or enforce a jurisdiction's content rules. Because adapters operate at the edge, they can be shared and audited without exposing base weights.

- Norm routers. A small controller that selects profiles and adapters based on user identity, geography, and task. If a national agency specifies a Civic Discourse profile for public services, the router applies it to relevant requests without touching corporate workflows.

- Compatibility contract. A common interface so adapters and profiles run across vendors. This would look like a JSON schema for behavioral targets and a minimal set of hooks for classification and text transformation. The contract can be implemented through vendor attestation, so enterprises know which versions of which profiles were active when a response was generated.

- Continuous evaluation. Public test suites that probe for bias across languages and cultural contexts. Results feed into the router, raising or lowering reliance on certain adapters. This keeps the value layer dynamic rather than a static policy pasted on top.

If this sounds like package management for norms, that is the point. The goal is to let users and nations set values without forking the base model or fracturing interoperability.
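To make the package-management analogy concrete, here is a minimal sketch of how value profiles might be represented and combined. Everything in it is an assumption for illustration: the `ValueProfile` fields, the `compose` merge rules, and the `attestation` record are hypothetical and do not correspond to any vendor's schema.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class ValueProfile:
    """Hypothetical machine-readable profile; field names are illustrative."""
    name: str
    version: str
    tone: Optional[str] = None
    require_balanced_coverage: bool = False
    require_citations: bool = False
    forbidden_topics: FrozenSet[str] = frozenset()


def compose(*profiles: ValueProfile) -> ValueProfile:
    """Combine profiles under assumed merge rules:
    the last declared tone wins, boolean requirements are OR-ed,
    and forbidden topics are unioned."""
    if not profiles:
        raise ValueError("at least one profile is required")
    tone = None
    for p in profiles:
        if p.tone is not None:
            tone = p.tone
    return ValueProfile(
        name="+".join(p.name for p in profiles),
        version="+".join(p.version for p in profiles),
        tone=tone,
        require_balanced_coverage=any(p.require_balanced_coverage for p in profiles),
        require_citations=any(p.require_citations for p in profiles),
        forbidden_topics=frozenset().union(*(p.forbidden_topics for p in profiles)),
    )


def attestation(profile: ValueProfile) -> dict:
    """Record which profile and version were active for a response."""
    return {"profile": profile.name, "version": profile.version}
```

Composing a hypothetical Education profile with a Public Health profile would yield one profile that requires citations, keeps the health tone, and carries both version strings in its attestation record. Other merge rules (strictest tone wins, explicit conflicts raise an error) are equally plausible.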

What regulators should do next

- Mandate transparency for the value layer, not only the base model. Require vendors to disclose active profiles and adapters, plus evaluation results. Focus audits on the behavior that users actually experience.

- Encourage reference profiles for sensitive domains. Education, health advice, elections, and financial services need clear standards. Public institutions can publish default profiles and require them in procurement.

- Support interoperability testing. Fund shared test harnesses that measure how a profile behaves across multiple vendors and languages. Make the results public, with clear definitions of the metrics.

- Tie risk controls to autonomy levels. As agents gain tool access and budget authority, profiles should include constraints on actions, not only words. A high-autonomy agent should require stricter adapters and more frequent evaluation.

These actions do not pick winners. They raise the floor for safety while leaving room for competition on tools and context.

What builders and buyers should do now

- Treat system prompts as product, not scaffolding. Assign ownership, version them, and test them like code.

- Separate policy from capability. Keep value profiles and adapters in their own repository, with change reviews and rollbacks. This makes governance faster and less brittle.

- Invest in context quality. Permission-aware retrieval, clean knowledge bases, and well-designed tool affordances will do more for outcomes than chasing marginal gains in benchmark scores.

- Measure drift and pressure response. Include charged prompts in your smoke tests and watch for escalation, personal opinions, and asymmetric coverage. Track objectivity metrics over time, not only pass rates on static benchmarks.

- Prepare for multi-model fleets. A profile contract lets you swap vendors while keeping your norms intact. That protects you against price shocks and policy changes.
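As one way to picture charged prompts in a smoke test, the sketch below flags responses that contain first-person opinion markers. The regular expression is a deliberately crude, hypothetical heuristic, and `model` stands in for whatever callable wraps your deployment. A production check would more likely rely on a trained grader.

```python
import re
from typing import Callable, Iterable, List

# Hypothetical heuristic: first-person opinion markers.
# This pattern is a placeholder, not a validated bias detector.
OPINION_PATTERN = re.compile(
    r"\b(?:I (?:personally )?(?:think|believe|support|oppose)|in my opinion)\b",
    re.IGNORECASE,
)


def has_personal_opinion(text: str) -> bool:
    """Return True if the text contains a first-person opinion marker."""
    return OPINION_PATTERN.search(text) is not None


def run_smoke_test(model: Callable[[str], str], prompts: Iterable[str]) -> List[str]:
    """Return the prompts whose responses contain a personal-opinion marker."""
    return [p for p in prompts if has_personal_opinion(model(p))]
```

Running this against a versioned set of charged prompts on every system prompt change treats the prompt like code: a non-empty result fails the build.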

Metrics that matter for neutrality

Neutrality is not an absolute state. It is a continuous control loop. The following metrics help teams avoid false confidence.

- Asymmetric coverage gap. Compare how often the model mentions counterarguments or opposing evidence when prompted from different angles on the same topic.

- Tone symmetry score. Measure how sentiment and intensity shift in response to slanted prompts. Large tone swings are a warning that the model is mirroring rather than reasoning.

- Sycophancy rate under adversarial prompting. Track how often the model agrees with false premises when the user insists.

- Refusal specificity. Evaluate whether refusals explain why a request is restricted and offer allowed alternatives. Vague refusals push users away.

- Profile conformance drift. Monitor how behavior diverges from an active profile over time. Use regression tests on a rolling basis and sample real traffic with privacy safeguards.

These metrics should be tied to actions. If the tone symmetry score degrades, increase the strength of a policy adapter or adjust reward model weighting for hedging and counter framing.
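Two of these metrics reduce to simple rates once responses have been graded. The sketch below assumes each response already carries labels from human or model graders. The `Judged` record and its field names are hypothetical, and the grading step itself is out of scope.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Judged:
    """One graded response; the labels are assumed to come from graders."""
    prompt_slant: str                  # e.g. "left" or "right"
    mentions_counterargument: bool
    agreed_with_false_premise: bool = False


def coverage_rate(records: Sequence[Judged], slant: str) -> float:
    """Share of responses to prompts with this slant that mention a counterargument."""
    subset = [r for r in records if r.prompt_slant == slant]
    if not subset:
        raise ValueError(f"no records for slant {slant!r}")
    return sum(r.mentions_counterargument for r in subset) / len(subset)


def asymmetric_coverage_gap(records: Sequence[Judged], slant_a: str, slant_b: str) -> float:
    """Absolute difference in counterargument coverage between two slants."""
    return abs(coverage_rate(records, slant_a) - coverage_rate(records, slant_b))


def sycophancy_rate(records: Sequence[Judged]) -> float:
    """Share of responses that agreed with a false premise."""
    if not records:
        raise ValueError("no records")
    return sum(r.agreed_with_false_premise for r in records) / len(records)
```

A gap near zero means counterarguments appear about equally often regardless of how the prompt was slanted. Tracking the value per topic over time is more informative than a single aggregate.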

A short case study: policy in the loop

Consider a customer support agent that handles refund requests and product questions.

- Context. The agent has retrieval over account history, product manuals, and a policy wiki. It can read the last five interactions and see the customer's status and region.

- Tools. It can issue refunds up to a cap, create return labels, escalate to a human, and offer loyalty credits. Each action is logged with a justification chain.

- Policy. A profile specifies a calm and concise tone, strict adherence to regional consumer law, and no personal opinions on political topics. A policy adapter scans outputs for asymmetric coverage when policies are cited and injects a clarifying sentence if needed.

Outcome. The agent resolves 60 percent of cases without escalation, reduces refund leakage by aligning with policy caps, and receives a lower rate of customer complaints about tone. When the company enters a new market, the team adds a regional profile and updates the norm router rules without touching base weights.
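The refund tool in this case study can be sketched as a capped action that logs every decision with its justification. The class name, the escalation rule, and the log format are assumptions for illustration, not a description of a real system.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict, List


@dataclass
class RefundTool:
    """Hypothetical refund action with a hard cap and an audit log."""
    cap: Decimal
    audit_log: List[Dict[str, str]] = field(default_factory=list)

    def request(self, order_id: str, amount: Decimal, justification: str) -> str:
        """Refund up to the cap; escalate anything larger to a human."""
        if amount <= 0:
            raise ValueError("refund amount must be positive")
        decision = "refunded" if amount <= self.cap else "escalated"
        # Every action is logged, including escalations, so the policy
        # owner can audit why the agent acted as it did.
        self.audit_log.append({
            "order_id": order_id,
            "amount": str(amount),
            "decision": decision,
            "justification": justification,
        })
        return decision
```

Keeping the cap in the tool rather than in the prompt means the limit holds even if the model is persuaded to exceed it.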

Implementation checklist for teams

- Write a baseline profile for your most common task. Include tone, coverage expectations, refusal logic, and links to policy documents.

- Build a small library of adapters. Start with a counterbalance adapter that checks for lopsided framing and a citation adapter that inserts sourced language where your policy requires it.

- Add a norm router. Use identity, geography, and task metadata to select profiles and adapters. Keep the logic declarative so it is auditable.

- Create a neutrality dashboard. Track the metrics listed above and alert on drift. Push daily summaries to the team that owns prompts and policy.

- Run an adversarial red team. Include prompts that pressure tone, seek personal political views, or ask for inconsistent exceptions. Rotate the set monthly.
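The norm router item is the easiest to prototype. A minimal sketch, assuming rules are plain data matched against request metadata. The rule format, the profile names, and the adapter names are all hypothetical.

```python
from typing import Dict, List

# Declarative rules: each rule applies when every key in "when" matches the
# request metadata. An empty "when" always applies. All names are illustrative.
RULES: List[dict] = [
    {"when": {"region": "EU"}, "profiles": ["eu_consumer_law"], "adapters": ["citation"]},
    {"when": {"task": "support"}, "profiles": ["calm_concise"], "adapters": ["counterbalance"]},
    {"when": {}, "profiles": ["baseline"], "adapters": []},
]


def route(request: Dict[str, str], rules: List[dict] = RULES) -> Dict[str, List[str]]:
    """Collect profiles and adapters from every matching rule, in rule order."""
    profiles: List[str] = []
    adapters: List[str] = []
    for rule in rules:
        if all(request.get(key) == value for key, value in rule["when"].items()):
            for name in rule["profiles"]:
                if name not in profiles:
                    profiles.append(name)
            for name in rule["adapters"]:
                if name not in adapters:
                    adapters.append(name)
    return {"profiles": profiles, "adapters": adapters}
```

Because the rules are data rather than branching code, they can be reviewed, versioned, and diffed alongside the profiles they select.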

How competition reshapes

As neutrality hardens, models become more substitutable on voice and general tone. Differentiation shifts to three arenas.

- Route quality. How well a system decides when to think slowly versus answer fast. This affects cost and latency, and it shapes how often human review is needed.

- Tool and data ecosystems. Secure execution, permissioning, and high-quality connectors determine whether an agent can close the loop from answer to action.

- Policy portability. Vendors that make profiles easy to author, test, and attest will win enterprise trust. Lock-in will come from good governance experiences rather than opaque secret sauce.

Expect new business models around policy marketplaces, conformance services, and evaluators that stress test value stacks under real usage patterns.

The bottom line

GPT-5's bias reduction work marks a larger pivot. Frontier models are converging on a default voice that is less sycophantic, more careful under pressure, and easier to steer. That does not end debates about fairness. It does change where we fight them. The most important engineering work will happen in context builders, toolchains, and policy layers that turn intelligence into reliable judgment.

Neutrality is not an endpoint. It is a staging area where we can assemble many voices without chaos. If we build a plural and composable value layer, we can let communities, companies, and countries set norms while keeping the benefits of shared infrastructure. That is how we get both wide autonomy and healthy diversity. The frontier is not only smarter now. It is more programmable in the ways that matter.