The Preference Loop: How AI Chats Rewrite Your Reality

Starting December 16, Meta will use what you tell Meta AI to tune your feed and ads. There is no opt out in most regions. Your private chat becomes a market signal, and your curiosity becomes currency.

Breaking: Your side chats just became ad signals

On October 1, 2025, Meta announced that what you tell its assistants will begin shaping your feed and ads. Notifications began October 7, and the change is slated to take effect December 16. Meta frames this as just another ranking signal, similar to a like or a follow, that helps decide what to show you across posts, Reels, and ads. The rollout targets most regions. You can adjust ad topics and feed controls, but the core use of assistant chats as a signal will be on by default. See the official details in the Meta newsroom announcement.

Multiple outlets emphasized that there is no user opt out for this specific use in most places at launch. Regions with stricter privacy laws, including the European Union, the United Kingdom, and South Korea, are excluded for now. TechCrunch's report on the lack of an opt out summarizes these carve outs.

Treat this as more than a policy tweak. It is a shift in how your identity is inferred, priced, and presented back to you. Your private, exploratory dialogue becomes a live input to the attention marketplace.

The preference loop, explained

Think of preferences as wet clay on a wheel. In the past, platforms shaped the clay by watching what you tapped, shared, or hovered over. Now the wheel connects to a microphone and asks you questions. When you whisper your goals or doubts to the assistant, that whisper becomes a knob the system can turn in real time. This is the preference loop.

The loop has four steps:

- You explore in a low stakes chat. You tell the assistant you want to get into trail running, learn the oud, or consider a move across the country.

- The chat becomes structured signals. Trail running maps to trails, shoes, hydration packs, training plans, and outdoor creators. The oud maps to lessons, instruments, and local music events.

- The recommender pushes content and ads that match the new signals across your feed, Reels, and Stories.

- You react to the new lineup. Your taps validate the guess. The loop closes. The next pass pushes stronger versions of the same thing.
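
The four steps can be sketched as a toy simulation. Everything in it is an assumption made for illustration: the theme names, the starting weights, the `chat_boost` and `reinforcement` parameters, and the rules that the feed shows themes in proportion to weight and that only the chat theme gets reinforced. It is not how any real recommender works. It only shows how a proportional feed plus reaction based reinforcement concentrates exposure.

```python
def feed_shares(weights):
    """Share of the feed each theme gets, proportional to its weight."""
    total = sum(weights.values())
    return {theme: w / total for theme, w in weights.items()}


def run_loop(weights, chat_theme, chat_boost=1.0, reinforcement=0.5, rounds=5):
    """Return the chat theme's feed share at the start of each round.

    All parameters are hypothetical knobs for this sketch.
    """
    weights = dict(weights)  # work on a copy; leave the caller's dict alone

    # Steps 1-2: the exploratory chat becomes a structured signal,
    # modeled here as a simple weight bump for one theme.
    weights[chat_theme] = weights.get(chat_theme, 0.0) + chat_boost

    history = []
    for _ in range(rounds):
        # Step 3: the recommender fills the feed in proportion to weight.
        shares = feed_shares(weights)
        history.append(shares[chat_theme])

        # Step 4: the user's taps "validate the guess" (assumed here to
        # happen every round), so the theme's weight grows.
        weights[chat_theme] *= 1 + reinforcement

    return history


if __name__ == "__main__":
    start = {"cooking": 1.0, "music": 1.0, "news": 1.0}
    for i, share in enumerate(run_loop(start, "trail running"), start=1):
        print(f"round {i}: trail running fills {share:.0%} of the feed")
```

With these made up numbers, the new theme starts at a quarter of the feed and passes 60 percent by the fifth round, even though the other three interests never changed.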

Yesterday you had a private thought. Today it is a market segment. By tomorrow that segment is editing your options by filling your day with similar content.

Why dialogic signals are different

Not all signals carry the same weight. Conversations are different from clicks.

- Higher intent: Talking through a two week training plan signals more than a single search for running shoes. It encodes readiness, constraints, and tradeoffs.

- Longer half life: A conversation about moving for a job hints at downstream categories for months, from neighborhoods to commuting to furniture.

- Emotional framing: Chats carry mood and motivation. That framing steers which versions of a topic you see, from aspirational to fear based.

When those details feed your recommendations, the system is not just reflecting who you are. It is helping decide who you will become.

For a deeper treatment of how inquiry itself changes systems, see our take on the observer effect in AI.

From diary to dealer: the new path from introspection to manipulation

Imagine this timeline. On Monday night you confide to the assistant that your back hurts and you might try a standing desk. On Tuesday your feed tilts toward ergonomic videos, creator reviews of height adjustable frames, and posts about cable management. By Friday you are in three communities that trade discount codes and desk modification tips. You never searched for desks. You never followed a store. The bridge between thought and exposure was your diary like chat.

Why does the leap matter? Because it collapses the distance between an internal state and a commercial response. When that distance shrinks, two things accelerate:

- Preference formation speed: Early, tentative ideas harden quickly under continuous reinforcement.

- Preference lock in: Each click makes the feed more confident and your alternatives less visible.

If you are trying on identities, the system can turn a trial into a plan, then into a habit. Your future self is assembled by a sequence of recommendations that feel natural because you initiated the first nudge.

How fast loops harden identity

A fast loop feels helpful because it reduces friction. But friction is sometimes the only thing that preserves optionality. Three reinforcing dynamics matter here:

- Recency bias amplified: Chats often capture what you are thinking about right now. When a platform gives recency extra weight, it crowds out quiet but important interests.

- Confirmation by design: Recommenders optimize for reaction. That makes it easy to saturate your attention with a single storyline that performs well.

- Memory effects: Assistant memory and long running profiles ensure that today’s chat leaves traces that persist. As these memories grow, they can become an invisible default. We explore similar thresholds in our take on the memory threshold.

The takeaway is not to avoid assistants. It is to separate experimentation from commitment and to make commitments explicit.

Design patterns for agency

If a dialog becomes a market signal, you need tools and norms that let you explore without rewriting yourself by accident. Below are three patterns that balance usefulness with autonomy. They also align with a broader push for consent first AI design.

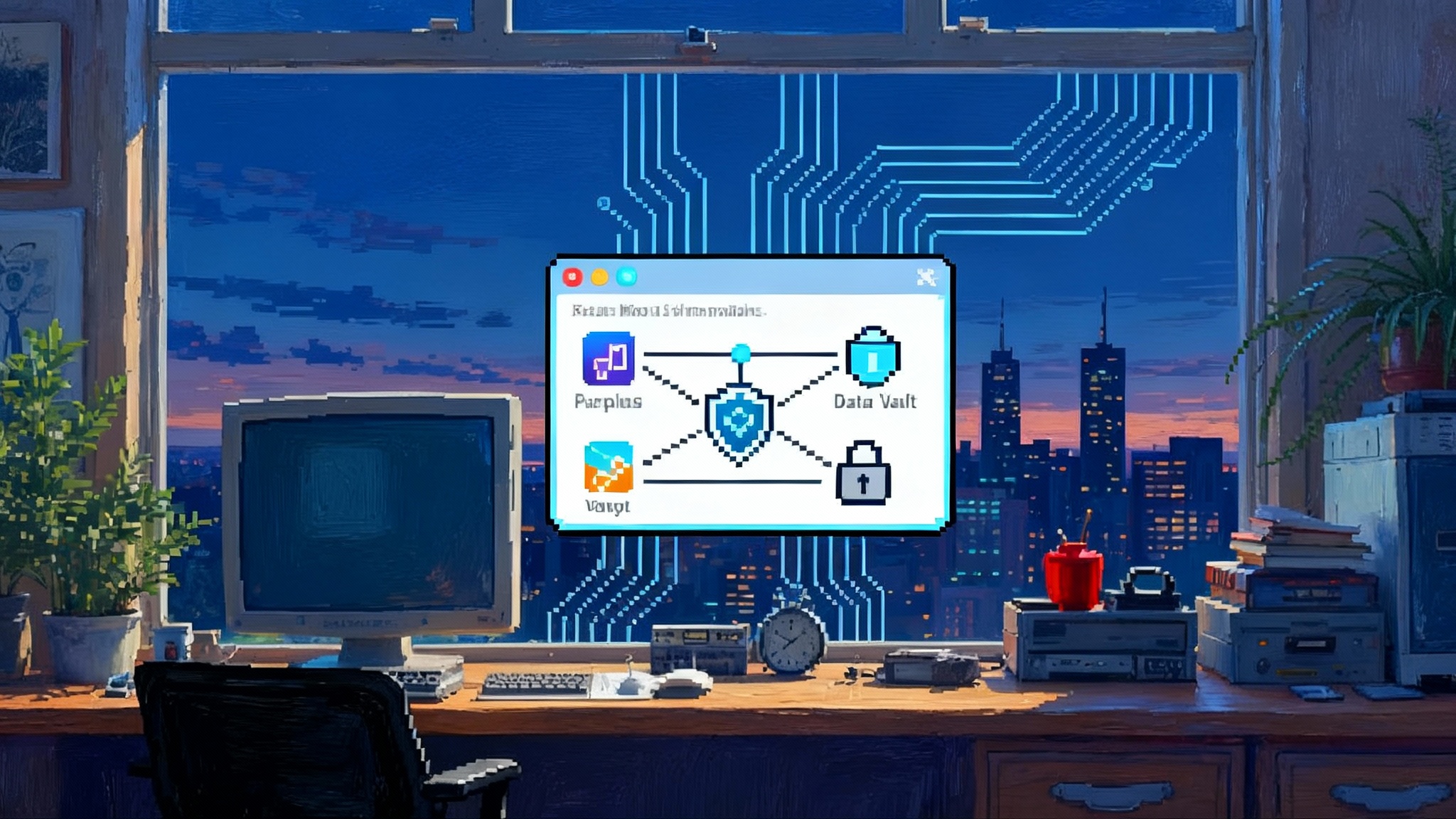

1) Persona sandboxes

A persona sandbox is an isolated room for exploratory chats. You can try on an interest or an identity without leaking that trial into the main profile that drives your feed and ads.

Design principles:

- Scoped identity: You pick a named persona when you chat, like Runner Trial or Career Pivot. The platform stores it separately from your main profile.

- Ephemeral memory by default: The sandbox forgets unless you explicitly promote a finding to your main preferences. Promotion should require a clear, one click action with a visible summary of what will change.

- No cross talk: Signals from sandboxes do not reach the recommender or ad system. They can power local tools such as drafts, checklists, or private collections, but nothing that affects global ranking or targeting.

- Audit view: The sandbox shows exactly what would have flowed out, if flows were allowed. Think of it as a simulation log for signals.
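
As a rough sketch of how these principles could fit together, here is a minimal in memory model. The class names `MainProfile` and `PersonaSandbox`, their methods, and the wording of the consequence summary are all hypothetical. No platform is known to expose this interface. The point is only that signals stay inside the sandbox, the audit log records what was withheld, and nothing reaches the main profile without a confirmed promotion.

```python
from dataclasses import dataclass, field


@dataclass
class MainProfile:
    """Stands in for the profile that drives feed and ads (hypothetical)."""
    interests: set[str] = field(default_factory=set)


@dataclass
class PersonaSandbox:
    """An isolated persona such as 'Runner Trial' (hypothetical design)."""
    name: str
    signals: list[str] = field(default_factory=list)    # never leaves the sandbox
    audit_log: list[str] = field(default_factory=list)  # what would have flowed out

    def chat(self, inferred_theme: str) -> None:
        # No cross talk: record the signal locally and log that it was withheld.
        self.signals.append(inferred_theme)
        self.audit_log.append(f"withheld: {inferred_theme}")

    def preview_promotion(self, theme: str) -> str:
        # Visible summary of what will change before the user confirms.
        return f"Promoting '{theme}' from {self.name} adds it to your main profile."

    def promote(self, theme: str, profile: MainProfile, confirmed: bool) -> bool:
        # Promotion needs an explicit confirmation and a theme seen in this sandbox.
        if not confirmed or theme not in self.signals:
            return False
        profile.interests.add(theme)
        self.audit_log.append(f"promoted: {theme}")
        return True

    def close(self) -> None:
        # Ephemeral by default: forget every signal when the session ends.
        # Promoted themes survive only in the main profile.
        self.signals.clear()
```

A session would call `chat` as themes are inferred, show `preview_promotion` before asking for confirmation, and call `close` at the end so unpromoted trials disappear.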

What you can do now:

- Keep sensitive explorations in apps that allow local only or device only modes, or use separate accounts that are not linked in any accounts center.

- Favor assistants that offer explicit chat separation and a switch to disable feed personalization from assistant interactions. If a product offers no separation, assume your trials will follow you.

2) Auditable narrative budgets

A narrative budget is a cap on how much any single theme from your chats can influence what you see over a set period. The platform publishes a ledger that shows which themes gained exposure rights, how much, why, and for how long.

Design principles:

- Theme accounting: The system turns chat derived signals into labeled themes, like Running Gear or Career Change. Each theme gets a weekly budget of impressions in your feed.

- Consent by promotion: A theme only spends from your main budget when you explicitly promote it from a sandbox or from a clearly labeled dialog box in the main chat. Promotion includes a one sentence consequence statement, for example, "10 percent more posts about running for two weeks."

- Saturation brakes: Even if you engage heavily with a new theme, the system respects a cap so early adoption does not become total takeover. The cap is visible and adjustable.

- Third party audit hooks: Independent auditors can test whether caps are respected by submitting controlled personas and measuring output.
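
To make the accounting concrete, here is a minimal sketch of a budget with a ledger. The `NarrativeBudget` class, its method names, and the idea of counting one impression per call are assumptions for illustration, not a description of any shipping system. A real version would also need expiry dates and user adjustable caps.

```python
from dataclasses import dataclass, field


@dataclass
class NarrativeBudget:
    """Weekly impression caps per promoted theme (hypothetical design)."""
    weekly_caps: dict[str, int] = field(default_factory=dict)
    spent: dict[str, int] = field(default_factory=dict)
    ledger: list[tuple[str, str]] = field(default_factory=list)

    def promote(self, theme: str, cap: int, reason: str) -> None:
        # Consent by promotion: a theme gets a budget only when promoted.
        self.weekly_caps[theme] = cap
        self.spent.setdefault(theme, 0)
        self.ledger.append((theme, f"granted {cap} impressions per week: {reason}"))

    def try_show(self, theme: str) -> bool:
        # Themes that were never promoted cannot spend at all.
        cap = self.weekly_caps.get(theme)
        if cap is None:
            return False
        # Saturation brake: refuse once the weekly cap is used up,
        # no matter how heavily the user engaged.
        if self.spent[theme] >= cap:
            self.ledger.append((theme, "blocked: weekly cap reached"))
            return False
        self.spent[theme] += 1
        return True

    def reset_week(self) -> None:
        # Start a new accounting period; caps stay, spending returns to zero.
        for theme in self.spent:
            self.spent[theme] = 0
```

An outside auditor could run the same check from the other side: submit a controlled persona, count how often a theme appears, and compare the count with the published cap.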

What you can do now:

- Build your own manual budget. Once a week, scan the last 50 posts and ads you saw, classify them into five themes, and decide which to dial down. Use ad and feed controls to implement the change. It is crude, but it restores some agency.
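
If you would rather tally than eyeball, a few lines of code are enough. This is a sketch under assumptions: you label the posts by hand, the theme names are yours, and the 30 percent `overweight_share` cutoff is an arbitrary starting point rather than a recommended value.

```python
from collections import Counter


def theme_report(labels, overweight_share=0.3):
    """Summarize hand labeled posts as (theme, count, share, overweight) rows.

    `labels` holds one theme name per post or ad you scanned.
    `overweight_share` is a hypothetical cutoff for "dial this down".
    """
    counts = Counter(labels)
    total = len(labels)
    report = []
    # most_common() lists the largest themes first.
    for theme, n in counts.most_common():
        share = n / total
        report.append((theme, n, share, share > overweight_share))
    return report


if __name__ == "__main__":
    # Example week: 50 items sorted into five made up themes.
    week = (["running"] * 20 + ["news"] * 12 + ["cooking"] * 10
            + ["music"] * 5 + ["travel"] * 3)
    for theme, n, share, overweight in theme_report(week):
        flag = "  <- consider dialing down" if overweight else ""
        print(f"{theme:8} {n:3} {share:5.0%}{flag}")
```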

3) Zero knowledge recommenders

Zero knowledge recommenders use cryptography so the platform can test whether an ad or a post is relevant without reading the underlying chat.

Plain language version: Instead of exporting your diary to the ad machine, your device produces a short proof that says "this user fits the hiking segment with a score above 0.8." The platform checks that the proof is valid, but it never sees your chat content or your raw features. Relevance can be verified without disclosure.

Design principles:

- On device segmentation: Your device or browser computes interest scores from chat locally.

- Proofs instead of profiles: The only thing that leaves your device is a proof that the score clears a threshold for a category, not the score itself and not the text that produced it.

- Revocation and expiry: Proofs expire quickly and can be revoked with a simple kill switch.

- Performance budgets: Industry standards should ensure proofs do not add unacceptable delay to the ad auction or the feed refresh.
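
The sketch below illustrates the data flow only, and it is not a zero knowledge proof. It swaps in a much simpler technique: a keyed hash (HMAC) over a threshold claim, with expiry and revocation. The verifier here must hold the same key as the device and must trust that the device scored honestly, which a real proof system would avoid. The names `SegmentClaim`, `make_claim`, and `verify_claim` are hypothetical. What the sketch does show is the shape of the message: the category, threshold, and expiry leave the device, while the score and the chat text do not.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentClaim:
    """What leaves the device: no score, no chat text (hypothetical format)."""
    category: str
    threshold: float
    expires_at: float
    tag: str  # HMAC over the fields above; a stand-in for a real proof


def _mac(key: bytes, category: str, threshold: float, expires_at: float) -> str:
    msg = f"{category}|{threshold}|{expires_at}".encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def make_claim(key, category, local_score, threshold, ttl_seconds, now):
    """Runs on the device. Emits a claim only if the private score is above the threshold."""
    if local_score <= threshold:
        return None
    expires_at = now + ttl_seconds
    tag = _mac(key, category, threshold, expires_at)
    return SegmentClaim(category, threshold, expires_at, tag)


def verify_claim(key, claim, now, revoked=frozenset()):
    """Runs on the platform. Checks revocation, expiry, and integrity."""
    if claim.tag in revoked or now >= claim.expires_at:
        return False
    expected = _mac(key, claim.category, claim.threshold, claim.expires_at)
    return hmac.compare_digest(expected, claim.tag)


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    claim = make_claim(key, "hiking", local_score=0.91, threshold=0.8,
                       ttl_seconds=60, now=1000.0)
    print(verify_claim(key, claim, now=1030.0))                       # still valid
    print(verify_claim(key, claim, now=1060.0))                       # expired
    print(verify_claim(key, claim, now=1030.0, revoked={claim.tag}))  # kill switch
```

Short lifetimes keep the claim from turning into a long lived profile entry, and the revocation set plays the role of the kill switch.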

What you can do now:

- If you build products, prototype a minimal proof for one category, such as recipes, and measure the latency cost. The overhead is real, but the gain in user trust is an asset.

- If you are a power user, ask vendors how they would support proofs instead of profiles. Push your browser and phone vendors to expose local segmentation that you control.

The policy patchwork

This update is planned for most regions, while some areas with strict privacy laws are excluded at launch. That patchwork means your experience and your leverage will vary by country. In looser regimes, product and market forces will set the norms first. In stricter regimes, regulators and courts will keep forcing products to offer clear choices and chronological fallbacks.

For companies operating across borders, building for the strictest regime and then relaxing it is usually cheaper than maintaining many variants. That is a practical reason to build persona sandboxes, narrative budgets, and proofs now. They travel well across legal climates.

What this means for builders

- Redefine success metrics: Track balance alongside engagement. A healthy recommender does not just maximize clicks. It shows a mix of viewpoints and avoids crowding out new interests.

- Make promotion explicit: If a chat derived theme will change a user’s feed, ask for promotion with a one line consequence and a short, human summary.

- Publish your narrative math: Explain caps in plain language and let outside researchers test whether you respect them.

- Offer a true sandbox: If you cannot prevent leaks from exploratory chats into the recommender, label the absence and give users a switch to turn off all cross talk.

- Map sensitive edges: Even if ad policies exclude sensitive categories, adjacent themes can still steer behavior. Design guardrails for the gray areas.

What this means for the rest of us

- Decide where to draw the line: If you use Meta AI after December 16, assume your conversations will steer your feed and ads in most regions. If that feels wrong, do not use the assistant for sensitive topics, or separate exploratory personas from your main account.

- Schedule a monthly reset: Clear chat histories you do not want turned into long term signals. Then revisit ad preferences and mute themes that feel overrepresented.

- Create an alternate input stream: Balance what the system learns from your chats by actively following accounts that contradict your new interests. If you are experimenting with a high protein diet, follow endurance athletes who eat vegetarian meals. If you are chasing hustle content, follow accounts about rest and play.

- Use frictions on purpose: Add pauses. Save, do not like. Bookmark, do not share. These tiny choices slow the loop enough to check whether a new theme deserves more space.

The coming split

We are headed toward two internet tribes.

- The co authored: People who invite assistants to co write their preferences. They will get fast moving, high relevance feeds. They will also find it harder to change course. Their sense of self will be tidy, efficient, and constrained by short term reward loops.

- The firewalled: People who treat chat as a separate room, or who avoid it for sensitive topics. Their feeds will feel slower but more diverse. They will give up some convenience to keep a wider option set.

Neither path is pure. You can co author for hobbies and firewall for health. You can use a sandbox for career while letting the assistant steer your home setup. The key is to keep the boundary visible and adjustable.

What to watch next

- Assistant memory becomes a lever: If your assistant remembers you love mountain towns, that memory will be more than a convenience. It will be an advertising primitive and a recommendation weight.

- Creators refine their hooks: Expect content tailored to match intent confessed in chats. Scripts will mirror common assistant prompts.

- New privacy labels emerge: Products that adopt persona sandboxes, narrative budgets, and zero knowledge proofs will market those features the way browsers marketed private tabs. Expect shorthand labels and badges that signal composable privacy.

- Interfaces rebalance agency: Expect UI patterns that make the line between curiosity and commitment explicit. Confirming promotion should feel like publishing a change to your profile.

A closing thought

We used to treat private chat with a machine as practice. Now practice is performance. When your quiet questions become signals, the loop between who you are and what you see tightens. You do not need to fear the loop, but you do need to design for it. Draw a line between curiosity and commitment. Put your experiments in a sandbox. Demand budgets and proofs. Then you can keep the clay soft long enough to shape it on your terms.