Supermemory Turns Memory Into AI’s New Control Point

Models keep changing, but the apps that win will remember. This deep dive explains why a shared memory API is becoming the control point for AI, what Supermemory offers, and how to evaluate vendors today.

The control point in AI is shifting

For two intense years, the center of gravity in AI apps lived inside the model. Teams chased bigger context windows, cleverer prompts, and a constant stream of retrieval patches. It worked until it did not. Apps forgot users between sessions. Agents collapsed when tasks stretched beyond a single chat. Token counts ballooned while reliability sagged.

This week, the conversation moved. Supermemory launched with momentum and coverage on October 6, 2025, positioning memory as the new control layer for AI systems. Instead of cramming more tokens into a prompt, the company proposes a universal memory API that any model or agent can call to store, evolve, and retrieve what it has learned about a user or a project over time. The pitch is simple: keep models swappable and make memory durable, low latency, and explainable. The announcement followed public traction and fundraising noted in TechCrunch’s October 6 report.

Think of an AI app as a person working at a library desk. The model is the librarian’s reasoning ability. The vector store is a shelf of index cards. Memory is the notebook that spans weeks: what a patron asked last Tuesday, the book they disliked yesterday, and the outline due next Friday. The notebook has sections and cross references. It is persistent, composable, and fast to flip through. That is the service Supermemory wants to productize as a first class platform primitive.

What Supermemory actually does

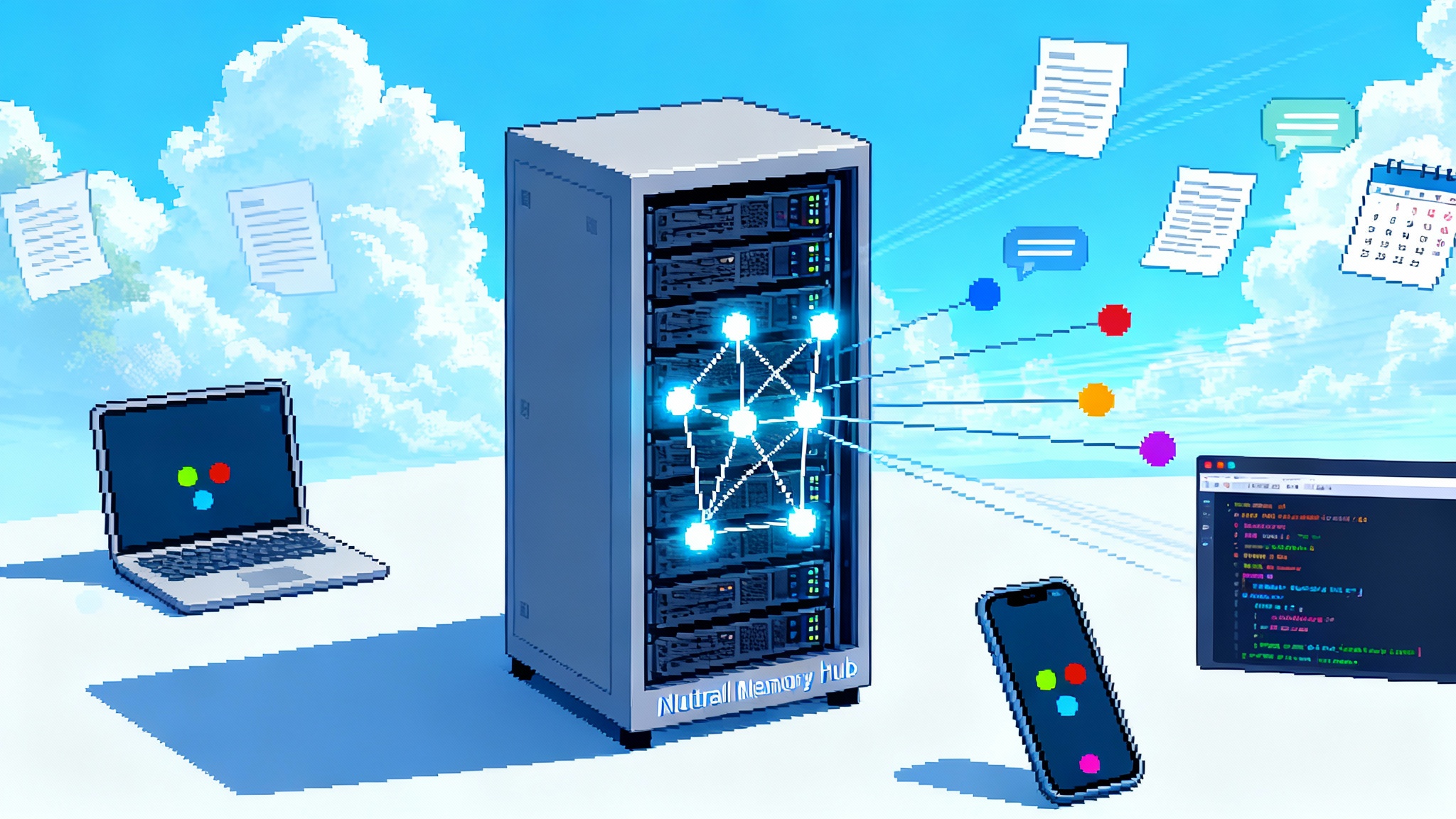

Supermemory aims to give developers a drop in memory engine that is model agnostic, multimodal, and quick. Under the hood it takes on five jobs teams usually stitch together themselves.

- Ingest and normalize data. Developers can send text, files, chat logs, emails, and app events. Think of the artifacts scattered across Drive, Notion, OneDrive, and your product. Supermemory cleans, chunks, and annotates this stream.

- Enrich into a knowledge graph. Instead of dropping embeddings into a vector store and calling it a day, Supermemory identifies entities and relationships, then builds edges across them. Preferences link to people. People link to projects. Projects link to dates and outcomes. This enables queries like: What did Maya change in the design spec after the customer call last month?

- Index for speed and precision. The system maintains both a graph index and a vector index, then uses hybrid search with reranking to return the right memories quickly. The company emphasizes graph plus vector indexing and tuned recall paths on its product overview.

- Retrieve with context assembly. At query time, memory is filtered by entity, time, provenance, and confidence. The result is not a random list but a set of citations that map to people, files, and events. Because memory lives outside the model, prompts stay simple and the model can change.

- Evolve over time. Memories are not immutable chunks. They decay, get superseded, or summarize upward into higher level facts. A shoe preference can shift from Adidas to Puma after a bad return. A project moves from draft to shipped. The graph makes these changes explicit rather than hiding them inside token soup.
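To make the "evolve over time" job concrete, here is a minimal sketch of supersession in plain Python. It is not Supermemory's API: the `Memory` and `MemoryStore` names, their fields, and the order and return identifiers are all hypothetical, and a real service would persist a graph rather than a list. The point is only that a newer fact retires the older one without erasing it.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Memory:
    entity: str                # who or what the fact is about
    attribute: str             # e.g. "shoe_brand"
    value: str
    source: str                # provenance: where the fact came from
    recorded_at: datetime
    superseded_at: Optional[datetime] = None  # None means still current


class MemoryStore:
    """Hypothetical in-memory store used only for illustration."""

    def __init__(self) -> None:
        self._memories: list[Memory] = []

    def remember(self, entity: str, attribute: str, value: str,
                 source: str, when: datetime) -> Memory:
        # Retire the current fact for the same (entity, attribute), keeping it as history.
        for m in self._memories:
            if (m.entity, m.attribute) == (entity, attribute) and m.superseded_at is None:
                m.superseded_at = when
        memory = Memory(entity, attribute, value, source, when)
        self._memories.append(memory)
        return memory

    def current(self, entity: str) -> list[Memory]:
        # Retrieval only sees facts that have not been superseded.
        return [m for m in self._memories
                if m.entity == entity and m.superseded_at is None]

    def history(self, entity: str, attribute: str) -> list[Memory]:
        # The full timeline stays available for audits and explanations.
        matches = [m for m in self._memories
                   if m.entity == entity and m.attribute == attribute]
        return sorted(matches, key=lambda m: m.recorded_at)


store = MemoryStore()
store.remember("user:42", "shoe_brand", "Adidas", "order#1001", datetime(2025, 3, 1))
store.remember("user:42", "shoe_brand", "Puma", "return#2002", datetime(2025, 6, 15))

print([m.value for m in store.current("user:42")])                # ['Puma']
print([m.value for m in store.history("user:42", "shoe_brand")])  # ['Adidas', 'Puma']
```

Retrieval sees only the Puma preference, while the timeline still shows when and why the Adidas preference was retired.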

If you have ever hacked this together with a vector store, a custom schema, and a couple of notebooks, you know why an opinionated memory layer is attractive. The difference here is a real time pipeline and a unified data model that hides plumbing without hiding state.

Beyond brittle RAG and bigger windows

Retrieval augmented generation is great at finding similar text and pasting it into context. It is fragile when queries cross sessions, shift over time, or depend on who the user is. Doubling the context window can help in the short term, but it does not provide structure, provenance, or durable personalization.

A memory layer solves a different class of problems:

- Personalization that lives for weeks. A travel agent that remembers your family’s no red eye rule even if the last booking was in June.

- Long running workflows. A sales agent that watches a deal for a month, threads together email, call notes, and contract edits, and asks finance for approvals on day 43 without a human nudge.

- Explainability and editing. Because memories point to sources, you can show a timeline, explain where an answer came from, and fix a wrong assumption.

- Composability. The same memory about a customer should inform your helpdesk bot, your success playbooks, and your churn model. Do not rebuild the state three times.

The practical takeaway for builders is blunt: if your app needs to remember, pick a memory layer early and keep the model swappable. If it does not need to remember, a search box and a good prompt template may be enough.
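One way to picture "keep the model swappable" is a thin seam between memory and model. The sketch below is an assumption about how such a seam could look, not any vendor's interface: `MemoryLayer`, `DictMemory`, `build_prompt`, `answer`, and the two stub models are all hypothetical names, and the stubs simply echo the prompt so the example runs without a network call.

```python
from typing import Callable, Protocol

# Any function from prompt text to reply text counts as a model here.
Model = Callable[[str], str]


class MemoryLayer(Protocol):
    def recall(self, user_id: str, question: str) -> list[str]: ...


class DictMemory:
    """Toy memory layer: returns every stored fact for a user.
    A real layer would rank and filter by relevance."""

    def __init__(self, facts: dict[str, list[str]]) -> None:
        self._facts = facts

    def recall(self, user_id: str, question: str) -> list[str]:
        return self._facts.get(user_id, [])


def build_prompt(question: str, snippets: list[str]) -> str:
    # The prompt is assembled from memory, so it does not depend on the model.
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Known about the user:\n{context}\n\nQuestion: {question}"


def answer(question: str, user_id: str, memory: MemoryLayer, model: Model) -> str:
    return model(build_prompt(question, memory.recall(user_id, question)))


# Two stand-in models; swapping them requires no change to memory or prompts.
def model_a(prompt: str) -> str:
    return "[model-a] " + prompt


def model_b(prompt: str) -> str:
    return "[model-b] " + prompt


memory = DictMemory({"family:7": ["No red eye flights"]})
reply_a = answer("Find flights to Lisbon", "family:7", memory, model_a)
reply_b = answer("Find flights to Lisbon", "family:7", memory, model_b)
print(reply_a)
```

Both stand-in models receive an identical prompt, which is the property you want to preserve when you change providers.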

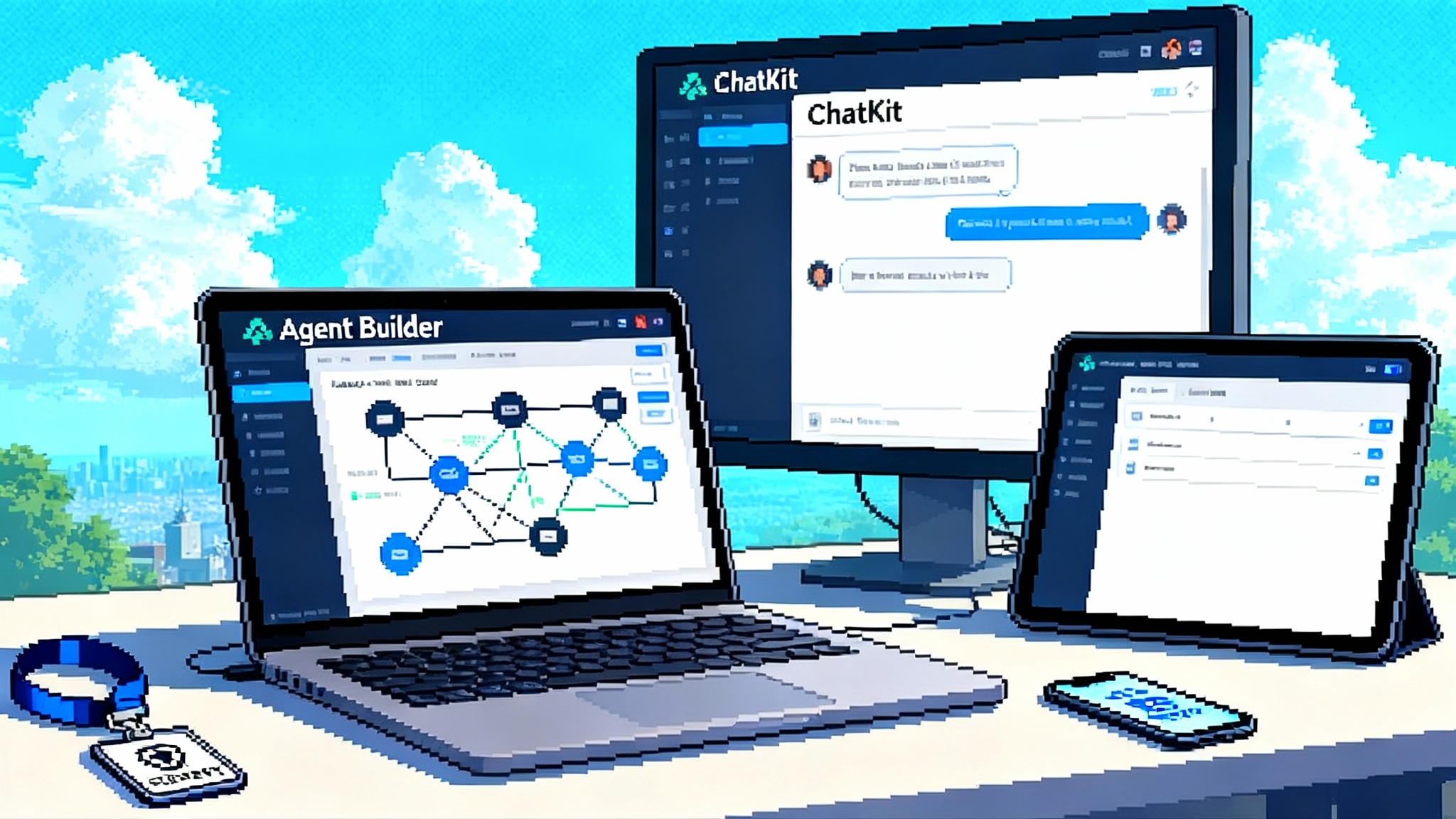

How memory plugs into today’s agent stack

Developers already work in editors, IDEs, and chat clients, so the memory layer needs to meet them there. Supermemory exposes a plain memory API and an implementation compatible with Model Context Protocol so that tools like ChatGPT, Claude, Windsurf, Cursor, and VS Code can share memory across contexts. That means a preference saved while you debug in your editor can shape how a help bot responds in your web app later that day.

This pattern matches a broader shift in agent infrastructure. We have covered how agent frameworks are compressing and standardizing the stack in "AgentKit compresses the agent stack so you can ship fast" and how coding environments are opening deeper hooks in our piece on Cursor's browser hooks. Memory fits neatly alongside these shifts because it centralizes state while leaving the choice of model, router, and orchestration open.

On the content side, built in connectors for Google Drive, Notion, and OneDrive keep documents and notes flowing with webhook updates and scheduled sync. Two details matter in practice:

- Container tags and multi tenant graphs. Memories are grouped by a container tag that maps to a user, organization, or project. Each tag gets its own graph. That keeps permissioning manageable and lets you move an entire project between environments without reindexing.

- Hybrid recall tuned for latency. An initial semantic search narrows the set, then reranking and graph filters assemble the final context. Teams trade a small latency bump for higher precision and still hit sub second responses on common routes.
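The two details above can be sketched together. This is a toy illustration under stated assumptions, not the product's retrieval code: the `Memory` fields, the `recall` function, the hand-written three-dimensional embeddings, and the choice to rerank by similarity multiplied by confidence are all invented for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    text: str
    container_tag: str     # tenant boundary: a user, organization, or project
    entities: frozenset    # graph neighbors this memory links to
    embedding: tuple       # toy vector; a real system would call an embedding model
    confidence: float


def cosine(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall(memories, container_tag, query_vec, entity, top_k=3, final_k=2):
    # Isolation: only memories inside the requested container are considered.
    scoped = [m for m in memories if m.container_tag == container_tag]
    # Stage 1: semantic search narrows the candidate set.
    candidates = sorted(scoped, key=lambda m: cosine(m.embedding, query_vec),
                        reverse=True)[:top_k]
    # Stage 2: graph filter, then rerank (assumed scoring: similarity x confidence).
    linked = [m for m in candidates if entity in m.entities]
    linked.sort(key=lambda m: cosine(m.embedding, query_vec) * m.confidence,
                reverse=True)
    return linked[:final_k]


memories = [
    Memory("Maya revised the design spec after the customer call", "project:apollo",
           frozenset({"Maya", "design spec"}), (0.9, 0.1, 0.0), 0.9),
    Memory("Design spec draft shared for review", "project:apollo",
           frozenset({"design spec"}), (0.8, 0.2, 0.1), 0.6),
    Memory("Maya booked travel for the offsite", "project:apollo",
           frozenset({"Maya"}), (0.1, 0.9, 0.2), 0.8),
    Memory("Maya revised the pricing page", "project:zeus",
           frozenset({"Maya"}), (0.9, 0.1, 0.0), 0.9),
]

hits = recall(memories, "project:apollo", (1.0, 0.0, 0.0), entity="Maya", top_k=2)
print([m.text for m in hits])
```

The `project:zeus` memory never appears even though its vector matches the query, because the container filter runs before any similarity scoring.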

Why memory becomes the new control point

Control points emerge where switching costs and network effects converge. In cloud, identity providers and data warehouses won because everything else plugged into them. In AI, memory has similar gravity for three reasons.

- Data gravity beats model gravity. Your users’ private data and the graph of how it changes over time are the scarce inputs. Models will keep improving and commoditizing. If the memory layer owns a clean, connected, permissioned version of that data, traffic will route through it.

- Latency decides experience. Users feel latency more than a small quality delta between frontier models. A memory service that assembles the right context in a few hundred milliseconds becomes the default path for prompts. Each integration compounds that advantage.

- Cross app reuse compounds. The same memory powers chat, autonomous agents, and analytics. Each new use case strengthens the incentive to standardize on one memory API.

If you lead a platform team, your checklist is straightforward: choose one memory service, wire every new app and agent to it, and build internal dashboards that make the graph visible and editable. Encourage teams to propose new entity types rather than new indices.

What this unlocks for agents in the next six months

The industry learned the hard way that many autonomous agents do not fail because they cannot think. They fail because they cannot remember and coordinate over time. A reusable memory tier changes the horizon.

- Week long task chains with stable state. An incident response agent that opens a ticket, correlates logs, files updates, requests approvals, and posts a draft post mortem on Tuesday. Memory provides the spine and the audit trail.

- Personal workflows without orchestration sprawl. A writing coach that knows your tone markers, the topics you avoid, and which examples your readers liked. It tees up ideas next Wednesday drawn from your bookmarks, documents, and replies.

- Multi agent collaboration that is legible. When research, drafting, and editing agents share a graph, handoffs are just links between entities and deadlines. You can render the state, review it, and nudge it without spelunking logs.

We see this direction echoed in tools that push autonomy further while keeping a human loop. For example, Replit Agent 3 goes autonomous because it can maintain state across build, test, and ship. A memory API generalizes that benefit to more domains.

Privacy, locality, and the architecture ahead

A memory control point raises hard questions about privacy and data locality. The near term blueprint looks like this:

- Fine grained scope by default. Treat memory like identity claims. Every memory should carry tags for who can see it, where it came from, and when it expires. Build UIs that let users view and edit that ledger. If a preference changes, mark the old one as superseded rather than deleting history, then hide it from retrieval.

- Selective sync and audit trails. Connectors delight on day one and terrify on day ninety without visibility. Provide per source toggles, sync frequency controls, and immutable logs of what was ingested when. Expose those logs to users and admins.

- On device and edge caches. Not every memory needs to live on a central server forever. Keep a small working set on device for mobile agents and browsers, encrypted at rest and synced with the server graph on a cadence. Push read only caches to an edge store to reduce latency and keep sensitive data inside a region. The server memory becomes the source of truth and the policy engine, not the only copy.

- Enterprise isolation options. Expect regulated customers to demand single tenant deployments on their cloud or on bare metal. Your memory layer should run in either setting with the same APIs and observability as the hosted service. Pricing should reflect isolation and support.

- Minimal model exposure. Prefer retrieval that passes compact, source linked summaries rather than entire documents into prompts. This reduces token costs and minimizes accidental data retention by third party models.
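The scoping and minimal exposure points in this blueprint can be expressed as a small retrieval gate. The following is a sketch under assumptions: `ScopedMemory`, `retrievable`, `build_context`, the agent names, and the sample records are hypothetical, and a production policy engine would be considerably richer.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ScopedMemory:
    summary: str                     # compact fact, not the full document
    source: str                      # link back to where the fact came from
    visible_to: frozenset            # which agents or users may read it
    expires_at: Optional[datetime] = None
    superseded: bool = False         # kept for history, hidden from retrieval


def retrievable(m: ScopedMemory, viewer: str, now: datetime) -> bool:
    if m.superseded:
        return False
    if m.expires_at is not None and m.expires_at <= now:
        return False
    return viewer in m.visible_to


def build_context(memories, viewer: str, now: datetime, max_items: int = 5) -> str:
    # Only short, source linked summaries reach the prompt.
    allowed = [m for m in memories if retrievable(m, viewer, now)]
    return "\n".join(f"{m.summary} (source: {m.source})" for m in allowed[:max_items])


now = datetime(2025, 10, 6)
memories = [
    ScopedMemory("Prefers phone calls", "ticket#311", frozenset({"support-bot"}),
                 superseded=True),
    ScopedMemory("Prefers SMS updates", "ticket#498", frozenset({"support-bot"})),
    ScopedMemory("Trial discount approved", "crm/deal-17", frozenset({"support-bot"}),
                 expires_at=datetime(2025, 9, 1)),
    ScopedMemory("Salary band B", "hr/record-9", frozenset({"hr-agent"})),
]

print(build_context(memories, "support-bot", now))
# Prefers SMS updates (source: ticket#498)
```

The superseded, expired, and out of scope records all stay in storage for audits; they simply never reach a third party model.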

In practice, appoint a memory data steward who owns schemas, retention policies, and redaction tools. Run quarterly reviews where you sample answers and verify sources. If your product cannot explain why it said something, you have an incident waiting to happen.

Where moats will form for memory platforms

If memory is the control point, where do durable moats appear?

- Private, high signal graphs. The most defensible asset you accumulate is a cleaned, connected, permissioned graph of your domain. The quality of extraction and schema evolution will decide win rates. Invest in tools that surface missing edges and inconsistent entities and then auto repair them.

- Distribution through standards and clients. Model Context Protocol compatibility is more than developer convenience. It is a distribution channel. If your memory follows a user between ChatGPT, Claude, Windsurf, and Cursor, you earn daily active use across contexts.

- Latency and cost curves. Sub second assembly wins usage. If you can hold latency constant while doubling corpus size, you become the default. Cost matters too. Routing fewer, cleaner facts into prompts beats paying for bigger windows.

- Governance and auditing tools. Enterprises will choose the vendor that makes compliance easy. Expect buyers to prefer memory services with turnkey audit logs, policy templates, and subject access request automation.

- Ecosystems of connectors and actions. Storage without activation is dead weight. The stronger your connector set and the cleaner your action surfaces, the more teams can build without friction. Over time, third parties will target your memory schema directly, the way analytics vendors clustered around data warehouses.

A two hour bake off to evaluate memory APIs

If you are deciding whether to adopt Supermemory or a competitor, run a compact but decisive trial. Keep it hands on and measurable.

- Bring your own data. Ingest a realistic slice from Drive and Notion plus a week of product logs. Measure time to first correct answer.

- Query across time and entities. Ask questions that require a timeline and a relationship: who changed what, when, and why.

- Inspect provenance. Can you click back to sources, and can non engineers understand the trail?

- Push updates. Change a preference and confirm that retrieval favors the new fact without losing historical context.

- Measure latency and token spend. Capture a distribution, not a single average. Confirm that compact memory snippets replace giant context dumps.

- Swap the model. Keep memory constant and try a different large language model. Prompts should barely change. Quality should track the model, not the memory layer.
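For the latency step of the bake off, a small harness is enough to capture a distribution rather than an average. This sketch uses only the Python standard library; `time_calls`, `percentile`, and `latency_report` are hypothetical helper names, the nearest-rank percentile is one common definition among several, and the sample numbers are made up to show how a single slow call distorts the mean.

```python
import math
import statistics
import time


def time_calls(fn, inputs):
    """Time fn(x) for each input and return latencies in milliseconds."""
    samples = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def percentile(samples, p: int):
    """Nearest-rank percentile: the smallest sample with at least p% of values at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(samples_ms):
    return {
        "mean": statistics.mean(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p95": percentile(samples_ms, 95),
        "max": max(samples_ms),
    }


# Made-up latencies: nine fast recalls and one slow outlier.
samples_ms = [120, 130, 125, 140, 900, 135, 128, 132, 138, 127]
report = latency_report(samples_ms)
print(report)  # {'mean': 207.5, 'p50': 130, 'p95': 900, 'max': 900}
```

The mean of 207.5 ms describes no request that actually happened. The median shows the typical experience and the 95th percentile shows the tail your users will notice. In a real trial you would pass the vendor's recall call to `time_calls` with a few hundred representative queries.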

A system that passes this test is ready for production agents and week long workflows. If it fails at provenance or latency, no amount of prompt tuning will save it.

A practical adoption plan for teams

Here is a pragmatic path that platform and product teams can follow without stalling delivery schedules.

- Pick the first container boundary. Start with a single container tag that maps to a user, a team, or a project. Use that boundary to keep permissions and observability simple.

- Define three to five entity types. Resist the urge to model everything. Start with People, Projects, Preferences, Documents, and Events. Add properties only when a question requires them.

- Wire two connectors, not ten. Choose the sources with the highest signal right now. Drive and product logs are a good pair. Add more only after you see answers improving.

- Instrument provenance from day one. Every memory needs origin, timestamp, and confidence. If you cannot render a source timeline, you are building blind.

- Keep prompts simple and short. Route compact, source linked snippets into the model. Avoid template sprawl. Measure token use per answer.

- Build a memory viewer. Create a basic UI that shows entities, edges, and the last ten updates. Make it searchable and editable by admins.

- Run weekly red team reviews. Sample twenty answers, check sources, and file schema or extraction bugs. Treat memory quality like test coverage.
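Several steps in this plan (a small set of entity types, provenance from day one, and a viewer that shows the last ten updates) fit in a few dozen lines. The schema below is an illustrative assumption, not a prescribed format: the singular type names, the `Provenance` and `MemoryRecord` classes, and `recent_updates` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime

# Starting set of entity types; extend only when a question requires it.
ENTITY_TYPES = {"Person", "Project", "Preference", "Document", "Event"}


@dataclass(frozen=True)
class Provenance:
    origin: str            # connector or system the fact came from
    timestamp: datetime
    confidence: float      # 0.0 to 1.0

    def __post_init__(self) -> None:
        if not self.origin:
            raise ValueError("origin is required")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


@dataclass(frozen=True)
class MemoryRecord:
    entity_type: str
    entity_name: str
    fact: str
    provenance: Provenance  # no record can exist without provenance

    def __post_init__(self) -> None:
        if self.entity_type not in ENTITY_TYPES:
            raise ValueError(f"unknown entity type: {self.entity_type}")


def recent_updates(records, limit: int = 10):
    """Source timeline for a basic memory viewer: newest records first."""
    return sorted(records, key=lambda r: r.provenance.timestamp, reverse=True)[:limit]


records = [
    MemoryRecord("Document", f"spec-v{day}", "edited",
                 Provenance("drive", datetime(2025, 10, day), 0.9))
    for day in range(1, 13)
]
timeline = recent_updates(records)
print(len(timeline), [r.entity_name for r in timeline[:3]])
# 10 ['spec-v12', 'spec-v11', 'spec-v10']
```

Rejecting unknown entity types at write time is a deliberate choice in this sketch: it forces teams to propose a schema change instead of quietly adding a new shape of data.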

The bottom line

We are entering a phase where the winning pattern for AI apps resembles classic systems architecture more than prompt alchemy. Put a durable, structured memory layer in the middle. Keep your models modular. Move data in through connectors and keep it honest with governance. Supermemory’s universal memory API makes that architecture accessible to teams that do not want to rebuild graphs and retrieval from scratch. The company’s own materials stress graph plus vector indexing and sub second recall paths, and the public launch on October 6, 2025 underlines the timing and interest.

If you are serious about agents that work for weeks and feel personal on day one, choose where their memory will live. The new control point is not the model. It is what the model remembers, how quickly it can recall it, and whether you can trust the trail it leaves behind.