Figure 03 brings large language model agents home at scale

Figure 03 is a credible home robot built for scale, not just a demo. With Helix vision language action, tactile hands, inductive charging, and a real manufacturing line, agentic AI finally steps into daily life.

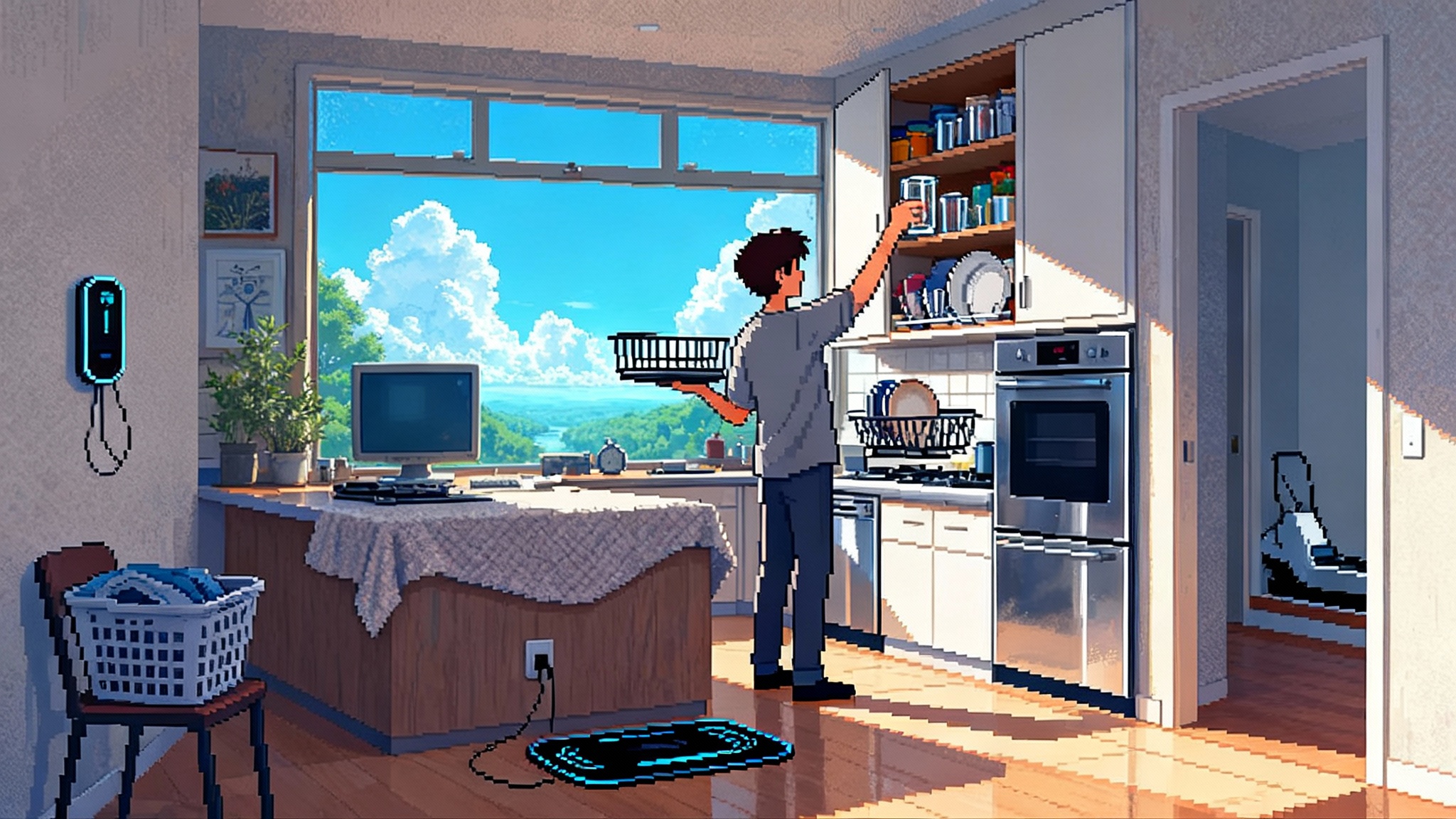

The day agents left the screen

On October 9, 2025, Figure AI introduced its third generation humanoid, Figure 03, and quietly flipped the script on agentic artificial intelligence. For the last two years, we have taught large language models to plan, converse, and even write code on glass rectangles. Today’s launch argues that the next platform is not the browser tab. It is a robot that can unload groceries, fold towels, and hand you a screwdriver without cracking your ceramic mug.

The headline is not just another humanoid reveal. Figure 03 arrives as a credible, home-safe, mass-manufacturable machine. The company redesigned the robot around its vision language action system, Helix; added inductive charging so it tops up by stepping onto a stand; wrapped the body in soft textiles and foam; and built a dedicated manufacturing line called BotQ to scale output. Figure’s detailed Figure 03 announcement covers the specifics: the new hand system, palm cameras, tactile sensing down to three grams, a UN38.3 certified battery, and a production plan that targets 12,000 units per year initially and 100,000 over four years.

Why does that matter? Because embodied intelligence only becomes useful when it is both capable and present. Presence means shipping in volume at a cost and safety profile that fits a home. Capability means reasoning about the messy, cluttered, unforgiving environment that is your kitchen at 6 p.m. Figure 03 is the first product that makes a plausible case for both at once.

If you have been tracking the migration of agentic AI from flashy demos to real deployments, this step mirrors what we saw as robots moved into industrial contexts. The same logic that took agentic systems from proofs of concept to pick-and-pack reliability in factories and distribution centers is now knocking on the front door of the home, a shift we traced in our analysis of agentic AI in warehouses.

What Figure 03 actually changes

Three design moves shift the conversation from demo reels to daily life.

- Vision language action reasoning. Helix is not a chatbot with a camera. It is a system that takes pixels from multiple cameras, processes them at high frequency, and plans actions in the same loop. The new camera architecture doubles frame rate, quarters latency, and widens the field of view, which is like upgrading a driver from foggy glasses to a panoramic dashcam. With more stable perception, the robot can reach into cabinets and keep track of objects even when the main view is occluded.

- Tactile hands that actually feel. Each fingertip adds compliant materials and an in-house tactile sensor that can register forces as small as three grams, roughly the weight of a paperclip. That sensitivity, combined with the palm cameras, gives Helix redundant feedback. If you have ever tried to pull a thin glass from a tight shelf, you know that the difference between success and shattering is a few grams of grip. The new hands are built for that margin.

- A home-safe and self-managing body. Multi-density foam around pinch points, washable textiles, and a battery that meets UN38.3 are not glamorous, but they are the details that move a robot from a lab to a living room. Inductive charging at 2 kilowatts means no cables to trip over and no manual docking. The robot can simply step onto a mat, recharge during lulls, and return to work.

These are not marketing flourishes. They are cause and effect. Better perception enables higher-frequency control. Better hands reduce the failure rate of grasps. Safer packaging reduces the risk and friction of shared spaces. Together, they make an agent that can execute entire chores rather than one-off tricks.

Why this time is different: a credible path to mass manufacture

Every home robot pitch eventually collides with the bill of materials. You cannot scale if your design assumes computer numerical control machining, one-off assemblies, and boutique suppliers. Figure 03 was re-engineered around tooled processes like die casting, injection molding, and stamping. The company also vertically integrated actuators, batteries, sensors, structures, and electronics, then built BotQ to control quality and cadence. The first line has a capacity of 12,000 units per year, the ambition is to deliver 100,000 units over four years, and a manufacturing execution system tracks every subassembly.
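The two production numbers are worth a quick sanity check. Four years at the initial 12,000 units per year yields 48,000 units, so the 100,000 target implies the line has to grow. The sketch below asks what constant year-over-year growth factor would close the gap. Figure has not published a ramp schedule as far as this article knows, so the geometric ramp and the function names are assumptions made purely for illustration.

```python
def cumulative_units(first_year: float, growth: float, years: int) -> float:
    """Total output over `years` if annual output is multiplied by `growth` each year."""
    return sum(first_year * growth ** k for k in range(years))


def required_growth(first_year: float, target: float, years: int,
                    lo: float = 1.0, hi: float = 10.0, tol: float = 1e-6) -> float:
    """Bisect for the smallest growth factor whose cumulative output reaches `target`."""
    if cumulative_units(first_year, lo, years) >= target:
        return lo
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if cumulative_units(first_year, mid, years) < target:
            lo = mid
        else:
            hi = mid
    return hi


flat_output = cumulative_units(12_000, 1.0, 4)   # no growth at all
growth_needed = required_growth(12_000, 100_000, 4)
print(f"Flat output over four years: {flat_output:,.0f} units")
print(f"Growth factor needed per year: {growth_needed:.2f}")
```

Under this assumed ramp, annual output would need to grow by roughly half each year, which is why the tooling and vertical integration choices matter more than the first-year figure.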

If you are a founder or product manager, the lesson is concrete. Real platforms ride supply chains that are boring in the best possible way. Part count falls. Assembly steps shrink. The design eliminates fasteners and hand fits. The result is not just cost down. It is reliability up. When a humanoid can be built, tested, and serviced like a home appliance, you can forecast unit economics instead of wishing for them.

The agent finally leaves the screen

Large language models excel at abstraction. Vision language action systems add the missing channel: continuous control. Instead of answering a prompt about a spilled cereal box, Helix sees the flakes, tracks the broom, modulates pressure on the dustpan, and checks the floor before declaring done. The loop is language for goals, vision for state, and action for policy. It is like moving from map navigation to driving, where you do not just know the route but also feel the wheel.
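The goal, state, and policy loop described above can be sketched in a few lines. This is a toy illustration, not Helix: `ToyFloor`, `Observation`, `POLICIES`, and `run_loop` are hypothetical names, and the "world" is a counter of cereal flakes rather than a camera feed.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Observation:
    """Hypothetical vision summary: how many flakes are still on the floor."""
    flakes_on_floor: int


class ToyFloor:
    """Stand-in for the physical world; each sweep removes up to two flakes."""

    def __init__(self, flakes: int) -> None:
        self.flakes = flakes
        self.sweeps = 0

    def sense(self) -> Observation:
        return Observation(flakes_on_floor=self.flakes)

    def act(self, action: str) -> None:
        if action == "sweep":
            self.flakes = max(0, self.flakes - 2)
            self.sweeps += 1


def sweep_policy(obs: Observation) -> str:
    """Keep sweeping until the observation reports a clean floor."""
    return "sweep" if obs.flakes_on_floor > 0 else "done"


# Language selects the goal; the goal selects the policy.
POLICIES: Dict[str, Callable[[Observation], str]] = {"clean the floor": sweep_policy}


def run_loop(goal: str, sense: Callable[[], Observation],
             act: Callable[[str], None], max_steps: int = 20) -> bool:
    """Sense, decide, act, and re-check state before declaring the goal done."""
    policy = POLICIES[goal]
    for _ in range(max_steps):
        obs = sense()            # vision for state
        action = policy(obs)     # action for policy
        if action == "done":
            return True
        act(action)
    return False                 # step budget exhausted without confirming success


floor = ToyFloor(flakes=5)
finished = run_loop("clean the floor", floor.sense, floor.act)
print(finished, floor.sweeps)
```

The point of the structure is the final check: the loop only reports success after a fresh observation confirms it, rather than assuming the last action worked.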

Two implementation details matter for developers:

- High-bandwidth fleet learning. The robot offloads data over a 10 gigabit per second millimeter wave link. That means terabytes of pixels and proprioception can flow to training clusters without a manual cable dance. The faster the loop, the faster policies improve.

- Occlusion-resistant manipulation. Palm cameras plus tactile sensing let the robot keep a lock on objects when the main cameras are blocked. That enables tasks in tight spaces, a precondition for real homes.
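To put the offload figure in perspective, here is a back-of-the-envelope calculation of how long one terabyte takes at 10 gigabits per second. The `efficiency` parameter is an assumption added for illustration, since real wireless links rarely sustain their nominal rate; the article's source gives only the headline number.

```python
def offload_seconds(data_bytes: float, link_bits_per_s: float,
                    efficiency: float = 1.0) -> float:
    """Transfer time for `data_bytes` over a link, scaled by an assumed efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return data_bytes * 8 / (link_bits_per_s * efficiency)


TERABYTE = 1e12   # bytes, decimal convention
LINK = 10e9       # 10 gigabits per second, nominal

ideal = offload_seconds(TERABYTE, LINK)
degraded = offload_seconds(TERABYTE, LINK, efficiency=0.5)  # hypothetical 50% throughput
print(f"Ideal: {ideal / 60:.1f} min, at half throughput: {degraded / 60:.1f} min")
```

Even with the link running at half its nominal rate, a terabyte moves in under half an hour, which fits comfortably inside a charging lull.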

What unlocks for developers

A credible, home-safe platform changes not just what tasks are possible, but how developers will build and ship them. Here is a playbook.

- Skills as products. Treat a skill as a package that binds goals, constraints, and telemetry signatures. For example, a dishwasher load skill might include a language template for preferences, a grasp library tuned for slippery plates, and a success check that counts racks and verifies door latch state. Publish the skill to a marketplace scoped by safety and versioning. Charge per successful completion, not per minute, and include a fallback path to human-in-the-loop support for edge cases.

- On-robot tooling. Build tooling that runs on the robot to catch issues that only appear in live environments. Think of a developer overlay that renders contact forces, grasp likelihood, and action confidence in augmented reality. Add a one-tap capture that bundles sensor windows, policy state, and logs into a reproducible bug report. Make diffing runs easy so you can tell if a new policy regressed plate stacking angles.

- Data loops by design. Use the fleet offload to define precise labels that come from outcomes, not from annotations. A failed towel fold leaves a certain texture pattern and duration signature. A successful fold leaves another. Encode those signatures as automatic rewards. Then run nightly retrains that push incremental updates. Publish skill version notes like any modern software release, with clear rollback.

- Simulation where it helps, reality where it matters. Sim is still the fastest way to explore edge cases, but reality creates the hard labels that improve grasp stability and recovery strategies. Build sim-to-real pipelines that assume the last 10 percent of progress will come from messy kitchens, not perfect physics.

- Safety checks as code. Put guardrails into the skill itself. Define maximum forces, joint limits near humans, and safe stances when confidence drops. Treat these as unit tests that run before a skill is accepted into a marketplace and as live monitors that can halt execution.

- Privacy by default. Home robots will capture audio and video. Scope retention to the minimum needed to improve a skill, run opt-in policies per task, and integrate clear local deletion controls. Make privacy state as visible as battery percentage. If you build for trust, you will earn it.
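As one way to picture "skills as products" and "safety checks as code" together, the sketch below packages a skill with its limits, runs acceptance checks before publication, and applies the same limits as a live monitor. No skill marketplace or SDK has been published that this article can point to, so every name here (`Skill`, `SafetyLimits`, `acceptance_problems`, `monitor_step`) and every threshold is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SafetyLimits:
    max_force_newtons: float   # hard ceiling on contact force
    min_confidence: float      # below this, retreat to a safe stance


@dataclass(frozen=True)
class Skill:
    name: str
    version: str
    goal_template: str         # language template filled with user preferences
    limits: SafetyLimits


def acceptance_problems(skill: Skill) -> List[str]:
    """Pre-publication checks; an empty list means the skill may be accepted."""
    problems = []
    if skill.limits.max_force_newtons <= 0:
        problems.append("max_force_newtons must be positive")
    if not 0.0 < skill.limits.min_confidence <= 1.0:
        problems.append("min_confidence must be in (0, 1]")
    if not skill.version:
        problems.append("version is required for rollback")
    return problems


def monitor_step(skill: Skill, force_newtons: float, confidence: float) -> str:
    """Live guardrail: decide whether execution may continue this control step."""
    if force_newtons > skill.limits.max_force_newtons:
        return "halt"
    if confidence < skill.limits.min_confidence:
        return "safe_stance"
    return "continue"


# Illustrative values only; real limits would come from testing and certification.
dishwasher = Skill(
    name="dishwasher_load",
    version="1.2.0",
    goal_template="Load the dishwasher, {preference}",
    limits=SafetyLimits(max_force_newtons=15.0, min_confidence=0.6),
)
print(acceptance_problems(dishwasher), monitor_step(dishwasher, 4.0, 0.9))
```

Keeping the limits inside the skill object means the acceptance test and the runtime monitor read the same numbers, so they cannot drift apart between review and deployment.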

These practices echo broader shifts in agent tooling, where memory and environment design have become central. For perspective on how memory shapes reliability and control, see our take on Supermemory as AI's control point. And for a deeper look at how curated environments become a moat for learning, our analysis of how an environments hub makes data the moat is a useful companion.
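The "data loops by design" idea in the playbook, where outcomes rather than annotators produce the labels, can be sketched as a reward function over an outcome signature. The fields `duration_s` and `wrinkle_score` and both thresholds are assumptions chosen for the towel-fold example; a real signature would be derived from whatever the sensors actually report.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FoldOutcome:
    duration_s: float      # how long the fold took
    wrinkle_score: float   # hypothetical texture metric: 0 is smooth, 1 is crumpled


def auto_reward(outcome: FoldOutcome, max_duration_s: float = 60.0,
                max_wrinkle: float = 0.3) -> float:
    """Binary reward derived from the outcome signature, with no human labeling."""
    ok = outcome.duration_s <= max_duration_s and outcome.wrinkle_score <= max_wrinkle
    return 1.0 if ok else 0.0


def label_episodes(outcomes: List[FoldOutcome]) -> List[Tuple[FoldOutcome, float]]:
    """Attach a reward to every episode so it can feed a nightly retrain."""
    return [(o, auto_reward(o)) for o in outcomes]


def success_rate(labeled: List[Tuple[FoldOutcome, float]]) -> float:
    """Share of episodes that earned a reward; a natural number for release notes."""
    if not labeled:
        return 0.0
    return sum(reward for _, reward in labeled) / len(labeled)


night = [
    FoldOutcome(duration_s=42.0, wrinkle_score=0.1),   # clean fold
    FoldOutcome(duration_s=95.0, wrinkle_score=0.2),   # too slow
    FoldOutcome(duration_s=38.0, wrinkle_score=0.7),   # crumpled
    FoldOutcome(duration_s=50.0, wrinkle_score=0.25),  # clean fold
]
labeled = label_episodes(night)
print(f"Nightly success rate: {success_rate(labeled):.0%}")
```

A binary reward is the simplest choice; a graded reward would carry more signal but also makes thresholds harder to explain in a version note.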

New moats: supply chain plus deployment data

The moats in agentic robotics are shifting from closed models to the hard things that compound.

- The supply chain moat. BotQ is not just a factory. It is a process that creates predictable lead times, traceability, and an upgrade path for each subassembly. Whoever owns the schedule owns the learning rate, because more robots in the field generate more data. That is why vertical integration across actuators, sensors, batteries, and electronics matters. Each change rolls forward through the line without waiting for an external vendor’s quarter.

- The deployment data moat. Learning in the wild is the difference between a good demo and a dependable product. Homes generate long-tail failures at astonishing speed. A cereal box stuck under a chair. A dog tail in the sweep path. Lighting changes at dusk. Every resolved failure widens the range of situations the policy has learned from. The company that can ingest, filter, and learn from that stream will improve faster than rivals, and that advantage accelerates with every install.

- The safety certification moat. A battery that passes UN38.3 and a body that is designed to reduce pinch risk are not academic. They unlock distribution. Retailers, insurers, and regulators all ask the same question: can this share space with people? The team that answers yes with documentation and history ships more units and learns faster.

Why startups now set the pace

For a decade, hyperscalers defined the speed of artificial intelligence with model releases and compute leaps. In embodied intelligence, the bottleneck is less about scaling parameters and more about closing the loop between design, manufacturing, and deployment. That rewards organizations that can change hardware weekly, push software nightly, and take manufacturing risk early.

Figure spent 2025 cutting dependencies. In February it publicly ended its collaboration with OpenAI to focus on in-house models, a move that signaled confidence in its own vision language action stack, as reported at the time. The company then turned around and built BotQ while reworking the entire robot for tooled production. Startups can make these cross-stack moves because they are small enough to be opinionated and integrated.

Meanwhile, hyperscalers are indeed entering robotics with model variants and partner programs. That will matter for training and tooling. But the teams that own the last mile into the home, plus the line that builds the machines, will set the tempo. Shipping robots is not a cloud deployment. It is chemistry, logistics, certification, and service. Startups that master those will have a pace advantage that even large data centers cannot erase.

What to build now

If you are a developer, product owner, or founder, here are specific actions to take while the platform crystallizes:

- Pick a household vertical where success is legible. Laundry. Dishes. Pantry restocking. Build for a clear definition of done with measurable success rates. Avoid vague assistants that do a little of everything and nothing completely.

- Design with hand-first thinking. Grasping is the rate limiter. Optimize the first five seconds of every task for grasp quality. Use the palm camera and tactile cues to detect incipient slip, then switch to compliant control. Add self-checks that confirm object state before the next subtask.

- Instrument your skill. Log force profiles, grasp success on the first attempt, occlusion percentage, and recovery time. Publish these metrics. Your customers will buy reliability, not poetry.

- Build real-time triage. Create a human-in-the-loop console that can accept a handoff in under three seconds when confidence drops. Record the handoff as labeled data for future learning.

- Plan for textiles. Home-safe robots will have soft covers that change contact dynamics. Calibrate your grasps and postures with the soft goods installed, not just with bare frames.

- Respect the home. Implement local processing for sensitive audio, and offer per-room privacy modes. If a household opts out of data sharing, your skill should still run well on-device. That credibility earns opt-ins later.

- Write your change logs for robots. Each version should list what improved, what regressed, how it was validated, and how to roll back safely. Treat skill releases with the same rigor you would apply to flight software.

- Design your customer success motion. These are living machines. Offer service level agreements for uptime, replacement policies for wear items, and a cadence for skill updates that aligns with household routines. Build trust through predictable support, not surprise magic.
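Two items from the list above, instrumenting a skill and triggering a human handoff when confidence drops, lend themselves to a short sketch. The record layout, the three-sample window, and the 0.4 threshold are all assumptions for illustration; the right values would come from field data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Sequence


@dataclass
class GraspAttempt:
    task_id: str
    attempt_index: int   # 0 means the first attempt at this grasp
    succeeded: bool


def first_attempt_success_rate(attempts: List[GraspAttempt]) -> float:
    """Fraction of first attempts that succeeded; retries are ignored."""
    firsts = [a for a in attempts if a.attempt_index == 0]
    if not firsts:
        return 0.0
    return sum(a.succeeded for a in firsts) / len(firsts)


def needs_handoff(confidences: Sequence[float], threshold: float = 0.4,
                  window: int = 3) -> bool:
    """Request a human when the mean of the last `window` readings is below threshold."""
    recent = list(confidences)[-window:]
    return len(recent) == window and mean(recent) < threshold


log = [
    GraspAttempt("plate-1", 0, True),
    GraspAttempt("plate-2", 0, False),
    GraspAttempt("plate-2", 1, True),    # recovered on retry; not a first-attempt success
    GraspAttempt("glass-1", 0, True),
    GraspAttempt("glass-2", 0, False),
]
print(f"First-attempt grasp success: {first_attempt_success_rate(log):.0%}")
print("Handoff:", needs_handoff([0.9, 0.5, 0.3, 0.3]))
```

Averaging over a short window instead of reacting to a single low reading avoids paging a human for a one-frame glitch, at the cost of a slightly slower response.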

The bigger picture: from apps to appliances

We may look back at today as the moment agentic AI moved from software to appliance. In the 2000s, we wrote apps for phones because billions of phones already existed. In the late 2020s, we will write skills for home robots because the robots will finally arrive in numbers that make markets. A robot that can do dishes one day can learn pet care the next, then graduate to basic home maintenance. Each new skill multiplies the value of the platform, just as each app did for smartphones.

Do not mistake this for a copy of the mobile playbook. The constraints are different. Skills must be safe by construction, measurable in outcome, and respectful of the home. But the flywheel is familiar. More robots mean more data. More data means better skills. Better skills mean more robots. BotQ turns the crank, Helix learns from the spin, and households get increasingly capable help.

A smart end to a long beginning

Figure 03 will not solve home life in one release. The first deployments will be bounded, the price will start high, and early users will discover failure modes no lab predicted. That is fine. What matters is that the platform now has the ingredients to compound. A reasoning stack tied to real perception and action. Hands that can feel mistakes before they break things. A charging and safety story that respects homes. And a production line that can grow from thousands to tens of thousands to more.

The story of artificial intelligence has been trapped behind glass. Today a robot took a step over that line. If you build software, start thinking in skills, sensors, and service calls. If you invest, evaluate supply chains and learning loops, not just parameter counts. If you are a consumer, expect the first truly helpful embodied agent to arrive not as a toy but as an appliance that learns. We did not just get a new demo. We got a foundation.