LiveKit Inference makes voice agents real with one key

LiveKit Inference promises a single key and capacity plan for speech to text, large language models, and text to speech. Here is how it changes production voice agents, what to test before launch, and which tradeoffs matter most.

Breaking: one key for the entire voice stack

On October 1, 2025, LiveKit announced Inference, a unified low latency model gateway that puts speech to text, large language models, and text to speech behind a single LiveKit key and capacity plan. In LiveKit’s framing, you select models from a menu of partners, call them through one interface, and manage concurrency and billing in one place. The company also emphasized global co-location, provisioned capacity, and a roadmap for dynamic routing that can steer around slow regions or providers. The official details are in LiveKit's October 1 Inference launch announcement.

If you build voice agents, this is the first offering that feels like a platform move rather than a tool or a wrapper. It turns the messy parts of production into a single operational surface and invites competition among models behind the scenes.

What LiveKit Inference actually is

LiveKit Inference is not a single model. It is a unified interface that lets you pair a recognizer, a reasoning model, and a voice, then swap any of them with a model string change. The big change is operational. Instead of juggling keys, quotas, and dashboards across multiple vendors, you provision capacity once, monitor limits in a single dashboard, and route calls through consistent methods and events.
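
To make the model string idea concrete, here is a minimal Python sketch. The `VoicePipeline` class and the three model strings are hypothetical placeholders, not LiveKit's API or real catalog entries. The only point is that a swap touches one field and nothing else.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VoicePipeline:
    """Hypothetical config: one model string per pipeline stage."""
    stt: str  # speech to text model string
    llm: str  # reasoning model string
    tts: str  # text to speech model string


# Placeholder strings for illustration only, not real catalog entries.
baseline = VoicePipeline(
    stt="stt-provider-a/model-1",
    llm="llm-provider-b/model-2",
    tts="tts-provider-c/voice-3",
)

# Swapping the voice is a one-field change; the other stages stay untouched.
candidate = replace(baseline, tts="tts-provider-d/voice-7")
```

Keeping the configuration immutable means every experiment is a new value you can log and compare, rather than a mutation scattered through agent code.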

A quick mental model

Think of Inference as a smart power strip for models. You plug your agent into one end, choose which outlets on the other side you want to feed it, and if an outlet flickers, you move the plug without rewiring your house. That swapability is the point. It reduces the friction of experimentation and helps production teams contain risk as underlying providers evolve.

Why this matters for production agents

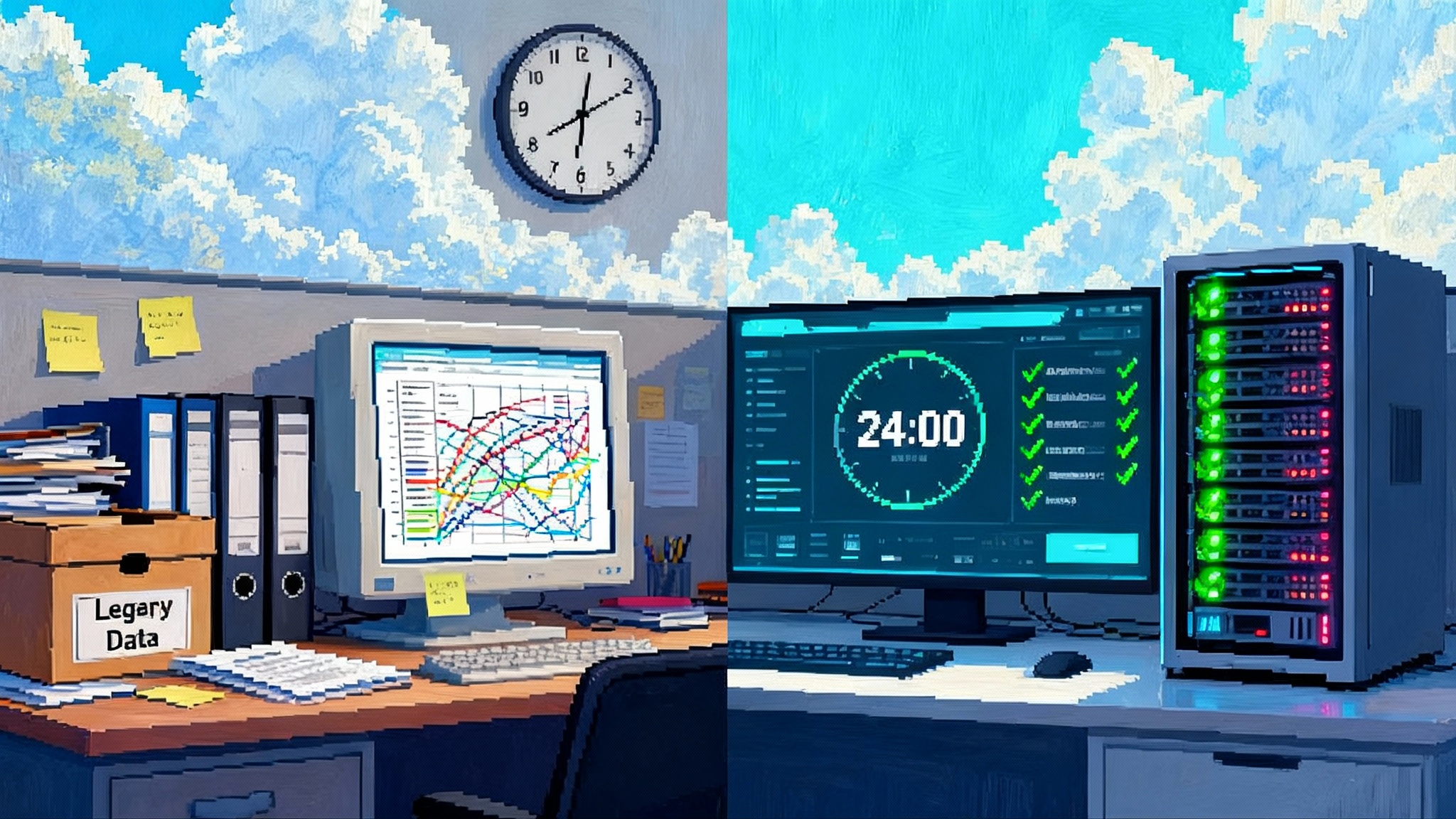

Most real time voice agents are pipelines. Audio arrives over WebRTC or a phone call. Speech to text transcribes in real time. The transcript streams into a large language model that reasons over state and tools, then emits text. Text to speech converts that text back to audio. Each link often comes from a different company, with its own authentication, rate limits, failure modes, and version cadence. Swapping a model can force code path changes, new error handling, and a rewrite of observability. Multiply that by three categories of models and brittleness grows fast.

LiveKit Inference attacks three recurring pain points:

- Access and setup. One key and one billing relationship instead of several. Teams avoid the first week of vendor setup for each experiment and can try more voices or transcribers without procurement friction.

- Concurrency and capacity. Limits are tracked and enforced at the LiveKit layer with a unified view. You can see how many concurrent recognizers are active, how many text to speech generations are live, and how many tokens per minute your large language model sessions are consuming. Capacity planning stops being guesswork across four dashboards.

- Routing and latency. LiveKit already moves media around the world for low latency calling. With provisioned capacity and planned dynamic routing on the Inference side, you get a path to more predictable response times. If a provider’s public endpoint is congested, LiveKit can use dedicated capacity and, soon, reroute to a different region or provider.

These remove common blockers between a promising demo and a reliable agent. Startups can focus on memory, guardrails, and product surfaces. Mature teams can align operations around one control plane rather than writing glue code for provider churn.

The contrast with single model stacks

OpenAI’s Realtime API takes a different path. It compresses the pipeline into one model that handles recognition, reasoning, and speech. In August 2025, OpenAI announced general availability with additions like carrier grade phone calling via SIP and support for the Model Context Protocol. That approach reduces moving parts and often reduces latency, because there is no hop between separate services. For details, see OpenAI's Realtime API announcement.

LiveKit Inference goes in the opposite direction. It embraces the pipeline but makes it feel like a product, not a patchwork. The benefit is flexibility. You can pair the fastest transcriber with the most controllable reasoning model and the most on brand voice, then adjust those choices as models improve without rebuilding your stack. In practice, both philosophies will coexist. Your direction should hinge on requirements such as voice control, compliance boundaries, and the cost structure of long sessions.

Under the hood: what is new versus well known

Some pieces are familiar. LiveKit has long run media infrastructure across a global backbone. Co-location, which places your agent runtime near the inference service, is a proven way to shave round trips. What is new is how those capabilities now front multiple model providers as one addressable service, plus the operational niceties real teams need:

- Model strings instead of per provider SDKs. Lower switch costs and less code sprawl.

- Unified billing tied to pay as you go rates. Easier accounting and cleaner cost per session visibility.

- Provisioned capacity for predictable tails. Dedicated lanes bypass congested public endpoints at peak times.

- Dynamic routing on the roadmap. The service can steer traffic across regions or providers when performance dips.

The combination matters. Wiring up a new provider is easy. Promising a consistent operational envelope around a changing roster of vendors is the hard part.

The platform claim

Why call Inference a platform move and not a feature drop?

- It binds model choice to infrastructure guarantees. Co-location plus provisioned capacity is an infrastructure promise. When you can hold that line while models rotate beneath, you earn a durable place in the stack.

- It reduces supplier specific failure modes. When one provider slows down, the platform can route around it. That is a property of a platform, not a library.

- It creates a comparable surface. If every provider is reachable through the same interface and billed through one meter, customers can evaluate quality, latency, and cost with fewer confounders. Platforms create markets by making swaps cheap.

If LiveKit executes on dynamic routing and keeps adding partners, Inference becomes a clearing house for the best model at the moment. That is what production teams want in 2026: a model agnostic infrastructure layer where you select quality and price and let the system route calls accordingly.

Tradeoffs builders should measure

No consolidation is free. Compare Inference to direct integrations and to alternatives such as Daily, Retell, Vapi, Twilio, or OpenAI Realtime across five dimensions.

- Pricing clarity. LiveKit says provider calls are billed at provider pay as you go prices, collected on a single invoice. Helpful for accounting, but it can mask small markups and forgo negotiated discounts you might secure directly. Action: request explicit rate tables for every model you plan to use and confirm how any LiveKit credits apply to third party usage.

- Control plane lock in. Your agents will depend on LiveKit for routing, auth, and observability. Moving the control plane later will touch more than one module. Action: keep thin interfaces internally. Wrap the Inference client with your own adapter at the agent runtime boundary and log everything you would need to swap.

- Reliability dependencies. Co-location and reserved capacity reduce tail latency, but they add a shared dependency. Outages in LiveKit’s control plane will affect multiple providers at once. Action: demand public status pages, ask for historical end to end SLOs for voice sessions, and plan visible failover messages if calls degrade.

- Observability depth. A unified dashboard is convenient, but deep debugging sometimes needs raw provider signals like token timing from a reasoning model or mel frame synthesis timing from a voice. Action: confirm you can export provider specific logs and that session level traces can be correlated with your own telemetry.

- Feature cadence. Partners ship fast. A new voice style, diarization mode, or barge in detector can matter. Action: ask how LiveKit propagates cutting edge options and whether provider specific parameters can pass through when needed.

None of these are dealbreakers. They are the ordinary costs of any platform. The value proposition is more time spent on agent behavior and customer experience, less time on integration glue.

The competitive frame in late 2025

- OpenAI Realtime is a single model path that emphasizes speech quality and carrier features like phone calling. It can be simpler to operate, especially if you are already all in on OpenAI. The tradeoff is less freedom to combine best of breed components.

- Agent platforms like Daily, Retell, and Vapi ship opinionated defaults that get teams to a demo quickly. The question is how easily you can change vendors, enforce concurrency budgets, or negotiate provisioned capacity per provider.

- Telephony incumbents like Twilio remain the workhorse for numbers, compliance, and contact center workflows. The challenge is layering modern models without rebuilding your own mini Inference layer.

The real question is not who has more features. It is who gives you the cleanest seam for fast swaps as model quality shifts month by month.

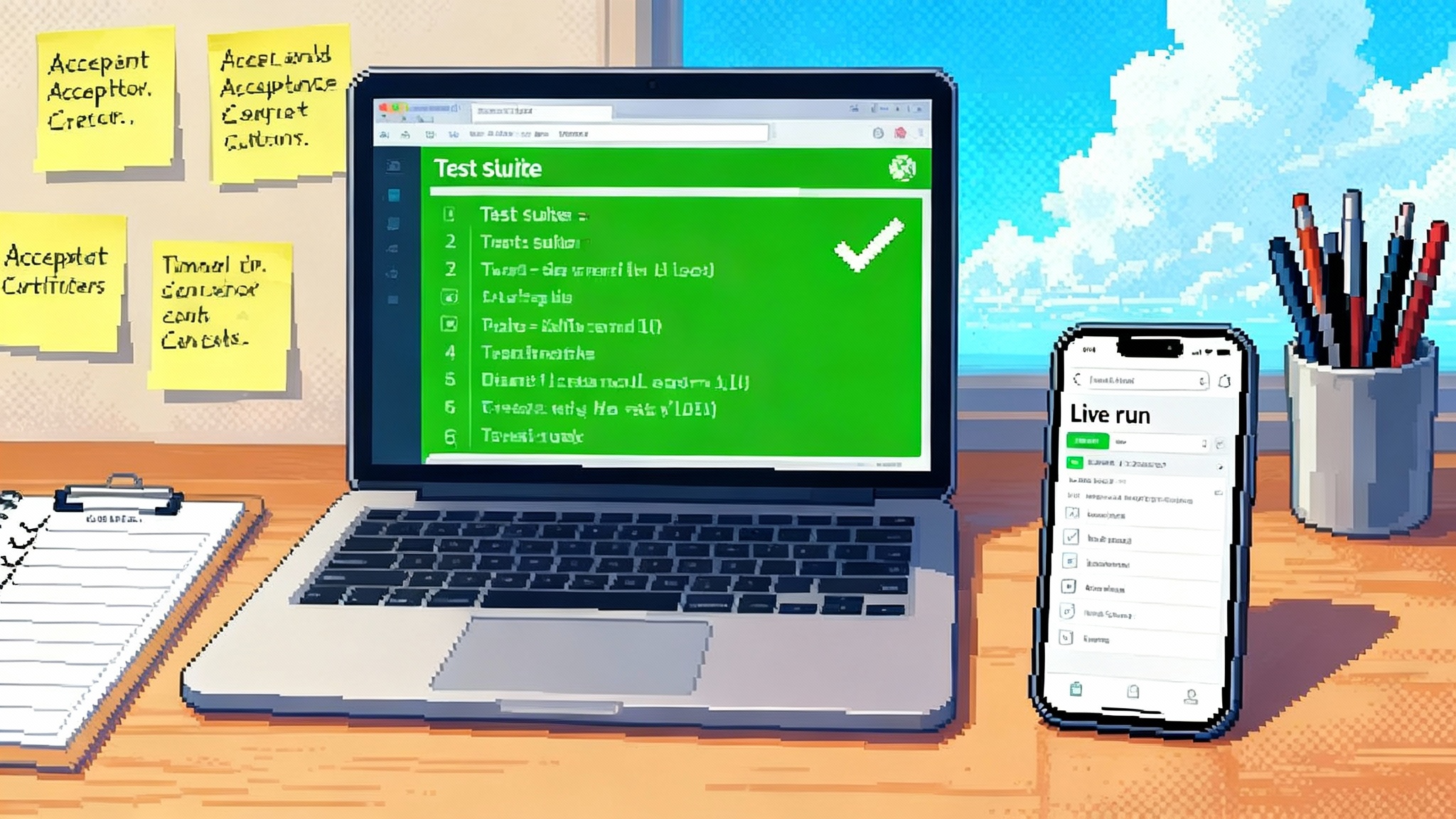

A pragmatic test plan you can run this week

Treat Inference the way you would treat a new database before adopting it. Prove swapability, measure latency under load, validate failover behavior, and lock down costs.

1) Swap providers mid conversation

- Goal. Switch the recognizer or the voice while the call is live, without a hard edge.

- Setup. Build a harness with a command channel. Start a call with speech to text A and text to speech X. At 10 seconds, switch to speech to text B and text to speech Y by changing model strings. Keep the reasoning model fixed to isolate variables.

- Metrics. No silence gaps longer than 300 ms, no audible artifacts beyond a single frame at the switch, no loss of turn detection. Log timestamps at audio ingress, first partial transcript, first token from the reasoning model, first audio packet out. Plot deltas before and after the swap.
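
The metrics for this test can be computed from the logged timestamps with a few lines of Python. This is a sketch under stated assumptions: `TurnTimestamps`, `stage_deltas`, and `silence_gaps` are hypothetical helper names, timestamps are in milliseconds, outbound audio is sent in 20 ms frames, and the packet list covers one continuous agent utterance that spans the swap.

```python
from dataclasses import dataclass

MAX_SILENCE_GAP_MS = 300.0  # threshold taken from the metrics above


@dataclass
class TurnTimestamps:
    """Wall clock timestamps for one turn, in milliseconds."""
    audio_ingress: float
    first_partial_transcript: float
    first_llm_token: float
    first_audio_out: float


def stage_deltas(t: TurnTimestamps) -> dict[str, float]:
    """Time spent in each stage, plus the end to end total."""
    return {
        "stt_ms": t.first_partial_transcript - t.audio_ingress,
        "llm_ms": t.first_llm_token - t.first_partial_transcript,
        "tts_ms": t.first_audio_out - t.first_llm_token,
        "total_ms": t.first_audio_out - t.audio_ingress,
    }


def silence_gaps(packet_times_ms: list[float], frame_ms: float = 20.0) -> list[float]:
    """Return silences between consecutive packets that exceed the threshold.

    Silence is the spacing between packets minus the audio one frame carries.
    """
    gaps = []
    for prev, nxt in zip(packet_times_ms, packet_times_ms[1:]):
        silence = (nxt - prev) - frame_ms
        if silence > MAX_SILENCE_GAP_MS:
            gaps.append(silence)
    return gaps
```

Run `stage_deltas` on turns before and after the switch and plot the two sets side by side. A non-empty result from `silence_gaps` fails the test.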

2) Measure end to end latency under load

- Goal. Quantify perceived latency from speech start to first audible response and to stabilized response across realistic concurrency.

- Setup. Use a traffic generator to play scripted utterances with barge in moments into 1, 10, 100, and 500 concurrent sessions. For each session record wall clock timestamps for speech start, first partial transcript, last transcript chunk, first model token, first audio packet, and last audio packet. Run tests in two regions that mirror your user base.

- Targets. Median time to first audio under 400 ms at 100 sessions and 95th percentile under 700 ms. Adjust targets to fit your product. Track dropped sessions and retransmissions. Compare against a direct integration baseline.
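
Checking the targets is simple once each session reports its time to first audio. The sketch below uses only Python's standard library; `latency_report` is a hypothetical helper, and the default thresholds are the 400 ms and 700 ms targets stated above.

```python
import statistics


def latency_report(
    ttfa_ms: list[float],
    median_target_ms: float = 400.0,
    p95_target_ms: float = 700.0,
) -> dict:
    """Summarize time to first audio samples against the stated targets."""
    if len(ttfa_ms) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    median = statistics.median(ttfa_ms)
    # With n=20 there are 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(ttfa_ms, n=20, method="inclusive")[-1]
    return {
        "median_ms": median,
        "p95_ms": p95,
        "meets_targets": median < median_target_ms and p95 < p95_target_ms,
    }
```

Produce one report per concurrency level and per region, then a second set for the direct integration baseline, so the comparison is like for like.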

3) Failover routing

- Goal. Keep the conversation going when a provider slows or errors.

- Setup. In staging, inject failure by rate limiting or returning errors from the active provider. If LiveKit routing handles this automatically in your region, verify. If not yet active for your stack, implement a manual fallback policy that swaps model strings after error thresholds.

- Success. No user facing error. The system switches within one second of detecting a slowdown or error spike. Session logs clearly mark the switch for postmortems.
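
If automatic routing is not yet active for your stack, the manual fallback policy described in the setup could look something like this. It is a sketch, not LiveKit behavior: `FallbackPolicy` is a hypothetical class, the model strings are whatever you pass in, and it counts errors in a sliding window of recent calls rather than measuring elapsed time.

```python
from collections import deque


class FallbackPolicy:
    """Swap to the next model string when recent errors cross a threshold."""

    def __init__(self, models: list[str], window: int = 20, max_errors: int = 5):
        if not models:
            raise ValueError("at least one model string is required")
        self.models = list(models)
        self.active_index = 0
        self.max_errors = max_errors
        self.recent: deque[bool] = deque(maxlen=window)  # True marks an error
        self.switch_log: list[tuple[str, str]] = []  # (from, to) for postmortems

    @property
    def active(self) -> str:
        return self.models[self.active_index]

    def record(self, ok: bool) -> str:
        """Record one call outcome and return the model string to use next."""
        self.recent.append(not ok)
        has_fallback = self.active_index + 1 < len(self.models)
        if sum(self.recent) >= self.max_errors and has_fallback:
            previous = self.active
            self.active_index += 1
            self.recent.clear()  # start fresh for the new provider
            self.switch_log.append((previous, self.active))
        return self.active
```

To meet the one second goal you would also want to treat slow responses as errors, for example by calling `record(False)` whenever a stage exceeds its latency budget.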

4) Cost telemetry and guardrails

- Goal. Predict spend before you scale traffic.

- Setup. Turn on cost per session logging. Compute cost by component for 10 minute sessions at your expected concurrency. Set soft and hard budget alerts in the LiveKit dashboard or in your own telemetry.

- Success. Cost per session estimates within five percent of the invoice for the same traffic pattern.
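
A cost per session estimate by component can be sketched as follows. The billing units here (speech to text per audio minute, tokens per thousand, text to speech per thousand characters) are assumptions for illustration, and `RateCard` values must come from the rate table you are actually quoted. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RateCard:
    """Unit prices in USD. Placeholder structure, not published rates."""
    stt_per_minute: float
    llm_per_1k_input_tokens: float
    llm_per_1k_output_tokens: float
    tts_per_1k_characters: float


@dataclass
class SessionUsage:
    stt_audio_minutes: float
    llm_input_tokens: int
    llm_output_tokens: int
    tts_characters: int


def session_cost(usage: SessionUsage, rates: RateCard) -> dict[str, float]:
    """Break one session's estimated cost down by pipeline component."""
    stt = usage.stt_audio_minutes * rates.stt_per_minute
    llm = (
        usage.llm_input_tokens / 1000 * rates.llm_per_1k_input_tokens
        + usage.llm_output_tokens / 1000 * rates.llm_per_1k_output_tokens
    )
    tts = usage.tts_characters / 1000 * rates.tts_per_1k_characters
    return {"stt": stt, "llm": llm, "tts": tts, "total": stt + llm + tts}


def within_tolerance(estimate: float, invoiced: float, tolerance: float = 0.05) -> bool:
    """True when the estimate is within the given fraction of the invoice."""
    return abs(estimate - invoiced) <= tolerance * invoiced
```

Sum the per session totals for a test window and pass the result to `within_tolerance` alongside the invoiced amount for the same traffic.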

5) Human evaluation for voice quality

- Goal. Compare subjective quality across voices and providers without bias.

- Setup. Run a double blind test with recordings from your top 20 intents across three providers and three voices. Randomize order. Collect mean opinion scores on clarity, warmth, and brand fit.

- Outcome. A single provider and voice wins by a significant margin, or you codify a routing rule that selects different voices for different intents.
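
The blinding and scoring steps can be kept honest with a small script. In this sketch, `blind_labels` and `mean_opinion_scores` are hypothetical helpers, scores are assumed to be on a 1 to 5 scale, and no significance test is included, so deciding what counts as a significant margin is left to you.

```python
import random
import statistics
from collections import defaultdict


def blind_labels(variants: list[str], seed: int) -> dict[str, str]:
    """Map opaque sample labels to provider and voice variants in random order."""
    shuffled = list(variants)
    random.Random(seed).shuffle(shuffled)
    return {f"sample-{i + 1}": variant for i, variant in enumerate(shuffled)}


def mean_opinion_scores(
    ratings: list[tuple[str, str, int]],
) -> dict[str, dict[str, float]]:
    """Average (label, criterion, score) ratings per label and criterion."""
    buckets: dict[str, dict[str, list[int]]] = defaultdict(lambda: defaultdict(list))
    for label, criterion, score in ratings:
        buckets[label][criterion].append(score)
    return {
        label: {crit: statistics.mean(scores) for crit, scores in by_crit.items()}
        for label, by_crit in buckets.items()
    }
```

Raters only ever see the `sample-N` labels. Keep the mapping with someone who does not run the listening sessions, and join it back to the scores after collection.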

Integration patterns that age well

- Wrap the client. Build a thin adapter that isolates Inference calls. Keep provider names, model strings, and vendor specific parameters out of business logic.

- Centralize event schemas. Define a canonical event for transcript partials, model tokens, and audio packets. Map vendor events into your schema at the edge.

- Typed errors and retries. Standardize error categories across providers, set bounded retries, and emit metrics that correlate error spikes with routing decisions.

- Session budgets. Enforce per user and per team budgets for tokens, generation length, and session duration. Surface soft warnings in product before you hit hard caps.
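
Two of these patterns, canonical events and typed errors with bounded retries, fit in one short sketch. Everything named here is hypothetical: the error categories, the `TranscriptPartial` schema, and the vendor payload keys `transcript` and `final` are invented for illustration and do not describe any provider's real events.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, TypeVar

T = TypeVar("T")


class ErrorCategory(Enum):
    RATE_LIMITED = "rate_limited"
    TIMEOUT = "timeout"
    INVALID_REQUEST = "invalid_request"


# Only transient categories are worth retrying.
RETRYABLE = {ErrorCategory.RATE_LIMITED, ErrorCategory.TIMEOUT}


class InferenceError(Exception):
    """Canonical error raised by the adapter, whatever the vendor returned."""

    def __init__(self, category: ErrorCategory, message: str = ""):
        super().__init__(message)
        self.category = category


@dataclass(frozen=True)
class TranscriptPartial:
    """Canonical transcript event consumed by business logic."""
    session_id: str
    text: str
    is_final: bool


def to_transcript_partial(session_id: str, vendor_event: dict) -> TranscriptPartial:
    # The keys "transcript" and "final" are made up for this example.
    return TranscriptPartial(
        session_id=session_id,
        text=vendor_event["transcript"],
        is_final=bool(vendor_event.get("final", False)),
    )


def call_with_retries(fn: Callable[[], T], max_attempts: int = 3) -> T:
    """Call fn, retrying transient errors up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except InferenceError as err:
            if err.category not in RETRYABLE or attempt == max_attempts:
                raise
    raise ValueError("max_attempts must be at least 1")
```

A production version would add backoff between attempts and emit a metric on every retry. The sketch omits both to keep the control flow visible.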

These patterns mirror what we have seen as teams move from demos to durable operations in other agentic domains, including our coverage of watch and learn agents rewriting operations and of the shift toward Agent 3 software by prompt.

Pricing and procurement checklist

- Request a rate card that lists every partner model you plan to use and any LiveKit surcharge or minimums.

- Confirm how your LiveKit plan credits apply to third party calls and whether provisioned capacity has separate terms.

- Ask for historical latency and error distributions by region for end to end sessions, not just per provider.

- Verify data retention defaults, redaction options, and export paths for transcripts and audio. Map those to your compliance requirements.

- Clarify pass through of new provider features and timelines for exposing advanced parameters.

Where this fits in the broader market

Across the ecosystem, teams are converging on control planes that abstract volatile components. We have covered similar patterns with Dialpad Agentic AI in contact centers, and in enterprise software where orchestration layers make it easier to swap capabilities without rewriting the product. Inference applies that pattern to real time voice, where latency budgets are tight and session length drives cost.

What to do this quarter

- If you are prototyping. Adopt Inference for speed. Use the single key to explore more recognizer and voice combinations than you would otherwise try. Keep your adapter thin so you can switch later.

- If you are heading to production. Ask for provisioned capacity numbers and historical latency for your target regions. Mirror LiveKit’s concurrency limits in your runtime. Run the swap and failover tests before you launch.

- If you already operate at scale. Use Inference as a pressure relief valve. Start by moving the most volatile component, often text to speech, behind the gateway. Measure whether reserved capacity shrinks tail latency during peak hours.
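
Mirroring concurrency limits in your own runtime can be as simple as a counter per component. This is a minimal sketch: `ConcurrencyBudget` is a hypothetical class, the limits are placeholders you would copy from your plan, and it assumes a single threaded event loop, so it takes no locks.

```python
class ConcurrencyBudget:
    """Local mirror of per component concurrency limits."""

    def __init__(self, limits: dict[str, int]):
        self.limits = dict(limits)
        self.active = {name: 0 for name in limits}

    def try_acquire(self, component: str) -> bool:
        """Reserve one slot, or return False if the component is at its limit."""
        if self.active[component] >= self.limits[component]:
            return False
        self.active[component] += 1
        return True

    def release(self, component: str) -> None:
        """Free one slot when a session stage finishes."""
        if self.active[component] > 0:
            self.active[component] -= 1
```

Rejecting locally lets you queue the caller or play a holding message yourself instead of discovering the limit through an upstream error in the middle of a call.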

What this foreshadows in 2026

If LiveKit continues to add partners and ships dynamic routing, Inference becomes the control plane for model choice. That unlocks two notable outcomes next year:

- Model agnostic contracts. Instead of negotiating per model, customers buy pools of speech minutes, tokens, and generations with performance guarantees regardless of provider. Finance gets predictability while engineering keeps the right to swap.

- Quality based routing. With standardized telemetry and reserved capacity, platforms can route based on observed latency, recent error rates, or topic specific accuracy. The market for models starts to look like a live auction for requests, with users benefiting from competition.

We have seen this pattern before. Content delivery networks turned web hosting into edge delivered experiences. Cloud gateways abstracted storage behind a single namespace. Voice AI is on the same path.

The bottom line

LiveKit Inference turns a fragmented voice stack into a single measurable surface. For startups it shortens the path from idea to production. For mature teams it adds the levers operations leaders want, such as capacity, routing, and concurrency budgets in one place. The tradeoffs are real, so treat this like a platform decision, not a toggle. Run the tests, negotiate the terms, and keep your interfaces thin. Do that and you will get faster agents today and a cleaner path to a model agnostic infrastructure layer in 2026.