Suno Studio debuts the first AI‑native DAW for creators

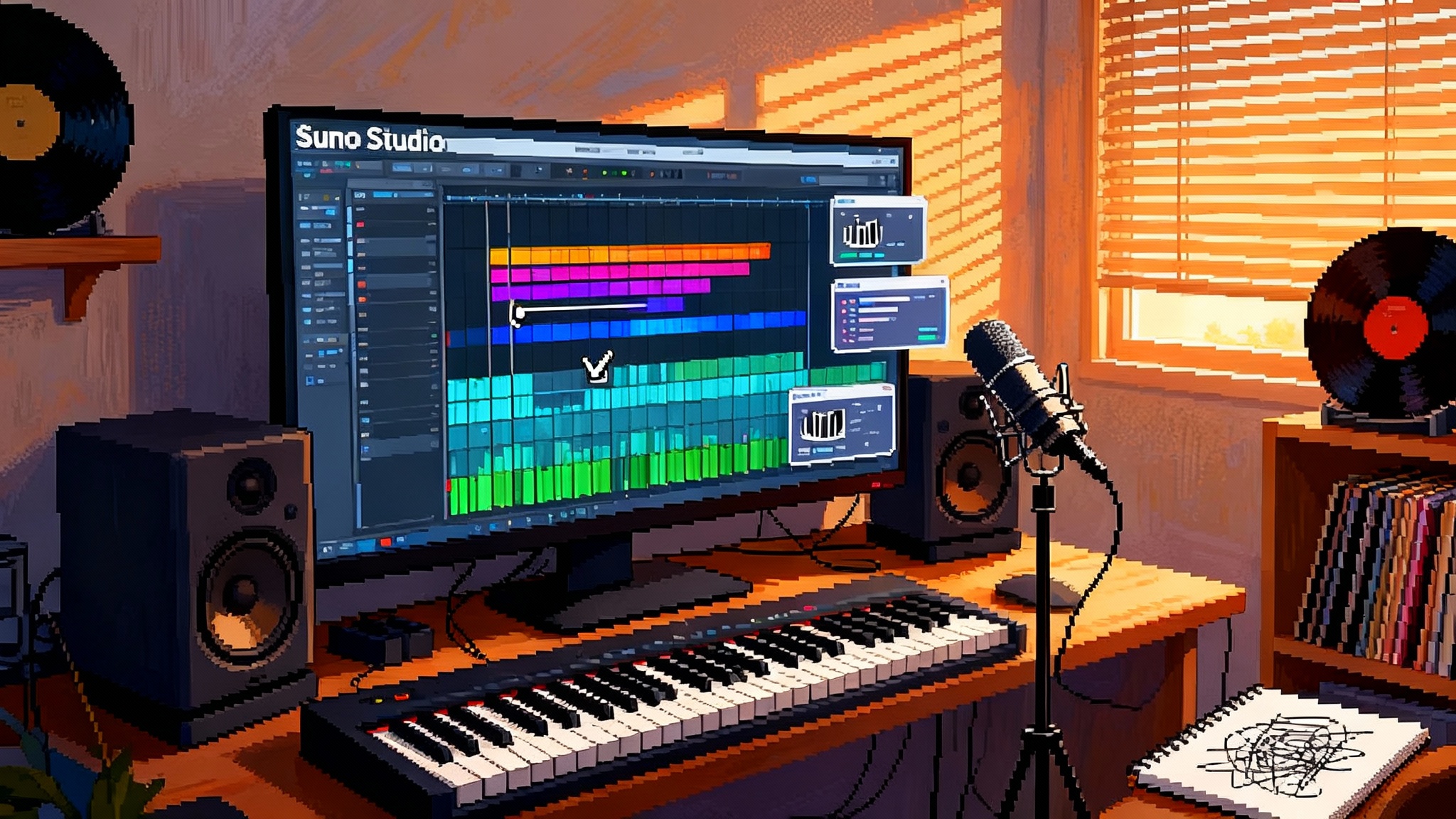

Suno has turned its one-shot generator into a desktop workspace. Suno Studio pairs the latest v5 model with multitrack editing, stem generation, and AI-guided arrangement, shifting AI music from novelty to daily workflow.

From one-shot tricks to a real workstation

For nearly two years, AI music tools were mostly party tricks. You typed a prompt, crossed your fingers, and waited for a tidy two-minute demo. The results were often fun, occasionally impressive, and rarely something you could build on. Suno Studio changes that center of gravity. Launched in late September 2025, it reframes generation as only the first step inside a full desktop timeline. You can start from a prompt, a voice memo, or stems you already have, then arrange, replace, and iterate inside the same session where you mix. That shift from one-shot outputs to editable parts is the moment AI stops being a novelty and starts behaving like a tool.

If you want the official snapshot of what shipped, Suno’s own press announcement for Studio lays out the core story: a generative audio workstation with multitrack editing, stem-level generation, and export paths for audio and MIDI. Independent early looks, like this TechRadar hands-on with Studio, describe an experience that feels closer to a DAW than a web toy, with the model listening to your timeline before proposing parts.

What actually shipped in September

Suno Studio positions itself as a DAW where generation is native. In practice, that means three pillars:

- A multitrack timeline with the basics: cut, copy, slip-edit, move sections, adjust levels, and tweak pitch or tempo.

- Targeted stem generation: instead of re-rolling an entire song, you can ask for a new vocal take, a punchier snare, or a warmer pad, then audition variations in context.

- Arrangement that listens: prompts can be written in musical terms, and the system proposes parts locked to your tempo and key, so you can keep, cut, or regenerate without losing alignment.

The v5 model matters because it makes those parts more workable. Stem separation is cleaner. Bridges and builds are more coherent. Vocals still divide opinion, especially on quiet verses or exposed intros, but editors suddenly have material that survives more aggressive edits without crumbling.

Why it feels different from generator-first tools

Traditional DAWs assume you already have sound to manipulate. Generator-first tools assume you will accept what the model gives you. Suno Studio sits in the middle and treats a prompt as a starting point rather than a finished product. That unlocks three habits of real production:

- You sketch with intention. A scratch vocal or rough guitar riff becomes the anchor while you generate alternate drums, bass, and pads that respect the session grid.

- You iterate without file-juggling. Because generation and editing live in the same timeline, you do not need to bounce to another app just to test a new hi-hat pattern.

- You keep options open. Stem-level commits let you try bolder ideas earlier, then revert or blend without losing the thread.

This is the same design pattern we have seen across agentic software. Generation is only valuable when it fits a human workflow and respects state. If that idea resonates, see how a similar move plays out in voice with LiveKit Inference and in search-to-action with Vectara's Agent API.

Access, pricing, and the first wave of users

At launch, Studio is desktop-first and available to paying users on a higher tier. That choice telegraphs the initial audience: serious hobbyists, prosumers who already pay for a DAW, and small studios that want speed. These users will not be seduced by novelty for long. They will judge the tool on four criteria: how fast it is to sketch, how editable the output is when it lands, how predictable exports are, and how little cleanup the audio needs before release.

If Suno wants Studio to become the default by 2026, the company has to win this pragmatic crowd. The best way to do that is to make the first 10 minutes feel effortless and the next 10 hours feel reliable.

How the workflow actually changes

Think of a track as a stack of Lego bricks. Early AI gave you a finished castle you could admire or repaint. Studio lets you pull that castle apart, swap the tower, deepen the moat, and add five new flags, all while auditioning each change in context. Concretely, that means:

- Multitrack edits that feel familiar. Move a chorus, mute a pre-chorus, or comp a vocal phrase. The novelty is not the timeline itself. It is that you can regenerate only what you need without exporting or reimporting.

- Stems on demand. Ask for a drier vocal, a busier hi-hat, or a more analog-feeling pad. Keep the bass from version one and the snare from version three. That is how producers already work, just faster, because the machine proposes options while you stay in flow.

- Arrangement as a dialogue. Write prompts in musical language: a six-eight funk bass, a lighter pre-chorus in half time, a two-bar tom fill into the bridge. Because the model listens to the existing timeline, its suggestions lock to your grid.

The result is not automatic hits. It is an assistant that never gets tired and speaks mix engineer. You remain the arranger.

What creators can get done this week

- Build a song like a kit. Start with your scratch vocal. Generate three drum interpretations that respect your tempo map. Audition bass concepts that carry the chorus. Keep the one that melts with your melody and ask for a breakdown in the bridge. Because everything lands as stems, you commit faster without painting yourself into a corner.

- Refactor your catalog demos. Labels and writers have hard drives full of half-finished songs. Upload the dry vocal, generate four harmony ideas and a modern rhythm bed, and cut an EP of refreshed arrangements. Stems and MIDI export make it simple to finish in your home DAW or hand off cleanly to a mix engineer.

- Turn briefs around quickly. For music supervisors and brand clients who want to hear three moods before lunch, use Studio to produce alternate instrumentations and energy curves without booking players. You still clear rights and handle provenance, but the ideation step shrinks from days to hours.

What DAWs and plugin makers must adapt to

Suno did not just add a feature. It collapsed three jobs into one surface: generate, edit, arrange. Incumbent DAWs have sprinkled in machine learning for stem separation, drummer emulation, or vocal cleanup, but the generative loop still happens outside most sessions. When the engine becomes native to the timeline, expectations change.

Editors will want to:

- Describe sound in plain language. Mixers will type "make the synth warmer" and expect a spectrum-aware chain of suggestions rather than a static preset browser.

- Treat MIDI and audio as fluid. If a pad arrives as audio, the session should infer MIDI and harmonic intent on demand. If a riff arrives as MIDI, it should render through model-native instruments with near zero latency.

- Bake in provenance and rights checks. Labels and libraries will demand track-level lineage that travels with stems. A modern DAW should carry creation metadata and checksums through every render and export.
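To make "lineage that travels with stems" concrete, here is a minimal sketch of what a per-stem provenance record could look like if a DAW captured it at export time. Everything here is an assumption for illustration: the `StemProvenance` fields and the `record_export` and `verify` helpers are hypothetical and do not describe any Suno or DAW schema. The idea is simply that a SHA-256 checksum of the exported bytes, plus links to the checksums of parent stems, lets anyone downstream confirm a file is unchanged and trace what it was derived from.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class StemProvenance:
    """Hypothetical lineage record that travels with one exported stem.

    Field names are illustrative; no DAW or Suno schema is implied.
    """
    stem_name: str
    sha256: str                        # checksum of the exact bytes that were exported
    source: str                        # e.g. "recorded" or "generated"
    model_version: Optional[str] = None
    parents: List[str] = field(default_factory=list)  # checksums of stems it derives from


def checksum(audio_bytes: bytes) -> str:
    """SHA-256 hex digest of rendered audio bytes."""
    return hashlib.sha256(audio_bytes).hexdigest()


def record_export(stem_name: str, audio_bytes: bytes, source: str,
                  model_version: Optional[str] = None,
                  parents: Optional[List[str]] = None) -> StemProvenance:
    """Create a provenance record at render/export time."""
    return StemProvenance(stem_name, checksum(audio_bytes), source,
                          model_version, list(parents or []))


def verify(record: StemProvenance, audio_bytes: bytes) -> bool:
    """True if the file still matches the checksum captured at export."""
    return checksum(audio_bytes) == record.sha256


if __name__ == "__main__":
    vocal = record_export("lead_vocal", b"placeholder vocal audio", "recorded")
    harmony = record_export("harmony_1", b"placeholder harmony audio", "generated",
                            model_version="v5", parents=[vocal.sha256])
    print(json.dumps([asdict(vocal), asdict(harmony)], indent=2))
    print(verify(harmony, b"placeholder harmony audio"))  # True
```

Keying parents by checksum rather than by filename means a renamed stem keeps its lineage, while any re-render produces a new checksum and therefore a new record.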

Suno’s acquisition of the browser-based DAW WavTool earlier this year was a clear tell. That deal brought mature editing ergonomics into the fold at the exact moment the company needed sophisticated timeline behavior. Competitors will respond, but Studio starts with an integrated advantage: generation that is aware of edits, and edits that are aware of generation.

For plugin makers, this is a wake-up call. If generation lives in the host, effects have to interoperate with a model’s sense of intention. Imagine a compressor that listens not only to transients but also to a target vibe the user types. Or an amp sim that takes a request like "slightly more edge in the chorus" and uses the session map to automate drive, presence, and mic position only in that section. The market will favor plugins that understand context.

Frictions to watch between now and 2026

- Pricing and credits. If every render and re-render consumes credits, creators will watch the meter the way mobile users once watched data caps. One fix is generous iterative credit pools for in-session tweaks. Another is tiered pricing that rewards stem-level edits over full-song re-rolls.

- Export and interop. Studio already exports audio and MIDI. The next rung is lossless stems with consistent naming, tempo map export, and rock-solid alignment when reimported to Pro Tools, Logic Pro, Ableton Live, or FL Studio. If Suno lands simple round-tripping with the big four, it will earn trust with engineers who live in those environments.

- Vocal reliability. The v5 model reduces mush and improves phrasing, yet some ears still hear a glaze, especially on quiet verses. If Suno can generate vocals that sit in a dry mix and blend with human overdubs, adoption accelerates.

- Legal clarity. Lawsuits and debates about training sets will continue. That does not stop experimentation, but it shapes enterprise adoption. Track-level provenance, opt-in libraries, and exportable rights logs will be table stakes for label deals.

- Platform coverage. Desktop-first makes sense at launch. Mobile capture and browser parity will matter for idea-first workflows, especially for creators who sketch on phones and finish on laptops.
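"Rock-solid alignment" on reimport comes down to simple arithmetic: given a tempo map and a sample rate, every bar line has an exact sample offset, and a stem whose downbeat lands anywhere else has drifted. The sketch below computes that offset and the resulting error in milliseconds. It is an illustration under stated assumptions, not how Suno or any DAW exports tempo maps: tempo and meter are assumed to change only at bar lines (no ramps), the `bpm` value is assumed to count the meter's own beat unit, and `TempoSegment`, `bar_start_sample`, and `alignment_error_ms` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TempoSegment:
    start_bar: int       # 1-indexed bar where this tempo and meter take effect
    bpm: float           # beats per minute; the beat is assumed to be the meter's unit
    beats_per_bar: int


def bar_start_sample(bar: int, tempo_map: List[TempoSegment], sample_rate: int) -> int:
    """Sample offset at which a 1-indexed bar begins.

    Assumes tempo and meter change only at bar lines (no gradual ramps).
    """
    if bar < 1:
        raise ValueError("bars are 1-indexed")
    segments = sorted(tempo_map, key=lambda s: s.start_bar)
    if not segments or segments[0].start_bar != 1:
        raise ValueError("tempo map must start at bar 1")
    seconds = 0.0
    for i, seg in enumerate(segments):
        next_start = segments[i + 1].start_bar if i + 1 < len(segments) else None
        end_bar = bar if next_start is None else min(bar, next_start)
        if end_bar <= seg.start_bar:
            break
        # Add the duration of the whole bars spent in this segment.
        seconds += (end_bar - seg.start_bar) * seg.beats_per_bar * 60.0 / seg.bpm
        if next_start is None or bar <= next_start:
            break
    return round(seconds * sample_rate)


def alignment_error_ms(observed_sample: int, bar: int,
                       tempo_map: List[TempoSegment], sample_rate: int) -> float:
    """How far (in ms) a reimported downbeat sits from where the tempo map says it should."""
    expected = bar_start_sample(bar, tempo_map, sample_rate)
    return (observed_sample - expected) * 1000.0 / sample_rate
```

For example, at 120 BPM in 4/4 and 48 kHz, bar 3 starts at sample 192,000, so a reimported stem whose downbeat lands at sample 192,048 is 1 ms late. Checking a handful of bar lines this way after a round trip is a cheap test of whether an export path really preserved the tempo map.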

A practical playbook for creators and small labels

- Treat Studio as a sketch-to-stem engine. Use it to generate the parts you would otherwise hire a session player for when time or budget is tight. Keep a change log for every AI stem you commit. Export both audio and MIDI so your mix engineer can re-voice or replace sounds later.

- Standardize your handoff. Define a folder structure now. One folder per song, subfolders for drums, bass, vocals, synths, and effects. Include BPM, sample rate, and key in filenames. Keep a text file with the prompt and parameters for each stem, plus generation time and version. Your future self will thank you during revisions.

- Pair AI vocals with human texture. If you use AI vocals, track a human double or a small gang vocal on choruses. Even a few ad-libs can break up uniformity. If you stick to AI-only vocals, plan more automation and reverb rides to hide telltale sameness.

- Measure twice, spend once. Before committing to a paid tier, run a two-day sprint. Count how many iterations a typical song needs. If your average track takes six to ten targeted re-generations, choose a plan that covers that without surprise top-ups.

- Build a responsible pipeline. Save prompts, seeds, model versions, and stems in a metadata file. Keep a copy of licenses for any third-party samples you add. When you move to release, that paper trail will reduce friction with distributors and labels.
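The handoff and paper-trail advice above can be automated with a few lines of scripting. The sketch below is one possible convention, not a standard: it creates one folder per song with the five stem subfolders named in the playbook, builds filenames that carry BPM, sample rate, and key, and writes a JSON sidecar next to each stem holding the prompt, seed, model version, and generation time. The filename pattern, the JSON layout, and the helper names (`create_song_folders`, `stem_filename`, `write_sidecar`) are all hypothetical; the `seed` field only makes sense if your tool exposes one, so it is allowed to be empty.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

# Subfolder names follow the playbook; adjust to your own conventions.
STEM_GROUPS = ("drums", "bass", "vocals", "synths", "effects")


def slug(text: str) -> str:
    """Lowercase and join words with underscores: 'Night Drive' -> 'night_drive'."""
    return "_".join(text.lower().split())


def create_song_folders(root: Path, song: str) -> Path:
    """Create one folder per song with a subfolder per stem group."""
    song_dir = root / slug(song)
    for group in STEM_GROUPS:
        (song_dir / group).mkdir(parents=True, exist_ok=True)
    return song_dir


def stem_filename(song: str, group: str, part: str, bpm: float,
                  sample_rate: int, key: str, ext: str = "wav") -> str:
    """Encode BPM, sample rate, and key in the filename (hypothetical pattern)."""
    if group not in STEM_GROUPS:
        raise ValueError(f"unknown stem group: {group}")
    key_tag = key.replace(" ", "")
    return f"{slug(song)}_{group}_{slug(part)}_{bpm:g}bpm_{sample_rate}Hz_{key_tag}.{ext}"


def write_sidecar(stem_path: Path, prompt: str, seed: Optional[int],
                  model_version: str, generated_at: Optional[str] = None) -> Path:
    """Write a JSON file next to the stem recording how it was made."""
    metadata = {
        "stem": stem_path.name,
        "prompt": prompt,
        "seed": seed,
        "model_version": model_version,
        "generated_at": generated_at or datetime.now(timezone.utc).isoformat(),
    }
    sidecar = stem_path.with_suffix(".json")
    sidecar.write_text(json.dumps(metadata, indent=2))
    return sidecar


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        song_dir = create_song_folders(Path(tmp), "Night Drive")
        name = stem_filename("Night Drive", "drums", "kick", 120, 48000, "A min")
        print(name)  # night_drive_drums_kick_120bpm_48000Hz_Amin.wav
        print(write_sidecar(song_dir / "drums" / name, "tight, dry kick", 42, "v5").read_text())
```

A sidecar per stem, rather than one log per song, keeps the metadata attached when individual stems are handed to a mix engineer or swapped during revisions.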

How this fits the larger agentic story

The shift we are witnessing is bigger than music. Across categories, the interface is becoming conversational and context-aware. We are moving from apps that wait for clicks to agents that propose next steps. In audio, Studio places that loop on the timeline where creators live. In voice, similar loops are making agents feel instant, as seen with LiveKit Inference. In media licensing, standardized provenance is starting to emerge, a trend we covered in our piece on Eleven Music's licensing rails. The common thread is simple: AI is most useful when it respects the constraints and rituals of expert users.

What success looks like by the end of 2026

If Suno succeeds, the default mental model for production will change. Prompts will become parts. Parts will become songs. And the boundary between sound design, arrangement, and mix will soften. A few concrete markers would signal that shift:

- Editors will describe sound in language first, then refine with knobs. That will feel as normal as typing a search term before opening a filter menu.

- Multitrack sessions will carry provenance metadata by default. A bounced stem will know what it is, where it came from, and which rights apply.

- Round-tripping between Studio and the big four DAWs will be boring. That is a compliment. Boring means predictable.

- Playlists will quietly include more AI-assisted tracks, not as a novelty tag but because the production held up under scrutiny.

The bottom line

Suno Studio turns prompts into parts and parts into songs in one place. It will not write your hook, but it will help you test ten arrangements before lunch and keep the best one without losing momentum. If Suno smooths pricing, makes export and reimport bulletproof, and gives labels the audit trails they need, AI-first production could become a default setting by 2026. The most exciting part is not that machines can sing. It is that arranging with an infinite band inside your timeline becomes normal, and the tools push us toward more listening and less waiting.

If you build for the creative stack, watch this space closely. AI is moving onto the timeline. The winners will not be the tools that promise magic songs by Tuesday. They will be the ones that let musicians ask better questions faster, then render answers as editable parts that respect tempo maps, key signatures, and human taste.