Tinker flips the agent stack with LoRA-first post-training

Thinking Machines Lab launches Tinker, a LoRA-first fine-tuning API that hides distributed training while preserving control. It nudges teams to start with post-training for agents, with managed orchestration and portable adapters.

A new default for building agents

On October 1, 2025, Thinking Machines Lab introduced Tinker, a low-level fine-tuning API that aims to make post-training the first choice for building production agents. Instead of stretching a general model with ever more elaborate prompts, Tinker encourages teams to train compact adapters on data that reflects their real tasks and failure modes. The launch narrative is clear. If you want repeatable capability, stop relying on a fragile prompt and start teaching the model with your own examples. The announcement framed that shift not as an ideal but as a practical path, and it backed the claim with a managed service that takes the sting out of distributed training. You can read the release in the official Tinker announcement.

What Tinker actually is

Tinker exposes a narrow, composable core that looks more like a toolbox than a framework. Rather than dictating a pipeline, it provides a few primitives you can wire into your own loops:

- forward_backward: run a forward pass and accumulate gradients

- optim_step: update weights using the accumulated gradients

- sample: generate tokens for interactive steps, evaluation, or RL

- save_state: persist training progress for resumption or audit

These four calls are the Lego bricks. With them, you can assemble classic supervised fine-tuning, modern preference optimization, and reinforcement learning loops without fighting a heavy abstraction. The managed layer handles the operational burden that usually slows teams to a crawl. Scheduling across clusters, resource allocation, fault tolerance, and checkpoint hygiene are built in. You bring data, objectives, and a loop. Tinker supplies a reliable training surface that does not force you into a one-button flow.
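As a rough illustration of how the four primitives compose, the sketch below wires them into a supervised loop. `ToyTrainingClient` is a hypothetical local stand-in that fits a single scalar weight. It is not the Tinker SDK, and the method signatures, arguments, and return values are assumptions chosen so the example runs without a service.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTrainingClient:
    """Hypothetical local stand-in for a training service; NOT the Tinker SDK."""

    weight: float = 0.0
    learning_rate: float = 0.05
    _grad: float = 0.0
    _checkpoints: dict = field(default_factory=dict)

    def forward_backward(self, batch):
        """Run a forward pass and accumulate gradients of the mean squared error."""
        loss = 0.0
        for x, y in batch:
            error = self.weight * x - y
            loss += error ** 2
            self._grad += 2 * error * x / len(batch)
        return loss / len(batch)

    def optim_step(self):
        """Apply the accumulated gradient, then clear it."""
        self.weight -= self.learning_rate * self._grad
        self._grad = 0.0

    def sample(self, x):
        """Produce an output from the current weights (stands in for generation)."""
        return self.weight * x

    def save_state(self, name):
        """Record the current weight under a checkpoint name."""
        self._checkpoints[name] = self.weight
        return name


def train(client, batch, steps, checkpoint_every=10):
    """A loop you own: the client only supplies the four primitives."""
    losses = []
    for step in range(1, steps + 1):
        losses.append(client.forward_backward(batch))
        client.optim_step()
        if step % checkpoint_every == 0:
            client.save_state(f"step-{step}")
    return losses


# Toy data for the relationship y = 2x.
batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
client = ToyTrainingClient()
losses = train(client, batch, steps=50)
prediction = client.sample(4.0)
print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.2e}, sample(4.0) = {prediction:.4f}")
```

The point of the shape is that the loop, the checkpoint cadence, and the evaluation call all live in your code. Swapping the objective means changing what `forward_backward` is asked to compute, not adopting a different pipeline.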

LoRA-first by design

Tinker is opinionated about efficiency. It assumes Low Rank Adaptation as the default so you update compact adapter weights rather than touching the full base model. That decision matters for three reasons:

- Memory and capacity: LoRA reduces memory pressure, which lets you work with larger base models on the same hardware or pack more experiments onto a given cluster.

- Throughput: Because adapter updates are light, the platform can multiplex many simultaneous runs. More throughput reduces queue times and boosts experiments per week.

- Portability: Adapters are small artifacts you can carry to any compatible inference stack. Train on a managed platform, then run wherever latency, compliance, and cost make sense.

In other words, LoRA-first is not just a cost trick. It is an architectural choice that speeds up iteration and makes switching providers and models a genuine option rather than a rewrite.
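To make the memory argument concrete, here is a back-of-the-envelope sketch of trainable parameter counts for one weight matrix. It assumes the standard LoRA formulation, where a frozen `d_out x d_in` matrix is augmented by two low-rank factors of shapes `d_out x rank` and `rank x d_in`. The dimensions and rank below are illustrative assumptions, not values taken from Tinker.

```python
def full_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for the two low-rank factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative layer size and rank (assumptions for this example).
d_out, d_in, rank = 4096, 4096, 16

full = full_params(d_out, d_in)          # 16777216
adapter = lora_params(d_out, d_in, rank)  # 131072
ratio = adapter / full                    # 0.0078125

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA rank {rank}:  {adapter:,} trainable parameters ({ratio:.2%} of full)")
```

For this layer the adapter trains under 1% of the parameters, which is why optimizer state and saved artifacts stay small.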

How this reshapes the agent stack

The familiar agent stack has been prompt templates, retrieval, and tools. Tinker turns that order around. Start from a task-specific adapter, then add retrieval and tools, and finish with a simple prompt that acts as scaffolding rather than a crutch. That design lowers variance, makes behavior more predictable, and shortens the path from a prototype to a production baseline.

This mirrors a pattern we have seen whenever an ecosystem standardizes on a stable interface. When we examined protocol-level unification in our piece on the USB-C moment for agents, the lesson was the same: once the plumbing is reliable, method development accelerates. Tinker aims to be that reliable bench for post-training.

Concrete use cases that benefit first

- Airline customer support: Replace a fragile tree of prompt rules with adapters trained on a few thousand resolved tickets labeled by outcome, tone, and regulatory constraints. The agent internalizes escalation thresholds and cites policy text accurately.

- Life sciences assistants: Fine-tune on lab protocols, reagent catalogs, and instrument error logs. Pair the adapter with tool use for unit conversions and scheduling. The agent develops a grounded sense of safe versus merely possible actions.

- Narrow legal review: In a focused domain such as vendor master services agreements, train on annotated clauses and decision memos. The agent flags covenants procurement actually cares about instead of surfacing generic contract warnings.

These domains share a trait. The preferences and constraints are hard to express in prose but straightforward to learn from examples, rankings, and trajectories. LoRA-first post-training lets you teach those patterns directly, which is more robust than juggling dozens of prompt caveats.

Model coverage and scale without rewrites

According to the launch materials, Tinker supports open-weight model families such as Llama and Qwen, including very large mixture-of-experts variants like Qwen3-235B-A22B. The headline is not the nameplate size. It is the promise that switching models is often just a string change in your code. That matters because most teams validate ideas on small dense models, then jump to larger dense or MoE models when methods stabilize. If your training surface stays stable across those jumps, you get to move faster, and the cost of exploring capacity limits drops.
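One way to keep that promise honest in your own code is to confine the base model to a single configuration field. The sketch below is an assumption about how a team might structure its run config. `RunConfig`, its field names, and the identifier strings are hypothetical placeholders, not Tinker's actual schema or model IDs.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical run configuration; field names are illustrative."""

    base_model: str
    lora_rank: int = 16
    learning_rate: float = 1e-4
    dataset_version: str = "support-tickets-v1"


# Validate the method on a small dense model first (placeholder identifier).
small = RunConfig(base_model="small-dense-model")

# Scale up by changing only the model string; everything else is carried over.
large = replace(small, base_model="Qwen3-235B-A22B")

small_fields, large_fields = asdict(small), asdict(large)
changed = {name for name in small_fields if small_fields[name] != large_fields[name]}
print(f"fields that differ between runs: {sorted(changed)}")
```

If a model switch ever forces changes outside that one field, that is a useful signal that the training surface is less stable than advertised.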

This is where LoRA-first meets scale. Training adapters on a large MoE model can remain tractable if orchestration is handled and artifacts are small. You run more experiments per dollar, and you can shard research across teammates without waiting for a single mega run to finish.

Signals from early adopters

Tinker launched with early users from Princeton, Stanford, Berkeley, and Redwood Research. Their projects read like a tour of the frontier: mathematical theorem proving, chemistry reasoning, asynchronous off-policy reinforcement learning with multi-agent tool use, and preference-tuned safety experiments. The signal is not just prestige. It is that a small set of composable calls can express unusual loops. When researchers can implement custom algorithms without fighting the API, the abstraction is probably right.

There is also a cultural signal. Methods that start in labs often become enterprise defaults within a few quarters. Direct preference optimization and modern RL pipelines followed this path. A platform that shortens the distance between a paper and a production baseline will accumulate new recipes quickly. Teams that want to keep pace will need solid evaluation and telemetry, which is why we consistently recommend building an early observability stack using our zero-code LLM observability guide.

The managed path for startups

For many startups, the day one blocker is not data or ideas. It is cluster access, drivers, reproducible builds, sharding, checkpoint recovery, and log hygiene across dozens of machines. Tinker moves that work onto a managed surface and leaves control of the loop and the data with you.

Two choices stand out for founders:

- Portable artifacts: If you train adapters in Tinker and later want to run inference on a different provider or on your own hardware, you carry the weights out. That reduces lock-in and lets you place training and inference in different environments for cost and compliance.

- Small first, big later: Switching base models by changing identifiers keeps your code stable. When a demo hits, you scale up the base model without reworking the training stack.

For teams shipping vertical agents in customer support, revenue operations, or clinical workflows, this clarifies the path. Start small, iterate fast, and scale cleanly. When you reach the point where retrieval is the bottleneck, revisit architecture choices with the lessons from our Vectara Agent API deep dive.

Costs, pricing, and the experiment flywheel

The launch emphasized that Tinker is free to start, with usage-based pricing to follow. The economics hinge on the architecture. LoRA adapters are small, which allows higher cluster utilization and lower wait times. If a run crashes two hours in, resuming from a saved state is cheap and fast.

Buyers usually care about two numbers more than list price:

- Experiments per dollar: How many method iterations you can run for a given budget. LoRA and efficient scheduling push this number up.

- Experiments per week: A function of both cost and queue times. Managed orchestration that starts runs quickly and recovers gracefully keeps the flywheel turning.

If Tinker delivers strong numbers on both, it will become a default for method development even if nominal prices look similar across providers. Teams will follow the fastest path to learning.
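The two numbers above are simple enough to track in a spreadsheet, but a small sketch makes the dependence on queue time explicit. The formulas are a simplifying assumption (every run costs the same and takes the same time), and all input figures are made up for illustration. None of them are Tinker prices or measurements.

```python
def experiments_per_dollar(cost_per_run):
    """Method iterations bought by one dollar, given an average cost per run."""
    return 1.0 / cost_per_run


def experiments_per_week(queue_hours, run_hours, parallel_runs=1, hours_per_week=168):
    """Runs completed per week when each run waits in a queue and then trains."""
    return parallel_runs * hours_per_week / (queue_hours + run_hours)


# Illustrative inputs only: a 4-hour run costing 25 dollars.
per_dollar = experiments_per_dollar(cost_per_run=25.0)
slow_queue = experiments_per_week(queue_hours=2.0, run_hours=4.0)
fast_queue = experiments_per_week(queue_hours=0.5, run_hours=4.0)

print(f"experiments per dollar: {per_dollar:.3f}")
print(f"experiments per week with a 2 h queue:   {slow_queue:.1f}")
print(f"experiments per week with a 0.5 h queue: {fast_queue:.1f}")
```

Under these assumed figures, cutting queue time from two hours to thirty minutes raises weekly throughput by about a third without any change in price per run.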

Five pressures on incumbents

There is no shortage of fine-tuning offerings from incumbents and hyperscalers. Many are higher level, heavily prescriptive, or tied to a single model family. Tinker resets expectations in five areas:

- Lower-level control without infrastructure pain: Enterprises will expect to express custom loops, not just push a one-button supervised pipeline. Providers that cannot expose lower-level primitives will need to expand their APIs.

- LoRA as a first-class path: Customers will ask for LoRA by default, with strong guarantees about adapter portability. Platforms that only offer full fine-tuning or closed adapters will feel pressure to match LoRA economics and exportability.

- Open-weight coverage at the high end: Support for very large MoE models signals that a managed platform is not limited to mid-range dense networks. Buyers will ask hard questions about top-end support and scheduler reliability under load.

- Post-training beyond supervised learning: Preference optimization and RL need to be first-class citizens. That means sampling, replay, and off-policy control flows in the API, not just an example notebook.

- Artifact portability and data control: If adapters and checkpoints move easily, customers will expect similar freedom elsewhere. Clean export paths, audit trails, and data residency options will move from nice-to-have to mandatory.

Over the next six to twelve months, those pressures are likely to influence roadmaps. Expect more providers to publish lower-level primitives, document production-quality RL, and ship adapters as downloadable artifacts. Pricing pressure will show up indirectly through higher quotas, better scheduling, and more generous restart policies.

A practical playbook you can run this month

If you are evaluating Tinker, here is a concrete plan you can execute in a week or two:

- Define the slice: Pick a single task slice with clear outcomes. For support agents, this might be policy exceptions and refunds above a threshold. Collect a few hundred to a few thousand examples that reflect the hardest cases.

- Baseline with SFT: Use supervised fine-tuning to establish a baseline. Track accuracy, escalation decisions, and citation quality. Keep the prompt simple.

- Add preferences: Gather pairwise rankings in realistic contexts and add a preference optimization step. Measure offline win rates over your evaluation set.

- Keep evaluation tight: Build an evaluation harness that captures prompts, contexts, expected behaviors, and allowed tools. Sample during training rather than waiting until the end.

- Scale method, not code: When your loop stabilizes on a small dense model, jump to a larger dense or MoE model to test capacity. Keep latency and cost in the same dashboard as quality.

- Package artifacts: Store adapter weights and checkpoints with clear provenance. Record base model identifiers, training data versions, and commit hashes. Make switching providers uneventful.

This playbook treats the managed layer as a speed boost rather than a black box. You still own the loop and the data. You just stop losing days to cluster babysitting.
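The preference step in the playbook asks for an offline win rate. A minimal sketch of that metric follows, assuming each evaluation item has already been judged as a win for the candidate adapter, a win for the baseline, or a tie. The label names and the convention of counting ties as half a win are assumptions, so adjust them to match your own harness.

```python
def win_rate(judgments):
    """Fraction of pairwise comparisons won by the candidate; ties count as half."""
    if not judgments:
        raise ValueError("need at least one judgment")
    score = {"candidate": 1.0, "tie": 0.5, "baseline": 0.0}
    return sum(score[label] for label in judgments) / len(judgments)


# Hypothetical judgments over a five-item evaluation set.
judgments = ["candidate", "candidate", "baseline", "tie", "candidate"]
rate = win_rate(judgments)
print(f"offline win rate: {rate:.2f}")
```

With only a handful of items the number is noisy, so it is worth reporting the evaluation set size alongside the rate.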

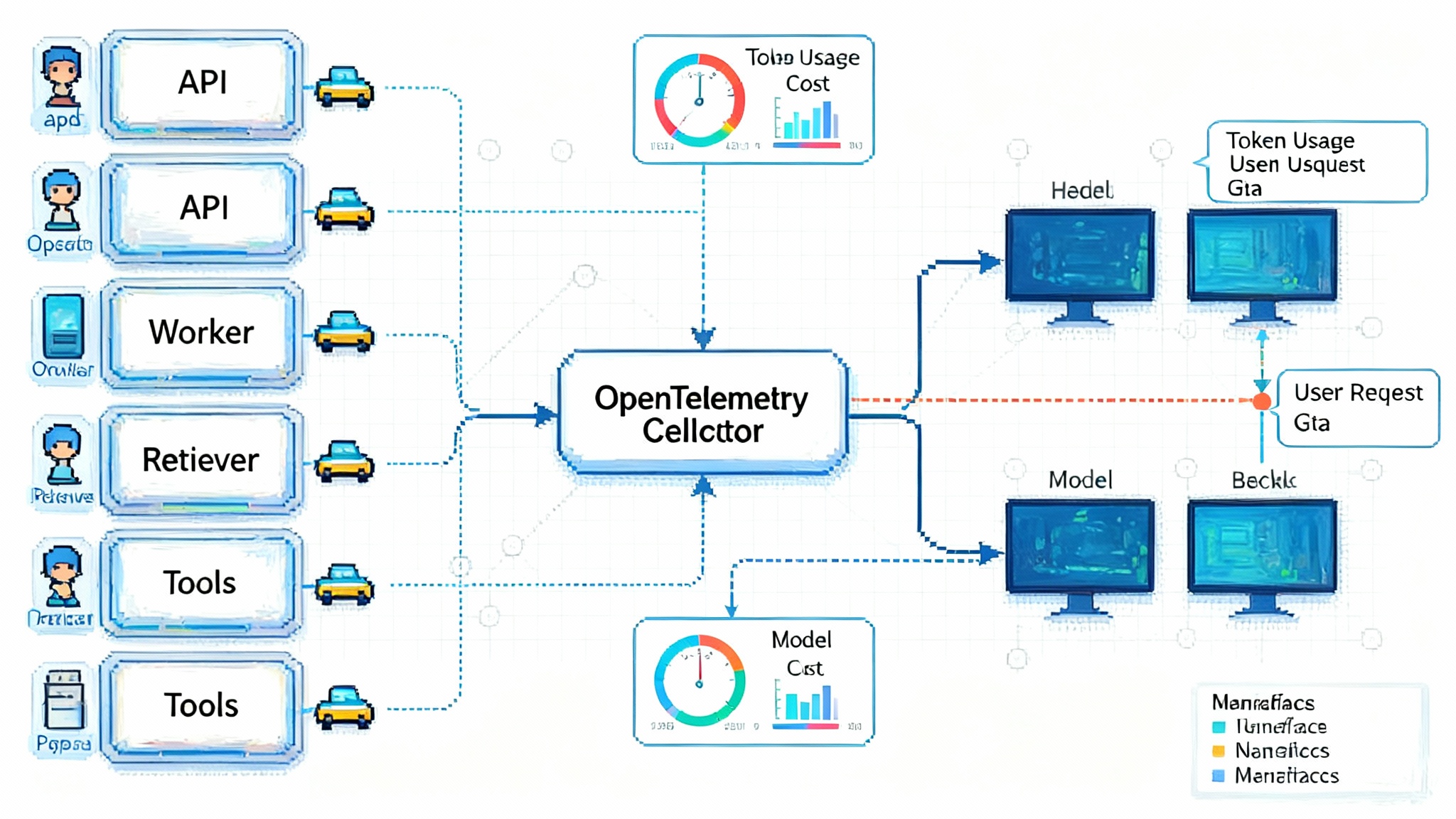

Observability and safety by design

Production agents fail in surprising ways when observability is an afterthought. Instrument the loop early. Capture token-level traces for sample steps, track reward signals if you introduce RL, and maintain a ledger of adapter versions tied to evaluation runs. Our zero-code LLM observability overview outlines quick wins that make regressions visible and audits painless.
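A ledger of adapter versions can start as an append-only list of small records. The sketch below shows one possible record shape. The field names, the example values, and the choice of a SHA-256 content hash are assumptions for illustration, not a format defined by Tinker.

```python
import hashlib
import json


def ledger_entry(adapter_bytes, base_model, data_version, commit, eval_run_id):
    """Build one provenance record that ties an adapter artifact to an evaluation run."""
    return {
        "adapter_sha256": hashlib.sha256(adapter_bytes).hexdigest(),
        "base_model": base_model,
        "data_version": data_version,
        "commit": commit,
        "eval_run_id": eval_run_id,
    }


# Placeholder values; in practice adapter_bytes would be read from the exported file.
entry = ledger_entry(
    adapter_bytes=b"placeholder adapter weights",
    base_model="small-dense-model",
    data_version="support-tickets-v1",
    commit="abc1234",
    eval_run_id="eval-001",
)

# One JSON object per line keeps the ledger easy to append to and to diff.
line = json.dumps(entry, sort_keys=True)
print(line)
```

Hashing the artifact rather than trusting a file name means a regression can be traced to the exact weights that were evaluated.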

Safety follows the same pattern. If your domain has compliance requirements, embed constraints into your training examples and add targeted adversarial prompts to your evaluation set. Preference data that encodes tone, refusal boundaries, and citation rules will outperform a long prompt with soft guidelines.

What to watch next

- Cookbook growth: If the open cookbook adds high-quality recipes, the platform’s value compounds. Watch for canonical loops for preference optimization, outcome-supervised RL, and multi-agent tool use to stabilize.

- Scheduling and reliability: As more users arrive, the real measure will be how quickly runs start and how often they finish. That is the day-to-day determinant of experiments per week.

- Model lineup and adapters: More base models, especially new MoE releases, will test the claim that switching is mostly a string change. The portability story will mature as teams export adapters into varied inference stacks.

- Enterprise features: Audit trails, data residency, role-based controls, and clean export paths will determine whether large organizations move sensitive workloads onto a managed training surface.

For a quick look at capabilities, supported models, and common questions, the company maintains a concise overview on the official Tinker product page.

Bottom line

Tinker is a deliberate bet that the fastest path to useful agents runs through post-training by default. It does not romanticize prompts. It makes learning the unit of progress. By keeping the API narrow and the infrastructure managed, it turns complex distributed training into a loop most teams can understand and modify. The early academic users suggest the abstraction is small yet flexible enough to support new methods, which is how research becomes product.

For startups, the managed path plus portable artifacts offers a way to ship vertical agents quickly without painting yourself into a corner. For incumbents, the pressure will come from customers who ask for lower-level control, open-weight coverage at the high end, and adapters they can take with them. If those expectations take hold, the next year will be less about crafting perfect prompts and more about crafting small, focused datasets and loops that teach models what users actually need.