Jack & Jill’s AI agents turn hiring into bot-to-bot deals

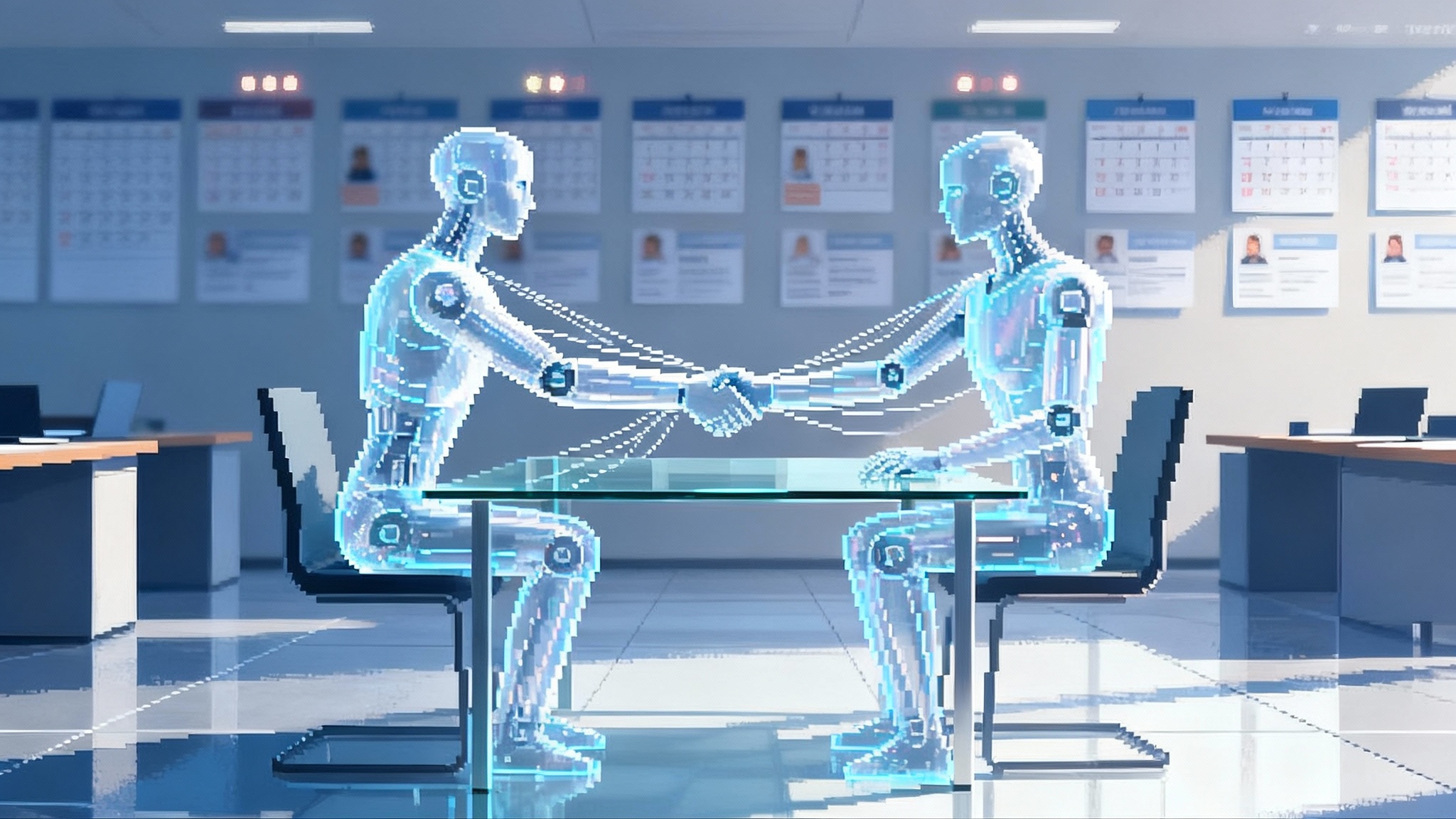

Two autonomous agents now sit on both sides of the hiring table. Jack represents candidates. Jill represents employers. They negotiate constraints, align on fit, and hand humans interview-ready slates in days instead of weeks.

Breaking: dual agents step into the hiring room

On October 16, 2025, TechCrunch reported that Jack and Jill raised $20 million to scale a recruiting platform built around two conversational agents that work both sides of the market. Jack represents the candidate. Jill represents the employer. The company’s pitch is simple and bold: let the two agents talk to each other first, align on fit and logistics, then bring in humans when there is real signal instead of noise. It is a clear demonstration of an agent-to-agent labor marketplace built for speed and quality rather than click volume. See TechCrunch’s launch coverage for the details.

This single design choice reassigns where time is spent. Instead of recruiters and candidates grinding through inboxes and incomplete application forms, machine agents handle the first rounds of sourcing, screening, and basic negotiation. Humans focus on interviews that matter, reference checks, and closing.

LinkedIn’s direction underscores the shift. On September 3, 2025, LinkedIn announced that its Hiring Assistant would be globally available in English, with early adopters reporting meaningful reductions in recruiter toil. Those data points show mainstream adoption of agentic workflows inside the largest talent network. Read the LinkedIn announcement and early results.

How two-sided agents actually work

Think of hiring as a series of trades. Every trade needs a shared language, a price range, a time frame, and constraints. Dual agents make these trades explicit and repeatable.

- Jack, the candidate agent, builds a structured profile from a resume, portfolio links, and a short intake conversation. It encodes hard constraints like location, work authorization, compensation floor, and earliest start date, plus soft preferences like team size and management style.

- Jill, the employer agent, ingests a job description and a hiring manager brief. It turns the brief into a structured request with must-haves, nice-to-haves, salary bands, interview loop design, and allowed work modalities.

- The two agents exchange offers and requests like automated purchasing systems do. They do not decide who gets hired. They determine whether a match is plausible and worth the team’s attention.
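One way to picture the two structured records and the plausibility check described above is the sketch below. The class names, field names, and the `plausibility_gaps` helper are assumptions made for illustration; the article does not describe the schema Jack and Jill actually use.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class CandidateProfile:
    """Hypothetical shape of what Jack might encode after intake."""
    locations: set[str]            # places the candidate will work from
    work_authorization: set[str]   # countries where no sponsorship is needed
    compensation_floor: int        # minimum acceptable base, in USD
    earliest_start: date
    skills: set[str]
    soft_preferences: dict[str, str] = field(default_factory=dict)


@dataclass
class JobRequest:
    """Hypothetical shape of what Jill might build from a hiring brief."""
    location: str
    country: str
    must_haves: set[str]
    nice_to_haves: set[str]
    salary_band: tuple[int, int]   # (low, high) base, in USD
    latest_start: date


def plausibility_gaps(c: CandidateProfile, j: JobRequest) -> list[str]:
    """Return the hard constraints that fail; an empty list means plausible."""
    gaps = []
    missing = j.must_haves - c.skills
    if missing:
        gaps.append("missing must-haves: " + ", ".join(sorted(missing)))
    if j.location not in c.locations:
        gaps.append("location not acceptable")
    if j.country not in c.work_authorization:
        gaps.append("work authorization required")
    if c.compensation_floor > j.salary_band[1]:
        gaps.append("compensation floor above band")
    if c.earliest_start > j.latest_start:
        gaps.append("start date too late")
    return gaps
```

Soft preferences are stored but deliberately left out of the check: in this sketch only hard constraints can rule a match out, which mirrors the idea that the agents decide whether a match is worth attention, not who gets hired.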

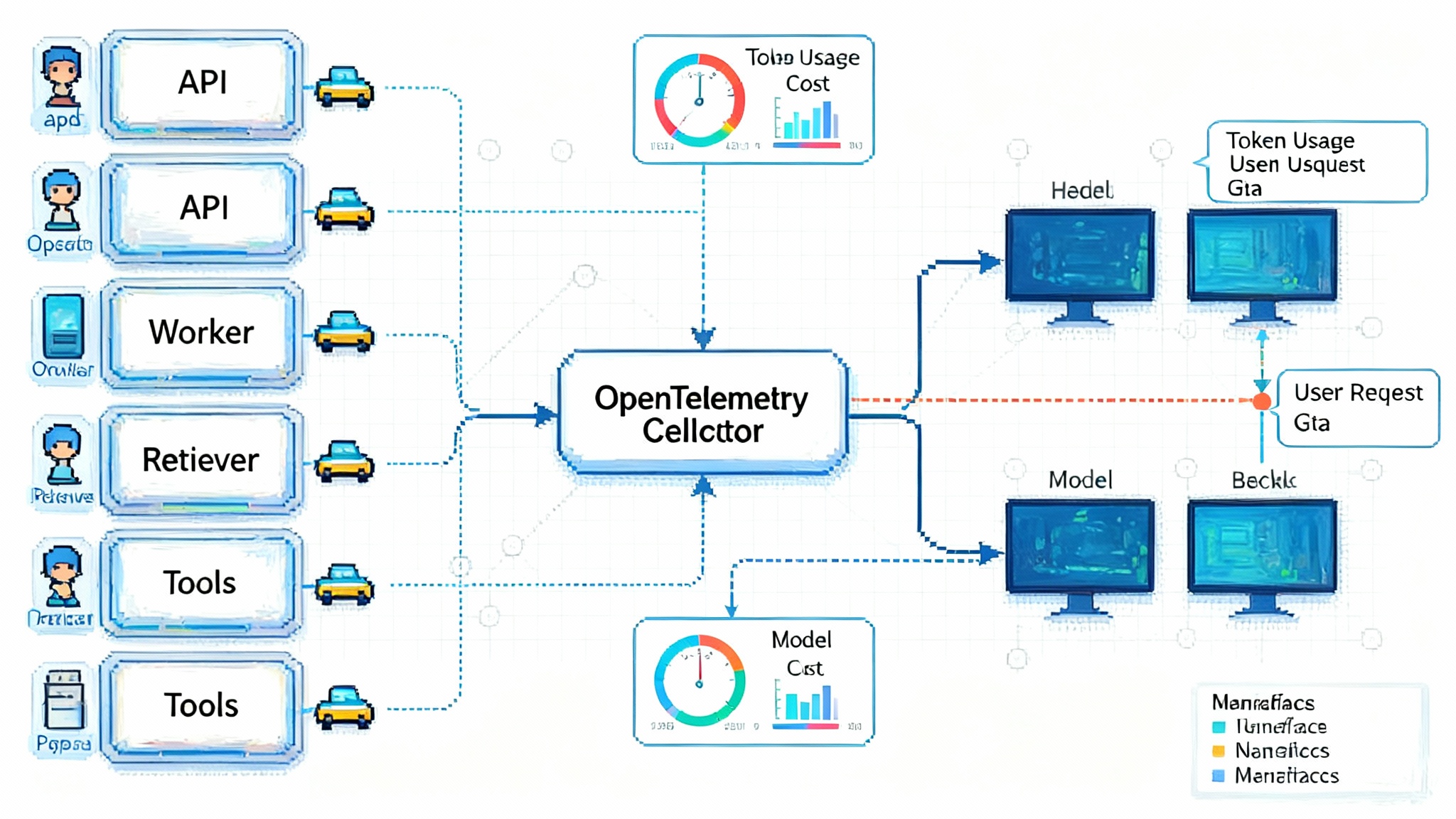

Under the hood, you can picture four building blocks:

- Data extraction and normalization. Free text from resumes, job posts, and emails becomes clean fields: skills with proficiency levels, projects with outcomes, salary numbers as ranges with currency and equity splits, locations as time zones.

- Matching with evidence. Rather than a single score, agents produce an evidence packet: links to projects, code commits, certification IDs, and interview notes. This packet moves with the candidate across the pipeline.

- Negotiation protocol. The agents use a simple contract-like schema. For example: role scope options, on-site or hybrid policies, salary anchors, bonus targets, equity bands, and the permissible interview calendar. If there is no way to meet constraints, they exit quickly and log why.

- Escalation logic. When a candidate asks for a nonstandard visa sponsorship or a salary outside the band, Jill can propose options or escalate to a recruiter with a prefilled summary and recommended paths.
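The exit-and-log and escalation behaviors can be sketched for a single term, base salary. This is a minimal illustration under stated assumptions: `Outcome`, `Decision`, `negotiate_base`, and the `stretch` exception threshold are hypothetical names, and a real protocol would cover many more terms than salary.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    PROCEED = "proceed"
    ESCALATE = "escalate"
    EXIT = "exit"


@dataclass
class Decision:
    outcome: Outcome
    reason: str                                  # logged so an exit can be explained later
    base_range: Optional[tuple[int, int]] = None


def negotiate_base(band: tuple[int, int], ask_floor: int, stretch: int = 0) -> Decision:
    """Compare a candidate's base-salary floor with the employer's band.

    `stretch` is an assumed policy knob: how far above the band a recruiter
    may approve an exception.
    """
    low, high = band
    if ask_floor <= high:
        # The workable range starts at whichever floor is higher.
        return Decision(Outcome.PROCEED, "ask fits inside the band",
                        (max(low, ask_floor), high))
    if ask_floor <= high + stretch:
        return Decision(Outcome.ESCALATE,
                        "ask is above the band but within the exception threshold")
    return Decision(Outcome.EXIT, "ask exceeds the band and the exception threshold")
```

For a band of 180,000 to 220,000 and a floor of 200,000, `negotiate_base((180_000, 220_000), 200_000)` proceeds with a workable range of 200,000 to 220,000. Every branch carries a reason string, so a quick exit still leaves a record of why.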

If you care about how these protocols generalize across industries, read about how standardized agent interfaces are forming a backbone in other domains in our piece on the USB C moment for agent protocols.

A realistic agent-to-agent exchange

Here is how a typical early interaction might look if you could read the transcript.

- Jill: For Senior Backend Engineer, must have skills are Go, Postgres, and Kubernetes. Compensation band is 180 to 220 thousand dollar base with equity. Work policy is hybrid two days on site in San Francisco. Can the candidate interview on Tuesday or Wednesday next week?

- Jack: Candidate meets skills via three shipped services in Go and a five year track record operating clusters on Kubernetes. Seeks 200 to 240 thousand dollar base to switch. Relocation is acceptable within eight weeks. Tuesday afternoon is open. Any flexibility for one remote week per month to accommodate family travel?

- Jill: The team will consider 230 thousand dollar base for top tier evidence. Equity refresh at year one is possible. Remote week per month is acceptable after onboarding. Proposed interview loop is a one hour system design plus a one hour coding exercise, then onsite. Shall I propose times and send the evidence packet?

- Jack: Proceed. Evidence packet includes links to production incidents resolved, performance optimizations with 40 percent latency reduction, and a reference contact ready for Thursday. Candidate accepts Tuesday design at 1:00 p.m. Pacific.

This is not a replacement for human interviews. It is a clearing function. The agents translate fuzzy preferences into precise options, then lock dates before the humans’ calendars fill up.

What this does to time to hire

Time to hire is the sum of four delays: finding people, getting their attention, scheduling, and making a decision. Two-sided agents compress each delay.

- Discovery. Jack continuously scans public postings and private briefs, then prequalifies matches against a candidate’s must-haves. Jill runs the inverse. This turns stop-start searches into a persistent background process.

- Response. Agents reply in minutes, not days. That makes it easier to reach a firm yes or no, which matters more than lingering at a maybe.

- Scheduling. Agents reconcile calendars programmatically. They offer three concrete windows and hold them, which raises the booking rate for first calls and technical screens.

- Decision support. By sending evidence with each match, they cut the time teams spend hunting for proof inside many tabs.
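The scheduling step above, reconciling two calendars and offering three concrete windows, can be sketched as an interval intersection. The function name `common_slots` and its parameters are assumptions for illustration, and the sketch assumes every datetime is already expressed in one shared time zone and that the windows within each list do not overlap.

```python
from datetime import datetime, timedelta

Window = tuple[datetime, datetime]  # (start, end) of a free block


def common_slots(candidate_free: list[Window], team_free: list[Window],
                 length: timedelta = timedelta(hours=1),
                 limit: int = 3) -> list[Window]:
    """Return up to `limit` slots of `length` that both sides have free."""
    slots = []
    for c_start, c_end in candidate_free:
        for t_start, t_end in team_free:
            # The overlap of two windows runs from the later start to the earlier end.
            start = max(c_start, t_start)
            end = min(c_end, t_end)
            while start + length <= end:
                slots.append((start, start + length))
                start += length
    slots.sort()            # earliest options first
    return slots[:limit]
```

Holding the returned slots on both calendars until one is confirmed is a separate step that depends on the calendar system in use, so it is left out here.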

Expect the biggest gains in two places: time to first interview and time to slate. If your baseline is five business days to get a first conversation scheduled, agents can shave that to one or two by coordinating both sides and prefilling context. If it takes three weeks to assemble a full slate for a role, agents that negotiate constraints up front can collapse that to one week.

Bias and guardrails without wishful thinking

Agent-to-agent matching can reduce certain human biases, but only if teams design the system with constraints that matter and logs that can be audited. Treat fairness as an engineering problem.

- Make requirements explicit. Put location windows, salary bands, security clearance, and visa policies into machine-readable constraints. This prevents silent rule shifting that often disadvantages underrepresented candidates.

- Use blind evidence in early rounds. Allow Jill to compare skills and outcomes without names, faces, or schools until there is a live conversation on the calendar. Reveal identity after fit is established.

- Track outcome parity over time. Measure interview rates, pass through rates, and offers by cohort. If any group consistently sees lower pass through at the same skill evidence levels, you have a model or process problem. Fix by adjusting features, retraining, or changing screens.

- Log every decision. Every auto reject should write a reason code, the constraint violated, and the minimal fields used. This creates an audit trail your legal team can defend.

- Force salary compliance. If a request exceeds the posted range for a jurisdiction, the system must either disclose the full range or refuse to proceed, depending on local rules. Build those rules in, rather than relying on manual checks.
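The "log every decision" guardrail above can be sketched as one structured record per auto-reject. The function name, the field names, and the example reason code are hypothetical; the point is only that each record carries a reason code, the constraint violated, and the minimal fields consulted.

```python
import json
from datetime import datetime, timezone


def reject_record(candidate_id, requisition_id, reason_code,
                  constraint, fields_used, when=None):
    """Build one JSON log line describing an automated rejection."""
    when = when or datetime.now(timezone.utc)
    record = {
        "event": "auto_reject",
        "candidate_id": candidate_id,
        "requisition_id": requisition_id,
        "reason_code": reason_code,           # short, stable code for reporting
        "constraint_violated": constraint,    # which explicit rule failed
        "fields_used": sorted(fields_used),   # only what the decision relied on
        "logged_at": when.isoformat(),
    }
    return json.dumps(record, sort_keys=True)
```

Writing one JSON line per decision keeps the trail easy to query later, for example to count rejections by reason code when checking outcome parity.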

Where the ATS and LinkedIn fit

Agents are not a new system of record. They are a coordination layer. To avoid chaos, keep your Applicant Tracking System as the source of truth for candidates, jobs, and stages.

Integration patterns that work in practice:

- Read from the ATS. Jill pulls job requisitions, stages, and feedback forms from systems like Greenhouse, Lever, Workday, or SuccessFactors. That ensures the agent does not invent stages or bypass compliance steps.

- Write back the minimum viable data. When Jack and Jill agree to schedule, they create a candidate record in the ATS with a structured summary, the evidence packet, and a timestamped transcript. Use webhooks to keep status in sync.

- Deduplication. Use email plus phone plus LinkedIn profile URL as the dedupe key. If a record exists, the agent appends notes rather than creating a duplicate.

- Permissions and privacy. Agents only access the requisitions and candidates that a human recruiter would. Your identity provider remains the bouncer. All agent actions are on behalf of a real user who can be audited.
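The dedupe key from the list above only works if the three fields are normalized before comparison. A minimal sketch, assuming phone numbers are always captured with their country code; `dedupe_key` and `upsert` are hypothetical helpers, and the in-memory dictionary stands in for whatever lookup your ATS provides.

```python
import re
from urllib.parse import urlsplit


def dedupe_key(email: str, phone: str, linkedin_url: str) -> tuple[str, str, str]:
    """Normalize email, phone, and LinkedIn URL into a comparable key."""
    email_n = email.strip().lower()
    phone_n = re.sub(r"\D", "", phone)                 # keep digits only
    parts = urlsplit(linkedin_url.strip().lower())     # drops query and fragment
    url_n = (parts.netloc + parts.path).removeprefix("www.").rstrip("/")
    return (email_n, phone_n, url_n)


def upsert(records: dict, email: str, phone: str, linkedin_url: str, note: str):
    """Append the note to an existing record, or create the record if new."""
    key = dedupe_key(email, phone, linkedin_url)
    records.setdefault(key, []).append(note)
    return key
```

Requiring all three fields to match is the strict reading of the rule. It avoids merging two different people by accident, at the cost of missing duplicates where a candidate changed one of the three.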

Why this is bigger than recruiting

Once two agents can agree on constraints, price, and timing, other categories become natural fits.

- Sourcing. Vendor agents scan catalogs and supplier capacity, while buyer agents state delivery windows and quality thresholds. They settle on shortlists automatically and escalate only for negotiation of edge cases.

- Procurement. A buyer agent issues a request with exact terms. Seller agents respond with compliant offers that include service level agreements, pricing tiers, and warranty terms. Humans step in for complex trade-offs.

- Business-to-business sales. Seller agents qualify leads, propose demo times, and draft orders against a buyer’s procurement rules. The first call becomes a substantive discussion, not calendaring and paperwork.

If you want to see how autonomy beachheads form in production environments, compare this pattern to the rise of agentic AI in customer experience. And if your integrations are shallow or fragmented, study how post API agents that click across screens help bridge legacy gaps.

A rollout playbook for talent teams

You can pilot dual agents without rearchitecting your stack. Here is a pragmatic plan that fits inside a quarter.

Phase 1: Two weeks to define the system

- Choose one role family with repeatable signals. Sales development, customer support, or mid-level engineering are good starting points. Avoid roles with undefined scopes.

- Create a canonical job schema. Must-haves, nice-to-haves, salary band, work modality, team size, reporting line, and interview loop. Require a reason if any must-have is left blank.

- Codify constraints. Put location windows, work authorization, and salary rules into a configuration file, not a slide deck. Include escalation thresholds for exceptions.

- Align with legal and security. Confirm logging, data residency, and retention. Decide which fields can be used for prescreening and which are only revealed after a live call is scheduled.
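To make "a configuration file, not a slide deck" concrete, here is a sketch of what such a file and a loader might look like. Every key and value in `CONFIG_TEXT` is invented for illustration, including the role family, the band, and the escalation threshold; substitute your own rules.

```python
import json

# Hypothetical constraints file for one pilot role family.
CONFIG_TEXT = """
{
  "role_family": "sales-development",
  "location_windows": [{"timezone": "America/Los_Angeles", "max_offset_hours": 3}],
  "work_authorization": {"sponsorship_offered": false},
  "salary": {"currency": "USD", "band_low": 60000, "band_high": 80000},
  "escalation": {"max_over_band_pct": 5}
}
"""

REQUIRED_KEYS = ("role_family", "location_windows", "work_authorization",
                 "salary", "escalation")


def load_constraints(text: str) -> dict:
    """Parse the constraints and fail loudly if a section is missing or invalid."""
    cfg = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in cfg]
    if missing:
        raise ValueError("missing sections: " + ", ".join(missing))
    salary = cfg["salary"]
    if salary["band_low"] > salary["band_high"]:
        raise ValueError("salary band is inverted")
    return cfg
```

Validating at load time means a blank or contradictory rule stops the pilot before any candidate is screened against it.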

Phase 2: Four weeks to integrate and calibrate

- Connect the ATS. Read requisitions and write back scheduled events plus structured evidence. Turn on webhooks for stage changes.

- Seed Jack with 100 to 300 consenting candidates. Include a range of experience levels and backgrounds to stress test matching.

- Calibrate Jill with three live requisitions. Have the hiring manager spend one hour to tune must-haves, interview loop, and salary flexibility.

- Run weekly red team sessions. Try to break the system with trick resumes, ambiguous titles, and conflicting constraints. Fix failure modes fast.

Phase 3: Four weeks to scale and govern

- Expand to five requisitions and 1,000 candidates. Track time to first interview and slate size per role.

- Add structured debriefs. After each human interview, log which agent signals predicted success and which misled. Use that feedback to retrain matching features.

- Introduce a compensation check. Block any agent-proposed conversation that violates a location’s transparency rules or exceeds the band without an approved exception.

- Prepare a playbook for hiring managers. One page that explains what the agents do, what to expect in the first call, and how to give feedback that the system can learn from.

The metrics that matter

If agent-to-agent hiring is working, you will see movement in specific numbers. Track these with definitions that teams cannot game.

- Time to first interview. Median working hours from requisition open to a live scheduled call. Target 24 to 48 hours for pilot roles.

- Slate assembly time. Days to present four to six qualified candidates who meet must-haves and constraints. Target seven days or less.

- Booking yield. Percentage of proposed interview slots that end up confirmed. Good agent scheduling should push this above 60 percent.

- Evidence completeness. Share of candidates where the packet includes role-relevant work samples, quantifiable outcomes, and references. Aim for 80 percent in pilot roles.

- Screen-to-onsite conversion. Percentage of first interviews that proceed to deeper technical or panel interviews. Stabilize this in the 30 to 50 percent range as agents learn to filter better.

- Offer rate and acceptance rate. The point of better matching is fewer offers for the same number of hires. Watch both numbers month over month.

- Compensation delta. Difference between posted band midpoint and accepted offer. If agents are matching well, the delta shrinks and variance tightens.

- Escalation rate. Percentage of agent conversations that require human intervention before scheduling. This should fall over time as rules and patterns harden.

- Parity by cohort. Compare pass-through and offer rates by experience level, location, and other legally permitted cohorts. Investigate gaps that persist after controlling for skills and evidence.

- Duplicate reduction. Share of candidates that appear once and only once across job slots. A clean graph is a sign that your dedupe strategy is working.
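A few of the metrics above reduce to short calculations once the underlying events are logged. The sketch below covers three of them; the function names and input shapes are assumptions, and converting timestamps into working hours is assumed to happen upstream.

```python
from collections import defaultdict
from statistics import median


def time_to_first_interview(working_hours: list[float]) -> float:
    """Median working hours from requisition open to a scheduled live call."""
    return median(working_hours)


def booking_yield(proposed: int, confirmed: int) -> float:
    """Share of proposed interview slots that end up confirmed."""
    return confirmed / proposed if proposed else 0.0


def pass_through_by_cohort(rows) -> dict:
    """rows: iterable of (cohort, passed) pairs; returns the pass rate per cohort."""
    counts = defaultdict(lambda: [0, 0])   # cohort -> [passed, total]
    for cohort, passed in rows:
        counts[cohort][0] += int(passed)
        counts[cohort][1] += 1
    return {cohort: p / t for cohort, (p, t) in counts.items()}
```

Using the median rather than the mean keeps one stalled requisition from distorting the headline number. The per-cohort rates are a starting point for the parity check, not the full analysis, since they do not yet control for skills and evidence.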

Risks and how to contain them

- Misrepresentation. An agent might oversell a candidate or a role. Mitigate by anchoring every claim to evidence and by requiring reference-ready contacts before onsite.

- Collusion with spam. If either side learns to game the protocol, your signal collapses. Rate-limit low-quality queries and use risk scoring to identify patterns that waste time.

- Overfitting to what worked last quarter. If you only hire clones, your range of outcomes narrows. Periodically inject diverse profiles that meet must-haves and measure how they perform.

- Calendar thrash. Agents that reschedule too often erode trust. Penalize reschedules in the matching score and enforce cooldown periods.

- Shadow pipelines. Teams may try to bypass the system for favorite candidates. That is fine, as long as they log the candidate in the ATS and subject the record to the same post-hire analysis.

Crossing the chasm for agent economies

Agent-to-agent markets cross over when three things are true.

- The protocol is simple and common. Everyone understands the fields and constraints. Jack and Jill’s launch shows a concrete schema that real companies are starting to use.

- The evidence travels. Work artifacts, references, and outcomes accompany the profile. Hiring managers trust it enough to take the first call.

- The incentives line up. Candidates get a real shot at roles they might never see. Employers see fewer but better conversations. Recruiters spend more time closing and less time sorting.

If you can achieve those conditions inside your company by year end, you will not only cut time to hire. You will build a compounding advantage in how fast teams form and how quickly they deliver.

The bottom line

Jack and Jill’s launch is more than a feature drop. It is a visible switch in the hiring interface, from forms and inboxes to agents that negotiate on your behalf. Set your rules, wire the agents to your Applicant Tracking System, and let them clear the path to the first serious conversation. When the bots are done dealing, the humans can do what only humans do well: choose, convince, and build together.