The Warehouse Agent Goes Free. Operations Just Changed

AutoScheduler has released a free Warehouse Decision Agent that coordinators can use today. Warehousing is emerging as the first real beachhead for agentic AI, with crisp data, clear KPIs, and small models that plan and replan real work.

Breaking: a warehouse agent teams can actually use now

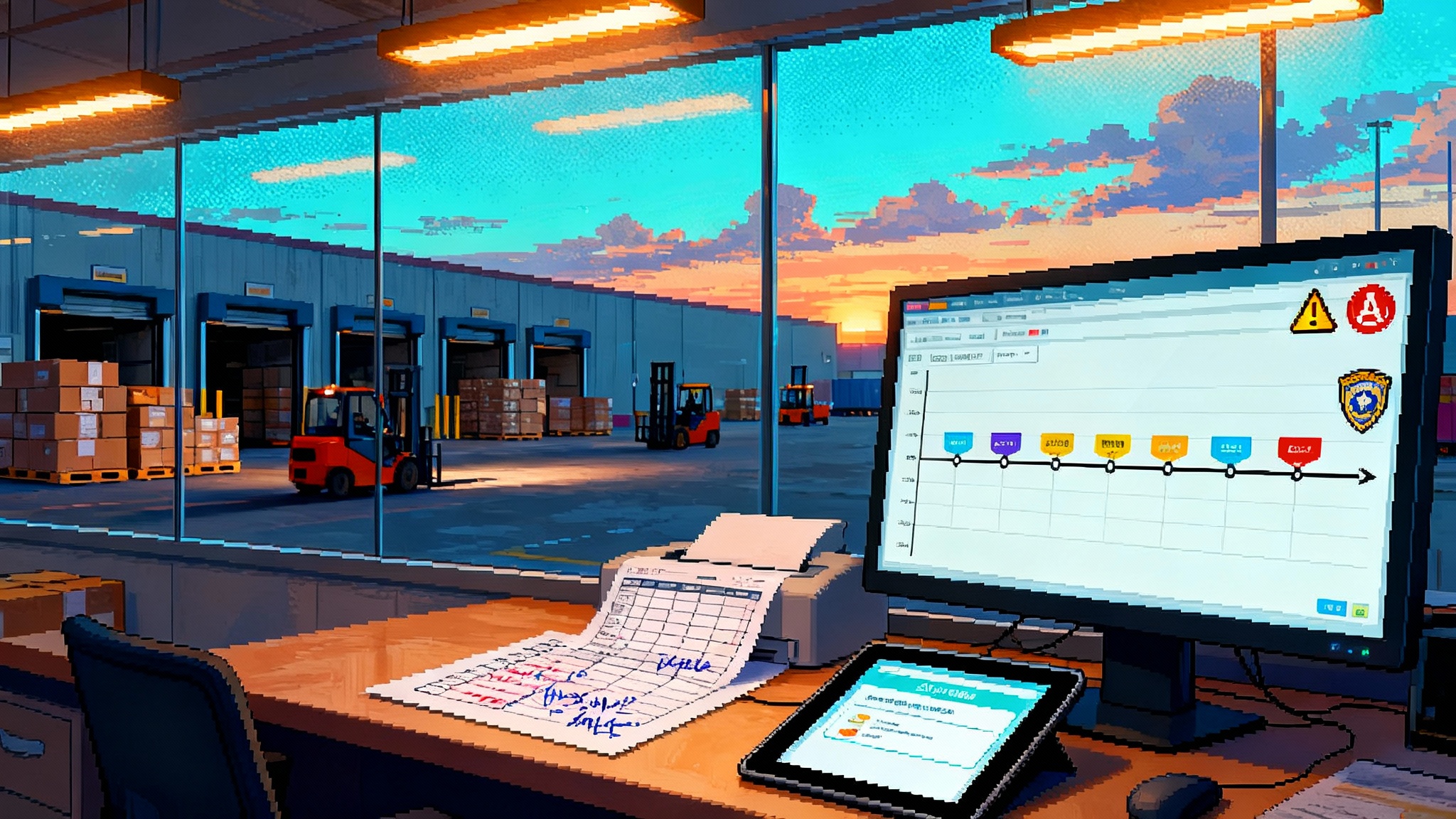

On October 7, 2025, AutoScheduler announced a free Warehouse Decision Agent that is positioned as a working sidekick for coordinators who plan docks, labor, and moves in real time. The move shifts agentic AI out of slideware and into day-to-day shift planning where every minute counts, as confirmed in AutoScheduler's launch announcement. The promise is simple: less time assembling a plan, more time running it.

Many operators have watched polished demos that look clever on stage but collapse under messy data, brittle interfaces, and the thousand small exceptions that define real operations. A free agent aimed at warehouse coordinators lands differently. It targets the narrow decisions that repeat every hour, plugs into systems teams already use, and measures impact against familiar KPIs.

Why warehousing is the first operations beachhead

Warehouses are unusually ready for agents because they produce tight data exhaust and track outcomes with simple, money-on-the-table metrics. Two of the clearest are dock-to-stock time and truck dwell time. Dock-to-stock measures the stretch from receipt on the dock to ready-for-pick inventory. Truck dwell time measures how long a trailer sits on site between gate check-in and departure. Dock-to-stock in particular is defined and benchmarked by industry bodies, as in the APQC dock-to-stock definition.
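Both metrics reduce to simple timestamp arithmetic, which is part of why they work so well as agent objectives. Here is a minimal sketch, assuming dwell runs from gate-in to gate-out and dock-to-stock runs from dock receipt to ready-for-pick. The timestamps and variable names are hypothetical.

```python
from datetime import datetime

def hours_between(start: datetime, end: datetime) -> float:
    """Elapsed time between two timestamps, in hours."""
    return (end - start).total_seconds() / 3600.0

# Hypothetical timestamps for one trailer and one of its receipts.
gate_in = datetime(2025, 10, 7, 6, 0)
gate_out = datetime(2025, 10, 7, 9, 30)
received_on_dock = datetime(2025, 10, 7, 6, 45)
ready_for_pick = datetime(2025, 10, 7, 11, 15)

dwell_hours = hours_between(gate_in, gate_out)
dock_to_stock_hours = hours_between(received_on_dock, ready_for_pick)

print(f"dwell: {dwell_hours} h, dock-to-stock: {dock_to_stock_hours} h")
```

Your site may start or stop either clock at a different event. What matters is that the definition is written down once and applied the same way to human and agent plans.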

Beyond crisp metrics, several practical conditions make warehouses ideal for early agents:

- High-frequency decisions with contained scope. Every shift brings hundreds of assignable tasks with short horizons: which door to assign, what to unload next, who moves what where, which replenishments to accelerate.

- Narrow, well-instrumented action space. The agent does not invent new workflows. It sequences moves already available in Warehouse Management Systems, Transportation Management Systems, and Yard Management Systems.

- Clear constraints. Safety rules, union rules, equipment limits, and space capacity can be encoded as constraints that the agent must satisfy when proposing plans.

- Fast, visible feedback. When an agent changes a dock sequence, the effect shows up within hours in gate dwell and aging trailers. That feedback tightens the learning loop.

Think of a warehouse like an airport gate area. Planes, passengers, and ground crews become trailers, pallets, and forklift drivers. The work is noisy and physical, yet the scheduling problem is digital. This is fertile ground for practical agents.

We have already seen other domains cross this threshold. In customer experience, for example, the patterns of autonomy are arriving first where the data and KPIs are tight, as discussed in our analysis of CX as the first autonomy beachhead. Warehousing fits the same pattern.

The new recipe: small models plus domain ontologies

Most warehouses do not need a giant state-of-the-art language model to improve shift outcomes. They need a planner that understands the facility’s vocabulary and constraints, can read the live schedule, and can replan when reality disagrees.

A modern pattern looks like this:

- A small or mid-sized language model to reason about tasks, summarize status, and generate human-readable updates. With careful prompt design and a handful of warehouse-specific examples, the model is cheap to run and fast enough for minute-by-minute replans.

- A domain ontology that encodes the operation. It names resources such as docks, doors, forklifts, pick faces, forward pick, reserve locations, staging lanes, automation assets, and labor roles. It also encodes relationships: a door is attached to a zone, a zone has a staging area, an equipment type can service certain pallet weights.

- A constraint solver to enforce feasibility. The agent can draft many plans, but only the solver blesses those that fit capacity, labor certifications, and safety policies.

- Tool interfaces into WMS, TMS, and YMS. The agent does not move pallets itself. It proposes sequences and writes tasks through the systems of record, then listens for confirmations and events.
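To make the ontology idea less abstract, here is a minimal sketch of the relationships named above, written as plain Python dataclasses. The class names, fields, and example values are illustrative assumptions, not a schema from any WMS or from AutoScheduler.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Zone:
    name: str
    staging_area: str      # a zone has a staging area

@dataclass(frozen=True)
class Door:
    door_id: str
    zone: Zone             # a door is attached to a zone

@dataclass(frozen=True)
class EquipmentType:
    name: str
    max_pallet_kg: float   # heaviest pallet this equipment may service

def can_service(equipment: EquipmentType, pallet_kg: float) -> bool:
    """Feasibility rule: equipment may only handle pallets within its limit."""
    return pallet_kg <= equipment.max_pallet_kg

# Hypothetical facility data.
inbound_a = Zone(name="INBOUND-A", staging_area="STAGE-A1")
door_12 = Door(door_id="D12", zone=inbound_a)
reach_truck = EquipmentType(name="reach_truck", max_pallet_kg=1200.0)

print(door_12.zone.staging_area)        # where freight from door D12 stages
print(can_service(reach_truck, 900.0))  # is a 900 kg pallet feasible?
```

Even a structure this small lets a planner answer "where does freight from this door stage?" and "can this truck lift that pallet?" without guessing from table names.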

Here is a concrete loop for an inbound-heavy shift:

- At 05:30 the agent ingests the gate schedule, open purchase orders, staffing roster, equipment health flags, and known constraints such as a down conveyor.

- It sequences doors and labor by window, maximizing the flow of priority receipts and reducing the risk that a hot order lacks pickable inventory.

- It posts a coherent shift plan to the supervisor and to team tablets.

- Trucks run late. At 09:45 the agent detects the slip, simulates knock-on effects on dock-to-stock and dwell, and proposes a new sequence while holding firm to hard constraints.

- A supervisor taps approve. The agent pushes updates to WMS tasks and drafts a standardized message to the carrier for the impacted doors.
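The detect, propose, approve part of that loop can be sketched in a few lines. This is a toy illustration under stated assumptions: the 30-minute slip threshold, the `Appointment` fields, and the ETA-then-priority ordering rule are all hypothetical, and a real agent would simulate KPI impact and check hard constraints before proposing anything.

```python
from dataclasses import dataclass

SLIP_THRESHOLD_MIN = 30  # assumed tolerance before a replan is triggered

@dataclass(frozen=True)
class Appointment:
    trailer_id: str
    scheduled_min: int  # appointment time, minutes after midnight
    eta_min: int        # latest estimated arrival, minutes after midnight
    priority: int       # 1 = hottest

def slipped(appointments):
    """Trailers whose ETA trails the appointment by more than the threshold."""
    return [a.trailer_id for a in appointments
            if a.eta_min - a.scheduled_min > SLIP_THRESHOLD_MIN]

def propose_sequence(appointments):
    """Order unloads by ETA, breaking ties by priority."""
    ordered = sorted(appointments, key=lambda a: (a.eta_min, a.priority))
    return [a.trailer_id for a in ordered]

def replan(appointments, current_sequence, approve):
    """Change the sequence only if something slipped and a human approves."""
    if not slipped(appointments):
        return current_sequence
    proposal = propose_sequence(appointments)
    return proposal if approve(proposal) else current_sequence

# Hypothetical 09:45 snapshot: T1 was due at 09:00 but now arrives at 11:00.
appts = [
    Appointment("T1", scheduled_min=540, eta_min=660, priority=1),
    Appointment("T2", scheduled_min=600, eta_min=600, priority=2),
    Appointment("T3", scheduled_min=630, eta_min=640, priority=3),
]
current = ["T1", "T2", "T3"]
new_sequence = replan(appts, current, approve=lambda proposal: True)
print(new_sequence)
```

The `approve` callback stands in for the supervisor's tap. If it returns False, the existing sequence stays in force.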

Most of this is text, timestamps, and structured inventory data. That is why smaller models paired with a crisp ontology perform well. The agent’s power is not poetry generation. It is the ability to reconcile a live, inconsistent picture of the floor and propose a feasible next best move.

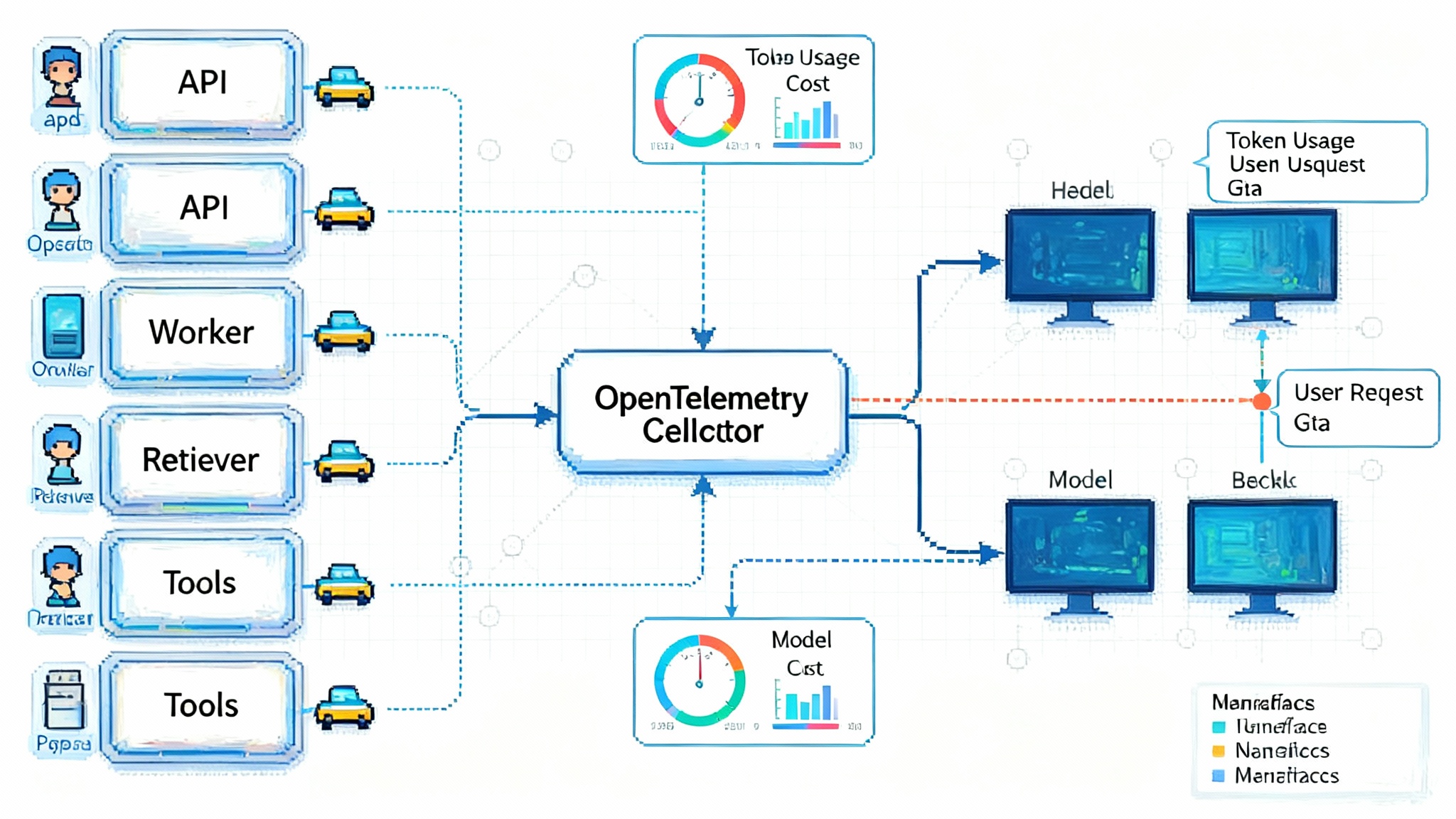

The real-world agent stack

To pilot in 30 days and scale in 2026, teams need a pragmatic stack. Think of five planes: data, memory, reasoning, action, and governance.

1) Data plane

- Connectors to WMS, TMS, YMS, Labor Management, time clocks, and carrier appointment portals. Read events and write tasks through approved APIs or message queues.

- Event normalization. Turn syslog-style messages into typed events such as trailer_checked_in, dock_assigned, pallet_put_away, wave_released.

- Reference data catalog. Maintain ground truth for doors, zones, equipment, SKU families, units of measure, and labor certifications.
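Event normalization is easier to reason about with an example. The sketch below assumes a made-up raw line format of `timestamp source event_type key=value ...`; real WMS and YMS feeds will look different, so treat the parser as a placeholder for whatever adapter each source needs.

```python
from dataclasses import dataclass
from datetime import datetime

# Typed event names taken from the list above.
KNOWN_EVENT_TYPES = {
    "trailer_checked_in", "dock_assigned", "pallet_put_away", "wave_released",
}

@dataclass
class Event:
    ts: datetime
    source: str
    event_type: str
    attrs: dict

def normalize(line: str) -> Event:
    """Parse one raw line (hypothetical format) into a typed event."""
    ts_raw, source, event_type, *pairs = line.split()
    if event_type not in KNOWN_EVENT_TYPES:
        raise ValueError(f"unknown event type: {event_type}")
    attrs = dict(pair.split("=", 1) for pair in pairs)
    return Event(datetime.fromisoformat(ts_raw), source, event_type, attrs)

raw = "2025-10-07T05:42:10 YMS trailer_checked_in trailer=TR-1042 gate=G2"
event = normalize(raw)
print(event.event_type, event.attrs)
```

Rejecting unknown event types loudly is a deliberate choice here: a silent pass-through tends to hide the exact integration gaps a pilot is supposed to surface.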

2) Memory

- Short-term memory for the current shift. A rolling event buffer plus a feature cache for the planner. Keep the last 48 to 72 hours of high-resolution events to explain any decision.

- Long-term memory for patterns. Store daily aggregates for dock-to-stock, dwell, pick density heatmaps, and exception types to improve prompts and objective functions.

- Human context memory. Persist supervisor notes such as a temporary no-go aisle or a driver with restricted certification. The agent should respect these annotations automatically.
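A rolling event buffer is a small data structure. Here is one possible sketch using a deque with time-based eviction. It assumes events arrive in timestamp order and uses 72 hours as the retention window; the class and method names are hypothetical.

```python
from collections import deque
from datetime import datetime, timedelta

class RollingEventBuffer:
    """Keeps only events newer than the retention window (oldest first)."""

    def __init__(self, retention: timedelta = timedelta(hours=72)):
        self.retention = retention
        self._events = deque()  # items are (timestamp, payload)

    def append(self, ts: datetime, payload: dict) -> None:
        # Assumes events arrive in non-decreasing timestamp order.
        self._events.append((ts, payload))
        cutoff = ts - self.retention
        while self._events and self._events[0][0] < cutoff:
            self._events.popleft()

    def __len__(self) -> int:
        return len(self._events)

buf = RollingEventBuffer()
t0 = datetime(2025, 10, 7, 5, 30)
buf.append(t0, {"type": "trailer_checked_in"})
buf.append(t0 + timedelta(hours=1), {"type": "dock_assigned"})
buf.append(t0 + timedelta(hours=80), {"type": "wave_released"})
print(len(buf))  # the two events older than 72 hours have been evicted
```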

3) Reasoning

- Planner with time windows and resource constraints. Model doors, labor, forklifts, and automation assets as resources with capacities and setup times.

- Risk model. Estimate the impact of slips on dock-to-stock and dwell, then simulate resequencing. The agent should explain why it is changing the plan.

- Scenario engine. Allow fast what-if runs when a supervisor asks to protect an outbound wave or to prioritize a trailer.
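To show what "resources with capacities and setup times" means in practice, here is a deliberately simple greedy sketch: each arriving trailer goes to whichever door frees up first. This is a heuristic stand-in, not a constraint solver, and the function name, tuple layout, and 10-minute setup time are assumptions.

```python
import heapq

def assign_doors(trailers, door_ids, setup_min=10):
    """Greedy door assignment.

    trailers: list of (trailer_id, arrival_min, unload_min)
    returns:  dict of trailer_id -> (door_id, start_min, end_min)
    """
    # Min-heap of (time the door is next free, door_id).
    free_at = [(0, door) for door in door_ids]
    heapq.heapify(free_at)
    plan = {}
    for trailer_id, arrival, unload in sorted(trailers, key=lambda t: t[1]):
        door_free, door = heapq.heappop(free_at)
        start = max(arrival, door_free)   # wait for the truck or the door
        end = start + unload
        plan[trailer_id] = (door, start, end)
        heapq.heappush(free_at, (end + setup_min, door))  # add changeover time
    return plan

# Hypothetical inbound schedule, in minutes from shift start.
plan = assign_doors(
    trailers=[("T1", 0, 60), ("T2", 10, 30), ("T3", 20, 45)],
    door_ids=["D1", "D2"],
)
for trailer_id, slot in plan.items():
    print(trailer_id, slot)
```

Because each door holds at most one trailer at a time in this model, the "no simultaneous occupancy" rule is satisfied by construction. Priorities, labor, and equipment limits are exactly what a real solver would layer on top.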

4) Action and safety

- Tool schema. Define explicit functions such as create_putaway_task, resequence_dock, assign_labor, message_carrier. The model may only act through these tools.

- Policy guardrails. Hard constraints for safety and compliance. The agent cannot assign uncertified labor to a high-reach truck, cannot exceed slot capacities, and cannot schedule simultaneous occupancy of a door.

- Confirmation gates. High-impact actions require human-in-the-loop approval until performance thresholds are met.

- Rollback and compensating actions. Every write has a reverse path so supervisors can unwind mistakes quickly.
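The tool schema, guardrails, and confirmation gate fit together roughly as follows. This is a minimal sketch: the registry layout, the certification table, and the choice of which tools need approval are all hypothetical, and the tools do not actually write anywhere.

```python
class GuardrailViolation(Exception):
    """Raised when a proposed action breaks a hard constraint."""

# Hypothetical certification data; in practice this comes from Labor Management.
CERTIFICATIONS = {"alice": {"high_reach"}, "bob": set()}

def check_assign_labor(worker, equipment):
    if equipment not in CERTIFICATIONS.get(worker, set()):
        raise GuardrailViolation(f"{worker} is not certified for {equipment}")

def check_message_carrier(carrier, template):
    if not template:
        raise GuardrailViolation("carrier messages must use a template")

# The model may only act through tools registered here.
TOOLS = {
    "assign_labor": {"check": check_assign_labor, "needs_approval": True},
    "message_carrier": {"check": check_message_carrier, "needs_approval": False},
}

def execute(tool_name, approved=False, **params):
    spec = TOOLS[tool_name]          # unknown tools raise KeyError
    spec["check"](**params)          # hard constraints always run first
    if spec["needs_approval"] and not approved:
        return "pending_approval"    # confirmation gate
    return "executed"                # a real system would write to the WMS here

print(execute("assign_labor", worker="alice", equipment="high_reach"))
print(execute("assign_labor", approved=True, worker="alice", equipment="high_reach"))
```

Note the ordering: the guardrail check runs before the approval gate, so a supervisor is never asked to approve something that is infeasible in the first place.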

5) Governance and observability

- Immutable audit log. Capture prompts, tool calls, parameters, and outcomes per decision. This creates explainability for supervisors and compliance for audits.

- Metrics sink. Stream real-time counters for dock-to-stock, dwell, trailer aging, labor utilization, and exception resolution time.

- Incident postmortems. Tag decisions that caused rework and harvest new guardrails or ontology refinements.
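One way to approximate an immutable audit log in software is to chain each entry to the hash of the one before it, so any later edit is detectable. The sketch below is an illustration of that idea only. It is an assumption on our part, not something the AutoScheduler announcement describes, and it keeps entries in memory rather than in durable storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self):
        self._entries = []

    def append(self, record: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = json.dumps(record, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode("utf-8")).hexdigest()
        self._entries.append({"prev": prev, "body": body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was altered or reordered."""
        prev = GENESIS
        for entry in self._entries:
            expected = hashlib.sha256(
                (prev + entry["body"]).encode("utf-8")).hexdigest()
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append({"tool": "resequence_dock", "params": {"door": "D12"}, "outcome": "approved"})
log.append({"tool": "message_carrier", "params": {"carrier": "ACME"}, "outcome": "sent"})
print(log.verify())
```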

For teams building a similar stack, start with observability. We have a deeper guide to wiring this layer in our piece on zero-code LLM observability.

A 30-day pilot blueprint

A successful pilot is scoped, instrumented, and boring in the best way. Here is a practical plan that teams can execute without robots or new automation.

Week 0 to 1: Frame and wire

- Choose one site, one shift, and two metrics: dock-to-stock for inbound and gate dwell for yard. Define a 30-day success condition such as a measurable reduction in late putaways.

- Data connections. Read-only into WMS receipts, task statuses, and inventory locations. Read-only into carrier appointments and gate events. Daily roster and certifications from Labor Management.

- Ontology sketch. Name doors, zones, equipment, labor roles, and key flows such as cross-dock, flow-through, reserve putaway, and forward replenishment.

- Safety envelope. Define what the agent may change once it moves past shadow mode in week 3: door resequencing and message generation, but no live task creation yet.

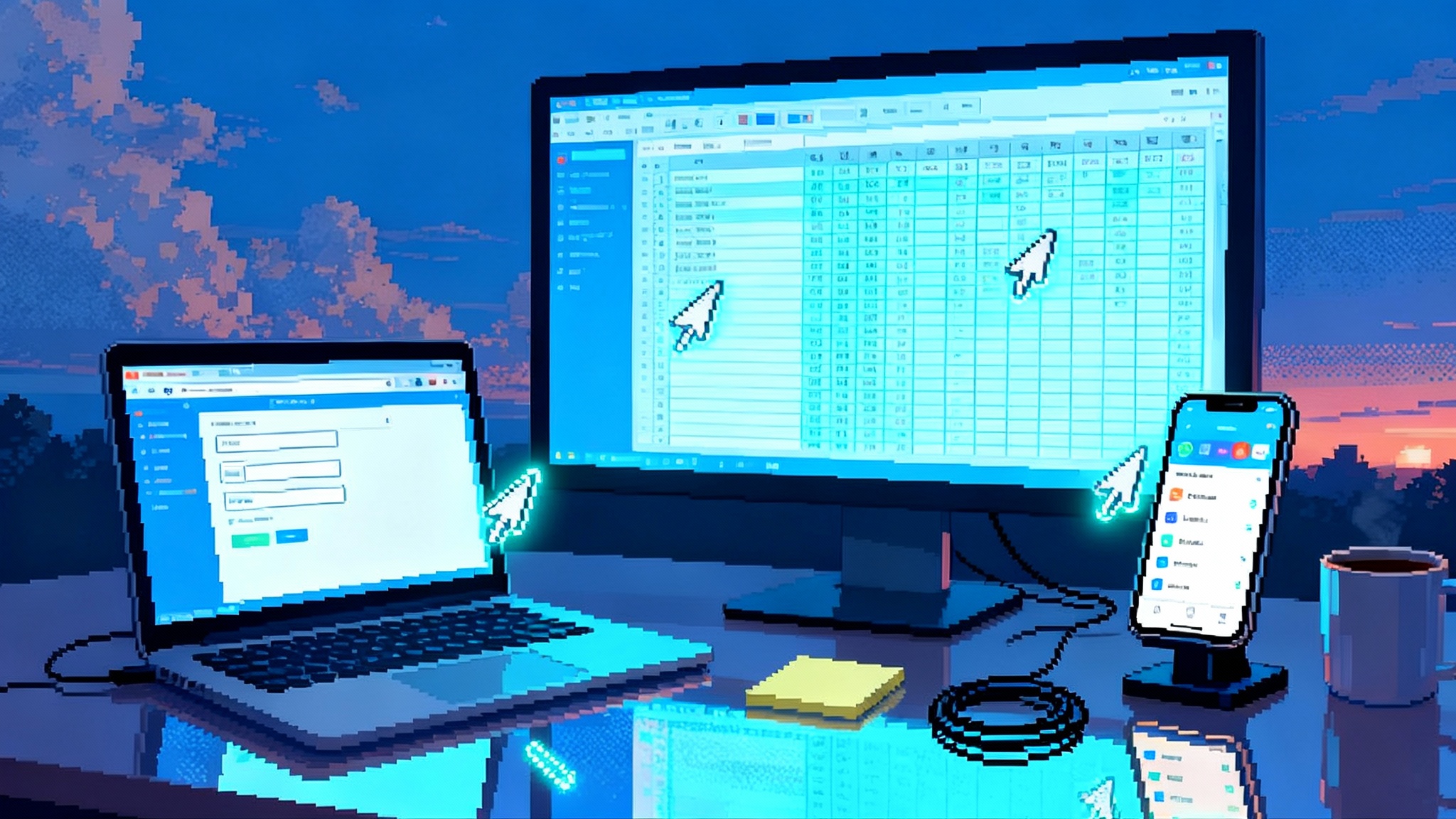

Week 2: Shadow operations

- The agent generates a shift plan and replans hourly, but supervisors execute manually.

- Compare agent plans against human plans on three criteria: on-time receipts for priority orders, average dock-to-stock by item family, and number of exception handoffs.

- Collect counterexamples where the agent missed context. Add those cases to the ontology and prompt library.

Week 3: Human-in-the-loop control

- Allow the agent to execute low-risk actions such as door resequencing and templated carrier messages with supervisor approval.

- Introduce action budgets. For example, the agent may propose at most five resequences per four hours to reduce churn on the dock.

- Tighten audit trails. Every approved action should have a visible why, including which constraint or KPI improved.
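An action budget like the one above is a sliding-window rate limit. Here is a minimal sketch using the example numbers of five resequences per four hours; the class name and the minutes-based clock are assumptions made for illustration.

```python
from collections import deque

class ActionBudget:
    """Allows at most max_actions within any sliding window of window_min minutes."""

    def __init__(self, max_actions: int = 5, window_min: int = 240):
        self.max_actions = max_actions
        self.window_min = window_min
        self._times = deque()  # minutes at which budget was spent, oldest first

    def try_spend(self, now_min: int) -> bool:
        # Forget actions that have aged out of the window.
        while self._times and now_min - self._times[0] >= self.window_min:
            self._times.popleft()
        if len(self._times) >= self.max_actions:
            return False  # over budget: hold the proposal
        self._times.append(now_min)
        return True

budget = ActionBudget()
results = [budget.try_spend(t) for t in (0, 10, 20, 30, 40, 50)]
print(results)                # the sixth proposal inside the window is refused
print(budget.try_spend(240))  # the first action has aged out, so this is allowed
```

When the budget is exhausted, the agent should hold or batch its proposals rather than drop them, so the supervisor still sees what it wanted to change.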

Week 4: Narrow autonomy

- Enable automatic creation of specific WMS tasks for putaway or replenishment under thresholds. Keep human approval for anything that changes labor assignments.

- Run daily 15-minute reviews. Supervisors scan the audit log, flag good calls, and mark any bad calls with a preferred correction. Feed findings back into guardrails.

- Decide on graduation criteria for phase two. For example, sustained improvement in dock-to-stock for inbound consumables and a reduction in average gate dwell for late arrivals.

At the end of 30 days, you either have a working agent in a narrow area or you have a precise list of blockers. Both outcomes are progress.

What to standardize now to scale in 2026

Scaling agents across sites is mostly a discipline problem. Here is what to lock down before you roll from one pilot to a network.

- Data contracts. Publish schemas for events and tools that every site must provide. Do not rely on one-off field names from legacy exports. Assign owners for each contract.

- Identity and permissions. Use single sign-on, per-site roles, and explicit tool scopes. The agent should never hold broad database credentials.

- Site profiles. Parameterize each building with door counts, zone maps, equipment profiles, and labor skill matrices. Treat these as versioned artifacts, not tribal knowledge.

- Evaluation harness. Create a replay dataset from each site that can run a two-hour slice of operations in simulation. Use it to regression test prompts, constraints, and new tools before live rollout.

- Guardrail library. Codify safety and compliance rules once, then inherit per site. Keep the list short and explicit. Each rule should have a clear test and a clear failure mode.

- Observability. Standardize dashboards for dock-to-stock, dwell, hot order protection, and exception rates. Add per-action latency and success counters so you can see health at a glance.

- Cost control. Run small models locally for plan-of-record actions and reserve larger models for rare, ambiguous cases. Track token spend per decision path to avoid surprises.

- Change management. Train supervisors to read audit logs, approve actions, and request scenario runs. The agent is a teammate, not a black box.
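Of the items above, data contracts are the easiest to make concrete. The sketch below checks an event payload against a published contract and lists what is wrong. The contract contents and field names are hypothetical; a production setup would more likely use a schema language than hand-rolled checks.

```python
# Hypothetical contract: event type -> required field -> expected Python type.
EVENT_CONTRACTS = {
    "trailer_checked_in": {"trailer_id": str, "gate_id": str, "ts": str},
    "dock_assigned": {"trailer_id": str, "door_id": str, "ts": str},
}

def contract_violations(event_type, payload):
    """Return a list of human-readable problems; an empty list means compliant."""
    contract = EVENT_CONTRACTS.get(event_type)
    if contract is None:
        return [f"no contract published for event type '{event_type}'"]
    problems = []
    for field_name, expected in contract.items():
        if field_name not in payload:
            problems.append(f"missing field '{field_name}'")
        elif not isinstance(payload[field_name], expected):
            problems.append(f"field '{field_name}' should be {expected.__name__}")
    return problems

good = {"trailer_id": "TR-1042", "gate_id": "G2", "ts": "2025-10-07T05:42:10"}
legacy = {"TRLR_NO": "TR-1042", "gate_id": "G2", "ts": "2025-10-07T05:42:10"}

print(contract_violations("trailer_checked_in", good))
print(contract_violations("trailer_checked_in", legacy))
```

The second payload shows the failure mode the data-contract item warns about: a one-off field name from a legacy export that every downstream consumer would otherwise have to special-case.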

If your stack depends on standardized tools and capabilities, you will recognize the pattern we explored in the USB-C moment for AI agents. Vertical adapters and clear contracts accelerate rollout.

How small models and ontologies span WMS, TMS, and YMS

A warehouse agent earns trust by making the same systems more effective rather than bypassing them.

- In WMS, the agent sequences receipts, putaways, replenishments, and picks using existing task types. It watches task confirmations to assess plan drift and decides whether to pull releases or accelerate replenishments.

- In TMS, it reads appointment windows, truck arrival messages, and carrier performance. It drafts standardized messages when slips occur and proposes gate schedule changes that reduce dwell.

- In YMS, it matches doors to trailers based on content, urgency, and downstream waves. It tracks trailer aging and flags trailers whose age is climbing before they become problems.

Across these systems, the ontology is the glue. It maps the names in each database to physical reality, so the agent can reason about resources and not just tables. This mapping lets the agent explain, in plain language, why resequencing two doors will protect a hot order and shave hours off dock-to-stock.

Action safety and audit trails that operations accept

Operations teams will not accept an agent that acts without guardrails or explanations. A simple safety playbook helps an organization ship with confidence.

- Single-write principles. The agent must write through systems of record, not side databases. That keeps reconciliation simple.

- Safe defaults. If a rule is unclear, the agent proposes and waits. If a tool errors, it retries with backoff, then escalates with a templated message.

- Explain every action. For each write, store input state, proposed change, KPI deltas, and the constraint set. Make this readable on one screen for a supervisor.

- Post-incident fixes. When a decision causes rework, add a test to the harness so the bug never ships again.
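The "retry with backoff, then escalate" default can be sketched as a small wrapper around any tool call. The attempt count, delays, and message wording below are assumptions, and catching every exception is a simplification; a real integration would retry only on errors known to be transient.

```python
import time

def call_with_backoff(tool, max_attempts=3, base_delay_s=0.5, sleep=time.sleep):
    """Call tool(); on failure retry with exponential backoff, then escalate."""
    last_error = None
    for attempt in range(max_attempts):
        try:
            return {"status": "ok", "result": tool()}
        except Exception as exc:  # simplification: treat every error as retryable
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(base_delay_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    return {
        "status": "escalated",
        "message": f"Tool failed after {max_attempts} attempts: {last_error}",
    }

# Hypothetical tool that fails twice, then succeeds.
calls = {"count": 0}

def flaky_create_putaway_task():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("WMS timeout")
    return "task-created"

delays = []  # record sleeps instead of actually waiting
outcome = call_with_backoff(flaky_create_putaway_task, sleep=delays.append)
print(outcome, delays)
```

Passing `sleep` in as a parameter keeps the wrapper testable: the example records the delays instead of waiting for them.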

If you are thinking about productionizing this stack, you will want to measure the agent as a first-class system. We cover patterns to instrument agents in our guide to zero-code LLM observability.

What good looks like in 90 days

You can get meaningful results without new robots or capital projects. Aim for these outcomes and report them weekly.

- Fewer late putaways on priority receipts, measured daily and broken out by item family.

- Lower average gate dwell for late arrivals, driven by better door assignment and clearer communication with carriers.

- A smaller stack of aging trailers, with clear explanations for each sequence change that reduced risk.

- Supervisors who start shifts by approving a plan rather than creating one from scratch.

That is a real change in how work starts, and it is achievable with small models and focused engineering.

What the AutoScheduler launch tells us

A free warehouse agent with a clear on-ramp signals that vertical, operations-grade agents are moving from prototypes to production. The design choice is as important as the price. The agent is aimed at coordinators who fight the day’s chaos. It plugs into existing systems, speaks the language of dock-to-stock and dwell, and makes narrow, frequent decisions that can be audited. This is the pattern every team should copy, whether you build or buy. AutoScheduler did not bury the news. The availability date and the free access model are both explicit in AutoScheduler's launch announcement.

The takeaway for leaders

Do not wait for a humanoid to stride down your aisle. Pick one facility, codify its ontology, wire the agent to your WMS, TMS, and YMS, and constrain its actions to the smallest set that moves a KPI you already care about. Invest in memory, action safety, and audit trails. Standardize the parts that let you roll across sites in 2026. You will reduce chaos today and build a capability that compounds.

The crossing has started. It is not a keynote. It is a shift plan.