Cursor 2.0 Turns Multi-Agent Coding Into an IDE Primitive

Cursor 2.0 brings Composer and a new interface that puts multi-agent coding inside the editor. Agents run in parallel on isolated copies of your codebase, their diffs are reviewed in one unified view, and Cursor says most turns finish in under 30 seconds. Here is what changes for teams.

The news: agents move from sidecar to center stage

On October 29, 2025, Cursor released a major upgrade that puts multi-agent workflows at the heart of the editor. The company introduced a new coding model called Composer and a redesigned interface that treats agents as first-class citizens inside the integrated development environment (IDE). Cursor frames this as a shift from chatty prompts to orchestrated work: plan, execute, test, and iterate on an agent canvas without leaving the IDE. The company’s positioning, performance claims, and design goals are detailed in two official posts: “Introducing Cursor 2.0 and Composer” and the Cursor 2.0 changelog.

This is not a cosmetic refresh. Cursor is turning parallel agents into an IDE primitive, much as source control panels, terminals, and test explorers earned permanent places in developer workflows. If you have been living in a prompt-and-paste world, this is a new mental model: you orchestrate a team of specialized bots with clear roles, each working in an isolated workspace, while you supervise outcomes through tests, diffs, and reviews.

What changed in practice

Cursor 2.0 adds two pillars that matter for day-to-day engineering work.

- Composer, a low-latency coding model tuned for multi-step tasks. Cursor says most turns complete in under 30 seconds and that the model is four times faster than similarly capable options. Speed is not the only goal, but short turns keep humans in a tight loop: less idle time between agent actions means context stays warm and decisions stay fluid.

- A multi-agent interface that lets you run several agents in parallel from a single prompt and plan. Each agent works in an isolated copy of your codebase to avoid file conflicts, then proposes merges back to the main branch. The changelog calls out up to eight agents in parallel, isolation via git worktrees or remote machines, and improved in-editor review of multi-file changes.

Think of it as moving from a single Swiss Army knife to a pit crew. You do not ask one tool to do everything. You assign distinct jobs and watch the lap time drop.
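Cursor handles this fan-out inside the editor, so none of the following is needed to use it. To make the pit-crew idea concrete, here is a minimal Python sketch of running independent agent tasks concurrently with a hard cap of eight workers, matching the limit the changelog mentions. `run_agent`, `fan_out`, and the task names are hypothetical stand-ins, not Cursor APIs; a real agent run would do its work in an isolated checkout.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Dict, List

MAX_PARALLEL_AGENTS = 8  # matches the "up to eight agents" limit in the changelog


def run_agent(task: str) -> str:
    """Hypothetical stand-in for one agent working in its own isolated copy."""
    return f"proposed patch for: {task}"


def fan_out(tasks: List[str], max_agents: int = MAX_PARALLEL_AGENTS) -> Dict[str, str]:
    """Run every task concurrently, with at most max_agents in flight at once."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_agents) as pool:
        futures = {pool.submit(run_agent, task): task for task in tasks}
        for future in as_completed(futures):
            # Collect results as agents finish, in whatever order that happens.
            results[futures[future]] = future.result()
    return results


if __name__ == "__main__":
    for task, patch in sorted(fan_out(["implement endpoint", "write tests", "update docs"]).items()):
        print(f"{task} -> {patch}")
```

Threads suit this shape because a real agent run spends most of its time waiting on a model or a test process; the cap keeps a large plan from launching more agents than you can review.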

Why this is a real shift, not a feature checkbox

The prompt-and-paste pattern worked for single-file changes and quick refactors. It broke down once tasks demanded coordination, environment setup, or non-trivial testing. The friction showed up as long chat scrollback, manual copying, and context resets. Even with strong models, that pattern left throughput on the table.

Making agents a first-class interface changes the unit of work. The unit is no longer a single prompt result; it is a plan with roles and checkpoints. The tool bakes in the mechanics that drive software quality: isolation, diffs, tests, and safe merges. That is the daily rhythm of effective engineering, and Cursor is attempting to automate the scaffolding around it.

There is also a psychological effect. When the interface invites you to define roles and attach tests up front, you naturally think in terms of outcomes and verification rather than clever phrasing. That nudge moves teams from one-off wizardry to repeatable patterns.

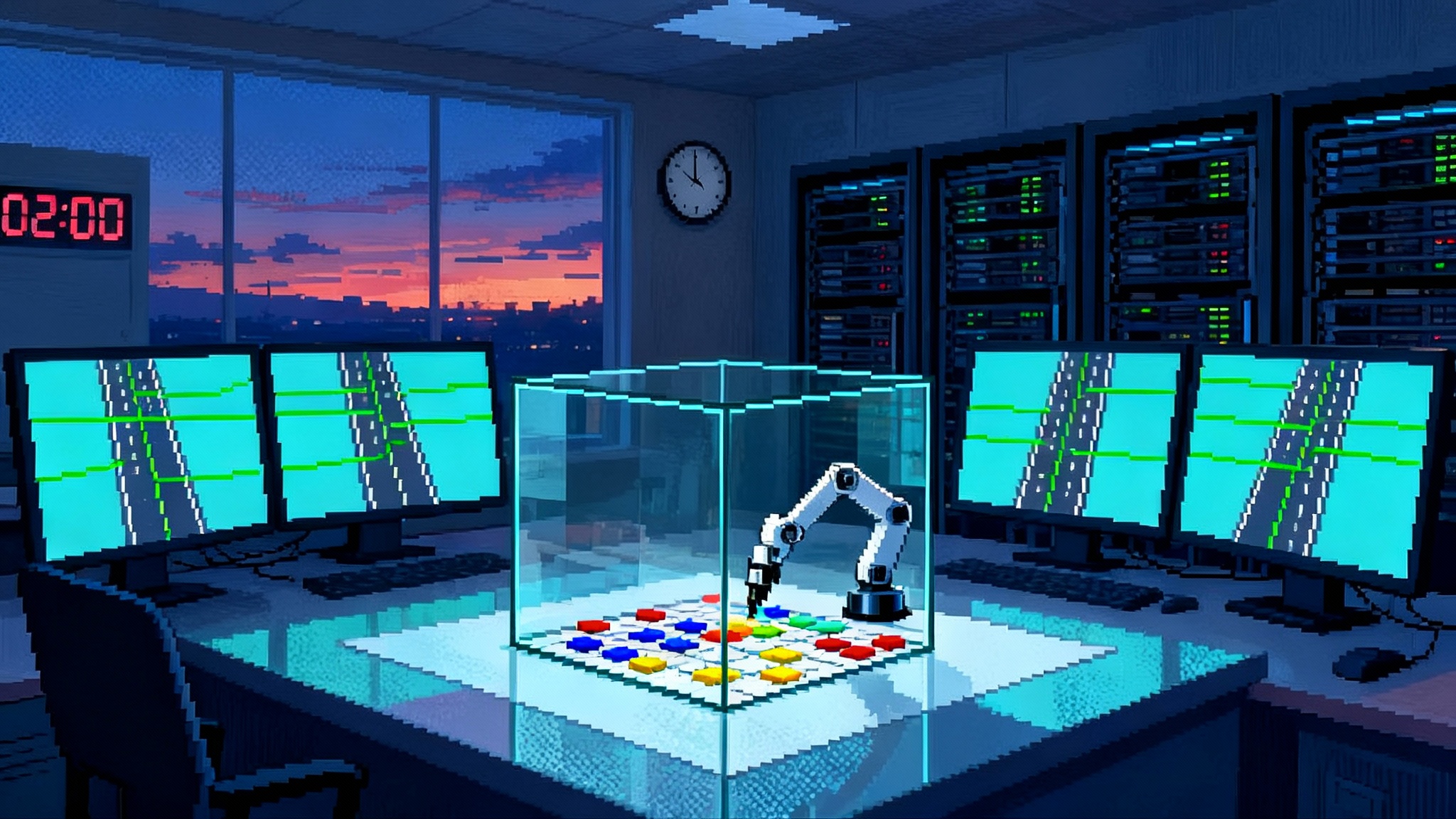

How parallel agents work inside Cursor 2.0

Cursor’s framing is pragmatic. Here is what you can expect to see in a typical agent run.

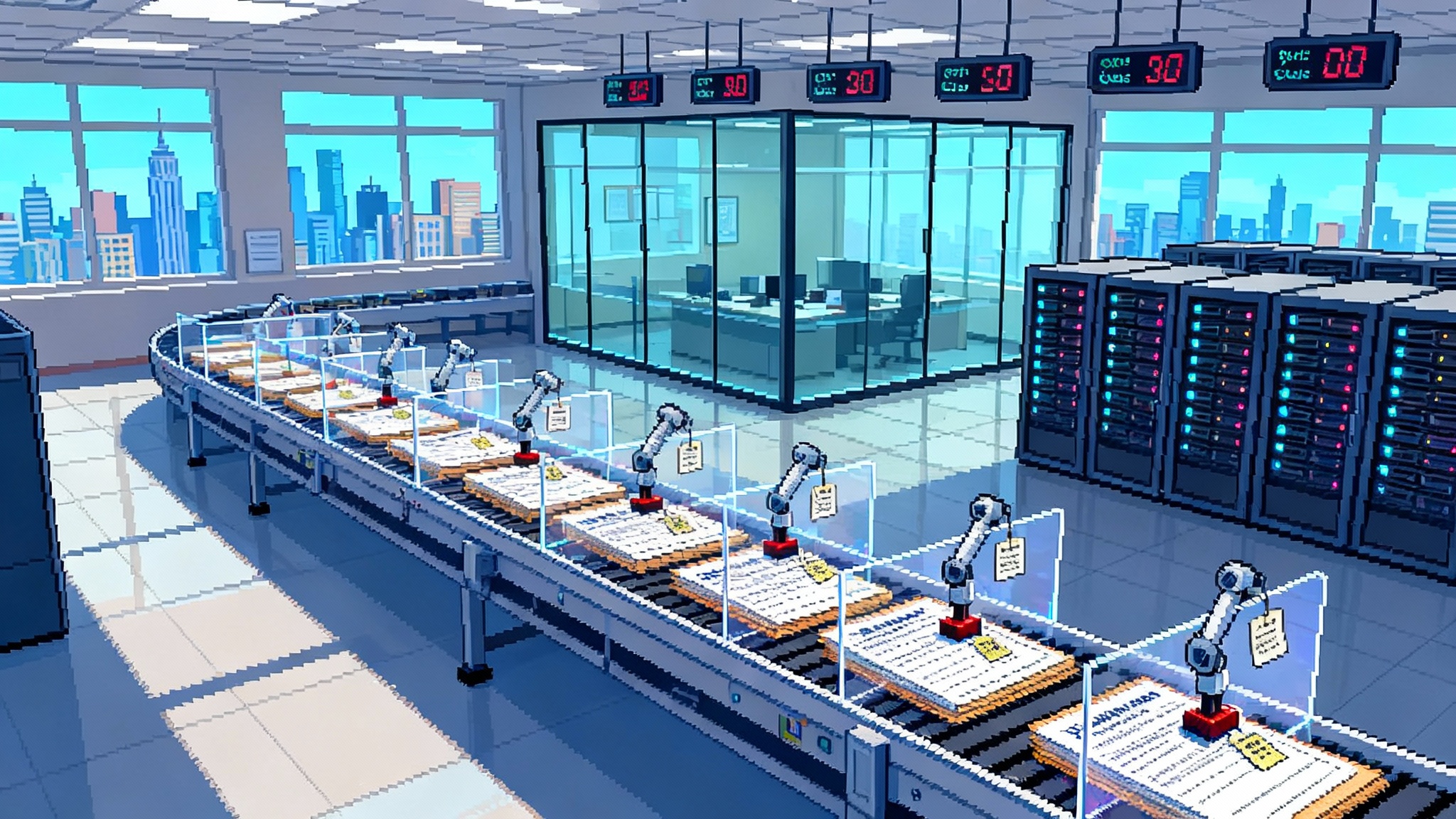

- A plan. You describe the goal, constraints, and the code areas involved. Composer and the planner agent break it down into steps.

- Roles. You spin up agents with clear jobs such as Implementer, Test Runner, Linter, Docs Updater, and Schema Migrator. Each agent operates within a bounded scope.

- Isolation. Each agent edits an isolated copy of the repo using git worktrees or a remote sandbox. That prevents collisions and makes it cheap to discard bad branches.

- Tools. Agents can search the codebase semantically, open terminals, run tests, and capture diffs. The editor gives you a unified pane to compare changes across files before anything touches the main branch.

- Merge intent. Agents propose patches or pull requests. You gate on tests and reviews. The interface helps you review multi file changes in one place.
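If you want to reproduce the isolation step by hand, or just see what it amounts to, git worktrees give each agent its own branch and working directory backed by a single repository. The sketch below only builds the `git worktree` and `git branch` command lines; the `agent/<name>` branch prefix and the sibling-directory layout are hypothetical conventions, and Cursor does not necessarily lay out its own worktrees this way.

```python
import subprocess
from pathlib import Path
from typing import List


def _worktree_path(repo: Path, agent: str) -> Path:
    # Hypothetical layout: each agent's checkout sits next to the main repo.
    return repo.parent / f"{repo.name}-{agent}"


def add_worktree_cmd(repo: Path, agent: str, base: str = "main") -> List[str]:
    """Create branch agent/<name> from base and check it out in its own directory."""
    return ["git", "-C", str(repo), "worktree", "add",
            "-b", f"agent/{agent}", str(_worktree_path(repo, agent)), base]


def discard_worktree_cmds(repo: Path, agent: str) -> List[List[str]]:
    """Throw away a failed attempt: remove the checkout, then delete its branch."""
    return [
        ["git", "-C", str(repo), "worktree", "remove", "--force",
         str(_worktree_path(repo, agent))],
        ["git", "-C", str(repo), "branch", "-D", f"agent/{agent}"],
    ]


def run(cmd: List[str]) -> None:
    """Execute one git command; check=True raises CalledProcessError on failure."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    repo = Path("/work/app")
    print(" ".join(add_worktree_cmd(repo, "test-runner")))
    # git -C /work/app worktree add -b agent/test-runner /work/app-test-runner main
```

Because every agent gets its own directory and branch, two agents can edit the same file without clobbering each other, and discarding a bad attempt costs two commands.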

Under the hood, this is the same playbook human teams use. The difference is that the editor handles the plumbing so you can parallelize with confidence.

Composer’s angle: speed as a workflow enabler

Time between turns shapes how well a human can supervise. Cursor bills Composer as a frontier model that is four times faster than similarly intelligent models, with most turns completing in under 30 seconds. The numbers matter, but the qualitative shift matters more. If your agent can iterate three times before your attention drifts, you keep guiding it with sharper feedback and catch problems earlier.

Composer also leans on codebase-wide understanding. It was trained with access to code-level search and repository-scale context, so it can reason about cross-file changes rather than one file at a time. That is crucial when agents split tasks and must agree on shared contracts. In practice, this can look like the Implementer updating a function signature while the Test Runner adjusts fixtures and the Docs Updater rewrites a usage example, with conflicts surfaced before merge.
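Composer’s cross-file reasoning lives inside the model, but the last step, surfacing conflicts before merge, has a simple mechanical core any team can check. This hypothetical helper takes the set of files each agent changed (for example, from `git diff --name-only main...agent/<name>`) and reports every pair of agents that touched the same file. It catches file-level overlap only, not semantic conflicts such as a signature change that breaks a caller in a file nobody touched.

```python
from itertools import combinations
from typing import Dict, Set, Tuple


def overlapping_paths(changes: Dict[str, Set[str]]) -> Dict[Tuple[str, str], Set[str]]:
    """Map each pair of agents to the files both of them modified."""
    overlaps: Dict[Tuple[str, str], Set[str]] = {}
    for a, b in combinations(sorted(changes), 2):
        shared = changes[a] & changes[b]
        if shared:
            overlaps[(a, b)] = shared
    return overlaps


# Hypothetical change sets for three agents working on one ticket.
changes = {
    "implementer": {"auth/api.py", "auth/tokens.py"},
    "test_runner": {"tests/test_tokens.py", "auth/tokens.py"},
    "docs_updater": {"README.md"},
}
print(overlapping_paths(changes))
# → {('implementer', 'test_runner'): {'auth/tokens.py'}}
```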

A concrete example: from ticket to orchestrated workflow

Imagine a feature request: add passwordless sign-in with email links to a production web app.

- Planner reads the ticket, scans the authentication module, and proposes a plan with tasks and dependencies: database migration for magic link tokens, API endpoint, email sender, client flow, tests, and documentation.

- Implementer creates a branch, scaffolds the endpoint, and modifies the client form. It outputs a draft patch and notes the new token schema.

- Schema Migrator writes a reversible database migration and a safe backfill script for existing users.

- Test Runner generates unit tests for token generation and expiry, plus integration tests for the sign-in flow. It runs the suite, captures failures, and iterates until green.

- Security Auditor checks that tokens are single-use, time-bound, and stored only as hashes. It flags a missing rate limiter and opens a task for Implementer.

- Docs Updater adds a section to the README and a snippet in the user guide.
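The Planner’s output is essentially a dependency graph, and the parallelism comes from running every task whose prerequisites are done. A minimal sketch using Python’s standard-library `graphlib` groups this ticket’s tasks into waves; the task names and edges are hypothetical, chosen to match the ticket rather than taken from any Cursor plan format.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Dict, List, Set

# Hypothetical plan for the passwordless sign-in ticket:
# each task maps to the tasks that must finish before it can start.
plan: Dict[str, Set[str]] = {
    "migration": set(),
    "email_sender": set(),
    "api_endpoint": {"migration"},
    "client_flow": {"api_endpoint"},
    "integration_tests": {"api_endpoint", "email_sender"},
    "docs": {"client_flow"},
}


def parallel_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; every task in a wave could go to its own agent."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError if the plan has a circular dependency
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # treat the whole wave as finished before the next one
    return waves


print(parallel_waves(plan))
# → [['email_sender', 'migration'], ['api_endpoint'], ['client_flow', 'integration_tests'], ['docs']]
```

In a live run you would call `done()` as each agent finishes rather than once per wave, so fast tasks unblock their dependents sooner; the wave view is simply easier to read.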

You watch the plan, review diffs per branch, and approve merges once the suite is green. The flow is ordinary engineering, only now the mechanics are automated and parallelized.
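The Security Auditor’s checklist from this example maps directly onto code. Here is a minimal in-memory sketch, assuming a 15-minute link lifetime and at most three links per email address per 15 minutes (both hypothetical policy values, as are the class and method names): tokens come from `secrets.token_urlsafe`, only their SHA-256 hashes are stored, redeeming a token deletes it, and issuing links is rate limited per address. A production version would keep these records in the table the migration creates rather than in a dictionary.

```python
import hashlib
import secrets
import time
from typing import Callable, Dict, List, Optional, Tuple

TOKEN_TTL_SECONDS = 15 * 60    # hypothetical link lifetime
RATE_WINDOW_SECONDS = 15 * 60  # hypothetical rate-limit window
MAX_LINKS_PER_WINDOW = 3       # hypothetical cap on links per email per window


class RateLimited(Exception):
    """Raised when one email address requests too many links in a window."""


class MagicLinkStore:
    """In-memory sketch that keeps only SHA-256 hashes of issued tokens."""

    def __init__(self, ttl: float = TOKEN_TTL_SECONDS,
                 clock: Callable[[], float] = time.time) -> None:
        self._ttl = ttl
        self._clock = clock  # injectable so expiry can be tested
        self._tokens: Dict[str, Tuple[str, float]] = {}  # token hash -> (email, expiry)
        self._requests: Dict[str, List[float]] = {}      # email -> recent issue times

    @staticmethod
    def _hash(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, email: str) -> str:
        """Return a raw token for the emailed link; only its hash is stored."""
        now = self._clock()
        recent = [t for t in self._requests.get(email, []) if now - t < RATE_WINDOW_SECONDS]
        if len(recent) >= MAX_LINKS_PER_WINDOW:
            raise RateLimited(email)
        recent.append(now)
        self._requests[email] = recent
        token = secrets.token_urlsafe(32)
        self._tokens[self._hash(token)] = (email, now + self._ttl)
        return token

    def redeem(self, token: str) -> Optional[str]:
        """Return the email for a valid token, consuming it so it works only once."""
        record = self._tokens.pop(self._hash(token), None)
        if record is None:
            return None  # unknown or already used
        email, expires_at = record
        if self._clock() > expires_at:
            return None  # expired
        return email
```

Storing only the hash means a leaked database does not hand out working sign-in links, and `pop` makes single use a property of the data structure rather than a separate flag someone might forget to check.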

Shipping velocity: where the time actually goes

Parallel agents help in three ways.

- Less idle time. Humans do not wait on long single-model turns. They authorize the next step within the same attention window, which keeps momentum.

- Fewer context resets. Isolated branches and unified diff views mean you do not have to reconstruct state from chat history. You inspect artifacts in one place.

- True concurrency on independent tasks. Test generation, documentation updates, and refactors can proceed while implementation happens. You pull forward work that usually waits until the end.

The result is not just faster coding. It is faster convergence, which is what matters for shipping. Teams can measure this by tracking the median time from ticket open to first green build, not the lines of code generated.
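Computing the metric is simple once you record two timestamps per ticket. In this sketch the record layout and the sample timestamps are made up purely to exercise the function; substitute data exported from your issue tracker and CI system.

```python
from datetime import datetime
from statistics import median
from typing import List, Tuple


def median_hours_to_green(records: List[Tuple[str, str]]) -> float:
    """Median hours from ticket open to first green build.

    Each record is (opened_at, first_green_at) as ISO 8601 strings.
    """
    hours = [
        (datetime.fromisoformat(green) - datetime.fromisoformat(opened)).total_seconds() / 3600
        for opened, green in records
    ]
    return median(hours)


# Made-up timestamps, only to show the calculation.
sample = [
    ("2025-11-03T09:00", "2025-11-03T15:30"),
    ("2025-11-04T10:00", "2025-11-05T10:00"),
    ("2025-11-05T13:00", "2025-11-05T16:00"),
]
print(median_hours_to_green(sample))
# → 6.5
```

The median is used rather than the mean so that one ticket stuck for a week does not swamp the comparison.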

Code reliability: make the test loop the center of gravity

Agentic speed without guardrails can create brittle systems. Cursor’s approach is to make tests and diffs the anchor of the workflow. The practical takeaways:

- Make tests a dependency, not an afterthought. Define the test suite target in the plan. Set a rule that no agent merge happens until the suite is green.

- Use isolation to your advantage. Let agents try risky changes in separate worktrees. Discard branches that fail tests repeatedly rather than nudging them forever.

- Prefer small, typed changes. Ask agents to preserve function signatures or update call sites systematically. This raises the chance that errors are caught statically or in tests.

- Keep humans in the loop on contracts. Use review checkpoints on public interfaces, database schemas, and security boundaries. Give agents freedom on internal implementation details.

If you adopt those habits, multi-agent speed can increase reliability rather than erode it.
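The four habits above can be encoded as a single merge-gate decision per agent branch. This is a hypothetical sketch: `BranchStatus`, `gate`, the three-failure discard threshold, and the review rule for contract-touching changes are illustrative choices, not Cursor settings.

```python
from dataclasses import dataclass
from enum import Enum

MAX_FAILED_RUNS = 3  # hypothetical threshold for giving up on a branch


class Decision(Enum):
    MERGE = "merge"
    RETRY = "retry"                # let the agent iterate again
    DISCARD = "discard"            # drop the worktree instead of nudging it forever
    NEEDS_REVIEW = "needs_review"  # green, but a human must sign off on a contract


@dataclass
class BranchStatus:
    agent: str
    suite_green: bool
    failed_runs: int          # test runs that have failed on this branch so far
    touches_contract: bool    # public interface, database schema, or security boundary
    human_approved: bool


def gate(status: BranchStatus) -> Decision:
    """Decide what happens to one agent branch; nothing merges unless tests are green."""
    if not status.suite_green:
        if status.failed_runs >= MAX_FAILED_RUNS:
            return Decision.DISCARD
        return Decision.RETRY
    if status.touches_contract and not status.human_approved:
        return Decision.NEEDS_REVIEW
    return Decision.MERGE
```

Keeping the rule in one small function makes it easy to audit and to run identically in local tooling and in CI.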

What changes for team roles

- The conductor. Someone designs the workflow, chooses agent roles, sets rules, and curates prompts. This role maps to a technical lead, but the unit of work is a workflow template rather than a file set.

- The reviewer. Code review becomes more about verifying contracts and less about style. Reviewers assess plans, test coverage, and safety checks, then sample diff quality.

- The builder. Individual engineers still write code, especially at boundaries and in tricky algorithms. They also write reusable instructions that agents can follow the next ten times.

- The reliability owner. This person sets up test oracles, monitors flaky tests, and ensures agent runs are observable and auditable.

Over time, you can expect teams to evolve from one developer per feature to one developer per orchestrated workflow, with templates handling a growing share of repetitive work.

Failure modes to watch and how to mitigate them

- Agent collisions. Even with isolation, agents can step on shared configuration files or migrations. Mitigation: declare up front which paths each agent owns, and fail fast on conflicts.

- Stale context. Long-running plans can drift as the main branch moves. Mitigation: rebase isolated branches regularly and rerun quick sanity tests after each rebase.

- Nondeterministic outputs. Two runs of the same task can produce slightly different code. Mitigation: pin the versions of the tools and libraries agents use, and enforce deterministic formatters and linters.

- Overproduction of diffs. Too many small changes create review fatigue. Mitigation: set a maximum diff size per agent and require squashing once tests pass.

Treat these as operational issues you can manage with the same discipline you use for continuous integration.
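The first and last mitigations, path ownership and diff caps, are easy to enforce as a pre-merge check. In this sketch the ownership map, the 400-line budget, and `check_changes` are all hypothetical; the per-file line counts could come from `git diff --numstat`, which prints added and deleted line counts for each path.

```python
from fnmatch import fnmatch
from typing import Dict, List

# Hypothetical ownership map. Note that fnmatch's "*" also matches "/",
# so "src/auth/*" covers nested files such as "src/auth/email/sender.py".
OWNERSHIP: Dict[str, List[str]] = {
    "implementer": ["src/auth/*", "src/web/*"],
    "schema_migrator": ["migrations/*"],
    "docs_updater": ["docs/*", "README.md"],
}
MAX_CHANGED_LINES = 400  # hypothetical per-agent diff budget


def check_changes(agent: str, changed: Dict[str, int]) -> List[str]:
    """Return policy violations for one agent's diff (an empty list means it passes).

    `changed` maps each modified path to its added plus deleted line count.
    """
    problems: List[str] = []
    patterns = OWNERSHIP.get(agent, [])
    for path in sorted(changed):
        if not any(fnmatch(path, pattern) for pattern in patterns):
            problems.append(f"{agent} does not own {path}")
    total = sum(changed.values())
    if total > MAX_CHANGED_LINES:
        problems.append(f"{agent} changed {total} lines (limit {MAX_CHANGED_LINES})")
    return problems
```

Failing fast then means running this before the merge step and blocking on any non-empty result.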

A seven-day adoption plan

Day 1: Pick one product area and one repository with a solid test suite. Define three agent roles you trust and write a simple workflow template.

Day 2: Instrument your pipeline. Ensure tests run fast locally and in continuous integration, and that flaky tests are addressed. Make green the only merge condition.

Day 3: Convert a medium scope ticket into an agent plan. Include acceptance criteria, test expectations, and a rollback note. Keep the plan short and explicit.

Day 4: Run agents in parallel on two independent tasks. Example: test generation and documentation updates while implementation proceeds.

Day 5: Review metrics: time to first green build, number of iterations, review duration, and defects found. Compare them with your last human-only ticket of similar scope.

Day 6: Tune prompts and roles. Promote winning patterns into reusable templates. Drop roles that add noise.

Day 7: Socialize the workflow. Write a short guide and record a one-minute screen capture of the plan-to-merge path.

The goal of the first week is not bragging rights. It is to surface bottlenecks, then encode the fixes into workflow templates.
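For Days 1 and 3, it helps to write the workflow template down as data so missing pieces are caught before any agent starts. The shape below is a hypothetical sketch, not a Cursor file format: it requires acceptance criteria, a test command, a rollback note, and one to three roles, echoing the advice to start with a few roles you trust.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentRole:
    name: str
    instructions: str
    owned_paths: List[str]


@dataclass
class WorkflowTemplate:
    """Hypothetical shape for a reusable agent plan; not a Cursor file format."""
    ticket: str
    acceptance_criteria: List[str]
    test_command: str
    rollback_note: str
    roles: List[AgentRole] = field(default_factory=list)

    def problems(self) -> List[str]:
        """List whatever is missing before this plan is handed to agents."""
        issues: List[str] = []
        if not self.acceptance_criteria:
            issues.append("no acceptance criteria")
        if not self.test_command.strip():
            issues.append("no test command")
        if not self.rollback_note.strip():
            issues.append("no rollback note")
        if not 1 <= len(self.roles) <= 3:
            issues.append("use one to three roles while the workflow is new")
        return issues
```

Keeping templates as plain data also helps with Day 6: a winning pattern becomes a new template rather than a paragraph buried in someone’s chat history.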

Where this leaves the market

Cursor is not alone in pushing beyond autocomplete. GitHub, Google, and others are exploring workspace-level agents that plan and run tasks across a repo. Replit has been aggressive on agent autonomy, a theme we covered in “Replit Agent 3 goes autonomous.” In legal tech, orchestration layers are formalizing, as we saw in “Harvey debuts the Agent Fabric.” Operations teams are also demanding control points such as approval gates, a pattern illustrated by approval-gated agents for MSPs.

Cursor’s move is distinct because it ships orchestration as an IDE primitive rather than a bolt-on tool. Building it into the editor tightens feedback loops and cuts the integration tax. The broader trend is clear: coding tools are moving from suggestion engines to execution environments. The winners will combine speed, strong test loops, safe isolation, and predictable human workflows.

Sharp questions to ask before you commit

- How does the tool isolate parallel changes, and what happens on conflicting edits to shared files and schemas?

- Can you cap diff sizes per agent and enforce merge gates on green tests only?

- How fast are average turns under load in your environment, and what error reporting is available when tools fail mid run?

- What does auditability look like, including a full trace of plans, commands run, and diffs produced?

- How easy is it to templatize and reuse multi step workflows across repositories?

Ask these now to avoid surprises later.

The bottom line

Agents inside the editor are no longer a novelty. Cursor 2.0 treats them as a core part of how work moves from idea to merged change. Composer lowers the latency tax, the multi-agent interface brings structure and safety, and the result is a workflow that looks more like an orchestra than a solo performance. If you define clear roles, bring tests forward, and keep humans in charge of contracts, you should ship faster and with more confidence. The future of coding is not a bigger prompt box. It is a better conductor’s podium.