Agent Bricks marks the pivot to auto-optimized enterprise agents

Databricks' Agent Bricks moves teams beyond hand-tuned bots to auto-optimized enterprise agents. With synthetic data, LLM judges, and built-in observability, it shortens the path from idea to production and sketches a blueprint for the 2026 agent stack.

The moment the tinkering era ends

For two years, enterprises tried to turn pilot chatbots into real products with a maze of prompts, brittle rules, and late-night dashboard watch parties. Demos impressed, then drifted in production. With the debut of Agent Bricks at the Data + AI Summit in San Francisco on June 11, 2025, Databricks moved the center of gravity from hand tuning to auto optimization. The intent and scope were explicit: generate task-specific synthetic data, create evaluations and judges, then search the space of models, prompts, and tools until the agent hits a business-defined target for quality and cost. The launch details are spelled out in the official release, Databricks launches Agent Bricks.

This is more than a product update. It is a manufacturing step for agents that shapes behavior using your own data, tests that behavior with automatic and human-in-the-loop scoring, and ships with monitoring that is fit for regulated industries. If the past two years were about clever prompt recipes, the next two are about closed-loop optimization.

What Databricks is actually shipping

Agent Bricks packages three ideas that research and platform teams have validated for years, then makes them work on governed enterprise data out of the box:

- Synthetic, domain-shaped data. Rather than begging for labeled examples, teams can bootstrap task-specific datasets that look like their contracts, support tickets, clinical notes, or knowledge articles. These are not random fabrications. They mirror the structure and vocabulary of the enterprise corpus, so the agent learns to behave in context.

- Large language model judges and task-aware benchmarks. At project start, Agent Bricks generates evaluations that mirror the job to be done. LLM judges score outputs against those benchmarks. Subject matter experts can add rules and feedback, and both signals roll up into a scoreboard that tracks cost and quality together.

- Built-in observability and governance. The work does not stop at the first good score. Databricks connects agents to production-grade monitoring, tracing, and governance across data, prompts, and models, reducing blind spots after go-live.

Databricks positions Agent Bricks to tackle specific, repeatable jobs. Think information extraction from invoices, knowledge assistants that actually know policy, custom text transformation for regulated writing, and even orchestrated multi-agent systems. The Agent Bricks product overview highlights this scope and how it slots into the broader Data Intelligence Platform, including storage governance with Unity Catalog, vector search, and model serving for scale.

If you already work on governed agent builds, you will recognize the emphasis on shipping agents as products with controls, reviews, and telemetry. That philosophy rhymes with the governed delivery patterns discussed in AgentKit governance patterns, where teams treat agents as shippable software, not toy prototypes.

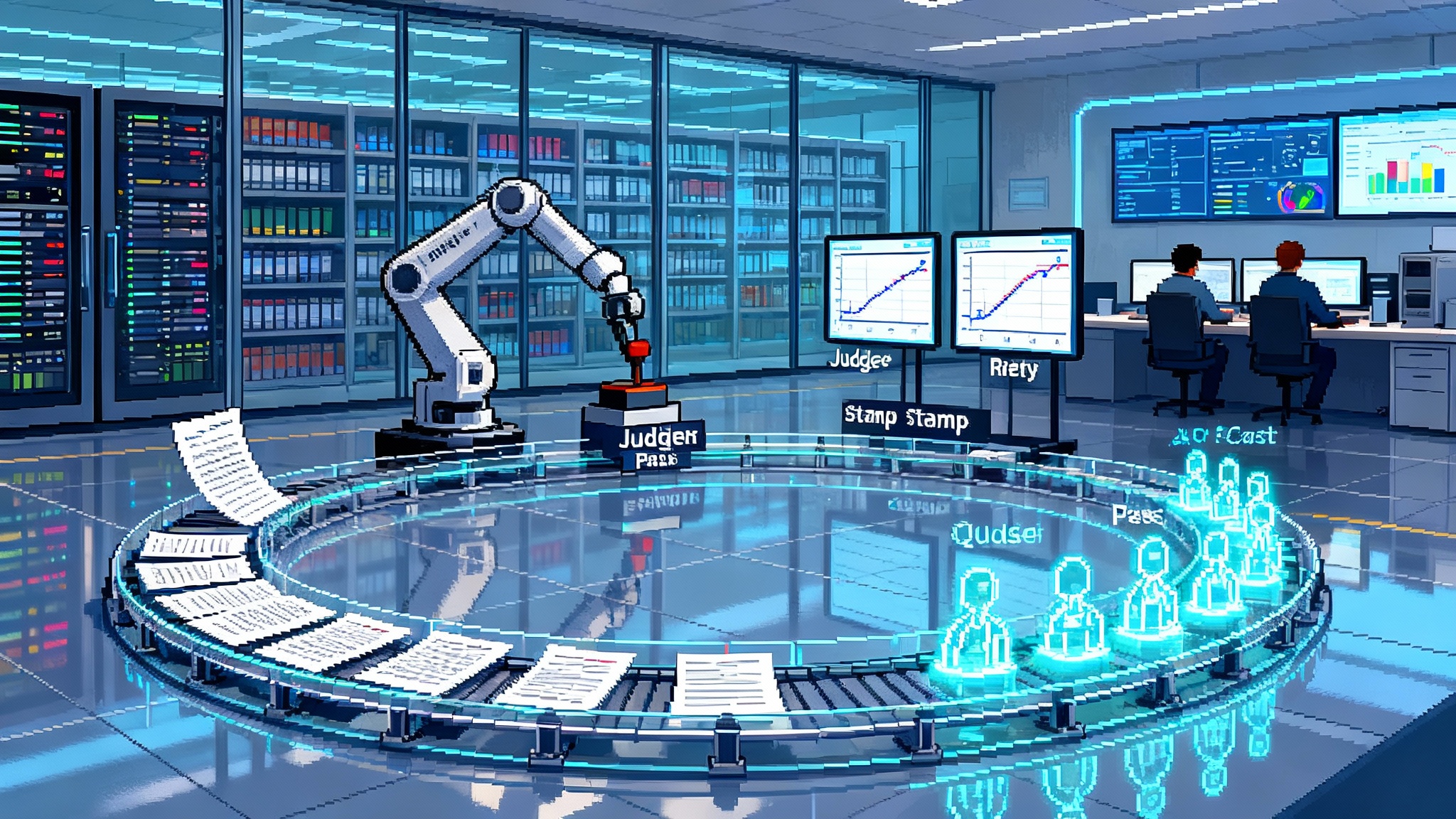

From idea to production, as a loop not a line

The most useful mental model here is a circular factory loop rather than a linear build. A typical path looks like this:

- Define the job. A support leader wants answers that are consistent with the latest policy, and finance wants the cost per resolved ticket to stay under a specific budget. Those goals become explicit inputs.

- Ground on enterprise data. Knowledge articles and policy documents are ingested with governance intact, so access controls follow the data. The agent must never hallucinate a policy that does not exist, and it must never expose a document to the wrong role.

- Generate synthetic training and test sets. Agent Bricks creates synthetic examples that match the customer’s writing style and edge cases. For a refund policy, that might include tricky scenarios like partial shipments or expired promo codes. Synthetic data reduces the cold start problem without waiting months for labels.

- Create evaluations and judges. The system builds task-aware tests and LLM judges that grade responses. A human expert can add canonical answers or rules, like always cite policy paragraph numbers or always mask personally identifiable information.

- Run optimization sweeps. The platform explores models, prompt structures, tool use, and memory settings. This is not random wandering. It is a guided search over the quality and cost frontier. The scoreboard makes tradeoffs explicit. A version that costs thirty percent less but drops one point in accuracy might be ideal for a tier one support queue.

- Ship with telemetry. Once an agent hits its targets, it moves into production with tracing, cost accounting, and guardrails. If performance drifts, the loop can be re-run with fresh synthetic data and updated evaluations.
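The selection step at the end of a sweep can be sketched in a few lines of Python. This is a minimal illustration and not the search or API that Agent Bricks uses: the `Candidate` type, the configuration names, and the scores are all hypothetical, and the rule shown (take the cheapest configuration on the quality and cost frontier that still meets the target) is only one reasonable way to read a scoreboard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    """One evaluated configuration from a sweep (hypothetical fields)."""
    name: str
    quality: float        # judge score on the benchmark, 0-100
    cost_per_task: float  # dollars per task


def pareto_frontier(candidates: list[Candidate]) -> list[Candidate]:
    """Keep candidates that no other candidate beats on both quality and cost."""
    frontier = []
    for c in candidates:
        dominated = any(
            o.quality >= c.quality
            and o.cost_per_task <= c.cost_per_task
            and (o.quality > c.quality or o.cost_per_task < c.cost_per_task)
            for o in candidates
        )
        if not dominated:
            frontier.append(c)
    return sorted(frontier, key=lambda c: c.cost_per_task)


def pick(candidates: list[Candidate], min_quality: float) -> Optional[Candidate]:
    """Return the cheapest frontier candidate that meets the quality target."""
    eligible = [c for c in pareto_frontier(candidates) if c.quality >= min_quality]
    return eligible[0] if eligible else None


# Illustrative numbers only: the second config costs 30% less and scores one point lower.
sweep = [
    Candidate("large-model-long-prompt", quality=93.0, cost_per_task=0.10),
    Candidate("small-model-with-retrieval", quality=92.0, cost_per_task=0.07),
    Candidate("small-model-no-tools", quality=85.0, cost_per_task=0.08),
]
choice = pick(sweep, min_quality=90.0)
print(choice.name if choice else "no configuration meets the target")
```

With a target of 90, the cheaper configuration wins even though it scores a point lower. Raise the target to 93 and the larger model is the only one left, which is exactly the kind of tradeoff the scoreboard is meant to surface.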

The key shift is cultural as much as technical. Teams stop arguing about the perfect prompt and start running controlled improvement loops. The platform turns artisanal tinkering into a measured process. If you manage developer agents, the mission-control pattern described in GitHub mission control for agents pairs naturally with this loop by making traces, runs, and rollbacks first-class.

Why this is a pivot, not a feature

- Quality becomes a measurement, not a promise. Most pilots fail quietly. They lose accuracy as data shifts or as prompts get patched. Automatic evaluations and judges catch that drift early.

- Cost becomes a dial, not a surprise. When the price per thousand tokens spikes or a model change doubles the bill, finance has a dial to turn rather than an after-the-fact warning.

- Data gravity becomes an advantage. Because data, evaluations, and agents live under one governance umbrella, the headaches of copying datasets across tools or clouds shrink. Red teams and auditors have one place to check lineage.

In short, production agents need feedback loops and accountability, not just cleverness. Agent Bricks bakes those loops in.

A concrete cost and time picture

Consider a real but anonymized scenario that mirrors common enterprise patterns. A health care provider needs an agent to extract specific fields from clinical trial reports. The baseline approach, a hand-tuned extractor with a generic model, takes three weeks of back-and-forth and delivers 89 percent field-level accuracy at a cost of 80 cents per document.

With an auto-optimized workflow, synthetic examples are generated to reflect the provider’s report formats, and judges grade field-by-field validity. The search identifies a configuration that uses a smaller but well-tuned model with task-aware prompts and selective tool use. Accuracy rises to 94 percent, and cost drops to 48 cents per document. Most importantly, the team ships in five days, not three weeks. Even if your exact numbers differ, the mechanism holds: benchmark the job with your data, search the quality and cost space, then pick the point that fits business goals.
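To make the scenario's numbers easier to compare, here is the arithmetic as a short Python sketch. The accuracy and cost figures come from the scenario above. The monthly document volume is a made-up assumption, included only to show how the per-document difference scales.

```python
# Figures from the scenario above.
baseline_accuracy, baseline_cost = 0.89, 0.80  # field-level accuracy, dollars per document
tuned_accuracy, tuned_cost = 0.94, 0.48

accuracy_gain_points = (tuned_accuracy - baseline_accuracy) * 100
cost_reduction = (baseline_cost - tuned_cost) / baseline_cost

# Relative drop in the field-level error rate (11% of fields wrong vs 6%).
baseline_error = 1 - baseline_accuracy
tuned_error = 1 - tuned_accuracy
error_reduction = (baseline_error - tuned_error) / baseline_error

docs_per_month = 10_000  # hypothetical volume, not from the scenario
monthly_savings = docs_per_month * (baseline_cost - tuned_cost)

print(f"Accuracy gain:   {accuracy_gain_points:.1f} points")  # 5.0 points
print(f"Cost reduction:  {cost_reduction:.0%}")               # 40%
print(f"Error reduction: {error_reduction:.0%}")              # 45%
print(f"Monthly savings: ${monthly_savings:,.0f}")            # $3,200
```

A five-point accuracy gain sounds modest, but it removes close to half of the field-level errors while cutting unit cost by 40 percent.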

The same logic applies to knowledge assistants. By building a benchmark that checks for citations, tone, and policy compliance, the agent can be tuned to favor correctness and transparency over verbosity. When a new policy drops on Friday, the loop regenerates tests and re-optimizes over the weekend.

The 2026 agent stack blueprint

Agent Bricks clarifies what a future-proof agent stack needs, whether you buy it from one vendor or assemble it yourself. Here is a practical blueprint that maps to the way work happens inside enterprises:

- Data and governance layer. Centralize structured, unstructured, and vectorized views with fine-grained access controls. Every test and trace must inherit those controls so developers cannot accidentally create a shadow copy with looser rules.

- Synthetic data and evaluation layer. Build repeatable pipelines that create task-shaped synthetic data, golden test sets, and automatic judges. Make it simple for subject matter experts to add or edit examples without code. Store every benchmark with versioning so you can compare runs over time.

- Model and tool orchestration. Support a mix of frontier models, distilled models, and tools like retrieval, functions, and external APIs. The system should search over prompts, tool strategies, and model choices without human guesswork.

- Optimization runtime. This is the engine that explores the tradeoff curve for quality and cost. Expect features like adaptive sampling, curriculum-style training on synthetic examples, and early stopping when a run is clearly inferior.

- Observability and cost governance. Trace every request, log intermediate steps, and attribute costs to teams and features. Set budgets and alerts at the agent and workflow level. Make quality metrics first-class citizens next to uptime and latency.

- Deployment, safety, and change control. Treat agents like software. Use staged rollouts, canary tests, rollback plans, and a clear review process when prompts or tools change. Safety checks should be declarative and testable.
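"Declarative and testable" can be as simple as a list of named rules that every output is checked against. The sketch below is one way to express that in Python, assuming plain regular-expression rules. The rule names, the `Section 4.2` citation format, and the patterns are hypothetical, and real PII detection needs far more than two regexes.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A declarative check: a pattern the output must, or must not, contain."""
    name: str
    pattern: str
    must_match: bool  # True: pattern is required; False: pattern is forbidden


# Hypothetical rules; the citation format and PII patterns are illustrative.
RULES = [
    Rule("cites_policy_paragraph", r"\bSection\s+\d+(\.\d+)*", must_match=True),
    Rule("no_unmasked_ssn", r"\b\d{3}-\d{2}-\d{4}\b", must_match=False),
    Rule("no_unmasked_email", r"[\w.+-]+@[\w-]+\.[\w.]+", must_match=False),
]


def violations(output: str, rules: list[Rule] = RULES) -> list[str]:
    """Return the names of the rules that the output breaks."""
    broken = []
    for rule in rules:
        found = re.search(rule.pattern, output) is not None
        if found != rule.must_match:
            broken.append(rule.name)
    return broken


good = "Refunds for partial shipments are prorated (Section 4.2). Contact: [EMAIL MASKED]."
bad = "Sure, I emailed jane.doe@example.com about the refund."

print(violations(good))  # []
print(violations(bad))   # ['cites_policy_paragraph', 'no_unmasked_email']
```

Because the rules are data rather than prompt text, they can be versioned, reviewed in a pull request, and run as unit tests before any rollout.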

If your collaboration platform is a primary surface for work, the operating model in multi-agent OS in Workspace shows how orchestration at the document and meeting layer complements the data-first approach above.

How teams outside the lakehouse can copy the pattern

Not every organization runs on a lakehouse. The pattern still transfers. Here is how to replicate the loop if your stack looks different:

- Data and governance. If your source of truth is a data warehouse, set up a governance bridge to your unstructured content so access controls carry across documents and embeddings. Nightly copy jobs are not enough. You need lineage auditors can follow.

- Synthetic data. Use style guides, templates, and historical records to seed synthetic generations. Start with two slices: the common, easy cases, and the rare, high-risk edge cases. The mix matters. If you only generate happy-path data, your agent will fail when it matters most.

- Evaluations and judges. Define success with task-aware checks. For extraction, judge field-level validity. For knowledge assistants, judge citation presence and faithfulness to source. For text transformation, judge structure and tone. Add human spot checks on a fixed schedule, for example every Monday morning.

- Optimization. Run controlled sweeps over prompts, tools, and models. Resist the urge to change two or three things at a time by hand. Let the system search. Track both cost and quality in the same graph.

- Observability. Instrument agents like services. Capture latencies, token counts, tool failures, and judge scores. Build a weekly report that pairs cost per task with accuracy.

- Deployment and safety. Gate changes behind reviews. Run canaries on fifty real tasks before rolling to a thousand. Keep rollback one click away.
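The weekly report from the observability step needs very little machinery. Here is a minimal Python sketch, assuming each request is logged as a flat trace record. The `Trace` fields, the agent name, and the token prices are hypothetical placeholders, so substitute whatever your telemetry and billing actually provide.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trace:
    """One logged request (hypothetical schema)."""
    agent: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    tool_failed: bool
    judge_passed: bool


# Hypothetical prices in dollars per 1,000 tokens.
PRICE_IN, PRICE_OUT = 0.001, 0.002


def weekly_report(traces: list[Trace]) -> dict[str, dict[str, float]]:
    """Pair cost per task with accuracy for each agent."""
    grouped = defaultdict(list)
    for t in traces:
        grouped[t.agent].append(t)

    report = {}
    for agent, rows in grouped.items():
        n = len(rows)
        cost = sum(
            r.input_tokens * PRICE_IN + r.output_tokens * PRICE_OUT for r in rows
        ) / 1000
        report[agent] = {
            "tasks": n,
            "cost_per_task": cost / n,
            "accuracy": sum(r.judge_passed for r in rows) / n,
            "tool_failure_rate": sum(r.tool_failed for r in rows) / n,
            "avg_latency_ms": sum(r.latency_ms for r in rows) / n,
        }
    return report


traces = [
    Trace("refund-assistant", 1000, 500, 800.0, tool_failed=False, judge_passed=True),
    Trace("refund-assistant", 2000, 1000, 1200.0, tool_failed=True, judge_passed=False),
]
report = weekly_report(traces)
print(report["refund-assistant"])
```

Keeping cost and accuracy in the same record is the point: a cheaper week that is also a less accurate week should be visible in one row, not in two separate dashboards.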

If you prefer open source, combine a vector database with a modern orchestrator, script synthetic data generation, store benchmarks in your warehouse, and log traces with a telemetry standard so they do not get lost in notebooks. The important part is the loop, not the logo.

What changes for people and process

- Product managers write quality and cost goals as first-class requirements. They call out acceptable tradeoffs upfront, for example a two-cent increase per answer is fine if it lifts citation accuracy by three points.

- Data scientists and machine learning engineers become loop designers. They choose how to seed synthetic data, how to weight judge scores, and when to stop a search, rather than hand-assembling prompts.

- Subject matter experts contribute examples and rules through lightweight interfaces. Because the system turns their input into synthetic datasets and benchmarks, their time compounds.

- FinOps teams forecast and watch unit economics in real time. They no longer chase bills after the quarter ends.

This redistribution of work reduces firefighting. The team spends less energy guessing and more time deciding.

Readiness checklist for the next 90 days

Week 1 to 2

- Pick one job that already hurts. Write a one-paragraph spec that states the metric that matters, the budget per task, and where the source-of-truth data lives. Do not pick something dreamy. Pick something repetitive and measurable.

Week 3 to 4

- Build the first loop. Generate a small synthetic dataset, define judges, and run a narrow search. Force yourself to pick a configuration from the first run, then write down what you wish the loop had done better.

Week 5 to 6

- Wire up observability. Add tracing, cost attribution, and a weekly quality report. Make one person the owner of that report so it becomes a habit, not a novelty.

Week 7 to 8

- Add human spot checks and a change review. Decide how often subject matter experts will grade a sample, then publish the rule. Create a simple pull request process for prompt or tool changes.

Week 9 to 12

- Scale to a second job and compare results. Use what you learned from the first loop to cut setup time in half. Share the comparison on cost and quality so executives see the pattern, not just a one-off.

Risks and how to manage them

- Synthetic data overfit. If generated examples are too narrow, agents may ace the benchmark while failing on real data. Counter with varied generation strategies and periodic tests on fresh real-world samples.

- Judge bias. LLM judges can prefer certain phrase patterns. Complement them with rule-based checks and human reviews, and refresh judge prompts when you change style guides.

- Governance gaps at the edges. Edge cases like screenshot text, email attachments, or shared drive archives often sneak past access controls. Inventory those sources before you call a project complete.

- Cost cliffs. New models or pricing changes can swing unit costs. Keep a backup configuration on a smaller model that stays within budget and rehearse the rollback.
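The synthetic-overfit risk in particular lends itself to an automated check: score the same agent on the synthetic benchmark and on a fresh sample of real tasks, then flag the run when the gap is too wide. A minimal Python sketch follows, where the scores are made up and the five-point threshold is an arbitrary assumption you would tune for your own task.

```python
from statistics import mean


def overfit_gap(synthetic_scores: list[float], real_scores: list[float]) -> float:
    """Mean score on the synthetic benchmark minus mean score on real samples."""
    return mean(synthetic_scores) - mean(real_scores)


def is_overfit(
    synthetic_scores: list[float],
    real_scores: list[float],
    max_gap: float = 0.05,  # assumed tolerance, not a recommended value
) -> bool:
    """Flag a run whose synthetic score beats its real-world score by more than max_gap."""
    return overfit_gap(synthetic_scores, real_scores) > max_gap


# Illustrative pass/fail judge scores (1.0 = pass, 0.0 = fail).
synthetic = [1.0, 1.0, 1.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]  # 90% pass
real = [1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 1.0, 1.0]       # 70% pass

print(is_overfit(synthetic, real))  # True
```

A flagged run is a prompt to widen the generation strategy or fold the failing real examples back into the benchmark, not a reason to discard synthetic data.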

None of these risks are unique to Databricks. They are the price of doing agent work at scale, and they are manageable with explicit loops and clear ownership. In parallel, remember that distribution surfaces matter. Customer-facing agents benefit from the patterns we have seen in CRM and workspace ecosystems, like the control-plane approach explored in Agentforce 360 makes CRM the control plane for AI agents.

What to watch next

Two signals will separate teams that talk about agents from teams that run them:

- Loop velocity. How fast can you spin up a new loop for a new task, from data grounding to first production run?

- Stability at scale. Can you hold both cost and quality steady as volume grows and data shifts?

Platforms that use your data to produce synthetic examples, score outputs with task-aware judges, and monitor the entire lifecycle will have an advantage. Agent Bricks pushes the market toward that standard. It treats agent building as a measurable, repeatable process, not a craft project. It compresses idea-to-production by packaging the loop and opens the door to consistent, lower-cost delivery.

The bottom line

Whether you run on a lakehouse or not, the pattern is now clear. Build the loop around your data, pick targets, and let the system search. The teams that adopt this pattern in 2025 will set the agent stack norms of 2026. The age of tinkering is over. The age of auto-optimized enterprise agents has begun, and it will reward those who turn feedback into fuel.