PagerDuty’s AI Agents Push SRE Into Autonomous Ops

PagerDuty unveiled its AI Agent Suite on October 8, 2025, shifting incident response from chat coordination to real autonomy. This review explains why SRE will lead the transition and offers a practical Q4 adoption plan you can run now.

The news, and why it matters

On October 8, 2025, PagerDuty announced its AI Agent Suite and positioned it as a move from assistive chat to autonomous operations. In its release, the company described four agents that learn from past incidents, trigger and execute runbooks, summarize communications, and optimize on-call schedules. Early adopters cited faster resolution times and clearer postmortems. For the exact lineup and timing, see PagerDuty’s newsroom coverage of the October 8 AI Agent Suite launch.

This matters because Site Reliability Engineering runs on hard numbers that no one can argue with. When a service degrades, reliability leaders live by indicators like mean time to resolve and error rates. If agents consistently shorten triage, accelerate mitigation, and prevent repeats, the shift will not feel like a novelty. It will feel like a new baseline.

Why SRE is first in line for agent autonomy

Three ingredients make incident response the earliest enterprise function to cross into agent autonomy.

- Hard, visible key performance indicators. Incident response already runs on a scoreboard everyone can see. Teams watch mean time to detect, mean time to acknowledge, mean time to resolve, change failure rate, and uptime. There is little ambiguity and low political friction. If an agent’s auto remediation trims resolution time by 20 percent for a recurring incident type, leaders can validate the win in days, not quarters.

- Runbook-native tasks. SRE work is encoded in runbooks, scripts, and automation services. The typical unit of work is a well defined play such as restart the service behind the payment API, roll back the last deployment for the cart service, scale the worker pool, drain traffic from zone C, or fail over the database to the secondary. Agents thrive when tasks are specific, observable, and reversible. Runbooks give them exactly that.

- Tight collaboration and catalog integrations. Incident response happens where people already live: Slack and Microsoft Teams for coordination and Backstage for the service catalog. Agents that post status, open bridges, look up ownership, and execute playbooks inside these tools eliminate context switching and shorten the path from alert to action. Once an agent can both see service context in Backstage and act in Slack or Teams, it starts to behave like a teammate.

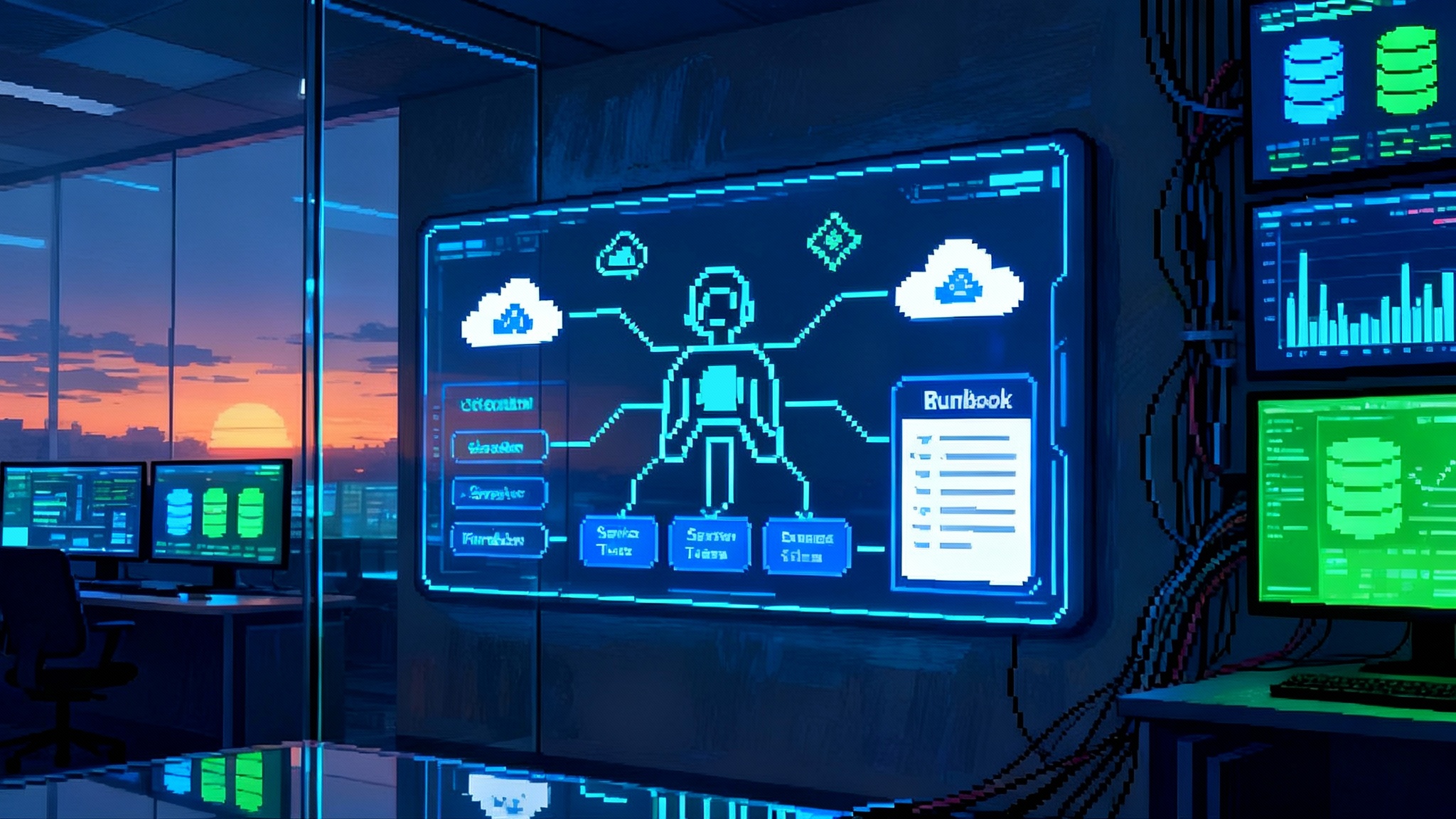

What changes with PagerDuty’s agents

PagerDuty describes four roles in its suite:

- An SRE agent that learns from related incidents and proposes, then executes, diagnostics and remediations.

- A Scribe agent that captures Zoom and chat details and produces structured, searchable summaries in Slack or Teams.

- A Shift agent that resolves on-call scheduling conflicts automatically.

- An Insights agent that analyzes patterns across services and teams to suggest preventive actions.

Each capability attacks a known bottleneck:

- Lost time during triage. The SRE agent assembles the first five minutes of triage in seconds. It pulls runbooks, fetches dashboards, correlates alerts, checks the last deployment, and tests a known-good remediation. Think of a first responder who arrives with the right tools already in hand.

- Missing context and poor memory. The Scribe agent converts chaotic cross channel chatter into a single timeline. New responders see what happened, who did it, and what worked. Postmortems stop relying on detective work.

- Scheduling and handoff errors. The Shift agent cleans up coverage so the right person gets paged and gaps do not turn into outages.

- Prevention by pattern. The Insights agent surfaces leading indicators such as creeping error budget burn or a service that fails for the same cause. That turns ad hoc firefighting into continuous improvement.

From a single agent to an agent mesh

The most consequential detail is interoperability. PagerDuty announced support for a remote server based on the Model Context Protocol, often called MCP. MCP is an emerging standard for how agents talk to tools and data. You can think of MCP as a universal plug for agent integrations. Anthropic, which introduced the protocol, publishes its documentation under the name Model Context Protocol.

Why that matters: if PagerDuty’s agents can use MCP to request tools in other systems, and if other vendors support the same protocol, you get an agent mesh rather than siloed bots. Imagine a fabric where specialized agents expose clean capabilities to one another:

- An observability agent offers capabilities such as getting time-windowed metrics, fetching exemplars, and proposing a probable root cause.

- A deployment agent offers rolling back the last canary or promoting the blue environment to green.

- A database agent offers rotating credentials and failing over to replica N.

- A compliance agent offers verifying a change ticket and logging evidence to the control system.

An incident agent can orchestrate across this fabric without one-off glue code. That means faster triage and safer automation because each agent operates inside its domain with guardrails already in place. If you are thinking about how this meshes with enterprise stacks, consider how the standard stack for agents helps teams normalize capabilities across vendors.

What an agent-driven incident looks like

Imagine a spike in 502 errors on checkout. The SRE agent receives a high priority alert and immediately assembles context. The last deployment to checkout was 12 minutes ago. The error budget for the service is already close to breaching this week. The same pattern occurred once last quarter.

The agent posts a timeline and a plan in the incident channel in Slack. It proposes to run health checks, pull the last three commit diffs, fetch application logs for the checkout service, and query a latency panel. It executes diagnostics, finds a suspicious configuration flag introduced in the last release, and proposes a safe rollback.

Before acting, it checks guardrails. It verifies the change window, confirms whether on-call approval is required and requests it in the channel, limits blast radius to the checkout service, and sets a success criterion: a drop in the 5xx rate and recovery of p95 latency below the service level objective. Once approved, the agent performs the rollback, monitors metrics, and posts success. The Scribe agent finalizes the incident summary. The Insights agent files a task to update the runbook and suggests a code owner review to prevent recurrence.
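The success criterion in this walkthrough can be made concrete. The sketch below is one way to express it, not PagerDuty's implementation: `MetricsSnapshot`, `rollback_succeeded`, and the 50 percent relative drop threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricsSnapshot:
    """Hypothetical point-in-time reading for one service."""
    error_rate_5xx: float  # fraction of requests returning 5xx, 0.0 to 1.0
    p95_latency_ms: float


def rollback_succeeded(before: MetricsSnapshot, after: MetricsSnapshot,
                       slo_p95_ms: float, min_error_drop: float = 0.5) -> bool:
    """True when the 5xx rate fell by at least min_error_drop (relative)
    and p95 latency is back under the service level objective."""
    if before.error_rate_5xx <= 0:
        # Nothing to recover from; require that errors did not appear.
        errors_recovered = after.error_rate_5xx <= 0
    else:
        drop = (before.error_rate_5xx - after.error_rate_5xx) / before.error_rate_5xx
        errors_recovered = drop >= min_error_drop
    return errors_recovered and after.p95_latency_ms < slo_p95_ms


# Illustrative numbers only: 8% errors before, 1% after, SLO of 500 ms.
before = MetricsSnapshot(error_rate_5xx=0.08, p95_latency_ms=1200.0)
after = MetricsSnapshot(error_rate_5xx=0.01, p95_latency_ms=300.0)
print(rollback_succeeded(before, after, slo_p95_ms=500.0))
```

Defining the criterion before the action runs means the agent and the approving human are judging the same outcome.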

Humans do not disappear. They set policy, approve high risk changes, and handle novel situations. The mechanical work stops being manual.

The Q4 2025 adoption playbook

You do not need a moonshot. You need a plan you can ship in 90 days.

- Define a pilot scope that can prove value in two weeks.

  - Pick two to three high-volume, low-novelty incident types. Examples include cache saturation, thread pool exhaustion, and deployment rollbacks. These have repeatable diagnostics and clear rollbacks.

  - Select services with strong observability and clean runbooks. If you cannot describe the diagnosis in ten lines, it is not a good place to start.

  - Limit change radius. Start with actions that are reversible in under five minutes.

- Wire the operational surface where work happens.

  - Connect Slack or Microsoft Teams channels used for incidents. Require one channel per incident and route all agent actions and approvals there.

  - Sync your Backstage service catalog so the agent can map alerts to owners, dependencies, and runbooks.

  - Connect observability and deployment systems through supported integrations. If MCP is available for any system in scope, prefer the MCP path over bespoke connectors to build toward the mesh.

- Set guardrails first, then permissions.

  - Define a policy matrix. Clarify which actions are autonomous, which require explicit approval, and which are advisory only. Tie that policy to incident severity, service tier, and deployment stage.

  - Establish a kill switch and a clear owner who can disable agents during incidents.

  - Use least-privilege credentials stored in your existing secret system. For production changes, require short-lived tokens and session recording.

- Decide how you will measure improvement before you start.

  - Baseline the last 90 days for mean time to detect, mean time to acknowledge, and mean time to resolve, split by incident type and severity.

  - Track the auto-remediation rate and the percent of actions that were rolled back or overridden.

  - Monitor false positive suggestions and drift between proposed and executed runbooks.

  - Publish a weekly scorecard to the engineering leadership channel so decisions are data-driven.

- Invest in the runbooks that feed the agents.

  - Convert unstructured wiki pages into tested, parameterized runbooks with clear prechecks, steps, and rollback.

  - Attach health checks and acceptance criteria to each step. Treat every runbook as a small, testable workflow.

  - Make logs, dashboards, and code owners first-class links. If the agent needs to search for these every time, you give back the time you gained.
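The policy matrix and kill switch from the guardrails step can be written down as data rather than prose. The following is a minimal sketch under stated assumptions: the `Mode` values, the severity, tier, and stage labels, and the `decide_mode` helper are hypothetical and do not come from any PagerDuty API.

```python
from enum import Enum


class Mode(Enum):
    DISABLED = "disabled"      # kill switch engaged, agent takes no part
    ADVISORY = "advisory"      # agent may only suggest
    APPROVAL = "approval"      # agent acts after explicit human approval
    AUTONOMOUS = "autonomous"  # agent acts on its own


# Hypothetical matrix keyed by (severity, service tier, deployment stage).
POLICY = {
    ("sev3", "tier2", "staging"): Mode.AUTONOMOUS,
    ("sev3", "tier2", "production"): Mode.APPROVAL,
    ("sev2", "tier1", "production"): Mode.APPROVAL,
    ("sev1", "tier1", "production"): Mode.ADVISORY,
}


def decide_mode(severity: str, tier: str, stage: str,
                kill_switch_on: bool = False) -> Mode:
    """Look up the allowed mode; anything not listed falls back to advisory."""
    if kill_switch_on:
        return Mode.DISABLED
    return POLICY.get((severity, tier, stage), Mode.ADVISORY)


print(decide_mode("sev3", "tier2", "staging"))
print(decide_mode("sev2", "tier3", "production"))  # unlisted combination
```

Defaulting unlisted combinations to advisory keeps the matrix fail-safe: new services or severities get no execution rights until someone adds them deliberately.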

For leaders who want to understand how modular capabilities scale, see how modular enterprise agent skills keep complex workflows composable and testable.

Guardrails that actually work

Security and safety controls must be specific rather than aspirational. Start narrow and expand scope only when the numbers are on your side.

- Progressive autonomy. Start with read, then propose, then execute for a narrow set of actions. Promote actions from propose to execute based on three weeks of clean performance.

- Blast radius limits. Scope commands by namespace, cluster, or service tier. Hard stop on cross region or database actions without human approval.

- Change windows and approvals. Require business-hours change windows and two-person approval for risky actions until the data shows safe execution over time.

- Preflight checks. Before any action, the agent must confirm service ownership, check for ongoing deployments, verify a viable rollback path, and confirm that telemetry is healthy enough to validate success.

- Logging and evidence. Every agent action logs to your central audit system with a unique correlation identifier and a link to the incident timeline. This is critical for compliance.
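The preflight and evidence guardrails above lend themselves to a small gate function. This sketch assumes the four checks have already been resolved to booleans by your own integrations; `PreflightContext`, `run_preflight`, `audit_record`, and the example URL are illustrative names, not part of any vendor API.

```python
import json
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class PreflightContext:
    """Hypothetical inputs gathered before an agent action."""
    owner_confirmed: bool
    deployment_in_progress: bool
    rollback_path_verified: bool
    telemetry_healthy: bool


def run_preflight(ctx: PreflightContext) -> list:
    """Return the names of failed checks; an empty list clears the action."""
    failures = []
    if not ctx.owner_confirmed:
        failures.append("service_ownership")
    if ctx.deployment_in_progress:
        failures.append("ongoing_deployment")
    if not ctx.rollback_path_verified:
        failures.append("rollback_path")
    if not ctx.telemetry_healthy:
        failures.append("telemetry_health")
    return failures


def audit_record(action: str, incident_url: str, failures: list,
                 correlation_id: str = None) -> str:
    """Build a JSON audit line carrying a correlation ID and timeline link."""
    record = {
        "correlation_id": correlation_id or str(uuid.uuid4()),
        "action": action,
        "incident_timeline": incident_url,
        "preflight_failures": failures,
        "allowed": not failures,
    }
    return json.dumps(record, sort_keys=True)


ctx = PreflightContext(True, False, True, True)
print(audit_record("rollback checkout", "https://example.invalid/incidents/123",
                   run_preflight(ctx)))
```

Writing the audit line whether or not the action is allowed gives compliance a record of blocked attempts as well as executed ones.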

How to evaluate success in Q4

Go beyond global averages. Measure like an operator and keep each metric paired with a target.

- Time to first diagnostic. From alert creation to the first validated test of a hypothesis. Target a 50 percent reduction on scoped incidents.

- Triage depth before escalation. Count how many playbook steps the agent executes before involving senior engineers. Target three validated steps or more.

- Safe action rate. Actions executed without rollback that achieve the predefined success criterion. Set a 95 percent threshold before expanding scope.

- Human focus time reclaimed. Estimate the hours given back to engineers from paging volume and meeting notes. Your finance partner will ask for this number.

- Summary quality. Score Scribe outputs for completeness and accuracy using a rubric. Improve with feedback and prompt changes.
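Two of these metrics reduce to simple arithmetic once the events are recorded. The sketch below assumes you already export action outcomes and alert timestamps; `ActionRecord`, `safe_action_rate`, `median_time_to_first_diagnostic`, and `may_expand_scope` are hypothetical helpers written for this illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass(frozen=True)
class ActionRecord:
    """Hypothetical outcome of one agent-executed action."""
    rolled_back: bool
    met_success_criterion: bool


def safe_action_rate(actions: list) -> float:
    """Share of actions that were not rolled back and met their criterion."""
    if not actions:
        return 0.0
    safe = sum(1 for a in actions
               if not a.rolled_back and a.met_success_criterion)
    return safe / len(actions)


def median_time_to_first_diagnostic(pairs: list) -> float:
    """Median seconds from alert creation to first validated diagnostic.

    Each pair is (alert_created, first_validated_diagnostic) as datetimes.
    """
    return median([(done - created).total_seconds() for created, done in pairs])


def may_expand_scope(actions: list, threshold: float = 0.95) -> bool:
    """Gate scope expansion on the safe action rate threshold."""
    return safe_action_rate(actions) >= threshold


# Illustrative data: 18 safe actions and 2 that were rolled back.
history = [ActionRecord(False, True)] * 18 + [ActionRecord(True, False)] * 2
print(safe_action_rate(history), may_expand_scope(history))
```

Using the median rather than the mean for time to first diagnostic keeps one slow incident from masking an otherwise clear improvement.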

Architecture patterns that scale

You do not need a platform rewrite. Use a layered model that matches how teams already work.

- Sensing layer. Alerts, logs, traces, and events. Integrations with your existing observability tools feed the agent actionable signals.

- Reasoning layer. The SRE and Insights agents correlate past incidents, code changes, and service dependencies, then generate a plan with confidence scores.

- Execution layer. Automation and runbooks that make changes, with hard guardrails in policy and access control.

- Collaboration layer. Slack or Teams channels and Backstage for service context and ownership.

Where MCP fits. Use MCP to normalize how agents call tools. Treat the MCP registry like a service catalog for capabilities. For each capability, define allowed verbs, inputs, and outputs. If you add a new system later, you plug it into the same verbs rather than rewriting orchestration code. This aligns with industry moves that make every surface more programmable, similar to trends such as meetings becoming agent hubs.
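To make the catalog idea concrete, here is a minimal sketch of a capability registry that records allowed verbs, inputs, and outputs per system. It is plain Python and deliberately does not use or imitate the MCP SDK; `Capability`, `CapabilityRegistry`, and the example verb names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    """One allowed verb on a system, with its declared inputs and outputs."""
    verb: str
    inputs: frozenset
    outputs: frozenset


class CapabilityRegistry:
    """Hypothetical in-memory catalog of what agents may call."""

    def __init__(self):
        self._by_system = {}

    def register(self, system: str, capability: Capability) -> None:
        self._by_system.setdefault(system, {})[capability.verb] = capability

    def validate_call(self, system: str, verb: str, arguments: dict) -> Capability:
        """Reject verbs that are not registered or calls with the wrong inputs."""
        capability = self._by_system.get(system, {}).get(verb)
        if capability is None:
            raise PermissionError(f"{verb!r} is not an allowed verb for {system!r}")
        supplied = set(arguments)
        if supplied != set(capability.inputs):
            raise ValueError(
                f"{verb!r} expects inputs {sorted(capability.inputs)}, "
                f"got {sorted(supplied)}"
            )
        return capability


registry = CapabilityRegistry()
registry.register("deploy", Capability(
    verb="rollback_last_release",
    inputs=frozenset({"service"}),
    outputs=frozenset({"release_id", "status"}),
))
cap = registry.validate_call("deploy", "rollback_last_release", {"service": "checkout"})
print(sorted(cap.outputs))
```

Adding a second deployment system then means registering the same verb under a new system name, while the orchestration code that calls `validate_call` stays unchanged.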

Data, prompts, and the human loop

Autonomy is not only about APIs. It also depends on how you structure knowledge, capture feedback, and gate risk.

- Data hygiene. Keep service metadata current in Backstage. Stale ownership or missing runbooks turns autonomy into guesswork.

- Prompt contracts. For each playbook, define inputs, outputs, and acceptance criteria in a structured format. This reduces prompt drift and makes evaluation consistent.

- Feedback loops. Use Slack or Teams reactions and short forms to capture whether an agent’s step was helpful, neutral, or harmful. Feed those signals into weekly tuning.

- Human approvals with context. When human approval is required, present the exact action, the reason, the blast radius, and the success metric. Do not ask humans to approve a vague plan.
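A prompt contract can be as simple as a typed record that names the required inputs, the required outputs, and numeric acceptance limits. The sketch below is one possible shape; `PlaybookContract`, `evaluate_step`, and the field names in the example are assumptions, not a format defined by PagerDuty.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PlaybookContract:
    """Hypothetical structured contract for one playbook step."""
    name: str
    required_inputs: tuple
    required_outputs: tuple
    # Maps an output field to the maximum value that still counts as success.
    acceptance: dict = field(default_factory=dict)


def evaluate_step(contract: PlaybookContract, inputs: dict, outputs: dict) -> list:
    """Return contract violations; an empty list means the step passes."""
    problems = [f"missing input: {key}"
                for key in contract.required_inputs if key not in inputs]
    problems += [f"missing output: {key}"
                 for key in contract.required_outputs if key not in outputs]
    for name, limit in contract.acceptance.items():
        if name in outputs and outputs[name] > limit:
            problems.append(f"{name}={outputs[name]} exceeds limit {limit}")
    return problems


contract = PlaybookContract(
    name="restart_worker_pool",
    required_inputs=("service", "pool"),
    required_outputs=("error_rate_5xx", "p95_latency_ms"),
    acceptance={"error_rate_5xx": 0.01, "p95_latency_ms": 500},
)
print(evaluate_step(contract,
                    {"service": "checkout", "pool": "workers"},
                    {"error_rate_5xx": 0.002, "p95_latency_ms": 640}))
```

Because every step is scored against the same structure, the weekly tuning review can compare runs directly instead of rereading free-form agent output.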

Backstage, Slack, and Teams are not side notes

These surfaces are essential. Backstage is your ground truth for service metadata, owners, and documentation. Slack and Teams are how you align quickly. An agent that can post a plan, wait for an approval reaction, execute, and update a status page in the same thread keeps humans informed without slowing the machine. PagerDuty’s updates to chat experience and Backstage integration make this possible now and reduce the number of custom bots teams must maintain.

Pitfalls to avoid

- Overbroad pilots. If you give the agent vague problems like improve reliability for payments, you will get vague outcomes. Anchor to three incident types with clear runbooks.

- Missing rollback. An agent without a tested rollback is a risk amplifier. Pair every action with a verified undo.

- Secret sprawl. Do not mint new secrets. Grant time bound tokens through your identity provider and rotate aggressively.

- Quiet drift in runbooks. Autonomy fails when the world changes. Make the service team own its runbooks and set a monthly hygiene task.

- Invisible changes. Require evidence logging with correlation identifiers so audit and compliance are easy by default.

A three week starter plan

Week 1: Instrument and baseline. Connect Slack or Teams, Backstage, and your observability tools. Choose pilot incident types, convert runbooks to parameterized workflows, and set guardrails and policies. Publish a scorecard template.

Week 2: Dry runs and simulation. Rehearse incidents from the last quarter in a test environment. Compare agent plans against what humans did. Fix gaps. Approve the first autonomous actions with the lowest blast radius.

Week 3: Limited live scope. Enable propose plus execute for the chosen incident types during business hours. Track every action, review daily, and freeze expansion if the safe action rate dips below 95 percent.

By the end of the month, you will know whether the agent can safely handle a narrow set of tasks. Expand stepwise rather than all at once and retire human toil as the data proves safety and value.

The bigger picture

There is a quiet convergence in the market. Vendors are adding MCP or MCP like connectors. Enterprises are standardizing on Backstage for service metadata and on Slack or Teams for coordination. The result is a practical mesh of interoperable agents. PagerDuty’s launch fits this story by putting an incident agent at the center and giving it the sockets to talk to other specialists.

Autonomy in operations will not arrive as a single headline or product. It will show up in your metrics when late night pages slow down, channel chatter quiets, and rollbacks take minutes instead of hours. The fastest way to get there in Q4 is to pick a tractable slice of work, federate agents through standard connectors, and measure, then promote, what proves safe.

The takeaway

SRE is positioned to become fully agentic because it already behaves like a machine. It has clear objectives, codified procedures, and well defined interfaces where humans and software meet. PagerDuty’s October 8 launch moves that machine from assisted to autonomous. The leaders who win this quarter will set tight scopes, enforce real guardrails, and judge by the scoreboard. The rest will spend another year debating while their competitors sleep through the night and ship more in the morning.