Agent HQ turns GitHub into mission control for coding agents

GitHub unveiled Agent HQ at Universe 2025, a mission control that lets teams run, compare, and govern coding agents inside GitHub and VS Code. Learn how to test, enforce policy, and prove ROI without changing tools.

Breaking: GitHub introduces Agent HQ at Universe

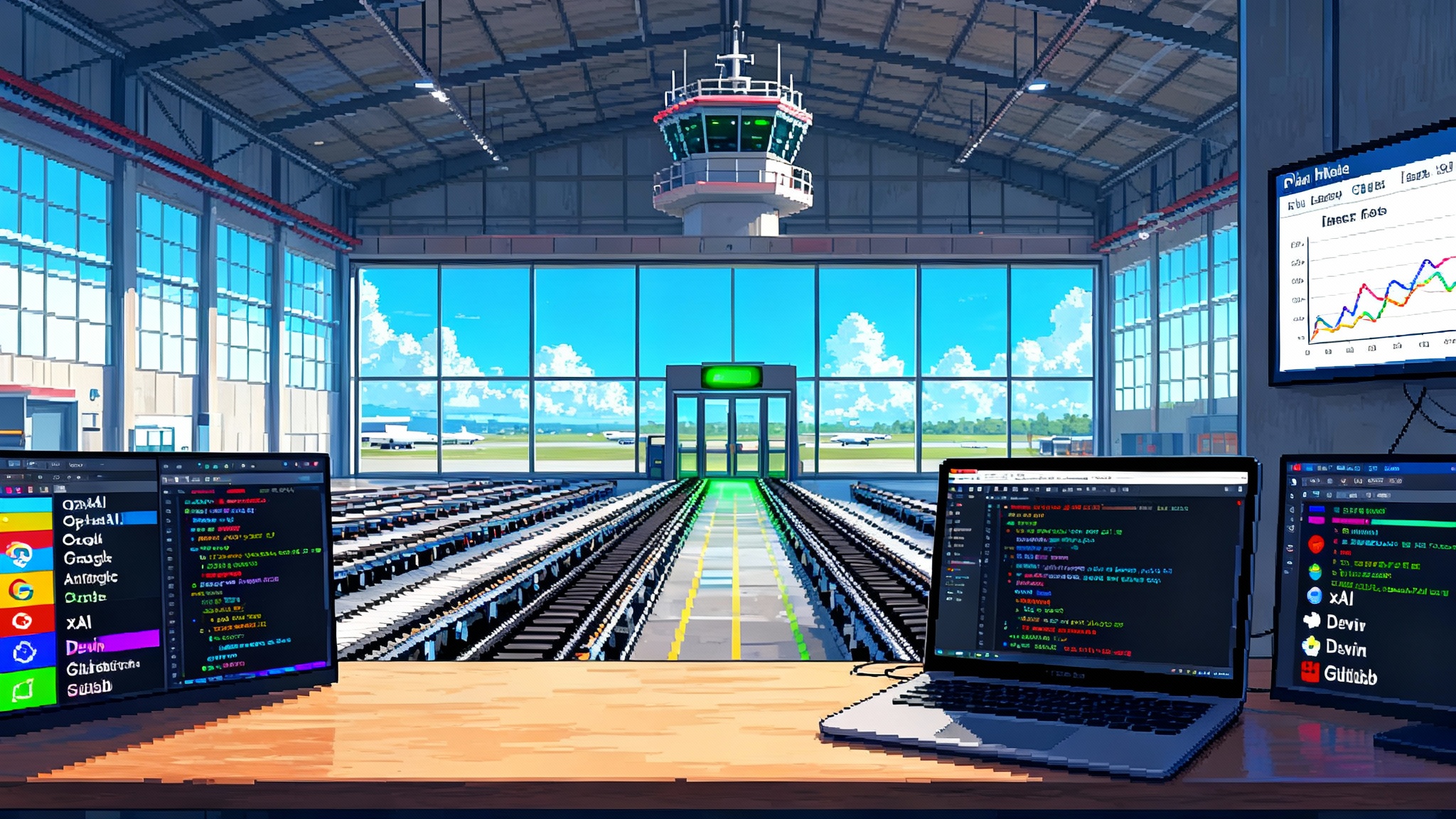

On October 28, 2025, GitHub announced Agent HQ, a neutral command center that lets developers run and compare coding agents from different vendors inside the GitHub workflow. Think air traffic control for software work. Multiple agents taxi on their own runways, and you decide which one takes off, which one circles for another pass, and which one never leaves the gate. GitHub describes Agent HQ as a mission control that appears consistently across GitHub on the web and in Visual Studio Code. Early screenshots show logos from OpenAI, Anthropic, Google, xAI, Cognition’s Devin, and GitHub, all accessible from one console. For a high-level snapshot of the announcement, see the official Introducing Agent HQ overview.

Why this matters is simple. Until now, most teams picked one agent and hoped it fit every job. Agent HQ changes that by putting choice, comparison, and control in the place that already holds your code, issues, policies, and audit trails.

What Agent HQ actually unlocks

Agent HQ is best understood as three capabilities that were hard to do cleanly before.

1) True agent A/B testing

- Run two or more agents against the same task in parallel.

- Compare outputs side by side and pick a winner. For example, assign a bug fix to multiple agents and ask each to open a draft pull request. You can compare diffs, test outcomes, and review comments. If one agent overfits or introduces a risky migration, you discard it and merge the safer change.

- Build playbooks for which agent is best at which job. Maybe Claude is your refactor specialist, Gemini is your test generator, Grok excels at CLI scaffolding, and Devin is your flaky test sleuth. Agent HQ’s mission control lets you codify those preferences without changing your developer tools.
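A routing playbook can start as a plain lookup table. The sketch below is a minimal illustration, assuming hypothetical task-class and agent names (they are placeholders, not Agent HQ identifiers): each task class lists agents in order of preference, and routing picks the first one that is currently available.

```python
# Hypothetical routing playbook: task class -> agents in order of preference.
# All names are illustrative placeholders, not Agent HQ identifiers.
PLAYBOOK: dict[str, list[str]] = {
    "refactor": ["claude", "codex"],
    "test-generation": ["gemini", "copilot"],
    "cli-scaffolding": ["grok", "copilot"],
    "flaky-test-triage": ["devin", "claude"],
}


def route(task_class: str, available: set[str], default: str = "copilot") -> str:
    """Return the first preferred agent that is available for this task class.

    Falls back to `default` when the task class is unknown or none of its
    preferred agents are currently available.
    """
    for agent in PLAYBOOK.get(task_class, []):
        if agent in available:
            return agent
    return default
```

Keeping the table as data rather than logic means a bake-off result only changes one list entry, and that change can go through normal code review.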

If your team has already normalized bot-authored pull requests, you will find this model familiar. For background on that shift and its impact on reviews, see our analysis of how Copilot agent PRs go mainstream.

2) Policy enforced automation

- Agents operate inside GitHub’s controls, not around them. Branch protections, required status checks, and audit logs apply to agent activity the same way they apply to humans. That means you can require a CodeQL scan and a human approval before any agent pull request is merged.

- Plan first, execute second. With Plan Mode in Visual Studio Code, you can ask an agent to propose a step-by-step plan that you review and edit before execution. That separates intent from action and reduces surprises.

- Pre-merge security and quality checks. Combining Copilot’s agentic review with CodeQL shifts security left without new portals or processes. Your team approves the plan, the agent produces a pull request, and your existing checks gate the merge.
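In GitHub itself, this gate lives in branch protection or rulesets, not in code. The sketch below only models the decision logic described above so the policy is explicit and testable; the field names are hypothetical and nothing here calls a GitHub API. It assumes agent pull requests additionally need an approved plan and at least one human approval.

```python
from dataclasses import dataclass


@dataclass
class PullRequestState:
    """Hypothetical, minimal view of a pull request's gate status."""
    author_is_agent: bool
    required_checks_passed: bool  # e.g. unit tests, as configured in branch protection
    codeql_passed: bool
    human_approvals: int
    plan_approved: bool  # the reviewed plan the agent executed


def can_merge(pr: PullRequestState, min_approvals: int = 1) -> tuple[bool, list[str]]:
    """Return (mergeable, reasons it is blocked) under the policy above."""
    reasons: list[str] = []
    if not pr.required_checks_passed:
        reasons.append("required status checks failing")
    if not pr.codeql_passed:
        reasons.append("CodeQL scan not passing")
    if pr.author_is_agent:
        # Agent PRs need a reviewed plan and a human sign-off on top of the checks.
        if not pr.plan_approved:
            reasons.append("no approved plan")
        if pr.human_approvals < min_approvals:
            reasons.append("missing human approval")
    return (not reasons, reasons)
```

Returning the reasons, not just a boolean, gives the maintainer a ready-made note when a pull request is held back.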

3) Team shareable custom agents

- Your organization can define project rules and agent behavior in versioned files, then share those agents across repositories. The Visual Studio Code docs now include support for an AGENTS.md file that gives every agent the same project guidance, from naming conventions to restricted operations. See Microsoft’s documentation on Use an AGENTS.md file for the exact format and settings.

- You can wire approved external tools through an allowlist, so agents only touch systems you intend. This is where a platform approach helps. For a deeper strategy on turning agents into governed, shippable components, review our playbook in AgentKit for governed agents.

How Agent HQ fits into your daily workflow

Agent HQ lives where work already happens. Inside issues, pull requests, and the editor, it provides a consistent panel for choosing agents, reviewing plans, and approving execution. That consistency matters. Many agent initiatives stall because the path from intent to code change is scattered across portals and scripts. By placing choice and control in GitHub and VS Code, Agent HQ shortens the loop from idea to safe merge.

A typical flow looks like this:

- A maintainer labels an issue with a known task class, such as test coverage uplift.

- From Agent HQ, the maintainer selects two or three approved agents and requests a plan.

- The agents return concrete steps, dependencies, and estimates. The maintainer edits the steps to match policy and environment.

- Agents execute on ephemeral runners, write code, and open draft pull requests.

- Required checks run. CodeQL, unit tests, and a second agent doing review all gate the merge.

- The maintainer compares diffs side by side, picks a winner, and closes the others with notes.
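One way to keep this flow honest is to treat each agent's attempt as a small state machine in which execution is unreachable until a plan is approved. The sketch below is an illustration under that assumption; the stage names are hypothetical labels for the steps above, not Agent HQ states.

```python
from enum import Enum, auto


class Stage(Enum):
    LABELED = auto()
    PLAN_REQUESTED = auto()
    PLAN_APPROVED = auto()
    EXECUTING = auto()
    DRAFT_PR_OPEN = auto()
    CHECKS_PASSED = auto()
    SELECTED = auto()
    CLOSED = auto()


# Allowed transitions. EXECUTING can only be reached from PLAN_APPROVED.
TRANSITIONS: dict[Stage, set[Stage]] = {
    Stage.LABELED: {Stage.PLAN_REQUESTED},
    Stage.PLAN_REQUESTED: {Stage.PLAN_APPROVED, Stage.CLOSED},
    Stage.PLAN_APPROVED: {Stage.EXECUTING},
    Stage.EXECUTING: {Stage.DRAFT_PR_OPEN, Stage.CLOSED},
    Stage.DRAFT_PR_OPEN: {Stage.CHECKS_PASSED, Stage.CLOSED},
    Stage.CHECKS_PASSED: {Stage.SELECTED, Stage.CLOSED},
    Stage.SELECTED: set(),
    Stage.CLOSED: set(),
}


class AgentRun:
    """Tracks one agent's attempt at a task through the flow."""

    def __init__(self, agent: str) -> None:
        self.agent = agent
        self.stage = Stage.LABELED
        self.history = [Stage.LABELED]

    def advance(self, nxt: Stage) -> None:
        """Move to the next stage, rejecting any transition the flow does not allow."""
        if nxt not in TRANSITIONS[self.stage]:
            raise ValueError(f"{self.agent}: illegal transition {self.stage.name} -> {nxt.name}")
        self.stage = nxt
        self.history.append(nxt)
```

The `history` list doubles as a simple audit trail of how each candidate pull request reached its outcome.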

The win is not only speed. It is repeatability. Once your team has a stable flow for a task class, you can templatize it with AGENTS.md and apply it across repos.

The rollout, in practical terms

GitHub is clearly staging the release. The Universe material marks Agent HQ as coming soon, and related features are landing across GitHub and Visual Studio Code in waves. Expect a pilot with select customers first, then a broader preview period where partner agents appear over time. If you want in early, tell your GitHub account team now and prepare a sandbox where you can evaluate agents safely. The announcement context is summarized in the Introducing Agent HQ overview.

A day-one playbook that delivers proof

The opportunity is to turn agent selection from a belief into a measurement. Here is a concrete approach you can adopt in a single sprint.

Step 1: Start with a narrow, well-defined task class

Choose a task that is common, traceable, and low risk. Examples:

- Fix a class of linter warnings.

- Convert synchronous functions to async where safe.

- Increase unit test coverage for leaf modules.

- Localize strings in a specific folder.

Step 2: Define acceptance criteria and a budget

Write crisp criteria the agent must meet before you merge. For example:

- All tests green.

- No new CodeQL alerts above Medium severity.

- Diff under 200 lines unless approved by a human owner.

- Runtime regression under 2 percent on a representative benchmark dataset.

Set a hard ceiling on agent runtime and requests. That keeps costs predictable and makes comparisons fair.
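Writing the criteria and budget down as code makes them unambiguous before the bake-off starts. The sketch below encodes the four example criteria plus a budget ceiling; the field names and budget numbers are hypothetical placeholders, and it assumes you already collect tests, new CodeQL alert severities, diff size, and usage per candidate.

```python
from dataclasses import dataclass, field

# Severity ordering used for the "above Medium" rule. Unknown labels are
# treated as critical so the check fails closed.
SEVERITY_RANK = {"note": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Candidate:
    agent: str
    tests_green: bool
    new_alert_severities: list[str] = field(default_factory=list)  # new CodeQL alerts only
    diff_lines: int = 0
    owner_approved_large_diff: bool = False
    runtime_regression_pct: float = 0.0
    minutes_used: float = 0.0
    requests_used: int = 0


@dataclass
class Budget:
    max_minutes: float = 30.0  # placeholder ceiling
    max_requests: int = 200  # placeholder ceiling


def meets_criteria(c: Candidate, budget: Budget) -> list[str]:
    """Return failed criteria; an empty list means the candidate is acceptable."""
    failures: list[str] = []
    if not c.tests_green:
        failures.append("tests not green")
    medium = SEVERITY_RANK["medium"]
    if any(SEVERITY_RANK.get(s.lower(), 4) > medium for s in c.new_alert_severities):
        failures.append("new CodeQL alert above Medium")
    if c.diff_lines >= 200 and not c.owner_approved_large_diff:
        failures.append("diff of 200+ lines without owner approval")
    if c.runtime_regression_pct >= 2.0:
        failures.append("runtime regression of 2% or more")
    if c.minutes_used > budget.max_minutes or c.requests_used > budget.max_requests:
        failures.append("exceeded budget")
    return failures
```

Because every candidate is checked against the same `Budget`, an agent cannot win simply by spending more.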

Step 3: Run a parallel bake-off

Assign the same issue to two or three agents in Agent HQ. Require each agent to propose a plan first, then execute it. Keep the pull requests open simultaneously and capture logs, diffs, tests that passed or failed, and CodeQL findings.

Step 4: Decide with data

Pick the winner based on defined criteria, not gut feel. Close the losing pull requests with a short comment about why. Update your playbook to route similar tasks to the winning agent next time.
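Deciding with data can be as simple as filtering on pass/fail and then applying an agreed tie-break. The sketch below assumes a hypothetical tie-break order (fewer review comments, then smaller diff, then lower cost); pick whatever order your team agrees on before the bake-off. It also drafts the short closing note for each losing pull request.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BakeOffResult:
    agent: str
    passed_criteria: bool  # output of your Step 2 acceptance check
    diff_lines: int
    review_comments: int
    cost_usd: float


def pick_winner(results: list[BakeOffResult]) -> tuple[Optional[str], dict[str, str]]:
    """Pick a winner and return a closing note for every losing pull request."""
    notes = {r.agent: "failed acceptance criteria" for r in results if not r.passed_criteria}
    eligible = [r for r in results if r.passed_criteria]
    if not eligible:
        return None, notes
    # Assumed tie-break order: review load, then diff size, then cost.
    ranked = sorted(eligible, key=lambda r: (r.review_comments, r.diff_lines, r.cost_usd))
    winner = ranked[0]
    for r in ranked[1:]:
        notes[r.agent] = f"passed, but ranked below {winner.agent} on review load, diff size, or cost"
    return winner.agent, notes
```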

Step 5: Convert wins into guardrails

Any class of issue that an agent reliably fixes should be paired with required pre-merge checks. For example, if an agent writes tests that pass locally but sometimes violate security policy, add a CodeQL gate and a manual sign-off until reviewers stop catching those violations.

If your organization wants to push autonomy further in a controlled way, examine how Claude Skills for enterprise workflows models modular responsibilities and auditability. The same patterns apply when you promote a winning agent from trial to default.

KPIs that actually signal value

If you only track lines of code changed, you will fool yourself. These are the metrics that matter, with definitions your leadership and auditors can live with.

- Latency to safe merge: median time from task assignment to a merged pull request that passes all required checks. Track per agent and per task class.

- Fix rate: share of agent pull requests that merge on the first attempt without rework. A reasonable starting target might be above 70 percent for repetitive tasks and above 40 percent for multi-file refactors; calibrate against your own baseline.

- Review deltas: average number of human comments per 100 changed lines on agent pull requests compared with human-written pull requests for the same repos and time period. The goal is fewer comments without a drop in defect discovery.

- Escapes prevented: count of CodeQL or other scanner findings that blocked an agent pull request before merge. This is a positive metric. It shows your gates work.

- Rollback rate: fraction of agent merges that are reverted within seven days. Investigate anything above your team’s baseline.

- Cost per accepted change: total agent and compute costs divided by number of merged pull requests from agents for the period.

Collect these weekly, publish them in a dashboard, and use them to decide routing rules in Agent HQ.
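Most of these KPIs fall out of a handful of fields per pull request. The sketch below computes five of them over one group of agent pull requests; the record fields are hypothetical and assume you can export them from your own data, and it treats "merged" as "passed all required checks" because branch protection enforces that. Review deltas are omitted because they need the matching human pull requests. Filter the input by agent and task class to get the per-agent numbers.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class AgentPR:
    agent: str
    task_class: str
    assigned_at: datetime
    merged_at: Optional[datetime]  # None if never merged
    merged_first_attempt: bool  # merged without rework
    reverted_at: Optional[datetime]  # None if never reverted
    blocked_by_scanner: int  # scanner findings that blocked this PR before merge
    cost_usd: float  # agent plus compute cost attributed to this PR


def kpis(prs: list[AgentPR]) -> dict[str, float]:
    """Compute KPIs for one group of agent PRs (NaN where undefined)."""
    nan = float("nan")
    merged = [p for p in prs if p.merged_at is not None]
    latencies_h = [(p.merged_at - p.assigned_at).total_seconds() / 3600 for p in merged]
    reverted_7d = [p for p in merged
                   if p.reverted_at is not None and p.reverted_at - p.merged_at <= timedelta(days=7)]
    return {
        "latency_to_safe_merge_h": median(latencies_h) if latencies_h else nan,
        "fix_rate": sum(p.merged_first_attempt for p in prs) / len(prs) if prs else nan,
        "escapes_prevented": float(sum(p.blocked_by_scanner for p in prs)),
        "rollback_rate": len(reverted_7d) / len(merged) if merged else nan,
        "cost_per_accepted_change": sum(p.cost_usd for p in prs) / len(merged) if merged else nan,
    }
```

Note that cost per accepted change divides total spend, including unmerged attempts, by merged pull requests, matching the definition above.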

Designing sandboxes that keep you fast and safe

A good sandbox is not fancy. It is strict in the right places and generous in others. Here is a template you can adopt.

Identity and permissions

- Use dedicated GitHub Apps or bot accounts for each agent vendor. Scope tokens to the minimum repositories, and where possible, to branches or environments.

- Enable branch protections with required reviews and required checks for CodeQL and agent code review. Disallow bypass for agent identities.

Compute and network

- Run agent tasks on ephemeral runners or GitHub-hosted runners with clean images. Destroy the environment at the end of each run.

- Default deny outbound egress. Allow only artifact stores, package registries, and endpoints the approved plan requires.
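Real egress control belongs in a firewall or proxy, but the matching rule is worth pinning down. The sketch below shows default-deny host matching under stated assumptions: the allowlist entries are hypothetical, and a `*.` entry matches subdomains only, not the bare domain.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; replace with your artifact store, package registries,
# and any endpoints the approved plan requires.
EGRESS_ALLOWLIST = {
    "pypi.org",
    "files.pythonhosted.org",
    "registry.npmjs.org",
    "*.artifacts.example.internal",
}


def egress_allowed(url: str, allowlist: set[str] = EGRESS_ALLOWLIST) -> bool:
    """Default deny: allow only exact hosts, or subdomains matched by a '*.' entry."""
    host = (urlsplit(url).hostname or "").lower()
    if not host:
        return False  # unparseable URLs are denied
    for entry in allowlist:
        if entry.startswith("*."):
            # entry[1:] keeps the leading dot, so "evilartifacts.example.internal" will not match.
            if host.endswith(entry[1:]):
                return True
        elif host == entry:
            return True
    return False
```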

Data boundaries

- Keep production secrets and customer data out of the sandbox. For tasks that need credentials, use short-lived tokens with fine-grained scopes. Rotate often.

- If agents can read across a monorepo, set folder boundaries and make sure AGENTS.md reflects them. Use nested AGENTS.md files to give agents different rules in different subtrees.
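When auditing which rules applied to a change, it helps to know which AGENTS.md governs a given file. The sketch below assumes a "nearest file wins" rule, walking up from the file toward the repository root; that rule is an assumption for illustration, so check your editor's documentation for how it actually combines nested files.

```python
from pathlib import PurePosixPath
from typing import Optional


def nearest_agents_md(file_path: str, agents_md_dirs: set[str]) -> Optional[str]:
    """Return the AGENTS.md closest to file_path, walking up toward the repo root.

    agents_md_dirs holds repo-relative directories that contain an AGENTS.md,
    with "" meaning the repository root. Assumes "nearest file wins".
    """
    for parent in PurePosixPath(file_path).parents:
        key = "" if str(parent) == "." else str(parent)
        if key in agents_md_dirs:
            return f"{key}/AGENTS.md" if key else "AGENTS.md"
    return None
```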

Approval and escalation

- Require human approval for any agent plan that modifies schema, security configuration, or build pipelines.

- Create a visible kill switch. If an agent starts spamming pull requests or loops on a task, the on-call engineer should be able to revoke its token in one click.
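A kill switch works best when something trips it automatically. The sketch below is a sliding-window rate limiter on pull requests per agent; the thresholds are placeholders, and `revoke` is a hypothetical caller-supplied hook (for example, one that suspends the agent's GitHub App installation). The class itself does not call GitHub.

```python
from collections import deque
from typing import Callable


class PrRateKillSwitch:
    """Trips when an agent opens more than max_prs pull requests within window_s seconds."""

    def __init__(self, max_prs: int, window_s: float, revoke: Callable[[str], None]) -> None:
        self.max_prs = max_prs
        self.window_s = window_s
        self.revoke = revoke  # hypothetical hook supplied by the caller
        self.events: dict[str, deque] = {}
        self.tripped: set[str] = set()

    def record_pr(self, agent: str, ts: float) -> bool:
        """Record a PR opened at time ts (seconds); return True if the agent is blocked."""
        if agent in self.tripped:
            return True  # stays blocked until a human resets it
        q = self.events.setdefault(agent, deque())
        q.append(ts)
        # Drop events that have fallen out of the sliding window.
        while q and ts - q[0] > self.window_s:
            q.popleft()
        if len(q) > self.max_prs:
            self.tripped.add(agent)
            self.revoke(agent)
            return True
        return False
```

Keeping a tripped agent blocked until a person intervenes is deliberate: an automatic reset would let a looping agent resume as soon as the window clears.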

Audit and forensics

- Send agent logs, commands run, and plan revisions to your existing observability stack. Tag by agent vendor, model family, repository, and task type.

- Keep at least 90 days of logs. Review a sample weekly for drift against AGENTS.md.
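Tagging is easiest when every agent event shares one structured shape. The sketch below emits JSON lines carrying the four tags above and enforces a 90-day retention floor; the field names are hypothetical, and how the lines reach your observability stack depends on your log shipper. Timestamps are assumed to be timezone-aware ISO 8601 strings with an explicit offset.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta

RETENTION = timedelta(days=90)


@dataclass
class AgentAuditEvent:
    timestamp: str  # ISO 8601 with offset, e.g. "2025-11-01T12:00:00+00:00"
    agent_vendor: str
    model_family: str
    repository: str
    task_type: str
    event: str  # e.g. "plan_revision", "command", "pr_opened"
    detail: str


def to_log_line(e: AgentAuditEvent) -> str:
    """Serialize one event as a single JSON line with stable key order."""
    return json.dumps(asdict(e), sort_keys=True)


def may_delete(e: AgentAuditEvent, now: datetime) -> bool:
    """True only once the event is older than the 90-day retention floor.

    `now` must be timezone-aware, like the stored timestamps.
    """
    return now - datetime.fromisoformat(e.timestamp) > RETENTION
```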

Building shareable custom agents the right way

Your first custom agent should be boring and useful. A repo-scoped cleanup specialist is perfect for proving value with low risk.

1) Write AGENTS.md at the repo root

- Describe the repo, supported languages, build commands, and the safe subset of operations. Be explicit about ban lists. For example, no dependency version changes, no schema migrations, no new third-party services without approval.

- Include a checklist for plans. Mention required tests, any contracts, and where to look for golden files.
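AGENTS.md is free-form Markdown, so any required structure is a house convention rather than part of the format. Assuming your team adopts a fixed set of level-2 headings (the names below are hypothetical), a small check like this could run in CI and fail when a repo's AGENTS.md drifts from the template.

```python
import re

# Hypothetical house convention, not part of the AGENTS.md format itself.
REQUIRED_SECTIONS = [
    "Overview",
    "Build and test",
    "Allowed operations",
    "Banned operations",
    "Plan checklist",
]


def missing_sections(agents_md: str) -> list[str]:
    """Return required level-2 headings ("## ...") absent from the file, case-insensitively."""
    headings = {
        m.group(1).strip().lower()
        for m in re.finditer(r"^##\s+(.+)$", agents_md, flags=re.MULTILINE)
    }
    return [s for s in REQUIRED_SECTIONS if s.lower() not in headings]
```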

2) Add folder-specific instructions as needed

- For monorepos, include nested AGENTS.md files in frontend, backend, and infra folders. Keep rules local and concrete.

3) Wire tools through the allowlist

- Register the scanners, test runners, and code formatters you rely on. If your organization uses bespoke linters or policy engines, package them as allowed tools rather than pointing agents at raw scripts.
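The principle of "registered tools, not raw scripts" can be stated as a small pre-execution check. The sketch below assumes a hypothetical allowlist of tool names (`org-policy-lint` stands in for a bespoke linter) and rejects anything invoked by path; it illustrates the rule only and is not how Agent HQ or VS Code implements tool approval.

```python
# Hypothetical allowlist of registered tools; "org-policy-lint" stands in
# for a bespoke linter packaged as an approved tool.
ALLOWED_TOOLS = {"codeql", "pytest", "eslint", "prettier", "org-policy-lint"}


def check_tool_call(command: list[str]) -> None:
    """Raise PermissionError unless the command invokes a registered tool by name."""
    if not command:
        raise PermissionError("empty command")
    tool = command[0]
    # Any path separator means a raw script or binary, which is never allowed.
    if "/" in tool or "\\" in tool:
        raise PermissionError(f"raw script path not allowed: {tool}")
    if tool not in ALLOWED_TOOLS:
        raise PermissionError(f"tool not on allowlist: {tool}")
```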

4) Share it with your team

- Store AGENTS.md in the repo and a template in an internal engineering standards repo. Encourage teams to copy and edit, not reinvent.

5) Measure and iterate

- Track the KPIs above by agent and by repo. When a custom agent consistently outperforms a vendor agent for a task class, adopt it as your default for that class.

Governance patterns that scale beyond one team

- Central policy, local autonomy: define organization-level rules for identity, required checks, and allowed tools. Let teams tailor AGENTS.md locally.

- Vendor neutrality by design: run multiple agents for each new task class until the numbers tell you who wins. Avoid single vendor assumptions.

- Change control for prompts and tools: treat AGENTS.md and tool allowlists like production code. Pull requests, reviews, and changelogs apply.

- Red-team your automations: once a quarter, assign a red team to try to trick agents into violating AGENTS.md. Feed findings back into plans and policies.

How Agent HQ reshapes the ecosystem

Agent HQ reframes the competition. Instead of every company trying to be your one true agent, GitHub is offering a place where specialized agents can compete task by task inside your existing governance. OpenAI, Anthropic, Google, xAI, and Cognition will try to differentiate on speed, reasoning, and reliability. Agent HQ turns those claims into measurable outcomes in the pull requests your teammates review every day.

This also nudges enterprises toward a modular approach to automation. Instead of a single agent that tries to do everything, teams can adopt a portfolio of specialists and route work to the best one per task. We have seen similar moves across the industry, from CRM-centric agent control planes to ops-focused autonomous responders. If you are exploring adjacent patterns for the business side of the house, our review of AgentKit for governed agents outlines how to wrap tools, policies, and telemetry around agent workflows.

A 30 day plan for engineering leaders

- Week 1: pick two task classes, draft AGENTS.md templates, and set required checks for CodeQL and agent code review on a pilot repo. Train a few maintainers on Plan Mode and approval flow.

- Week 2: run a three-agent bake-off with a fixed budget. Publish raw results, diffs, and test outcomes for the team to see. Choose winners per task class.

- Week 3: scale the winner to two more repos. Keep a holdout set of tasks to test for regression. Start a shared library of example plans and prompts that worked.

- Week 4: present KPIs to the broader org, lock in routing rules in Agent HQ, and graduate the pilot to a standing program with regular audits.

FAQs stakeholders will ask you

Is this safe for regulated environments? It can be, with the right controls. Keep agents inside your existing identity, logging, and change management, and use AGENTS.md to encode allowed operations. Require CodeQL and human review for sensitive paths. Align your logs with your compliance narratives early.

Will this slow down developers? Not if you keep the blast radius small. Start with low-risk tasks and pre-approved plans. The combination of Plan Mode and required checks reduces interruptions while catching problems before merge.

How do we avoid vendor lock-in? Use Agent HQ to your advantage. Run parallel trials for each new task class, record results, and route work to the current winner. Keep your playbooks and AGENTS.md portable, and avoid agent-specific assumptions in your build and deploy scripts.

How do we measure ROI? Track latency to safe merge, fix rate, rollback rate, and cost per accepted change. Compare agent pull requests with human-written pull requests on the same repos and time windows. Publish the dashboard weekly so stakeholders see the trend, not just anecdotes.

Bottom line

Agent HQ is not simply another product toggle. It is GitHub turning its platform into the control layer where agents from many companies can work under one roof, with your policies in charge. If you design your sandboxes well, define the right KPIs, and treat agent choice like a measurable experiment, you will ship faster without losing guardrails. The control tower is open. File your flight plan and get moving.