Agentic AI takes BFSI: AutomationEdge’s GFF 2025 launch

Agentic AI just crossed from demo to deployment in financial services. At GFF 2025, AutomationEdge put regulated agents on stage with co-creation for banks and insurers. Here is what matters, what ships first, and how to prove control.

A real signal from GFF 2025

At Global Fintech Fest in Mumbai on October 7-9, 2025, AutomationEdge announced new agentic capabilities and a co-creation program aimed at banking, financial services, and insurance (BFSI). Coverage focused on real workloads and regulated environments rather than chat UX and novelty. For context, see the Economic Times coverage of AutomationEdge's launch and the Global Fintech Fest 2025 agenda.

This matters for one reason: regulated agents are finally showing their work. Instead of a clever assistant that drafts emails, we are seeing accountable systems that propose actions, call tools with entitlements, cite evidence, and capture decisions in a form a second-line risk team can replay.

Why vertical agents beat generic copilots

Generic copilots are like smart interns. They read documents, summarize, and write drafts, but they do not wake up on their own, check systems of record, call internal services, or make decisions inside policy. In a bank or insurer, that gap separates a helpful demo from a production system you can audit, supervise, and scale.

Vertical, compliance-aware agents win because they are built inside the control environment rather than beside it:

- They operate in the firm’s process maps, not as sidecar scripts. They push and pull from the same queues, cases, and core systems operators already use.

- They carry context from start to finish. Know Your Customer (KYC) data and Anti-Money Laundering (AML) risk are not afterthoughts. The agent’s memory, tool scopes, and plans are conditioned by risk posture at runtime.

- They generate evidence by design. Every retrieval, rule evaluation, and tool call is logged in a structured trail a risk function can read without reverse engineering prompts.

Picture a claims adjuster agent that knows the firm’s fraud thresholds, parses a 30-page policy, fetches third-party verifications, and then settles, escalates, or denies with a rationale that cites policy clauses and documents. A generic copilot can help a human write the email. The agent can close the work.

The stack that makes it possible

Across early adopters, a repeatable three-layer stack is emerging.

1) Workflow orchestration as the backbone

- A stateful orchestrator manages steps, retries, timers, and compensation logic. Agents are first-class actors in the flow, not just chat endpoints.

- Tools are explicit. The agent can only call registered functions with narrow scopes, such as customer lookup, sanction screening, document classification, payment initiation, and manual review.

- Human-in-the-loop review is modeled, not improvised. Approvals, clarifications, and exceptions are typed tasks with owners, service levels, and telemetry.

If your org already runs RPA or workflow automation, you are partway there. The agent proposes steps and drafts content; the orchestrator governs the path and enforces policy.
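To make the "explicit tools" idea concrete, here is a minimal sketch of a tool registry that fails closed. The class name `ToolRegistry`, the scope strings, and the stubbed tool bodies are all hypothetical, chosen for illustration; nothing here reflects AutomationEdge's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class ToolRegistry:
    """Holds the only functions an agent may call, each tied to one scope."""
    tools: Dict[str, Callable[..., dict]] = field(default_factory=dict)
    scopes: Dict[str, str] = field(default_factory=dict)

    def register(self, name: str, scope: str, fn: Callable[..., dict]) -> None:
        self.tools[name] = fn
        self.scopes[name] = scope

    def call(self, name: str, granted: Set[str], **kwargs) -> dict:
        # Unregistered tools and missing scopes both fail closed.
        if name not in self.tools:
            raise PermissionError(f"tool not registered: {name}")
        if self.scopes[name] not in granted:
            raise PermissionError(f"scope {self.scopes[name]} not granted for {name}")
        return self.tools[name](**kwargs)


# Hypothetical tools with stubbed bodies.
def customer_lookup(customer_id: str) -> dict:
    return {"customer_id": customer_id, "status": "active"}


def payment_initiation(customer_id: str, amount: float) -> dict:
    return {"customer_id": customer_id, "amount": amount, "state": "queued"}


registry = ToolRegistry()
registry.register("customer_lookup", "customer:read", customer_lookup)
registry.register("payment_initiation", "payment:write", payment_initiation)


def try_call(name: str, granted: Set[str], **kwargs) -> str:
    """Return 'ok' or 'blocked' so the outcome is easy to log."""
    try:
        registry.call(name, granted, **kwargs)
        return "ok"
    except PermissionError:
        return "blocked"
```

An agent holding only `customer:read` can look up a customer but cannot initiate a payment. In a real deployment the orchestrator, not the agent, would own the granted scope set.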

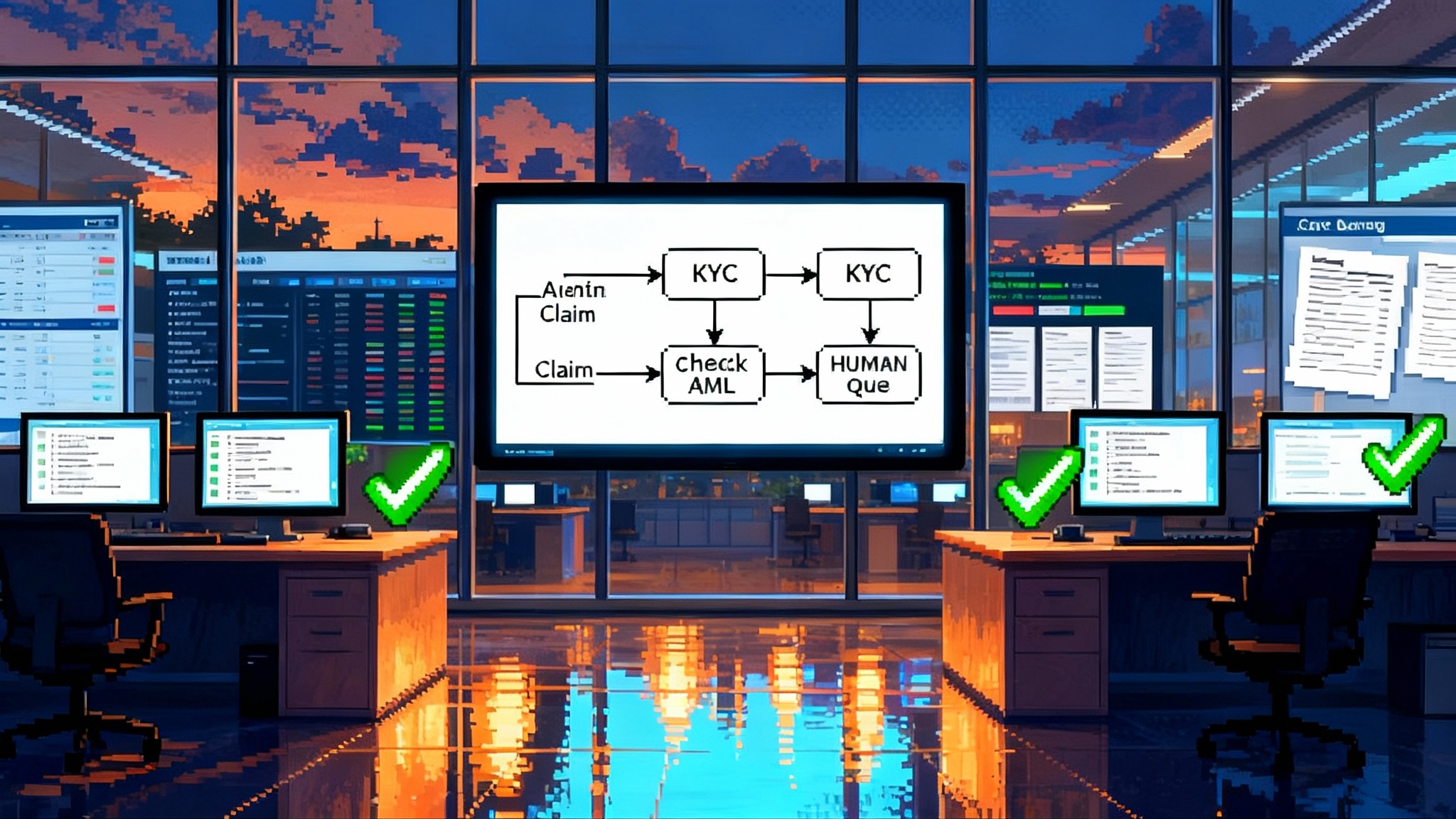

2) KYC and AML context in the runtime, not just the lake

- Plans and tool access are conditioned by customer risk, product type, and jurisdiction. If the customer is a politically exposed person or the payment is cross-border, the agent’s plan changes. It may require dual approval, enhanced due diligence, or a hold.

- Policy lives as data. Screening thresholds, watchlist recency, and model cutoffs belong in versioned configuration the risk team owns. The agent reads those values at runtime so behavior can change without a deploy.

- Data minimization is default. The agent retrieves only the fields needed for the step. That cuts exposure and makes reviews faster.
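A small sketch of "policy lives as data" and data minimization, under stated assumptions: the policy keys, the threshold value, and the mapping from risk signals to controls are invented for illustration. A real mapping would come from the firm's own risk policy.

```python
from typing import Dict, List

# Hypothetical policy values; in practice these would be read from a
# versioned store that the risk team owns.
POLICY: Dict[str, object] = {
    "version": "v1",
    "sanctions_hold_score": 0.8,
    "fields_by_step": {
        "sanction_screening": ["full_name", "date_of_birth", "country"],
    },
}


def required_controls(case: dict, policy: dict) -> List[str]:
    """Map runtime risk context to extra controls (illustrative mapping)."""
    controls: List[str] = []
    if case["is_pep"]:
        controls.append("enhanced_due_diligence")
    if case["cross_border"]:
        controls.append("dual_approval")
    if case["sanctions_score"] >= policy["sanctions_hold_score"]:
        controls.append("hold")
    return controls


def minimized(record: dict, step: str, policy: dict) -> dict:
    """Return only the fields the given step is allowed to see."""
    return {name: record[name] for name in policy["fields_by_step"][step]}
```

Because `required_controls` reads the threshold from `policy` on every call, tightening `sanctions_hold_score` changes behavior without touching the code.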

3) Audited retrieval-augmented generation

- Retrieval bridges text and action. In regulated contexts it must be explainable. The agent stores what it fetched, why, and how it used the content.

- Every chunk the model sees carries provenance and sensitivity labels. Answers cite those chunks in a structured log that doubles as a training and audit set.

- Redaction and masking happen before the model sees anything. That keeps secrets out of prompts and reduces downstream obligations.
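The three retrieval points above can be sketched together. This is an assumption-heavy illustration: keyword matching stands in for a real retriever, the sensitivity labels are hypothetical, and the masking rule assumes account numbers are runs of 10 to 16 digits.

```python
import re
from dataclasses import dataclass, replace
from typing import List

SENSITIVITY_ORDER = ["public", "internal", "restricted"]
# Assumption: account numbers appear as runs of 10 to 16 digits.
ACCOUNT_RE = re.compile(r"\b\d{10,16}\b")


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    source: str       # provenance, e.g. a document name and section
    sensitivity: str  # one of SENSITIVITY_ORDER
    text: str


def retrieve(chunks: List[Chunk], query: str, max_sensitivity: str,
             audit_log: List[dict]) -> List[Chunk]:
    """Filter by label, mask before the model sees text, and log what was used."""
    allowed = SENSITIVITY_ORDER[: SENSITIVITY_ORDER.index(max_sensitivity) + 1]
    hits = [c for c in chunks
            if c.sensitivity in allowed and query.lower() in c.text.lower()]
    masked = [replace(c, text=ACCOUNT_RE.sub("[REDACTED]", c.text)) for c in hits]
    audit_log.append({
        "query": query,
        "chunk_ids": [c.chunk_id for c in masked],
        "sources": [c.source for c in masked],
    })
    return masked
```

The audit entry records chunk identifiers and sources rather than raw text, so the log itself does not become another place where sensitive content accumulates.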

Put together, the model proposes, the orchestrator controls, risk policy supplies the rules of the road, and retrieval supplies the facts and receipts.

Early ROI levers that are landing first

The first wins share three traits: repetitive steps, clear rules, and high volume.

1) Customer onboarding

- Identity and address verification across multiple documents with instant feedback to the applicant

- Sanctions and watchlist screening with risk-adjusted workflows

- Automatic account opening in core systems once controls pass, with exceptions routed to humans

Expected outcomes: faster cycle times, fewer drop-offs, and less manual rekeying. The control is simple: the agent proposes an account opening, and a human approves when any signal crosses a threshold. The reason code from that approval becomes labeled training data.
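The onboarding control can be sketched in a few lines. The signal names, threshold values, and reason code below are hypothetical; the point is only that routing is a pure function of signals and thresholds, and that each human review yields a labeled record.

```python
from typing import Dict, List

# Hypothetical thresholds; real values would be owned by the risk team.
THRESHOLDS = {"min_name_match": 0.85, "max_document_fraud": 0.30}


def flagged_signals(signals: Dict[str, float]) -> List[str]:
    """List every signal that crosses its threshold."""
    flagged = []
    if signals["name_match"] < THRESHOLDS["min_name_match"]:
        flagged.append("name_match")
    if signals["document_fraud"] > THRESHOLDS["max_document_fraud"]:
        flagged.append("document_fraud")
    return flagged


def route(signals: Dict[str, float]) -> str:
    """Any flagged signal sends the proposed account opening to a human."""
    return "human_review" if flagged_signals(signals) else "auto_open"


def labeled_example(case_id: str, signals: Dict[str, float],
                    approved: bool, reason_code: str) -> dict:
    """Turn a human decision into a training record."""
    return {
        "case_id": case_id,
        "features": dict(signals),
        "label": "approve" if approved else "reject",
        "reason_code": reason_code,
    }
```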

2) Insurance claims

- First notice of loss intake that normalizes unstructured statements and attachments

- Policy coverage checks, deductible math, and fraud heuristics as callable tools

- Settlement recommendations with cited policy clauses and evidence

Expected outcomes: lower handling time and more consistent policy application. The control is a tiered approval matrix keyed to claim size, fraud score, and claimant risk.
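A tiered approval matrix is easy to express as data. In this sketch the tier limits, the approver names, and the rule that high-risk claimants never get automatic settlement are all assumptions made for illustration.

```python
from typing import List, Tuple

# (max claim amount, max fraud score, approver); hypothetical values.
APPROVAL_TIERS: List[Tuple[float, float, str]] = [
    (1_000.0, 0.2, "auto"),
    (10_000.0, 0.5, "adjuster"),
    (float("inf"), 1.0, "senior_adjuster"),
]


def approval_tier(amount: float, fraud_score: float, high_risk_claimant: bool) -> str:
    """Return the lowest tier whose limits cover the claim."""
    for max_amount, max_fraud, approver in APPROVAL_TIERS:
        if amount <= max_amount and fraud_score <= max_fraud:
            # Assumed rule: high-risk claimants always get a human approver.
            if high_risk_claimant and approver == "auto":
                continue
            return approver
    return "senior_adjuster"
```

A small, clean claim settles automatically, the same claim from a high-risk claimant goes to an adjuster, and a mid-sized claim with a high fraud score goes to a senior adjuster.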

3) Middle and back office operations

- Reconciliation agents that pull ledgers, identify breaks, and propose adjustments

- Regulatory reporting agents that assemble data packs and prefill disclosures

- Contact center deflection where agents execute changes, not just answer questions

Expected outcomes: fewer tickets per customer, fewer handoffs, and better regulator conversations because you can show how every decision was made.
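For the reconciliation case, a minimal sketch of break detection might look like the following. It assumes each ledger is a mapping from a shared transaction identifier to an amount, which is a simplification; the proposal labels are hypothetical, and the agent only proposes, it does not post adjustments.

```python
from decimal import Decimal
from typing import Dict, List


def find_breaks(ledger_a: Dict[str, Decimal],
                ledger_b: Dict[str, Decimal]) -> List[dict]:
    """Compare two ledgers by transaction id and propose a next step per break."""
    breaks = []
    for txn_id in sorted(set(ledger_a) | set(ledger_b)):
        a, b = ledger_a.get(txn_id), ledger_b.get(txn_id)
        if a == b:
            continue
        if a is None or b is None:
            proposal, difference = "investigate_missing_entry", None
        else:
            proposal, difference = "propose_adjustment", a - b
        breaks.append({
            "txn_id": txn_id,
            "ledger_a": a,
            "ledger_b": b,
            "difference": difference,
            "proposal": proposal,
        })
    return breaks
```

Using `Decimal` rather than floats avoids spurious breaks caused by binary rounding.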

For technical readers, several of these building blocks echo patterns we have covered in adjacent domains, like the RUNSTACK meta agent pattern for tool calling and plan control, and Agentic Postgres for safe parallelism when agents need controlled concurrency close to data.

Co-creation beats generic pilots

A bank or insurer that co-creates with a vendor moves from prompts to process. The best teams do three things in the first month:

- Define the unit of value. Pick one process slice with measurable outcomes and a clear owner. Examples include address change in retail banking or low-severity motor claims below a fixed amount.

- Publish the controls. Write down rules, required evidence, approval tiers, and stop conditions. Treat them as data the risk team can edit.

- Wire one thin path. Build a real end-to-end route from incoming request to resolution, including one exception path. After that works, add conditions and tools.

This is why vertical agents are winning. They accept responsibility for results inside the firm’s lines of defense.

What this signals for U.S. BFSI in 2026

Three practical shifts are likely inside U.S. firms during 2026.

- Procurement will shift from buying copilots to buying outcomes. Executives will procure agents that clear a named backlog under named controls. Contracts will specify the tool catalog, the evidence the agent must produce, and failure modes.

- Model governance will move from static checklists to runtime attestations. Risk teams will ask for logs that show exactly what the agent retrieved, which policies it consulted, and where humans intervened. Approvals get faster because evidence is structured and repeatable.

- Core vendors will expose agent-safe functions. Expect more connectors that enforce entitlements and data minimization so agents can read and write without bypassing controls.

A cultural shift follows. IT, operations, and risk will meet earlier. Review moves left because control intent lives in configuration the risk team can edit before changes ship.

The 30-60-90 day pilot blueprint

Speed wins only when it travels with discipline. Use this blueprint to move from sandbox to controlled production without unsafe shortcuts.

Days 0 to 30: frame, constrain, and wire

Goal: a working thin slice of a real process in a test environment.

- Pick the process and define success

  - One process, one owner, one north star metric. Examples: same-day onboarding for low-risk retail customers, motor claims under a fixed ceiling, reconciliation for a specific ledger pair

  - Tie the metric to business value and risk comfort. For onboarding, target cycle time and exception rate; for claims, target handling time and consistency; for ops, target breaks resolved per day

- Gather policies and data

  - Collect standard operating procedures, decision tables, and legal or compliance rules

  - Inventory every system touched and the allowed read or write scope for the agent

  - Mark sensitive fields and define masking rules up front

- Build the first agent path

  - Configure the orchestrator with explicit steps, timeouts, and compensation paths

  - Register tools with narrow scopes: document classification, KYC lookup, AML screening, policy check, case creation, and queueing

  - Implement audited retrieval: chunk documents, tag with provenance and sensitivity, and store citations in the case record

  - Add one human approval step with reason codes

- Safety and testing

  - Red-team prompts with seeded edge cases and adversarial inputs

  - Run a golden set of 50 to 100 past cases and compare the agent’s outputs to known good decisions

  - Document failure modes and define stop conditions

Deliverable: a narrated demo on live test data with logs that risk can read without a translator.
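The golden set run from the safety and testing step can be as simple as the sketch below. The case shape and the `toy_agent` stand-in are hypothetical; in a pilot, `decide` would wrap the real agent path.

```python
from typing import Callable, Dict, List, Tuple


def golden_set_report(golden: List[Tuple[dict, str]],
                      decide: Callable[[dict], str]) -> Dict[str, object]:
    """Compare agent decisions with known good decisions, case by case."""
    mismatches = []
    for case, expected in golden:
        actual = decide(case)
        if actual != expected:
            mismatches.append(
                {"case_id": case["case_id"], "expected": expected, "actual": actual}
            )
    total = len(golden)
    agreement = (total - len(mismatches)) / total if total else 0.0
    return {"total": total, "agreement_rate": agreement, "mismatches": mismatches}


# Stand-in for the agent: a trivial rule so the sketch runs end to end.
def toy_agent(case: dict) -> str:
    return "approve" if case["risk"] == "low" else "escalate"
```

Keeping the mismatch list, not just the agreement rate, gives operations and risk something specific to review.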

Days 31 to 60: expand tools, tighten controls, and shadow live work

Goal: prove the agent handles variety and coexists with people and policy.

- Tool and data expansion

  - Add two or three tools, such as address verification, external fraud signals, or payment initiation

  - Expand retrieval sources to include policy forms and recent bulletins with stricter provenance filters

- Controls and observability

  - Convert rules into configuration that risk can edit: thresholds, checklists, and escalation paths

  - Require structured rationales. The agent must output a decision with evidence references and policy clauses

  - Instrument dashboards for cycle time, exception rate, false positive and false negative rates, and human touchpoints

- Shadow production

  - Route a small percentage of live cases in parallel. The agent completes the work but requires human approval before action

  - Compare outcomes to human-only cases and document discrepancies

Deliverable: a joint review with operations and risk that signs off on behavior under clear thresholds.
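For the shadow comparison, one way to compute the dashboard rates is to treat the human decision as the reference. That choice is an assumption, since humans also make mistakes, and the `flag` and `clear` labels are hypothetical.

```python
from typing import Dict, List, Tuple


def shadow_metrics(pairs: List[Tuple[str, str]], positive: str = "flag") -> Dict[str, float]:
    """pairs holds (agent_decision, human_decision) for the same live case."""
    tp = fp = tn = fn = 0
    for agent, human in pairs:
        if agent == positive and human == positive:
            tp += 1
        elif agent == positive:
            fp += 1  # agent flagged, human cleared
        elif human == positive:
            fn += 1  # agent cleared, human flagged
        else:
            tn += 1
    total = len(pairs)
    return {
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
        "agreement_rate": (tp + tn) / total if total else 0.0,
    }
```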

Days 61 to 90: controlled production and continuous learning

Goal: ship to production with guardrails and a learning loop.

- Progressive rollout

  - Start with one segment and a daily cap

  - Keep a kill switch and stop conditions in configuration

- Feedback into improvement

  - Treat every human override as a labeled training example

  - Hold weekly error clinics with the agent team, operations, and risk to review logs and tune policies

- Evidence and governance

  - Produce a closeout pack: scope, data sources, tool catalog, configuration snapshot, golden set results, performance metrics, and a model card for any learning components

  - Present the audit trail for a random sample of decisions and confirm traceability from input to action

Deliverable: a signed go or no-go decision with a plan for volume and scope increases next quarter.
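The progressive rollout controls (one segment, a daily cap, a kill switch in configuration) fit in a small gate. The class name `RolloutGate` and the configuration keys are hypothetical, and a production version would keep its counters in shared storage rather than in memory.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict


@dataclass
class RolloutGate:
    """Decides whether the agent may act on a case without code changes."""
    config: dict
    used: Dict[date, int] = field(default_factory=dict)

    def allow(self, segment: str, today: date) -> bool:
        if self.config["kill_switch"]:
            return False
        if segment not in self.config["segments"]:
            return False
        if self.used.get(today, 0) >= self.config["daily_cap"]:
            return False
        # Count the case only when it is actually admitted.
        self.used[today] = self.used.get(today, 0) + 1
        return True
```

Cases the gate rejects would fall back to the existing human process, so flipping `kill_switch` degrades to the status quo rather than stopping work.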

If you want a pattern for phased change management, study DualEntry's migration agent playbook. The same principles apply when an agent must rewrite records across multiple systems with controlled rollbacks.

How to brief your regulator and your board

- Lead with the control story. Show how policies become configuration and how approvals are enforced by workflow, not by shelfware.

- Show the logs. Demonstrate that a decision can be replayed with the same inputs and yield the same outputs.

- Prove reversibility. If the agent fails, humans can pick up the thread with full context in the case record.

- Quantify exposure. Report the number of cases, data fields touched, and the entitlements granted to each tool.

This turns the conversation from fear to engineering. You are not asking for trust; you are demonstrating how it is earned.
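Replay is easiest to demonstrate on the rule-based part of a decision. The sketch below fingerprints the inputs and policy snapshot with SHA-256 and re-runs a deterministic decision function. The `decide` rule and the record layout are hypothetical, and a model-generated step would need its own pinning (for example, stored outputs) that this sketch does not cover.

```python
import hashlib
import json


def fingerprint(payload: dict) -> str:
    """Hash a canonical JSON form so equal payloads always hash equally."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def decide(inputs: dict, policy: dict) -> str:
    # Hypothetical deterministic rule standing in for the policy engine.
    return "escalate" if inputs["amount"] > policy["auto_limit"] else "approve"


def record_decision(inputs: dict, policy: dict) -> dict:
    """Capture everything needed to replay the decision later."""
    return {
        "inputs": inputs,
        "policy": policy,
        "input_hash": fingerprint({"inputs": inputs, "policy": policy}),
        "output": decide(inputs, policy),
    }


def replay(record: dict) -> bool:
    """True when the stored inputs are intact and reproduce the stored output."""
    intact = fingerprint(
        {"inputs": record["inputs"], "policy": record["policy"]}
    ) == record["input_hash"]
    return intact and decide(record["inputs"], record["policy"]) == record["output"]
```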

Practical architecture checklist

- Orchestrator with retries, timers, queues, and compensation

- Tool layer with explicit scopes, rate limits, and entitlements

- Identity and access controls aligned to the agent, not the developer

- Retrieval with provenance tags, sensitivity labels, and redaction

- Policy configuration owned by risk and version controlled

- Observability that tracks plan steps, tool calls, and human touchpoints

- Sandboxing by product, customer segment, and geography

What to build first for U.S. teams in 2026

- A low-risk onboarding lane. Restrict geography and product scope with simple identity rules. Add a small claim or service use case only after the first lane hits its metrics.

- An agent catalog. Publish the tools each agent can call and the processes it can touch. Make it easy to add a tool but hard to grant write privileges.

- A living policy store. Let the risk team change thresholds without a deploy and pair every change with a smoke test suite that proves behavior.
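Pairing a policy change with a smoke test can be sketched as a gate on the change itself. The policy key `auto_approve_limit`, the two smoke cases, and the `decide` rule are assumptions for illustration; a real suite would cover far more behavior.

```python
from typing import List, Tuple


def decide(amount: float, policy: dict) -> str:
    """Hypothetical rule driven entirely by the policy value."""
    return "auto" if amount <= policy["auto_approve_limit"] else "human_review"


# Behaviors that must hold under any acceptable policy (illustrative).
SMOKE_CASES: List[Tuple[float, str]] = [
    (50.0, "auto"),
    (1_000_000.0, "human_review"),
]


def smoke_test(policy: dict) -> List[Tuple[float, str]]:
    """Return the smoke cases the proposed policy would break."""
    return [case for case in SMOKE_CASES if decide(case[0], policy) != case[1]]


def apply_change(current: dict, proposed: dict) -> Tuple[dict, List[Tuple[float, str]]]:
    """Accept the proposed policy only when every smoke case still passes."""
    failures = smoke_test(proposed)
    if failures:
        return current, failures
    return proposed, []
```

A threshold that is set too tight or too loose fails a smoke case and the current policy stays active, which keeps the "no deploy" path from becoming a "no review" path.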

The moment to move

The story from GFF 2025 is not that another vendor demoed a flashy assistant. It is that regulated agents now carry policy, call tools safely, show their work, and connect to real systems under watchful controls. BFSI is becoming the first regulated beachhead for agentic AI.

If your 2026 plan still says "evaluate copilots," rename it. Pick one process, express the controls as configuration, and ship a thin path in 30 days. You will not just have an assistant. You will have an accountable teammate that leaves an audit trail and returns business value you can count.