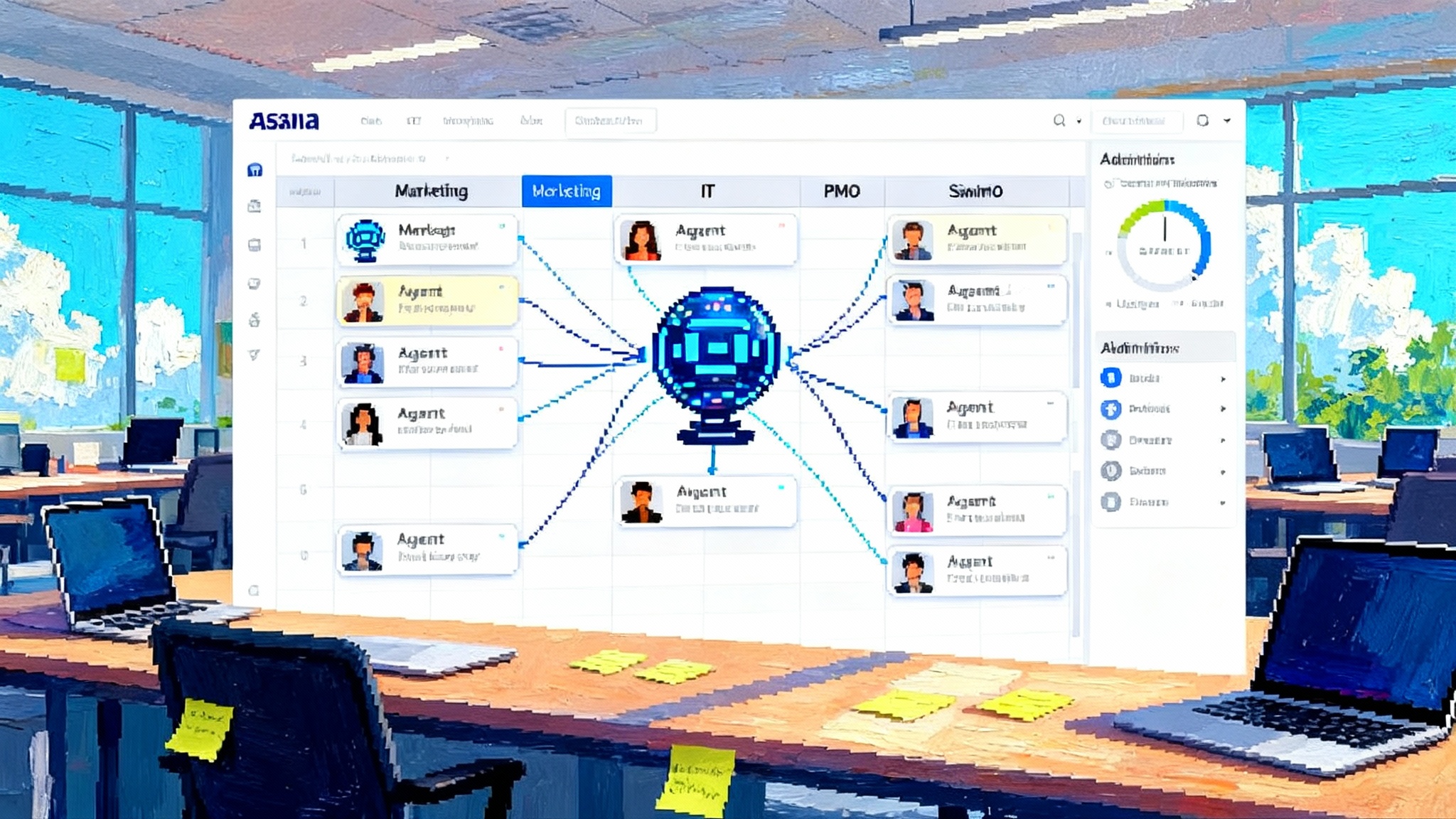

Asana’s AI Teammates Turn Agents Into Real Coworkers

Asana’s AI Teammates live inside projects, respect permissions, and pause at checkpoints. See what is different, how to run a four-week pilot, and the questions to ask before these agents reach general availability.

Breaking from the bot pack

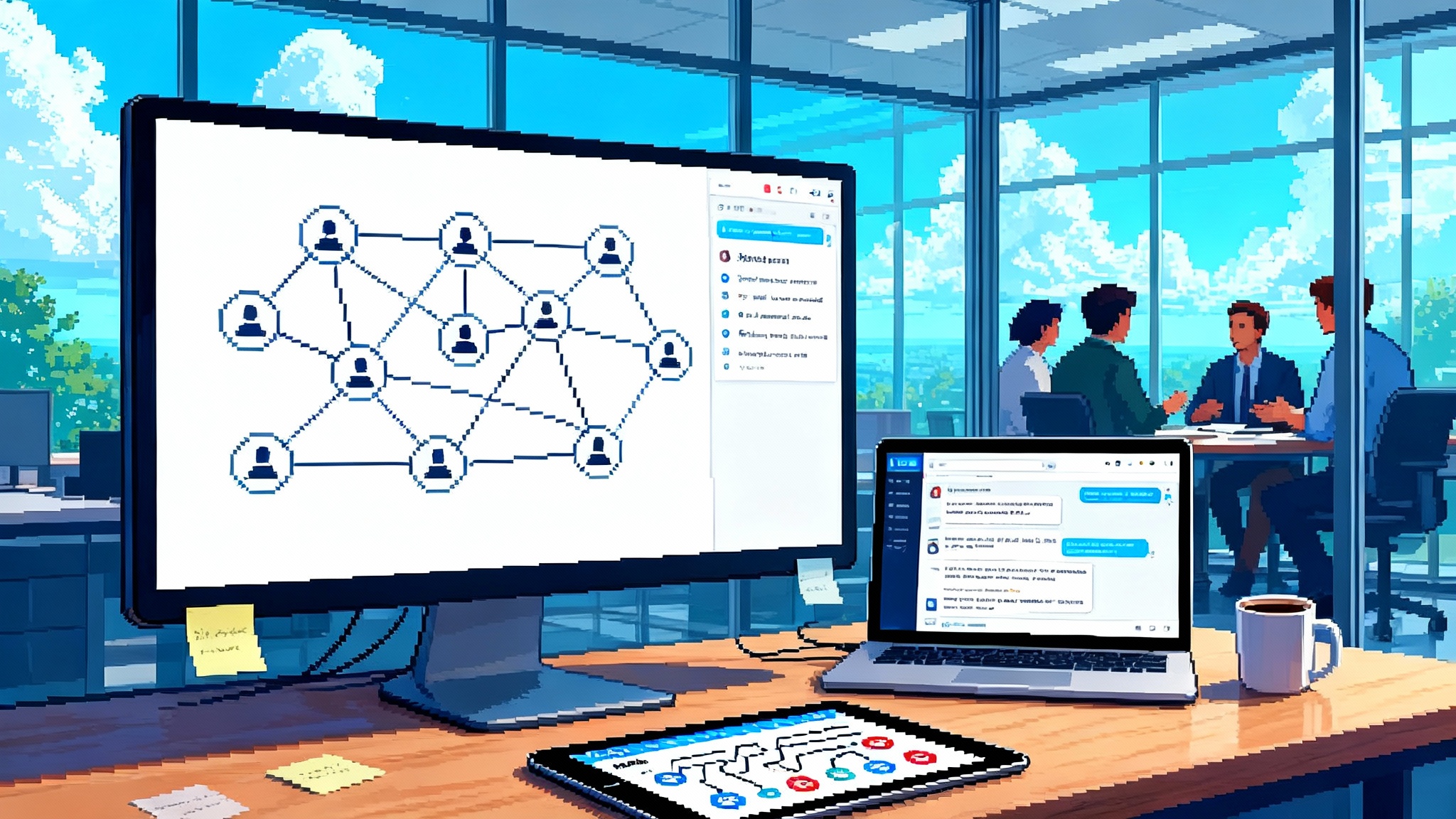

On September 25, 2025, Asana announced that AI Teammates are in beta, positioning them not as fully autonomous bots but as collaborative agents that live inside everyday work and answer to teams, not just individuals. The pitch is simple and bold. Give an agent the same context a project manager or operations lead has, ask it to work inside the same plans and rituals, then give administrators true control over data access and spend. That is the opposite of a one-off copilot that lives in a chat box. It is a coworker model, not a chatbot model. See the details in Asana’s press note announcing the AI Teammates beta.

The core argument is blunt: autonomy alone is the wrong goal for enterprise software. Complex work is social, multi-step, and governed. Real teams move through planning, execution, review, and budget checkpoints. Agents that understand those rhythms can help. Agents that ignore them create rework.

What shipped and what is different

Asana’s AI Teammates run inside the Asana work management platform. They see goals, projects, tasks, owners, dates, and dependencies. They can draft briefs, create tasks, assign work, summarize progress, and route requests. They are configured around three planks that matter in practice:

- Context. Agents read and write inside the same plan humans use. They inherit team goals and project structures and they keep memory of how the team works.

- Checkpoints. Agents show their steps and wait for feedback at key moments. You can review, approve, or redirect before they commit changes.

- Control. Administrators can set permissions, visibility, and usage limits. Finance leaders and admins can keep a handle on credit consumption and data access.

If you have tried a copilot that drafts a nice paragraph but cannot file a ticket with the right fields or cannot respect role permissions, these planks read like a design response to very specific pain.

Why the next wave favors embedded and governed agents

Think about a seasoned launch program manager. They do not only write tasks. They knit together inputs from design, legal, sales enablement, and support. They know who can approve a price change, who owns the final sign-off, and which dependency will slip first. They remember last quarter’s playbook and they adapt it to this quarter’s constraints. That is not just text prediction. That is context, process, and governance.

One-off copilots struggle here for two reasons:

- They orbit a single user, so their memory is local. They forget that the team renamed the campaign, that finance changed the budget code, or that legal must review every claim above a threshold.

- They sit outside the work surface. You end up copying notes from chat into projects, then cleaning up after the agent.

Team-embedded agents flip both. They share context with everyone because they live in the same plan. They act directly on the workload and can be held to the same checkpoints as the rest of the team. That is why this collaboration-first design is likely to beat generic copilots. It turns agents into process participants rather than suggesters on the sidelines.

This direction is visible across the ecosystem. Google is pushing the workspace itself to coordinate multi-agent workflows, as seen in our look at the Gemini Enterprise multi-agent OS. In software delivery, GitHub is becoming the console for coding agents, which we explored in Agent HQ for coding agents. And in go-to-market motions, CRM is turning into the control plane for agents, a trend we covered in Agentforce 360 CRM control plane. Asana’s move fits that broader pattern. Put the agent where the work lives and give it rules.

The Work Graph advantage: durable memory and cross-functional awareness

Asana’s differentiator is its Work Graph, the data model that maps people, tasks, projects, goals, and the relationships among them. If the spreadsheet is a list and the document is a canvas, the Work Graph is a city map for work. It locates every building, the roads that connect them, and the zoning rules that govern how things move.

Put an agent in that map and three things happen:

- Durable memory. An agent no longer forgets what it did last week. It can trace decisions to the project where they were made and keep a running institutional memory embedded in the work itself.

- Cross-functional awareness. When a marketing task links to a product milestone that depends on an IT change, the agent can see the chain and anticipate risk. It can flag a likely slip or propose a mitigation because the relationships are modeled, not implied.

- Governed action. Because permissions and ownership live in the same model, the agent can act only where it is allowed. That is the difference between a draft that suggests and an agent that can actually do the work.
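To make the cross-functional awareness point concrete, here is a minimal Python sketch of how modeled relationships let software trace risk along a dependency chain. Everything in it is an assumption for illustration: the task names, the `DEPENDS_ON` mapping, and the `upstream_risks` helper are hypothetical and do not reflect Asana's Work Graph schema or API.

```python
from collections import deque

# Hypothetical, simplified stand-in for a work graph: task -> tasks it depends on.
# Names and structure are illustrative only, not Asana's data model.
DEPENDS_ON = {
    "marketing-launch-email": ["product-milestone-ga"],
    "sales-enablement-deck": ["product-milestone-ga"],
    "product-milestone-ga": ["it-sso-change"],
    "it-sso-change": [],
}

# Tasks already flagged as likely to slip (assumed input).
AT_RISK = {"it-sso-change"}


def upstream_risks(task, depends_on, at_risk):
    """Return at-risk tasks reachable by following dependency edges from `task`."""
    seen = set()
    found = []
    queue = deque(depends_on.get(task, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # guard against revisiting shared or cyclic dependencies
        seen.add(current)
        if current in at_risk:
            found.append(current)
        queue.extend(depends_on.get(current, []))
    return found


if __name__ == "__main__":
    print(upstream_risks("marketing-launch-email", DEPENDS_ON, AT_RISK))
```

The marketing task never mentions the IT change directly, yet the traversal surfaces it because the links are explicit. A flat task list has no such edges to follow.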

For a primer on why this model matters, see the Asana overview of the Work Graph. It makes clear how relationships give structure and memory to work and why an agent can operate responsibly when embedded in that structure.

What early customers are doing already

Early use cases cluster where repeatable process meets cross-functional coordination. Three patterns stand out.

- Program Management Office launches. Launches and cross-functional programs involve orchestration, not just drafting. An AI Teammate can spin up a standard plan from a brief, map tasks to owners, and watch the critical path. When legal asks for substantiation or security needs a penetration test report, the agent can add dependencies, route approvals, and put a checkpoint before anything ships. It can produce weekly leadership summaries that actually reflect the live plan, not a stale slide.

- IT triage and service management. In higher-volume environments, a teammate can categorize incoming tickets, fill missing fields by asking the requester, deduplicate reports, and route to the right queue. When patterns emerge, the agent can propose a knowledge base article, add the new article to the standard response, and report on deflection rates. The key is that the agent updates the same system humans work in, so there is no swivel chair.

- Marketing operations. Think of content pipelines that span intake, production, and review. A teammate can enforce naming conventions, watch brand terms, and draft first-pass copy for variants, then file the right tasks for design or legal. It can keep an eye on cross-tool compatibility, which limits broken handoffs between design, web, and analytics. When the campaign goes live, it can assemble a real report, pulling from the tasks and approvals that show what actually shipped.

Notice the common thread. These are not toy demos. They are workflows that chew up hours for project coordinators and producers. The agent takes on the glue work and the ritual work, which is where teams gain time back.

What to watch before general availability in Q1 FY27

Asana says general availability is expected in Q1 FY27. That means the next few quarters will be about scale and proof. If you are considering a pilot, keep a close eye on three things.

- Cost controls. Ask how usage is measured and limited. You want administrator dashboards with clear credit meters, budget alerts, and per-agent rate limits. Ask whether you can set department-level and project-level budgets. Ask if there are safe defaults so that a pilot cannot run up a surprise bill.

- Governance defaults. Review permission inheritance and default scopes. The agent should respect role-based access and project privacy out of the box. You want simple, visible checkpoint settings. Ideally you choose when an agent must ask for review before acting and where it can act autonomously. Audit logs should show every action, the trigger, and the outcome.

- Interoperability with developer tools and CRM. Real programs hop between tools. If your engineering teams live in GitHub or Jira and your go-to-market teams live in Salesforce or HubSpot, you want the agent to hand off work without breaking context. Evaluate the quality of these integrations under load. Does the agent propagate required fields and keep links fresh? Can it update status in both places when a ticket closes?

These are not nice-to-haves. They decide whether an agent remains a pilot project or graduates to a production teammate.
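One way to reason about the cost and governance questions above is to write down the policy you would want as code. The sketch below is a thought experiment, not a vendor feature: `AgentPolicy`, its fields, and the action names are all hypothetical, and it assumes a simple credit counter rather than any real Asana billing or permissions API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """Hypothetical pilot policy; names are illustrative, not a vendor API."""

    credit_budget: int  # hard cap on credits for the pilot
    review_required: set = field(default_factory=set)  # actions that need a human checkpoint
    credits_used: int = 0
    audit_log: list = field(default_factory=list)

    def decide(self, action, cost, trigger):
        """Return 'blocked', 'needs_review', or 'allowed', and log the decision."""
        if self.credits_used + cost > self.credit_budget:
            outcome = "blocked"  # safe default: never exceed the budget
        elif action in self.review_required:
            outcome = "needs_review"  # nothing is spent until a human approves
        else:
            outcome = "allowed"
            self.credits_used += cost
        # Every decision records the action, the trigger, and the outcome.
        self.audit_log.append({"action": action, "trigger": trigger, "outcome": outcome})
        return outcome


if __name__ == "__main__":
    policy = AgentPolicy(credit_budget=10, review_required={"change_due_date"})
    print(policy.decide("add_field", 4, "new ticket"))
    print(policy.decide("change_due_date", 1, "dependency slipped"))
    print(policy.decide("add_field", 7, "new ticket"))
```

If a vendor's admin console cannot express something at least this simple (a hard cap, a list of review-gated actions, and a log of trigger and outcome), that is worth raising during the pilot.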

A four-week playbook to evaluate AI Teammates

You do not need a lab to run a serious evaluation. You need a structured test with live work, a tight scope, and clear success criteria. Here is a pragmatic plan you can run in four weeks.

Week 1: choose a workflow and baseline

Pick one workflow with high coordination cost. Examples include a quarterly launch plan in the Program Management Office, a high-volume IT request queue, or a creative production pipeline. Define success. For launches, it might be fewer at-risk tasks and on-time delivery. For IT, it might be first response time and deflection. For marketing ops, it might be throughput and rework.

- Access and permissions. Provision the agent like a contractor. Give it only the projects it needs. Turn on audit logs.

- Templates and rituals. Feed the agent your standard operating procedures and templates. Define where it must pause for review.

- Baseline. Capture two weeks of metrics before the pilot to compare.

Week 2: agent drafts and routes, humans approve

- Let the agent create tasks from briefs, apply templates, and route approvals. Keep a human in the loop at every checkpoint. Measure time to plan and error rates.

- Ask the agent to summarize status for the weekly standup or leadership report. Compare to a human-generated report for accuracy.

Week 3: allow autonomy in low-risk steps

- Allow the agent to deduplicate tickets, add fields, and assign to the right queue. Require review for any escalation or high-severity change.

- In marketing ops, allow the agent to generate first drafts and enforce naming conventions automatically. Require review before assets move to final review.

Week 4: assess and expand

- Compare pre-pilot and post-pilot metrics. Look for lift in throughput, reduction in rework, and lower coordination time. Audit the logs for any surprising actions.

- If results hold, widen the scope slightly while keeping the same controls. If results are noisy, tune the context the agent reads, then try again.

Scoring rubric. Grade on five criteria: context fit, checkpoint quality, governance and audit, integration quality, and cost predictability. Score each from 1 to 5 and require a minimum score of 4 on every criterion to move from pilot to production. This prevents a shiny demo from displacing needed controls.
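The rubric is easy to encode so that the gate is applied the same way every time. Here is a minimal Python sketch; the criterion keys and the `ready_for_production` function are names chosen for illustration, and the sample scores are made up.

```python
# The five criteria from the rubric, as hypothetical dictionary keys.
CRITERIA = (
    "context_fit",
    "checkpoint_quality",
    "governance_and_audit",
    "integration_quality",
    "cost_predictability",
)
MIN_SCORE = 4  # minimum required on every criterion


def ready_for_production(scores):
    """Return (passed, failing_criteria) for a dict of 1-5 scores."""
    missing = [name for name in CRITERIA if name not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    for name in CRITERIA:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {scores[name]}")
    failing = [name for name in CRITERIA if scores[name] < MIN_SCORE]
    return (not failing, failing)


if __name__ == "__main__":
    # Illustrative scores only: strong overall, weak on integrations.
    pilot_scores = {
        "context_fit": 5,
        "checkpoint_quality": 5,
        "governance_and_audit": 5,
        "integration_quality": 3,
        "cost_predictability": 4,
    }
    print(ready_for_production(pilot_scores))
```

Note that the sample averages 4.4 and still fails. Gating on the minimum rather than the average is what keeps one impressive dimension from masking a weak one.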

How this reframes the category

For a decade, work management tools have been judged on user interface, templates, and integrations. The agent era adds new vectors.

- Data model as a feature. A list can store tasks. A work graph can store relationships. Agents need the latter to reason about who, what, when, and why.

- Collaboration surface as the console. If the agent works where the team works, the collaboration surface becomes the console. Checkpoints, comments, and approvals are not bolted on; they are the agent’s control panel.

- Governance as a product. A customer that cannot set default scopes, audit actions, and cap spend will not let agents touch critical work. These are product features now.

If you compare vendors, look for these traits. If a vendor sells a chat window and a library of prompts, be cautious. If they show you a model of your work and how the agent moves through it under control, lean in.

Concrete examples you can copy

- PMO launch navigator. Seed your plan with a standard template. Ask the agent to add dependencies as each function adds its tasks. Require review when it changes dates or scope. Have it prepare a weekly risk register that flags ownerless work, slipped dependencies, and tasks without acceptance criteria.

- IT ticketing specialist. Give the agent access to the service intake project and the knowledge base. Allow it to categorize, fill missing fields by asking the requester, deduplicate, and assign to the correct queue. Require review when it proposes policy changes. Track first response and resolution times and the share of tickets resolved without escalation.

- Marketing ops creative partner. Provide brand guidelines, approved phrases, and naming conventions. Let the agent generate first-pass copy, enforce naming, tag assets, and route legal approvals. Require review for anything that leaves the draft stage. Track throughput and the ratio of revisions per asset.

Each example treats the agent like a junior teammate with a strong memory and good habits, not a wizard. That framing lowers risk and increases adoption.
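The weekly risk register from the PMO example is mostly rule checking, which makes it a good first thing to verify by hand. The sketch below shows the three checks in plain Python. The task dictionaries and field names (`owner`, `acceptance_criteria`, `dependencies`, `due`, `done`) are assumed shapes for illustration, not an export format from any tool.

```python
from datetime import date


def risk_register(tasks, today):
    """Flag ownerless work, slipped dependencies, and missing acceptance criteria."""
    register = []
    for task in tasks:
        flags = []
        if not task.get("owner"):
            flags.append("no owner")
        # A dependency has slipped if it is past due and still not done.
        if any(dep["due"] < today and not dep["done"] for dep in task.get("dependencies", [])):
            flags.append("slipped dependency")
        if not task.get("acceptance_criteria"):
            flags.append("no acceptance criteria")
        if flags:
            register.append((task["name"], flags))
    return register


if __name__ == "__main__":
    # Hypothetical sample plan.
    plan = [
        {"name": "Draft pricing page", "owner": "", "acceptance_criteria": "Legal approved"},
        {
            "name": "Publish launch blog",
            "owner": "Dana",
            "acceptance_criteria": "",
            "dependencies": [{"name": "Security review", "due": date(2025, 1, 10), "done": False}],
        },
    ]
    for name, flags in risk_register(plan, today=date(2025, 1, 15)):
        print(name, flags)
```

Running the same checks yourself on a sample of the plan is a cheap way to audit whether the agent's register matches what the live data says.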

Risks and how to manage them

- Overreach. The fastest way to stall a rollout is to give an agent too much authority too soon. Start with narrow scopes, require checkpoints, and add autonomy carefully.

- Hidden spend. Set budgets and alerts on day one. Pair administrators with pilot leads so usage and cost are reviewed weekly.

- Integration drift. Work does not live in one tool. Test the handoffs to developer tools and customer relationship systems under load. Broken field mappings create silent failures.

Treat these as known risks, not surprises. Plan for them in the pilot design.

What success looks like after the pilot

By the end of a good pilot, the team should be able to point to concrete, measured outcomes. Expect to see fewer tasks stuck without owners, fewer missed dependencies, and shorter time to a ready plan. Expect that weekly status reports take minutes instead of hours because the agent can pull from the live plan. Expect clear audit trails so that managers and compliance can see who did what and when.

The cultural signal matters too. When an agent participates in planning rituals and passes through checkpoints like any other teammate, it earns trust. That trust is the prerequisite for scale.

The upshot

The shift from chatty copilots to team-embedded and governed agents is real, and Asana’s AI Teammates are a clear marker. They ship inside live plans, learn from the same context that humans use, pause at checkpoints, and give administrators control. That is what work needs. It is also the path to making agents useful in the messy middle of cross-functional coordination.

Between now and general availability in Q1 FY27, the best move is to run a tight pilot that measures outcomes, tests governance, and pushes on integrations. If the results hold, you will have a new kind of teammate. Not a chatbot that suggests, but a collaborator that helps teams ship.

That is the breakthrough. Not autonomy as an end, but collaboration that compounds.