AWS AgentCore turns agent ops into an enterprise runtime

Amazon Bedrock AgentCore elevates agent operations into a real platform with a secure runtime, memory, identity, gateway, and observability. Learn how to move from notebook demos to production fleets and what to do first.

The day agent operations became a platform

For two years, most enterprise agent projects have followed a familiar arc. A dazzling demo in a notebook. A tangle of framework code stitched to internal APIs. A fragile cache called memory. Logs scattered across half a dozen services. It works until it does not. The climb from clever prototype to a reliable production fleet is steep, and many teams end up maintaining brittle glue instead of shipping durable outcomes.

Amazon is trying to change that curve. On July 16, 2025, AWS introduced Bedrock AgentCore in preview. On October 13, 2025, it moved to general availability, expanding security and operations features in the process, as detailed in the AgentCore GA announcement. A few weeks later, on November 4, 2025, AWS added a second deployment path so teams can iterate faster, documented in the AgentCore direct code deployment update. The intent is clear. Agents should run like real software, not like a science experiment.

This piece offers a pragmatic deep dive on what AgentCore changes for engineering teams. We will look at how its layers shift AgentOps from do-it-yourself glue to a dependable runtime, what it means for security and governance, how to migrate from notebooks to fleets, and why a standardized agent runtime unlocks interoperability across frameworks and tools in 2026.

What AgentCore actually is

Think of AgentCore as two things at once. It is the factory floor where agents do their work. It is also the control room that keeps the factory safe, observable, and integrated with the rest of your enterprise.

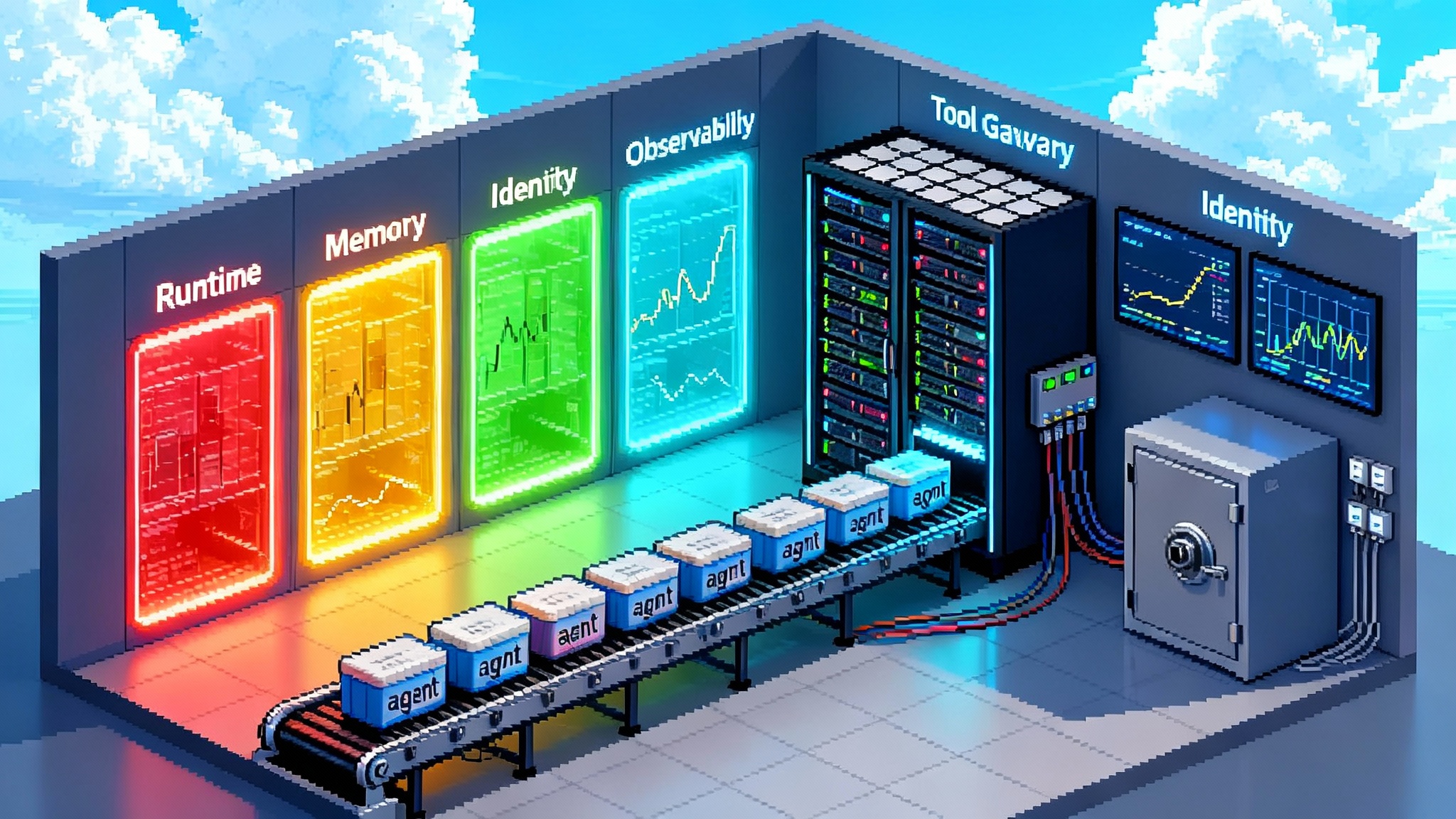

AgentCore is composed of modular services that can be used independently or as a stack:

- Runtime. A secure, serverless execution layer with session isolation, long-running support, and checkpointing.

- Memory. Short-term and long-term memory options, plus a self-managed strategy when you need custom extraction and consolidation.

- Identity. User and agent identity, token management, and delegated access to tools and data.

- Gateway. A tool gateway that speaks open protocols so agents discover and call tools without one-off integrations.

- Observability. End-to-end traces and metrics with CloudWatch dashboards and OpenTelemetry compatibility.

- Browser tool. A cloud-based, secure browser runtime so agents can complete web workflows at scale.

- Code Interpreter. Sandboxed code execution for safe, auditable compute inside agent tasks.

Each module stands on its own, but the real value shows up at the seams, because seams are where most agent projects fail. AgentCore standardizes those seams so teams can build faster and operate with confidence.

The runtime layer: from orchestration to operations

In most do-it-yourself setups, the runtime behind an agent is a mix of a framework event loop, a task queue, and a cloud function or container that you hope does not time out. That makes long-running, multi-step work fragile and forces teams to work around infrastructure rather than design the best workflow.

AgentCore Runtime gives each session a secure, isolated execution environment with support for long-running work measured in hours. Preview material emphasized execution windows of up to eight hours, with checkpointing so a session can recover gracefully from failures. That matters for complex work like claims adjudication, financial closing, supplier onboarding, or identity proofing, where an agent may need to wait on human approval, poll a batched system, or coordinate with another agent.

General availability added support for an Agent-to-Agent (A2A) protocol in the runtime, which opens the door to multi-agent systems where one runtime can safely hand off work to another. On November 4, 2025, AWS added a direct code deployment path alongside container-based deployment. That gives teams two practical modes. Upload a code package to move quickly at the start, then shift to container images once you stabilize dependencies and need tighter control. If you target ARM64 on Graviton, the starter toolkit smooths the build process so you can avoid wrestling with Dockerfiles on day one.

What does this look like in practice? Picture a trade surveillance agent for a bank. It ingests alerts, runs enrichment queries across internal systems, requests an analysis from a model, calls a policy tool, and creates tickets for human investigators. With a long-running session, the agent can keep its state across these steps, wait for a policy verdict, and checkpoint progress so a node failure does not lose context. If an identity token for a data source expires mid-investigation, Identity can refresh it without breaking the session. If the ticketing system times out, the runtime can retry with backoff and record that behavior in a trace. What once required a homegrown orchestration layer becomes a few configuration choices.
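To make the retry behavior concrete, here is a minimal sketch of retry with capped exponential backoff and jitter in plain Python. It is an illustration of the pattern, not AgentCore's API: `TransientToolError` and `call_with_backoff` are hypothetical names, and the attempt count and delays are assumptions chosen for the example.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientToolError(Exception):
    """Hypothetical error type for retryable failures, such as a tool timeout."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on TransientToolError with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientToolError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # The ceiling doubles each attempt and is capped at max_delay.
            # A random delay below the ceiling keeps parallel sessions
            # from retrying in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the function easy to test, and the same wrapper is a natural place to record each attempt in a trace.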

Two patterns change immediately when you adopt Runtime:

- Session-centric design. Instead of kicking off a new function for every step, you model a coherent session that persists state, enforces identity, and logs cleanly from start to finish.

- Policy-driven scale. Scaling becomes a matter of policy, since the runtime can spin up thousands of sessions in parallel while applying the same quotas, concurrency limits, and safety checks.
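A session-centric design can be sketched as a loop that checkpoints after every completed step and skips finished steps on resume. The sketch below is a simplified model of the idea, not the Runtime's actual checkpoint mechanism: `SessionState`, `load_session`, and `run_session` are hypothetical names, and a plain dict stands in for durable checkpoint storage.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Callable, Dict, List, Tuple

# A step is a (name, function) pair; the function mutates the session context.
Step = Tuple[str, Callable[[dict], None]]


@dataclass
class SessionState:
    session_id: str
    completed_steps: List[str] = field(default_factory=list)
    context: dict = field(default_factory=dict)


def load_session(store: Dict[str, str], session_id: str) -> SessionState:
    """Resume from the last checkpoint if one exists, otherwise start fresh."""
    saved = store.get(session_id)
    if saved is None:
        return SessionState(session_id=session_id)
    return SessionState(**json.loads(saved))


def run_session(
    state: SessionState, steps: List[Step], store: Dict[str, str]
) -> SessionState:
    """Run steps in order, checkpointing after each one that completes."""
    for name, step in steps:
        if name in state.completed_steps:
            continue  # finished before the last checkpoint, do not repeat
        step(state.context)
        state.completed_steps.append(name)
        # `store` is an in-memory stand-in for durable checkpoint storage.
        store[state.session_id] = json.dumps(asdict(state))
    return state
```

If a step raises partway through, the store still holds the state as of the last completed step, so a restarted worker can call `load_session` and continue from there.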

Memory: short term recall and long term context

Early agent projects often treat memory like a cache or a text file on disk. That can make demos look smart, but it usually creates correctness and compliance problems in production. AgentCore offers two directions. Use the managed short-term and long-term memory services for simplicity, or bring your own logic when you need control over extraction, summarization, retention, and redaction.

Short-term memory lets a session remember decisions and intermediate results so the model does not repeat expensive or risky work. Long-term memory supports durable context across sessions, such as customer preferences, policy constraints, or operational histories. The self-managed option lets teams plug in domain-specific rules for what to store and how to retrieve it. That matters in regulated environments where you must prove how a memory was formed, what fields were redacted, and when the memory expires.

A useful rule of thumb is to make memory a first class design choice:

- Interaction copilots. Favor short-term memory with retrieval against a vetted knowledge base. Keep retention windows tight and audit the fields that persist between sessions.

- Process agents. Define exactly what long-term facts you store and why. Capture provenance and redaction rules. Treat memory schemas and retention as code that security and data teams can review.
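Treating memory schemas and retention as code might look like the sketch below. It is an illustration under stated assumptions, not the AgentCore Memory API: `MemoryRecord`, `remember`, and `recall` are hypothetical names, and the single email pattern is a placeholder for whatever redaction rules your data team defines.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List

# Placeholder redaction rule: mask anything that looks like an email address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass(frozen=True)
class MemoryRecord:
    fact: str             # redacted text that is safe to persist
    source: str           # provenance: where the fact came from
    created_at: datetime
    ttl: timedelta        # retention window

    def expired(self, now: datetime) -> bool:
        return now >= self.created_at + self.ttl


def remember(raw_text: str, source: str, ttl: timedelta, now: datetime) -> MemoryRecord:
    """Redact before storing, and record provenance and retention with the fact."""
    redacted = EMAIL.sub("[REDACTED_EMAIL]", raw_text)
    return MemoryRecord(fact=redacted, source=source, created_at=now, ttl=ttl)


def recall(records: List[MemoryRecord], now: datetime) -> List[MemoryRecord]:
    """Return only memories that are still inside their retention window."""
    return [r for r in records if not r.expired(now)]


# Usage: a long-term fact with a 30-day retention window.
created = datetime(2026, 1, 1, tzinfo=timezone.utc)
record = remember(
    "Customer jane.doe@example.com prefers email invoices",
    source="crm:ticket-123",
    ttl=timedelta(days=30),
    now=created,
)
```

Because the schema, redaction rule, and retention window live in one reviewable place, security and data teams can audit them the same way they review any other change.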

Identity: agents that act like responsible users

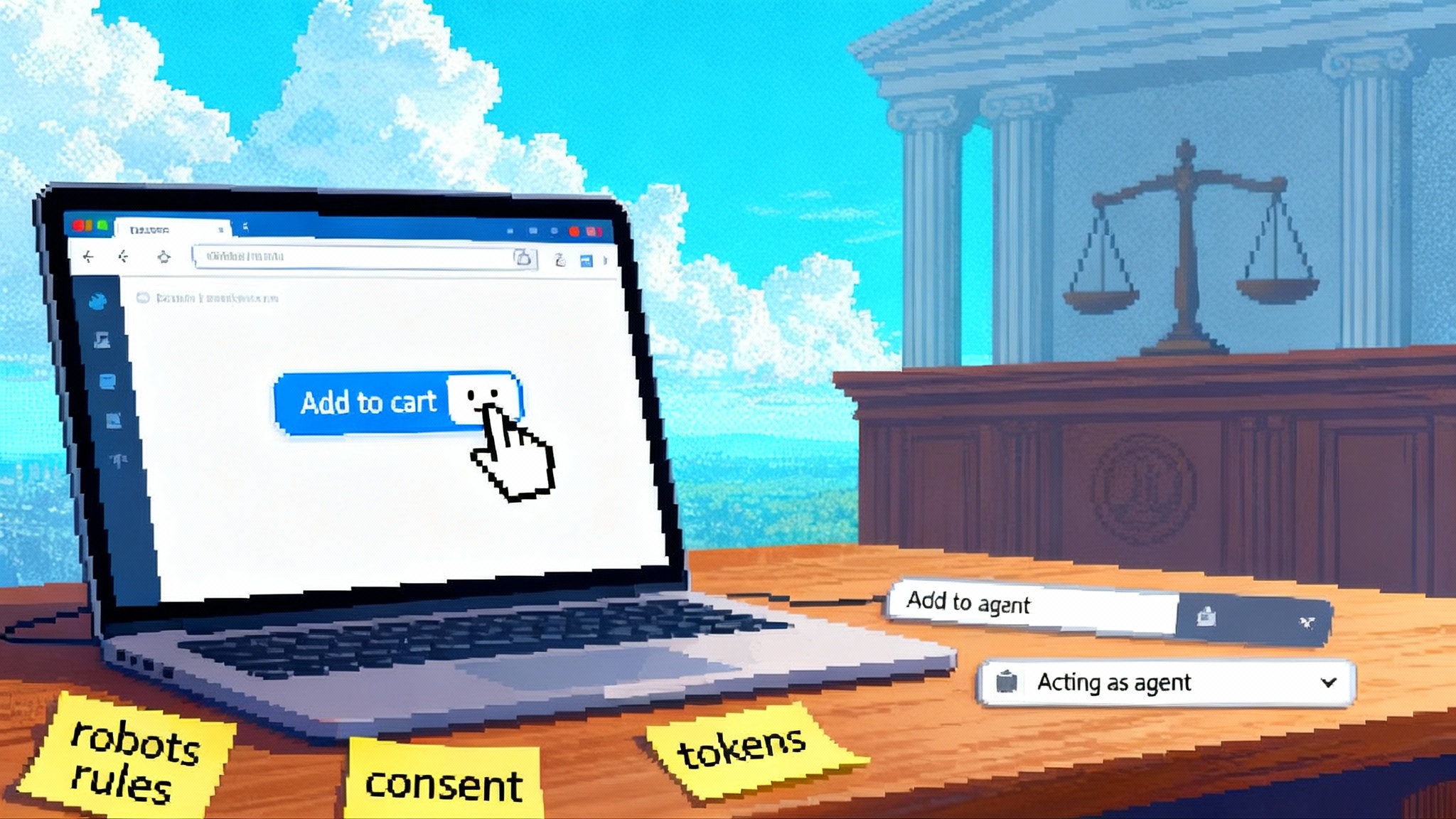

Agents need to act on behalf of someone. Sometimes that is a named user in your identity provider. Sometimes it is a service identity with constrained permissions. The hard part is making that work across many tools without scattering secrets.

AgentCore Identity handles both user identity and agent identity. It integrates with providers like Amazon Cognito, Microsoft Entra ID, and Okta, stores and rotates tokens securely, and allows the Gateway to enforce identity-aware authorization to tools. That means an agent sees and invokes only what it is allowed to use, with audit trails to prove it.

If you are thinking about least privilege, start here. Give your marketing analytics agent a narrow role for reading campaign data and writing to an approved dashboard. Give your finance agent a role that can read invoices and create draft payments but cannot release them without a human in the loop. Then verify those behaviors in traces.
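The two example roles above can be written down as data and checked in a few lines. This is a conceptual sketch only: `Role`, `authorize`, and the `tool:action` permission strings are hypothetical, and in a real deployment the enforcement would sit in Identity and the Gateway rather than in agent code.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Role:
    name: str
    allowed: FrozenSet[str]                      # permissions granted outright
    needs_approval: FrozenSet[str] = frozenset() # allowed only with a human decision


MARKETING_AGENT = Role(
    name="marketing-analytics-agent",
    allowed=frozenset({"campaigns:read", "dashboard:write"}),
)

FINANCE_AGENT = Role(
    name="finance-agent",
    allowed=frozenset({"invoices:read", "payments:create_draft"}),
    needs_approval=frozenset({"payments:release"}),
)


def authorize(role: Role, permission: str) -> str:
    """Return 'allow', 'needs_human_approval', or 'deny' (the default)."""
    if permission in role.allowed:
        return "allow"
    if permission in role.needs_approval:
        return "needs_human_approval"
    return "deny"
```

Deny-by-default is the important design choice here: anything not listed is refused, so adding a new tool never widens an existing agent's reach by accident.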

Observability: from mystery to measurable

Agent systems are probabilistic, which makes observability non-negotiable. AgentCore Observability provides traces from prompt to tool call to response, with logs and metrics landing in CloudWatch without custom plumbing. Because the telemetry is OpenTelemetry compatible, you can integrate with partners like Datadog, Dynatrace, and specialized agent observability tools like LangSmith or Langfuse.

The goal is not just pretty dashboards. It is operational guardrails. You can set service level objectives for task success rate, latency, and cost per task. You can break down variance by model choice, tool latency, and memory hits. When a session fails, you can replay from a checkpoint. When a model update changes behavior, you can compare traces and roll back. These are standard software practices brought to agents.
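As a rough sketch of what those service level objectives look like once task data is exported from traces, the snippet below computes success rate, p95 latency, and cost per task, then lists any breached targets. `TaskRun`, `percentile`, and `slo_report` are hypothetical names, and the record shape is an assumption rather than a CloudWatch or OpenTelemetry schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TaskRun:
    succeeded: bool
    latency_s: float
    cost_usd: float


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile, using integer math to avoid float rounding."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # ceil(pct * n / 100)
    return ordered[rank - 1]


def slo_report(
    runs: List[TaskRun],
    min_success_rate: float,
    max_p95_latency_s: float,
    max_cost_per_task_usd: float,
) -> Dict[str, object]:
    """Summarize a batch of task runs and list which SLO targets were missed."""
    if not runs:
        raise ValueError("need at least one task run")
    success_rate = sum(r.succeeded for r in runs) / len(runs)
    p95_latency = percentile([r.latency_s for r in runs], 95)
    cost_per_task = sum(r.cost_usd for r in runs) / len(runs)

    breaches = []
    if success_rate < min_success_rate:
        breaches.append("success_rate")
    if p95_latency > max_p95_latency_s:
        breaches.append("p95_latency_s")
    if cost_per_task > max_cost_per_task_usd:
        breaches.append("cost_per_task_usd")

    return {
        "success_rate": success_rate,
        "p95_latency_s": p95_latency,
        "cost_per_task_usd": cost_per_task,
        "breaches": breaches,
    }
```

Running the same report per model, per tool version, or per prompt version is one way to break down the variance described above.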

Gateway and built-in tools: standardized tool use

Agent tools have become the bottleneck. Every enterprise has hundreds of internal APIs and a growing list of external services. Instead of teaching each agent framework a new integration, AgentCore Gateway turns tools into discoverable endpoints that speak open protocols such as the Model Context Protocol (MCP). If you have an internal API for customer refunds or a vendor search system, the gateway can expose them as tools that any framework can use without custom adapters. The Browser tool and Code Interpreter add safe ways to handle web workflows and controlled code execution, which are two of the most abused patterns in ad hoc agent builds.

The payoff is faster onboarding. A compliance team can publish a policy tool once and have every agent use it. A sales operations team can expose a quoting tool with fine-grained access rules. Tool behavior becomes a product with versions and change logs, not a snippet someone copied from an old repo.
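For a sense of what "speaking an open protocol" means on the wire, the Model Context Protocol frames tool discovery and invocation as JSON-RPC 2.0 messages using the `tools/list` and `tools/call` methods. The sketch below only builds those request bodies. The tool name `issue_refund` and its arguments are hypothetical, and transport, authentication, and the Gateway endpoint itself are deliberately left out.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC requests carry a unique id per call


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request() -> dict:
    """Ask an MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool_request(name: str, arguments: dict) -> dict:
    """Invoke one tool by name with JSON-serializable arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


# Usage: discover tools, then call a hypothetical refund tool.
discover = list_tools_request()
refund = call_tool_request("issue_refund", {"order_id": "A-100", "amount_cents": 2500})
wire_body = json.dumps(refund)  # what a client would send over its transport
```

Because every framework that supports the protocol sends the same two message shapes, a tool published once behind the gateway does not need a per-framework adapter.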

Security and governance: policy that travels with the agent

AgentCore’s security model is opinionated in a good way. VPC and PrivateLink support put agent sessions and tools inside your network boundary, not on the public internet. CloudFormation resources and tagging let you treat agents like infrastructure as code with clear ownership and cost allocation. Identity-aware authorization and token vaults reduce the sprawl of secrets.

Governance is not just about blocking bad things. It is about proving good behavior. With AgentCore you can:

- Trace a decision from user input to model call to tool invocation to result, which helps with audits and debugging.

- Enforce least privilege with roles scoped per agent and per tool, which reduces blast radius.

- Define retention and redaction for memories and logs, which aligns with privacy obligations.

- Require human approval for specific actions such as payment releases or account changes, which keeps irreversible actions under controlled workflows.
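The human approval requirement can be modeled as a small gate that the agent calls instead of performing the action directly. This is a minimal sketch with hypothetical names (`ApprovalGate`, `Proposal`, `Decision`), and the in-memory `trace` list is a stand-in for whatever trace store you use.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class Decision(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Proposal:
    action: str
    payload: dict
    proposed_by: str
    proposal_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    decision: Decision = Decision.PENDING
    decided_by: Optional[str] = None


class ApprovalGate:
    """Agents propose; humans decide; only approved proposals execute."""

    def __init__(self) -> None:
        self._proposals: Dict[str, Proposal] = {}
        self.trace: List[dict] = []  # stand-in for a real trace store

    def propose(self, action: str, payload: dict, agent: str) -> Proposal:
        proposal = Proposal(action=action, payload=payload, proposed_by=agent)
        self._proposals[proposal.proposal_id] = proposal
        self.trace.append({"event": "proposed", "id": proposal.proposal_id,
                           "action": action, "by": agent})
        return proposal

    def decide(self, proposal_id: str, approver: str, approve: bool) -> None:
        proposal = self._proposals[proposal_id]
        if proposal.decision is not Decision.PENDING:
            raise ValueError("proposal already decided")
        proposal.decision = Decision.APPROVED if approve else Decision.REJECTED
        proposal.decided_by = approver
        self.trace.append({"event": proposal.decision.value,
                           "id": proposal_id, "by": approver})

    def execute(self, proposal_id: str, executor: Callable[[dict], object]) -> object:
        proposal = self._proposals[proposal_id]
        if proposal.decision is not Decision.APPROVED:
            raise PermissionError(f"{proposal.action} requires human approval")
        result = executor(proposal.payload)
        self.trace.append({"event": "executed", "id": proposal_id})
        return result
```

Keeping the proposal, the decision, and the execution in one ordered record is what lets an auditor reconstruct who asked for an irreversible action and who allowed it.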

The hardest challenge is often cultural. Teams must agree to treat agent systems like production services from day one. AgentCore makes that possible. It does not make it automatic.

A pragmatic migration playbook

Most teams are sitting on a pile of promising prototypes. Here is a step by step plan to move from notebooks to fleets.

- Inventory and classify. List agents and candidate use cases. Classify them by interaction pattern: interactive copilot, background batch, or tool automation. Note data sensitivity, required tools, expected latency, and whether the agent acts for a user or as a system principal.

- Pick one bounded pilot. Choose a workflow that matters but will not derail the company if it misbehaves. Define a crisp service level objective for success rate, latency, and cost per task. Decide upfront what is allowed to happen automatically and what always requires human approval.

- Choose a framework and lock the contract. AgentCore is framework agnostic, so pick what your team knows. LangGraph, CrewAI, or LlamaIndex are all fine choices. The important part is to define the contract between the framework graph and the runtime session: inputs, outputs, and how failures surface.

- Package the agent. Start with direct code deploy if iteration speed matters. Move to container-based deployment once the agent stabilizes or when you need system dependencies. If you package containers, target ARM64 on Graviton for cost and performance. Set conservative concurrency limits and timeouts on day one.

- Wire identity before tools. Create a service role for the agent and user roles for any human participants. If your tools support OAuth, integrate through Identity so the agent never sees raw secrets. If your tools are internal APIs, expose them via Gateway and restrict by role. Validate that traces show the expected principal for each tool call.

- Make memory a design choice. For interaction copilots, start with short-term memory and retrieval from a vetted knowledge source. For process agents, define exactly what long-term facts you store and why. Add retention windows and redaction rules. If you have domain-specific extraction logic, adopt the self-managed option so you can prove how a memory was formed.

- Instrument the golden path first. Add structured logging and traces for the main success path before you expand coverage. Record prompt versions, tool versions, and model identifiers in every trace. Set budgets for cost per task and alert when you exceed them.

- Add human in the loop where the stakes are high. For any action that changes money, inventory, or customer data, require explicit approval. Implement this as a tool that the agent calls to submit a proposed action. Store the proposal and the decision in the same trace as the agent’s run.

- Test failure and recovery. Kill the agent mid-run and confirm checkpoint recovery. Expire a token and confirm Identity refresh behavior. Force a tool 503 and confirm retry with backoff. Randomly delay the model response and confirm the session does not time out unexpectedly.

- Only then scale and diversify. Increase concurrency. Add more tools. Consider multi-agent patterns using agent-to-agent handoffs. Introduce canary deployments with model and prompt variants. Keep one rollback button within reach.
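The "lock the contract" step is easier to review when the contract is written as types. Below is a minimal sketch, assuming a hypothetical wrapper around whatever framework graph you chose. `AgentRequest`, `AgentResult`, `Status`, and `run_with_contract` are illustrative names, not part of AgentCore or any framework, and the metadata fields follow the playbook's advice to record the prompt version and model identifier with every run.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Status(Enum):
    OK = "ok"
    RETRYABLE_ERROR = "retryable_error"  # caller may retry the session
    FATAL_ERROR = "fatal_error"          # caller should stop and escalate


@dataclass(frozen=True)
class AgentRequest:
    session_id: str
    principal: str   # the user or service identity the agent acts for
    user_input: str


@dataclass(frozen=True)
class AgentResult:
    status: Status
    output: str = ""
    error: str = ""
    metadata: dict = field(default_factory=dict)


def run_with_contract(
    agent: Callable[[AgentRequest], str],
    request: AgentRequest,
    *,
    prompt_version: str,
    model_id: str,
) -> AgentResult:
    """Call the framework-specific agent and normalize how failures surface."""
    metadata = {
        "session_id": request.session_id,
        "principal": request.principal,
        "prompt_version": prompt_version,
        "model_id": model_id,
    }
    try:
        output = agent(request)
    except TimeoutError as exc:
        # Assumption for this sketch: timeouts are the retryable class.
        return AgentResult(Status.RETRYABLE_ERROR, error=str(exc), metadata=metadata)
    except Exception as exc:
        return AgentResult(Status.FATAL_ERROR,
                           error=f"{type(exc).__name__}: {exc}", metadata=metadata)
    return AgentResult(Status.OK, output=output, metadata=metadata)
```

With this boundary in place, swapping LangGraph for CrewAI changes only the function passed as `agent`, while everything downstream keeps reading the same result shape.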

Follow this playbook twice and you will notice the shift. Your team spends more time reasoning about workflow and policy, and less time fighting infrastructure.

How AgentCore fits the broader stack

AgentCore is part of a larger move toward standardized agent runtimes across clouds and data platforms. Google is emphasizing multi-agent orchestration and safe code execution in Vertex AI, covered in our look at "Vertex AI Agent Engine unlocks A2A." Data clouds are turning warehouses into operational substrates for agents, explored in "Snowflake Cortex Agents go GA." On the build side, teams are productizing how they assemble agents, which we unpack in "Agent Bricks production pipeline."

A common runtime for agents has three big consequences for the next year:

- Interoperable tools. With a gateway that speaks open protocols like the Model Context Protocol (MCP), a tool can be published once and used across frameworks. Vendors in analytics, support, and IT operations can ship tools as products rather than one-off connectors. Enterprises get governance and reuse.

- Portable state and policy. Memory and identity that travel with the agent make it possible to run the same workflow in different environments with the same behavior. You may prefer one model in a specific region and a different model elsewhere. If the runtime standardizes sessions and policy, the swap is practical.

- Clear operations contracts. Observability that looks like other production services means platform teams can set expectations that translate to the business. Incident response, dashboards, and cost controls become familiar. That familiarity lowers organizational friction to deploy agents in customer-facing processes.

Expect the first half of 2026 to bring more cross-vendor interoperability. Tool vendors will target MCP and adjacent standards. Frameworks will optimize for runtimes that offer isolation and long sessions without extra plumbing. Platform teams will write the first agent service level objectives that map to outcomes rather than token counts. The winners will be the teams that make their tools, policies, and memories explicit products.

What to watch next

There is plenty left to mature. The industry needs a portable agent state format so teams can migrate without rewiring every checkpoint. Policy versioning should be first class and diffable, the way we treat infrastructure as code. Observability will grow more opinionated about the events that matter for safety and compliance. Web workflows will need better primitives for bot attestation so agents can complete tasks without human babysitting while staying accountable.

Inside most companies, the biggest leap is organizational. Platform and security teams need a shared rubric for agent readiness. Product teams need clear boundaries for autonomy. Finance teams need a view of cost per task that maps to value. AgentCore offers the hooks to build that rubric and measure those outcomes.

The bottom line

AgentCore moves agent work from clever prototypes to disciplined operations. The runtime handles isolation and long sessions so workflows survive the real world. Memory and identity make decisions and actions traceable to the right context and the right principal. The gateway and built-in tools eliminate one-off integrations. Observability makes outcomes measurable and improvable.

If you have a backlog of promising demos, stop adding glue. Pick one workflow, adopt the runtime, wire identity and memory with intent, and put traces on every step. Treat the agent like a service. When the seams are standardized, you can finally focus on the work that makes your business competitive. That is the promise of AgentCore and a sensible path to bring agents into production at scale.