GitHub Agent HQ: Mission Control for the Coding Agent Era

GitHub reframes the repository as mission control for coding agents. With Agent HQ, AGENTS.md, a native MCP tool registry, and third party agent support, teams can orchestrate, govern, and measure software work at scale.

From repository to mission control

On October 28, 2025, GitHub introduced Agent HQ, a product that treats the repository as the coordination point for humans and software agents. In GitHub’s framing, Agent HQ is where you assign, steer, and verify the work of multiple coding agents across GitHub, Visual Studio Code, mobile, and the command line. The launch also previewed support for third party coding agents and enterprise controls for identity, policy, and budgets. If models are the engines and tools are the attachments, orchestration is the transmission. Agent HQ is that transmission for code work, and it lives where your issues, branches, and pull requests already live. For background, see Introducing Agent HQ on the GitHub blog.

Why orchestration matters now

The last two years have been about bigger models and longer context. The next year will be about dependable execution. Orchestration decides which agent should do what, with which permissions, using which tools, in what order, under which guardrails. It is the difference between a clever assistant and a reliable teammate.

Think of orchestration as the missing layer that turns model power into shipped code. Without it, prompts sprawl, context gets lost, and teams rely on ad hoc chat threads. With it, plans become explicit, identity is first class, and change flows through the same review and merge rules that already govern your repo.

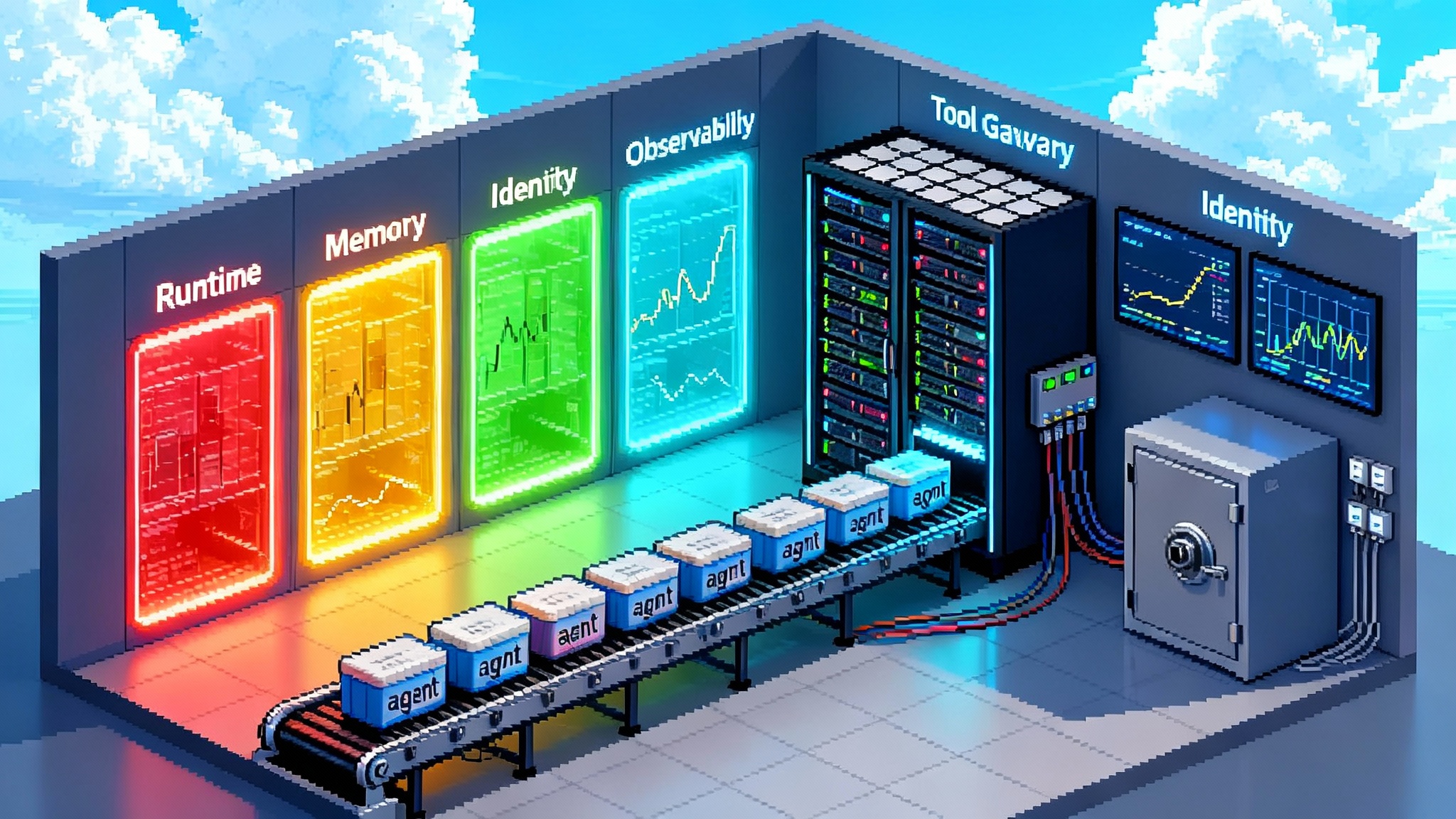

What Agent HQ actually includes

Agent HQ is not only a new UI. It combines four pieces that together turn a repository into a multi agent runtime:

- Mission control for multi agent tasks. Assign one or more agents to scoped tasks, run them in parallel, and track progress with the clarity of a continuous integration dashboard.

- AGENTS.md as a repo native contract. Define conventions, commands, and guardrails in Markdown so guidance travels with the code and evolves under version control.

- A native Model Context Protocol registry. Expose approved tool servers in Visual Studio Code so agents can discover and use capabilities like design, billing, monitoring, and documentation without bespoke adapters.

- A path for third party agents. Use agents from multiple vendors under one roof while keeping identity, policy, budget, and observability consistent.

Together, these pieces place GitHub at the center of the agent workflow. The unit of planning is not a chat reply. It is a proposed software change with a plan, tests, and an audit trail.

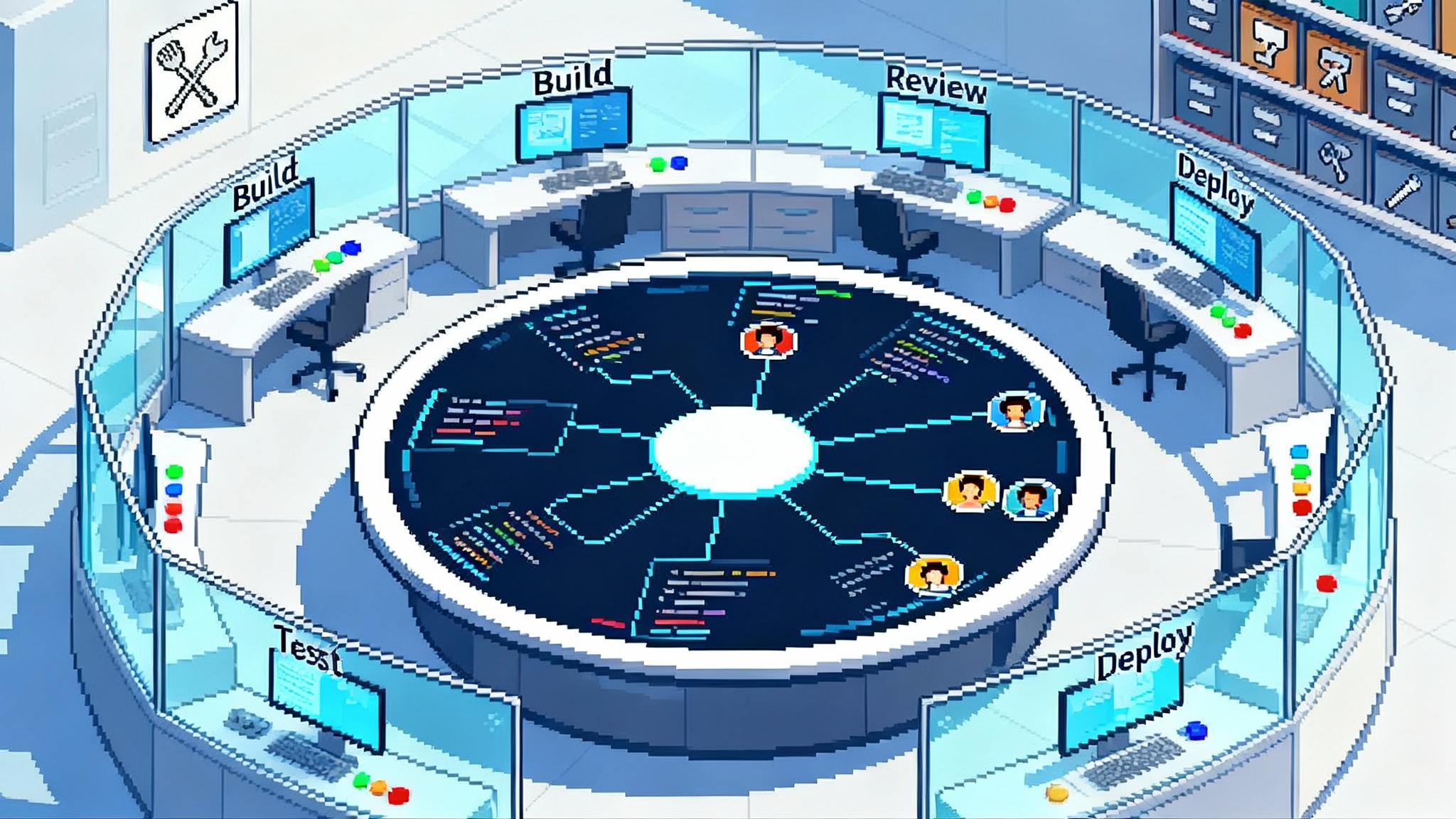

Mission control in practice

Mission control functions like an operations room for development work.

- Plan the work. Choose the target branch, required checks, and acceptance criteria. Keep plans short and testable. Tie each step to an owner, human or agent.

- Run with permissions. Each agent has a distinct identity with scopes that match the task. Branch protections and code owners remain in charge. Agent created pull requests follow the exact same rules as human ones.

- Compare and pause. Run two agents against the same issue to compare plans. Pause a run that starts to loop. Reassign a task without losing history.

- Observe outcomes. Track completion rate, human intervention, review time, and policy triggers. These metrics turn agent experiments into work you can hold accountable, the same way you would for a teammate.

The benefit is not only speed. Mission control makes the workflow legible. You can answer who changed what, why they changed it, which tools they used, and which policies allowed it to proceed.

AGENTS.md as the shared contract

AGENTS.md is a plain Markdown file that encodes how work gets done in your repo. It is the README for nonhuman collaborators. It can specify build steps, test commands, coding standards, naming patterns, security guardrails, and review checklists. Because it lives in version control, it stays in sync with code and propagates to forks.

The format has gained momentum as a community effort with a reference specification and examples. That matters because every agent needs a predictable place to discover ground rules. For details, see the AGENTS.md open format repository.

A practical mental model helps. When you onboard a new teammate to fix flaky tests, you hand them a starter packet with build steps, test shims, allowed libraries, and examples of good pull requests. AGENTS.md is that packet, kept current by the same review practices you already use for code.

Patterns that make AGENTS.md effective

- Keep instructions specific and testable. Replace “write good tests” with “use table driven tests for handlers and mock network calls”.

- Localize guidance in monorepos. Put an AGENTS.md in each package and keep the root file short. Cross link to the package files.

- Encode constraints, not just preferences. If tests must not hit the network, say so and include a passing example.

- Align policy with the contract. If you require a pull request checklist, enforce it with a repository rule and keep the checklist in AGENTS.md.
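The monorepo pattern above raises a practical question: which AGENTS.md applies to a given file? A minimal sketch follows, assuming the convention that the closest file up the directory tree takes precedence. The function name and the example paths are hypothetical, and a real agent may resolve guidance differently.

```python
from pathlib import PurePosixPath
from typing import Iterable, Optional


def nearest_agents_file(changed_file: str, agents_files: Iterable[str]) -> Optional[str]:
    """Return the AGENTS.md closest to changed_file, walking up toward the repo root.

    Paths are repo-relative and use forward slashes. The "closest file wins"
    rule is an assumption made for this sketch.
    """
    known = {PurePosixPath(p) for p in agents_files}
    current = PurePosixPath(changed_file).parent
    while True:
        candidate = current / "AGENTS.md"
        if candidate in known:
            return str(candidate)
        if current == current.parent:  # reached the repo root
            return None
        current = current.parent


if __name__ == "__main__":
    files = ["AGENTS.md", "packages/api/AGENTS.md"]
    print(nearest_agents_file("packages/api/handlers/user.py", files))
    print(nearest_agents_file("packages/web/src/app.ts", files))
```

With a lookup like this, the root file can stay short because package level files answer most questions first.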

Model Context Protocol tools, natively discoverable

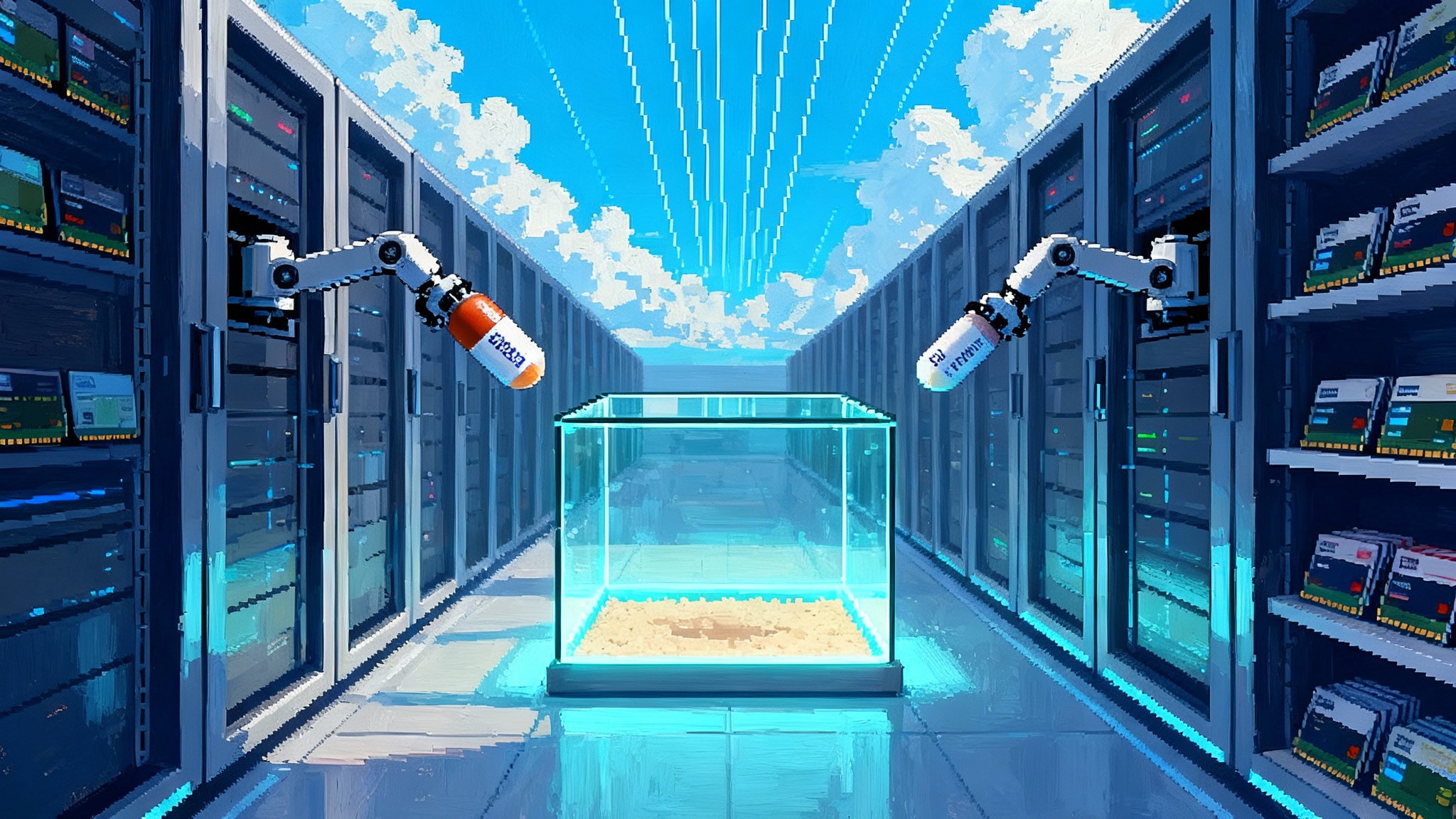

Tools are where agents become useful. Model Context Protocol, often shortened to MCP, standardizes how agents discover and invoke capabilities like Stripe for billing, Figma for design assets, Sentry for error context, Notion for docs, and more. GitHub’s native MCP registry in Visual Studio Code exposes approved servers so teams can enable specialized capabilities without embedding one off adapters in every agent.

This unlocks scale. A payments team can bless the Stripe server with scopes that match staging and production. A design systems group can enable read access to Figma for refactor work only. Security can restrict destructive tools to sandboxes. Because the registry is part of the same environment where agents run, setup becomes a policy decision, not a bespoke integration project.
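To make "setup becomes a policy decision" concrete, here is a minimal sketch of an allowlist check. It is not the registry's actual data model or API. The `ApprovedServer` type, the server names, and the environment labels are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class ApprovedServer:
    """Hypothetical policy entry for one approved MCP server."""
    name: str
    environments: FrozenSet[str]  # where agents may use this server
    allow_write: bool = False     # read only unless explicitly granted


def is_call_allowed(registry: Dict[str, ApprovedServer], server: str,
                    environment: str, wants_write: bool) -> bool:
    """Deny by default: unknown servers, unlisted environments, and
    ungranted write access are all rejected."""
    entry = registry.get(server)
    if entry is None:
        return False
    if environment not in entry.environments:
        return False
    if wants_write and not entry.allow_write:
        return False
    return True


# Illustrative policy only; names and scopes are made up.
REGISTRY = {
    "stripe": ApprovedServer("stripe", frozenset({"staging"}), allow_write=True),
    "figma": ApprovedServer("figma", frozenset({"staging", "production"})),
}
```

The deny by default shape is the point of the sketch: adding a capability means adding one reviewed entry, not writing a new adapter.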

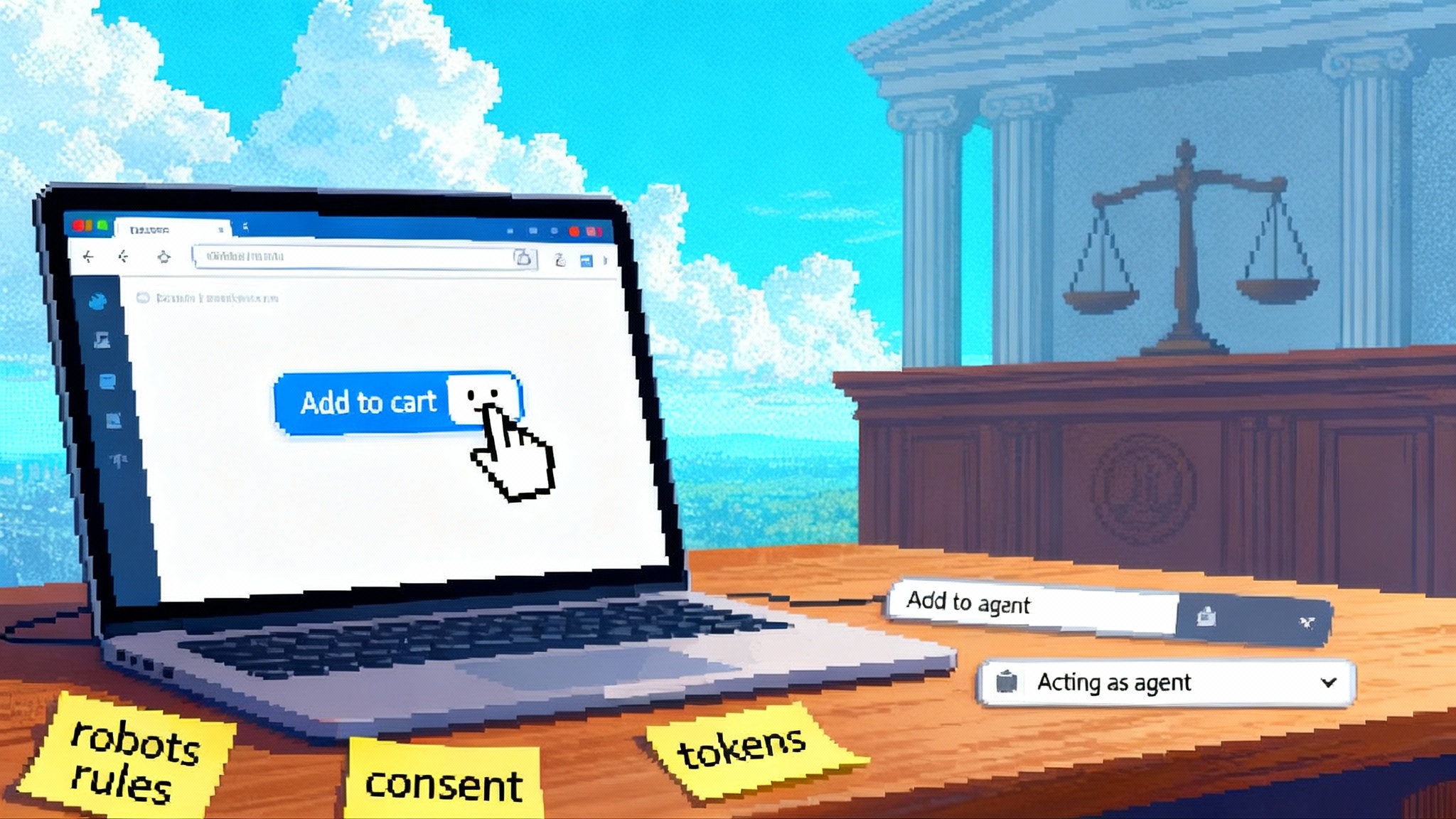

Third party agents under one roof

GitHub has set the expectation that agents from multiple vendors will be usable inside Agent HQ, likely as part of paid Copilot subscriptions. That signals two things. First, GitHub is not trying to win through model lock in. Second, GitHub wants to be the neutral, governed place where teams orchestrate agent work regardless of who built the agent or the model behind it. The platform controls identity, policy, budgets, and observability. Vendors compete on planning quality, tool use, and code outcomes.

Repositories become multi agent runtimes

When you put these pieces together, a repository starts to look like a small company. It has roles, runbooks, policies, and a queue of work. Agents are staff who check in changes under their own identities. Mission control routes tasks, AGENTS.md trains them, the MCP registry equips them, and your pull request rules hold everyone to the same standards.

Consider a concrete scenario. Your team needs to migrate logging across five services and update dashboards.

- Capture the plan in mission control, including branch strategy and checks.

- Select two agents, one focused on application code, one focused on observability tools.

- Equip both through the MCP registry with access to logging libraries and monitoring systems.

- Rely on AGENTS.md rules like “prefer structured JSON logs” and “add a table driven test for each handler”.

- Run tasks in parallel, compare plans and diffs, and review two pull requests per service.

This is orchestration in action. It is very different from asking one chatbot to “make the code better”.

How developer workflows change

- Planning moves ahead of execution. Mission control’s plan view turns vague prompts into step by step tasks with owners and acceptance criteria. That reduces rework and makes agent work auditable.

- Pull requests become the contract. Agents propose changes through normal pull requests with linked issues, tests, and explanations. Reviewers can request plan updates or assign a different agent to revise.

- Parallelism becomes normal. A single maintainer can coordinate multiple agents on related tasks without context fatigue. This favors small, well scoped branches and pushes teams to codify conventions in AGENTS.md instead of in chat logs.

- Tools are permissioned once, used many times. The MCP registry and enterprise policies turn integration into configuration. Teams save engineering effort and reduce risk by centralizing scopes, identities, and audit trails.

- Metrics become the lever. With identity and policy first class, you can track acceptance rate per agent, average review time per change type, and the effect of stricter policies on lead time.

Governance and risk, handled as part of the flow

- Identity and least privilege. Give each agent a distinct identity tied to your organization. Grant repository and environment scopes that match the task. Use environment secrets and revoke unused access on a schedule.

- Policy as code. Enforce branch protections and code owners for agent identities. Write repository rules that block merges if required tests, security scans, or change management steps are missing. In your policy docs, point reviewers to where AGENTS.md lives in each repository.

- Budget controls and rate limits. Track spend per agent and per project. If a run starts to loop, mission control should pause it and require human approval to continue. Treat budget ceilings like cloud compute limits.

- Observability. Emit events for agent actions and route them to your monitoring stack. Create dashboards that show cycle time, rework rate, and defect escapes for agent changes compared with human only changes.

- Data boundaries. Use sandboxes for destructive tools. Make test data the default. Require a second reviewer for any agent change that touches secrets, access control, or billing code.
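The second reviewer rule in the last item can be expressed as a small check that a merge gate or bot might run. This is a sketch under stated assumptions: the glob patterns, the function name, and the idea of computing a required approval count are illustrative, not a GitHub feature.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# Hypothetical patterns for code that touches secrets, access control, or billing.
SENSITIVE_PATTERNS = (
    "secrets/*", "*/secrets/*",
    "auth/*", "*/auth/*",
    "billing/*", "*/billing/*",
)


def touches_sensitive_code(changed_paths: Iterable[str]) -> bool:
    """True if any repo-relative path matches a sensitive pattern."""
    return any(
        fnmatchcase(path, pattern)
        for path in changed_paths
        for pattern in SENSITIVE_PATTERNS
    )


def required_approvals(changed_paths: Iterable[str], author_is_agent: bool,
                       baseline: int = 1) -> int:
    """Agent changes to sensitive code need one approval more than the baseline."""
    if author_is_agent and touches_sensitive_code(changed_paths):
        return baseline + 1
    return baseline
```

Keeping the pattern list in the repository, next to AGENTS.md, lets the rule evolve under the same review process as the code it protects.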

A 30 day pilot with measurable KPIs

A one month rollout gives you evidence without derailing roadmaps. Below is a pragmatic plan.

Week 1: scope and guardrails

- Choose two repositories with steady issue flow and strong tests. Prefer one service and one front end or library.

- Create AGENTS.md with sections for build commands, test commands, style rules, security footguns, and a pull request checklist. Add a short plan template at the top.

- Enable mission control for a small group of maintainers. Create distinct agent identities with least privilege. Apply branch protections and code owner rules to agent pull requests.

- In Visual Studio Code, enable the MCP registry. Approve two read only servers that match your pilot use cases, for example Sentry for error context and a docs tool for changelog updates.

- Define KPIs: pull request lead time, review time, change failure rate, rework rate, number of agent tasks completed, and agent acceptance rate without human edits.

Week 2: first tasks and parallel runs

- Select five well scoped issues per repo. Examples include converting logging to a new format, updating a deprecated library, or refactoring a component with tests.

- Run two agents in parallel on similar tasks to compare plans and outputs. Require both to attach their plan to the pull request.

- Track identity, policy triggers, and manual intervention. Enforce that agents must add or update tests with every change.

- Hold a daily review where maintainers score clarity of plans, code quality, and test completeness.

Week 3: raise the stakes and add tools

- Expand to fixes that touch more files, such as adding input validation or improving error handling along a critical path.

- Approve one write capable tool in the MCP registry in a staging sandbox, for example a deployment tool for test environments or a documentation updater.

- Introduce cost tracking per agent. Set a budget ceiling and alert when 80 percent is reached.
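The budget ceiling and 80 percent alert can be sketched in a few lines. The function name and the three status labels are assumptions for illustration. Amounts are integer cents so the threshold comparison stays exact.

```python
def budget_status(spent_cents: int, ceiling_cents: int, alert_percent: int = 80) -> str:
    """Classify spend against a ceiling.

    Returns "pause" at or above the ceiling, "alert" at or above the alert
    threshold, and "ok" otherwise. Integer math avoids float rounding at the
    exact 80 percent boundary.
    """
    if ceiling_cents <= 0:
        raise ValueError("ceiling_cents must be positive")
    if spent_cents >= ceiling_cents:
        return "pause"
    if spent_cents * 100 >= ceiling_cents * alert_percent:
        return "alert"
    return "ok"


if __name__ == "__main__":
    # A hypothetical agent with a 500.00 ceiling that has spent 400.00.
    print(budget_status(40_000, 50_000))
```

Treating "pause" as a hard stop that needs human approval mirrors how the pilot handles runs that start to loop.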

Week 4: hardening and executive readout

- Introduce a security or compliance task, such as applying a new secure configuration or updating a dependency with known vulnerabilities. Require additional review.

- Compare agent metrics against a human only control set from the same month using comparable issue types.

- Hold a blameless postmortem on any regressions. Update AGENTS.md with new guardrails and examples.

- Produce a one page readout with goals such as:

  - Pull request lead time reduced by 20 percent or more.

  - Review time reduced by 25 percent or more through clearer plans and test evidence.

  - Agent acceptance rate without edits above 60 percent for well scoped issues.

  - Change failure rate no worse than the human baseline. If worse, list fixes to AGENTS.md and policies.

  - Unit test coverage delta positive on agent changes.

  - Cost per merged change compared with human only baseline, including review time savings.

If targets are missed, keep the pilot in Week 4 until they are met. The goal is not speed at any cost. The goal is predictable, traceable progress at a lower cost per unit of value.
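The readout goals are simple enough to check mechanically. The sketch below assumes you have already computed the same metrics for the agent pilot and the human only control set. The dictionary keys and function names are hypothetical. Cost per merged change is left out because the plan sets it as a comparison, not a threshold.

```python
from typing import Dict


def percent_reduction(baseline: float, pilot: float) -> float:
    """Reduction of pilot relative to baseline, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - pilot) / baseline * 100.0


def evaluate_pilot(human: Dict[str, float], agent: Dict[str, float]) -> Dict[str, bool]:
    """Check each Week 4 goal; True means the target was met."""
    return {
        "lead_time": percent_reduction(
            human["lead_time_hours"], agent["lead_time_hours"]) >= 20.0,
        "review_time": percent_reduction(
            human["review_time_hours"], agent["review_time_hours"]) >= 25.0,
        "acceptance": agent["acceptance_without_edits"] > 0.60,
        "change_failure": agent["change_failure_rate"] <= human["change_failure_rate"],
        "coverage": agent["coverage_delta_points"] > 0,
    }


def missed_targets(results: Dict[str, bool]) -> list:
    """Names of goals that were not met, sorted for a stable readout."""
    return sorted(name for name, met in results.items() if not met)
```

An empty list from `missed_targets` means the pilot can close. Anything else names what to fix in AGENTS.md or policy before the next review.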

Where Agent HQ fits in the ecosystem

Agent HQ is part of a broader shift toward real runtimes for agent work. Platform teams will recognize echoes of service meshes and CI pipelines, only now applied to code planning and change control.

- If you are exploring enterprise wide agent ops, compare GitHub’s approach with AWS AgentCore, which is positioned as an enterprise runtime. Both emphasize policy, identity, and standardized tooling.

- If you care about turning agent output into a reliable flow of changes, look at the Agent Bricks production pipeline. Its emphasis on packaging and review complements mission control.

- If your use cases require stronger execution control, review how Vertex AI Agent Engine handles execution. It highlights code execution and agent to agent patterns that pair well with GitHub’s governance layer.

These comparisons are not either or. Many organizations will mix platforms based on language ecosystems, compliance needs, and tool preferences. What matters is that orchestration, identity, and policy become first class across your stack.

Metrics that matter for leaders

Leaders do not need every diagram. They need a small set of metrics that predict quality and throughput.

- Acceptance rate without edits. Percentage of agent pull requests merged without human code changes. Track per agent and per change type.

- Lead time to merge. Time from plan approval to merged pull request. Watch how policy changes and tool access affect this number.

- Review time per pull request. A proxy for plan clarity and test evidence. Expect it to drop as AGENTS.md matures.

- Change failure rate. Post merge regressions tied to agent changes. Compare to a human baseline and investigate deltas.

- Rework rate. How often humans request plan revisions or code rewrites. Use this to refine AGENTS.md and tool scopes.

- Cost per merged change. Include model calls, tool usage, and reviewer time. Favor metrics per category of work, not overall averages.
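Three of these metrics fall out of a simple list of pull request records. The sketch below is a starting point rather than a reporting tool. `AgentPullRequest` is a hypothetical record you would export from your own tooling, not a GitHub API type. It also assumes the acceptance rate denominator is every agent pull request opened, merged or not.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional, Sequence


@dataclass(frozen=True)
class AgentPullRequest:
    """Hypothetical record for one agent pull request."""
    agent: str
    plan_approved_at: datetime
    merged_at: Optional[datetime]  # None if the pull request was never merged
    human_code_edits: int          # code changes made by people before merge
    caused_regression: bool        # a post merge regression was traced to it


def summarize(prs: Sequence[AgentPullRequest]) -> dict:
    """Acceptance without edits, median lead time, and change failure rate."""
    merged = [pr for pr in prs if pr.merged_at is not None]
    if not merged:
        raise ValueError("need at least one merged agent pull request")
    clean = [pr for pr in merged if pr.human_code_edits == 0]
    lead_hours = [
        (pr.merged_at - pr.plan_approved_at).total_seconds() / 3600
        for pr in merged
    ]
    return {
        "acceptance_without_edits": len(clean) / len(prs),
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": sum(pr.caused_regression for pr in merged) / len(merged),
    }


if __name__ == "__main__":
    start = datetime(2025, 11, 3, 9, 0)
    sample = [
        AgentPullRequest("agent-a", start, start + timedelta(hours=10), 0, False),
        AgentPullRequest("agent-b", start, None, 0, False),
    ]
    print(summarize(sample))
```

To follow the advice about categories, group the records by agent or change type first and call `summarize` once per group instead of once overall.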

Pitfalls to avoid

- Vague prompts and vague plans. If a plan is not testable, the pull request will be noisy. Require acceptance criteria up front.

- Global secrets and wide scopes. Least privilege is non negotiable. Use environment secrets and time boxed grants.

- Chat only guidance. Codify conventions in AGENTS.md and enforce them with repository rules, not reminders in Slack.

- Skipping the control group. Always compare to a human only baseline so you can defend the business case.

- Ignoring cost alerts. Pausing runaway loops early saves time and money.

What this means for the next year

- Teams that capture conventions in AGENTS.md and enforce them through policy will scale agents without chaos. Their repos will behave like well run shops with clear playbooks.

- Vendors that package expertise as agents plus MCP tools will find buyers. Mission control gives them a storefront with measurable outcomes.

- Platform teams will gain influence. Curating tools, shaping AGENTS.md templates, and setting policy will become strategic work that improves developer productivity and reduces risk.

The bottom line

Agent HQ is not a new chatbot. It is a control plane for software work. Mission control coordinates tasks. AGENTS.md teaches agents how your shop operates. The MCP registry equips them with safe, approved tools. Third party support widens the talent pool. Together, these pieces promote the repository from storage to studio, a place where many hands can work in parallel without stepping on each other.

If you lead an engineering team, start a 30 day pilot now. Treat agents like teammates with identities, budgets, and rules. Measure their output the same way you measure human work. The organizations that learn to orchestrate will ship faster with fewer surprises and will do so with a traceable record of how every change came to be.