GitHub Copilot Agent Goes GA, DevOps Adds a New Teammate

GitHub’s autonomous coding agent for Copilot is now generally available, bringing draft pull requests, auditability, and enterprise guardrails to the software lifecycle. Pilot it in 30 days and measure real impact.

The news and why it matters

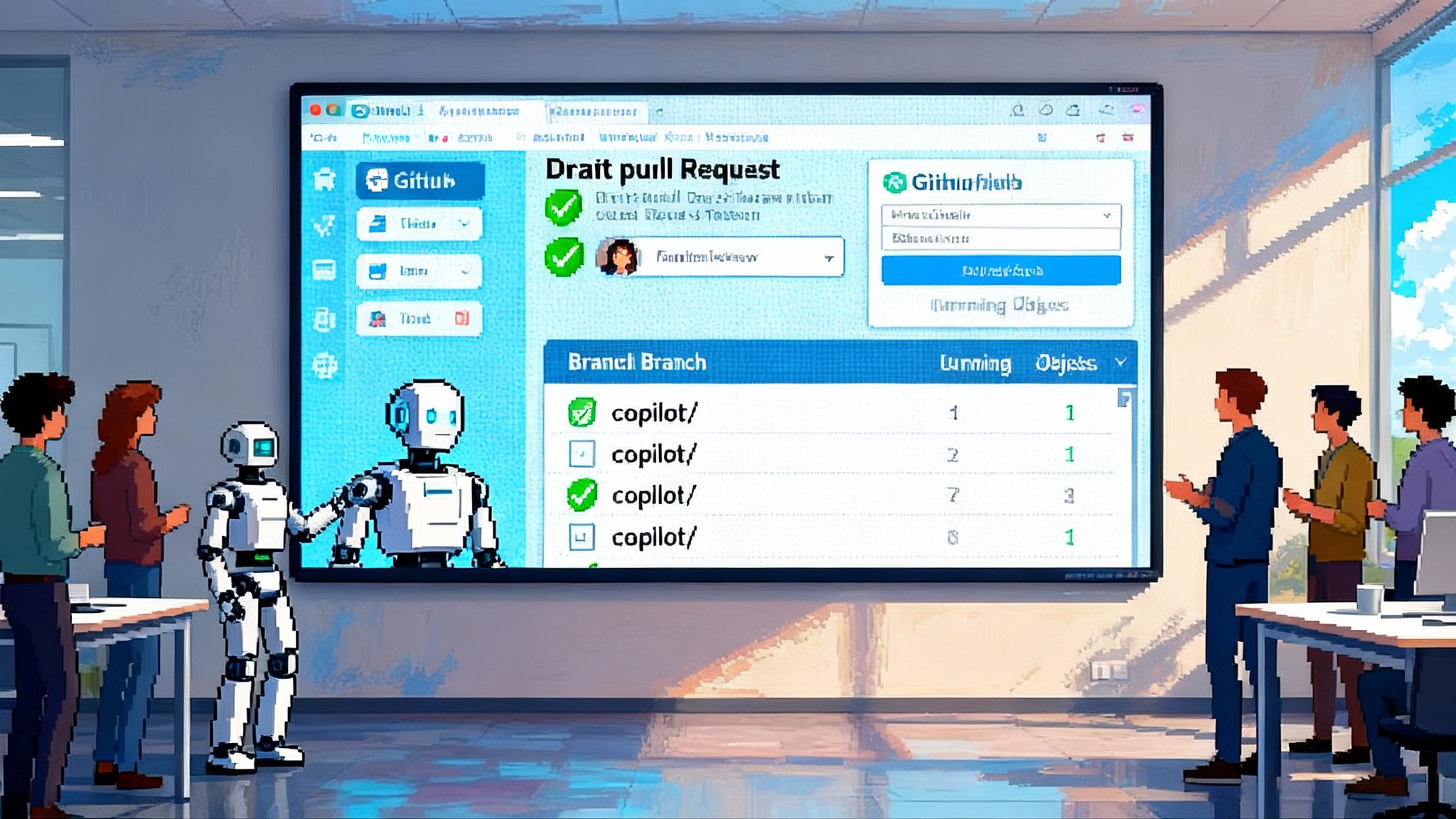

On September 25, 2025, GitHub’s autonomous coding agent for Copilot reached general availability. The agent can take a task, work in the background, and return a draft pull request for you to review. It behaves like a teammate who follows your rules, only it runs inside GitHub’s infrastructure and respects your controls. That combination is the breakthrough. It elevates agents from novelty to a standard part of a modern software team. For the full scope of capabilities, see GitHub’s announcement, “Copilot coding agent is now generally available.”

Think of it as adding a junior developer who documents what they did and never bypasses your gates. The practical effect is that teams can move routine code tasks off the critical path while keeping risk inside a managed boundary.

How the coding agent actually works

- You assign a task by labeling or assigning an issue, by using the agents panel in GitHub, or by asking from your editor.

- The agent spins up an ephemeral environment powered by GitHub Actions. It checks out the repository, runs tests and linters, and makes scoped changes.

- It opens a draft pull request on a dedicated branch, attaches session logs, and asks you for review. You can comment in the pull request to request changes, and it will iterate.

- When you approve, your normal continuous integration and deployment gates run. If you require more checks, the agent waits.

Each step leaves an artifact you already understand: issues, branches, pull requests, and workflow logs. There is no side channel to chase.

Why this is different from vibe coding in an editor

Many teams have tried in-editor chat to edit code automatically. That feels helpful in the moment, yet it often breaks down in production work. Editor-only flows tend to be ephemeral and opaque. You paste a prompt, accept edits across many files, and now you own whatever happened. There is little audit trail. Controls are weak. It is easy to overtrust and hard to review.

The coding agent flips the default by living where your software lifecycle already lives. Changes arrive as draft pull requests. Reviews are mandatory, not optional. Tests and rulesets stand between proposal and merge. If you use required reviewers, code owners, or rules for protected branches, the agent must meet them. If your team tracks compliance in pull request metadata, the agent produces that metadata. In short, it turns autonomy into something auditable and reversible.

This shift mirrors a broader pattern in the agent ecosystem, where agents become dependable once they plug into real workflows and policy engines. We covered a similar inflection point when Claude Sonnet matured and pushed agents from demos to dependable work.

The safety rails that make autonomy safe

GitHub designed the agent to work inside the protections you already enforce:

- Draft pull requests only. The agent cannot mark ready for review, cannot approve, and cannot merge. A human must do that.

- Limited branch access. It writes to a prefixed branch and respects branch protection and required checks.

- Workflow gating. Continuous integration does not run on the agent’s draft pull request until an authorized human approves. That blocks premature execution of pipelines that touch secrets or production.

- Ephemeral environment. The agent runs in a temporary Actions runner, then shuts down. It does not persist local credentials.

- Scoped internet access. A firewall limits what the agent can reach unless you configure exceptions.

- Attribution. Commits include co-authoring markers that tie work back to the human who delegated it, which helps with audit and ownership.

If you want the detailed list of guardrails and constraints, start with About GitHub Copilot coding agent.
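The attribution rail can be spot-checked from commit messages. The sketch below assumes the co-authoring markers take the form of Git's standard `Co-authored-by: Name <email>` trailer; the function name and the sample message are hypothetical, and this is an illustration rather than GitHub's own tooling.

```python
import re

# Git's conventional co-author trailer: "Co-authored-by: Name <email>".
# Assumption: the agent's commits use this trailer for the delegating human.
CO_AUTHOR_RE = re.compile(
    r"^Co-authored-by:[ \t]*(?P<name>.+?)[ \t]*<(?P<email>[^<>]+)>[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def co_authors(commit_message: str) -> list[tuple[str, str]]:
    """Return (name, email) pairs for every co-author trailer in the message."""
    return [
        (m.group("name"), m.group("email"))
        for m in CO_AUTHOR_RE.finditer(commit_message)
    ]


if __name__ == "__main__":
    # Hypothetical commit message for illustration only.
    message = (
        "Add idempotency keys to refund endpoint\n"
        "\n"
        "Co-authored-by: Ada Example <ada@example.com>\n"
    )
    print(co_authors(message))
```

A commit with no trailer returns an empty list, which an audit script could flag for follow-up.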

Where this lands in the stack

It helps to place the coding agent next to familiar tools:

- Issues and backlog. You use labels to route work to the agent. It is strongest on contained tasks such as bug fixes, small features, test coverage, refactors, and documentation.

- Pull requests. The agent is a consistent draft pull request author. You review, request changes, or close it like any other contribution.

- Actions. The agent’s compute lives here. You can preinstall dependencies, configure larger runners, and enforce timeouts through a dedicated setup workflow.

- Policies. Enterprise and organization controls decide who can use the agent and where. Repository level settings can opt specific codebases in or out.

This is not a sidecar tool. It is a first class contributor operating under your existing rules. The approach aligns with other enterprise oriented agent platforms, such as the way AWS AgentCore’s September update makes agents enterprise native.

From pilot to production in 30 days

The fastest path to value is to pick a clear slice of work, configure the rails, and measure outcomes. Use this playbook as a template and adapt names and numbers to your environment.

Week 1: Foundation and guardrails

Objectives

- Define scope. Choose two to four repositories with good test coverage and an active backlog. Aim for services with stable dependencies and clear code owners.

- Turn on the feature. Enable the Copilot coding agent policy for a pilot group. Restrict to selected repositories at first.

- Configure the sandbox. Add a `copilot-setup-steps.yml` workflow that installs dependencies, seeds test data, and sets timeouts. Prefer standard Ubuntu runners. If builds are heavy, configure larger GitHub hosted runners for the copilot environment.

- Lock down branches. Require pull request reviews from code owners. Keep required status checks. Disallow force pushes on the default branch.

- Establish labels and routing. Create labels like `agent ok`, `agent docs`, and `agent test`. Make your intake rule simple. Only issues with `agent ok` are eligible.
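The intake rule is simple enough to express as a single predicate. This is a minimal sketch, assuming issue labels arrive as plain strings; the function name and the case-insensitive matching are illustrative choices, not part of GitHub's product.

```python
# The one label that makes an issue eligible, per the intake rule above.
ELIGIBLE_LABEL = "agent ok"


def is_agent_eligible(issue_labels: list[str]) -> bool:
    """Return True only when the issue carries the `agent ok` label.

    Other agent-related labels (for example `agent docs`) describe the kind
    of work but do not grant eligibility on their own.
    """
    normalized = {label.strip().lower() for label in issue_labels}
    return ELIGIBLE_LABEL in normalized


if __name__ == "__main__":
    print(is_agent_eligible(["bug", "agent ok"]))
    print(is_agent_eligible(["agent docs"]))
```

Keeping eligibility to one label means a reviewer can answer "why did the agent pick this up?" by looking at the issue alone.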

Deliverables

- A short policy doc in the repository wiki that explains what the agent will and will not do, how to request it, and how to escalate.

- A review template that asks for risk level, change scope, and rollback notes.

Week 2: First tasks and feedback loop

Objectives

- Seed 20 to 30 issues tagged `agent ok`. Mix small bug fixes, test gaps, and minor refactors. Avoid database migrations and public interfaces at first.

- Time box the agent. Set a per task budget for Actions minutes and a maximum runtime. If it runs out of budget, it stops and reports.

- Observe the session logs. Ask the agent to explain why it made a change. Request at least one iteration per pull request to test the loop.

Deliverables

- First batch of draft pull requests authored by the agent. Request at least one iteration on half of them to exercise the feedback path.

- A daily review standup where engineers flag any surprising behavior and capture learnings.

Week 3: Expand scope with rules

Objectives

- Introduce change classes. Allow the agent to make confined feature additions behind flags. Permit cross file refactors that do not change public interfaces.

- Add repository level rulesets for high risk areas. For example, deny writes from the agent to a `migrations/` path or to infrastructure directories until you explicitly allow it.

- Start code review by the agent. Add the agent as a pull request reviewer on human authored changes for low risk repositories. Let it propose comments but keep human sign off.
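Rulesets are the enforcement point, but a small check in review tooling can surface the same rule early. The sketch below assumes you already have the list of changed file paths for a pull request; the `infra` directory name is a hypothetical stand-in for your infrastructure directories, and the function is not a GitHub API.

```python
from pathlib import PurePosixPath

# Directory names the agent may not touch during the pilot.
# "migrations" comes from the example above; "infra" is a hypothetical
# placeholder for whatever your infrastructure directories are called.
BLOCKED_DIRS = ("migrations", "infra")


def blocked_paths(changed_files: list[str], blocked: tuple[str, ...] = BLOCKED_DIRS) -> list[str]:
    """Return the changed files that live under any blocked directory."""
    hits = []
    for path in changed_files:
        # Only directory components count, so docs/migrations.md is allowed.
        directories = PurePosixPath(path).parts[:-1]
        if any(part in blocked for part in directories):
            hits.append(path)
    return hits


if __name__ == "__main__":
    changed = [
        "src/refunds/handler.py",
        "services/billing/migrations/0007_add_column.sql",
        "docs/migrations.md",
    ]
    print(blocked_paths(changed))
```

Matching on directory components rather than string prefixes catches nested `migrations/` folders in a monorepo without blocking files that merely mention the word.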

Deliverables

- A second batch of issues that require small design choices. The agent proposes a plan in the pull request description. Reviewers validate before code lands.

- Updated guardrails documented in the wiki. Include clear examples of allowed and blocked patterns.

Week 4: Production readiness gate

Objectives

- Raise the bar on quality. Require green tests plus static analysis before approval on agent authored pull requests.

- Introduce a service level objective for agent changes. For example, under 2 percent revert rate in the pilot repos, and under 2 hours mean time to review.

- Begin limited production impact. Allow agent changes to reach production behind flags in one low risk service, with human controlled rollout.

Deliverables

- A go or no go decision based on the metrics below. If go, add two more repositories to the program and keep the same guardrails.
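The go or no go decision is easier to defend when the thresholds are written down as code. This sketch uses the example service level objective from this week (under 2 percent revert rate, under 2 hours mean time to review); the class and function names are hypothetical, and you would substitute your own thresholds.

```python
from dataclasses import dataclass

# Example thresholds from the Week 4 objectives; adjust to your environment.
MAX_REVERT_RATE = 0.02            # "under 2 percent", as a fraction
MAX_MEAN_HOURS_TO_REVIEW = 2.0    # "under 2 hours"


@dataclass(frozen=True)
class PilotMetrics:
    revert_rate: float            # fraction between 0.0 and 1.0
    mean_hours_to_review: float


def go_decision(metrics: PilotMetrics) -> tuple[bool, list[str]]:
    """Return (go, reasons). Any missed threshold means no go."""
    failures = []
    if metrics.revert_rate >= MAX_REVERT_RATE:
        failures.append(f"revert rate {metrics.revert_rate:.1%} is not under {MAX_REVERT_RATE:.0%}")
    if metrics.mean_hours_to_review >= MAX_MEAN_HOURS_TO_REVIEW:
        failures.append(
            f"mean time to review {metrics.mean_hours_to_review:.1f}h is not under {MAX_MEAN_HOURS_TO_REVIEW:.0f}h"
        )
    return (not failures, failures)


if __name__ == "__main__":
    go, reasons = go_decision(PilotMetrics(revert_rate=0.01, mean_hours_to_review=3.5))
    print("go" if go else "no go", reasons)
```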

KPIs that signal true progress

Measure this like any change in delivery. Target specific improvements and track leading and lagging indicators.

Delivery and quality

- Cycle time for agent authored pull requests. Median from draft to merge. Target 30 to 50 percent faster than human only baselines for similar work.

- Review time. Median hours from request to first human review. Target under one business day.

- Rework rate. Percent of agent changes that required more than two iterations. Track by repository.

- Revert rate. Percent of agent changes reverted within seven days. Target under 2 percent in the pilot.

- Test coverage delta. Net coverage change on merged agent pull requests. Target non negative on average.

- Incident correlation. Count of incidents where the primary change was agent authored. Keep at zero in weeks 1 and 2, then under your normal change failure rate.
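Several of these metrics fall out of one small record per pull request. The following is a sketch under stated assumptions: the `AgentPR` record and its fields are hypothetical, you would populate them from your own pull request data, and the rates use merged pull requests as the denominator.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class AgentPR:
    opened_at: datetime                   # when the draft pull request was opened
    merged_at: Optional[datetime]         # None if it never merged
    iterations: int                       # review rounds the change needed
    reverted_at: Optional[datetime] = None


def _merged(prs: list[AgentPR]) -> list[AgentPR]:
    return [pr for pr in prs if pr.merged_at is not None]


def median_cycle_hours(prs: list[AgentPR]) -> Optional[float]:
    """Median hours from draft to merge across merged pull requests."""
    hours = [(pr.merged_at - pr.opened_at).total_seconds() / 3600 for pr in _merged(prs)]
    return median(hours) if hours else None


def rework_rate(prs: list[AgentPR]) -> Optional[float]:
    """Share of merged pull requests that needed more than two iterations."""
    done = _merged(prs)
    if not done:
        return None
    return sum(1 for pr in done if pr.iterations > 2) / len(done)


def revert_rate(prs: list[AgentPR], window: timedelta = timedelta(days=7)) -> Optional[float]:
    """Share of merged pull requests reverted within the window after merge."""
    done = _merged(prs)
    if not done:
        return None
    reverted = sum(
        1 for pr in done
        if pr.reverted_at is not None and pr.reverted_at - pr.merged_at <= window
    )
    return reverted / len(done)
```

Returning `None` for an empty sample keeps a quiet week from showing up as a misleading zero in the weekly snapshot.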

Throughput and efficiency

- Backlog burn down of `agent ok` issues per week. Target a steady increase without quality regressions.

- Actions minutes consumed by the agent per merged pull request. Track by repository to find hotspots.

- Engineer focus time. Survey based proxy. Ask how many hours per week were reclaimed from toil. Watch for trend, not absolute truth.
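Actions minutes per merged pull request is a simple ratio once sessions are grouped by repository. This sketch assumes you can export one `(repository, minutes, merged)` tuple per agent session; that export format is hypothetical. Minutes from sessions that never merged are counted on purpose, since they are part of the real cost.

```python
from collections import defaultdict


def minutes_per_merged_pr(sessions: list[tuple[str, float, bool]]) -> dict[str, float]:
    """Return Actions minutes per merged pull request, keyed by repository.

    Each session is (repository, minutes_used, was_merged). Repositories
    with no merged pull requests are omitted rather than divided by zero.
    """
    total_minutes = defaultdict(float)
    merged_count = defaultdict(int)
    for repo, minutes, was_merged in sessions:
        total_minutes[repo] += minutes
        if was_merged:
            merged_count[repo] += 1
    return {
        repo: total_minutes[repo] / merged_count[repo]
        for repo in total_minutes
        if merged_count[repo] > 0
    }


if __name__ == "__main__":
    # Hypothetical session data for illustration only.
    sessions = [
        ("payments", 12.0, True),
        ("payments", 8.0, False),
        ("payments", 10.0, True),
        ("docs-site", 5.0, False),
    ]
    print(minutes_per_merged_pr(sessions))
```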

Governance and safety

- Policy exceptions requested. Low is good. If this spikes, revisit guardrails.

- Unauthorized scope attempts. Count and review any blocked writes to protected paths or branches.

- Sensitive data access flags. If your audit log records network or secret access anomalies during agent sessions, investigate immediately.

Instrument these with GitHub Insights, search based queries on pull request metadata, and simple scripts against the audit log. Keep reports simple. Weekly snapshots are enough.
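For the search based queries, a small helper keeps the weekly snapshot repeatable. The sketch below composes a query from GitHub's standard search qualifiers (`repo:`, `is:pr`, `is:merged`, `label:`, and a `merged:` date range). The repository name and the `agent authored` label are hypothetical; use whatever label your team applies to agent pull requests.

```python
from datetime import date


def merged_agent_prs_query(repo: str, label: str, start: date, end: date) -> str:
    """Build a search query for labeled pull requests merged in a date range."""
    return (
        f"repo:{repo} is:pr is:merged "
        f'label:"{label}" '
        f"merged:{start.isoformat()}..{end.isoformat()}"
    )


if __name__ == "__main__":
    # Hypothetical repository and label for illustration only.
    print(
        merged_agent_prs_query(
            "example-org/payments",
            "agent authored",
            date(2025, 10, 1),
            date(2025, 10, 7),
        )
    )
```

Pasting the resulting string into the pull request search box gives the week's merged count, which is the denominator for most of the rates above.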

Governance guardrails that hold up at scale

- Eligibility and routing. Only issues tagged with preapproved labels are eligible. Use code owners to route reviews to the right people.

- Repository allowlists. Decide which repositories permit the agent. Default to off for critical systems until the metrics earn trust.

- Pull request checks. Require tests, security scans, and static analysis before approval. If you have required reviewers, keep them.

- Secrets hygiene. Use the `copilot` environment for any secrets or variables the agent needs. Prefer read only tokens. Audit usage monthly.

- Internet access. Keep the default firewall and only allow outbound destinations you actually need. Review this list quarterly.

- Time and resource limits. Configure workflow timeouts and job concurrency for the agent. If the agent hits the limit, it should fail fast and report.

- Change classes. Maintain a living table of what the agent can do. For example, yes to unit tests, no to new external dependencies without approval, yes to feature flags, no to schema changes during the pilot.
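The living table of change classes can start as a small lookup that triage scripts and reviewers share. This is a sketch using the four examples above; the key names are hypothetical, and treating unknown classes as "needs approval" is an assumption chosen to fail safe.

```python
from enum import Enum


class Verdict(Enum):
    ALLOWED = "allowed"
    NEEDS_APPROVAL = "needs approval"
    BLOCKED = "blocked"


# Pilot-phase table built from the examples in the guardrail list.
CHANGE_CLASSES = {
    "unit_tests": Verdict.ALLOWED,
    "feature_flags": Verdict.ALLOWED,
    "new_external_dependency": Verdict.NEEDS_APPROVAL,
    "schema_change": Verdict.BLOCKED,
}


def verdict_for(change_class: str) -> Verdict:
    """Look up a change class; anything not listed is escalated to a human."""
    return CHANGE_CLASSES.get(change_class, Verdict.NEEDS_APPROVAL)


if __name__ == "__main__":
    for name in ("unit_tests", "schema_change", "cross_repo_refactor"):
        print(name, "->", verdict_for(name).value)
```

Because the table lives in version control, every change to what the agent may do goes through the same review as any other change.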

These practices mirror what many enterprises do to bring agents into regulated environments. The same playbook is how Salesforce users made agents safe at scale, as we covered in Agentforce makes AI agents production grade.

A concrete example

Imagine a payments microservice with a healthy test suite. You create an issue: “Add idempotency keys to the refund endpoint. Include tests. Behind a flag. Update API docs.” You tag it with `agent ok`.

The agent spins up its environment. It inspects the refund handler, adds a middleware for idempotency based on request headers, writes tests for repeated requests, and introduces a feature flag check that defaults off. It opens a draft pull request on `copilot/refunds-idempotency`, includes a design note in the description, and tags you for review.

You request two changes in comments. First, use an existing cache library rather than a new one. Second, expand test cases to include concurrency. The agent pushes a second commit that replaces the library, adds tests, and updates the docs. You approve. Your checks run. The pull request lands. The change goes to production behind the flag. Later, you run a ramp up plan and monitor error budgets. You got a real improvement without stealing attention from roadmap work.
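To make the example concrete, here is a rough sketch of what an idempotency wrapper behind a flag might look like. Everything in it is illustrative: the `Idempotency-Key` header name, the dictionary-shaped request, the in-memory cache, and the `flag_enabled` callable are assumptions, not the agent's actual output or any particular framework's API.

```python
from typing import Callable, MutableMapping

Request = dict
Response = dict
Handler = Callable[[Request], Response]


def with_idempotency(
    handler: Handler,
    cache: MutableMapping[str, Response],
    flag_enabled: Callable[[], bool],
    header: str = "Idempotency-Key",
) -> Handler:
    """Wrap a handler so repeated requests with the same key replay the first response."""

    def wrapped(request: Request) -> Response:
        key = request.get("headers", {}).get(header)
        # With the flag off, or without a key, behave exactly as before.
        if not flag_enabled() or key is None:
            return handler(request)
        if key in cache:
            return cache[key]
        response = handler(request)
        cache[key] = response
        return response

    return wrapped


if __name__ == "__main__":
    def refund(request: Request) -> Response:
        return {"status": 201, "refund_id": request["body"]["refund_id"]}

    safe_refund = with_idempotency(refund, cache={}, flag_enabled=lambda: True)
    req = {"headers": {"Idempotency-Key": "abc-123"}, "body": {"refund_id": "r1"}}
    print(safe_refund(req))
    print(safe_refund(req))  # replayed from the cache, handler not called again
```

Note that the check-then-store step here is not atomic, which is exactly why the reviewer's request for concurrency tests matters: a production version would need an atomic set-if-absent operation in the shared cache.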

Cost and capacity planning without surprises

The agent consumes GitHub Actions minutes and model requests while it works. Track these in the KPIs above and set per task budgets. If your repositories build slowly, consider larger runners dedicated to the copilot environment. Keep timeouts strict in the setup workflow. The aim is predictable cost per merged pull request.

Practical tips:

- Treat the setup workflow as infrastructure. Cache dependencies, seed realistic test data, and prune work directories to keep cycles tight.

- Set a default concurrency for agent jobs so they do not saturate runners during peak hours.

- Tag all agent authored draft pull requests with a consistent label so you can break out spend by change source.

- If you run self hosted runners, isolate agent workloads onto a separate pool with scoped permissions and firewall rules.

How to avoid common pitfalls

- Do not start in your most complex repo. Pick stable services with fast tests.

- Do not skip the review template. It drives repeatable decisions and better audit trails.

- Do not allow silent scope creep. Use labels to control intake and reject tasks that do not fit the allowed change classes.

- Do not run the agent without a green baseline on your tests. You will waste cycles and lose trust.

- Do not hide the metrics. Share weekly KPI snapshots with the team and leadership. Let the data decide the next steps.

What this unlocks next

Once the basics are in place, you can extend the agent’s reach. Add repository specific setup steps to prewarm caches. Feed it system context through approved interfaces. Allow it to propose changes across multiple repositories, with human orchestration for integration and rollout. Treat it like a specialist who is great at consistent, structured work. Keep humans in the loop for design, tradeoffs, and risk.

This is the same north star we have seen across the ecosystem, where platform hooks and policy shape behavior. That is why efforts like AgentCore and Vertex show up in enterprise roadmaps, and why GitHub’s integration into pull requests and rulesets matters more than raw model quality. The story is not just smarter models. It is better placement in the software lifecycle.

The bottom line

General availability turns the Copilot coding agent into a dependable teammate, not a demo. It works through draft pull requests, runs inside an Actions sandbox, and obeys your policies. That is what makes agentic DevOps practical. Leaders can point it at real backlogs, keep the review culture intact, and measure impact with clear KPIs. Do the one month rollout, learn fast, and scale what works. The teams that master this loop will deliver more, with fewer interruptions, while preserving the safety that production demands.