GitHub Copilot’s Coding Agent Hits GA: A Teammate, Not a Tool

GitHub Copilot’s coding agent is now generally available (GA) and ready to own end-to-end tasks. It plans changes, runs tests in isolated runners, opens draft PRs, and works within branch protections and review gates.

From helper to teammate: what changed at GA

For years, AI in the editor acted like a fast pair of hands. It suggested snippets, explained code, and helped you type a little faster. The GitHub Copilot coding agent that reached general availability changes the center of gravity. Instead of hovering in your IDE, it moves into the workflow where software delivery actually happens. It accepts a task with clear scope, plans the edits, runs builds and tests in isolation, opens a draft pull request, and iterates until the work is accepted.

Critically, the agent does not ask you to reinvent your governance. It works inside the repositories, branches, and pull requests you already use, and it respects your existing policies, including GitHub branch protection rules. That shift matters because you are no longer copy-pasting suggestions into a promising branch. You are delegating a bounded unit of delivery to a teammate that can close the loop without leaving the guardrails of your platform.

If you are exploring a broader agent strategy, this release fits how enterprises are adopting platform-native autonomy. For more on the organizational side of agents, see our playbook for enterprise AI and how leaders are balancing control, scale, and trust.

What the coding agent actually does

At a high level, the agent executes a delivery flow any modern team will recognize:

- Intake and scoping. You give the agent a short brief. It might come from an issue, a backlog item, or a comment on a pull request. The agent expands that into a plan that lists files to touch, tests to run, dependencies to review, and potential risks.

- Working branch. The agent creates a dedicated branch with a clear, traceable name, often referencing the ticket or issue number.

- Isolated execution. The agent runs builds and tests in clean, ephemeral environments so results are reproducible and auditable. On GitHub, this typically means short-lived machines spun up by GitHub Actions. For details, see GitHub's documentation on how hosted runners work.

- Changes with context. The agent edits code and configuration, adds or updates tests, and executes the test suite. When failures appear, it uses the output from that branch's runs to narrow down the cause and revise its changes.

- Draft PR. It opens a draft pull request that explains what changed, why it changed, and what to check in review. It links to passing or failing checks and includes a checklist for reviewers.

- Review ready. When checks pass, the agent marks the PR ready for review. If reviewers leave comments, it proposes follow-up commits or asks clarifying questions.

- Governance aware. If your repository requires code owners, signed commits, or linear history, the agent works inside those boundaries rather than bypassing them.

This is not magical autonomy. It is a disciplined teammate that knows how to navigate branches, CI, and reviews. That is enough to unlock real value.
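The draft-then-ready handoff in the flow above can be modeled as a small state machine. This is a hypothetical illustration of the workflow, not how the Copilot coding agent is implemented: the `AgentPR` class, the `branch_name` helper, and the `agent/issue-<number>-<slug>` naming pattern are assumptions chosen for the example.

```python
import re
from dataclasses import dataclass, field
from enum import Enum


class PRState(Enum):
    DRAFT = "draft"
    READY_FOR_REVIEW = "ready_for_review"


def branch_name(issue_number: int, title: str) -> str:
    """Build a traceable branch name from an issue (hypothetical convention)."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())[:40].strip("-")
    return f"agent/issue-{issue_number}-{slug}"


@dataclass
class AgentPR:
    branch: str
    state: PRState = PRState.DRAFT  # agent work always starts as a draft
    checks: dict[str, bool] = field(default_factory=dict)  # check name -> passed

    def record_check(self, name: str, passed: bool) -> None:
        self.checks[name] = passed

    def try_mark_ready(self, required: set[str]) -> bool:
        """Leave draft only when every required check has run and passed."""
        if all(self.checks.get(name) is True for name in required):
            self.state = PRState.READY_FOR_REVIEW
            return True
        return False
```

Tying promotion to the full set of required checks mirrors the governance point: the model never skips a check, it only waits for all of them to pass.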

Where it shines: low- to medium-complexity changes

You will see the largest return when the task has clear scope, good tests, and limited blast radius. Examples include:

- Dependency bumps that need small code adjustments. The agent updates versions, scans for breaking API changes, amends calls, and extends tests for the new behavior.

- Framework migrations within a minor version and like-for-like library swaps. Think swapping one logger or HTTP client for another where interfaces are similar and call sites are easy to find.

- Config and policy edits that demand crisp PR hygiene. Security headers, linter upgrades, formatting rules, container base images, and Git hooks are all good fits.

- Feature flags and toggles. The agent can wire a flag, add defaults, update documentation, and provide targeted tests, then open a draft PR for product or platform owners.

- Test authoring and coverage improvements. Straightforward unit tests for functions with clear inputs and outputs are a sweet spot.

- Cross-repository touch-ups that follow a mechanical pattern. With the right permissions and a global plan, the agent can open a series of coordinated PRs.

Two quick heuristics for fit: first, the change can be understood by reading a handful of files. Second, correctness is captured by automated checks that already run in your pipeline.

Where to be careful

There are tasks the agent should not own at the start of your rollout:

- Architectural shifts that cut across services. When coordinated updates to contracts, data models, and SLAs are needed, keep a human in the lead and route mechanical subtasks to the agent.

- Security critical code paths. Cryptography and authentication logic have subtle failure modes. Let the agent assist with tests and documentation, not the core change.

- Performance-sensitive work. The agent can propose optimizations, but profiling and weighing trade-offs demand deep domain knowledge.

- Product decisions and UX. It can scaffold code and tests, but usability and product fit belong to teams that own the roadmap.

As with any teammate, the goal is not to hunt for tasks it cannot do. It is to route the right work to the right worker.

How it fits enterprise governance

The best part of a platform native agent is that it lives inside the controls you already operate. That minimizes new risk and reduces adoption friction.

- Identity and permissions. Run the agent with tokens that have scoped access. Start with least privilege, restrict write access to selected repositories, and require approvals for sensitive actions.

- Branch protections and code owners. Because the agent works through PRs, you retain policy coverage. Required reviews, status checks, and signed commits continue to apply under your GitHub branch protection rules.

- Audit trail. Every action is recorded as commits, comments, and workflow logs. This yields a paper trail for compliance teams and incident reviews.

- Secrets handling. Keep credentials out of code and use environment secrets. Prefer short-lived tokens. If you cannot rotate a secret safely, do not let the agent touch that path.

- Supply chain checks. Run SAST, dependency scanning, container scanning, and IaC policies on agent-created PRs. If a scanner flags a risk, have the agent propose a fix or route the PR to a human quickly.

- Data boundaries. Avoid broad admin scopes in early pilots. Limit repository scope, define a data classification for content the agent may access, and log cross-boundary reads.
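The identity and data-boundary guidance above can be turned into a pre-flight review that a security team runs before granting access to the agent's identity. The sketch below is a minimal illustration under stated assumptions: the permission names mirror GitHub's fine-grained permission categories (`contents`, `pull_requests`, `administration`, and so on), but the `ALLOWED` ceilings, the `review_grant` function, and the repository names are hypothetical, and nothing here calls a GitHub API.

```python
# Hypothetical pilot policy: the maximum access level allowed per permission.
LEVELS = {"none": 0, "read": 1, "write": 2}

ALLOWED = {
    "metadata": "read",
    "contents": "write",
    "pull_requests": "write",
    "checks": "read",
    "administration": "none",  # admin scopes stay off-limits during pilots
}


def review_grant(requested: dict[str, str], repos: set[str],
                 pilot_repos: set[str]) -> list[str]:
    """Return policy violations for a requested grant; an empty list passes."""
    problems = []
    for perm, level in sorted(requested.items()):
        ceiling = ALLOWED.get(perm, "none")  # anything unlisted is denied
        if LEVELS[level] > LEVELS[ceiling]:
            problems.append(f"{perm}:{level} exceeds {ceiling}")
    for repo in sorted(repos - pilot_repos):
        problems.append(f"repository {repo} is outside the pilot scope")
    return problems
```

Denying anything that is not explicitly listed keeps the policy least-privilege even when a new permission category appears.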

For a view of how agent programs evolve across platforms, see how enterprises are blending models and runtimes with multi-model agents in M365.

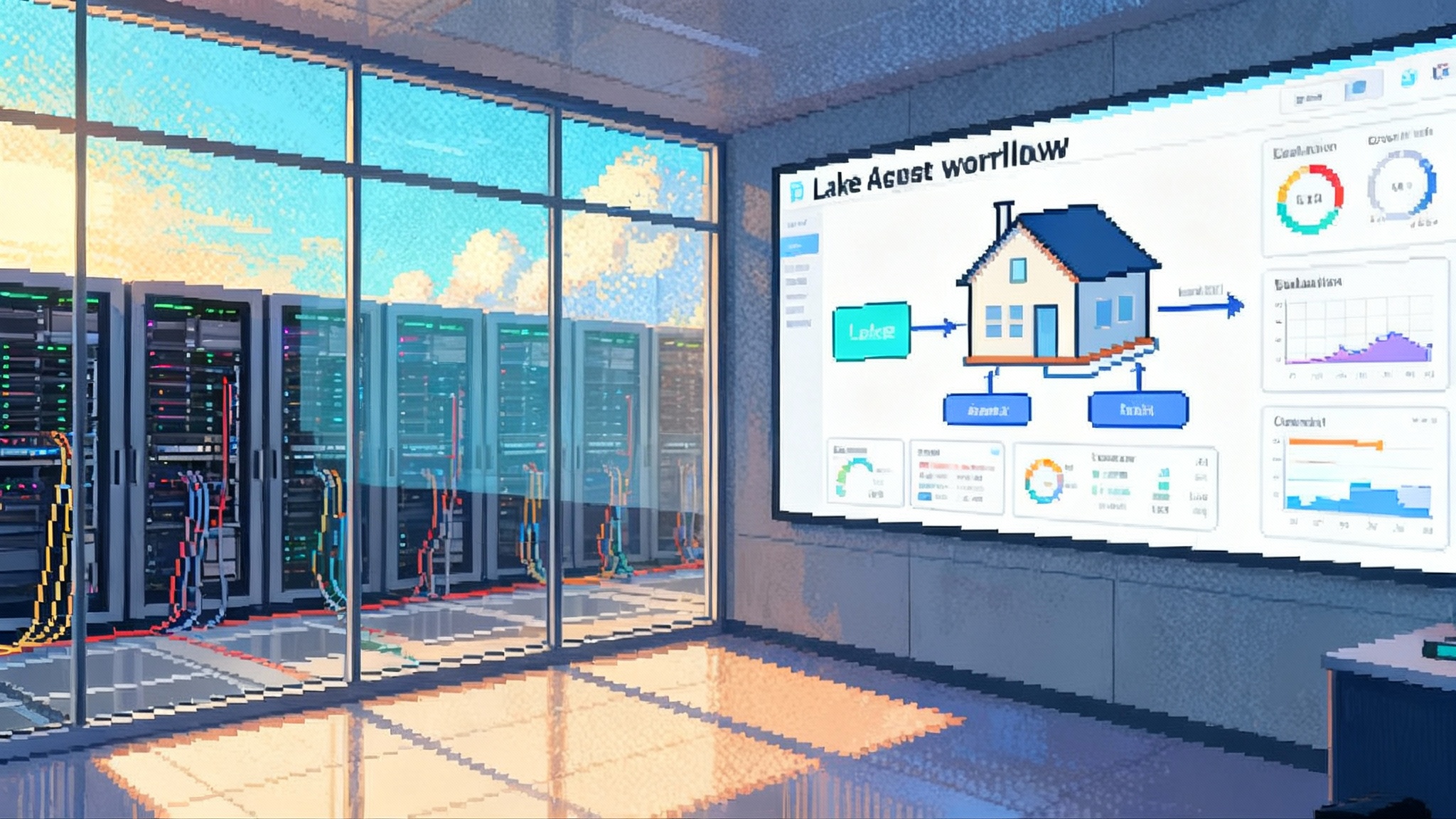

The enterprise rollout playbook with KPIs

A successful rollout is staged, measurable, and reversible. Use three phases with goals, guardrails, and metrics.

Phase 1: Pilot

- Goal. Prove the agent delivers clean PRs for low complexity tasks in a handful of repositories.

- Scope. Two to four squads, 10 to 20 repos, limited write permissions, weekdays only for the first two weeks.

- Guardrails.

  - Require draft PRs for all agent work.

  - Require at least one human approval.

  - Block merges outside business hours.

  - Cap agent-initiated PRs per day per repo to avoid review overload.

- KPIs. Establish a baseline first, then track improvements:

  - Agent PR acceptance rate. Share of agent PRs that are merged. Target 70 percent by week three.

  - PR cycle time. Median time from PR open to merge. Aim for a 20 to 30 percent reduction.

  - Review turnaround. Median time from first review request to first human response. Target 25 percent faster due to clearer PR hygiene.

  - Change failure rate. Share of agent PRs that trigger a rollback or hotfix within seven days. Keep under 3 percent.

  - Rework rate. Share of agent PRs needing more than two revisions. Keep under 20 percent.
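To keep the pilot KPIs honest, compute them the same way every week from exported PR data. The sketch below assumes a hypothetical `PRRecord` per agent PR with open and merge timestamps, a revision count, and a rollback flag; review turnaround follows the same median pattern as cycle time and is omitted for brevity. Cycle time is judged against your own baseline rather than a fixed threshold, so it is reported but not checked in `phase1_gaps`.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class PRRecord:
    opened: datetime
    merged: Optional[datetime]  # None if closed unmerged or still open
    revisions: int              # review rounds that required new commits
    caused_rollback: bool       # rollback or hotfix within seven days of merge


def phase1_kpis(prs: list[PRRecord]) -> dict[str, float]:
    """Compute pilot KPIs; assumes at least one merged agent PR."""
    merged = [p for p in prs if p.merged is not None]
    return {
        "acceptance_rate": len(merged) / len(prs),
        "median_cycle_time_hours": median(
            (p.merged - p.opened) / timedelta(hours=1) for p in merged
        ),
        "change_failure_rate": sum(p.caused_rollback for p in merged) / len(merged),
        "rework_rate": sum(p.revisions > 2 for p in prs) / len(prs),
    }


def phase1_gaps(kpis: dict[str, float]) -> list[str]:
    """Compare against the fixed pilot targets listed above."""
    gaps = []
    if kpis["acceptance_rate"] < 0.70:
        gaps.append("acceptance rate below 70 percent")
    if kpis["change_failure_rate"] >= 0.03:
        gaps.append("change failure rate at or above 3 percent")
    if kpis["rework_rate"] >= 0.20:
        gaps.append("rework rate at or above 20 percent")
    return gaps
```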

Phase 2: Expansion

- Goal. Extend to medium complexity changes and additional repositories.

- Scope. Six to twelve squads. Let teams opt in. Add repositories with strong test suites and clear owners.

- Guardrails.

  - Introduce an allowlist of change types, such as logging normalization, minor framework updates, test coverage additions, and infrastructure-as-code refactors.

  - Add a simple rubric for task eligibility that reviewers can apply in under one minute.

- KPIs.

  - Lead time for change. Time from request to production for agent-driven changes. Target a 30 to 40 percent improvement.

  - Agent contribution share. Percent of PRs opened by the agent. Aim for 10 to 20 percent in participating repos.

  - Reviewer load. Average reviews per engineer per week. Keep stable while throughput increases.

  - Guardrail violations. Attempts to push to protected branches, bypass checks, or edit sensitive files. Keep near zero.
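Phase 2 targets are relative to a baseline, so the arithmetic matters. A minimal sketch, assuming you have lead times (in hours) for a baseline period and for the current period, plus weekly review counts per engineer; the function names are hypothetical, and the 10 percent tolerance used to call reviewer load "stable" is an assumption, not a standard.

```python
from statistics import median


def percent_improvement(baseline: list[float], current: list[float]) -> float:
    """Reduction in median duration, as a percentage of the baseline median."""
    before, after = median(baseline), median(current)
    return (before - after) * 100 / before


def reviewer_load_stable(before: list[float], after: list[float],
                         tolerance: float = 0.10) -> bool:
    """True if mean weekly reviews per engineer moved by at most `tolerance`."""
    b = sum(before) / len(before)
    a = sum(after) / len(after)
    return abs(a - b) / b <= tolerance
```

With baseline lead times of 10, 20, and 30 hours and current ones of 6, 12, and 18 hours, the median falls from 20 to 12 hours, a 40 percent improvement at the top of the target range.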

Phase 3: Scale

- Goal. Treat the agent as a standard teammate for a defined class of work.

- Scope. Platform-wide where tests and policies are strong. Expand to cross-repository sequences coordinated by a release manager.

- Guardrails.

  - Add service level objectives (SLOs) for agent PRs. For example, 95 percent of agent PRs pass checks on the first try and 99 percent include test evidence.

  - Maintain rollback playbooks and a freeze switch that pauses the agent if metrics regress.

- KPIs.

  - Throughput. Merged PRs per week per repo. Compare against control repositories that do not use the agent.

  - MTTR for regressions. Mean time to detect and fix issues that reached production.

  - Engineering satisfaction. Pulse surveys on PR quality, clarity of diffs, and speed. Target net positive sentiment.
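The two example SLOs and the freeze switch can be sketched as a check over fixed windows of agent PRs. This assumes you already count, per window, how many agent PRs passed checks on the first run and how many included test evidence; the `SloWindow` type, the weekly window, and the rule of freezing after two consecutive breached windows are illustrative assumptions, not prescriptions.

```python
from dataclasses import dataclass


@dataclass
class SloWindow:
    agent_prs: int           # agent PRs opened in the window (assumed > 0)
    passed_first_try: int    # PRs whose first check run was fully green
    with_test_evidence: int  # PRs whose description includes test evidence


def evaluate_slos(w: SloWindow, first_pass_target: float = 0.95,
                  evidence_target: float = 0.99) -> dict[str, bool]:
    return {
        "first_pass_ok": w.passed_first_try / w.agent_prs >= first_pass_target,
        "evidence_ok": w.with_test_evidence / w.agent_prs >= evidence_target,
    }


def should_freeze(history: list[SloWindow], breaches_to_freeze: int = 2) -> bool:
    """Flip the freeze switch after N consecutive windows that miss any SLO."""
    recent = history[-breaches_to_freeze:]
    if len(recent) < breaches_to_freeze:
        return False
    return all(not all(evaluate_slos(w).values()) for w in recent)
```

Requiring consecutive breaches avoids freezing on a single noisy week, while still reacting before a regression becomes the norm.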

Operational tips that raise your odds

- Provide an onboarding form for change requests. Require a brief, acceptance criteria, and a test strategy so the agent can plan work.

- Adopt a pull request template that the agent fills. Include motivation, scope, risk, rollback plan, and test evidence.

- Keep CODEOWNERS current so reviews route to the right people.

- Add labels such as agent candidate, needs human plan, or blocked by tests to triage the queue.

- Define an escalation path. If the agent cannot resolve a failing check after two iterations, it should tag a human owner and pause.

- Set concurrency limits so review bandwidth stays healthy. More PRs are good, but too many at once creates churn.

- Curate starter tasks each sprint. A small backlog of agent friendly items keeps the pipeline warm and predictable.
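The escalation path and concurrency limit can be expressed as a small per-repository gate. In this sketch, the two-attempt limit comes from the tip above, while the cap of three open agent PRs, the `RepoQueue` class, and the message format are assumptions for illustration; a real setup would post the message as a PR comment and apply a label rather than return a string.

```python
from dataclasses import dataclass, field

MAX_FIX_ATTEMPTS = 2    # from the escalation tip: two failed iterations, then a human
MAX_OPEN_AGENT_PRS = 3  # hypothetical per-repository concurrency cap


@dataclass
class RepoQueue:
    open_agent_prs: int = 0
    attempts: dict[int, int] = field(default_factory=dict)  # PR number -> failed attempts
    paused: set[int] = field(default_factory=set)           # PRs waiting on a human owner

    def can_open_pr(self) -> bool:
        """Respect the concurrency cap so review bandwidth stays healthy."""
        return self.open_agent_prs < MAX_OPEN_AGENT_PRS

    def record_failed_fix(self, pr: int, owner: str) -> str:
        """Count a failed attempt at a check; escalate and pause at the limit."""
        self.attempts[pr] = self.attempts.get(pr, 0) + 1
        if self.attempts[pr] >= MAX_FIX_ATTEMPTS:
            self.paused.add(pr)
            return f"@{owner} PR #{pr} still failing after {self.attempts[pr]} attempts; pausing"
        return "retry"
```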

Governance checklist for your security team

- Identity and access. Use a dedicated identity with least privilege. Keep write scopes narrow, and keep admin access off-limits during pilots.

- Secrets management. Use environment secrets or OIDC-based short-lived credentials. Avoid long-lived tokens. Rotate secrets every 90 days or less.

- Policy compliance. All agent PRs must pass static analysis, dependency scanning, and policy checks before review starts.

- Change control. Draft PR required, code owner review required, merges only after all checks pass.

- Audit. Retain logs for workflow runs, comments, and commits. Tag agent PRs for easy search.

- Incident response. If an agent change triggers an incident, have a playbook to pause the agent, roll back, and review scopes.
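The 90-day rotation rule is easy to audit if you keep an inventory of when each secret was last rotated. A minimal sketch, assuming such an inventory exists as a name-to-date mapping; the function and secret names are hypothetical. Credentials issued through OIDC are short-lived by design and would not need to appear in this inventory.

```python
from datetime import date, timedelta

MAX_SECRET_AGE = timedelta(days=90)  # "rotate every 90 days or less"


def secrets_due_for_rotation(last_rotated: dict[str, date], today: date) -> list[str]:
    """Return, sorted by name, secrets that have reached the 90-day limit."""
    return sorted(
        name for name, rotated in last_rotated.items()
        if today - rotated >= MAX_SECRET_AGE
    )
```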

A simple rubric for task selection

Before sending work to the agent, run this five-question check. If you answer yes to at least four, you are likely in the sweet spot:

- Is the impact limited to a small number of files or modules?

- Are there clear tests that capture correctness for this change?

- Is the plan explainable in three to five bullet points?

- Is rollback trivial if something goes wrong?

- Is there a named owner in CODEOWNERS who will review within one business day?
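The five-question rubric is simple enough to encode directly, for example in an intake form or a triage bot. The `TaskCheck` type and the `agent_fit` function are hypothetical names; the threshold of four yes answers comes from the rubric above.

```python
from dataclasses import dataclass


@dataclass
class TaskCheck:
    limited_impact: bool    # a small number of files or modules
    clear_tests: bool       # tests capture correctness for this change
    short_plan: bool        # plan fits in three to five bullet points
    trivial_rollback: bool  # rollback is trivial if something goes wrong
    owner_reviews_fast: bool  # named CODEOWNERS reviewer within one business day


def agent_fit(check: TaskCheck, threshold: int = 4) -> bool:
    """Apply the five-question rubric: at least `threshold` yes answers."""
    answers = (check.limited_impact, check.clear_tests, check.short_plan,
               check.trivial_rollback, check.owner_reviews_fast)
    return sum(answers) >= threshold
```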

What success looks like in six months

Your backlog of small but important items is lighter. Developers spend more time on product work and architecture. Reviews arrive with tighter diffs and well-structured descriptions. Dashboards show faster cycle time and steady or lower change failure rates. Most importantly, teams view the agent as a dependable teammate that handles the unglamorous work well and asks for help when needed.

To sustain that momentum, conduct a quarterly review of:

- Contribution mix. What percent of merged PRs came from the agent, and in which categories?

- Quality signals. Flaky-test rates, test coverage in agent PRs, and post-merge incidents.

- Policy drift. Any shortcuts eroding your governance, such as ad hoc exceptions for protected branches.

- Developer sentiment. Are reviewers finding PRs easier to understand and quicker to approve?

If results soften, reduce scope, refresh the allowlist, and retrain the organization on the rubric above. The goal is steady compounding gains, not a one time spike.
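For the contribution-mix question in the quarterly review, tagging agent PRs (as the audit item in the governance checklist suggests) turns the numbers into a simple count. This sketch assumes you can export merged PRs as (author kind, category) pairs, for example from labels; the function and category names are hypothetical.

```python
from collections import Counter


def contribution_mix(merged_prs: list[tuple[str, str]]) -> tuple[float, dict[str, float]]:
    """From (author_kind, category) pairs for merged PRs, return the agent's
    share of all merged PRs and each category's share of agent PRs."""
    agent_categories = [cat for kind, cat in merged_prs if kind == "agent"]
    share = len(agent_categories) / len(merged_prs)
    counts = Counter(agent_categories)
    mix = {cat: n / len(agent_categories) for cat, n in counts.most_common()}
    return share, mix
```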

Common pitfalls and how to avoid them

- Flaky tests masquerading as regressions. If tests are unreliable, the agent will waste cycles on noise. Triage and fix flaky tests before scaling.

- Overbroad permissions. Convenience is tempting, but broad write scopes invite mistakes. Keep scopes narrow and transparent.

- Unclear ownership. If no one feels responsible for a code path, reviews stall. CODEOWNERS is not a suggestion. Keep it current and explicit.

- Big bang tasks. Break work into small units with crisp acceptance criteria. The agent thrives on bounded scope.

- Hidden side effects. Config changes that affect multiple services can surprise you. Treat shared config like code with tests and rollbacks.
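One practical way to separate flaky tests from real regressions is to look for tests that both passed and failed on the same commit, since the code under test did not change between those runs. A minimal sketch, assuming you can export CI results as (commit SHA, test name, passed) tuples; the function and test names are hypothetical.

```python
from collections import defaultdict


def find_flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag tests that both passed and failed on the same commit.

    Each run is (commit_sha, test_name, passed). Same code with different
    outcomes points at the test, not the change, as the source of noise.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for sha, test, passed in runs:
        outcomes[(sha, test)].add(passed)
    return {test for (_sha, test), seen in outcomes.items() if len(seen) == 2}
```

A test that fails only on a newer commit is not flagged, because that pattern is consistent with a genuine regression.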

Final thought

The goal of AI in software is not to replace judgment. It is to automate the boring and accelerate the straightforward so people can spend time where insight matters. A coding agent that operates entirely through branches, checks, and reviews is a meaningful step toward that goal. Start small, measure real outcomes, and let results guide your expansion. Over time, you will build a reliable partnership between engineers and an agent that respects your policies, writes clean diffs, and ships small improvements daily.