Atlas Agent Mode: The Browser Becomes an AI Runtime

ChatGPT Atlas introduces Agent Mode, a supervised browser agent that navigates, fills forms, and stages tasks inside the page. See how micro-permissions and memory reshape checkout and everyday productivity.

Breaking: the browser becomes an agent runtime

On October 21, 2025, OpenAI introduced ChatGPT Atlas, a new browser that treats on-page tasks as first-class work for an agent, not just a chat. In Atlas, Agent Mode can navigate, click, type, and complete multi-step flows while you supervise. OpenAI describes this as a move toward a true assistant that lives where your work happens, not beside it. The announcement included a preview of Agent Mode for Plus, Pro, and Business users, with Atlas available on macOS first and other platforms to follow. You can read the official overview in "Introducing ChatGPT Atlas."

That one move changes the frame for product teams and site owners. Until now, the web has been a stage and users were the actors. With Agent Mode, the browser itself becomes a runtime where software, not just people, performs tasks under your direction. The result will ripple through commerce, productivity, and security.

From chat box to first-class actor

The last generation of assistants lived in chat windows. They explained pages, drafted emails, and summarized research. But when it was time to act, you switched back to the page and did the work yourself. In Agent Mode, the assistant works in the page. It follows links, fills form fields, scrolls hidden sections, and handles error states. You can pause, interrupt, or take over at any time, but the default posture is action.

A useful mental model: before, the assistant was a sports commentator in the press box. Now it puts on a jersey and steps onto the field. That step requires new rules, protective gear, and playbooks. It also demands that websites expose clearer signals about what actions are possible and what consent looks like.

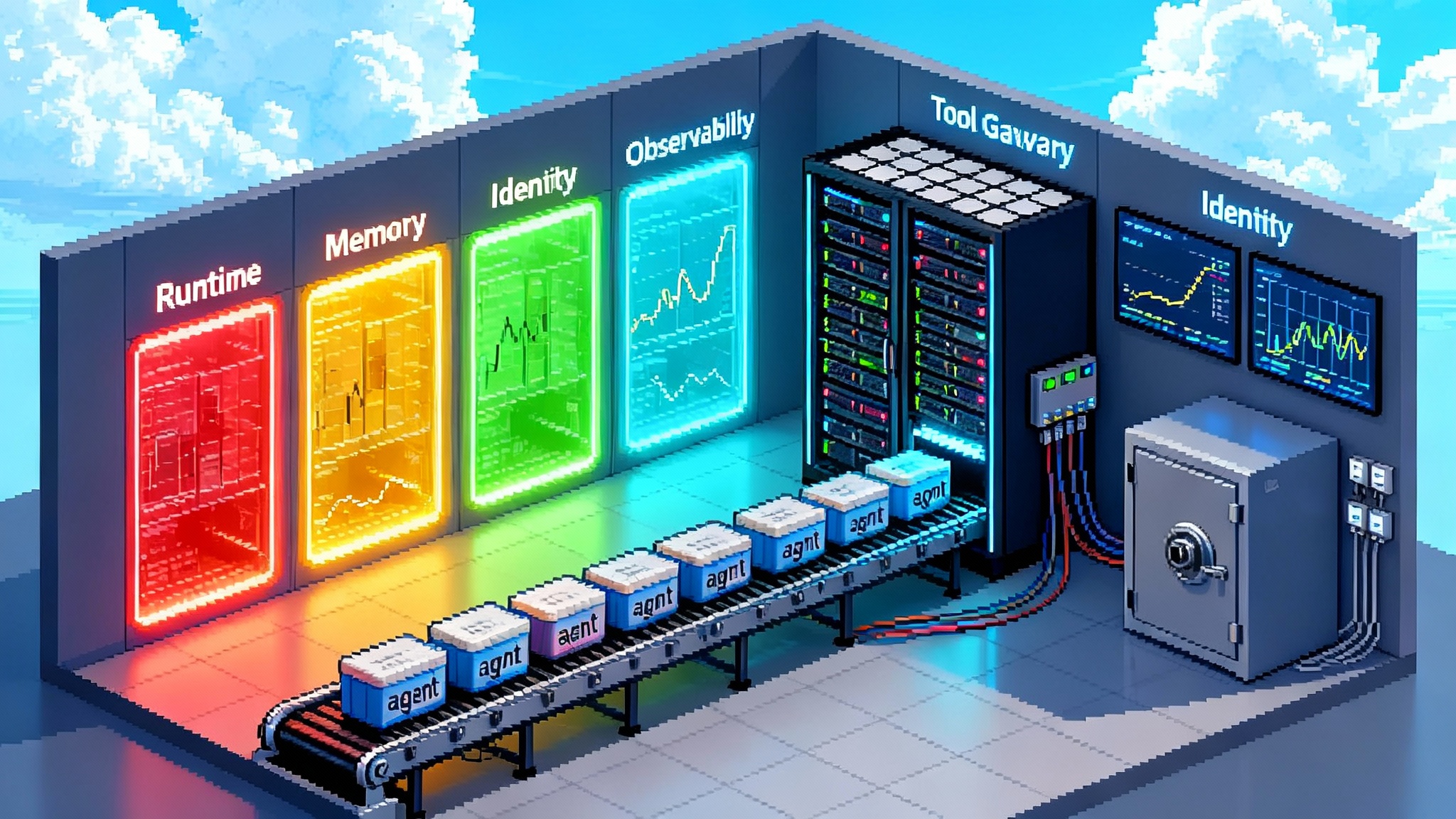

How in-browser autonomy actually works

Atlas pairs a model that can perceive and reason about the page with a tightly sandboxed controller that issues the same events a human would. The engineering team explains that agent-generated input is routed to the page renderer rather than through privileged browser layers. That preserves the boundary between the agent and the browser controls. It also composites popups and off-tab widgets back into a single frame so the model always sees the full interface. For deeper detail on these constraints and the logged-out ephemeral sessions Atlas uses to isolate agent runs, see the engineering post on the new Atlas architecture.

The key principle is least privilege. Agent Mode cannot install extensions or run local code. It does not read saved passwords or system files. Agent sessions can run in a logged-out container with fresh cookies and discarded state. The model still needs your approval for sensitive actions, and certain sites will force a visible pause. This is autonomy with a seatbelt.

Memory with a seatbelt

Atlas introduces browser memories that are private to your account and adjustable. Think of them as sticky notes the browser can use to be less repetitive next time. They might include your preferred clothing sizes for a retailer, the city you always fly from, or a company legal entity for invoices. You can toggle visibility on a site, purge history, or run in a mode where no memories apply.

When paired with Agent Mode, this has a specific impact. Repetitive online tasks become faster not because the agent is reckless, but because it can remember harmless context and reuse it safely. A team coordinator could, for example, ask the agent to gather bios for ten speakers, extract headshots, and populate a draft page. The memory allows the agent to keep your preferred image crop and file naming convention without asking every time, yet avoid touching credentials or payment methods. For developer pipelines that want to bridge chat and action, OpenAI AgentKit for production reality shows how teams can define reusable actions and wire them to real systems.

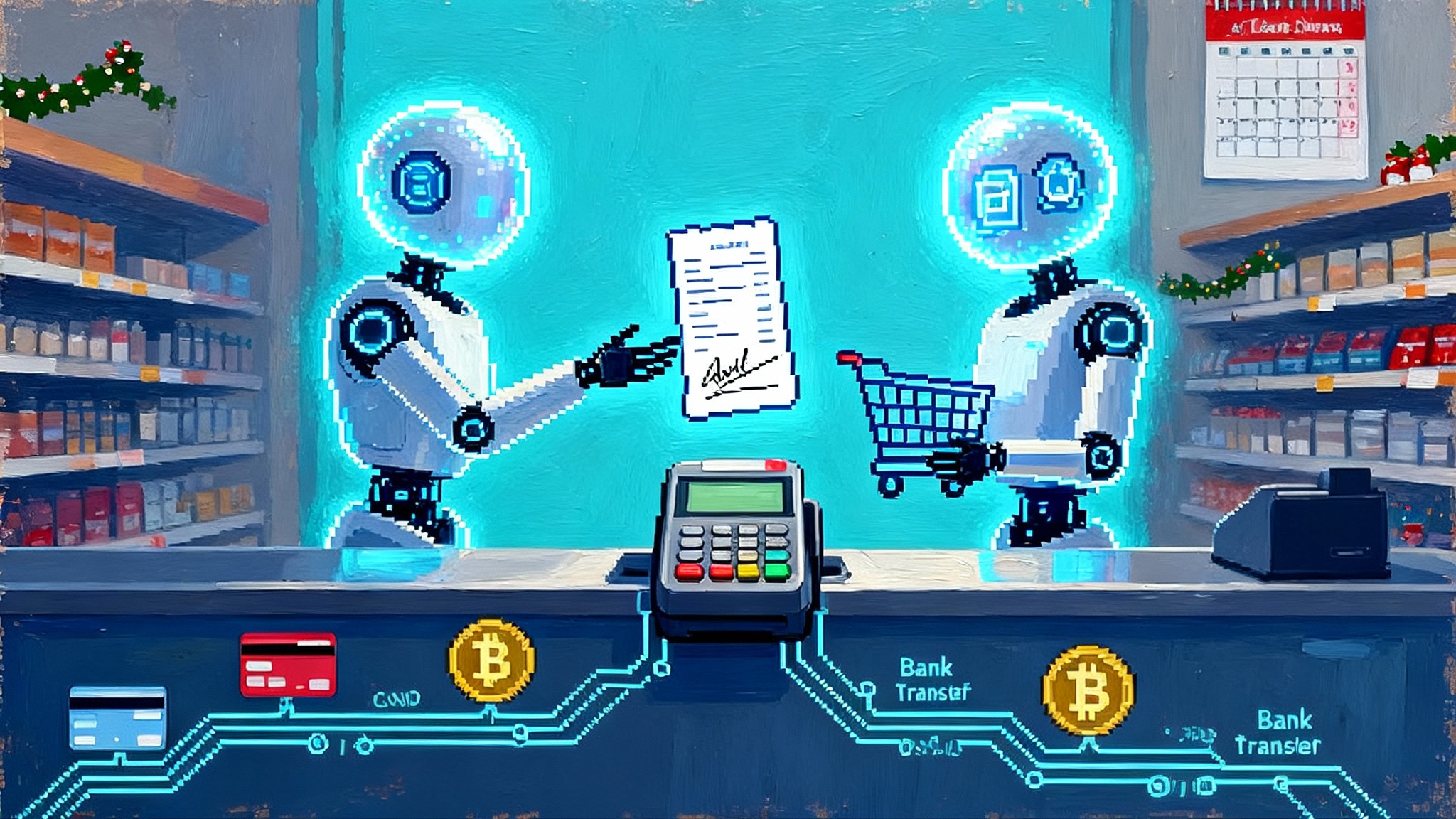

Micro-permissions reshuffle online commerce

The big idea is not that the agent can click buttons. It is that users can grant a chain of granular approvals. Instead of a blanket "take over my browser" grant, Agent Mode nudges toward micro-permissions that reflect user intent. Examples:

- Add two medium shirts from Retailer A to cart, but do not checkout.

- Fill a claims form through step three and save as draft for me to review.

- Compare three hotel options within my budget and hold the refundable one.

- Prepare a grocery cart with substitutions allowed, then wait for me.

The permission is the sentence. That sentence translates to a bounded set of page interactions. Commerce teams should assume that 2026 traffic will include a mix of human clicks and agent-originated sequences following these constraints. Two practical implications follow.

First, shopping flows will split into staged and final. The agent will do the research and staging. The user will bless and pay. That means "Save for later" and "Review order" screens become the conversion choke points. Build them with agents in mind. Make it trivial to resume or modify staged carts. Logically separate product selection from payment authorization.

Second, the power center shifts from the homepage and search engine results to the task boundary where the agent hands back control. If that handoff is smooth, you keep the customer. If not, the agent will take the user elsewhere. Payment networks are already exploring agent-aware verification, as seen in Visa Trusted Agent Protocol, which aligns neatly with staged holds and just-in-time consent.
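
To make "the permission is the sentence" concrete, here is a minimal sketch of how one of the example sentences above might reduce to a bounded set of page interactions. The `MicroPermission` class and the action names are hypothetical and only illustrate the idea; they are not how Atlas represents permissions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroPermission:
    """A user-approved sentence reduced to a bounded action set (hypothetical shape)."""
    description: str
    allowed_actions: frozenset
    stop_before: frozenset = frozenset()

    def permits(self, action: str) -> bool:
        # Allowed only if explicitly granted and not marked as a stopping point.
        return action in self.allowed_actions and action not in self.stop_before


grant = MicroPermission(
    description="Add two medium shirts from Retailer A to cart, but do not checkout.",
    allowed_actions=frozenset({"search_product", "select_size", "add_to_cart"}),
    stop_before=frozenset({"checkout", "authorize_payment"}),
)

for action in ("add_to_cart", "checkout", "change_password"):
    print(action, grant.permits(action))
```

Anything not named in the grant is denied by default, which keeps the handoff point explicit.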

Productivity becomes consumer-grade RPA

Robotic process automation brought bots to enterprise back offices. Agent Mode brings a consumer-grade version to the web. Consider three examples:

- A freelance designer asks the agent to pull her last six invoices from two platforms, reconcile paid status, and draft reminder emails. She approves and sends.

- A parent asks the agent to fill the school registration portal with known data, collect missing details, and stop before any payment. Review happens at the end.

- A product manager asks the agent to scrape a set of competitor changelogs, tag features, open a spreadsheet with the summary and links, and then draft a weekly brief.

The pattern is the same. Humans set direction, pick the stopping point, and approve. The browser becomes a repeatable workbench, not just a document viewer.

The new attack surface and what it means

When software drives a browser, familiar security problems get sharper teeth. Three deserve special attention.

- Indirect prompt injection. A page that includes hidden instructions could influence an agent to take actions not in the user interest. Even benign sites can carry injected content through comments or ads. OpenAI mitigates with pauses, logged-out runs, and limits on system access. Sites should still assume agents will visit and prepare for defense in depth.

- Consent fatigue. If every step triggers a pop-up approval, users will blindly accept. If approvals are too sparse, risk climbs. The right pattern is contextual. Reserve strong confirmations for money movement, data sharing, and access escalation. Use quiet banners for routine steps.

- Data leakage. An agent should not fetch unrelated tabs, autofill store, or local files. Atlas keeps a strict boundary by design and supports isolated sessions for risky tasks. Sites should avoid placing sensitive content in the same origin as public pages when possible, and should partition cookies to limit collateral access.

Security teams should treat agent traffic like a new client class. Start with separate monitoring. Add rules that slow or block sequences that look like automated scraping without user benefit. Most important, invest in better on-page affordances that help agents explain what they are doing back to the user. Transparency lowers both risk and support cost.

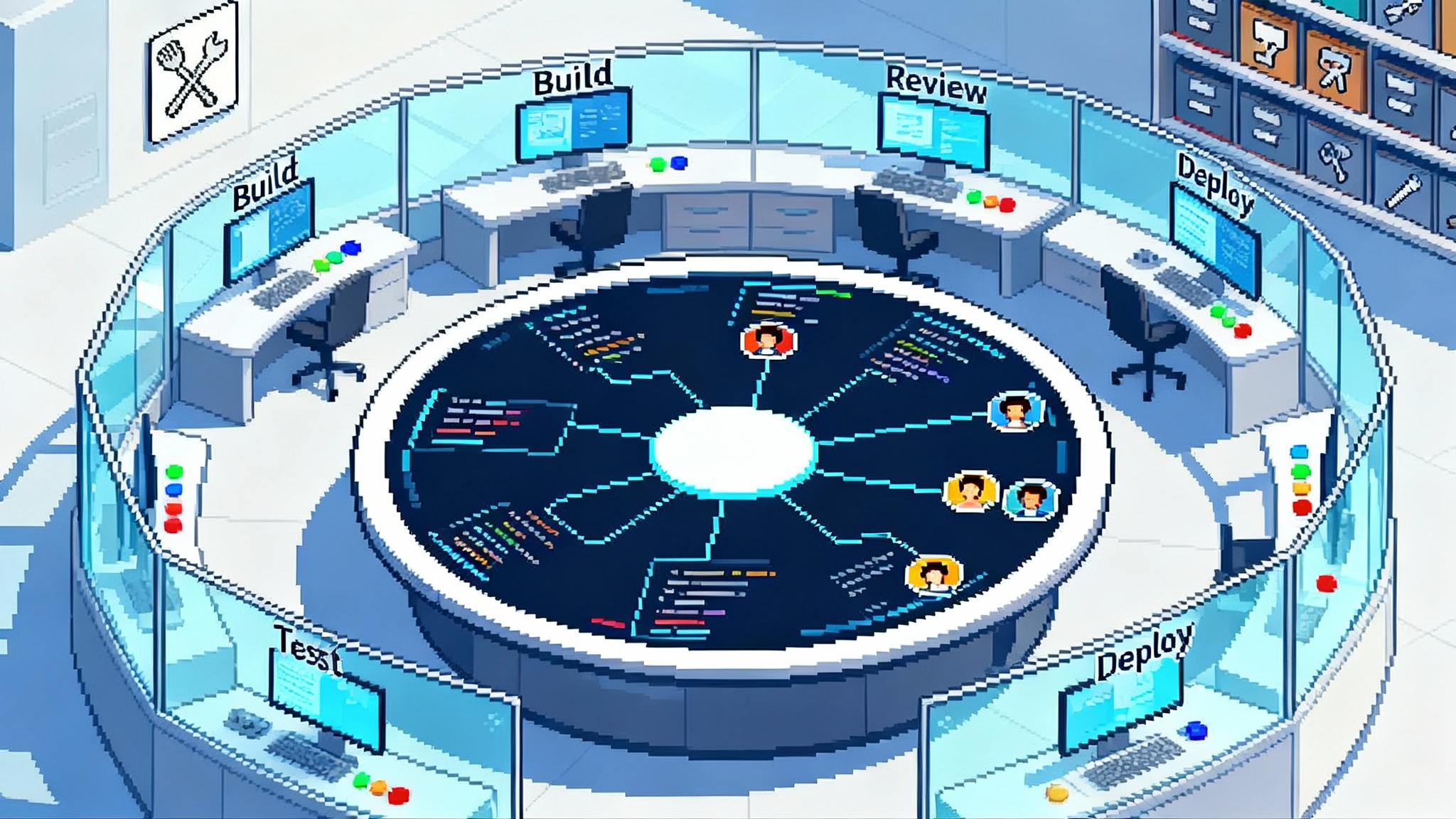

A 2026 playbook: make your site agent-ready

Here is a concrete plan for product and engineering teams. The goal is to let human-supervised agents complete useful work safely and predictably.

1) Ship structured actions

- Publish a small agent manifest at a stable path that lists the actions your site supports. Examples include AddToCart, StartReturn, BookAppointment, SubmitClaim, SaveDraft.

- For each action define required and optional fields, preconditions, side effects, and idempotency keys. Think of this as an intent contract that agents can discover.

- Encode actions in a page-level script or a well-known endpoint your team can version. Tooling such as OpenAI AgentKit for production reality can help you model actions and test flows end to end.
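
As a rough illustration of the intent contract described above, the sketch below defines a small manifest and validates an agent request against it. There is no published standard assumed here: the manifest layout, the field names, and the `validate_request` helper are all hypothetical.

```python
import json

# Hypothetical manifest layout; field names are illustrative, not a standard.
MANIFEST = {
    "version": "1",
    "actions": [
        {
            "name": "AddToCart",
            "required": ["sku", "quantity"],
            "optional": ["size"],
            "preconditions": ["item_in_stock"],
            "side_effects": ["cart_updated"],
            "idempotency_key": "cart_id+sku",
        },
        {
            "name": "SaveDraft",
            "required": ["form_id"],
            "optional": [],
            "preconditions": [],
            "side_effects": ["draft_persisted"],
            "idempotency_key": "form_id",
        },
    ],
}


def validate_request(manifest, action_name, payload):
    """Return a list of problems; an empty list means the payload fits the contract."""
    actions = {a["name"]: a for a in manifest["actions"]}
    if action_name not in actions:
        return [f"unknown action: {action_name}"]
    spec = actions[action_name]
    problems = [f"missing field: {f}" for f in spec["required"] if f not in payload]
    allowed = set(spec["required"]) | set(spec["optional"])
    problems += [f"unexpected field: {f}" for f in sorted(payload) if f not in allowed]
    return problems


print(json.dumps(MANIFEST, indent=2))  # what a stable manifest path might serve
print(validate_request(MANIFEST, "AddToCart", {"sku": "A1"}))
```

Keeping the manifest as plain data makes it easy to version and to lint in continuous integration.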

2) Expose checkout and intake endpoints

- Create clean endpoints for staged checkout and staged intake. Staged checkout means all data is validated and persisted, but payment is not authorized. Staged intake means a draft is created with a server-side identifier.

- Support idempotency and clear error codes so an agent can retry without duplicate orders.

- Provide a sandbox flag that produces a non-binding preview for planning flows. Agents can then do dry runs without touching production.
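
The staged checkout behavior above can be sketched with an in-memory store. This is a simplified stand-in for a real endpoint: the class name, response fields, and error code are assumptions made for illustration.

```python
import uuid


class StagedCheckoutStore:
    """In-memory stand-in for a staged-checkout endpoint (hypothetical)."""

    def __init__(self):
        self._by_key = {}

    def stage(self, idempotency_key, cart, sandbox=False):
        # A repeated key returns the original draft instead of creating a duplicate.
        if idempotency_key in self._by_key:
            return self._by_key[idempotency_key]
        if not cart:
            # Errors are not cached, so the agent can fix the cart and retry.
            return {"ok": False, "error_code": "CART_EMPTY", "message": "Cart has no items."}
        draft = {
            "ok": True,
            "draft_id": str(uuid.uuid4()),
            "status": "preview" if sandbox else "staged",
            "payment_authorized": False,  # staging never authorizes payment
            "items": list(cart),
        }
        if not sandbox:
            # Sandbox previews are non-binding and are not persisted.
            self._by_key[idempotency_key] = draft
        return draft


store = StagedCheckoutStore()
first = store.stage("key-1", ["shirt-m", "shirt-m"])
retry = store.stage("key-1", ["shirt-m", "shirt-m"])
print(first["draft_id"] == retry["draft_id"])
```

A production version would persist drafts server-side and scope keys per user, but the retry contract is the same.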

3) Design consent that matches risk

- Add a just-in-time approval page that summarizes the action, scope, and time limit. Keep it short and specific.

- Allow per-action caps. For example, permit up to one order under a given dollar amount or one appointment per calendar week.

- Offer a single-page consent log where users can review and revoke scopes. Make it easy to see which agent actions were taken and when.
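
One way to model per-action caps with a time limit is a small scope object that is checked before each agent action. The `ConsentScope` class and its fields below are hypothetical, shown only to make the rules above concrete.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ConsentScope:
    """A revocable approval with an amount cap, a use cap, and an expiry (hypothetical)."""
    action: str
    max_amount_cents: int
    max_uses: int
    expires_at: datetime
    uses: int = 0
    revoked: bool = False

    def check(self, action, amount_cents, now):
        """Return (allowed, reason); counts the use only when allowed."""
        if self.revoked:
            return False, "scope revoked"
        if now >= self.expires_at:
            return False, "scope expired"
        if action != self.action:
            return False, "action outside scope"
        if amount_cents > self.max_amount_cents:
            return False, "amount over cap"
        if self.uses >= self.max_uses:
            return False, "use limit reached"
        self.uses += 1
        return True, "ok"


now = datetime(2026, 1, 5, 12, 0, tzinfo=timezone.utc)
scope = ConsentScope("PlaceOrder", max_amount_cents=5000, max_uses=1,
                     expires_at=now + timedelta(minutes=15))
print(scope.check("PlaceOrder", 4200, now))
print(scope.check("PlaceOrder", 4200, now))
```

The returned reason strings are the kind of entry a consent log could display to the user.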

4) Make telemetry agent-observable

- Emit structured events that describe state transitions in the flow: FormValidated, AddressNormalized, InventoryConfirmed, DraftSaved, PaymentReady, Completed. Include human-readable messages that an agent can quote back.

- Provide an event stream via a JavaScript data layer and duplicate high-value events in response headers so headless flows can see them.

- Tie events to a stable correlation id so agents can link steps across navigations.
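
A minimal sketch of such events follows, using the state names listed above. The event shape and the `X-Flow-State` and `X-Correlation-Id` header names are assumptions chosen for illustration, not an established convention.

```python
import json
import uuid
from datetime import datetime, timezone

FLOW_STATES = [
    "FormValidated", "AddressNormalized", "InventoryConfirmed",
    "DraftSaved", "PaymentReady", "Completed",
]


def new_correlation_id():
    # One id per task, reused across every navigation in the flow.
    return str(uuid.uuid4())


def make_event(state, correlation_id, message):
    """Build a structured event with a human-readable message an agent can quote."""
    if state not in FLOW_STATES:
        raise ValueError(f"unknown flow state: {state}")
    return {
        "event": state,
        "correlation_id": correlation_id,
        "message": message,
        "ts": datetime.now(timezone.utc).isoformat(),
    }


def as_response_headers(event):
    # Hypothetical header names that mirror high-value events for headless flows.
    return {"X-Flow-State": event["event"], "X-Correlation-Id": event["correlation_id"]}


cid = new_correlation_id()
event = make_event("DraftSaved", cid, "Your draft was saved. Nothing has been charged.")
print(json.dumps(event))
print(as_response_headers(event))
```

The same dictionary could be pushed to a JavaScript data layer on the page and echoed in headers on the server response.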

5) Tune authentication for supervised agents

- Prefer short-lived tokens and scoped sessions. A staged checkout scope should not grant shipping address changes or password edits.

- Support step-up challenges at the point of money movement. Consider one-time passcodes or platform wallets such as Apple Pay. Have a fallback path that an agent can prepare but the user must execute.

- Publish a refresh policy. If an agent loses auth mid-flow, it should know whether to retry, save draft, or hand back.
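
The scoping and refresh rules above can be sketched with a signed, short-lived token built from the Python standard library. This is an illustrative toy, not a replacement for an established token format; the scope names, the hard-coded secret, and the `allow`, `reject`, and `hand_back` outcomes are all assumptions.

```python
import base64
import hashlib
import hmac
import json

SECRET = b"demo-secret-change-me"  # placeholder; load from secure config in practice


def issue_token(scopes, ttl_seconds, now):
    """Sign a payload carrying the granted scopes and an expiry timestamp."""
    payload = json.dumps({"scopes": sorted(scopes), "exp": now + ttl_seconds}).encode()
    body = base64.urlsafe_b64encode(payload).decode()
    sig = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def authorize(token, required_scope, now):
    """Return 'allow', 'reject', or 'hand_back' (expired: save draft and return control)."""
    body, _, sig = token.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return "reject"
    claims = json.loads(base64.urlsafe_b64decode(body))
    if now >= claims["exp"]:
        return "hand_back"
    return "allow" if required_scope in claims["scopes"] else "reject"


token = issue_token({"checkout:stage"}, ttl_seconds=300, now=1000)
print(authorize(token, "checkout:stage", now=1100))
print(authorize(token, "account:password", now=1100))
print(authorize(token, "checkout:stage", now=1300))
```

Returning a distinct outcome for expiry gives the agent an unambiguous signal to stop and hand control back rather than retry blindly.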

6) Engineer for resiliency

- Use deterministic form field names and labels. Avoid content that shifts or disappears without clear triggers.

- Offer explicit errors and recovery suggestions. Instead of "Something went wrong," emit "Email already in use" and suggest a password reset or an alternate email.

- Separate business logic from presentational quirks. Agents can learn patterns faster when logic is consistent.
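
For the explicit-error point above, a small catalog that pairs a machine-parsable code with a human-readable message and recovery suggestions might look like the sketch below. The codes and the payload fields are hypothetical.

```python
# Hypothetical error catalog; codes and fields are illustrative.
ERROR_CATALOG = {
    "EMAIL_IN_USE": {
        "message": "Email already in use.",
        "suggestions": ["Reset your password", "Use a different email"],
        "retryable": False,
    },
    "INVENTORY_TIMEOUT": {
        "message": "Inventory check timed out.",
        "suggestions": ["Retry in a few seconds"],
        "retryable": True,
    },
}


def error_payload(code):
    """Return a structured error; unknown codes still get a parsable fallback."""
    entry = ERROR_CATALOG.get(code)
    if entry is None:
        return {"code": "UNKNOWN", "message": "Something went wrong.",
                "suggestions": [], "retryable": False}
    return {"code": code, **entry}


print(error_payload("EMAIL_IN_USE"))
```

The `retryable` flag lets an agent decide between retrying and handing the problem back to the user.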

7) Make pages read like data

- Add structured markup for products, prices, availability, and policies. Consistency helps both search and agents.

- Provide semantic hints for important buttons. Use descriptive aria-labels and a stable order of operations.

- If areas should not be automated, publish a clear robots-for-agents signal. A simple meta tag is not a security barrier, but it sets expectations and reduces accidental misuse.
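
As one example of structured markup, the sketch below renders schema.org Product data as a JSON-LD script tag. The schema.org vocabulary is real; the `product_jsonld` helper and the sample product are made up for illustration.

```python
import json


def product_jsonld(name, sku, price, currency, in_stock):
    """Render a schema.org Product with a single Offer as a JSON-LD script tag."""
    availability = "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(product_jsonld("Medium Shirt", "SH-M-001", 19.99, "USD", True))
```

Generating the markup from the same record that drives the visible price keeps the two from drifting apart.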

8) Build guardrails for money movement

- Require explicit confirmation for final payment. Consider a separate confirmation origin to prevent clickjacking.

- Support a temporary hold state so agents can stage an order and reserve inventory without charging.

- Issue a receipt payload that agents can store or forward to the user email, including a machine-readable schema.
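
These guardrails can be expressed as a small state machine in which an agent may stage and hold an order, but only an explicit user confirmation moves it to paid. The state names and the `transition` helper are hypothetical.

```python
# Hypothetical order states and the transitions allowed between them.
ALLOWED = {
    "staged": {"held", "cancelled"},
    "held": {"paid", "released"},
    "paid": set(),
    "released": set(),
    "cancelled": set(),
}


def transition(order, new_state, confirmed_by_user=False):
    """Return a copy of the order in the new state, enforcing the payment guardrail."""
    if new_state not in ALLOWED.get(order["state"], set()):
        raise ValueError(f"cannot go from {order['state']} to {new_state}")
    if new_state == "paid" and not confirmed_by_user:
        # Final payment always needs explicit user confirmation.
        raise PermissionError("payment requires explicit user confirmation")
    return {**order, "state": new_state}


order = {"id": "o-1", "state": "staged", "total_cents": 3998}
held = transition(order, "held")  # an agent can reserve inventory
paid = transition(held, "paid", confirmed_by_user=True)  # only the user can pay
print(held["state"], paid["state"])
```

Because `transition` returns a new dictionary, the staged order remains available for the summary shown at approval time.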

9) Plan for returns, cancellations, and support

- Expose StartReturn and CancelAppointment as first-class actions, not hidden behind multi-step support portals.

- Provide clear eligibility and fees in structured form so the agent can warn the user before they approve.

- Emit a support-ready transcript that lists steps taken and error codes. You will save your team time when something fails.

10) Measure the right things

- Track staged conversions that later become real. Tie draft ids to completed orders and attribute appropriately.

- Monitor handoff latency. If users hesitate at the final approval page, improve clarity.

- Watch failure loops by action type and fix the underlying blockers rather than blocking agents.
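
Two of these measurements can be sketched in a few lines, assuming drafts and completed orders share a draft id and that each handoff records when the approval page was shown and when it was approved. The function names and record shapes are hypothetical.

```python
from statistics import median


def staged_conversion_rate(draft_ids, completed_orders):
    """Share of staged drafts that later became completed orders."""
    if not draft_ids:
        return 0.0
    completed = {o["draft_id"] for o in completed_orders}
    return sum(1 for d in draft_ids if d in completed) / len(draft_ids)


def median_handoff_latency(handoffs):
    """handoffs: list of (shown_at_seconds, approved_at_seconds) pairs."""
    waits = [approved - shown for shown, approved in handoffs]
    return median(waits) if waits else None


drafts = ["d1", "d2", "d3", "d4"]
orders = [{"draft_id": "d1"}, {"draft_id": "d3"}]
print(staged_conversion_rate(drafts, orders))
print(median_handoff_latency([(0, 10), (5, 45), (0, 20)]))
```

A median is used for latency so that a few abandoned sessions do not dominate the number.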

What this means for platforms and ecosystems

Retail platforms like Shopify and marketplaces like Amazon will face increasing pressure to publish action manifests and to support staged flows. Payment providers like Stripe and Adyen can benefit by making one-time, capped authorizations easy to approve. Identity providers can offer agent-aware scopes that reflect real-world tasks instead of monolithic read or write access.

Browsers and operating systems will compete on guardrails and smooth consent. The best platform will be the one that lets agents do complex work while keeping risk contained and user intent legible at every step. Google is moving in the same direction with agent-friendly checkout primitives, as seen in Google's AP2 rails for checkout, which complement Atlas by making the last mile of payment more predictable for supervised agents.

Implementation patterns that work

Teams that have already piloted agent-led flows share a few battle-tested patterns:

- Stage early, commit late. Let the agent handle everything up to the irreversible action. Keep the irreversible step small and well labeled.

- Prefer text to pixels. Agents read the DOM. Descriptive labels, aria attributes, and status text are more reliable than pixel-perfect cues.

- Make retries safe. If you cannot guarantee idempotency, you cannot let an agent retry on a transient error. Design for safe repeats.

- Expose state in headers. Response headers that echo state transitions are a simple way to make headless sequences legible.

- Summarize for humans. Build a summary component that the agent can populate and the user can bless. This is where trust is won.

Anti-patterns to avoid

A few traps will waste time and erode trust:

- Hidden navigation. If your flow loads critical steps inside iframes or off-screen elements without clear triggers, expect brittle automation.

- Surprise side effects. Clicking "Next" should not silently subscribe the user, resell data, or charge fees. Surprises will cause agent vendors to block your domain.

- Monolithic permissions. Do not ask for a single "Accept all" scope that covers unrelated actions. Split scopes to match intent.

- Opaque failures. A 500 with no message forces an agent to guess. Every failure should include a human-readable explanation and a machine-parsable code.

What to build next

- Agent-aware manifests and validators. Open source tools that lint your action definitions and run cross-browser checks.

- Handoff components. Widgets that show what the agent staged and let users adjust before approval.

- Sandboxes for dry runs. Test harnesses that simulate your production flows with realistic errors and inventory behaviors.

- Consent logs with diff views. Show exactly what changed and what the agent did, step by step.

- Progressive disclosure prompts. Help the agent ask for only what it needs, when it needs it.

The bottom line

Atlas makes the browser a place where software can act under human supervision. That move sounds simple, but it rewires how the web gets work done. Autonomy happens inside the page. Memory keeps the routine parts fast. Micro-permissions shift power to the exact moment when a user approves the thing that matters. For commerce, that means staged carts and clean handoffs. For productivity, it means repeatable wins on the messy web you already have. For security, it means new guardrails and clearer contracts.

Teams that adapt early will design their flows around actions, not pages, and around approval, not attention. The web has always been a giant set of forms and buttons. In 2026 those forms will start talking to agents. The winners will be the sites that know exactly what they want those agents to do, how to let them do it safely, and how to hand control back to the humans who asked for the work.