ChatGPT Agent is the first mainstream computer-using AI

On July 17, 2025, OpenAI turned chat into computer use. ChatGPT Agent researches, clicks, and acts inside a virtual computer with narration and approvals. Explore strengths, limits, and how to put it to work.

The day the cursor started working for you

On July 17, 2025, OpenAI moved the agent conversation from lab demos to daily life. ChatGPT now runs tasks on its own virtual computer, navigating sites, filling forms, running code, and packaging results without you babysitting every click. In practical terms, it is the first broadly available agent that can both think and use a computer on your behalf.

OpenAI calls this capability ChatGPT Agent. It unifies two threads that had matured for months: Operator’s browser actions, which let the model click, scroll, and submit, and deep research, a multi-step mode that plans investigations and cites its sources. The launch connects those strengths into one permissioned loop that plans, acts, checks, and presents, all inside a single conversation. For the official framing, see OpenAI’s launch post, Introducing ChatGPT Agent.

What changed on July 17

Before this release, ChatGPT could browse and generate outputs, but it relied on you to glue the steps together. The July launch added a unified agentic mode that does the gluing. It decides when to research, when to open sites, when to run code, and when to ask your permission to proceed. You stay in the loop through on-screen narration of its actions, and you can pause or take over at any time.

The practical difference is scope. You no longer ask for a list of hotels and then click through yourself. You ask for a weekend trip within a budget and constraints, and the agent researches options, drafts an itinerary, opens the booking sites, and prepares to check out after confirming details like dates and card choice. The same pattern applies across knowledge work and personal tasks.

What ChatGPT Agent does well today

Think of early strengths as four families of workflows. These are the places where the agent already feels like a reliable coworker.

1) Calendar and inbox triage

- Pull upcoming meetings, highlight conflicts, and propose moves with suggested times.

- Draft summaries of important threads and surface unaddressed requests.

- Prepare a daily brief that ties calendar items to recent company news or project docs.

2) Shopping and booking flows

- Research options against constraints like price windows, loyalty programs, room setup, or dietary needs.

- Navigate checkout forms, ask you to log in, and confirm quantities or shipping.

- Build a shopping list and place a grocery order that matches a meal plan for a set number of guests.

3) Report creation and analysis

- Synthesize findings across many sources, then turn the result into a shareable doc or slide deck.

- Track metrics in recurring updates, retrieving data from connected sources and refreshing charts on a schedule.

- Produce competitive briefs or vendor comparisons, with citations and an appendix of source snippets when you need auditability.

4) Code and spreadsheet workflows

- Inspect a repository, summarize recent changes, draft small fixes, and open merge requests after tests pass.

- Import CSVs or query a warehouse connector, clean messy data, and deliver a working spreadsheet with formulas and conditional formatting.

- Build quick scripts, run them in the sandbox, and drop the outputs into your workspace for review. For perspective on developer workflows, see Copilot’s coding agent goes GA.

These strengths share a pattern. They combine multi-step reasoning with concrete clicks and keystrokes, and they end in artifacts you can inspect. The agent is at its best when goals are clear, data is available, and the final action requires your explicit permission.

How the unified loop works

The agent operates like an intern who learned to narrate their work. You describe the task. The agent proposes a plan, starts acting, and shows what it is doing in real time. When the next step has material consequences, such as spending money or sending a message, it requests confirmation. If a site needs authentication, the agent hands control to you to log in securely, then resumes.

Under the hood, the unification matters. Research planning provides the backbone for evidence gathering. Browser actions give the agent hands and eyes on the web. ChatGPT stitches them together with conversational checks that keep you aligned. The result is a single loop that can switch between reading and doing without you translating instructions at every turn.
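A minimal Python sketch can make the loop concrete. It is not OpenAI’s implementation, and every name in it is hypothetical: the step kinds (`read`, `act`, `consequential`, `login`), the `approve` and `take_over` callbacks standing in for the on-screen buttons, and the narration strings. It shows only the control flow described above: narrate each step, pause for approval before consequential actions, and hand control to the human for sign-in.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    description: str
    kind: str  # hypothetical kinds: "read", "act", "consequential", "login"


def run_plan(
    plan: List[Step],
    approve: Callable[[Step], bool],
    take_over: Callable[[Step], None],
) -> List[str]:
    """Run a plan step by step and return the narration shown to the user."""
    narration: List[str] = []
    for step in plan:
        if step.kind == "login":
            # Sensitive step: the human takes over, then the agent resumes.
            narration.append(f"Handing control to you: {step.description}")
            take_over(step)
            narration.append("Resuming after sign-in")
        elif step.kind == "consequential":
            # Spending, sending, or deleting waits for explicit approval.
            narration.append(f"Requesting approval: {step.description}")
            if not approve(step):
                narration.append("Stopped: approval declined")
                break
            narration.append(f"Done: {step.description}")
        else:
            # Reading and ordinary clicks proceed, but are still narrated.
            narration.append(f"Done: {step.description}")
    return narration


# Hypothetical trip-booking plan where the user declines the payment step.
plan = [
    Step("Search hotels under $200 per night", "read"),
    Step("Sign in to the booking site", "login"),
    Step("Fill in guest details", "act"),
    Step("Pay with the saved card", "consequential"),
]
log = run_plan(plan, approve=lambda s: False, take_over=lambda s: None)
for line in log:
    print(line)
```

The design choice worth noting is that approval attaches to individual steps, not to the whole task, so low-risk reading never blocks on the user.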

Reliability and safety guardrails

Two user-facing protections define the experience.

- On-screen narration. You see a running description of each step. When the agent opens a page, scrolls, or selects a form field, it tells you what it is doing and why. This helps you catch wrong turns quickly, and it shows you how the agent reads a site or document.

- Action confirmation. For any step with real-world consequences, you decide. The agent asks before it buys, sends, or deletes, and you can interrupt or take manual control at any step.

OpenAI pairs those with platform controls. Browser actions run inside a virtual computer that is isolated from your machine. Sensitive steps like sign-in require you to take over. Research outputs keep citations so you can spot-check them. Usage is rate-limited by plan, and OpenAI ships updates iteratively with public release notes. For availability, plan limits, and the Operator integration timeline, see the ChatGPT agent release notes.

Early usage and limits, compared to earlier demos

Plenty of agents have been demoed over the last two years. Many were impressive, but they struggled to graduate from scripted videos to repeatable daily use. The introduction of ChatGPT Agent changes the baseline in three ways.

- Distribution. It rides inside the ChatGPT product that millions already use. You do not need a new app or a developer setup. You select agent mode, describe a task, and go.

- Unification. Earlier tools tended to pick either research or action. The new mode blends both by default, so it can read ten sources, fill a form, and produce a deck without changing tools.

- Control. Narration and confirmations move the experience from magic show to accountable assistant. You can watch the process unfold, correct it mid-run, and approve the moments that matter.

There are boundaries. The agent is still slower than a focused human at quick, one-step tasks. It can misread a confusing layout or a non-standard control. Some sites block automation or require multi-factor steps the agent cannot complete alone. Long-running tasks may time out. And like any model, it can draw the wrong conclusion when sources disagree or when a page changes during the run.

OpenAI also enforces usage limits that shape adoption. Higher tiers receive larger allowances than entry tiers, with the option to extend via credits. In practice, that means you pick high-value tasks first and automate the boring middle of your week before you ask the agent to do everything.

The emerging agent UX patterns

The ChatGPT Agent interface is a blueprint worth copying. If you are building on top of agents or designing internal tools, expect these patterns to become standard.

- Live narration panel. A visible step list builds trust and doubles as a debugging surface. People want to see what just happened and what comes next.

- Permission gates. Buttons for approve, edit, or take over at critical steps anchor accountability.

- Evidence view. For research outputs, inline citations and source excerpts reduce guesswork.

- Rerun and branch. The ability to tweak a parameter and rerun from a given step shortens the time to a correction.

- Human-in-the-loop by design. The system is collaborative, not purely autonomous. It should feel easy to pause, intervene, and resume.

These patterns are not nice-to-haves. They are the only way people will let agents touch money, data, and customers at scale.
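The rerun-and-branch pattern is simple to sketch if every run is recorded as a list of steps with their parameters and outputs. The Python below is a hypothetical illustration, not how ChatGPT Agent stores runs: `RecordedStep`, `branch_from`, and the `run_fn` executor are invented names. It keeps everything before the chosen step, applies the tweaked parameters, and re-executes only from that point on.

```python
from dataclasses import dataclass, replace
from typing import Any, Callable, Dict, List

Executor = Callable[[str, Dict[str, Any]], Any]


@dataclass
class RecordedStep:
    name: str
    params: Dict[str, Any]
    output: Any = None


def branch_from(
    run: List[RecordedStep],
    index: int,
    overrides: Dict[str, Any],
    run_fn: Executor,
) -> List[RecordedStep]:
    """Return a new run that reuses steps before `index` and re-executes the rest.

    The original run is left untouched so both branches can be compared.
    """
    kept = run[:index]
    # Merge overrides into a new params dict instead of mutating the old one.
    tweaked = replace(run[index], params={**run[index].params, **overrides})
    to_redo = [tweaked] + run[index + 1:]
    redone = [replace(s, output=run_fn(s.name, s.params)) for s in to_redo]
    return kept + redone


calls: List[str] = []


def fake_run(name: str, params: Dict[str, Any]) -> str:
    calls.append(name)  # record which steps actually execute
    return f"{name} with {params}"


original = [
    RecordedStep("search_hotels", {"max_price": 200}, "cached search results"),
    RecordedStep("shortlist", {"top": 3}, "3 hotels"),
    RecordedStep("draft_itinerary", {}, "itinerary v1"),
]
branched = branch_from(original, 1, {"top": 5}, fake_run)
print(calls)  # ['shortlist', 'draft_itinerary']; the search is reused
```

A real agent would also feed each step’s output into the next one; the sketch leaves that data flow out to keep the branching logic visible.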

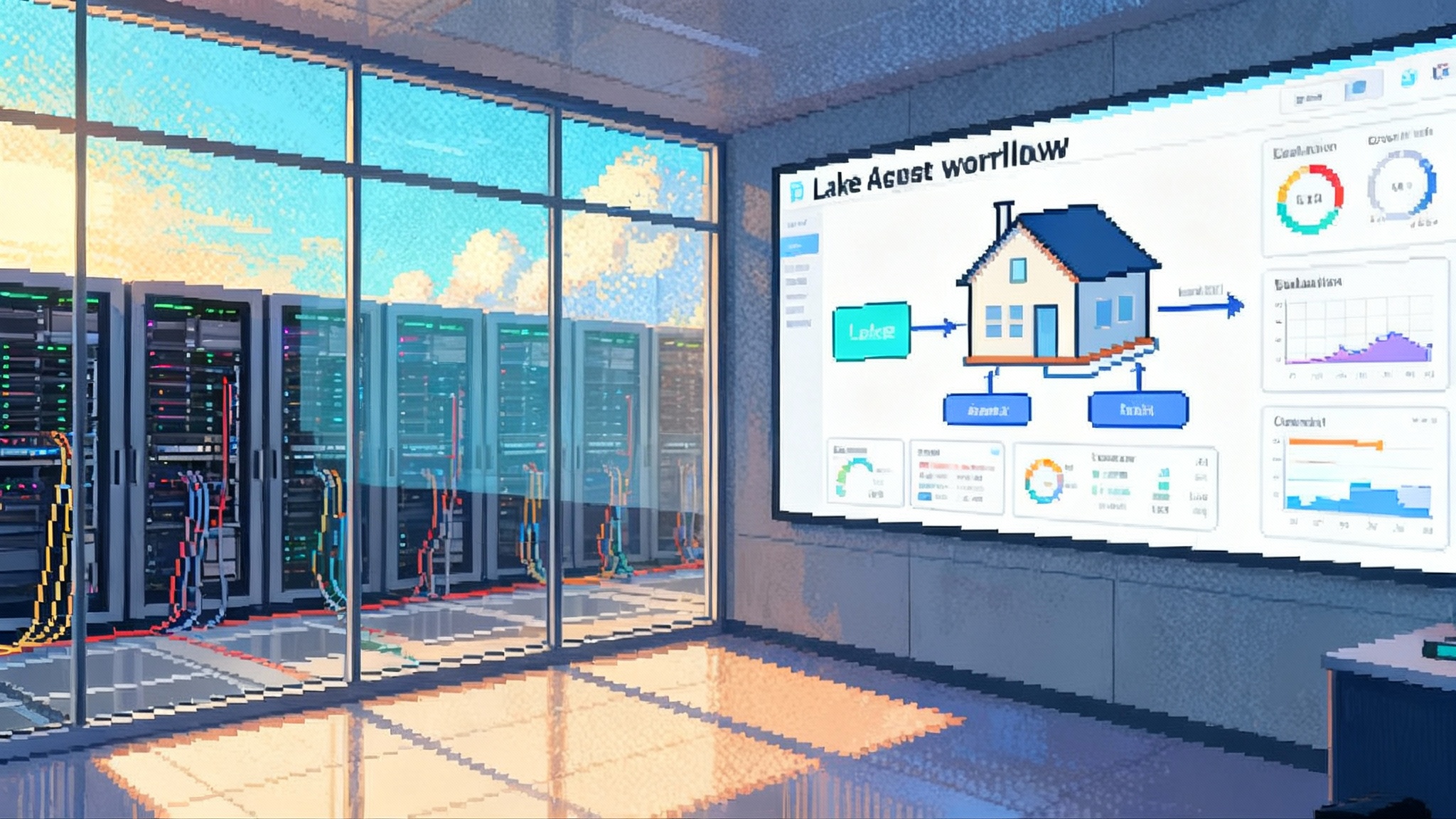

AgentOps is the new DevOps

As soon as an agent acts in the world, you need operations. Reliability is not just about model accuracy. It is about end-to-end task completion with clear failure modes. A workable AgentOps stack should include:

- Traces and replay for every run, including the DOM state or API response that caused a decision.

- Deterministic test flows for critical surfaces, such as cart checkout or calendar edits, with golden pages and staged data.

- Safety rules that block specific actions until the agent has satisfied a checklist, such as matching a vendor ID before paying, or that force a handoff when a CAPTCHA appears.

- Step-level metrics that track planning depth, action success, retries, and human interventions.

- Clear rollback paths when the agent encounters a hard block or a high risk step.
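The safety-rule item is easiest to reason about as a precondition check that runs before an action executes. The sketch below is hypothetical: `SafetyRule`, `evaluate`, and the context keys (`vendor_id`, `approved_vendor_id`, `captcha_detected`) are illustrative names, and a real system would populate that context from the page state or API response recorded in the trace.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Check = Callable[[Context], bool]


@dataclass
class SafetyRule:
    action: str               # the action type this rule guards, e.g. "pay"
    checks: Dict[str, Check]  # named preconditions that must all hold


def evaluate(rules: List[SafetyRule], action: str, ctx: Context) -> Tuple[bool, List[str]]:
    """Return (allowed, names of failed checks) for a proposed action."""
    failed: List[str] = []
    for rule in rules:
        if rule.action != action:
            continue  # rules for other actions do not apply
        for name, check in rule.checks.items():
            if not check(ctx):
                failed.append(name)
    return (not failed, failed)


rules = [
    SafetyRule("pay", {
        # A missing vendor ID must never count as a match.
        "vendor_id_matches": lambda c: c.get("vendor_id") is not None
        and c.get("vendor_id") == c.get("approved_vendor_id"),
        "no_captcha": lambda c: not c.get("captcha_detected", False),
    }),
]

allowed, failed = evaluate(
    rules, "pay",
    {"vendor_id": "V-17", "approved_vendor_id": "V-17", "captcha_detected": True},
)
print(allowed, failed)  # False ['no_captcha']: block the payment and hand off
```

Returning the names of failed checks, rather than a bare boolean, gives the trace a readable reason for every block, which is what a rollback path or a human reviewer needs.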

Teams that instrument these pieces will learn faster and ship safer. They will also discover higher-return tasks, because traces show where time is lost and where human approvals bottleneck the loop. For a platform lens on control and trust, see Agentforce 3’s focus on control.
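To show how traces turn into the step-level metrics listed above, here is a small Python sketch. The `TraceEvent` fields are assumptions about what a trace might record, not a schema from any particular product, and the events are toy data. Planning depth is left out because it depends on how a given agent represents its plan.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TraceEvent:
    run_id: str
    step: str               # hypothetical step label, e.g. "fill_form"
    succeeded: bool
    retries: int
    human_intervened: bool
    approval_wait_s: float  # seconds spent waiting on a human approval


def summarize(events: List[TraceEvent]) -> Dict[str, float]:
    """Aggregate per-step outcomes into run-level rates."""
    n = len(events)
    if n == 0:
        return {}
    return {
        "action_success_rate": sum(e.succeeded for e in events) / n,
        "retries_per_step": sum(e.retries for e in events) / n,
        "intervention_rate": sum(e.human_intervened for e in events) / n,
    }


def approval_bottlenecks(events: List[TraceEvent]) -> List[Tuple[str, float]]:
    """Total approval wait per step label, longest first."""
    wait: Dict[str, float] = defaultdict(float)
    for e in events:
        wait[e.step] += e.approval_wait_s
    return sorted(wait.items(), key=lambda kv: kv[1], reverse=True)


events = [
    TraceEvent("r1", "search", True, 0, False, 0.0),
    TraceEvent("r1", "fill_form", False, 2, True, 0.0),
    TraceEvent("r1", "checkout", True, 0, False, 95.0),
    TraceEvent("r2", "search", True, 1, False, 0.0),
]
print(summarize(events))
# {'action_success_rate': 0.75, 'retries_per_step': 0.75, 'intervention_rate': 0.25}
print(approval_bottlenecks(events)[0])  # ('checkout', 95.0)
```

Sorting steps by accumulated approval wait is one simple way to find where human gates, rather than the model, are slowing the loop down.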

Connectors and the integration race

Agents are only as useful as the data and controls they can reach. The launch emphasizes authenticated connectors for calendars, inboxes, files, and developer tools. That unlocks the compound tasks that feel most valuable, like drafting a client brief that ties news, CRM notes, and upcoming meetings, or preparing a weekly metrics packet that pulls from analytics and saves to a team folder.

For the ecosystem, this sets the incentive. Vendors will compete to expose cleaner, safer connectors with fine-grained permissions, readable schemas, and simulator environments for testing. Expect new roles to emerge around connector quality, with uptime SLAs and versioning policies that announce deprecation windows instead of silently breaking agent flows. For commerce and real-world transactions, Google’s work on agent payment protocols shows why better rails matter. See how AP2 unlocks agent commerce.

Enterprise teams should treat connectors as part of identity and access strategy. Use SSO, scope tokens to least privilege, and put agents in groups that mirror job functions. Build audit trails that tie agent actions to human requesters. That way, the approvals you see on screen map cleanly to what compliance needs to see in a review.
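A least-privilege grant plus an audit record for every attempted action is enough to show the shape of this. The Python sketch below is hypothetical throughout: the scope strings such as `calendar.read` are invented labels rather than any provider’s real OAuth scopes, and `authorize` stands in for whatever policy check your identity platform performs.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class AgentGrant:
    agent_id: str
    group: str              # mirrors a job function, e.g. "finance-analyst"
    scopes: FrozenSet[str]  # least-privilege scopes granted to this agent


@dataclass
class AuditRecord:
    timestamp: str
    agent_id: str
    group: str
    requested_by: str           # the human who asked for the task
    approved_by: Optional[str]  # who clicked approve, if anyone
    scope: str
    allowed: bool


def authorize(
    grant: AgentGrant,
    scope: str,
    requested_by: str,
    approved_by: Optional[str],
    audit_log: List[AuditRecord],
) -> bool:
    """Allow only granted scopes, and log every attempt, allowed or not."""
    allowed = scope in grant.scopes
    audit_log.append(AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        agent_id=grant.agent_id,
        group=grant.group,
        requested_by=requested_by,
        approved_by=approved_by,
        scope=scope,
        allowed=allowed,
    ))
    return allowed


grant = AgentGrant("agent-7", "finance-analyst",
                   frozenset({"calendar.read", "drive.write"}))
audit_log: List[AuditRecord] = []
authorize(grant, "calendar.read", "dana@example.com", None, audit_log)  # True
authorize(grant, "email.send", "dana@example.com", "dana@example.com", audit_log)  # False
print(json.dumps([asdict(r) for r in audit_log], indent=2))  # export for review
```

Logging denied attempts as well as allowed ones is deliberate: a reviewer usually cares most about what the agent tried to do outside its grant.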

Near term enterprise adoption

Enterprises will move in concentric circles, starting with low risk and high repetition. Good first targets include internal reporting, data cleanup, spreadsheet maintenance, procurement comparisons, and inbox or calendar hygiene. These tasks already have human approvals baked in and clear success criteria.

From there, teams can graduate to customer-facing workflows with human review checkpoints, such as triaging support tickets, scheduling follow-ups, or importing vendor quotes. The trick is to instrument everything and to set conservative limits on spend, volume, and scope while the agent learns your environment.
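Conservative limits can be enforced as a per-run guard that the agent consults before each action. This is a hypothetical sketch, not a feature of ChatGPT Agent: `RunLimits`, `LimitGuard`, the domain allow-list, and the dollar figures are illustrative. Any result other than `"ok"` is meant to pause the run for human review.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class RunLimits:
    max_spend_usd: float
    max_actions: int
    allowed_domains: Set[str]


@dataclass
class LimitGuard:
    limits: RunLimits
    spent_usd: float = 0.0
    actions: int = 0

    def check(self, domain: str, cost_usd: float = 0.0) -> str:
        """Return "ok" and record the action, or return why it must pause."""
        if domain not in self.limits.allowed_domains:
            return f"out of scope: {domain}"
        if self.actions + 1 > self.limits.max_actions:
            return "action volume limit reached"
        if self.spent_usd + cost_usd > self.limits.max_spend_usd:
            return "spend limit would be exceeded"
        self.actions += 1
        self.spent_usd += cost_usd
        return "ok"


guard = LimitGuard(RunLimits(max_spend_usd=50.0, max_actions=3,
                             allowed_domains={"vendor.example.com"}))
print(guard.check("vendor.example.com", 30.0))  # ok
print(guard.check("vendor.example.com", 30.0))  # spend limit would be exceeded
print(guard.check("other.example.com"))         # out of scope: other.example.com
```

Blocked checks do not consume budget, so a paused step can be approved and retried without double counting.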

Procurement and security teams will ask familiar questions. Where does the agent run? How do we control access? What does it store? How are credentials handled? The launch answers many of these with the virtual computer approach, explicit logins for sensitive steps, and the visibility that narration provides. Your job is to map those answers to your policies and adjust the approvals to your risk tolerance.

What to build right now

If you are a product leader or founder, the window just opened for focused, agent-powered products that feel native to this new interaction model.

- Specialize on a vertical where the agent can finish the job, not only draft. Closing the loop is the value.

- Wrap a small number of high-value connectors with great UX and rich traces. Fewer, better integrations beat a long list that half works.

- Invest in simulation. Build fake but realistic sites and data so you can regression test the agent at the DOM and API level.

- Match approvals to mental models. People prefer to approve baskets of related actions instead of dozens of micro-steps.

- Deliver clear artifacts. End every run with slides, spreadsheets, or pull requests that are ready to edit.

- Instrument the path to success. Measure time to first approval, retries per step, and rate of human interventions.
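Approving baskets instead of micro-steps mostly comes down to grouping pending actions before asking. In this hypothetical Python sketch, `PendingAction`, its `basket` key, and the `ask` callback (standing in for an approval dialog) are illustrative names; the point is that four pending actions produce two approval prompts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PendingAction:
    basket: str  # grouping key, e.g. "grocery order"
    description: str
    cost_usd: float = 0.0


def group_into_baskets(actions: List[PendingAction]) -> Dict[str, List[PendingAction]]:
    """Group actions by basket, preserving first-seen order (dicts keep insertion order)."""
    baskets: Dict[str, List[PendingAction]] = {}
    for a in actions:
        baskets.setdefault(a.basket, []).append(a)
    return baskets


def approve_baskets(
    actions: List[PendingAction],
    ask: Callable[[str, List[PendingAction]], bool],
) -> List[PendingAction]:
    """Ask once per basket and return only the actions cleared to run."""
    cleared: List[PendingAction] = []
    for name, items in group_into_baskets(actions).items():
        if ask(name, items):
            cleared.extend(items)
    return cleared


prompts: List[str] = []


def ask(name: str, items: List[PendingAction]) -> bool:
    total = sum(i.cost_usd for i in items)
    prompts.append(f"{name}: {len(items)} action(s), ${total:.2f}")
    return name != "calendar"  # the user approves groceries, declines the calendar move


actions = [
    PendingAction("grocery order", "Add 2 kg rice", 4.50),
    PendingAction("grocery order", "Add a dozen eggs", 3.20),
    PendingAction("calendar", "Move the 1:1 to Thursday"),
    PendingAction("grocery order", "Check out with the saved card"),
]
cleared = approve_baskets(actions, ask)
print(prompts)  # ['grocery order: 3 action(s), $7.70', 'calendar: 1 action(s), $0.00']
print(len(cleared))  # 3
```

Counting prompts per run, as this sketch does with `prompts`, is also a cheap way to measure whether approvals are becoming a bottleneck.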

The platform race after July 17

OpenAI’s move reframes the competition. The starting line is no longer chat that can browse. It is a general purpose agent that can research, click, and deliver. That shifts attention from raw model scores to task completion, approvals, and connector quality. It also puts UX front and center, because narration, control, and artifacts are what make this safe and useful.

Expect every major platform to respond with clearer agent modes, richer connectors, and more visible safety affordances. Expect a wave of AgentOps tooling to help developers ship reliable flows, along with developer education on tracing, testing, and approvals. And expect the ecosystem to cross-pollinate: ideas pioneered in one product will spread quickly to others, as we already see with coding agents. If you are tracking this evolution, pair this article with Copilot’s coding agent goes GA for a developer view of where agentic work is heading.

The bottom line

ChatGPT Agent marks the moment when the cursor began to carry its own weight. It can already triage your day, research decisions, buy what you need with your say-so, ship a report, and hand you a working spreadsheet. It tells you what it is doing, asks before it acts, and leaves you with artifacts you can inspect.

There will be missteps as sites change and use cases grow. There will be weeks when a release makes a workflow worse before it gets better. But the direction is set. An agent that can plan and act is now part of normal software. The teams that learn to collaborate with it, and to instrument it, will move faster than those that wait for perfection.

You do not have to wait for a perfect agent to get value. You need tasks that matter, approvals that fit, and a habit of watching the narration as it learns your world. Start small, measure, and let the cursor do more every month.