Databricks Agent Bricks makes AgentOps an automated pipeline

Agent Bricks debuted in Beta on June 11, 2025. A September 25 partnership with OpenAI brought frontier models into Databricks. Learn how it turns hand-tuned prompts into auto-evaluated, MLflow-traced agent pipelines you can operate at scale.

Breaking: Agent Bricks turns agent building into a pipeline

On June 11, 2025, Databricks announced Agent Bricks in Beta, positioning a new way to create production-grade agents on enterprise data. The value proposition is straightforward: describe the task, connect governed data, and let the platform auto-generate synthetic examples, evaluations, and tuning loops that push quality and cost toward explicit targets. See the official Agent Bricks Beta announcement.

Momentum accelerated on September 25, 2025, when Databricks and OpenAI announced a multi-year partnership that brings OpenAI’s latest models into the Databricks Data Intelligence Platform and makes them first-class citizens inside Agent Bricks. The companies framed the deal as a $100 million investment that places frontier models next to governed enterprise data. Read the Databricks and OpenAI partnership announcement.

Beyond attention-grabbing press releases, the deeper shift is operational. Databricks is trying to move agent operations, often called AgentOps, from an artisanal craft to an engineering discipline. Instead of teams hand-tuning prompts and hoping they generalize, Agent Bricks bundles auto-evaluation, synthetic data generation, and MLflow 3.0 observability into a repeatable loop that looks and feels like continuous integration for agents.

From hand-tuned prompts to an automated optimization loop

Many enterprise agent projects still follow a brittle path. A team crafts prompts, trials a few examples, adds retrieval, then pilots. Performance is fuzzy, costs are unpredictable, and regressions are hard to diagnose because traces and repeatable tests are missing.

Agent Bricks flips that model by establishing a tight feedback loop that any software leader will recognize:

-

Define the objective in plain language. For example, extract key fields from invoices, validate against policy, and produce structured outputs to a target schema.

-

Attach governed data. Unity Catalog or an equivalent governance layer preserves lineage and access controls while giving the agent a curated view of relevant corpora.

-

Generate synthetic data and task-aware benchmarks. Synthetic data does not replace real data. It accelerates coverage. The system creates realistic, edge-case-rich prompts and expected outputs aligned to your domain, then blends them with curated real-world samples as you collect them.

-

Auto-evaluate with LLM judges and human-in-the-loop checks. Language model judges grade relevance, factuality, safety, and format adherence. Humans review a calibrated slice to catch subtle failures and keep judges honest.

-

Optimize for cost and quality. The loop tunes model choice, context strategy, tool usage, and step orchestration to hit the targets you set. You choose the cost and accuracy tradeoff instead of inheriting a vendor’s default.

-

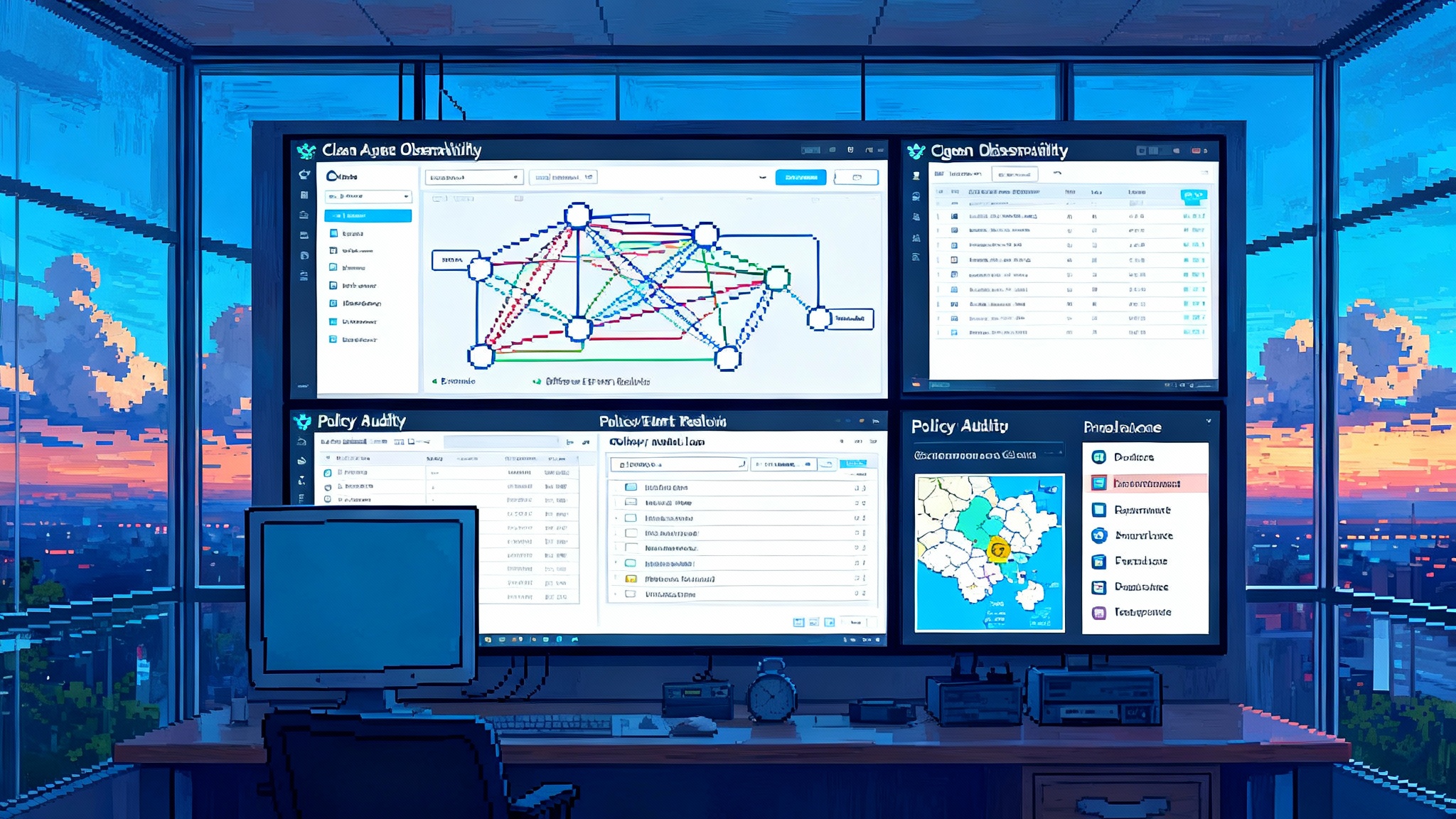

Observe and iterate with MLflow 3.0. MLflow traces record inputs, outputs, tools, latency, and cost per request. That makes it possible to reproduce failures, compare versions, quantify regressions, and feed production traces back into evaluation sets.

Think of it as standing up a factory line for agents. Prompts, tools, and retrieval are your parts bin. Synthetic data and evaluations are your gauges. MLflow is your telemetry. The outcome is not a single perfect prompt. It is a living pipeline that continually rebalances cost, accuracy, and safety as your workload and policies evolve.
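One way to make "keep judges honest" concrete is to measure how often an LLM judge agrees with human reviewers on the same sampled slice. The sketch below is a minimal illustration, not part of Agent Bricks: the function name and the sample labels are hypothetical, and it uses Cohen's kappa as one common agreement statistic that corrects for chance.

```python
from collections import Counter


def agreement_and_kappa(judge_labels, human_labels):
    """Return (raw agreement, Cohen's kappa) for two equal-length label lists."""
    if len(judge_labels) != len(human_labels) or not judge_labels:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(judge_labels)
    observed = sum(j == h for j, h in zip(judge_labels, human_labels)) / n
    judge_counts = Counter(judge_labels)
    human_counts = Counter(human_labels)
    # Agreement expected by chance if the two raters labeled independently.
    expected = sum(
        judge_counts[label] * human_counts[label] for label in judge_counts
    ) / (n * n)
    if expected == 1.0:
        # Kappa is undefined when both raters used one identical label;
        # this sketch treats that case as full agreement (an assumption).
        return observed, 1.0
    return observed, (observed - expected) / (1 - expected)


# Made-up verdicts for eight sampled items.
judge = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail"]
human = ["pass", "pass", "fail", "fail", "fail", "pass", "pass", "pass"]
agreement, kappa = agreement_and_kappa(judge, human)
```

A raw agreement rate can look healthy when almost everything passes, which is why a chance-corrected statistic is worth tracking alongside it.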

What actually changes inside the engine

To see why this matters, consider a claims processing example.

- Before Agent Bricks: A team writes a long prompt for a large language model to extract claim numbers, dates, and policy checks. It works on the first ten documents, struggles on the next fifty, sometimes hallucinates policy language, and costs spike as prompts grow.

- With Agent Bricks: The team states the task, connects a governed folder of sample claims, and lets the system generate hundreds of synthetic variations that include edge cases like missing dates, handwritten notes, ambiguous policy riders, and out-of-order pages. LLM judges score correctness and format compliance. MLflow captures traces for every run across models and settings. The pipeline discovers that a smaller model with a crisp schema and two tool calls beats a larger model with a verbose prompt, cutting cost by sixty percent while improving accuracy on the hardest cases.

Mechanically, four levers matter most:

- Data leverage: Synthetic data fills the long tail of edge cases so you can test the agent like you test software. As real-world traces arrive, they are added to the library and reweighted for relevance.

- Evaluation leverage: LLM judges and targeted metrics make quality measurable. You can draw a cost to quality curve and pick a point deliberately rather than guessing.

- Observability leverage: MLflow 3.0 traces and metrics make failure analysis fast. You see which tools, prompts, or retrieval chunks contributed to an error and why.

- Orchestration leverage: Agent Bricks can restructure a multi-step agent so expensive reasoning is used only when necessary. Cheap checks run first. Costly calls are reserved for ambiguous cases.

The net effect is a system that learns from usage. As new document types or policy rules appear, the evaluation set grows, the optimization loop retunes, and the factory keeps running.
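The orchestration lever is easiest to picture as a cascade: a cheap deterministic step answers when it is confident, and only unresolved inputs reach the expensive step. The following is a sketch under stated assumptions, not how Agent Bricks implements it. The `CLM-` claim number format, the confidence values, and `stub_model` (a stand-in for a costly model call) are all hypothetical.

```python
import re


def cascade(doc, cheap, expensive, min_confidence=0.8):
    """Try the cheap step first; escalate only when it is unsure."""
    answer, confidence = cheap(doc)
    if answer is not None and confidence >= min_confidence:
        return answer, "cheap"
    return expensive(doc), "expensive"


def regex_claim_number(doc):
    """Cheap check: confident only when exactly one claim number appears."""
    matches = set(re.findall(r"\bCLM-\d{6}\b", doc))
    if len(matches) == 1:
        return matches.pop(), 0.95
    return None, 0.0


def stub_model(doc):
    """Placeholder for an expensive model call (hypothetical)."""
    return "NEEDS_REVIEW"


clear = cascade("Claim CLM-123456 filed on 2025-03-01", regex_claim_number, stub_model)
ambiguous = cascade("See CLM-123456 and CLM-654321", regex_claim_number, stub_model)
```

Returning the route taken alongside the answer makes it straightforward to log how often the expensive path fires, which is the number that drives cost.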

A pragmatic pilot plan you can run next month

Below is a four-week plan, preceded by a Week 0 for scoping, that balances speed with rigor. Adjust the timeline to your risk tolerance and data availability.

Week 0: Scoping and guardrails

- Choose one narrow, high-value task where quality can be measured objectively. Examples include invoice extraction to a target schema, tier one support answer selection, or contract clause classification.

- Define unacceptable failure modes up front. Examples include exposing personally identifiable information outside policy and mislabeling a high-risk clause.

- Establish a baseline. Capture current manual cost per item, time to decision, and error rate. You need a baseline to quantify gains.

Week 1: Ground truth and data paths

- Build a seed ground truth of two hundred to one thousand examples with clear labels. Use real data where permitted. Redact sensitive fields if policy requires.

- Specify a target schema and validation rules. For text extraction, define each field, allowed values, and cross field checks.

- Configure governance. Lock data access through your catalog. Set role based access and audit policies before the first run.
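A target schema with validation rules can start as a plain function that returns a list of violations. The sketch below assumes a hypothetical invoice schema: the field names, the allowed currency set, and the cross-field rules are illustrative choices, not a schema the platform prescribes. Amounts are integer cents to avoid floating-point comparison problems.

```python
from datetime import date

REQUIRED_FIELDS = (
    "invoice_id", "issue_date", "due_date", "currency",
    "subtotal_cents", "tax_cents", "total_cents",
)
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # assumed allowed values


def validate_invoice(record):
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in record]
    if errors:
        return errors
    if record["currency"] not in ALLOWED_CURRENCIES:
        errors.append("currency not allowed")
    try:
        issued = date.fromisoformat(record["issue_date"])
        due = date.fromisoformat(record["due_date"])
    except (TypeError, ValueError):
        errors.append("dates must be ISO 8601 strings (YYYY-MM-DD)")
    else:
        # Cross-field check: payment cannot be due before the invoice exists.
        if due < issued:
            errors.append("due_date precedes issue_date")
    # Cross-field check: the arithmetic must reconcile.
    if record["subtotal_cents"] + record["tax_cents"] != record["total_cents"]:
        errors.append("subtotal + tax does not equal total")
    return errors


good = {
    "invoice_id": "INV-1", "issue_date": "2025-06-01", "due_date": "2025-07-01",
    "currency": "USD", "subtotal_cents": 10000, "tax_cents": 800, "total_cents": 10800,
}
bad = dict(good, due_date="2025-05-01", total_cents=10000)
```

Returning every violation rather than stopping at the first makes the validator more useful as an evaluation signal, because error types can be counted separately.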

Week 2: Evaluation harness and first optimization loop

- Stand up the evaluation suite. Include LLM judges for correctness, relevance, and safety, plus deterministic validators for schema adherence and cross field logic.

- Generate synthetic variations to fill coverage gaps. Include adversarial examples like missing fields, contradictory instructions, or noisy attachments.

- Run the first sweep. Compare models, context windows, retrieval strategies, and tool selections. Track an accuracy metric such as F1 for extraction or exact match for answer selection, alongside per item cost and latency.
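The two accuracy metrics named in the first sweep are simple to compute by hand, which helps when checking what a dashboard reports. This is a minimal sketch: it assumes predictions and references are dictionaries of field name to value, treats `None` as "field absent", and uses micro-averaged F1 over (field, value) pairs, which is one reasonable definition among several.

```python
def exact_match(predictions, references):
    """Fraction of items where the whole prediction equals the reference."""
    hits = sum(p == r for p, r in zip(predictions, references))
    return hits / len(references)


def field_f1(predictions, references):
    """Micro-averaged F1 over (field, value) pairs; None means the field is absent."""
    tp = fp = fn = 0
    for pred, ref in zip(predictions, references):
        pred_pairs = {(k, v) for k, v in pred.items() if v is not None}
        ref_pairs = {(k, v) for k, v in ref.items() if v is not None}
        tp += len(pred_pairs & ref_pairs)   # extracted and correct
        fp += len(pred_pairs - ref_pairs)   # extracted but wrong or spurious
        fn += len(ref_pairs - pred_pairs)   # expected but missed or wrong
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


references = [
    {"claim_id": "A1", "date": "2025-01-02"},
    {"claim_id": "B2", "date": "2025-02-03"},
]
predictions = [
    {"claim_id": "A1", "date": "2025-01-02"},
    {"claim_id": "B2", "date": None},  # the agent missed the date
]
em = exact_match(predictions, references)
f1 = field_f1(predictions, references)
```

Exact match penalizes the second item completely, while field-level F1 gives partial credit for the correct claim identifier. Tracking both shows whether failures are wholesale or confined to a few fields.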

Week 3: Cost to quality tuning and shadow traffic

- Draw the cost to quality frontier from your sweeps. Select the best two or three configurations for further testing.

- Push the top configuration into shadow mode against real traffic. Collect traces and human feedback. Add real mistakes back into the evaluation set.

- Introduce safety checks. Add guardrails for sensitive data fields, prompt injection, and retrieval drift. Verify auditability with end to end traces.
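The cost-to-quality frontier from the sweep is a Pareto frontier: the configurations that no other configuration beats on both cost and accuracy. A minimal sketch follows, with made-up configuration names and numbers used purely for illustration.

```python
def pareto_frontier(configs):
    """Keep configs that no cheaper-or-equal config matches or beats on accuracy.

    Each config is a (name, cost_cents, accuracy) tuple.
    """
    frontier = []
    best_accuracy = float("-inf")
    # Walk from cheapest to most expensive; on cost ties, highest accuracy first.
    for name, cost, accuracy in sorted(configs, key=lambda c: (c[1], -c[2])):
        if accuracy > best_accuracy:
            frontier.append((name, cost, accuracy))
            best_accuracy = accuracy
    return frontier


sweep = [
    ("small-plain", 0.6, 0.88),
    ("small-schema-tools", 1.0, 0.95),
    ("large-verbose", 2.5, 0.93),   # dominated: costs more, scores lower
    ("large-schema-tools", 3.0, 0.96),
]
frontier = pareto_frontier(sweep)
```

Everything off the frontier can be dropped from further testing, since a cheaper configuration already does at least as well.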

Week 4: Production gate and rollout plan

- Define a production acceptance threshold. Example criteria: exceed 95 percent exact match on the evaluation set and 98 percent schema validity, with average per-item cost under 1.5 cents and p95 latency under two seconds.

- Wire alerts and dashboards on MLflow metrics for cost, latency, and error types. Configure circuit breakers for quality drops and budget overruns.

- Start with a staged rollout. Route ten percent of traffic, monitor, then ramp.
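An acceptance threshold is most useful when it is executable. The sketch below encodes the example criteria from this week as a single gate function. The function names are hypothetical, and the p95 uses the nearest-rank method, which is one of several percentile definitions.

```python
def p95(values):
    """Nearest-rank 95th percentile, using integer math to avoid float rounding."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n)
    return ordered[rank - 1]


def production_gate(exact_match, schema_validity, costs_cents, latencies_s):
    """Return (passed, per-check results) for the example acceptance criteria."""
    checks = {
        "exact_match": exact_match > 0.95,
        "schema_validity": schema_validity > 0.98,
        "avg_cost": sum(costs_cents) / len(costs_cents) < 1.5,
        "p95_latency": p95(latencies_s) < 2.0,
    }
    return all(checks.values()), checks


# Illustrative numbers: one slow outlier in twenty requests still passes p95.
ok, ok_checks = production_gate(0.96, 0.99, [1.0, 1.2, 1.4], [0.5] * 19 + [3.0])
# Two slow requests in twenty push p95 over the limit.
slow, slow_checks = production_gate(0.96, 0.99, [1.0, 1.2, 1.4], [0.5] * 18 + [3.0, 3.0])
```

Returning the per-check results alongside the verdict tells the team which criterion blocked the rollout instead of leaving them with a bare failure.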

This plan yields a go/no-go decision in about one month. If the pilot clears the bar, you have a path to scale. If it does not, you have trace-backed evidence to improve data, evaluation design, or orchestration.

Risks you should plan for, and how to mitigate them

Evaluation overfitting

- Risk: The agent scores well on the test set but misses on real inputs. This happens when synthetic data is too narrow or tests are not refreshed with production traces.

- Mitigation: Treat evaluation like a product. Continuously add real-world mistakes to the library. Rotate LLM judges and keep a blinded human sample for calibration. Measure the delta between evaluation and shadow traffic.

Governance blind spots

- Risk: Teams move quickly and bypass data access controls or fail to log critical steps. That can derail compliance reviews and slow approvals.

- Mitigation: Enforce cataloged access from day one. Require that every production call emits a trace with inputs, outputs, latency, and cost. Make this part of the definition of done.

Data leakage

- Risk: Sensitive data flows into prompts, model providers, or logs that are not approved. In regulated settings this risk is decisive.

- Mitigation: Classify fields and mask at the source. Use policy enforced routing for external model calls. Store traces in governed tables with column level protections. Redact or tokenize sensitive values in logs while preserving auditability.
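Tokenizing sensitive values before they reach logs can preserve auditability if the token is deterministic: the same value always maps to the same token, so auditors can correlate events without seeing the raw data. The sketch below is an assumption-heavy illustration. The SSN-style pattern, the token format, and the hard-coded key are hypothetical, and a real deployment would pull the key from a secrets manager and cover far more field types.

```python
import hashlib
import hmac
import re

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # assumed sensitive format


def tokenize(value, key):
    """Map a sensitive value to a stable, keyed, non-reversible token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<ssn:{digest[:12]}>"


def redact(text, key):
    """Replace every matching value in the text with its token."""
    return SSN_PATTERN.sub(lambda m: tokenize(m.group(0), key), text)


key = b"demo-key-do-not-use"  # placeholder; never hard-code a real key
line_a = redact("Claimant SSN 123-45-6789 verified", key)
line_b = redact("Follow-up for 123-45-6789", key)
```

A keyed HMAC is used rather than a plain hash because short, structured values are easy to brute-force from an unkeyed digest.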

Evaluation drift and judge bias

- Risk: LLM judges can encode bias or drift with model updates, shifting scores over time.

- Mitigation: Version judges and pin them for evaluation runs. Maintain periodic human audits. Alert on sudden shifts in judge distributions.
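Alerting on a sudden shift in judge scores can begin with a simple statistical check before anything more elaborate is built. The sketch below compares the mean of a recent window against a baseline and flags the window when the gap exceeds a chosen number of standard errors. The function name, the threshold of three, and the sample scores are all assumptions for illustration.

```python
from statistics import mean, stdev


def judge_shift(baseline_scores, recent_scores, max_sigma=3.0):
    """Flag when the recent mean judge score sits far from the baseline mean."""
    base_mean = mean(baseline_scores)
    # Standard error of the recent mean, assuming the baseline spread still holds.
    std_err = stdev(baseline_scores) / len(recent_scores) ** 0.5
    if std_err == 0:
        return mean(recent_scores) != base_mean
    z = (mean(recent_scores) - base_mean) / std_err
    return abs(z) > max_sigma


baseline = [0.80, 0.82, 0.78, 0.81, 0.79, 0.80, 0.83, 0.77]
steady = [0.80, 0.79, 0.81, 0.80]
dropped = [0.60, 0.62, 0.58, 0.61]

steady_alert = judge_shift(baseline, steady)
dropped_alert = judge_shift(baseline, dropped)
```

A flag does not say whether the agent or the judge changed, which is why pinned judge versions and periodic human audits are needed to attribute the shift.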

Runaway cost

- Risk: Helpful new features quietly increase prompt length, tool invocations, or context windows, and per-request cost climbs without anyone deciding that it should.

- Mitigation: Set budget guardrails at the pipeline level. Emit per request cost. Block merges that exceed budget thresholds without explicit approval.

How this compares to the broader agent landscape

Agent Bricks is part of a wave of platforms that are making agents operational at enterprise scale. Where it focuses on the factory loop and deep integration with governed data, other offerings emphasize adjacent strengths. If you are scanning the landscape, compare Databricks with:

- Google’s ecosystem for enterprise agents, reflected in our look at Agent2Agent and Vertex AI Engine. See the Vertex AI Engine overview for how planning and evaluation are converging there.

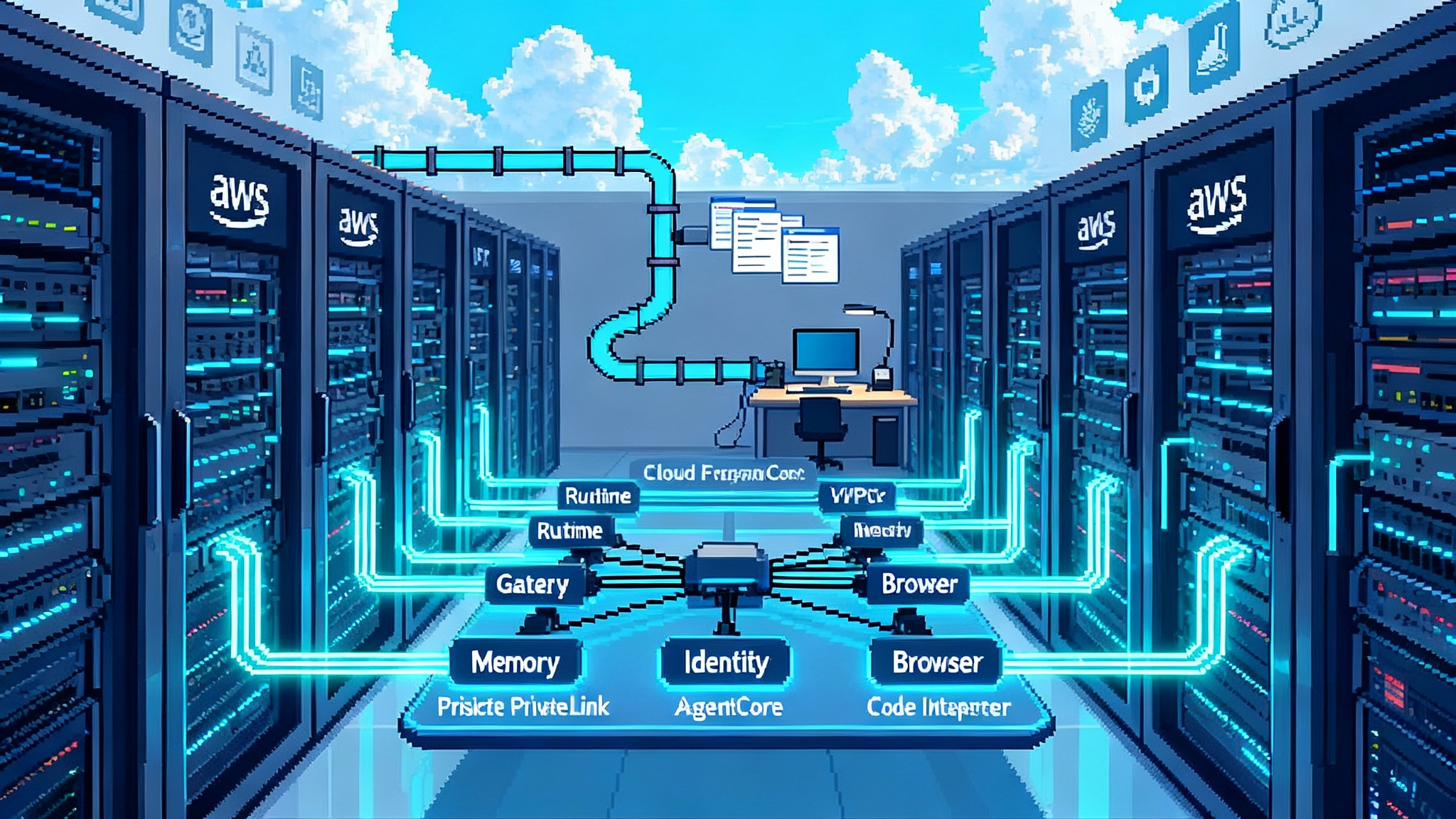

- Cloud native agent frameworks that emphasize security, deployment posture, and cost controls. Review the AWS AgentCore September update for how cloud primitives are becoming agent aware.

- App centric agent platforms that make builders productive quickly. Our piece on ChatGPT Apps outlines how packaging, distribution, and governance can be streamlined. Read ChatGPT Apps as platform for that perspective.

These comparisons help frame when the Databricks approach is a clear fit. If your workloads already live in Databricks and your governance runs through Unity Catalog, Agent Bricks reduces glue code and procurement time. If your priority is deep integration with other cloud managed services or a specific app marketplace, a different platform may fit better.

Wiring traces and state into an agent native datastore like AgentsDB

Agent native systems benefit from a purpose built data layer. To get the full value from Agent Bricks and MLflow 3.0, persist the right primitives in a datastore designed for agents, such as AgentsDB. Here is a practical pattern that teams have used to reduce time to triage and improve iteration speed:

- Ingest MLflow 3.0 traces as first class events. Each trace should include request id, user or system actor, input digest, retrieved documents, tool calls, outputs, scores from LLM judges, cost, latency, and version tags for prompts and orchestrations.

- Store agent state transitions. If the agent uses a planner, a memory, or a tool use graph, persist those states with timestamps. This enables replay, counterfactual testing, and temporal analytics.

- Normalize retrieval artifacts. Persist chunk identifiers, similarity scores, and the embedding version that produced them. This allows you to analyze retrieval drift and swap embedding models without losing lineage.

- Keep a governed feature view. Derived features like requires human review, schema valid, or confidence score should be materialized for analytics and routing.

- Index for operational queries. Common workloads include finding requests with the same input digest that failed schema, showing cost regressions by version, and replaying all errors for the last twenty four hours with tool outputs attached.
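The operational queries above can be prototyped against any SQL store before committing to a schema. The sketch below uses an in-memory SQLite database purely as a stand-in; it does not use an AgentsDB or MLflow API, and the table layout, column names, and sample rows are hypothetical.

```python
import hashlib
import sqlite3


def input_digest(text):
    """Stable digest so identical inputs can be grouped without storing raw text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE traces (
        request_id   TEXT PRIMARY KEY,
        input_digest TEXT NOT NULL,
        version      TEXT NOT NULL,
        schema_valid INTEGER NOT NULL,
        cost_cents   REAL NOT NULL,
        latency_ms   REAL NOT NULL
    )
    """
)
# Index the columns that the triage query filters on.
conn.execute("CREATE INDEX idx_digest_valid ON traces (input_digest, schema_valid)")

rows = [
    ("r1", input_digest("invoice A"), "v1", 1, 1.0, 800.0),
    ("r2", input_digest("invoice A"), "v2", 0, 1.5, 900.0),
    ("r3", input_digest("invoice B"), "v2", 1, 1.5, 700.0),
]
conn.executemany("INSERT INTO traces VALUES (?, ?, ?, ?, ?, ?)", rows)


def failed_for_input(text):
    """Requests with the same input digest that failed schema validation."""
    cur = conn.execute(
        "SELECT request_id FROM traces "
        "WHERE input_digest = ? AND schema_valid = 0 ORDER BY request_id",
        (input_digest(text),),
    )
    return [row[0] for row in cur]


def avg_cost_by_version():
    """Average per-request cost for each version, to spot cost regressions."""
    cur = conn.execute(
        "SELECT version, AVG(cost_cents) FROM traces GROUP BY version ORDER BY version"
    )
    return dict(cur.fetchall())
```

Here `failed_for_input("invoice A")` surfaces the one request that regressed on an input an earlier version handled, and `avg_cost_by_version()` shows the cost change between versions.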

With that schema in place the benefits are durable:

- Faster triage: When a stakeholder asks why an answer was wrong, you can display the exact trace, document chunk, and tool call that drove the wrong decision.

- Safer iteration: You can test a new orchestrator or retrieval index against last week’s traffic with a single command and record outcomes side by side.

- Better evaluation: Production traces, especially the difficult ones, flow back into evaluation sets and improve your judges.

What to do next if you are the sponsor

- Pick one use case that you can measure tightly. Approve time for data labeling and evaluation design. Do not skip this step.

- Ask your team to show you the cost to quality curve after the first sweep. If they cannot, you are still in the art phase, not the factory phase.

- Require MLflow 3.0 traces for all runs that influence a decision. If it was not traced, it did not happen.

- Decide up front which data can leave your boundary. Pin model providers and routes accordingly.

The bottom line

Agent Bricks is not just another wrapper around a language model. It is an attempt to industrialize agent development by bringing the levers of software engineering to agent operations: measurable quality, repeatable evaluations, and complete observability. With the June Beta and the September partnership, Databricks has positioned Agent Bricks as an agent factory that sits next to your governed data and shortens the path from idea to production.

Adopt it with intent. Bring a clear objective, invest in ground truth, set real guardrails, and wire traces into an agent native datastore like AgentsDB so your lessons persist. If you do, you will not just ship a better agent. You will build a durable capability to ship many of them, faster, safer, and at a cost you can choose rather than guess.