Microsoft’s Security Store signals the agent era for SecOps

Microsoft has launched Security Store inside Security Copilot, a curated marketplace for agents that plug into Defender, Sentinel, Entra, and more. Here is what it unlocks, the risks to manage, and a 30-60-90 day rollout plan.

The news, and why it matters

On September 29 and 30, 2025, Microsoft introduced Security Store, a curated marketplace built into Security Copilot that lets security teams discover, buy, and deploy agents and solutions that plug directly into Defender, Sentinel, Entra, Purview, Intune, and more. Think of it as an app store purpose built for security operations, with commerce, governance, and integration in one place. Microsoft describes Security Store as a security optimized storefront for both Microsoft and partner built agents and software as a service offerings, with streamlined procurement and deployment tied to existing Microsoft billing. That is a high stakes change, so start with Microsoft’s own Security Store overview.

This is not simply another marketplace badge. It is a signal that vertical agent marketplaces are arriving in earnest. Horizontal app stores focus on broad utility. A vertical agent marketplace focuses on a specific operational domain and the graph of data, permissions, and actions inside it. For SecOps, that graph is your alerts, incidents, identities, devices, data classifications, and playbooks. When a marketplace understands that graph, agents can be deployed faster, permissions can be scoped precisely, and outcomes can be measured against real security metrics.

If you have been following the broader agent trend, this announcement fits a pattern. Enterprise vendors are moving from point features to platform primitives that make agents production ready. We have already seen proof points in other domains, from evidence that enterprise agents are real to the way Copilot Agent reaching general availability changed developer workflows. Security Store brings that same platform mindset directly to SecOps.

What Security Store unlocks for security teams

Security Store’s early design choices point to three unlocks that matter on day one.

1) Customizable Security Copilot agents

Security teams can now build or buy agents that automate repeatable work and present decisions to humans where it counts. Microsoft’s recent Security Copilot updates include a no code agent builder and packaging that makes agents feel like reusable, governed building blocks. Microsoft also notes that partner built agents are available alongside Microsoft’s own, and these agents can be deployed and operated inside the Security Copilot portal once purchased.

2) Tight integration across the Microsoft stack

Because Security Store is built for the Microsoft security stack, agents can work where analysts already live. A triage agent can pull context from Defender alerts, correlate with Sentinel incidents, inspect Entra sign in anomalies, and reference Purview data classifications during an investigation. The value is not that the agent is smart. The value is that the agent can see and act across these products with a consistent identity and permission model. Microsoft’s Sentinel team frames this as moving from tool hopping to agent led workflows. For a clear description of how custom agents ride Sentinel’s graph based context, see Microsoft’s post on agentic AI in Sentinel.

3) Faster procurement inside existing guardrails

Security Store uses Microsoft’s commercial marketplace rails. For buyers, that means private offers, Microsoft Azure Consumption Commitment alignment, and consolidated billing. For operators, it means a separation between purchasing in Security Store and configuring or supervising agents in Security Copilot. The practical effect is fewer calendar days between “we need automation for X” and “the agent is in production with audit trails.”

The bigger shift: vertical agent marketplaces

A vertical agent marketplace professionalizes agent deployment in three ways.

- Standard packaging: Agents arrive with manifests describing inputs, outputs, required permissions, and supported actions. This enables consistent approvals and repeatable rollouts.

- Contextual integration: Because the marketplace knows the domain, it can prewire connectors, schemas, and common playbooks. Less time goes to plumbing and more to outcomes.

- Commerce and compliance built in: Billing, entitlements, and audit live next to the agent. That reduces shadow procurement, one off contracts, and unclear ownership.
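The standard packaging idea can be made concrete with a small sketch. The fields below mirror the four items named above (inputs, outputs, required permissions, supported actions). The class name, field names, and permission strings are hypothetical and are not Microsoft's actual manifest schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentManifest:
    """Hypothetical manifest shape, for illustration only."""
    name: str
    version: str
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]
    required_permissions: frozenset[str]
    supported_actions: frozenset[str]


def review_manifest(manifest: AgentManifest,
                    approved_permissions: set[str],
                    approved_actions: set[str]) -> list[str]:
    """Return findings; an empty list means the manifest fits the approval policy."""
    findings = []
    for perm in sorted(manifest.required_permissions - approved_permissions):
        findings.append(f"permission not approved: {perm}")
    for action in sorted(manifest.supported_actions - approved_actions):
        findings.append(f"action not approved: {action}")
    return findings


# Made-up example: a triage agent that asks for one permission too many.
triage = AgentManifest(
    name="alert-triage",
    version="1.2.0",
    inputs=("defender_alert", "sentinel_incident"),
    outputs=("disposition", "rationale"),
    required_permissions=frozenset({"alerts.read", "incidents.read", "devices.isolate"}),
    supported_actions=frozenset({"propose_disposition"}),
)
findings = review_manifest(
    triage,
    approved_permissions={"alerts.read", "incidents.read"},
    approved_actions={"propose_disposition"},
)
print(findings)
```

Because the manifest is plain data, the same check can run in a pull request review before anyone approves a rollout.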

If general app stores are like a mall, vertical agent marketplaces are like a hospital supply chain. Everything on the shelf is certified for clinical use, labeled for the ward, and traceable for audits. That is how agent adoption becomes normal enterprise work rather than novelty projects.

Risks to manage from day one

New power surfaces new risks. Treat these as design constraints, not afterthoughts.

- Over permissioned actions: Agents that can isolate devices, revoke tokens, or change access policies must be scoped tightly. Prefer just in time permissions, role based access scoped to a sandboxed subscription or resource group, and privilege elevation only for the duration of an approved action.

- Opaque autonomy: If an agent executes a playbook without a human in the loop, you must be able to see what it did, why it did it, and how to undo it. Require step by step action logs with inputs, prompts, retrieved context, and resulting API calls.

- Model drift in operations: As models or skills are updated, behavior can change in subtle ways. Pin versions and require a change window with canary rollout before switching.

- Data handling: Ensure the agent’s retrieval and enrichment do not expose sensitive data beyond policy. Tokenize or mask high risk fields and keep data processing in the tenant where possible.

- Vendor lock-in without guardrails: If a partner agent uses proprietary skills, insist on an exit strategy. Ask for action level compatibility with native Microsoft connectors and documented data schemas.
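To illustrate the first risk, here is a minimal sketch of privilege elevation that lasts only for the duration of an approved action. The grant structure, the 15 minute default, and names such as `rg-sandbox` are assumptions made for the example. In practice this would be enforced by your identity platform, not by application code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ElevationGrant:
    """Hypothetical record of one approved, time-bound elevation."""
    agent_id: str
    action: str
    scope: str  # for example, a sandboxed resource group
    expires_at: datetime


def grant_elevation(agent_id: str, action: str, scope: str,
                    approved_at: datetime, ttl_minutes: int = 15) -> ElevationGrant:
    """Create a grant that expires ttl_minutes after approval."""
    return ElevationGrant(agent_id, action, scope,
                          approved_at + timedelta(minutes=ttl_minutes))


def is_permitted(grant: ElevationGrant, agent_id: str, action: str,
                 scope: str, now: datetime) -> bool:
    """Allow only the exact agent, action, and scope, and only before expiry."""
    return (
        grant.agent_id == agent_id
        and grant.action == action
        and grant.scope == scope
        and now < grant.expires_at
    )


approved_at = datetime(2025, 10, 1, 9, 0, tzinfo=timezone.utc)
grant = grant_elevation("containment-agent", "isolate_device", "rg-sandbox", approved_at)
print(is_permitted(grant, "containment-agent", "isolate_device", "rg-sandbox",
                   approved_at + timedelta(minutes=5)))
```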

A 30-60-90 day rollout plan for CISOs

Below is a practical rollout plan that balances speed with safety. Use it as a template and adapt to your environment.

Day 0 to 30: Prove value safely

- Name an agent product owner and a risk partner. Make them jointly accountable for outcomes and guardrails.

- Inventory the top three repetitive workloads that delay response. Common candidates include phish triage queues, low severity alert noise, and repetitive incident write ups.

- Stand up a non production Security Copilot workspace tied to a test Sentinel instance and a subset of Defender data. Enable audit logging.

- Deploy one Microsoft built triage or investigation agent with read only permissions. Require human in the loop approval for any action.

- Define baseline metrics. Capture current mean time to triage, mean time to resolution, false positive rate, and backlog older than 24 hours.

- Write an agent control policy. Include permission scopes, approval gates, rollback steps, and an incident forensics logging standard.
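The baseline metrics above are simple to compute once incidents are exported as records. The sketch below assumes each incident is a dictionary with hypothetical keys `created_at`, `triaged_at`, `resolved_at`, and `classification`. Your export from Sentinel or a ticketing system will use different field names.

```python
from datetime import datetime, timedelta
from statistics import mean


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def baseline_metrics(incidents: list[dict], now: datetime) -> dict:
    """Compute the four baseline numbers from a list of incident records."""
    triage_hours = [_hours(i["triaged_at"] - i["created_at"])
                    for i in incidents if i.get("triaged_at")]
    resolved = [i for i in incidents if i.get("resolved_at")]
    resolve_hours = [_hours(i["resolved_at"] - i["created_at"]) for i in resolved]
    false_positives = sum(1 for i in resolved
                          if i.get("classification") == "false_positive")
    # Backlog counts unresolved incidents that have been open for more than 24 hours.
    stale = sum(1 for i in incidents
                if not i.get("resolved_at")
                and now - i["created_at"] > timedelta(hours=24))
    return {
        "mean_hours_to_triage": mean(triage_hours) if triage_hours else None,
        "mean_hours_to_resolution": mean(resolve_hours) if resolve_hours else None,
        "false_positive_rate": false_positives / len(resolved) if resolved else None,
        "backlog_older_than_24h": stale,
    }


# Made-up sample data, not real measurements.
day = datetime(2025, 1, 1)
sample = [
    {"created_at": day, "triaged_at": day + timedelta(hours=1),
     "resolved_at": day + timedelta(hours=5), "classification": "true_positive"},
    {"created_at": day, "triaged_at": day + timedelta(hours=3),
     "resolved_at": day + timedelta(hours=7), "classification": "false_positive"},
    {"created_at": day},                        # untriaged and unresolved
    {"created_at": day + timedelta(hours=30)},  # opened recently
]
metrics = baseline_metrics(sample, now=day + timedelta(hours=36))
print(metrics)
```

Capturing these numbers before the first agent is deployed is what makes later before and after comparisons credible.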

Day 31 to 60: Expand scope and introduce actions

- Add one partner or custom agent that drafts incident reports from Sentinel incidents and Purview labels. Keep actions disabled. Measure time saved per report.

- Enable a small set of reversible actions with approval. Examples include enriching indicators, assigning incidents, and requesting user verification through a trusted workflow.

- Run a canary for a containment playbook in a segmented lab. The agent should propose isolating a device in Defender or tightening a Conditional Access policy in Entra, but require a human to click approve.

- Start a weekly agent review. Inspect five random agent runs, compare to policy, and tune prompts, skills, or permissions.

- Validate vendor support. Open a support ticket for each agent to measure responsiveness and clarity of guidance.

Day 61 to 90: Production scale and automation where safe

- Promote your highest performing agent to production with a measured set of auto approved actions for low risk scenarios. A common example is closing alerts that match a stable suppression rule and attaching the rationale.

- Introduce a vulnerability remediation agent that files change requests with prefilled patch steps and risk statements, routed to your change advisory board.

- Add a rollback agent. For every automated action, there must be a one click undo that reverts a device, identity, or policy to a known good state.

- Integrate agent telemetry into your executive dashboard. Show time to value in days, percent of incidents touched by an agent, and analyst hours reclaimed.

- Conduct a red team exercise that targets your agent approvals. Prove that the agent cannot be coaxed into unsafe actions by adversarial prompts or data poisoning.
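The measured set of auto approved actions in this phase can be written down as an explicit routing rule. This is a sketch under stated assumptions: the action kinds, risk labels, and the `approval_route` function are hypothetical, and the only auto approved case is the one the plan names, closing an alert that matches a stable suppression rule with a rationale attached.

```python
from dataclasses import dataclass

# Hypothetical policy: only these action kinds may ever skip human approval.
AUTO_APPROVABLE_KINDS = {"close_alert"}


@dataclass(frozen=True)
class ProposedAction:
    kind: str
    risk: str  # "low", "medium", or "high"
    matches_stable_suppression_rule: bool
    rationale: str


def approval_route(action: ProposedAction) -> str:
    """Return 'reject', 'auto_approve', or 'human_approval' for a proposed action."""
    if not action.rationale.strip():
        # No rationale means nothing to audit later, so refuse outright.
        return "reject"
    if (action.kind in AUTO_APPROVABLE_KINDS
            and action.risk == "low"
            and action.matches_stable_suppression_rule):
        return "auto_approve"
    # Everything else waits for a person.
    return "human_approval"


print(approval_route(ProposedAction("close_alert", "low", True, "matches suppression rule")))
print(approval_route(ProposedAction("isolate_device", "low", False, "suspicious beaconing")))
```

Keeping the allow list short and explicit also gives the red team exercise a clear target to probe.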

A builder’s guide to the first high value agents

Start with four agents that pay back quickly and exercise the stack without undue risk.

1) Alert triage agent

Job: Reduce noise and speed first decisions.

How it works:

- Ingests Defender for Endpoint alerts and Sentinel incidents.

- Correlates with Entra sign in risk, device risk, and user risk scores.

- Enriches with known good baselines and recent change windows.

- Proposes a disposition and next step with a rationale that cites the data used.

Guardrails:

- Read only in the first two weeks. No closure without human approval.

- Require the agent to attach a minimal evidence pack that includes the alert timeline, device or account context, and any playbook used.

2) Incident report drafting agent

Job: Turn investigations into consistent write ups.

How it works:

- Reads the Sentinel incident, gathers timeline, artifacts, and analyst notes.

- Pulls Purview data classifications to label data exposure accurately.

- Produces a report using your approved template with an executive summary, technical details, and recommended follow ups.

Guardrails:

- Mask sensitive data by default. Require a toggle to include full artifacts.

- Keep the report in draft until a human signs off.
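Masking by default with an explicit toggle might look like the sketch below. It covers only email addresses and IPv4 addresses with deliberately simple regular expressions. A real deployment would lean on your Purview classifications rather than patterns like these, and the function name is hypothetical.

```python
import re

# Deliberately simple patterns for illustration; they are not exhaustive.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def render_artifacts(text: str, include_full_artifacts: bool = False) -> str:
    """Mask sensitive values unless the caller explicitly opts in to full artifacts."""
    if include_full_artifacts:
        return text
    masked = EMAIL.sub("[EMAIL]", text)
    return IPV4.sub("[IP]", masked)


line = "User alice@contoso.com signed in from 10.1.2.3"
print(render_artifacts(line))
print(render_artifacts(line, include_full_artifacts=True))
```

The default argument is the design point: an analyst has to ask for the unmasked version, never the other way around.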

3) Containment playbook agent

Job: Propose and execute reversible containment steps.

How it works:

- Suggests actions like device isolation in Defender, token revocation in Entra, or a temporary Conditional Access rule.

- Runs a preflight check that shows expected blast radius and dependency impact.

Guardrails:

- Start in propose only mode. Then allow auto approve for low risk patterns such as isolating a single unmanaged test device.

- Every action must generate a rollback plan and provide a one click revert.
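One way to guarantee that every action carries its own revert is to pair the two at construction time. The sketch below simulates device state with a dictionary. It does not call any Defender or Entra API, and all class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ReversibleAction:
    """An action cannot be created without its revert step."""
    description: str
    apply: Callable[[dict], None]
    revert: Callable[[dict], None]


class ActionJournal:
    """Records applied actions so the most recent one can be undone."""

    def __init__(self) -> None:
        self._applied: list[ReversibleAction] = []

    def run(self, action: ReversibleAction, state: dict) -> None:
        action.apply(state)
        self._applied.append(action)

    def revert_last(self, state: dict) -> str:
        action = self._applied.pop()
        action.revert(state)
        return action.description


def isolate(device_id: str) -> ReversibleAction:
    """Build a simulated isolation that remembers the state it replaced."""
    previous: dict = {}

    def apply(state: dict) -> None:
        previous["value"] = state[device_id]
        state[device_id] = "isolated"

    def revert(state: dict) -> None:
        state[device_id] = previous["value"]

    return ReversibleAction(f"isolate {device_id}", apply, revert)


state = {"lab-device-01": "connected"}
journal = ActionJournal()
journal.run(isolate("lab-device-01"), state)
print(state)
print(journal.revert_last(state), state)
```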

4) Vulnerability remediation agent

Job: Close the loop between detection and change management.

How it works:

- Pulls prioritized vulnerabilities from Defender Vulnerability Management.

- Bundles patches by maintenance window, app owner, and risk score.

- Files change requests with prefilled technical steps and potential side effects.

Guardrails:

- No direct patching in early phases. Require change approval and an owner sign off.

- Maintain an exceptions list for systems with safety or uptime constraints.
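The bundling step and the exceptions list can be sketched in a few lines. The record keys (`asset`, `owner`, `window`, `risk`) and the sample values are assumptions for illustration, not fields from Defender Vulnerability Management.

```python
from collections import defaultdict


def bundle_patches(vulns: list[dict], exceptions: set[str]) -> dict:
    """Group vulnerabilities by (maintenance window, owner), highest risk first.

    Assets on the exceptions list are skipped so they go through a manual path.
    """
    bundles = defaultdict(list)
    for vuln in vulns:
        if vuln["asset"] in exceptions:
            continue
        bundles[(vuln["window"], vuln["owner"])].append(vuln)
    return {
        key: sorted(items, key=lambda v: v["risk"], reverse=True)
        for key, items in bundles.items()
    }


# Made-up sample data.
vulns = [
    {"id": "VULN-1", "asset": "web-01", "owner": "web-team", "window": "sat-0200", "risk": 7.5},
    {"id": "VULN-2", "asset": "web-02", "owner": "web-team", "window": "sat-0200", "risk": 9.8},
    {"id": "VULN-3", "asset": "plc-01", "owner": "ot-team", "window": "sun-0400", "risk": 9.1},
]
bundles = bundle_patches(vulns, exceptions={"plc-01"})
for (window, owner), items in bundles.items():
    print(window, owner, [v["id"] for v in items])
```

Each resulting bundle maps naturally to one change request with one owner and one window.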

Prompts and skills that work

- Use short, directive prompts that cite the objective, the allowed actions, and the evidence required for a decision. Avoid open ended language.

- Break skills into small, testable components. For example, a “correlate sign in risk to alert” skill or a “generate incident summary” skill. Version and test each skill independently.

- Keep a changelog for prompts and skills. Tie each change to a metric movement to avoid prompt drift without accountability.
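A changelog that ties each change to a metric can be as small as the sketch below. The entry fields and the sample numbers are invented for illustration. The point is only that a change with no measured improvement gets flagged for review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptChange:
    """One hypothetical changelog entry for a prompt or skill."""
    skill: str
    version: str
    summary: str
    metric: str
    before: float
    after: float
    lower_is_better: bool = True

    def improved(self) -> bool:
        if self.lower_is_better:
            return self.after < self.before
        return self.after > self.before


def changes_to_revisit(changelog: list[PromptChange]) -> list[PromptChange]:
    """Return changes whose tracked metric did not move in the right direction."""
    return [change for change in changelog if not change.improved()]


# Made-up entries, not real results.
changelog = [
    PromptChange("generate_incident_summary", "1.1.0", "shorter executive summary",
                 "analyst_minutes_per_report", before=25.0, after=18.0),
    PromptChange("correlate_sign_in_risk", "2.0.0", "added device risk context",
                 "false_positive_rate", before=0.08, after=0.11),
]
for change in changes_to_revisit(changelog):
    print(change.skill, change.version, change.metric)
```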

Vendor evaluation criteria for agent marketplaces

When you open Security Store, you will see Microsoft and partner agents. Evaluate them with the same rigor you apply to managed detection and response or security information and event management purchases.

- Identity and permission model: Does the agent support least privilege with resource scoping, time bound elevation, and per action consent? Can you map required permissions to your roles without creating new superuser roles?

- Auditability and forensics: Are prompts, retrieval results, and downstream API calls logged with immutable timestamps and correlation identifiers? Can you export logs to Sentinel for long term retention and hunting?

- Safety and policy fit: Can you require human approval for specific actions and enforce that at runtime, not just in configuration? Does the agent support deny lists and policy as code checks before execution?

- Integration depth: Does it natively integrate with Defender, Sentinel, Entra, Purview, and Intune using supported connectors and event schemas? How does it handle throttling or partial outages?

- Quality and reliability: What is the false positive rate under load during a phishing storm? Ask for test results or run your own game day with replayed traffic.

- Extensibility: Can you add or replace skills, prompts, or models without vendor professional services? Is there a manifest you can version control?

- Data residency and privacy: Where do prompts and retrieved data reside? Can you keep processing in tenant with customer managed keys and no data logging outside your boundary?

- Support and ownership: Who picks up the phone during an incident? What is the mean time to close a P1 ticket? Is there a published security response policy for the agent itself?

- Commercial mechanics: Can you buy it against your Microsoft Azure Consumption Commitment with private offers? Are licenses metered by action, seat, or incident, and how will that map to your cost centers?

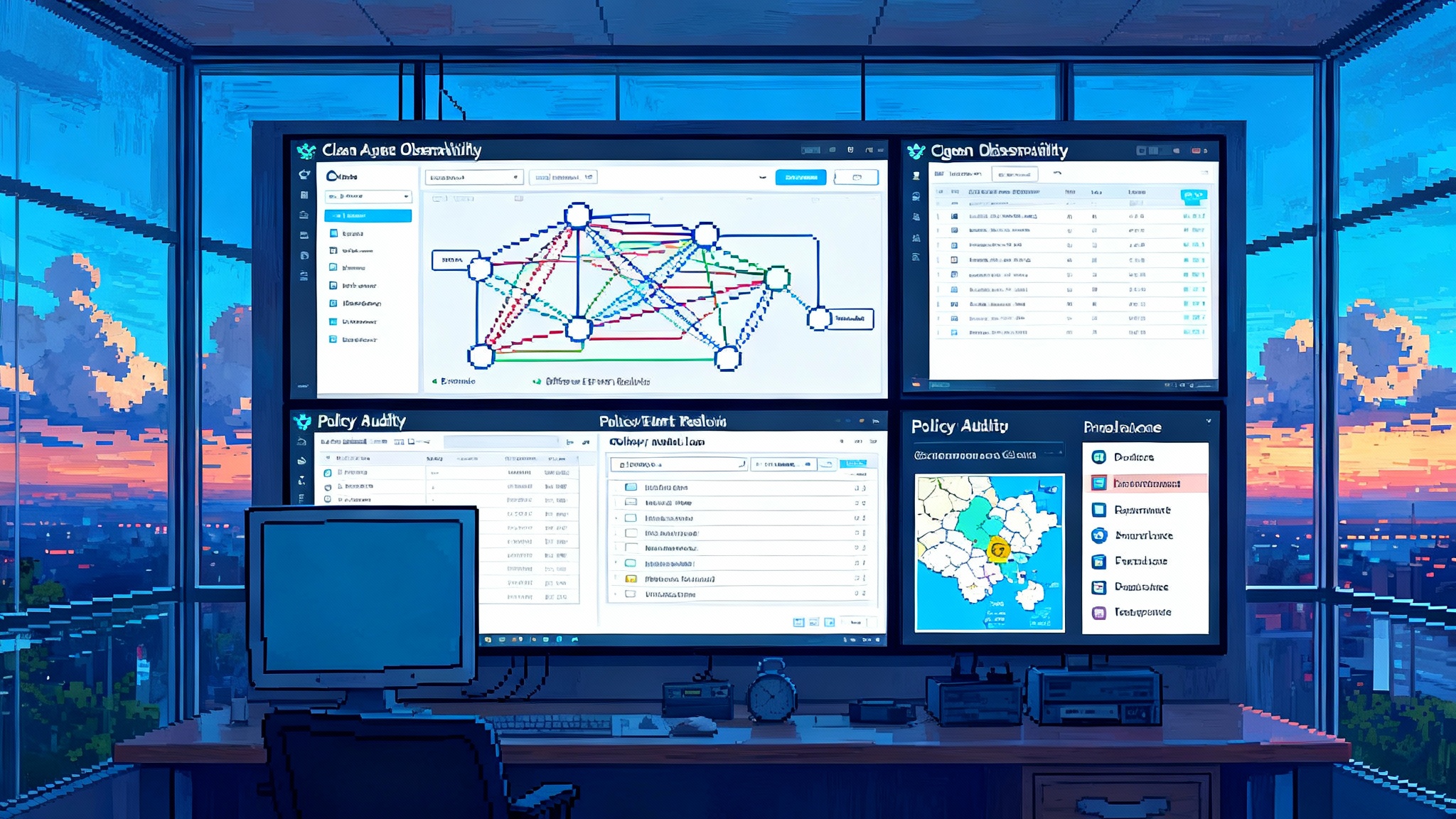

KPIs to track from day one

- Mean time to triage and mean time to resolution, broken out by agent assisted and agent automated paths.

- Percent of alerts or incidents touched by an agent, and percent fully resolved by an agent with human approval.

- Backlog older than 24 hours for specific alert classes.

- False positive rate for agent suggested closures, measured by post incident review.

- Analyst time per incident write up, before and after the report drafting agent.

- Rollback rate and time to rollback for agent initiated actions.

- Time to value in days from purchase to first production use with guardrails in place.

- Weekly control adherence, defined as the percent of sampled runs that met evidence and approval requirements.

- Cost per handled incident, inclusive of agent licensing and infrastructure.

Set quarterly targets and review them in your operational metrics meeting. If an agent is not moving a metric that matters, fix it or retire it.
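Two of the KPIs above are plain arithmetic: weekly control adherence and cost per handled incident. The sketch assumes each sampled run records two hypothetical boolean fields, and the sample figures are invented.

```python
def control_adherence(sampled_runs: list[dict]) -> float:
    """Percent of sampled runs that met both evidence and approval requirements."""
    if not sampled_runs:
        raise ValueError("need at least one sampled run")
    compliant = sum(
        1 for run in sampled_runs
        if run["evidence_complete"] and run["approval_recorded"]
    )
    return 100 * compliant / len(sampled_runs)


def cost_per_handled_incident(licensing: float, infrastructure: float,
                              incidents_handled: int) -> float:
    """Total agent cost for the period divided by incidents handled in that period."""
    if incidents_handled <= 0:
        raise ValueError("incidents_handled must be positive")
    return (licensing + infrastructure) / incidents_handled


# Made-up weekly sample of five agent runs.
runs = [
    {"evidence_complete": True, "approval_recorded": True},
    {"evidence_complete": True, "approval_recorded": True},
    {"evidence_complete": True, "approval_recorded": False},
    {"evidence_complete": True, "approval_recorded": True},
    {"evidence_complete": True, "approval_recorded": True},
]
adherence = control_adherence(runs)
unit_cost = cost_per_handled_incident(licensing=9000, infrastructure=1000,
                                      incidents_handled=400)
print(adherence, unit_cost)
```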

Practical governance patterns that work

- Change windows and canaries: Treat agent upgrades like code pushes. Use staged rollouts with a clearly labeled canary population.

- Separation of duties: Keep purchasing in Security Store separate from runtime control in Security Copilot. Require a second approver for permission scope changes.

- Evidence by default: Make evidence packs mandatory attachments for closures. If the agent cannot justify a decision, it does not get to close the ticket.

- Fail safe posture: When the agent encounters an ambiguity, have it escalate for human review rather than taking a risky action.
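For the change windows and canaries pattern, one common way to get a clearly labeled canary population is deterministic hashing, so the same device or analyst queue always lands in the same bucket for a given agent version. This is a general technique and not something Security Copilot is documented to do. The function name and identifiers are hypothetical.

```python
import hashlib


def in_canary(unit_id: str, agent_version: str, percent: int) -> bool:
    """Deterministically place roughly `percent` of units in the canary population."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{agent_version}:{unit_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return bucket < percent


devices = [f"device-{n}" for n in range(1000)]
canary = [d for d in devices if in_canary(d, "2.0.0", percent=5)]
print(len(canary), "of", len(devices), "devices receive the new agent version first")
```

Raising `percent` only ever adds units to the canary set and never removes them, which keeps a staged rollout predictable.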

What this means for the ecosystem

Security Store is a strong signal that agent marketplaces will be defined by domain depth, not model novelty. For vendors, the message is clear. Bring real integration and real governance, or native agents will pass you. For buyers, the advantage is speed without sacrificing control. You get a curated shelf of agents that already fit your stack and your billing. The risk to watch is complacency. A curated shelf is not a guarantee of fit for your environment.

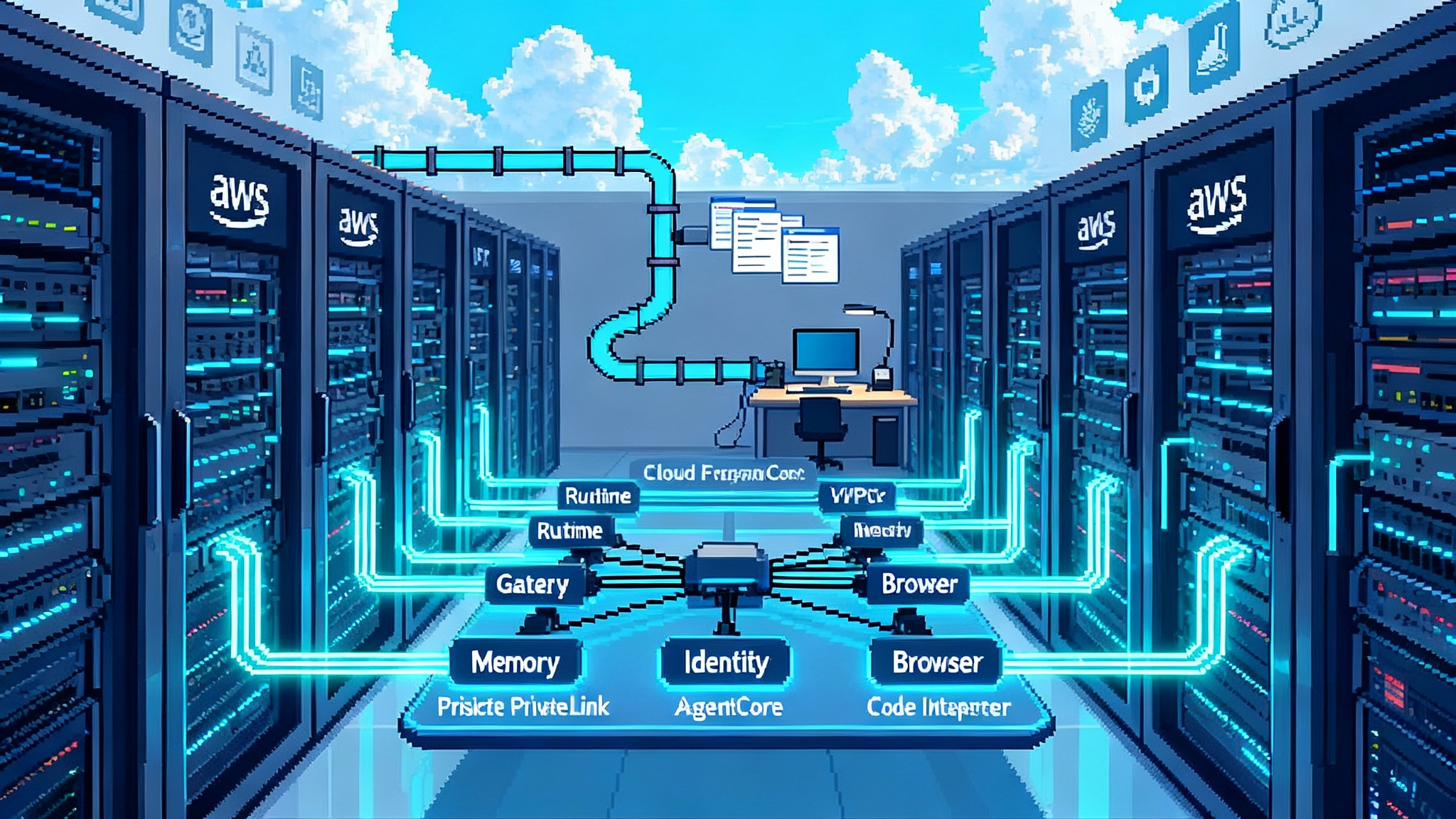

If you are mapping your broader agent strategy, look at how other ecosystems are converging on similar patterns. The AWS AgentCore September update shows how cloud platforms are baking in the primitives that agents need to run safely at scale. The theme across vendors is consistent. Agents become normal when packaging, policy, and commerce live next to the runtime.

The bottom line

Microsoft’s Security Store turns the idea of agent powered SecOps into an operational choice. You can start small, in your environment, with guardrails you control, and measure value week by week. Pair a vertical marketplace that understands your graph with a disciplined rollout, and the result is less swivel chair work and faster, safer responses. The organizations that treat agents like products, not pilots, will pull ahead first.