Notion 3.0 Puts Agents In Your Docs. That Changes Everything

Notion 3.0 puts AI agents inside the workspace where work lives. With real memory, inherited permissions, and traceable runs, teams can automate production tasks without leaving their system of record.

The news: agents move into your system of record

Notion just shipped its biggest update yet, placing AI agents directly inside the workspace where teams write, plan, and track work. In Notion 3.0 the agent is not a chat window sitting next to your tools. It is inside the tools. The launch materials describe agents that can plan, create pages, update databases, and run multi-step workflows across your workspace with memory and permissions intact. That makes this release different from a bot you message and then babysit. It turns the wiki and the database into an automation surface. For the official details, see the Notion 3.0 agent release notes.

Why is this a breakthrough for mainstream adoption? Because most teams already keep their source of truth in Notion or tools like it. When the agent lives in that source of truth, it can act with context, respect existing guardrails, and leave behind traceable changes. That trifecta addresses the three blockers that have kept chat-first agents on the sidelines: weak memory, unclear permissions, and throwaway outputs.

The core idea: work where the records live

Think of a company’s workspace as a city grid. Pages are buildings. Databases are streets and utilities. A chat-first agent is like a helicopter dropping supplies from above. It can help, but it often misses the exact address and leaves you to clean up. A workspace-native agent is a courier with keys to the right doors. It knows the floor plan, follows the visitor log, and signs the clipboard on the way out. The result is useful work that lands in the right place, with audit trails by default.

Here is why that matters.

- Memory that is real: The agent’s memory is not a private scratchpad. It is your workspace itself. When instructions, style guides, and project context are written as pages, the agent reads them as living documents. You update a page. The agent updates its behavior. No re-prompting.

- Permissions that mirror your org: The agent inherits access from your workspace and databases. If a database grants row-level access only to a regional sales lead, the agent will not blast updates across the globe. The governance you already maintain becomes the agent’s boundary.

- Long-running tasks with traceability: The agent can run multi-step sequences measured in minutes, not seconds, and write each step into the workspace. Every action is reversible, commentable, and searchable.

Chat-first agents vs. embedded agents

Chat-first agents in consumer apps are great for exploration. You can brainstorm a draft, ask for a summary, and move fast. But they struggle with production work for teams because they sit outside the system of record. The handoff from chat to execution is brittle. Copy-paste is the bridge, and it breaks constantly.

Embedded agents flip that. The chat is optional. The main interface becomes the place where your data is already curated. The agent reads from reference pages, looks at database schema, and writes back into the same structures. That lets teammates who never touched the agent trust its output, because it shows up where expected and respects naming conventions, owners, and status fields.

If chat-first was an idea lab, embedded agents are a factory floor. The lab is where you play with possibilities. The factory is where work ships on a schedule with quality checks.

Memory that lives in your wiki

Most teams have tried to teach an assistant their voice or process, only to find themselves repeating the basics every week. Workspace-native memory changes that. You can point the agent at a page called Writing Guidelines or QA Checklist. Store product glossaries, reviewer preferences, and definitions of done as pages. The agent treats those pages as its living brain.

A concrete example: A product marketing team keeps a page that explains style rules and preferred claims for a family of features. They also maintain a database of customer references with tags for industry and region. The agent uses the page to match tone and the database to select a relevant quote. When the team updates either, results change the next time it runs. There is no separate fine-tuning pipeline to maintain.

Three design principles for memory

- Put policy in pages, not prompts. Instructions you want to endure should live as editable documents. Prompts can call those pages by name.

- Make examples canonical. Keep a short gallery of approved artifacts. The agent should sample from those examples first.

- Let memory decay by design. Archive pages that should stop influencing output. If the agent reads the workspace, your archive policy becomes a memory policy.
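To make the three principles concrete, here is a minimal Python sketch of how an agent's context could be assembled from workspace pages. The `Page` type, its `archived` and `is_example` flags, and `build_context` are hypothetical stand-ins, not Notion's API. The point is the behavior: archived pages drop out of memory, approved examples are read before policy pages, and prompts reference policy pages by title.

```python
from dataclasses import dataclass


@dataclass
class Page:
    # Hypothetical model of a workspace page; not a real Notion object.
    title: str
    body: str
    archived: bool = False
    is_example: bool = False


def build_context(pages: list[Page], policy_titles: list[str]) -> str:
    """Assemble agent instructions from live workspace pages.

    - Archived pages are skipped, so archiving a page removes it from memory.
    - Approved examples come first, so the agent samples from them first.
    - Policy pages are included only if a prompt names them by title.
    """
    live = [p for p in pages if not p.archived]
    examples = [p for p in live if p.is_example]
    policies = [p for p in live if p.title in policy_titles and not p.is_example]
    sections = [f"## {p.title}\n{p.body}" for p in examples + policies]
    return "\n\n".join(sections)
```

Editing a page's body changes the next context that is built, which is the "no re-prompting" property in miniature.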

Permissions as built in guardrails

The update highlights tighter control inside databases, including row-level permissions. That is more than a compliance checkbox. It is what lets an agent act without constant oversight. If the workspace limits who can see or edit specific records, the agent carries that same constraint.

Picture a Customer Success database with client health scores. The agent can draft follow-up tasks only for accounts a manager is allowed to view. If a consultant joins for a quarter, you share specific pages and rows. The agent sees exactly what the consultant sees, nothing more. You are not inventing a separate permission model for automation. You are reusing the one you already trust.
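A minimal sketch of the inheritance idea, assuming each row carries a set of users allowed to view it. The `Row` type, its `viewers` field, and the health threshold of 60 are hypothetical; Notion's actual permission model is enforced by the platform, not by code like this. The agent filters to what the initiator can see before it drafts anything.

```python
from dataclasses import dataclass, field


@dataclass
class Row:
    account: str
    health_score: int
    # Hypothetical row-level ACL: users allowed to view this row.
    viewers: set[str] = field(default_factory=set)


def visible_rows(rows: list[Row], user: str) -> list[Row]:
    """Return only the rows the given user is allowed to view."""
    return [r for r in rows if user in r.viewers]


def draft_follow_ups(rows: list[Row], initiator: str, threshold: int = 60) -> list[str]:
    """Draft follow-up tasks for at-risk accounts the initiator can view."""
    return [
        f"Follow up with {r.account} (health {r.health_score})"
        for r in visible_rows(rows, initiator)
        if r.health_score < threshold
    ]
```

Because filtering happens before drafting, an at-risk account the initiator cannot see never appears in the output at all.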

A simple permission map

- Databases the agent can read and write, listed by page path

- Views allowed for bulk edits, with clear filters

- Properties the agent may modify, including allowed values

- Roles who can approve escalations and external actions
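The permission map above can live as a page, but it also helps to express it as data that a pre-flight check can read. Below is a hedged Python sketch; `PermissionMap`, `check_edit`, and the example paths, views, and values are all hypothetical illustrations rather than any Notion feature.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PermissionMap:
    # Page paths of databases the agent may read and write.
    writable_databases: set[str]
    # Views where bulk edits are allowed, keyed by database path.
    bulk_edit_views: dict[str, set[str]] = field(default_factory=dict)
    # Properties the agent may modify, mapped to their allowed values.
    editable_properties: dict[str, set[str]] = field(default_factory=dict)
    # Roles that can approve escalations and external actions.
    approver_roles: set[str] = field(default_factory=set)


def check_edit(pmap: PermissionMap, database: str, view: str,
               prop: str, value: str, bulk: bool = False) -> Optional[str]:
    """Return a reason if the proposed edit is outside the map, else None."""
    if database not in pmap.writable_databases:
        return f"database {database!r} is not on the safe list"
    if bulk and view not in pmap.bulk_edit_views.get(database, set()):
        return f"bulk edits are not allowed in view {view!r}"
    allowed = pmap.editable_properties.get(prop)
    if allowed is None:
        return f"property {prop!r} is not editable"
    if value not in allowed:
        return f"value {value!r} is not allowed for {prop!r}"
    return None
```

Returning a reason string rather than a bare boolean makes each refusal easy to log on the Run Log row or surface in a comment.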

Long-running tasks, now inside your tools

Many real workflows do not end with a single click. They involve branching logic, fallbacks, waiting for data, or iterating through hundreds of records. Notion’s update emphasizes agents that can run multi-step sessions that last long enough to finish substantive work, like building a project plan, generating pages at scale, or cleaning a backlog.

Because execution happens in the workspace, every step leaves a trail. Comments, version history, and database changes are visible where the team already reviews work. That means you can approve a draft, roll back a bad edit, or discuss a decision in the same place it happened. In practice, this cuts the hidden cost of monitoring an agent through screenshots or side channels.

A trace you can trust

- One run equals one row in a Run Log database

- Links to diffs for content edits and property changes

- Counts of records read, touched, and reverted

- Duration and cost fields for every session
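One way to picture the "one run equals one row" rule is a small record type that flattens into property values. This is a sketch under assumptions: `RunLogEntry`, its field names, and the `cost_usd` unit are hypothetical choices, and writing the row into an actual Run Log database is left out.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timedelta


@dataclass
class RunLogEntry:
    run_id: str
    initiator: str
    started: datetime
    finished: datetime
    records_read: int
    records_touched: int
    records_reverted: int
    diff_links: list[str]  # links to version history or property diffs
    cost_usd: float        # hypothetical unit for per-session cost

    @property
    def duration_seconds(self) -> float:
        return (self.finished - self.started).total_seconds()

    def to_row(self) -> dict:
        """Flatten into property values for one row in a Run Log database."""
        row = asdict(self)
        row["duration_seconds"] = self.duration_seconds
        row["started"] = self.started.isoformat()
        row["finished"] = self.finished.isoformat()
        return row


start = datetime(2025, 1, 6, 9, 0, 0)
entry = RunLogEntry("run-1", "maria", start, start + timedelta(minutes=7),
                    records_read=120, records_touched=30, records_reverted=2,
                    diff_links=["https://example.com/diff/1"], cost_usd=0.42)
print(entry.to_row()["duration_seconds"])
```

Storing duration as a derived field keeps the log consistent with the start and finish timestamps.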

The automation surface: wikis and databases

A wiki and a set of databases are a big canvas. Embedded agents turn each table and page into a target for reliable actions. Here are three examples you can run on day one.

- Quarterly business review prep: Point an agent at your Customer Notes database, Customer Health dashboard, and the company’s writing guidelines page. Ask it to build a QBR packet for each top account: one page with last quarter outcomes, renewal risk analysis, recommended next steps, and a slide outline. The agent writes the packet pages and tags owners. A manager skims and approves in the same place.

- Launch plan factory: Product teams often create the same artifacts each release. Give the agent a template for a launch plan, a page with approval policies, and a database of marketing channels. The agent populates the plan, drafts copy variations for each channel, and assigns tasks with due dates based on your standard lead times. There is no copy-paste across tools because the plan lives where work already lives.

- CRM cleanup with rules you trust: Sales ops keeps a deals database with strict permissions. The agent scans for missing contacts, stale stages, and incorrect owners, then proposes fixes in a staging view. A human reviews a filtered board and clicks Approve. The agent applies updates only to approved rows. Your audit trail shows who approved and when.
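The CRM cleanup flow (propose, approve, apply only approved rows) can be sketched in a few functions. The staleness rule, the 90-day cutoff, the "Stale" stage name, and every name below are hypothetical; the sketch shows the shape of a staging queue, not how Notion stores one.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ProposedFix:
    deal_id: str
    prop: str
    old: str
    new: str
    status: str = "Proposed"  # hypothetical staging states: Proposed, Approved
    approved_by: Optional[str] = None
    approved_at: Optional[datetime] = None


def propose_fixes(deals: list[dict], stale_after_days: int = 90) -> list[ProposedFix]:
    """Stage fixes for stale deals instead of editing the deals directly."""
    return [
        ProposedFix(d["id"], "stage", d["stage"], "Stale")
        for d in deals
        if d["days_since_update"] > stale_after_days and d["stage"] != "Stale"
    ]


def approve(fix: ProposedFix, reviewer: str) -> None:
    """Record who approved the fix and when."""
    fix.status = "Approved"
    fix.approved_by = reviewer
    fix.approved_at = datetime.now(timezone.utc)


def apply_approved(deals: list[dict], fixes: list[ProposedFix]) -> list[tuple]:
    """Apply only approved fixes; return an audit trail of who approved and when."""
    by_id = {d["id"]: d for d in deals}
    audit = []
    for f in fixes:
        if f.status != "Approved":
            continue  # unapproved proposals stay in staging untouched
        by_id[f.deal_id][f.prop] = f.new
        audit.append((f.deal_id, f.prop, f.approved_by, f.approved_at))
    return audit
```

Keeping the proposal and the application as separate steps is what makes the human review in the middle enforceable rather than advisory.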

The competitive context

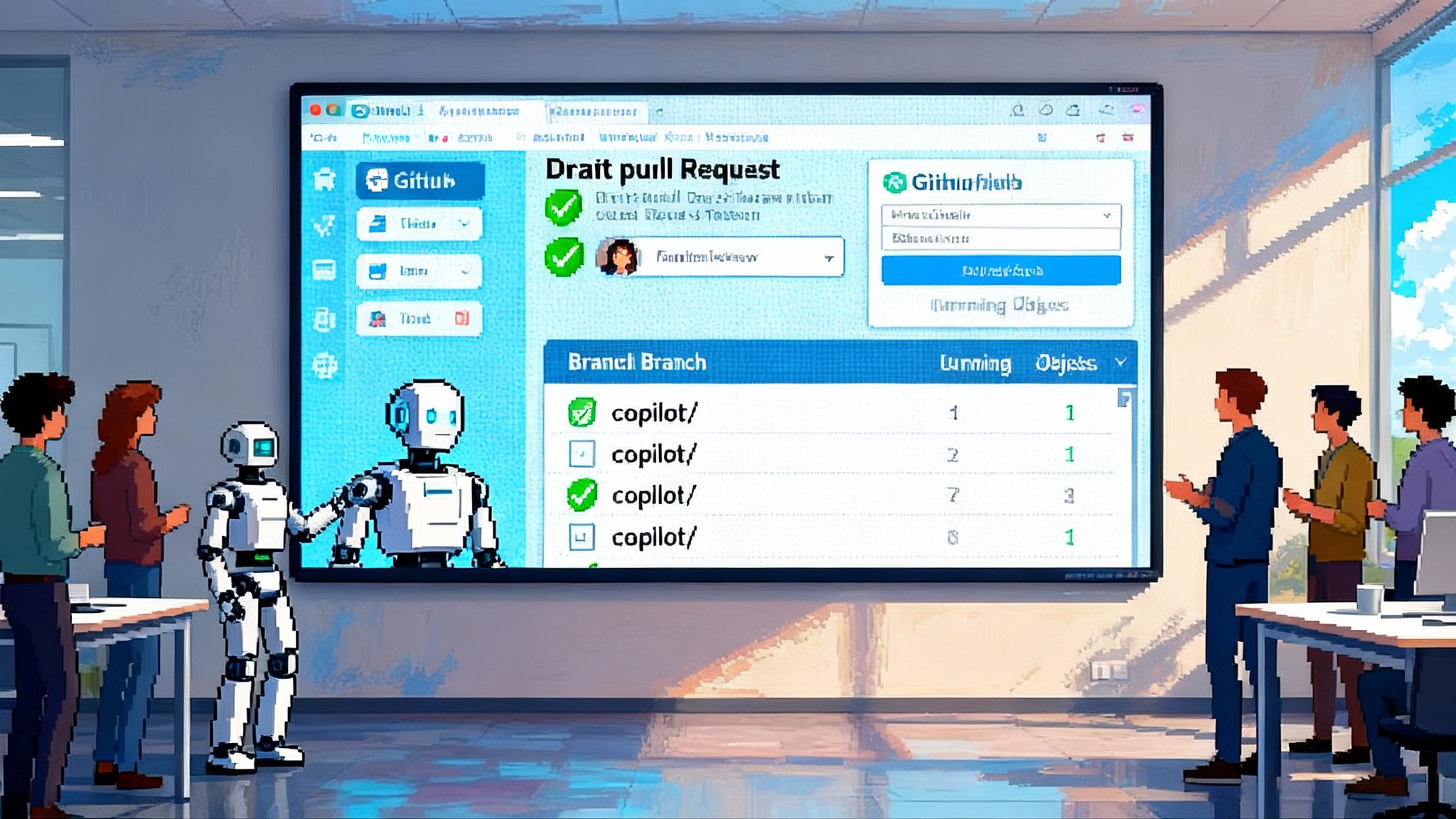

Large platforms have been racing to place assistants near work. Microsoft ships Copilot across productivity suites. Google places Gemini features around documents, sheets, and drive storage. Atlassian, Airtable, Coda, and Monday.com each ship their own flavor of automations and actions. The difference here is depth inside the system of record rather than a universal chat at the edge. Notion’s launch also arrives as the company leans into enterprise scale. For an outside view on momentum, see CNBC's coverage of Notion's revenue milestone.

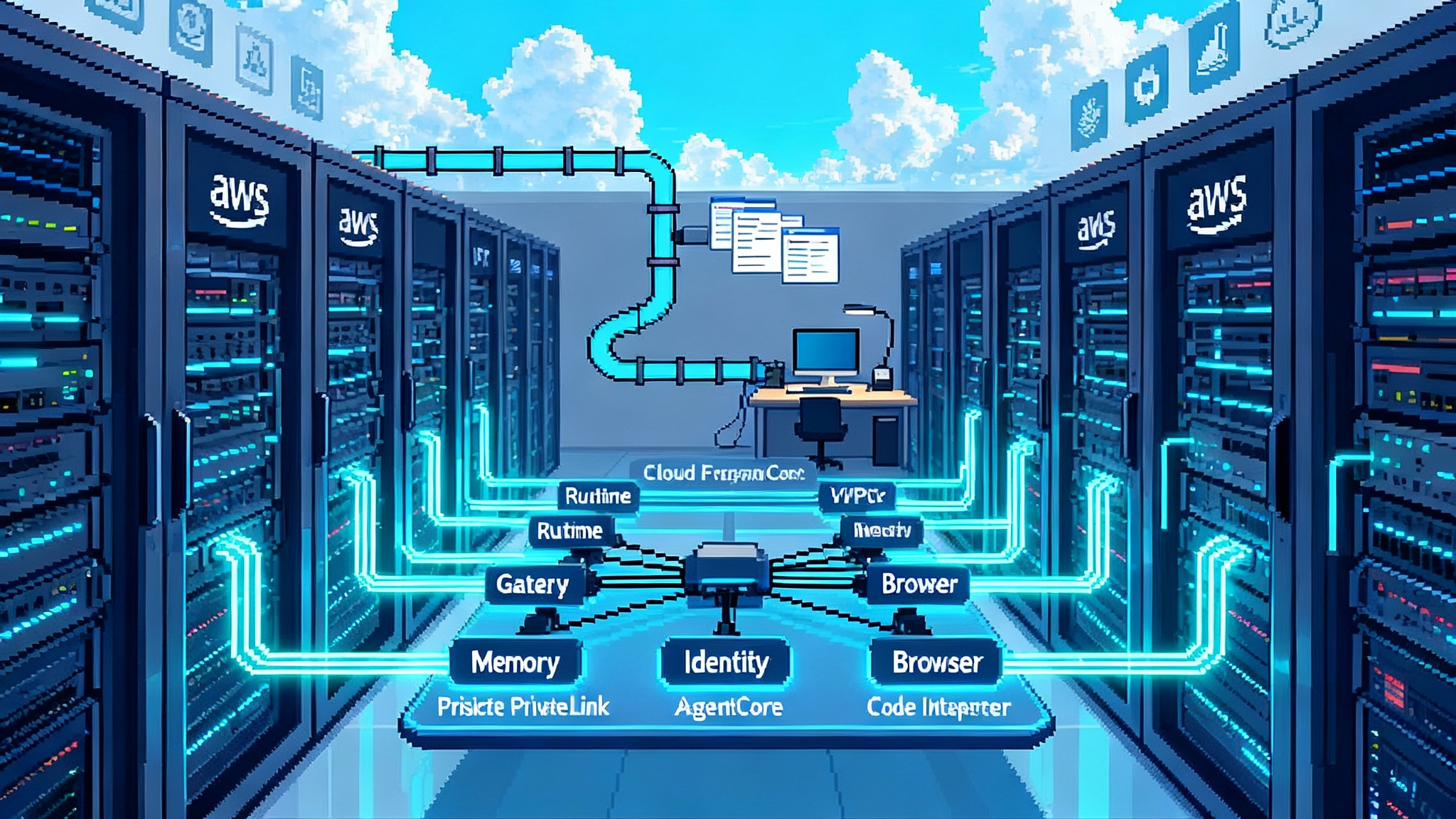

This shift also aligns with a broader pattern we have been tracking: platforms are racing to make agents native to their core products. For context, see our piece on agents as a native platform for how agent platforms are converging, "AWS AgentCore goes enterprise native" for how the infrastructure is becoming enterprise grade, and "GitHub Copilot Agent adds a teammate" for how software delivery teams are adopting copilots as teammates.

Playbooks teams can use this quarter

Workspace-native agents are powerful. Power needs discipline. Here is a starter playbook that any team can adopt in thirty days.

1) Governance you can explain

- Define an agent owner. One person is accountable for behavior and change management.

- Create an Agent Instructions page. Include tone, definitions of done, escalation rules, and a safe list of databases the agent can touch. Keep it short and living.

- Start with a permission map. List which databases and views the agent can read and write. Align this with your existing roles. Avoid one off exceptions.

- Set a change window. Agents change capabilities frequently. Allow updates during a weekly window and document changes on the Instructions page.

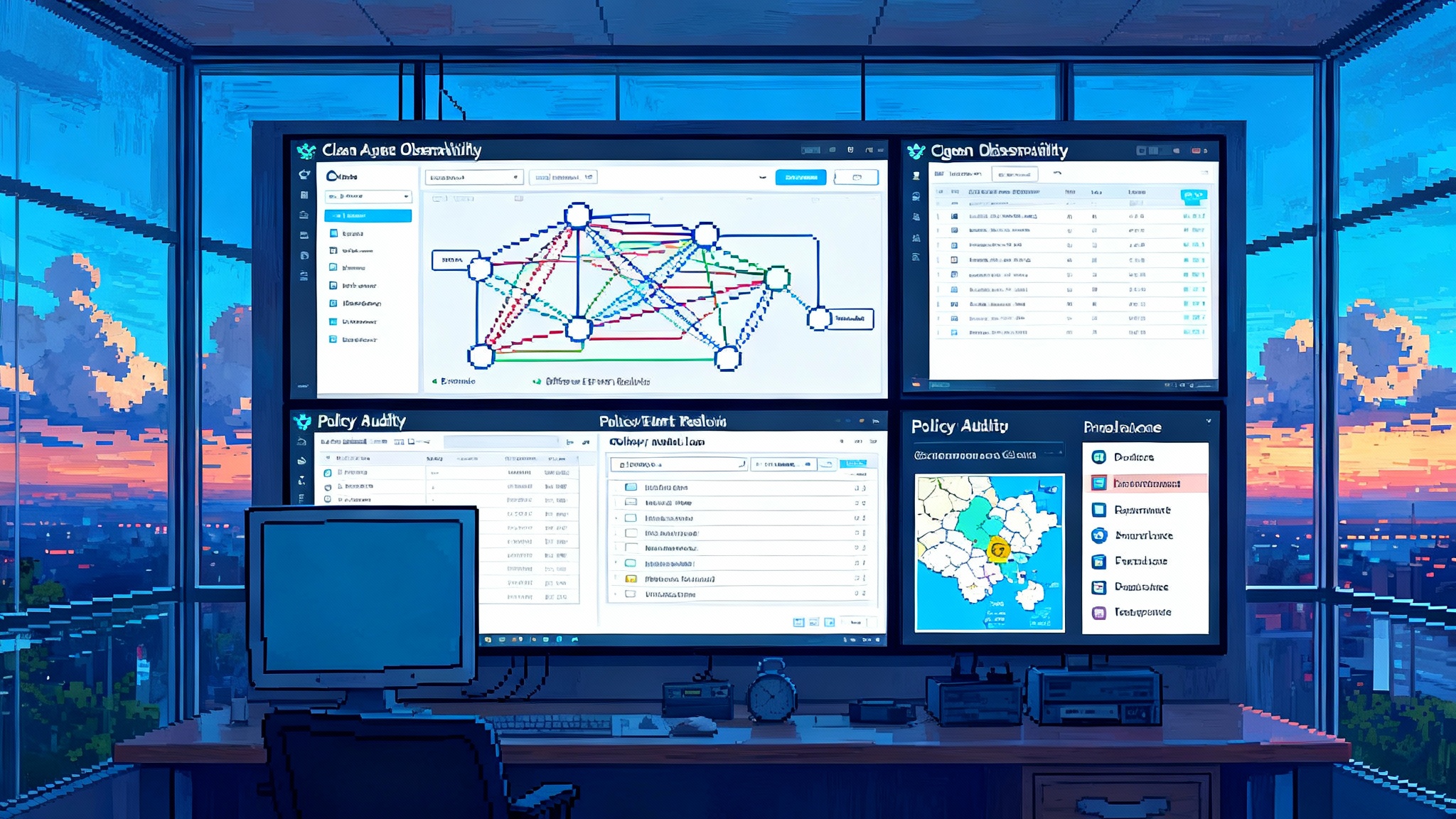

2) Observability you can act on

- Log runs to a database. One row per run with fields for initiator, scope, duration, records touched, success state, and cost.

- Capture diffs. For content edits, store before and after summaries with links to version history. For database changes, store counts by property.

- Create a Run Health dashboard. Show success rates, average duration, top failure reasons, and the top five records with repeated failures.

- Set alerts with simple rules. Example: alert if a run touches more than 200 rows, or if the success rate drops by 20 percent or more compared with the previous week.
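The dashboard metrics and the two alert rules above can be computed from the run log with very little code. A minimal sketch, assuming a hypothetical `Run` record and reading "drops by 20 percent" as a relative drop from last week's rate (a drop in percentage points is an equally valid reading). The top-five failing records view is omitted for brevity.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

MAX_ROWS_PER_RUN = 200    # example threshold from the playbook
MAX_RELATIVE_DROP = 0.20  # assumed: relative drop versus the previous week


@dataclass
class Run:
    succeeded: bool
    duration_s: float
    rows_touched: int
    failure_reason: Optional[str] = None


def success_rate(runs: list[Run]) -> float:
    return sum(r.succeeded for r in runs) / len(runs) if runs else 0.0


def run_health(runs: list[Run]) -> dict:
    """Headline numbers for a Run Health dashboard."""
    failures = Counter(r.failure_reason for r in runs if not r.succeeded)
    return {
        "success_rate": success_rate(runs),
        "avg_duration_s": sum(r.duration_s for r in runs) / len(runs) if runs else 0.0,
        "top_failure_reasons": failures.most_common(3),
    }


def alerts(this_week: list[Run], last_week: list[Run]) -> list[str]:
    """Apply the two simple alert rules and return human-readable messages."""
    out = [f"run touched {r.rows_touched} rows"
           for r in this_week if r.rows_touched > MAX_ROWS_PER_RUN]
    prev, cur = success_rate(last_week), success_rate(this_week)
    if prev > 0 and (prev - cur) / prev >= MAX_RELATIVE_DROP:
        out.append(f"success rate fell from {prev:.0%} to {cur:.0%}")
    return out
```

Whichever reading of the 20 percent rule you choose, write it on the Instructions page so reviewers and the alert logic agree.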

3) Human-in-the-loop review where it matters

- Use staging views. The agent writes proposed changes to a staging database or view. A human approves by moving cards to Ready. The agent then applies changes.

- Require approvals for external actions. If the agent emails customers or posts to channels, it must request sign off from a named reviewer.

- Teach the agent to ask. If a rule triggers uncertainty, the agent creates a comment that pings the owner with a short list of options.
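The two rules above (external actions need a named reviewer; uncertain actions become a comment to the owner) amount to a routing decision before anything is applied. This Python sketch is illustrative only: the action kinds, the `confidence` score, and the 0.7 floor are hypothetical, and a real agent may signal uncertainty differently.

```python
from dataclasses import dataclass, field

EXTERNAL_KINDS = {"email_customer", "post_channel"}  # hypothetical action names
CONFIDENCE_FLOOR = 0.7                               # hypothetical "uncertain" cutoff


@dataclass
class Action:
    kind: str          # e.g. "update_property", "email_customer"
    target: str
    confidence: float  # hypothetical 0..1 score for how sure the agent is
    options: list[str] = field(default_factory=list)


def route(action: Action, reviewer: str, owner: str) -> str:
    """Decide the next step: request sign-off, ask the owner, or apply."""
    if action.kind in EXTERNAL_KINDS:
        # External actions always wait for a named reviewer, however confident.
        return f"request sign-off from {reviewer}: {action.kind} -> {action.target}"
    if action.confidence < CONFIDENCE_FLOOR:
        choices = "; ".join(action.options) or "no options proposed"
        return f"comment @{owner}: unsure about {action.target}. Options: {choices}"
    return "apply"
```

Checking the external rule first means a highly confident agent still cannot email a customer without sign-off.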

4) Cost and safety controls

- Scope by default. Give the agent a narrow view until it proves reliable. Increase scope only after two consecutive green weeks on the Run Health dashboard.

- Cap run time and volume. Limit the agent to a set duration and a maximum number of rows per run. Increase only with evidence.

- Rotate reviewers. Assign a weekly reviewer role so approval standards do not drift toward one person's habits. Review the instructions page at the same time.
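Hard caps and the "two green weeks" rule are easy to enforce mechanically. Here is a hedged Python sketch: `RunBudget`, `process`, and `can_expand_scope` are hypothetical names, the cap values are placeholders, and the injectable clock exists only so the time limit can be tested.

```python
import time
from typing import Callable


class RunBudget:
    """Hard caps on duration and row count for a single run."""

    def __init__(self, max_seconds: float, max_rows: int,
                 clock: Callable[[], float] = time.monotonic):
        self.max_seconds = max_seconds
        self.max_rows = max_rows
        self.clock = clock
        self.start = clock()
        self.rows = 0

    def allow_next_row(self) -> bool:
        """Return True and count the row if both caps still have room."""
        if self.rows >= self.max_rows:
            return False
        if self.clock() - self.start >= self.max_seconds:
            return False
        self.rows += 1
        return True


def process(rows: list, budget: RunBudget) -> list:
    """Handle rows until the budget says stop; the rest wait for the next run."""
    done = []
    for r in rows:
        if not budget.allow_next_row():
            break
        done.append(r)
    return done


def can_expand_scope(weekly_status: list[str]) -> bool:
    """True only if the two most recent weeks on the dashboard were green."""
    return weekly_status[-2:] == ["green", "green"]
```

Stopping cleanly at the cap, rather than failing mid-row, leaves the remaining records untouched for the next scoped run.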

What to pilot by team function

- Product and design: Ask the agent to generate discovery summaries from research notes, then create draft specs linked to user stories. Require a reviewer to approve property mappings before anything moves to the sprint board.

- Sales operations: Let the agent create quarterly territory pages, fill them with account lists, and assign follow ups based on health scores. Keep email sends in review until leadership approves templates.

- Customer success: Use the agent to triage customer feedback into themes, link them to product areas, and propose the top three actions per theme. Maintain a human-first policy for any customer-facing message.

- Marketing: Have the agent generate a campaign brief from a strategy page and a database of past campaigns, then create tasks with due dates mapped to your lead time rules. Keep performance projections as drafts for analyst review.

- People operations: Allow the agent to draft onboarding checklists based on role pages, create a schedule of first week tasks, and post updates in a private channel. Require approval before any changes to compensation or policy pages.
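Several pilots above, like the marketing brief and the launch plan factory earlier, depend on mapping tasks to due dates from lead-time rules. The arithmetic is simple enough to sketch. The task names and day counts below are hypothetical examples, and the sketch counts calendar days; a team that plans in business days would need to skip weekends and holidays.

```python
from datetime import date, timedelta

# Hypothetical lead-time rules: days before launch each task is due.
LEAD_TIMES_DAYS = {
    "Draft blog post": 21,
    "Email copy": 14,
    "Social posts": 7,
    "Final QA": 2,
}


def schedule_tasks(launch: date, lead_times: dict[str, int] = LEAD_TIMES_DAYS) -> list[tuple[str, date]]:
    """Return (task, due_date) pairs sorted with the earliest due date first."""
    tasks = [(name, launch - timedelta(days=days)) for name, days in lead_times.items()]
    return sorted(tasks, key=lambda t: t[1])
```

For a launch on March 31, 2025, this puts the blog draft on March 10 and final QA on March 29.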

The next 3 to 6 months: what this signals

- From chat to canvases: Expect the default agent interface to be a canvas page or database view, not a message thread. Vendors will ship more block-level and view-level actions because that is where trust lives.

- Standardized audit and run logs: Buyers will ask for structured run logs at the database level, including diff links and cost estimates. Vendors that expose logs in the system of record will win.

- Row-level permissions become table stakes: If your databases cannot restrict by row, your agents cannot be trusted with partial access. Competitors will rush to match this.

- Agent templates replace macros: Teams will build and share reusable agent playbooks that combine instructions pages, staging views, and approval workflows. Think starter kits for launch plans, QBRs, or data hygiene.

- Procurement moves faster: Security reviews will shift from separate agent products to addenda on the workspace you already use. That reduces time to value.

- Model choice inside products: Vendors will expose state-of-the-art models behind the scenes while keeping the workflow stable. Teams will care more about observability and less about which model wrote a sentence.

What to do this week

- Pick one workflow where output lives in Notion today. Scope it to one database and two pages of instructions.

- Create a staging view and a review queue. Decide who approves. Decide what counts as good.

- Instrument run logs. Even a simple database with five fields will pay off: success, duration, records touched, cost, and initiator.

- Run two iterations. After each run, edit the instructions page and permissions. Watch the metrics and only then expand scope.

Risks and failure modes to avoid

- Silent drift: Agents improve fast, and small product changes can shift behavior. Solve with a change window and a weekly review ritual.

- Hidden permissions gaps: A public page can leak into runs through backlinks or related databases. Solve with a safe list of databases and a consistent naming scheme for sensitive pages.

- Cost spikes from bulk operations: A mis-scoped run that touches thousands of rows can surprise your budget. Solve with hard caps and alerts.

- Approval fatigue: If every action requires a human click, the novelty wears off. Solve by approving classes of changes once you build trust, and keep human review only for externally visible or irreversible actions.

The takeaway

Embedding agents inside the system of record is the unlock. Memory is not a prompt. It is a page that anyone can edit. Permissions are not a new concept. They are the same roles your team already uses. Long running tasks are not scary if you can see each step and roll back changes inside the tools you trust.

The path forward is practical. Start narrow, measure everything, and keep a human in the loop where outcomes meet customers. As agents learn to live in our documents and databases, the most valuable work you can do is to teach them how your organization thinks. Write it down. Give it structure. Let the agent run. Then watch your system of record turn into a system of action.