A Whisper On Your Finger: Sandbar’s Stream Ring Arrives

Sandbar’s Stream Ring puts a whisper-ready assistant on your finger. Here is why a ring can beat a pendant, how hardware and models must be co-designed, and what this shift means for developers and users in 2026.

On November 5, a quiet launch that could be loud

Sandbar’s Stream Ring arrived on November 5 with a promise that sounds almost like science fiction: press a fingertip, whisper into your hand, and an assistant captures the thought without announcing it to the room. The company positions the product as a tool for quick, private exchanges that organize themselves into notes and tasks, and as a small companion that can speak back in a voice you choose. According to Wired’s first look at Stream Ring, preorders opened at $249 for silver and $299 for gold, with U.S. shipping targeted for summer 2026. That price point, the whisper-first capture, and the choice to ship later rather than rush are the clearest signals of an emerging category shift.

If you squint, you can see the broader story: the center of gravity for agents is moving from chat boxes on phones to ambient, on-person devices you do not have to look at. It begins with something as humble as a ring, and the key phrase is whisper-native. This is not voice as a billboard. It is voice as a note-to-self that an agent hears as clearly as if you were alone.

Why a ring beats a pendant in real life

A ring and a pendant both bring the microphone closer to your mouth than a phone in your pocket, but they change social dynamics and physics in opposite ways.

- Microphone distance and noise. A pendant sits 20 to 30 centimeters from your mouth, so in a busy environment it battles wind, traffic, and cafeteria clatter and needs aggressive noise reduction and beamforming. A ring can be cupped two to five centimeters from your lips. At that distance, even a soft whisper yields a higher signal-to-noise ratio. You need fewer algorithmic heroics, which means fewer mistakes on names, numbers, and addresses.

- Activation and privacy. Chest-worn devices often rely on wake words or tap-to-talk on clothing. Wake words are socially awkward and prone to false triggers. A ring is a natural push-to-talk button: a half-second press is private, positive control. You do not shout across a room to turn on your thoughts.

- Social acceptability. Whispering into your hand is a familiar gesture. We already cover our mouths to be discreet. Talking into a pendant reads as broadcasting. The ring is a diary you hold closed with your palm when you need to.

- Battery and size. Pendants can house larger batteries and speakers. Rings have a tighter energy budget, which forces design discipline. The tradeoff is worth it if proximity lets you run lighter models, lower microphone gain, and shorter inference paths.

- Cloud dependence risk. The Humane Ai Pin shutdown in early 2025 is a cautionary tale for cloud-bound wearables. When a device’s core functions die with its servers, trust in the whole category suffers. See The Verge's report on the Ai Pin shutdown for the timeline and lessons.
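
The microphone-distance point can be made concrete with the free-field rule of thumb that sound pressure from a point source falls roughly as 1/r, or about 6 dB per halving of distance. The sketch below applies that rule to the midpoints of the two distance ranges above. It ignores near-field effects, the acoustic effect of a cupped hand, and room reflections, so treat the result as a rough indication, not a measurement. If the background noise reaching the microphone stays the same, the signal-to-noise ratio improves by the same number of decibels.

```python
import math


def level_gain_db(far_cm: float, near_cm: float) -> float:
    """Free-field approximation: pressure falls as 1/r, so moving the microphone
    from far_cm to near_cm raises the voice level by 20*log10(far/near) dB."""
    if far_cm <= 0 or near_cm <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(far_cm / near_cm)


# Midpoints of the ranges in the text (assumed for illustration).
pendant_cm = 25.0  # 20 to 30 cm for a chest-worn pendant
ring_cm = 3.5      # 2 to 5 cm for a cupped ring

print(f"Voice level advantage at the ring: {level_gain_db(pendant_cm, ring_cm):.1f} dB")
# Voice level advantage at the ring: 17.1 dB
```

Roughly 17 dB of extra margin is the headroom that lets a ring get by with lighter noise suppression, a budget a pendant has to recover algorithmically.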

Whisper-native is a hardware-plus-model handshake

Whisper-native is not a marketing flourish. It is a systems claim. To deliver a satisfying whisper interaction without false activations, cut-off sentences, or awkward repeats, multiple components must be co-designed and tuned as a unit.

1) The acoustic front end

- Microphone choice and placement. At whisper distances, low-self-noise microphones matter more than heroic arrays. A ring can favor a single, high-quality microphone with a tuned acoustic port over a chest array that must fight room acoustics.

- Mechanical isolation. The finger taps, scrapes pockets, and knocks mugs. Gaskets and mechanical decoupling reduce handling noise that would otherwise swamp a low level voice.

- Analog path. Automatic gain control must ramp quickly without pumping. Set the floor low enough to catch a quiet syllable, but clamp peaks so sudden laughter does not clip and garble the transcription.

- Voice activity detection. Think of it as a gatekeeper that decides when to stream audio to the model. With a ring, push-to-talk is the first filter. Add a whisper-optimized detector that tolerates breathier phonemes and faster cutoffs, and your false-start rate plummets.
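
The two-stage gate in the voice activity bullet can be sketched as follows. The button state is checked first, and only inside a press does a detector decide which frames to stream. As a stand-in for a whisper-optimized detector, this sketch uses a plain energy threshold with a short "hangover" that keeps the gate open for a few frames after the level drops, so trailing consonants are not clipped. The threshold, hangover length, frame size, and every name here are assumptions for illustration; a production detector would typically use spectral features or a small model.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GateConfig:
    # Hypothetical values; real thresholds would be tuned on recorded whispers.
    threshold_dbfs: float = -55.0  # low floor so breathy, quiet syllables still count
    hangover_frames: int = 8       # keep streaming briefly after the level drops


def frame_dbfs(frame: list[float]) -> float:
    """RMS level of one frame of samples in [-1, 1], in dB relative to full scale."""
    if not frame:
        return -math.inf
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    return 20.0 * math.log10(rms) if rms > 0 else -math.inf


def gate_frames(frames: list[list[float]], pressed: list[bool],
                cfg: GateConfig = GateConfig()) -> list[bool]:
    """Return one flag per frame: True means stream that frame to the model.

    Push-to-talk is the first filter: nothing passes while the button is up.
    Inside a press, an energy threshold plus hangover picks the speech frames.
    """
    decisions = []
    hang = 0
    for frame, is_pressed in zip(frames, pressed):
        if not is_pressed:
            hang = 0
            decisions.append(False)
        elif frame_dbfs(frame) >= cfg.threshold_dbfs:
            hang = cfg.hangover_frames
            decisions.append(True)
        elif hang > 0:
            hang -= 1
            decisions.append(True)
        else:
            decisions.append(False)
    return decisions


# Example: 10 ms frames at 16 kHz (160 samples); the button is released on the last frame.
quiet_speech = [0.01] * 160  # RMS 0.01, i.e. -40 dBFS
silence = [0.0] * 160
frames = [silence, quiet_speech, silence, silence, quiet_speech]
pressed = [True, True, True, True, False]
print(gate_frames(frames, pressed, GateConfig(hangover_frames=1)))
# [False, True, True, False, False]
```

The final frame carries speech-level energy but is still dropped because the button is up, which is the privacy property push-to-talk buys before any detector runs.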

2) The wake stack and the no-wait rule

Latency is the user interface. From press to first token, the system needs to feel instant. Under about 250 milliseconds, most people will not notice a lag. That budget must be split across Bluetooth, buffering, acoustic preprocessing, transcription, and the first model response.

- Stream transport. Keep packets small and buffering jitter-tolerant so the first chunk of audio reaches the model in tens of milliseconds, not hundreds.

- Streaming transcription. Partial results unlock turn-taking. If the system begins to decode within 100 to 150 milliseconds, the assistant can reason while you finish the sentence. That is how a whisper exchange feels like thinking out loud rather than dictating to a machine.

- Local-first, cloud when needed. A compact, on-device speech model handles wake, endpointing, and initial transcription. Larger language models can be consulted for complex tasks, but the first beat should land locally so you feel a haptic pulse or hear a soft tone immediately.

3) The battery budget, stated plainly

Rings do not bend the laws of physics. Space for cells is tight, and the finger adds a thermal ceiling. That forces rigorous power accounting.

- Duty cycles over brute force. Capture occurs in short bursts. Keep radios dark by default. Use push-to-talk to avoid hot mics and wasted frames. Store only what you need for the current turn.

- Efficient inference. Compress the speech and language models and quantize them to low precision where doing so does not hurt intelligibility. Offload to a small digital signal processor when possible. The goal is tokens per joule, not abstract benchmark glory.

- Smart haptics and audio. Acknowledgments should be felt, not heard, unless you are wearing earbuds. The ring can keep any speaker output at a discreet, public-safe volume and reserve longer audio for headphones. That preserves both privacy and battery.
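
Power accounting for a duty-cycled device comes down to a weighted average: each mode's current multiplied by the fraction of time spent in it, summed, then divided into the cell capacity. The sketch below assumes three modes and made-up currents, duty cycles, and a 20 mAh cell; none of these are Sandbar specifications, and the runtime ignores cell aging, temperature, and conversion losses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    name: str
    current_ma: float  # average current draw in this mode (hypothetical)
    duty: float        # fraction of the day spent in this mode, 0..1


def average_current_ma(modes: list[Mode]) -> float:
    """Duty-weighted average current: sum of current times time fraction."""
    total_duty = sum(m.duty for m in modes)
    if abs(total_duty - 1.0) > 1e-9:
        raise ValueError(f"duty cycles must sum to 1, got {total_duty}")
    return sum(m.current_ma * m.duty for m in modes)


def runtime_hours(capacity_mah: float, modes: list[Mode]) -> float:
    """Idealized runtime; ignores aging, cold, and regulator losses."""
    return capacity_mah / average_current_ma(modes)


# Hypothetical numbers for illustration only.
modes = [
    Mode("sleep, radios dark", current_ma=0.05, duty=0.978),
    Mode("capture and stream", current_ma=8.0, duty=0.020),
    Mode("haptic or tone ack", current_ma=20.0, duty=0.002),
]

avg = average_current_ma(modes)
print(f"average {avg:.3f} mA, about {runtime_hours(20.0, modes):.0f} h on a 20 mAh cell")
for m in modes:
    print(f"{m.name:<20} {m.current_ma * m.duty / avg:.0%} of the energy")
```

In this made-up profile the short capture bursts account for most of the energy even at a 2 percent duty cycle, so trimming hot-mic time pays off more than shaving sleep current.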

4) Data flow and failure modes

Whisper-native also means failure is private by default. If the connection drops, the ring should save the audio snippet locally and mark it for retry. If the assistant crashes, your raw thought should still become a note rather than vanish. Controls must be physical and obvious: a long press to erase the last capture, a pattern to mute all microphones, a light that signals state clearly.
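
The failure rules above map naturally onto a store-and-forward queue: every capture is saved locally first, a dropped connection leaves it queued, an assistant failure still produces a note (with the raw audio kept), and a long press removes the newest unsent capture. Everything here, including `CaptureStore` and the `transcribe` callback, is a hypothetical sketch rather than a description of how Stream Ring works.

```python
import itertools
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Capture:
    capture_id: int
    audio: bytes
    attempts: int = 0


class CaptureStore:
    """Hypothetical on-ring store: every capture is saved locally before upload."""

    def __init__(self) -> None:
        self._pending: deque[Capture] = deque()
        self._ids = itertools.count(1)
        self.notes: list[str] = []
        self.raw_audio: dict[int, bytes] = {}  # audio kept when transcription fails

    def capture(self, audio: bytes) -> int:
        cap = Capture(next(self._ids), audio)
        self._pending.append(cap)
        return cap.capture_id

    def erase_last(self) -> bool:
        """Long-press erase: drop the newest capture that has not been sent yet."""
        if not self._pending:
            return False
        self._pending.pop()
        return True

    def pending_count(self) -> int:
        return len(self._pending)

    def flush(self, transcribe: Callable[[bytes], str]) -> None:
        """Send pending captures; a dropped link keeps them queued for retry."""
        retry: deque[Capture] = deque()
        while self._pending:
            cap = self._pending.popleft()
            cap.attempts += 1
            try:
                text = transcribe(cap.audio)
            except ConnectionError:
                retry.append(cap)  # link dropped: keep the audio, try again later
                continue
            except Exception:
                # Assistant failed: the thought still becomes a note, raw audio kept.
                self.raw_audio[cap.capture_id] = cap.audio
                self.notes.append(f"Untranscribed capture #{cap.capture_id}")
                continue
            self.notes.append(text)
        self._pending = retry


def out_of_range(audio: bytes) -> str:
    raise ConnectionError("phone out of range")


store = CaptureStore()
store.capture(b"buy oat milk")
store.capture(b"never mind")
store.erase_last()                         # long press discards the second capture
store.flush(out_of_range)                  # nothing lost: the capture stays queued
store.flush(lambda audio: audio.decode())  # phone is back in range
print(store.notes, store.pending_count())
# ['buy oat milk'] 0
```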

The ring as input device, not a phone replacement

The smartest framing is to treat a ring as a mouse for voice. Like a mouse, it is not expected to render a page or hold your files. It provides fast, precise intent. The stream of intent is then handled by an agent on your phone or in your earbuds. That model aligns with how people will actually use these devices in public: short requests, minimal feedback, and a clean transcript or task created out of sight.

When the phone is nearby, the agent can use its radios, accelerators, and storage. When the phone is away, the ring should still be useful for offline capture and lightweight commands. This layered capability is how the category avoids the trap of shipping yet another phone by proxy.
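
One way to express this layering is a small routing rule that picks the lowest tier able to serve a request right now. The three tiers follow the paragraph above; the command names, flags, and fallback policy are assumptions for illustration.

```python
from enum import Enum


class Tier(Enum):
    RING = "ring"    # offline capture and lightweight commands
    PHONE = "phone"  # phone radios, accelerators, and storage
    CLOUD = "cloud"  # larger models for complex tasks


# Hypothetical commands small enough to run on the ring itself.
RING_COMMANDS = {"capture_note", "start_timer", "mark_done"}


def route(intent: str, phone_nearby: bool, network_up: bool, complex_task: bool) -> Tier:
    """Pick the lowest tier that can serve the request right now."""
    if intent in RING_COMMANDS:
        return Tier.RING
    if not phone_nearby:
        # No phone: fall back to offline capture; the request is processed later.
        return Tier.RING
    if complex_task and network_up:
        return Tier.CLOUD
    return Tier.PHONE


print(route("add_event", phone_nearby=False, network_up=True, complex_task=False))
```

The design choice is that absence of the phone degrades the ring to capture rather than failing the request, which keeps the ring useful without turning it into a phone by proxy.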

Why this signals a shift away from chat apps

Chat apps taught hundreds of millions of people to ask computers for help in text. The problem is that many high value moments happen when your hands and eyes are busy: keys in one hand, coffee in the other, brain full of a half formed idea. A chat window is the wrong shape for that.

A whisper-native ring matches the moment. You turn a fleeting thought into a structured note, a task, a calendar change, or a shopping list without breaking stride. Even more important, the cost of asking for something small goes down. You do not need to unlock a device, navigate, or worry about who is watching your screen.

What developers should build in 2026

If rings and other micro-wearables become common in 2026, developers need tools and patterns that assume audio-first, intermittent interaction.

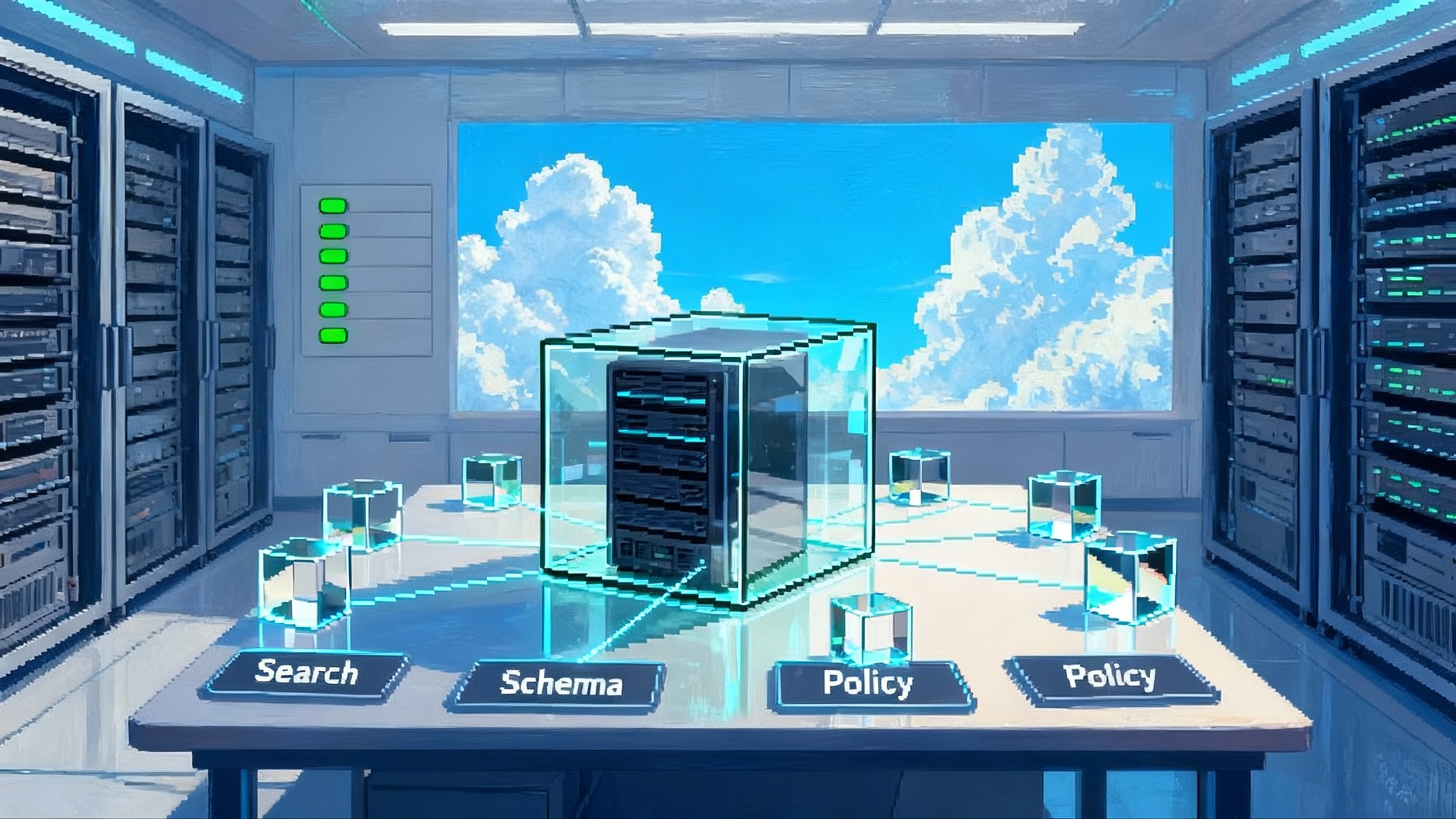

- Intent schemas, not chat logs. Agents should accept compact, typed intents derived from speech, with confidence scores and timestamps, rather than unstructured paragraphs of text. That unlocks fast and robust function calls to calendars, notes, and to-do apps. For orchestration patterns across many small skills, study how a Meta Agent orchestrates AI teams and adapt its contract-driven approach to audio events.

- Event-driven thinking. Users will pause mid-sentence, change their minds, or correct a name. Expose events such as partial transcript updates, entity confirmations, and end of turn so applications can act before the user finishes speaking. As you design state machines, remember that silence is also a signal.

- Low-latency response surfaces. Provide patterns for haptic confirmations, one-second summaries, and structured payloads the phone can render later. Think queue now, decorate later. The payoff is a sense of momentum without chatter.

- Local capabilities by default. Ship offline grammar packs for your core domain so the easiest tasks run without a network. Use larger models only when needed and tell users when a request leaves the device. Database interactions will need constrained, auditable calls. Approaches like Agentic Postgres for safe parallelism show how to keep fast paths deterministic and explainable.

- Developer ergonomics. Voice-first agents still benefit from strong IDE support. Systems that can compose scaffolds and refactor flows quickly will win. The move toward agent-assisted coding, as seen in Composer, which rewrites the IDE playbook, hints at how teams will prototype and iterate conversational states.

- Clear privacy contracts. Publish a simple, verifiable policy that states what audio is stored, what text is stored, and for how long. Offer export pathways to common tools such as Notion or Obsidian without lock-in.
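
The first two bullets, typed intents and event-driven turns, fit together in a small state machine: a partial transcript opens a draft intent, entity confirmations attach typed entities with confidence scores, and end of turn commits the result with timestamps. All class names, fields, the 0.8 confidence threshold, and the "weakest entity" confidence rule are assumptions for illustration, not a published Sandbar or vendor schema.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional


class EventType(Enum):
    PARTIAL_TRANSCRIPT = auto()
    ENTITY_CONFIRMATION = auto()
    END_OF_TURN = auto()


@dataclass(frozen=True)
class Entity:
    kind: str          # e.g. "person", "time"
    value: str
    confidence: float  # 0..1, from the recognizer


@dataclass
class Intent:
    """Hypothetical typed intent passed to calendar, notes, or to-do functions."""
    action: str
    entities: list[Entity] = field(default_factory=list)
    confidence: float = 0.0
    started_at_ms: int = 0
    ended_at_ms: Optional[int] = None


@dataclass(frozen=True)
class Event:
    kind: EventType
    timestamp_ms: int
    text: str = ""
    entity: Optional[Entity] = None


class Turn:
    """Tiny state machine: start work on partial results, commit at end of turn."""

    def __init__(self, min_confidence: float = 0.8) -> None:
        self.min_confidence = min_confidence
        self.draft: Optional[Intent] = None
        self.to_confirm: list[Entity] = []
        self.committed: list[Intent] = []

    def handle(self, ev: Event) -> None:
        if ev.kind is EventType.PARTIAL_TRANSCRIPT and self.draft is None:
            # A real agent would classify ev.text; "create_task" is a placeholder.
            self.draft = Intent(action="create_task", started_at_ms=ev.timestamp_ms)
        elif (ev.kind is EventType.ENTITY_CONFIRMATION
              and self.draft is not None and ev.entity is not None):
            self.draft.entities.append(ev.entity)
            if ev.entity.confidence < self.min_confidence:
                self.to_confirm.append(ev.entity)  # e.g. buzz and ask about the name
        elif ev.kind is EventType.END_OF_TURN and self.draft is not None:
            self.draft.ended_at_ms = ev.timestamp_ms
            # Overall confidence = weakest entity (one simple, assumed policy).
            self.draft.confidence = min(
                (e.confidence for e in self.draft.entities), default=0.0
            )
            self.committed.append(self.draft)
            self.draft = None


turn = Turn()
for ev in [
    Event(EventType.PARTIAL_TRANSCRIPT, 0, "remind me to call"),
    Event(EventType.ENTITY_CONFIRMATION, 180, entity=Entity("person", "Priya", 0.62)),
    Event(EventType.ENTITY_CONFIRMATION, 240, entity=Entity("time", "3 pm", 0.95)),
    Event(EventType.END_OF_TURN, 900),
]:
    turn.handle(ev)
print(turn.committed[0].confidence, [e.value for e in turn.to_confirm])
```

Because the low-confidence name is flagged while the user is still speaking, the app can queue a haptic prompt before end of turn instead of waiting for a full paragraph of text.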

Privacy economics: what changes when whispers pay the bills

On-device processing shifts both the cost structure and the value proposition.

- Subscriptions make more sense than ads. A whisper is not an ad slot. Users who trust a ring to hear them will pay a small fee to keep their data private. Price the tiers so the free plan remains useful and the paid plan offers real power without turning captured thoughts into bait.

- Cost of inference becomes a product lever. If your local models are efficient, you can afford generous free usage. If every turn hits a large cloud model, you must charge more or rate limit. Expect new metrics in 2026 such as tokens per joule and cost per minute of local transcription.

- Data gravity flips. The richest contextual data sits on the phone and ring, not in a vendor cloud. Winners will let users grant narrow, revocable access to that context so agents can help without vacuuming every detail of their lives.

- Compliance as a feature. Simple audit trails for who or what touched a transcript, plus on-device redaction of sensitive entities, will be a selling point for families and businesses.
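
The compliance bullet pairs two mechanisms: redaction that runs before text leaves the device, and an audit trail recording every actor that touches a transcript. The sketch below uses two deliberately narrow regular expressions as stand-ins; the patterns, labels, and actor names are hypothetical, and real redaction would need locale-aware rules and usually a named-entity model on top.

```python
import re
import time
from dataclasses import dataclass, field

# Hypothetical, deliberately narrow patterns for illustration.
PATTERNS = {
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
}


@dataclass
class Transcript:
    text: str
    # Each audit entry is (unix time, actor, action).
    audit: list[tuple[float, str, str]] = field(default_factory=list)

    def touch(self, actor: str, action: str) -> None:
        """Record who or what accessed or changed this transcript."""
        self.audit.append((time.time(), actor, action))


def redact(t: Transcript, actor: str = "on-device-redactor") -> dict[str, int]:
    """Replace sensitive spans in place and log the change; returns counts per label."""
    counts = {}
    for label, pattern in PATTERNS.items():
        t.text, counts[label] = pattern.subn(f"[{label}]", t.text)
    t.touch(actor, f"redacted {counts}")
    return counts


t = Transcript("Call Dana at 555-123-4567 or dana@example.com")
redact(t)
t.touch("calendar-agent", "read")
print(t.text)
for _, actor, action in t.audit:
    print(actor, "->", action)
```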

Everyday adoption: where a ring fits in real life

- The commute. You whisper a three-part reminder while walking. The agent parses it into tasks and calendar blocks, then vibrates to confirm each entity it recognized. If it missed a name, you correct it with a two-word follow-up without stopping.

- The meeting. Instead of recording the whole conversation, you capture only your own thoughts in the moment. The agent drafts a one-paragraph summary of your next steps and leaves the rest to the team’s shared tool.

- The store. You ask for the list to play back in your earbud and swipe the ring to check off items. No big voice prompts. No phone out.

- The kitchen. You quietly ask for a substitution while a baby naps in the next room. The agent answers with haptics and a short phrase at low volume.

The pattern is micro interactions that reduce mental overhead. Success here is not measured in wow demos but in friction removed.

Metrics that matter in 2026

If you want to judge whether whisper native agents are real, watch these numbers and behaviors:

- Whisper word error rate. Not just overall accuracy, but accuracy on names, numbers, and short commands at low volume.

- End-to-end latency. Time from press to first acknowledgment and time to a complete, useful response.

- Battery honesty. Day-long battery claims that hold up under realistic mixed use and cold weather.

- Privacy defaults. Microphones off unless pressed, no wake words unless explicitly enabled, clear local deletion.

- Reliability percentiles. How often the device completes a simple capture in one shot, not counting retries.
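
Three of these metrics have standard, simple definitions worth pinning down. Word error rate is the word-level edit distance (substitutions, deletions, insertions) divided by the number of reference words; latency is best reported as percentiles rather than averages; and one-shot reliability is the share of captures that succeed on the first attempt. The sketch uses the nearest-rank percentile definition, and the sample sentences and latencies are made up for illustration.

```python
import math


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / reference words, via word-level Levenshtein."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion
                cur[j - 1] + 1,          # insertion
                prev[j - 1] + (r != h),  # substitution (free if the words match)
            )
        prev = cur
    return prev[-1] / len(ref)


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def one_shot_rate(attempts_per_capture: list[int]) -> float:
    """Share of captures that succeeded on the first attempt, retries excluded."""
    if not attempts_per_capture:
        raise ValueError("no captures")
    return sum(1 for a in attempts_per_capture if a == 1) / len(attempts_per_capture)


# Made-up press-to-acknowledgment latencies in milliseconds.
press_to_ack_ms = [180, 190, 200, 210, 240, 260, 300, 320, 400, 900]

print(word_error_rate("call priya at three fifteen", "call maria at three fifty"))  # 0.4
print(percentile(press_to_ack_ms, 50), percentile(press_to_ack_ms, 95))          # 240 900
print(one_shot_rate([1, 1, 2, 1, 3]))                                            # 0.6
```

The example shows why the metrics list singles out names and numbers: two wrong words in a five-word command is a 40 percent error rate, and the 95th percentile exposes a slow tail that a median of 240 ms would hide.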

The open question: where the voice lives

Rings solve input elegantly. Earbuds solve output. Phones and laptops solve heavy lifting. In 2026, expect tighter choreography among the three. A ring can hand off longer responses to earbuds or a car speaker. Earbuds can detect that you are already on a call and delay non-urgent agent replies. Phones can render the full transcript with entities, links, and actions, but only after the moment has passed.
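
The choreography described here can be sketched as an output policy: acknowledgments stay on the ring as haptics, non-urgent replies wait while you are on a call, longer replies go to earbuds or a car speaker, and everything else is rendered on the phone later. The context flags, destination names, and rule order are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    earbuds_connected: bool
    on_call: bool
    in_car: bool


def choose_output(is_ack: bool, urgent: bool, ctx: Context) -> str:
    """Hypothetical policy for where an agent reply should surface."""
    if is_ack:
        return "ring_haptic"   # acknowledgments are felt, not heard
    if ctx.on_call and not urgent:
        return "defer"         # hold non-urgent replies until the call ends
    if ctx.earbuds_connected:
        return "earbuds"       # longer spoken replies go to the ear
    if ctx.in_car:
        return "car_speaker"
    return "phone_later"       # render the full transcript on the phone afterward


# Earbuds in, already on a call: a non-urgent answer waits, an urgent one plays.
ctx = Context(earbuds_connected=True, on_call=True, in_car=False)
print(choose_output(False, False, ctx), choose_output(False, True, ctx))
```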

We will also see healthy competition from other form factors. Glasses are a natural fit for longer interactions with private displays. Wristbands offer more battery and sensors for health. Pendants will evolve with better microphones and local models. Yet the ring’s superpower is the oldest one in computing: the quickest path from intention to action.

The bottom line

Sandbar’s launch is more than a new gadget. It is a sign that agents are escaping the chat window and learning to live in the seams of daily life. The winners will be the teams that sweat the details most people never see: microphone ports, analog gain curves, quantization strategies, haptic patterns, and graceful recovery when the model misunderstands. A ring that listens only when asked, responds without delay, and remembers only what you consent to save is not just better ergonomics. It is better computing. If 2025 was the year we talked to demos, 2026 can be the year we quietly talk to ourselves and our tools finally keep up.