Adobe’s Agent Orchestrator Makes CDPs Plan and Act

On September 10, 2025, Adobe made AI Agents and the AEP Agent Orchestrator generally available, turning the CDP into a control plane that plans and executes across RT-CDP, Journey Optimizer, AEM, and partner tools.

Breaking: the CDP becomes a control plane

On September 10, 2025, Adobe said its AI Agents and the Adobe Experience Platform Agent Orchestrator are generally available, positioning the customer data platform as the coordination layer for how enterprise agents plan and act across marketing stacks. In effect, the CDP becomes a control plane that understands identity and consent, while agents translate business goals into executable plans across applications. General availability raises expectations for what a CDP is supposed to do.

The headline is simple. If a customer data platform knows the who, agentic software can decide the what and when, and the orchestrator ensures the how and why are governed, auditable, and measured. Taken together, this yields a system that can brief, execute, and learn inside your marketing tools without waiting for weekly standups or quarterly roadmaps.

From chat to goals: what changes now

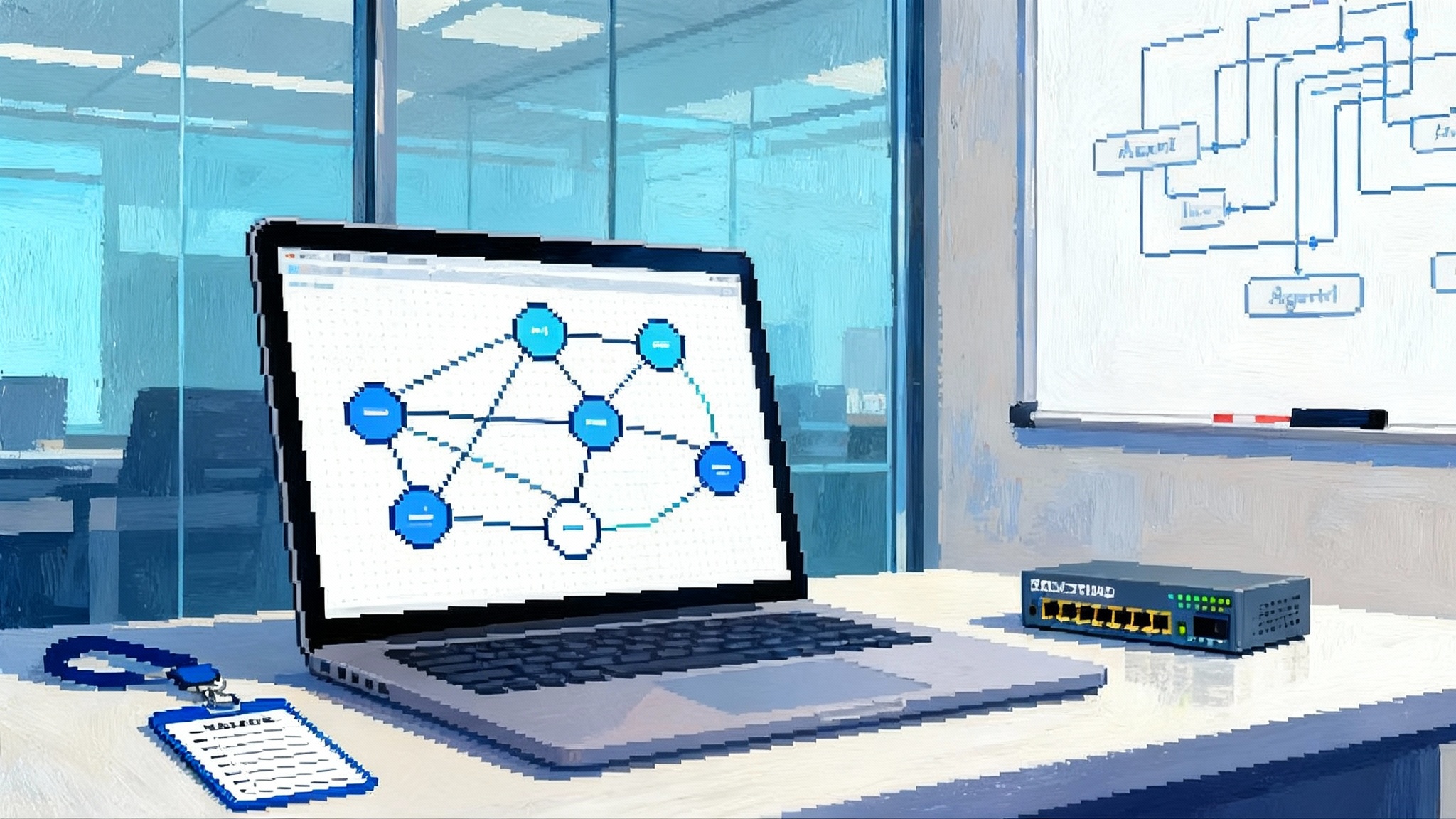

Most chatbot projects answered questions in a single session. They lived inside one channel and were confined to one model. The AEP Agent Orchestrator starts from a different place. It sits on top of Adobe Real-Time Customer Data Platform for identity and audiences, Adobe Journey Optimizer for cross-channel journeys and decisioning, Adobe Experience Manager for content, and it reaches into third-party tools for service, commerce, and analytics.

Where chatbots waited for prompts, agents hold explicit business goals such as increasing activation among first-time buyers in a specific region or recovering revenue from cart abandoners without hurting margins. They propose plans, take steps, and report outcomes with provenance. That shift from reactive answers to proactive planning is the practical difference teams will feel in the first month.

Think of it like air traffic control for enterprise actions. RT-CDP provides a live radar of customers and segments. Journey Optimizer is the flight scheduler. AEM is the hangar of content and templates. The orchestrator allocates runways, enforces rules, and coordinates with other control towers when a plan crosses into third-party airspace.

How agents coordinate across Adobe and beyond

The AEP Agent Orchestrator exposes a common contract for agents to declare capabilities, request data with consent, trigger actions in applications, and log outcomes. Adobe previewed a portfolio that included Audience, Journey, Experimentation, Data Insights, Data Engineering, Site Optimization, and Product Advisor, along with partnerships across infrastructure, service, analytics, and enterprise systems. That preview now becomes real. For background on the shape of that portfolio and ecosystem, see Adobe's March Summit release detailing ten agents.

Operationally, the breakthrough is not that agents exist but that they cooperate. A Journey Agent can request a fresh audience from the Audience Agent, which in turn can ask the Policy service to validate consent types. Both can request content variants from AEM that honor brand rules and accessibility requirements. If the plan includes a service recovery step in a help center, the orchestrator can call into a partner ticketing system, then reconcile outcomes back to the unified profile in RT-CDP.
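The cooperation pattern can be made concrete with a minimal in-memory sketch. Everything in it is hypothetical: `Orchestrator`, `register`, `request`, the capability names, and the sample profiles are illustrative stand-ins, not Adobe's API. The sketch shows three things: agents declaring capabilities, the Audience Agent delegating a consent check to a policy capability, and the orchestrator logging every call with its caller so provenance survives.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical sketch: none of these names come from Adobe's actual API.


@dataclass
class Orchestrator:
    handlers: dict[str, Callable[..., Any]] = field(default_factory=dict)
    log: list[dict[str, str]] = field(default_factory=list)

    def register(self, agent: str, capability: str, handler: Callable[..., Any]) -> None:
        """An agent declares a capability that other agents may request."""
        self.handlers[capability] = handler
        self.log.append({"event": "register", "agent": agent, "capability": capability})

    def request(self, caller: str, capability: str, **kwargs: Any) -> Any:
        """Route a request to the declaring agent, logging who asked for what."""
        if capability not in self.handlers:
            raise LookupError(f"no agent declares {capability!r}")
        self.log.append({"event": "call", "caller": caller, "capability": capability})
        return self.handlers[capability](self, **kwargs)


PROFILES = [
    {"id": "p1", "region": "CA", "consent": {"email", "push"}},
    {"id": "p2", "region": "CA", "consent": {"email"}},
    {"id": "p3", "region": "NY", "consent": {"push"}},
]


def validate_consent(orch: Orchestrator, profiles: list[dict], channel: str) -> list[dict]:
    # Policy service: keep only profiles that consented to this channel.
    return [p for p in profiles if channel in p["consent"]]


def build_audience(orch: Orchestrator, region: str, channel: str) -> list[dict]:
    # Audience Agent: select candidates, then delegate the consent check.
    candidates = [p for p in PROFILES if p["region"] == region]
    return orch.request("audience-agent", "policy.validate_consent",
                        profiles=candidates, channel=channel)


orch = Orchestrator()
orch.register("policy-service", "policy.validate_consent", validate_consent)
orch.register("audience-agent", "audience.build", build_audience)

# The Journey Agent asks for a push audience in CA; consent filtering happens downstream.
audience = orch.request("journey-agent", "audience.build", region="CA", channel="push")
print([p["id"] for p in audience])  # ['p1']
```

A production orchestrator would add authentication, richer consent types than a channel opt-in, and durable audit storage. The sketch only shows the shape of the contract.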

Human in the loop, by design

Autonomy without governance is a nonstarter for regulated or brand-sensitive teams. The orchestrator bakes in human checkpoints that are contextual and reversible.

- Approval gates: Before an agent can add a new attribute to an audience definition or enable a new channel, the orchestrator routes a review task to the right owner with diffs of what will change and estimates of impact.

- Budget and risk thresholds: Teams can set guardrails like daily message caps, discount ceilings, or maximum bid changes by channel. Agents must request exceptions, and those requests are auditable.

- Rollback and containment: If lift or service metrics degrade past a threshold, the orchestrator can automatically revert the last change set, pause the agent plan, and alert an operator.

- Attribution of actions: Every step carries provenance, recording who approved it, which policy applied, which model version made the prediction, and which dataset was used. That level of tracing makes postmortems and compliance reviews tractable.
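The checkpoints above reduce to a review function and a rollback check. The following is a minimal sketch under stated assumptions: `Guardrails`, `ChangeSet`, `review`, and `check_rollback` are hypothetical names, and "lift" stands in for whichever metric a team chooses to watch. A change that breaches a threshold or lacks a required sign-off is blocked with reasons. A lift drop below the floor reverts the last change set and flags an operator.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Guardrails:
    daily_message_cap: int
    discount_ceiling: float  # 0.10 means a 10 percent ceiling
    min_lift: float          # revert if observed lift falls below this


@dataclass
class ChangeSet:
    agent: str
    messages: int
    discount: float
    needs_approval: bool  # e.g. adds an audience attribute or enables a channel


def review(change: ChangeSet, rails: Guardrails, approved_by: str | None) -> dict:
    """Return a decision record; any reason blocks the change until resolved."""
    reasons = []
    if change.messages > rails.daily_message_cap:
        reasons.append("exceeds daily message cap: exception required")
    if change.discount > rails.discount_ceiling:
        reasons.append("exceeds discount ceiling: exception required")
    if change.needs_approval and approved_by is None:
        reasons.append("approval gate: owner sign-off missing")
    # The record doubles as provenance: who approved, and why it was blocked.
    return {"agent": change.agent, "allowed": not reasons,
            "reasons": reasons, "approved_by": approved_by}


def check_rollback(applied: list[ChangeSet], observed_lift: float, rails: Guardrails) -> dict:
    """Revert the most recent change set and pause the plan if lift degrades."""
    if observed_lift < rails.min_lift and applied:
        reverted = applied.pop()
        return {"action": "revert_and_pause", "reverted_agent": reverted.agent,
                "alert_operator": True}
    return {"action": "continue"}


rails = Guardrails(daily_message_cap=50_000, discount_ceiling=0.10, min_lift=0.0)
decision = review(ChangeSet("journey-agent", 60_000, 0.08, needs_approval=True),
                  rails, approved_by=None)
print(decision["allowed"], decision["reasons"])
```

In this example the change is blocked twice: once for the message cap and once for the missing sign-off. The 8 percent discount stays under the ceiling.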

None of this has to be slow. The idea is to move fast with brakes that work.

The emerging agent ops stack

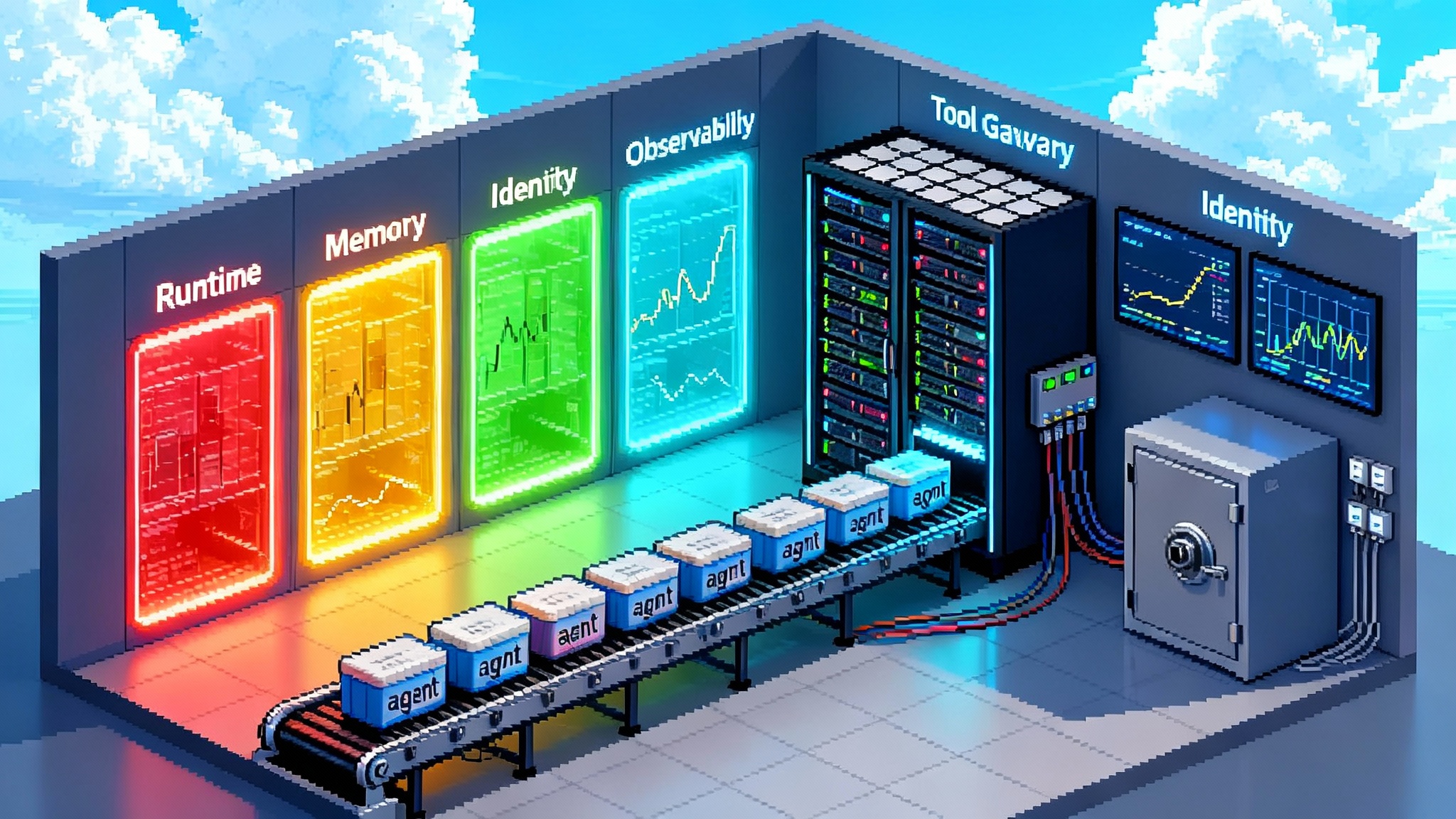

Enterprise teams will quickly discover that successful agent programs need an operating model. Three layers matter most today.

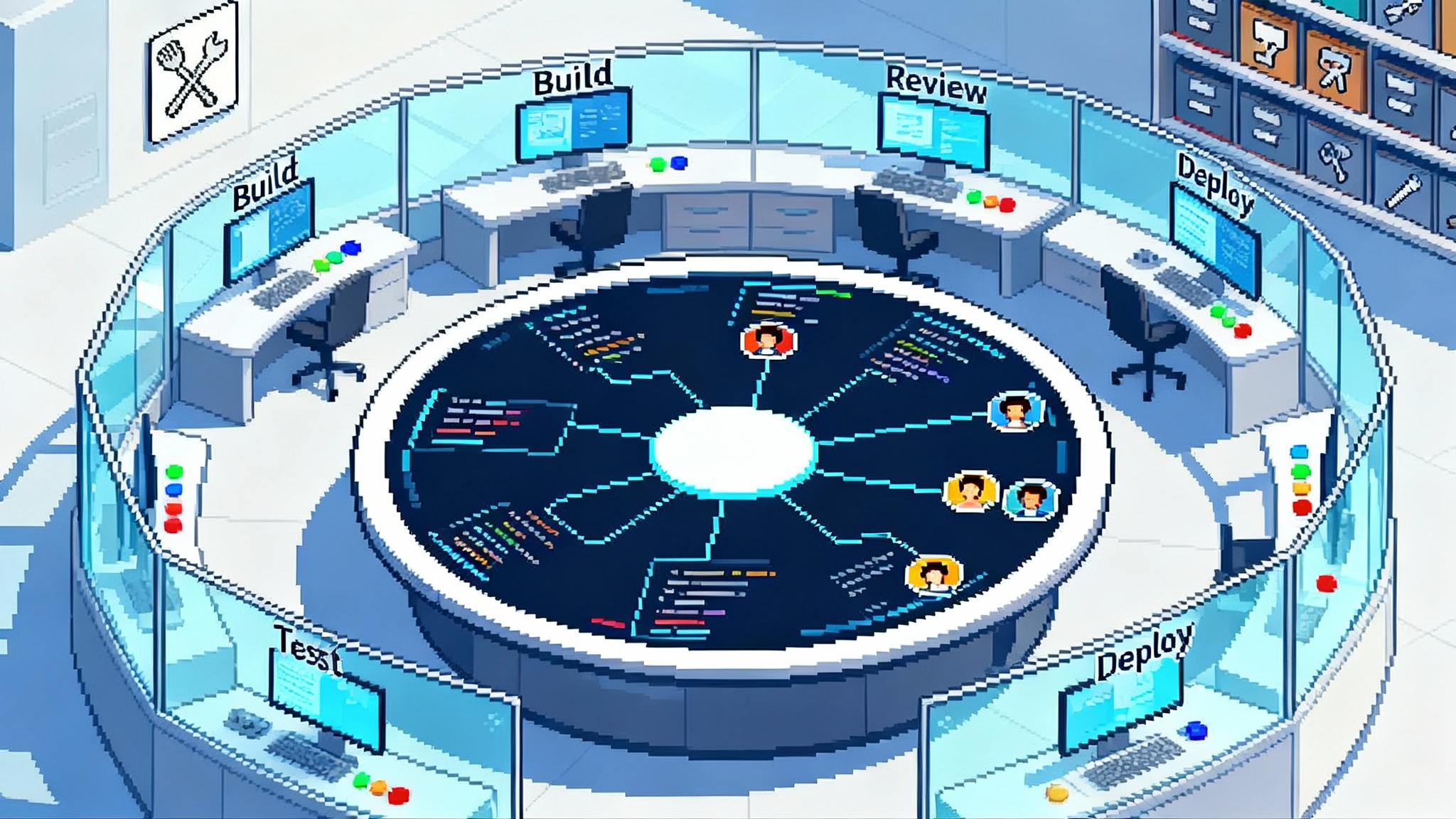

1) Orchestration

This is the real-time brain that translates goals into plans and coordinates calls across RT-CDP, Journey Optimizer, and AEM, plus partner systems. It handles multi-agent planning, conflict resolution, and dependency management. It must understand identity, channel availability, and content readiness. In practice this means the orchestrator prioritizes actions, sequences them, and enforces service-level objectives. For example, it can stagger batch audience recalculations so they do not collide with journey sends, and it can prioritize high-value segments for content assembly.
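The staggering example can be sketched as a small greedy scheduler. This is a hypothetical illustration, not how the orchestrator actually schedules work. It assumes hourly slots over a single 24-hour horizon, journey sends given as busy windows, and a per-segment value used for priority.

```python
def schedule_recalcs(recalcs, send_windows, horizon=24):
    """Place batch audience recalculations in hours that avoid journey sends.

    recalcs: list of (segment, value, duration_hours)
    send_windows: list of (start_hour, end_hour), end exclusive
    Returns {segment: start_hour}. Higher-value segments are placed first, jobs
    never overlap each other, and a job with no free slot is left out.
    """
    busy = set()
    for start, end in send_windows:
        busy.update(range(start, end))
    plan = {}
    for segment, _value, duration in sorted(recalcs, key=lambda r: r[1], reverse=True):
        for start in range(horizon - duration + 1):
            hours = set(range(start, start + duration))
            if not hours & busy:
                busy |= hours  # reserve the slot so later jobs do not collide
                plan[segment] = start
                break
    return plan


plan = schedule_recalcs(
    [("cart_abandoners", 90, 2), ("new_buyers", 50, 3), ("lapsed", 10, 1)],
    send_windows=[(8, 10), (17, 19)],
)
print(plan)  # {'cart_abandoners': 0, 'new_buyers': 2, 'lapsed': 5}
```

Greedy earliest-fit is the simplest rule that honors both constraints. A real planner would also weigh deadlines and data freshness.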

2) Policy

Policies express data rights, brand constraints, offer eligibility, and risk tolerances. Good policy is not a document; it is a machine-readable contract that the orchestrator enforces at runtime. For marketing teams, that means consent filters at the profile and segment levels, channel frequency caps that compound across agents, and eligibility rules that prevent conflicting offers. This layer should integrate with identity resolution so policy can be enforced not only on a profile but also on a household, account, or device cluster.
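A runtime policy layer of this kind can be sketched in a few lines. The sketch rests on three assumptions: consent is modeled as a per-profile set of channels, the frequency cap is counted per household and shared by every agent, and the offer-conflict table is a made-up example. `enforce` and `CONFLICTING_OFFERS` are hypothetical names.

```python
from collections import Counter

# Hypothetical pairs of offers that must not reach the same household.
CONFLICTING_OFFERS = {frozenset({"free_shipping", "percent_off"})}


def enforce(requests, consent, household_of, weekly_cap, already_sent):
    """Apply consent, a household-level frequency cap shared by all agents,
    and offer eligibility. Returns (allowed, blocked), where blocked holds
    (request, reason) pairs."""
    sent = Counter(already_sent)  # messages per household, across every agent
    granted = {}                  # household -> offers granted in this batch
    allowed, blocked = [], []
    for req in requests:
        household = household_of[req["profile"]]
        offers = granted.setdefault(household, set())
        if req["channel"] not in consent.get(req["profile"], set()):
            blocked.append((req, "no consent for channel"))
        elif sent[household] >= weekly_cap:
            blocked.append((req, "household frequency cap"))
        elif any(frozenset({req["offer"], o}) in CONFLICTING_OFFERS for o in offers):
            blocked.append((req, "conflicting offer"))
        else:
            sent[household] += 1
            offers.add(req["offer"])
            allowed.append(req)
    return allowed, blocked


requests = [
    {"agent": "journey", "profile": "p1", "channel": "email", "offer": "free_shipping"},
    {"agent": "site", "profile": "p2", "channel": "push", "offer": "percent_off"},
    {"agent": "experiment", "profile": "p2", "channel": "email", "offer": "percent_off"},
    {"agent": "experiment", "profile": "p2", "channel": "email", "offer": "loyalty_points"},
    {"agent": "journey", "profile": "p1", "channel": "push", "offer": "loyalty_points"},
]
allowed, blocked = enforce(
    requests,
    consent={"p1": {"email", "push"}, "p2": {"email"}},
    household_of={"p1": "h1", "p2": "h1"},
    weekly_cap=3,
    already_sent={"h1": 1},
)
print([r["agent"] for r in allowed])  # ['journey', 'experiment']
print([why for _, why in blocked])    # ['no consent for channel', 'conflicting offer', 'household frequency cap']
```

Profiles p1 and p2 share household h1, so the cap and the offer conflict apply across both people and across all three agents. That is the compounding behavior the paragraph describes.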

3) Telemetry

Telemetry captures the loop from action to outcome. At minimum, track what an agent attempted, what was approved or blocked, what was delivered by channel and time, and what changed in business metrics. Useful telemetry aggregates at three horizons: minute-to-minute observability, day-to-day performance against goals, and quarter-to-quarter learning that informs new policies and agent goals. Telemetry is what turns an early win into a durable program.
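A minimal telemetry record and a rollup at the three horizons might look like the following. `AgentEvent`, `bucket`, and `rollup` are hypothetical names, and the events are sample data. In practice, business-metric changes would be joined onto these counts by `plan_id`; that join is left out here.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AgentEvent:
    ts: datetime
    agent: str
    plan_id: str
    stage: str                 # "attempted", "approved", "blocked", or "delivered"
    channel: str | None = None


def bucket(ts: datetime, horizon: str) -> str:
    """Map a timestamp to a minute, day, or quarter bucket."""
    if horizon == "minute":
        return ts.strftime("%Y-%m-%d %H:%M")
    if horizon == "day":
        return ts.date().isoformat()
    if horizon == "quarter":
        return f"{ts.year}-Q{(ts.month - 1) // 3 + 1}"
    raise ValueError(f"unknown horizon {horizon!r}")


def rollup(events: list[AgentEvent], horizon: str) -> Counter:
    """Count events per (bucket, agent, stage) at the chosen horizon."""
    return Counter((bucket(e.ts, horizon), e.agent, e.stage) for e in events)


events = [  # sample data
    AgentEvent(datetime(2025, 9, 10, 10, 0), "journey-agent", "plan-1", "attempted"),
    AgentEvent(datetime(2025, 9, 10, 10, 0, 30), "journey-agent", "plan-1", "approved"),
    AgentEvent(datetime(2025, 9, 10, 10, 5), "journey-agent", "plan-1", "delivered", "email"),
    AgentEvent(datetime(2025, 10, 1, 9, 0), "journey-agent", "plan-2", "blocked"),
]
print(rollup(events, "quarter"))
```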

Practical note: If you are standing up this stack in a heterogeneous environment, the patterns described in related ecosystems can help. See how the browser itself can act as an agent runtime in Atlas Agent Mode browser runtime and how cloud runtimes formalize agent operations in AWS AgentCore enterprise runtime. For teams focused on developer workflows, codifying actions as tools with OpenAI AgentKit for production shows how to move from prototypes to repeatable execution.

Near-term wins you can bank

Teams do not need a grand unification of agents to see results. Here are three pragmatic wins that the orchestrator makes easier to deliver in the next two quarters.

Win 1: Autonomous audience building that respects policy

What it is: The Audience Agent proposes or refines segments based on a stated outcome. The operator sets a goal such as increasing the activation rate among first-time buyers by 15 percent without pushing cost per acquisition above target. The agent explores signals across web, app, and offline events in RT-CDP, proposes segment rules with contribution estimates, and simulates reach and overlap with existing programs.

How it runs: A policy check enforces consent types and geographic constraints. The operator sees a diff that highlights which attributes or events are new to the definition, approves changes, and schedules the segment for activation to paid media and owned channels. If the plan risks over-messaging, the orchestrator adjusts channel allocations automatically.

What to measure: Time to first viable audience, which should drop from days to hours. Overlap waste should also shrink, showing up as lower audience collision rates and fewer suppression errors. Tie the work to a single revenue goal or a clear cost metric so you can attribute value without guesswork.
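The operator diff and the overlap simulation in Win 1 can be sketched with plain dictionaries and sets. The sketch assumes a segment definition can be written as `{attribute_or_event: condition}` and that audiences are sets of profile IDs. `rule_diff` and `overlap_report` are hypothetical helpers, not RT-CDP functions.

```python
from __future__ import annotations


def rule_diff(current: dict, proposed: dict) -> dict:
    """Diff two segment definitions written as {attribute_or_event: condition}."""
    return {
        "added": {k: proposed[k] for k in proposed.keys() - current.keys()},
        "removed": {k: current[k] for k in current.keys() - proposed.keys()},
        "changed": {k: (current[k], proposed[k])
                    for k in current.keys() & proposed.keys() if current[k] != proposed[k]},
    }


def overlap_report(segment: set, programs: dict[str, set]) -> dict:
    """Reach of a proposed segment and the share of it already in each program."""
    reach = len(segment)
    return {
        "reach": reach,
        "overlap": {name: (len(segment & members) / reach if reach else 0.0)
                    for name, members in programs.items()},
    }


current = {"first_purchase_within_days": 30, "region": "EMEA"}
proposed = {"first_purchase_within_days": 45, "region": "EMEA", "app_installed": True}
print(rule_diff(current, proposed))
print(overlap_report({"a", "b", "c", "d"}, {"welcome_series": {"a", "b"}, "winback": set()}))
```

A high overlap share with an existing program is the "overlap waste" signal. It tells the operator the proposed segment mostly re-targets people who are already being messaged.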

Win 2: Journey experimentation that runs itself with a human throttle

What it is: The Experimentation Agent uses Journey Optimizer to propose tests and allocate traffic. You define a business metric, for example, increasing second purchases within 30 days among new buyers. The agent builds hypotheses such as cadence changes, channel swaps, or offer sequencing, and it wires up treatment arms with AEM content variations, pricing guards, and frequency caps.

How it runs: A human approves the experiment plan. The agent ramps traffic based on a risk budget and stops early if the confidence threshold is hit. It then stores learnings in a library so future plans are seeded with what worked and what failed.

What to measure: Time from idea to live test, number of experiments run per month, and the percent of journeys governed by an active test. Many teams see a double-digit lift when they move from one test per quarter to continuous experimentation, mostly because speed lets them retire losing ideas faster.
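The ramp-and-stop logic in Win 2 can be sketched with a two-proportion z-test. This is a hypothetical illustration, not Journey Optimizer's method. The "risk budget" is modeled as a single remaining number, and the step size, cap, and threshold are assumptions. One caveat matters here: checking a fixed z threshold at every interval inflates false positives. A production system would use a proper sequential method such as alpha spending, and the strict 2.58 threshold below only partly compensates.

```python
from __future__ import annotations

import math


def z_score(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-proportion z statistic for treatment B against control A (pooled)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (conv_b / n_b - conv_a / n_a) / se if se else 0.0


def next_share(share: float, z: float, risk_left: float,
               step: float = 0.1, cap: float = 0.5, z_stop: float = 2.58) -> tuple[float, str]:
    """Decide the treatment's traffic share for the next interval.

    Stops early on a strong result in either direction, stops when the risk
    budget is spent, and otherwise ramps by `step` up to `cap`. A share of 0.0
    means the treatment arm is closed pending human review.
    """
    if abs(z) >= z_stop:
        return 0.0, "stop: threshold reached, send to human review"
    if risk_left <= 0:
        return 0.0, "stop: risk budget exhausted"
    return min(share + step, cap), "ramp"


z = z_score(100, 2000, 150, 2000)  # 5.0% control vs 7.5% treatment conversion
print(round(z, 2), next_share(0.2, z, risk_left=1.0))
```

Stopping hands the decision back to a person rather than auto-promoting the winner, matching the human throttle the section describes.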

Win 3: Real-time personalization that blends content and intent

What it is: The Product Advisor or Site Optimization Agent adapts content on the fly based on RT-CDP signals. AEM provides scoped content variants that are brand-safe and accessible. The agent selects a variant, checks eligibility, and logs the choice.

How it runs: For a retailer, the agent can tailor a homepage module for first-time visitors from a specific campaign, then persist the preference into the profile so email and app messages reinforce the same story. For a telecom, the agent can detect when a customer is out of data and push a contextual upsell in app while preventing an email that would conflict with the service message.

What to measure: Fewer contradictory messages, lower unsubscribe rates, higher click-through on the modules the agent controls, and improved conversion among exposed cohorts. Add guardrails so discounting does not become the only lever the agent learns to pull.
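The select, check, and log loop from Win 3 can be sketched as two small functions. Everything here is hypothetical: the variant records stand in for scoped AEM content, and the `CONFLICTS` table encodes the telecom example, in which an in-app data upsell suppresses a queued promotional email.

```python
from __future__ import annotations

# Hypothetical: which queued-message topics contradict an in-flight topic.
CONFLICTS = {"data_upsell": {"plan_promo", "generic_discount"}}


def choose_variant(profile: dict, variants: list[dict], log: list[dict]) -> dict | None:
    """Return the first variant (in priority order) the profile is eligible for, and log it."""
    for v in variants:
        if v["segment"] in profile["segments"] and v["channel"] in profile["consent"]:
            log.append({"profile": profile["id"], "variant": v["id"], "topic": v["topic"]})
            return v
    return None


def suppress_conflicts(queued: list[dict], active_topic: str) -> list[dict]:
    """Drop queued messages whose topic would contradict the active one."""
    blocked = CONFLICTS.get(active_topic, set())
    return [m for m in queued if m["topic"] not in blocked]


choice_log: list[dict] = []
customer = {"id": "c1", "segments": {"out_of_data", "all"}, "consent": {"in_app", "email"}}
variants = [
    {"id": "upsell-data-pack", "segment": "out_of_data", "channel": "in_app", "topic": "data_upsell"},
    {"id": "default-banner", "segment": "all", "channel": "in_app", "topic": "brand"},
]
chosen = choose_variant(customer, variants, choice_log)
queued_email = [{"id": "m1", "topic": "plan_promo"}, {"id": "m2", "topic": "newsletter"}]
kept = suppress_conflicts(queued_email, chosen["topic"])
print(chosen["id"], [m["id"] for m in kept])  # upsell-data-pack ['m2']
```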

A concrete example: one plan, many tools

Imagine a home goods retailer heading into a holiday weekend. The merchandising team wants to move overstocked outdoor furniture without discounting more than 10 percent on average. Marketing sets a goal for the orchestrator: sell through 35 percent of inventory in ten days with margin discipline and minimal customer complaints about messaging volume.

- The Audience Agent finds a cohort of backyard shoppers who engaged with summer content, adds a segment rule for purchasers of grills in the last 90 days, and simulates reach by channel.

- The Journey Agent builds a three-step plan: a mobile push with a content block highlighting backyard bundles, an onsite module for return visitors showing complementary items, and a follow-up email only if no purchase occurs within 24 hours.

- A policy check enforces frequency caps across push and email, applies consent by region, and blocks any use of sensitive attributes.

- AEM assembles three variants per channel that reuse approved imagery and copy. Accessibility checks pass and brand contrast ratios hold.

- The Experimentation Agent allocates 20 percent of traffic to a cadence variant that tries a same-day app inbox message instead of email.

- Telemetry flows back into RT-CDP. The orchestrator sees an uptick in customer service contacts in a specific region and pauses push there until the content is adjusted.

Net result: the team meets the sell-through goal with a 7 percent average discount, email volume drops due to better onsite conversion, and the test shows that app inbox performs strongly among loyalty members and poorly among guests. Those findings seed the next plan.
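Three rules in this example translate directly into code: the 20 percent cadence split, the 24-hour follow-up condition, and the regional push pause. The sketch below is hypothetical throughout. Hash-based assignment is one common way to keep a profile in the same arm across sessions. The 1.5x service-contact trigger is an assumed threshold, since the example does not say what "an uptick" means.

```python
from __future__ import annotations

import hashlib
from datetime import datetime, timedelta


def in_cadence_arm(profile_id: str, share: float = 0.20, salt: str = "holiday-cadence") -> bool:
    """Deterministically assign about `share` of profiles to the app-inbox arm."""
    digest = hashlib.sha256(f"{salt}:{profile_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < share


def needs_followup_email(push_sent_at: datetime, purchased_at: datetime | None,
                         now: datetime) -> bool:
    """Step 3: once 24 hours have passed since the push, email only if nothing was bought."""
    if purchased_at is not None and purchased_at <= now:
        return False
    return now >= push_sent_at + timedelta(hours=24)


def regions_to_pause(contacts: dict[str, int], baseline: dict[str, int],
                     factor: float = 1.5) -> list[str]:
    """Pause push where service contacts exceed `factor` times the regional baseline."""
    return sorted(r for r, n in contacts.items() if r in baseline and n > factor * baseline[r])


t0 = datetime(2025, 1, 1, 9)  # sample timestamp
print(needs_followup_email(t0, None, t0 + timedelta(hours=24)))       # True
print(regions_to_pause({"west": 120, "east": 40}, {"west": 60, "east": 45}))  # ['west']
```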

What changes for your organization

You will need two new motions and one new team habit.

- Goal-to-plan briefs: Instead of asking for assets or segments, product and channel owners should write agent briefs that state the business outcome, constraints, and what counts as success. A one-page brief is enough.

- Approval and rollback routines: Decide which changes require approval, what can ship inside a budget, and how to roll back. Practice the rollback in a sandbox so muscle memory exists before you need it.

- Shared telemetry reviews: Replace channel dashboards with a single weekly agent review that asks what plans ran, what worked, what to retire, and what to try next. Make it a ritual.

What is next: standards, marketplaces, and agent-to-agent coordination

Three arcs will shape the next year.

- Interoperable agent standards: Teams will want a way to declare capabilities, policies, and telemetry events in a consistent schema across vendors. That lets a Journey Agent from one company request a plan from an Experimentation Agent from another without custom glue. Expect early profiles to look like APIs that describe intents, actions, and constraints. The emergence of cross-vendor patterns is already visible in adjacent ecosystems such as the cloud runtimes and browser-side agents explored in AWS AgentCore enterprise runtime and Atlas Agent Mode browser runtime.

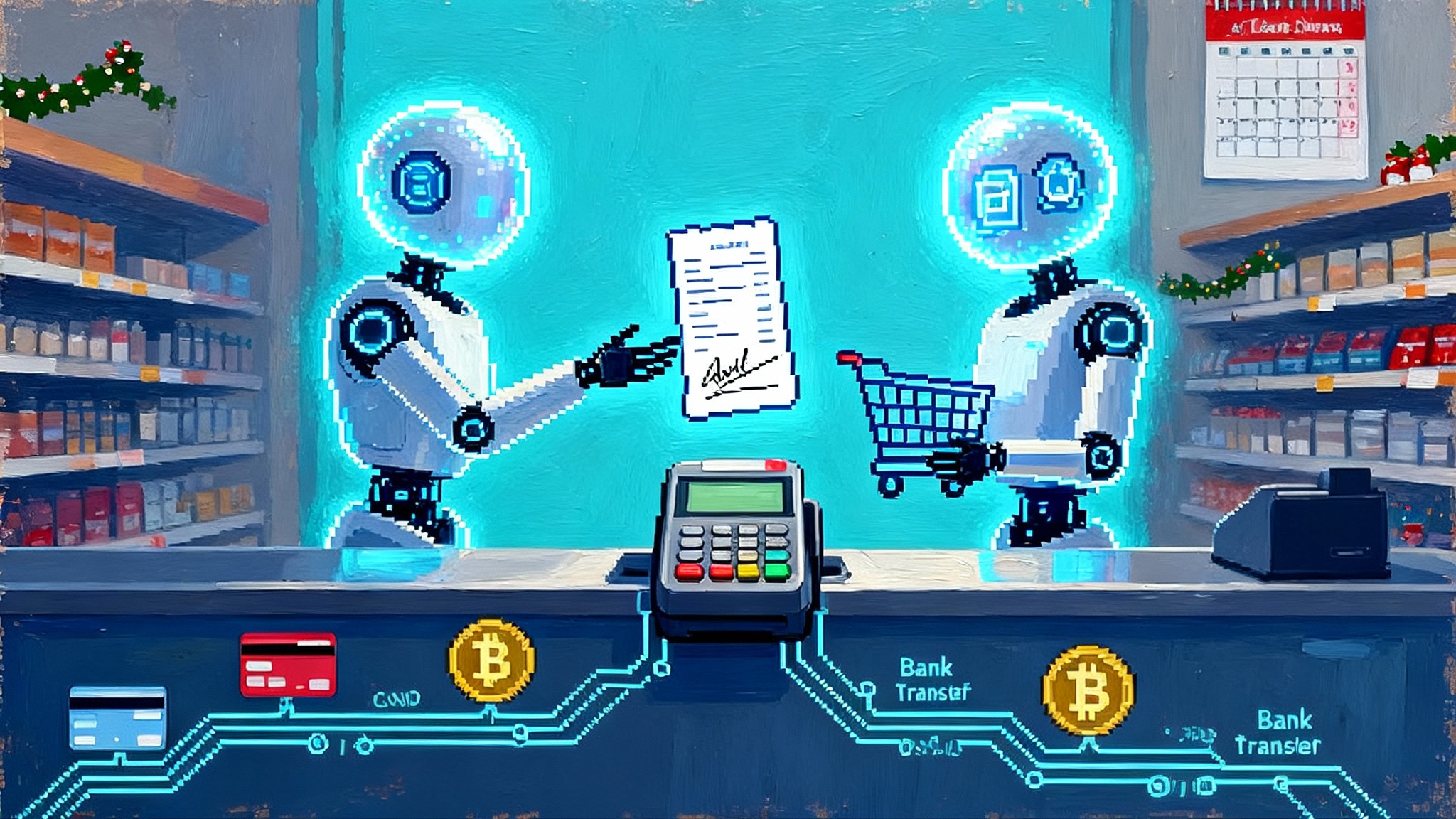

- Marketplaces: Once capabilities are standardized, expect marketplaces of vetted agents and plan templates. A retailer might subscribe to a price elasticity agent from a consultancy, or a B2B company might license a sales outreach agent tuned for its industry. Procurement will care about audit trails, privacy posture, and the right to inspect training data sources.

- Agent-to-agent coordination: Inside a single brand, agents will need to negotiate scarce resources such as message slots and discount budgets. Across brands, expect collaboration patterns to emerge in service and logistics that look like interline agreements in airlines, where one agent books capacity or resolves an issue with another on behalf of a shared customer.
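For the in-brand case, negotiation over scarce resources can start from a simple allocation rule before anything like a full auction exists. The following is a minimal sketch under stated assumptions: each agent states how many message slots it wants and an expected value per slot, and the pool is split greedily by that value. `allocate_slots` is a hypothetical helper, and greedy allocation is only one of many possible policies.

```python
from __future__ import annotations


def allocate_slots(total: int, requests: dict[str, tuple[int, float]]) -> dict[str, int]:
    """Split a shared pool of message slots among agents.

    requests maps agent -> (slots_wanted, expected_value_per_slot). Agents with
    the highest expected value per slot are served first; ties keep input order.
    """
    grants: dict[str, int] = {}
    remaining = total
    for agent, (wanted, _value) in sorted(requests.items(), key=lambda kv: kv[1][1], reverse=True):
        grants[agent] = min(wanted, remaining)
        remaining -= grants[agent]
    return grants


print(allocate_slots(3, {"journey": (2, 0.8), "experimentation": (2, 0.5), "site": (1, 0.9)}))
# {'site': 1, 'journey': 2, 'experimentation': 0}
```

The same shape works for discount budgets if slots are replaced with currency units.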

Risks to manage without slowing down

- Policy drift: As you add sources and destinations, it is easy for a policy exception to become the norm. Use automatic policy checks at compile time and run time, and alert on silent policy bypasses.

- Automated busywork: Agents generate plans easily, which can flood teams with low-impact changes. Tie every plan to a measurable outcome and sunset agents that do not contribute.

- Content debt: Real-time personalization fails when the content library is thin. Invest in reusable components in AEM and set a target for coverage by segment, device, and accessibility state.

- Opaque models: If teams cannot explain why a plan changed, trust erodes. Require model versioning and attach explanations to every action that affects a customer.

- Siloed telemetry: If results live in tool-specific dashboards, learning stalls. Land telemetry in a shared store and make it queryable by plan, audience, channel, and experiment.
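The policy-drift guard from the first risk can be sketched as a reconciliation between what was delivered and what policy allowed, plus an exception rate to trend over time. The record shapes and the helpers `silent_bypasses` and `exception_rate` are hypothetical. The check depends on delivery and decision logs landing in the same shared store, which is the point of the siloed-telemetry warning.

```python
from __future__ import annotations


def silent_bypasses(delivered: list[dict], decisions: list[dict]) -> list[str]:
    """Action IDs that reached customers without an 'allowed' policy decision."""
    allowed = {d["action_id"] for d in decisions if d["allowed"]}
    return sorted(a["action_id"] for a in delivered if a["action_id"] not in allowed)


def exception_rate(decisions: list[dict]) -> float:
    """Share of decisions granted via an exception; a rising trend signals drift."""
    if not decisions:
        return 0.0
    return sum(1 for d in decisions if d.get("exception", False)) / len(decisions)


decisions = [
    {"action_id": "a1", "allowed": True, "exception": True},
    {"action_id": "a2", "allowed": False},
    {"action_id": "a4", "allowed": True},
]
delivered = [{"action_id": "a1"}, {"action_id": "a2"}, {"action_id": "a3"}]
print(silent_bypasses(delivered, decisions))  # ['a2', 'a3']
print(exception_rate(decisions))
```

Here `a2` was delivered despite a block and `a3` has no decision at all. Both are exactly the silent bypasses worth alerting on.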

A 90-day starter plan

- Week 1-2: Identify one business outcome and write a goal brief. Map the data and channels involved. Choose one or two agents to start, such as Audience and Experimentation.

- Week 3-4: Define policies as machine-enforced rules covering consent, frequency caps, eligibility, and discount budgets. Configure approval gates and rollback.

- Week 5-6: Stand up telemetry. Decide the core events and metrics. Wire outcomes back to RT-CDP and your analytics source of truth.

- Week 7-8: Run the first plan in a sandbox. Practice approvals and a rollback. Validate that content variants exist for key segments.

- Week 9-10: Launch to a small cohort. Review telemetry daily. Let the agent adjust within budget.

- Week 11-12: Publish results and learnings. Decide whether to scale, pivot, or shelve. If you scale, add one more agent to the plan and repeat. Store your findings as a template other teams can reuse.

The bottom line

With general availability, Adobe is not only shipping a new feature. It is redefining how a customer data platform is used. AEP now looks like a control plane for agents that can aim at business outcomes, plan across RT-CDP, Journey Optimizer, AEM, and partner tools, and improve based on telemetry. The result, when teams operate it well, is not a fancier chatbot. It is a compound system that plans, acts, and learns in the open.

Enterprises that treat agents as colleagues with budgets and guardrails will get faster experimentation, tighter personalization, and clearer links between marketing work and business outcomes. The technology is here. The advantage will come from how you run it.