Agent Bricks and MLflow 3.0: A Turning Point for Enterprise AI

Databricks unveiled Mosaic AI Agent Bricks and MLflow 3.0 with built-in evaluations, tracing, and governance. Learn what this stack changes for production agents and how to build and ship them across AWS, Azure, and GCP.

Why this launch is different

On June 11, 2025, at Data + AI Summit, Databricks introduced Mosaic AI Agent Bricks alongside MLflow 3.0. The combination arrives just as enterprises are pushing for real outcomes from agents, not just demos. Built-in evaluations, standardized tracing, and governance that works across clouds help move agents from experiments to durable production services. This article explains what changed, why it matters for CIOs and engineering leaders, and how to build a domain agent that ships with guardrails.

What is Mosaic AI Agent Bricks

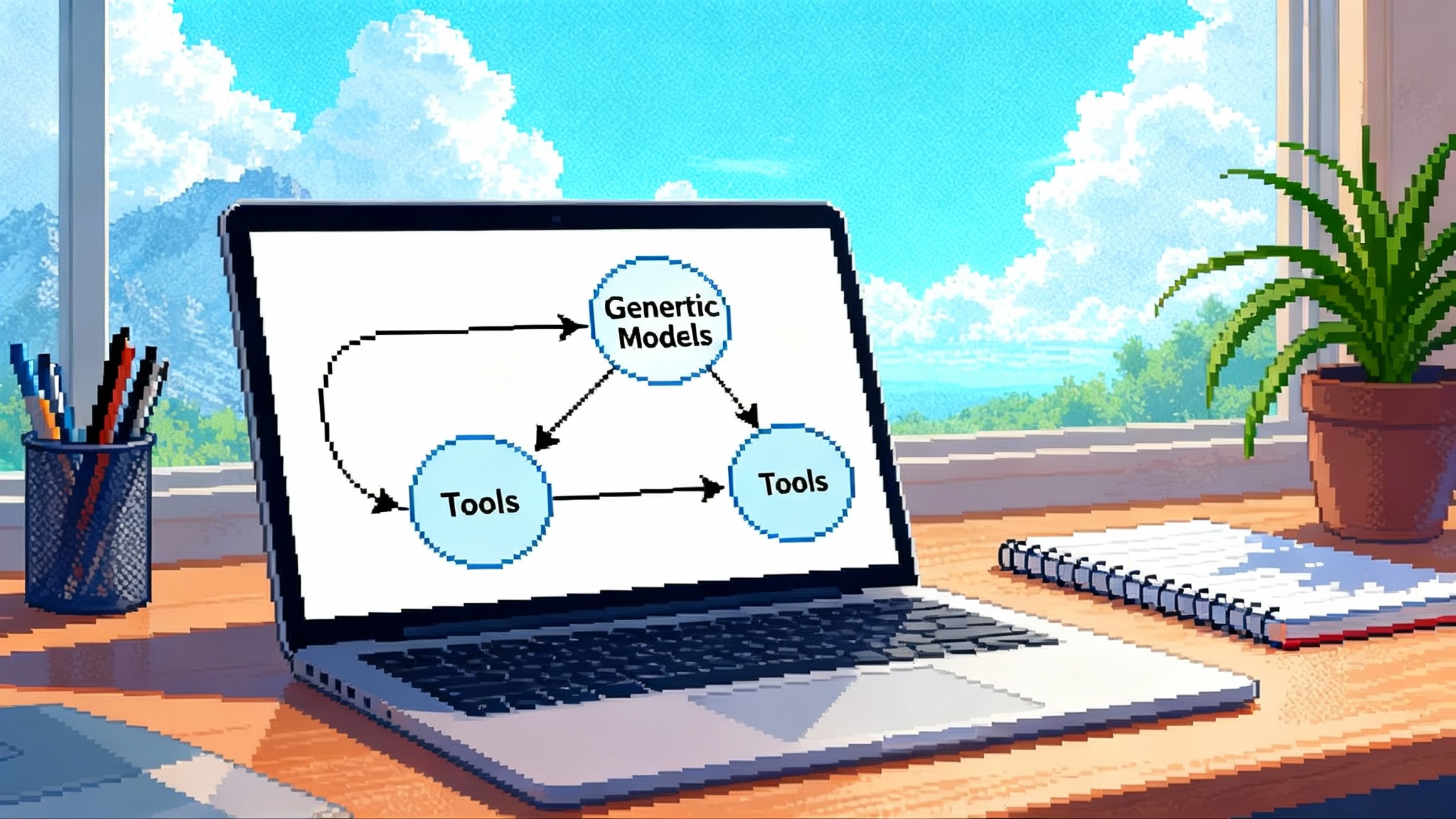

Agent Bricks packages the essential building blocks for enterprise agents into a consistent stack. Instead of stitching together ad hoc prompts, scattered tools, and handwritten logging, teams get primitives that cover the full lifecycle.

Key ideas:

- Composable skills and tools that expose secure functions to the agent, such as search, database lookups, ticket updates, or payment operations.

- Orchestration patterns that coordinate retrieval, tool use, planning, and reflection without forcing an all-or-nothing framework rewrite.

- Built-in evaluations that let teams measure task success, safety, latency, and cost before and after deployment.

- Observability by default through structured traces and metrics that make agent behavior inspectable, reproducible, and debuggable.

- Governance hooks that align with data catalogs, access controls, and audit logs.

The result is not a single agent product. It is a consolidated approach that fits into your data platform, model choices, and cloud posture.

MLflow 3.0 as the backbone

MLflow has long been a standard for experiments and model packaging. With 3.0, its role expands from model tracking to the full agent lifecycle.

- Unified tracing: Each agent interaction can emit structured spans that tie together prompts, retrieved context, tool calls, outputs, and errors. This connects the dots across models, data, and code.

- Evaluation stores: Offline and online evaluations live beside your runs, with datasets, metrics, and thresholds versioned for repeatability.

- Model and pipeline lineage: Teams can track which datasets and policies informed a particular agent config, then reproduce or roll back safely.

- Registry and approvals: Promotion paths move from dev to staging to production with checks that include eval results and policy conformance.
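The following sketch makes "structured spans" concrete. It uses only the standard library and shows what one traced interaction might record. The `Span` class and its fields are hypothetical and are not MLflow's trace schema. In practice you would let MLflow's tracing APIs, such as the `mlflow.trace` decorator, capture spans rather than building them by hand.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Span:
    """Hypothetical span record for one step of an agent interaction (not MLflow's schema)."""
    name: str                       # e.g. "retrieve", "llm", "tool:create_task"
    trace_id: str                   # shared by every span in one interaction
    parent_id: Optional[str] = None
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    attributes: dict = field(default_factory=dict)
    start_ns: int = field(default_factory=time.time_ns)
    end_ns: Optional[int] = None
    error: Optional[str] = None

    def finish(self, error: Optional[str] = None) -> "Span":
        self.end_ns = time.time_ns()
        self.error = error
        return self


# One interaction: a root span with a retrieval child and a tool-call child.
trace_id = uuid.uuid4().hex
root = Span("agent_request", trace_id, attributes={"story": "top pipeline risks"})
retrieve = Span("retrieve", trace_id, parent_id=root.span_id,
                attributes={"chunk_ids": ["opp-42/notes-3"]}).finish()
tool = Span("tool:create_task", trace_id, parent_id=root.span_id,
            attributes={"args": {"opportunity_id": "opp-42"}}).finish(
                error="schema validation failed: missing 'due_date'")
root.finish()
spans = [root, retrieve, tool]

# Typed fields turn "which tool failed?" into a filter instead of a log search.
failed = [s.name for s in spans if s.error]
# One JSON object per span, e.g. for shipping to a trace store.
jsonl = "\n".join(json.dumps(asdict(s)) for s in spans)
```

Because every span shares a `trace_id` and points to its parent, the prompt, retrieval, tool call, and error can be reassembled into a single tree for root cause analysis.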

If you are new to MLflow, the MLflow documentation overview is the fastest way to align your team on concepts and APIs.

How this differs from last year’s agent kits

Many teams tried agent frameworks in 2024 and hit similar walls:

- Eval gaps: It was hard to write tests that match business outcomes. Teams measured output similarity rather than task success.

- Observability gaps: Logs were unstructured. Root cause analysis across LLM output, vector retrieval, and tool execution was painful.

- Governance gaps: Security reviewers asked for lineage, approvals, and policy enforcement that DIY stacks could not provide.

Agent Bricks plus MLflow 3.0 address these gaps head-on. Evaluations are first-class. Traces are structured and queryable. Governance is designed into the workflow instead of stapled on.

A simple domain example: revenue operations agent

To ground this, imagine a revenue operations agent that helps sales leaders forecast more accurately and accelerate stuck deals. The agent can read opportunity data, summarize pipeline risks, draft next-step emails, and file follow-up tasks.

Desired outcomes:

- Improve weekly forecast accuracy by 5 to 10 percent.

- Reduce average time to next customer touch by 30 percent for stalled opportunities.

- Keep data access within policy and log every sensitive action for audit.

Step 1: Define the golden path

Write 10 to 20 user stories that represent real work, such as:

- Summarize the top 10 risks in this quarter’s pipeline and list one action per risk.

- Draft an email to the decision maker for Opportunity X that references last meeting notes and proposes two options.

- Create three follow-up tasks in the CRM for deals with no activity in 14 days.

These stories become your evaluation set. Each one has a pass or fail rubric that a reviewer can score and that a policy checker can enforce.
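One way to make the golden set executable is to store each story with rubric items that are simple pass/fail predicates. The sketch below is an illustration, not an Agent Bricks or MLflow format. `GoldenTask` and the keyword checks are hypothetical, and real rubrics usually combine cheap checks like these with human or LLM-judge review.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A rubric item is a named pass/fail predicate over the agent's output.
Check = Callable[[str], bool]


@dataclass
class GoldenTask:
    """Hypothetical golden-set entry: a user story plus its rubric."""
    task_id: str
    prompt: str
    checks: Dict[str, Check]

    def grade(self, output: str) -> Dict[str, bool]:
        return {name: check(output) for name, check in self.checks.items()}

    def passed(self, output: str) -> bool:
        # A task passes only when every rubric item passes.
        return all(self.grade(output).values())


def _bullets(text: str) -> list:
    return [line for line in text.splitlines() if line.strip().startswith("- ")]


GOLDEN_SET = [
    GoldenTask(
        task_id="pipeline-risks",
        prompt="Summarize the top 10 risks in this quarter's pipeline and list one action per risk.",
        checks={
            "ten_risks": lambda out: len(_bullets(out)) == 10,
            "action_per_risk": lambda out: all("action:" in b.lower() for b in _bullets(out)),
        },
    ),
    GoldenTask(
        task_id="decision-maker-email",
        prompt=("Draft an email to the decision maker for Opportunity X that references "
                "last meeting notes and proposes two options."),
        checks={
            "references_meeting": lambda out: "meeting" in out.lower(),
            "two_options": lambda out: "option 1" in out.lower() and "option 2" in out.lower(),
        },
    ),
]
```

Returning per-item results from `grade` matters as much as the overall verdict. It shows reviewers which part of the rubric failed.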

Step 2: Attach governed data and tools

Map the minimum data the agent needs: opportunities, contacts, meeting notes, and product catalogs. Attach them using governed connectors so that the catalog enforces entitlements. Tools should be explicit, such as create_task, update_stage, or draft_email. Give each tool clear input and output schemas and associate them with policies like allowed business units or maximum email recipients.
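Here is a minimal sketch of a tool with an explicit input schema and attached policies, using only the standard library. `ToolSpec`, `invoke`, `PolicyError`, and the business-unit names are hypothetical. A production setup would more likely validate with JSON Schema or Pydantic and resolve entitlements from the catalog rather than hard-coding them.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional, Set


class PolicyError(Exception):
    """Raised when a call is well-formed but not permitted."""


@dataclass
class ToolSpec:
    """Hypothetical tool definition: schema, handler, and attached policies."""
    name: str
    input_schema: Dict[str, type]          # required field -> expected Python type
    handler: Callable[..., Any]
    allowed_business_units: Set[str] = field(default_factory=set)  # empty = no restriction
    max_recipients: Optional[int] = None


def invoke(spec: ToolSpec, args: Dict[str, Any], caller_unit: str) -> Any:
    # 1. Schema validation: reject malformed calls before they reach a real system.
    for key, expected in spec.input_schema.items():
        if key not in args:
            raise ValueError(f"{spec.name}: missing field '{key}'")
        if not isinstance(args[key], expected):
            raise ValueError(f"{spec.name}: '{key}' must be {expected.__name__}")
    # 2. Policy checks attached to the tool itself.
    if spec.allowed_business_units and caller_unit not in spec.allowed_business_units:
        raise PolicyError(f"{spec.name}: business unit '{caller_unit}' not allowed")
    if spec.max_recipients is not None and len(args.get("to", [])) > spec.max_recipients:
        raise PolicyError(f"{spec.name}: more than {spec.max_recipients} recipients")
    # 3. Only now run the handler.
    return spec.handler(**args)


draft_email = ToolSpec(
    name="draft_email",
    input_schema={"to": list, "subject": str, "body": str},
    handler=lambda to, subject, body: {"status": "drafted", "recipients": len(to)},
    allowed_business_units={"na-sales", "emea-sales"},
    max_recipients=3,
)
```

Schema errors (`ValueError`) and policy denials (`PolicyError`) are kept as separate exception types so traces can tell a buggy call from a forbidden one.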

Step 3: Build retrieval that earns its keep

Use retrieval for the agent’s briefing, not as a blind dump of documents. Limit context to the specific opportunity, the last three meetings, and relevant product notes. Tag every retrieved chunk with provenance and retention rules.
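Below is a sketch of a scoped briefing builder. It assumes each chunk already carries an opportunity ID, a product tag, a meeting date, a `source` for provenance, and a `retention_days` value. The `Chunk` type and `build_briefing` function are hypothetical. In a real system this filtering would usually happen inside the vector or catalog query rather than in Python.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Set


@dataclass(frozen=True)
class Chunk:
    """Hypothetical retrieved chunk tagged with provenance and retention."""
    chunk_id: str
    kind: str                        # "opportunity", "meeting", or "product_note"
    text: str
    source: str                      # provenance: where the text came from
    retention_days: int              # retention rule carried with the chunk
    opportunity_id: Optional[str] = None
    product: Optional[str] = None
    meeting_date: Optional[date] = None


def build_briefing(chunks: List[Chunk], opportunity_id: str,
                   products: Set[str], max_meetings: int = 3) -> List[Chunk]:
    """Scope context to one opportunity, its latest meetings, and relevant product notes."""
    record = [c for c in chunks
              if c.kind == "opportunity" and c.opportunity_id == opportunity_id]
    meetings = sorted(
        (c for c in chunks if c.kind == "meeting" and c.opportunity_id == opportunity_id),
        key=lambda c: c.meeting_date or date.min,   # undated meetings sort last
        reverse=True,
    )[:max_meetings]
    notes = [c for c in chunks if c.kind == "product_note" and c.product in products]
    return record + meetings + notes
```

Each chunk carries its `source`, so the final response can cite exactly what the agent saw.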

Step 4: Program the decision loop

Your loop might follow this structure:

- Gather inputs and fetch the briefing via retrieval.

- Ask the planner to choose up to two tools to call.

- Enforce policy checks before tool execution.

- Execute tools and capture results in the trace.

- Produce a final response that cites the data used.

Keep the loop constrained to avoid runaway calls. Set a hard limit on tool invocations per task and a cost ceiling per interaction.
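The decision loop can be sketched in plain Python. The planner, retriever, tools, and policy check are passed in as callables so the control flow stays visible. `run_task`, `Limits`, and the default limits (two tool calls, five cents) are hypothetical choices for illustration, not Agent Bricks APIs.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple

# (tool name, arguments, estimated cost in USD) as proposed by the planner
ToolCall = Tuple[str, Dict[str, Any], float]


@dataclass(frozen=True)
class Limits:
    max_tool_calls: int = 2        # hard cap on executed tool invocations per task
    max_cost_usd: float = 0.05     # cost ceiling per interaction (hypothetical value)


def run_task(task: str,
             retrieve: Callable[[str], List[Dict[str, str]]],
             plan: Callable[[str, List[Dict[str, str]]], List[ToolCall]],
             tools: Dict[str, Callable[..., Any]],
             policy_ok: Callable[[str, Dict[str, Any]], bool],
             limits: Optional[Limits] = None) -> Dict[str, Any]:
    limits = limits or Limits()
    trace: List[tuple] = []
    calls, cost = 0, 0.0
    briefing = retrieve(task)                             # 1. gather the briefing
    trace.append(("retrieve", [c["source"] for c in briefing]))
    for name, args, est_cost in plan(task, briefing):     # 2. planner proposals
        if calls >= limits.max_tool_calls:
            trace.append(("stop", "tool-call limit"))
            break
        if cost + est_cost > limits.max_cost_usd:
            trace.append(("stop", "cost ceiling"))
            break
        if not policy_ok(name, args):                     # 3. policy check before execution
            trace.append(("blocked", name))
            continue
        result = tools[name](**args)                      # 4. execute and record in the trace
        calls += 1
        cost += est_cost
        trace.append(("tool", name, result))
    sources = sorted({c["source"] for c in briefing})     # 5. cite the data used
    return {"answer": f"Completed {calls} action(s) for: {task}",
            "sources": sources, "trace": trace, "cost_usd": cost}
```

Both limits are checked before each call rather than after, so a runaway plan stops without executing the call that would exceed the budget.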

Step 5: Design evaluations that matter

Use a mix of checks:

- Task success: Did the forecast summary identify the same top risks as a human reviewer on the golden set?

- Safety and policy: Did any email draft include non-public pricing or PII without approval?

- Latency and cost: Are median and p95 latencies within SLOs? Is the cost per task sustainable at projected volumes?

- User satisfaction: Thumbs up or down with reasons on each action.

Wire these into MLflow so every run and deployment carries its evaluation record.
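Two of these checks can be sketched as plain functions: task success as recall against a human reviewer's top risks, and a policy check for PII or non-public pricing. The regexes, the `NON_PUBLIC_PRICING` phrases, and both function names are hypothetical. Real PII detection needs far more than two patterns.

```python
import re
from typing import Iterable, List

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")   # US-style numbers only
# Hypothetical phrases that signal non-public pricing in this example.
NON_PUBLIC_PRICING = ("internal price", "floor price", "partner discount")


def risk_recall(agent_risks: Iterable[str], reviewer_risks: Iterable[str]) -> float:
    """Task success: share of the reviewer's top risks the agent also identified."""
    expected = {r.strip().lower() for r in reviewer_risks}
    found = {r.strip().lower() for r in agent_risks}
    return len(expected & found) / len(expected) if expected else 1.0


def policy_violations(draft: str, approved: bool = False) -> List[str]:
    """Safety and policy: flag PII or non-public pricing unless explicitly approved."""
    if approved:
        return []
    issues = []
    if EMAIL.search(draft):
        issues.append("pii:email")
    if PHONE.search(draft):
        issues.append("pii:phone")
    lowered = draft.lower()
    issues += [f"pricing:{term}" for term in NON_PUBLIC_PRICING if term in lowered]
    return issues
```

Exact-match recall on risk labels is deliberately crude. Once converted to numbers, scores like these could be attached to a run with `mlflow.log_metrics`.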

Step 6: Run offline evaluations before you ship

Replay the golden set against your agent in batch. Examine failures and iterate. Look for systematic misses such as poor retrieval for certain product lines or tool calls that fail due to schema mismatches.
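A small batch-replay harness along these lines makes systematic misses visible by grouping failures by product line and by reason. The task dictionary fields, `replay`, `systematic_misses`, and the 0.8 threshold are hypothetical. The resulting records would typically be stored alongside the corresponding MLflow run.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Result:
    task_id: str
    product_line: str
    passed: bool
    failure: Optional[str] = None


def replay(tasks: List[Dict[str, str]],
           agent: Callable[[str], str],
           grade: Callable[[Dict[str, str], str], Tuple[bool, Optional[str]]]) -> List[Result]:
    results = []
    for task in tasks:
        try:
            ok, reason = grade(task, agent(task["prompt"]))
        except Exception as exc:   # tool or schema errors are recorded as failures, not crashes
            ok, reason = False, f"error:{type(exc).__name__}"
        results.append(Result(task["id"], task["product_line"], ok, None if ok else reason))
    return results


def systematic_misses(results: List[Result], threshold: float = 0.8):
    """Product lines whose pass rate falls below threshold, plus the most common failure reasons."""
    by_line: Dict[str, List[bool]] = defaultdict(list)
    for r in results:
        by_line[r.product_line].append(r.passed)
    weak = sorted(line for line, v in by_line.items() if sum(v) / len(v) < threshold)
    reasons = Counter(r.failure for r in results if not r.passed)
    return weak, reasons.most_common()
```

Catching exceptions per task keeps one broken tool from aborting the whole batch. It also turns schema mismatches into a countable failure reason.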

Step 7: Promote to staging, then production with guardrails

Move the agent to staging with approvals based on evaluation thresholds. In production, keep sampling live traffic back into the evaluator. Use a canary to limit blast radius. When metrics drift or policy checks fail, automatically roll back to the last safe configuration.
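Canary routing and automatic rollback come down to two small decisions: which requests see the candidate, and whether its metrics clear the guardrails. This sketch uses hypothetical config names and SLO keys. In practice the serving layer would handle routing, and the metrics would come from the online evaluator.

```python
import hashlib
from typing import Dict


def routes_to_canary(request_id: str, percent: int) -> bool:
    """Deterministically send about `percent`% of requests to the candidate."""
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def next_config(stable: str, candidate: str,
                canary: Dict[str, float], slo: Dict[str, float]) -> str:
    """Promote the candidate only if every guardrail holds; otherwise roll back to stable."""
    healthy = (
        canary["policy_violations"] == 0
        and canary["task_success"] >= slo["min_task_success"]
        and canary["p95_latency_s"] <= slo["max_p95_latency_s"]
    )
    return candidate if healthy else stable
```

Hashing the request ID, rather than picking randomly, keeps a given request or session on the same version. This makes canary traces easier to compare.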

Cross-cloud deployment patterns

Enterprises rarely live on a single cloud. Agent Bricks encourages patterns that avoid lock-in while respecting data gravity.

- Single cloud with federated data: Keep the agent runtime and state in one cloud, but read governed data through cross-account connectors from other clouds.

- Hub and spoke: Stand up a central control plane for policies, evals, and lineage. Deploy lightweight runtimes near the data in each cloud.

- Per-region isolation: Duplicate the agent stack per region to meet data residency, then aggregate anonymized metrics for global evaluation.

Keep secrets centralized, standardize on the same trace schema, and use the same evaluation rubric everywhere. That is how you compare apples to apples across environments.

Governance that earns executive trust

Executives will ask how to stop an agent from going out of bounds and how to prove what happened later. The right answer is layered control.

- Catalog-first access: Register all data with the catalog and grant the agent a scoped identity. Use tags for sensitivity levels and product lines. The agent should never bypass the catalog.

- Policy-aware tools: Tie each tool to a policy. For example, create_task may be allowed for all sales reps, but update_stage might require manager approval. Enforce policies inline, not only as after-the-fact audits.

- Pervasive audit logs: Every retrieval, tool execution, and output should land in a tamper-evident log. That makes incident response and postmortems factual rather than anecdotal.

- Identity and authorization: Integrate with your identity provider so the agent can act as a user or on behalf of a group. For context on why identity matters, consider how treating identity as the control plane changes the way teams design agent access.
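A common way to make a log tamper-evident is hash chaining: each entry stores the hash of the one before it, so rewriting an earlier entry invalidates every later hash. Here is a minimal stdlib sketch with a hypothetical `AuditLog` class and entry fields. A real deployment would write to append-only storage rather than an in-memory list.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64


def _digest(body: Dict[str, Any]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Hypothetical append-only log where each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, actor: str, action: str, detail: Dict[str, Any]) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "detail": detail, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute the chain; edits, deletions, or reordering of earlier entries break it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "detail", "prev")}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Chaining only detects changes relative to a hash you trust. Periodically copy the latest hash to a separate system. Otherwise, someone who can rewrite the whole log, or drop its newest entries, could go unnoticed.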

Governance is not a tax if you bake it into the developer experience. When engineers can preview policy impact during development and see traceable approvals in promotion workflows, velocity goes up, not down.

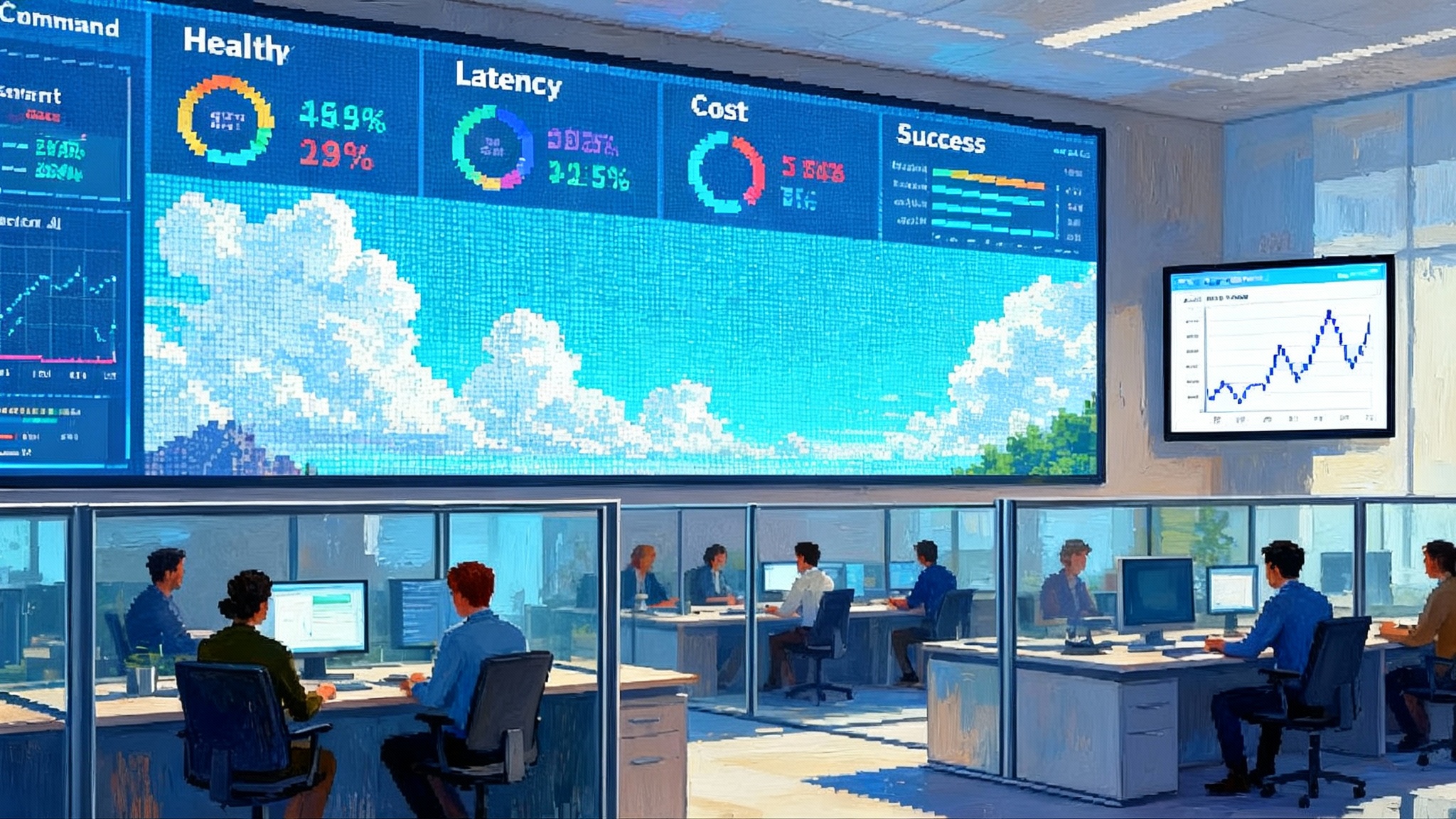

Observability that shortens the feedback loop

Agents fail in messy ways. Without strong observability, you end up guessing. With structured traces and evaluations, you can answer key questions in minutes:

- Which retrieval chunk led to a wrong recommendation?

- Which tool failed, and why did its input fail schema validation?

- Which model change increased cost without improving accuracy?

Centralize traces, tag them with business context like region and product line, and build dashboards that segment by user cohort. When business leaders ask why a metric moved, you will have an evidence trail.
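If every trace carries business tags, a question like "which model change increased cost without improving accuracy?" becomes a group-by. Here is a minimal sketch over plain dictionaries. The `tags`, `success`, and `cost_usd` field names and the `segment` helper are assumptions, not a trace schema defined by MLflow.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple


def segment(traces: List[Dict[str, Any]], keys: Tuple[str, ...]) -> Dict[tuple, Dict[str, Any]]:
    """Group traces by business tags and summarize success rate and average cost."""
    groups: Dict[tuple, List[Dict[str, Any]]] = defaultdict(list)
    for t in traces:
        groups[tuple(t["tags"][k] for k in keys)].append(t)
    return {
        group: {
            "n": len(ts),
            "success_rate": sum(t["success"] for t in ts) / len(ts),
            "avg_cost_usd": sum(t["cost_usd"] for t in ts) / len(ts),
        }
        for group, ts in groups.items()
    }
```

Segmenting by `("model_version",)` answers the cost question. Segmenting by `("region", "product_line")` feeds a cohort dashboard, assuming those tags are present.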

External signals and the broader ecosystem

Enterprises are converging on a few patterns. Edge platforms push low-latency actions closer to users, identity systems govern which actions are allowed, and application vendors are recasting workflows as agents.

- For a view from the edge, consider how edge-native agent execution enables fast, local decisions with remote tools.

- For go-to-market and adoption strategy, study how agent platforms compete on control, scale, and trust rather than just raw model quality.

These perspectives complement the Agent Bricks approach inside your data platform.

Hands-on recipe: building your first production agent

You can build a first version in a week if you resist the urge to boil the ocean.

- Pick one outcome that is measured by a number you already report, such as reduction in time to resolve or improvement in forecast accuracy.

- Draft 15 tasks that represent that outcome. Write pass or fail rubrics that a human can apply quickly.

- Wire one data source through the catalog. Do not connect five. Start simple.

- Expose only two tools: for example, draft email and create task, or search knowledge base and update status.

- Implement a compact loop with clear limits on tool calls and token budget.

- Log traces and run offline evals against the 15 tasks. Fix the top five issues.

- Ship to a pilot group and collect thumbs up or down with reasons.

- Scale gradually by adding one data source or tool at a time, each with its own evaluation set.

Throughout this recipe, treat MLflow as your source of truth. Runs, metrics, eval results, and artifacts live together so anyone can reproduce the state.

Integrations and change management

Agents often change how teams work. Plan for adoption as much as architecture.

- Stakeholders: Bring security, legal, and operations into the design from the start. Show them traces and evaluations early.

- Playbooks: Document failure modes and how to recover. Include rollback procedures and safe defaults when a policy fails.

- Training: Teach users how to write effective prompts and when to hand off to humans.

- Communication: Share weekly reports on task success rate, latency, and user satisfaction.

A shared language around evaluations turns debates into measurable improvements.

30-60-90 day plan for CIOs

This adoption plan assumes you start in early October 2025 and want a production agent before year end.

Days 1 to 30

- Select one business outcome and nominate an executive sponsor.

- Form a two-pizza team with a product lead, a data or ML engineer, and a platform engineer.

- Stand up the baseline stack with Agent Bricks and MLflow 3.0.

- Define the golden set of 15 to 20 tasks and rubrics.

- Connect one governed dataset and two tools.

Days 31 to 60

- Run offline evaluations and close the top quality and policy gaps.

- Pilot with 20 to 50 users. Capture thumbs up or down and reasons for every interaction.

- Add a second dataset or tool only if it improves the specific outcome.

- Set SLOs for latency and task success. Establish rollback criteria.

Days 61 to 90

- Promote to production with approvals tied to evaluation scores.

- Enable sampling for online evaluations and monitor drift.

- Expand access to the next business unit. Document the playbook and share dashboards.

Common pitfalls and how to avoid them

- Too much scope: Shipping an everything agent is slower than shipping a useful agent for one team.

- Shallow evaluations: If your rubric does not match a business outcome, you will optimize the wrong behavior.

- No policy enforcement: Auditing without prevention means you find problems after customers do.

- Opaque traces: Free text logs slow incident response. Prefer structured spans with typed fields.

- Ignoring cost: Track cost per successful task and make it a first-class metric.

How to talk about results

Executives care about outcomes they already track. Present results like a product manager, not like a research paper.

- Task success rate on the golden set and in live traffic.

- Median and p95 latency against the SLO.

- Cost per successful task compared to the old workflow.

- Policy violations per thousand actions and time to remediation.
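These four numbers are easy to compute from logged interactions. The sketch assumes each interaction record has hypothetical `success`, `latency_s`, `cost_usd`, `actions`, and `violations` fields and that the list is non-empty. p95 here uses the nearest-rank method, which is one of several common percentile definitions.

```python
import math
from statistics import median
from typing import Any, Dict, List


def p95(values: List[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def scorecard(interactions: List[Dict[str, Any]]) -> Dict[str, float]:
    n = len(interactions)
    successes = sum(1 for i in interactions if i["success"])
    actions = sum(i["actions"] for i in interactions)
    latencies = [i["latency_s"] for i in interactions]
    total_cost = sum(i["cost_usd"] for i in interactions)
    violations = sum(i["violations"] for i in interactions)
    return {
        "task_success_rate": successes / n,
        "median_latency_s": median(latencies),
        "p95_latency_s": p95(latencies),
        # Failed attempts still cost money, so total spend is divided by successes only.
        "cost_per_successful_task_usd": total_cost / successes if successes else math.inf,
        "violations_per_1000_actions": 1000 * violations / actions if actions else 0.0,
    }
```

Dividing total spend by successful tasks, rather than by all tasks, keeps a cheap but unreliable agent from looking efficient.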

When you report these consistently, trust accelerates. That trust unlocks more use cases.

Where to learn more

If you want a first-principles overview of the Databricks launch, the Databricks announcement recap is a good starting point. Pair it with the MLflow documentation overview to align your engineering teams on the evaluation and tracing model.

The bottom line

Mosaic AI Agent Bricks with MLflow 3.0 is the clearest sign that enterprise agents are leaving the lab. Evaluations become a habit. Observability is on by default. Governance is designed in, not bolted on. If you focus on one business outcome, ship with a tight tool set, and make evaluations your compass, you can move from proof of concept to production in a single quarter.