Agent Factories Arrive: Databricks, OpenAI, and GPT-5

Databricks moved agent building to the data platform, then partnered with OpenAI to bring GPT-5 capacity inside it. Learn why data-native factories beat model-first tools and how to ship a reliable agent in 90 days.

The week the enterprise agent story changed

On June 11, 2025, Databricks introduced Agent Bricks, a new way to build production-grade agents directly on enterprise data. The announcement planted a flag: the center of gravity for agents would move from model-first tools to data-native factories that live where your tables and logs already sit. It became more than a statement of intent on September 25, 2025, when Databricks and OpenAI revealed a multi-year, $100 million partnership to bring OpenAI models, including GPT-5, natively to Databricks customers. Together, those moves created a simple picture with big implications: the fastest path from idea to production agents is a factory attached to your data floor, not a standalone model lab miles away.

If you want the official receipts, the Databricks Agent Bricks launch details outline the components. A few months later, the Databricks and OpenAI partnership announcement formalized the supply of frontier models, naming GPT-5 as a flagship option inside the platform.

The headline is not only about models arriving in a new place. It is about a build system that assumes the hard parts of enterprise adoption are data-bound problems. This is the core shift: treat agents like workloads that belong to your data platform, with governance, lineage, telemetry, and real-time features included by default.

From model-first tools to data-native agent factories

A model-first approach starts with a state-of-the-art model and then tries to drag data, prompts, evals, and controls to it. That stack can work in a prototype. In production, the gravity reverses. The jobs, catalogs, and security rules live inside the data platform. The cost anomalies, runtime traces, and quality metrics live inside the platform’s observability plane. The organization has muscle memory around versioning, access control, and audit trails there.

A data-native agent factory flips the order of operations:

- Begin with the task and the data that defines it. Point the factory at governed sources in Unity Catalog, the same way you would register a Gold table for analytics.

- Auto-generate synthetic task data that looks like your domain data. Use it to pressure-test the agent against failure modes before touching live traffic.

- Auto-build task-specific benchmarks and baseline judges so that quality is measured in domain-native terms. Think of it as unit tests for reasoning and retrieval, tailored to your schemas and text fields.

- Route models based on cost and accuracy tradeoffs. Some tasks prefer a large frontier model. Others prefer a compact open weight model plus a retrieval step. The factory treats this like an optimization problem, not a belief system.

The reason this matters for time-to-production is straightforward. If your agent is born in the place where permissions, lineage, and data contracts already exist, you do not spend months stitching them later. You let the platform do what it already does for data applications, now applied to reasoning applications.
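The synthetic-data step can be illustrated with a deliberately simple stand-in. The Python sketch below is not how Agent Bricks generates data (that mechanism is not described here and likely relies on models rather than templates); it only shows the idea of expanding a few domain attributes into many distinct, reproducible cases. The vocabularies, `SyntheticClaim`, and `generate_claims` are hypothetical, modeled on an insurance-claims agent that needs variation in damage type, location, and policy constraint.

```python
from __future__ import annotations

import itertools
import random
from dataclasses import dataclass

# Hypothetical seed vocabularies. In a real factory these would be derived
# from governed source tables rather than hard-coded lists.
DAMAGE_TYPES = ["hail", "water", "fire", "collision"]
LOCATIONS = ["roof", "basement", "kitchen", "garage"]
POLICY_CONSTRAINTS = ["deductible applies", "flood exclusion", "rider required"]

TEMPLATE = (
    "Policyholder reports {damage} damage to the {location}. "
    "Adjuster note: {constraint}."
)


@dataclass(frozen=True)
class SyntheticClaim:
    damage_type: str
    location: str
    policy_constraint: str
    narrative: str


def generate_claims(n: int, seed: int = 0) -> list[SyntheticClaim]:
    """Sample up to n distinct claims from the cross product of seed attributes."""
    combos = list(itertools.product(DAMAGE_TYPES, LOCATIONS, POLICY_CONSTRAINTS))
    rng = random.Random(seed)  # fixed seed -> reproducible synthetic test set
    picked = rng.sample(combos, k=min(n, len(combos)))  # no duplicate combinations
    return [
        SyntheticClaim(d, loc, c, TEMPLATE.format(damage=d, location=loc, constraint=c))
        for d, loc, c in picked
    ]


for claim in generate_claims(3, seed=42):
    print(claim.narrative)
```

Sampling without replacement from the cross product keeps every case distinct, and the fixed seed makes the set reproducible, so a regression on one synthetic case can be replayed exactly.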

What Agent Bricks actually does

Agent Bricks wraps the agent lifecycle into components that are native to the Databricks platform:

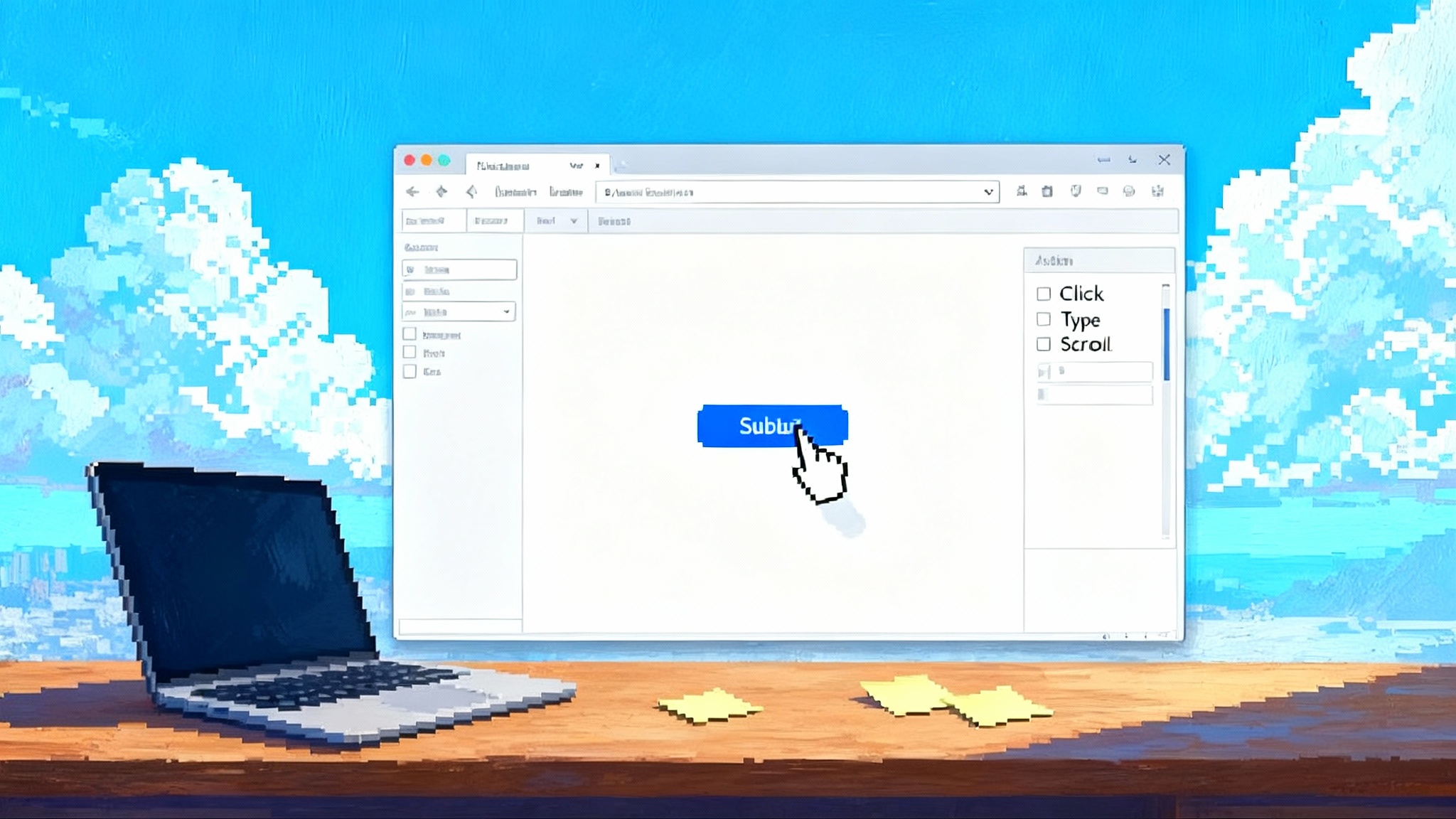

- Task capture. You describe the agent’s job in plain language and provide pointers to curated data. This yields a task graph that becomes the blueprint for everything that follows.

- Synthetic task data. The system generates domain-like data to amplify scarce examples. For an insurance claims agent, this might include thousands of realistic claim narratives with variations in damage types, locations, and policy constraints.

- Auto-benchmarks and judges. The factory builds evaluations that reflect your task’s ground truth. For a customer-care summarizer, an auto-benchmark might score factual consistency against the transcript and penalize hallucinated refund policies.

- Model search and tuning. It runs controlled trials across a portfolio of models, retrieval strategies, and tools, then proposes the cheapest configuration that hits your quality floor. You approve the Pareto-optimal choice rather than hand-tuning prompts for weeks.

- MLflow 3.0 tracing. Every run, prompt, tool call, retrieval, and judgment lands in trace logs so you can debug an agent like a distributed system rather than guessing at prompts. The same place your team already tracks models now tracks agents.

- Unity Catalog governance. Data lineage, permissions, and usage policies follow the agent. If the source table’s access changes, the agent inherits that change. No side channels, no special exemptions.

You end up with a production artifact that looks normal to the platform. It can be versioned, tested, rolled back, and costed the way a streaming job or feature pipeline can.
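The selection logic behind model search and tuning can be sketched in a few lines: drop configurations that another trial beats on both quality and cost, then propose the cheapest one that clears the quality floor. This is an illustrative Python sketch under assumed inputs, not Agent Bricks' actual search; `TrialResult` and its fields are hypothetical, and the scores would come from your own auto-benchmark runs.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TrialResult:
    config: str         # hypothetical label, e.g. "compact+retrieval"
    quality: float      # auto-benchmark score in [0, 1]
    cost_per_1k: float  # cost per thousand tasks, in any consistent currency


def dominates(a: TrialResult, b: TrialResult) -> bool:
    """a dominates b if it is no worse on both axes and strictly better on one."""
    no_worse = a.quality >= b.quality and a.cost_per_1k <= b.cost_per_1k
    strictly_better = a.quality > b.quality or a.cost_per_1k < b.cost_per_1k
    return no_worse and strictly_better


def pareto_frontier(results: list[TrialResult]) -> list[TrialResult]:
    """Configs that no other config beats on both quality and cost, cheapest first."""
    frontier = [r for r in results if not any(dominates(o, r) for o in results)]
    return sorted(frontier, key=lambda r: r.cost_per_1k)


def cheapest_meeting_floor(
    results: list[TrialResult], quality_floor: float
) -> Optional[TrialResult]:
    """Lowest-cost config whose benchmark score clears the floor, or None."""
    eligible = [r for r in results if r.quality >= quality_floor]
    return min(eligible, key=lambda r: r.cost_per_1k, default=None)
```

With made-up trials where a frontier model scores 0.97 at 40 per thousand tasks and a compact model plus retrieval scores 0.95 at 6, a 0.94 floor selects the compact configuration, while a 0.96 floor selects the frontier model. A human still approves the final choice from the frontier list.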

Why the OpenAI deal matters more than a logo slide

Many platforms can call an OpenAI endpoint. The difference with the September 25 partnership is supply chain and scheduling. Databricks is committing real money and integration work so that GPT-5 and other OpenAI models are first-class citizens inside the data platform. That means the factory can treat frontier models as interchangeable parts, not foreign objects.

Two practical effects follow:

- Capacity and locality. Frontier capacity is reserved and routed from inside the platform’s control plane. That reduces the chance that your peak hour performance is at the mercy of someone else’s quota.

- Comparable evaluations. Because Agent Bricks runs task-aware benchmarks inside the same environment, you can compare GPT-5 to open weight or smaller models on your exact tasks, with your retrieval settings, at your real data scales. Decisions become less emotional and more numerical.

If your objective is to ship a claims adjudicator, an underwriting assistant, or a clinical evidence extractor, the difference between a generic model endpoint and a resident factory is the difference between a loose set of parts and an assembly line with a quality gate.

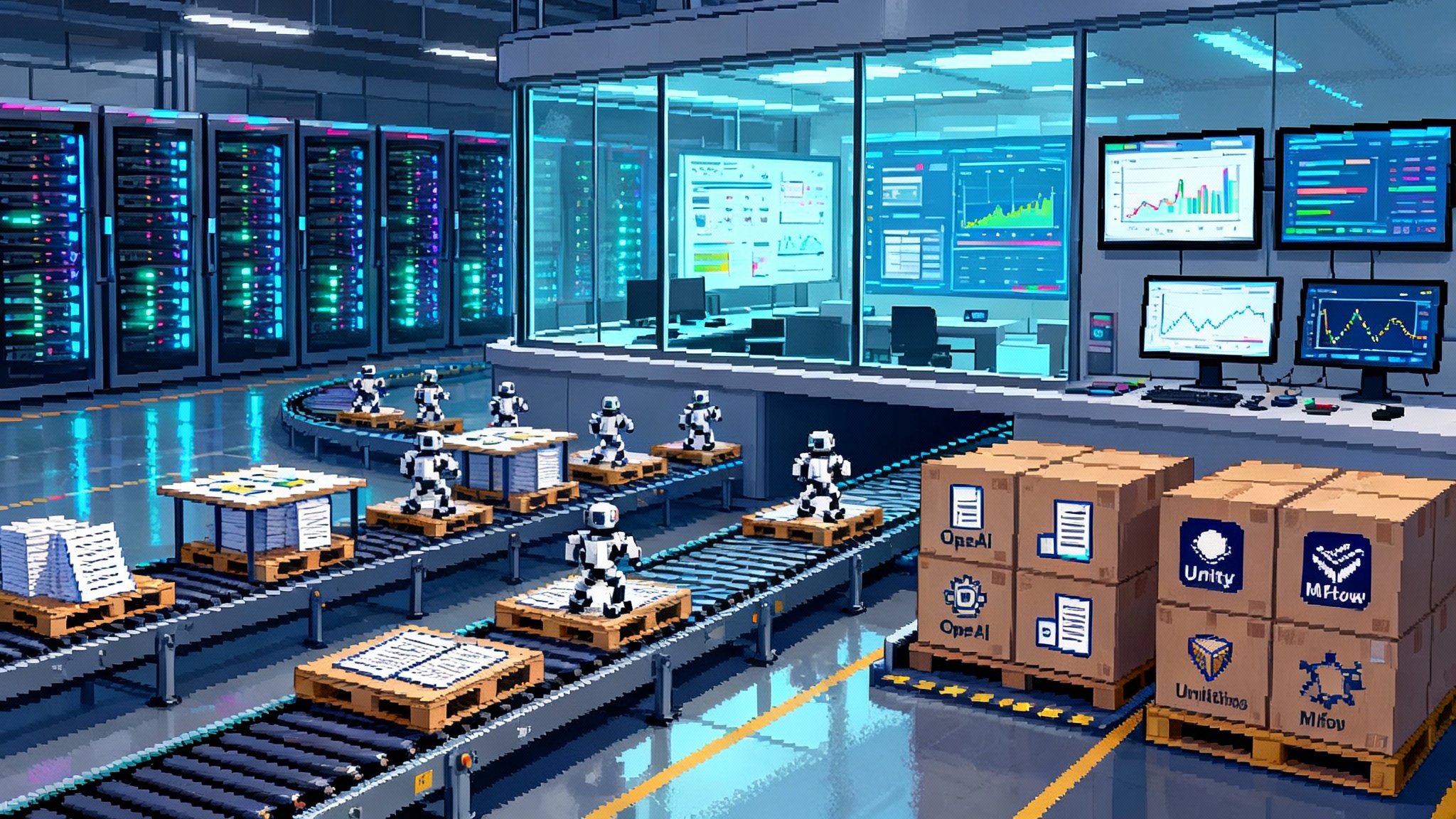

The assembly line for agents

Think about an automotive plant. There are stations for stamping, paint, inspection, and road testing. In a data-native agent factory, the stations become:

- Data selection. Pull features from curated Delta tables. Apply policy constraints from Unity Catalog so the agent never sees disallowed fields.

- Synthetic data generation. Stress test the agent with long-tail scenarios before they happen in production. Mix in adversarial examples that target failure modes like policy hallucinations or tool misuse.

- Evaluation at the door. Before promotion, the agent must clear a quality bar on auto-benchmarks. For example, precision on extracting invoice totals must exceed 98 percent, and reasoning must justify deductions with references.

- Trace review. Engineers review MLflow 3.0 traces to spot tool loops, retrieval misses, and prompt drift. A bad interaction is as visible as a 500 error in a microservice.

- Cost discipline. The factory tracks tokens and tool calls per task. If cost rises faster than quality, the agent is flagged for model routing or retrieval tuning.

- Deployment with guardrails. The agent goes live behind policies that enforce data access, action limits, and human-in-the-loop thresholds.

An assembly line like that shortens the build-to-prod cycle because it collapses discovery, evaluation, and governance into the same pipeline. You are not moving artifacts across five vendors and three shadow databases.
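Two of those stations, trace review and cost discipline, reduce to simple checks once traces and costs are exported as data. The Python sketch below assumes tool calls have already been pulled out of each trace as `(tool_name, args)` tuples; it does not use MLflow's tracing API, and `find_tool_loops` and `cost_outpaces_quality` are hypothetical helpers. The cost rule compares relative growth, which is one possible reading of "cost rises faster than quality".

```python
from collections import Counter


def find_tool_loops(tool_calls, max_repeats=2):
    """Return (tool, args) pairs invoked more than max_repeats times in one trace.

    tool_calls is a list of (tool_name, args) string tuples in call order,
    a simplified, hypothetical stand-in for tool-call spans from a trace.
    """
    counts = Counter(tool_calls)
    return sorted(call for call, n in counts.items() if n > max_repeats)


def cost_outpaces_quality(prev_cost, cur_cost, prev_quality, cur_quality):
    """True when relative cost growth exceeds relative quality growth between releases."""
    cost_growth = (cur_cost - prev_cost) / prev_cost
    quality_growth = (cur_quality - prev_quality) / prev_quality
    return cost_growth > quality_growth
```

An agent flagged by either check is a candidate for the model-routing or retrieval-tuning work the cost station describes.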

A pragmatic CIO playbook

You do not need to bet the company to get started. The playbook below gets value while avoiding lock-in.

Pick one blueprint that hits a measurable outcome in 90 days.

- Good first candidates: document information extraction for invoices or claims, knowledge assistance over governed wikis, or customer conversation summarization for quality assurance. These have clear metrics, low blast radius, and strong data coverage.

- Measure success by cost per task, accuracy against labeled fields, and time saved per operator.
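Accuracy against labeled fields and cost per task are easy to compute inconsistently across teams, so it can help to pin the definitions down in code. A minimal Python sketch, assuming each extracted record is a dict of field values and that scoring is exact match (real benchmarks may need normalization or tolerances); `field_accuracy` and `cost_per_task` are hypothetical helpers:

```python
def field_accuracy(predictions, labels, fields):
    """Per-field exact-match accuracy of extracted values against labeled records."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equal-length, non-empty predictions and labels")
    return {
        f: sum(pred.get(f) == lab.get(f) for pred, lab in zip(predictions, labels))
        / len(labels)
        for f in fields
    }


def cost_per_task(total_cost, task_count):
    """Average spend per completed task over the same evaluation window."""
    return total_cost / task_count
```

Reporting accuracy per field, rather than one blended number, shows which field (totals, currency, dates) is dragging the score down.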

Wire real-time features deliberately.

- If you need up-to-the-minute context, invest early in streaming tables and a modest feature store footprint. For a support agent, feed it the last 24 hours of ticket metadata and live entitlements so answers reflect reality.

- Set a service level for feature freshness, for example five minutes for purchase events and one hour for entitlements. Publish those as contracts so product teams can plan around them.
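Freshness contracts are most useful when they are checked mechanically. This Python sketch encodes the example service levels (five minutes for purchase events, one hour for entitlements) and reports which features are out of contract; the feature names and the `last_updated` mapping are assumptions about how your pipeline exposes update times.

```python
from datetime import datetime, timedelta, timezone

# Example freshness contracts; feature names are illustrative.
FRESHNESS_SLA = {
    "purchase_events": timedelta(minutes=5),
    "entitlements": timedelta(hours=1),
}


def stale_features(last_updated, now=None):
    """Return features whose last update is older than their contract allows.

    last_updated maps feature name -> timezone-aware datetime of the last refresh.
    A feature with no recorded update counts as stale.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    stale = []
    for name, max_age in FRESHNESS_SLA.items():
        updated = last_updated.get(name)
        if updated is None or now - updated > max_age:
            stale.append(name)
    return stale
```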

Bake evaluation into the workflow.

- Maintain a canonical test set that covers the patterns behind 80 percent of your traffic, plus a 20 percent slice of spiky edge cases. Regenerate synthetic long-tail cases monthly.

- Track three numbers on a single wallboard: quality on the auto-benchmark, cost per thousand tasks, and latency at the 95th percentile. Put red lines where business tolerance ends. Treat regressions like production incidents.
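The wallboard rule (three numbers, red lines, regressions treated as incidents) can be sketched as a single check. This Python example assumes latencies are collected per task in seconds and uses the nearest-rank definition of the 95th percentile; `RedLines` and `breached` are hypothetical names, and real thresholds would come from business tolerance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RedLines:
    min_quality: float        # auto-benchmark floor
    max_cost_per_1k: float    # ceiling on cost per thousand tasks
    max_p95_latency_s: float  # ceiling on 95th-percentile latency, seconds


def p95(latencies):
    """Nearest-rank 95th percentile of the latency samples."""
    if not latencies:
        raise ValueError("no latency samples")
    ordered = sorted(latencies)
    rank = -(-95 * len(ordered) // 100)  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def breached(quality, total_cost, task_count, latencies, lines):
    """Names of wallboard metrics that crossed a red line; any entry is an incident."""
    cost_per_1k = total_cost / task_count * 1000
    out = []
    if quality < lines.min_quality:
        out.append("quality")
    if cost_per_1k > lines.max_cost_per_1k:
        out.append("cost_per_1k")
    if p95(latencies) > lines.max_p95_latency_s:
        out.append("p95_latency")
    return out
```

Integer arithmetic for the percentile rank avoids floating-point surprises such as `0.95 * n` landing just above a whole number.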

Route models, do not marry them.

- Use the factory to compare GPT-5, smaller commercial models, and open weight options on your own tests. Write an internal model-routing policy that states when to use which family based on cost and risk.

- Keep the abstraction at the task level. The agent chooses models the way a database chooses a query plan. Humans set the guardrails and budgets.
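A routing policy in the query-planner spirit can be as small as a filter plus a cost minimization over measured options. In this Python sketch, humans set the guardrails (an approved risk tier per model family, a quality floor, a budget) and the router picks the cheapest option that satisfies all three. `ModelOption`, the risk tiers, and the family labels are hypothetical; quality scores are assumed to come from your own task benchmarks.

```python
from dataclasses import dataclass

RISK_ORDER = {"low": 0, "high": 1}  # hypothetical two-tier risk scale


@dataclass(frozen=True)
class ModelOption:
    name: str            # illustrative family label, not a real endpoint name
    quality: float       # measured score on your benchmark for this task type
    cost_per_1k: float   # cost per thousand tasks
    approved_risk: str   # highest risk tier this family is approved for


def choose_model(options, task_risk, quality_floor, budget_per_1k):
    """Cheapest option approved for the task's risk tier, above the floor, within budget."""
    eligible = [
        o
        for o in options
        if RISK_ORDER[o.approved_risk] >= RISK_ORDER[task_risk]
        and o.quality >= quality_floor
        and o.cost_per_1k <= budget_per_1k
    ]
    if not eligible:
        # No silent fallback: the guardrails win and a human decides.
        raise LookupError("no model satisfies the routing policy")
    return min(eligible, key=lambda o: o.cost_per_1k)
```

Raising instead of quietly falling back to the most capable model keeps the policy honest: when nothing fits the guardrails, the decision returns to the people who set them.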

Guard against lock-in with three concrete moves.

- Data portability: Keep core artifacts in open formats, with Delta tables for data, portable prompt templates and test suites, and MLflow for traces and metrics.

- Tool portability: Define critical external actions behind a broker. For example, calling a refund service should be a stable action name with a contract, not a brittle direct binding.

- Multi-venue readiness: Once your first agent is stable, perform one lift-and-run exercise to a secondary runtime. You do not need active multi-cloud, but you do need an exit plan.
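The action-broker idea can be sketched as a small registry: agents call a stable action name, the broker validates the payload against that action's contract, and the concrete backend can be swapped without touching the agent. This Python sketch is illustrative only; `ActionContract`, `ActionBroker`, and the `issue_refund` action are hypothetical, and a production broker would need more than required-field checks.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class ActionContract:
    name: str                   # stable name the agent uses, e.g. "issue_refund"
    required_fields: frozenset  # payload keys the backend needs


class ActionBroker:
    """Agents call actions by stable name; the concrete backend binding can change."""

    def __init__(self) -> None:
        self._contracts: Dict[str, ActionContract] = {}
        self._handlers: Dict[str, Callable[[dict], Any]] = {}

    def register(self, contract: ActionContract, handler: Callable[[dict], Any]) -> None:
        # Re-registering a name swaps the backend without changing agent prompts or code.
        self._contracts[contract.name] = contract
        self._handlers[contract.name] = handler

    def call(self, name: str, payload: dict) -> Any:
        contract = self._contracts.get(name)
        if contract is None:
            raise KeyError(f"unknown action: {name}")
        missing = contract.required_fields.difference(payload)  # keys absent from payload
        if missing:
            raise ValueError(f"{name} is missing fields: {sorted(missing)}")
        return self._handlers[name](payload)
```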

Put governance on rails.

- Use Unity Catalog, or an equivalent, to set who can see what. Add policy tags like PII and export controls that travel with the data into the agent. Use them to block sensitive attributes in prompts and tool calls.

- Add human review thresholds for actions with monetary impact, and log every decision with inputs and justifications to your observability stack.
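Both governance moves can be expressed as small guards in front of the model and the tools. This Python sketch assumes column-level policy tags have already been read from the catalog into a plain mapping (it does not call any Unity Catalog API), drops tagged columns before they reach a prompt, and routes monetary actions at or above a threshold to a human while logging every decision. The tag names, `redact_for_prompt`, and `route_action` are hypothetical.

```python
BLOCKED_TAGS = {"PII", "EXPORT_CONTROLLED"}  # illustrative tag names


def redact_for_prompt(record, column_tags):
    """Drop any column carrying a blocked policy tag before it reaches a prompt or tool call.

    column_tags maps column name -> set of policy tags (assumed exported from the catalog).
    """
    return {
        col: val
        for col, val in record.items()
        if not (column_tags.get(col, set()) & BLOCKED_TAGS)
    }


def route_action(action, amount, review_threshold, inputs, justification, log):
    """Queue monetary actions at or above the threshold for a human; log every decision."""
    needs_review = amount >= review_threshold
    log.append({
        "action": action,
        "amount": amount,
        "inputs": inputs,
        "justification": justification,
        "needs_review": needs_review,
    })
    return "pending_human_review" if needs_review else "auto_approved"
```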

Organize for shipping, not for research.

- Build a small cross-functional crew: a data engineer who owns features, a platform engineer who owns deployment and budgets, a product manager who owns the benchmark, and one subject matter expert who approves outcomes.

- Keep sprints short, two weeks at most. Each sprint should produce a measurable change in quality or cost on the dashboard.

How this outpaces generic agent hubs

Generic hubs are attractive because they promise everything everywhere with a few clicks. For enterprises, the challenge is that every meaningful agent depends on governed data, real-time context, and traceable actions. If you must backhaul data to the hub, recreate governance, and invent a new tracing story, your time-to-prod stretches and your risk surface expands.

We have argued before that control shifts to the platforms that own the data and the rules. See how this thinking evolved in our view of Agent hubs as the control plane. When the factory lives inside your data plane, you inherit the right policies and you debug with the right traces. You also make smarter cost decisions because you can A/B models on your own workloads, not on generic leaderboards.

The data-native factory wins on four fronts:

- Data gravity. It works where your lineage, permissions, and quality controls already live.

- Continuous evals. Auto-benchmarks and LLM judges tied to your task beat generic leaderboards.

- Operational telemetry. MLflow 3.0 traces turn debugging from folklore to forensics. You can follow a bad answer back to the retrieval miss or the tool call that timed out.

- Cost control. Model routing and synthetic augmentation let you buy quality intelligently. Many tasks can achieve target accuracy with a cheaper model plus retrieval, and the factory will prove it on your metrics.

This is not an argument against innovation outside the platform. It is an argument that the shortest route to outcomes in complex organizations runs through the systems that already manage the data and the rules.

What changes for your roadmap

- Budgeting. Treat agent capacity like any other compute plan: commit to baseline capacity and burst for campaigns.

- Skills. Upskill data engineers on retrieval design and evaluation crafting. The ability to write a good benchmark is now as valuable as writing a good transformation job.

- Vendor posture. Prefer vendors that publish clear benchmarking and tracing hooks. If you cannot read the agent’s traces, you cannot own the risk.

As more platforms expose native agent factories, expect the procurement conversation to mirror what we have seen in other agent ecosystems. The way AWS packages deployable building blocks (see AWS AgentCore makes AI deployable) and the way the edge becomes a programmable backend (see Cloudflare remote MCP as backend) both show that deployment and governance are becoming the differentiators.

The open question everyone will ask

Does a data-native factory box you into one platform forever? The honest answer is no, if you choose open formats and keep one foot in portability. Databricks supports open source building blocks like MLflow and Delta Lake that travel with you. If you couple them with a disciplined internal abstraction for tools and a clear routing policy for models, moving agents becomes a scheduled project, not an existential rewrite. You might not choose to move, but having the option changes the power dynamic.

A helpful way to think about portability is to write a one-page runbook that lists the artifacts you would carry to another runtime: prompt templates, test suites, traces, feature definitions, policy tags, and action broker contracts. If you cannot export an item in that list without heroic effort, it is a risk to document and mitigate.
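The runbook can double as a checklist that runs alongside your other tests. This Python sketch turns the runbook's artifact list into kinds and flags anything missing or not exportable; `Artifact`, `portability_risks`, and the `location` strings are hypothetical, and judging whether an export would take heroic effort remains a human call recorded in the `exportable` flag.

```python
from dataclasses import dataclass

# The runbook's artifact list, expressed as machine-checkable kinds.
RUNBOOK_KINDS = [
    "prompt_templates",
    "test_suites",
    "traces",
    "feature_definitions",
    "policy_tags",
    "action_broker_contracts",
]


@dataclass(frozen=True)
class Artifact:
    kind: str         # one of RUNBOOK_KINDS
    location: str     # where it lives today (free text, illustrative)
    exportable: bool  # can it be exported without heroic effort?


def portability_risks(inventory):
    """Runbook items that are missing or not exportable: risks to document and mitigate."""
    by_kind = {a.kind: a for a in inventory}
    risks = []
    for kind in RUNBOOK_KINDS:
        artifact = by_kind.get(kind)
        if artifact is None:
            risks.append(f"{kind}: not inventoried")
        elif not artifact.exportable:
            risks.append(f"{kind}: no clean export from {artifact.location}")
    return risks
```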

A concrete blueprint to try this quarter

- Use case. Information extraction on vendor invoices.

- Data. Curated Delta table of invoices and payment events, tagged for PII in Unity Catalog.

- Factory steps. Describe the task, auto-generate synthetic invoices for edge cases, create a benchmark that checks totals, currency, and due dates against ground truth.

- Model policy. Start with a compact model plus retrieval. If benchmark errors persist on currency conversion or line item edge cases, route to GPT-5 for those subcases only.

- Guardrails. No payment instruction changes without human approval. All actions logged with source document references.

- Acceptance. 99 percent accuracy on extracted totals, mean absolute error under one cent, median latency under 2 seconds, and cost per thousand invoices under a defined threshold.

- Rollout. Shadow for two weeks with daily drift checks, then progressive rollout by supplier tier.

You can rinse and repeat this blueprint for claims, transcripts, or internal knowledge assistance. The pattern holds because the factory provides the loop: define, synthesize, test, trace, route, and ship.
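The invoice blueprint's acceptance bar can be written down as a single check so that shadow runs and progressive-rollout gates use the same definition. This Python sketch assumes extracted and true totals are `Decimal` values in one currency and that latencies are in seconds; `accept_invoice_agent` is a hypothetical helper, and the cost threshold stays a parameter because the blueprint does not fix one.

```python
from decimal import Decimal
from statistics import median


def accept_invoice_agent(pred_totals, true_totals, latencies_s, total_cost, max_cost_per_1k):
    """Check the blueprint's acceptance bar; returns (passed, per-check results).

    Money values are Decimals so cent-level error is not distorted by float rounding.
    """
    n = len(true_totals)
    if n == 0 or len(pred_totals) != n:
        raise ValueError("need equal-length, non-empty prediction and label lists")
    accuracy = sum(p == t for p, t in zip(pred_totals, true_totals)) / n
    mae = sum(abs(p - t) for p, t in zip(pred_totals, true_totals)) / n
    cost_per_1k = total_cost / n * 1000
    checks = {
        "totals_accuracy_at_least_99pct": accuracy >= 0.99,
        "mae_under_one_cent": mae < Decimal("0.01"),
        "median_latency_under_2s": median(latencies_s) < 2.0,
        "cost_per_1k_within_threshold": cost_per_1k <= max_cost_per_1k,
    }
    return all(checks.values()), checks
```

Returning the per-check dictionary alongside the verdict makes a failed gate self-explaining during the two-week shadow period.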

Related moves across the field

Agent factories are not happening in isolation. We see the same gravitational pull in other enterprise stacks. In collaboration suites, communications platforms are turning conversation data into a first-class substrate for agents. In infrastructure, observability vendors are exposing traces as features for agent control. And in search, the crawl-to-index pipeline is being adapted to feed retrieval for domain agents rather than public search.

Our earlier analysis of Agent hubs as the control plane foreshadowed this shift. The difference now is that the data plane and the model plane are being fused at the factory level. That is why these announcements matter. They reduce context loss, shorten debug cycles, and improve cost predictability.

The conclusion, without the sugar

The move from model-first tools to data-native agent factories is not about fashion. It is about operations. Agent Bricks puts evals, synthetic data, tracing, and governance in the same workflow as your tables and streams. The OpenAI partnership ensures that frontier intelligence is a menu item inside that workflow, not an external excursion. If you adopt the factory mindset, the reward is not a demo on day one. It is a reliable release on day ninety that your auditors, engineers, and customers can live with. That is how agents stop being experiments and start being software.