AgentKit Turns ChatGPT Into a Programmable Agent OS

OpenAI unveiled AgentKit and an Apps SDK at DevDay on October 6, 2025, turning ChatGPT into a chat-first runtime for agents and in-chat apps. Here is what is new, why it matters, and how to ship safely from day one.

The day ChatGPT started to feel like an operating system

On October 6, 2025, in San Francisco, OpenAI announced two moves that tighten the loop between conversation and software: AgentKit and a new Apps SDK for ChatGPT. Together they shift chat from a prompt box to an operational surface where work actually gets done. Chat becomes the shell where you ask and verify. AgentKit becomes the process manager that plans and executes. The Apps SDK becomes the window toolkit that collects inputs and renders outputs without sending users to a separate interface.

This is more than a rebrand of assistants. It is a practical architecture for shipping production agents and in‑chat apps with controls that enterprises recognize. If you build software, that matters because the best distribution channel is the one your users are already in. And your users are already in ChatGPT.

What is actually new for builders

OpenAI’s releases fill the gaps that made early agent projects brittle.

-

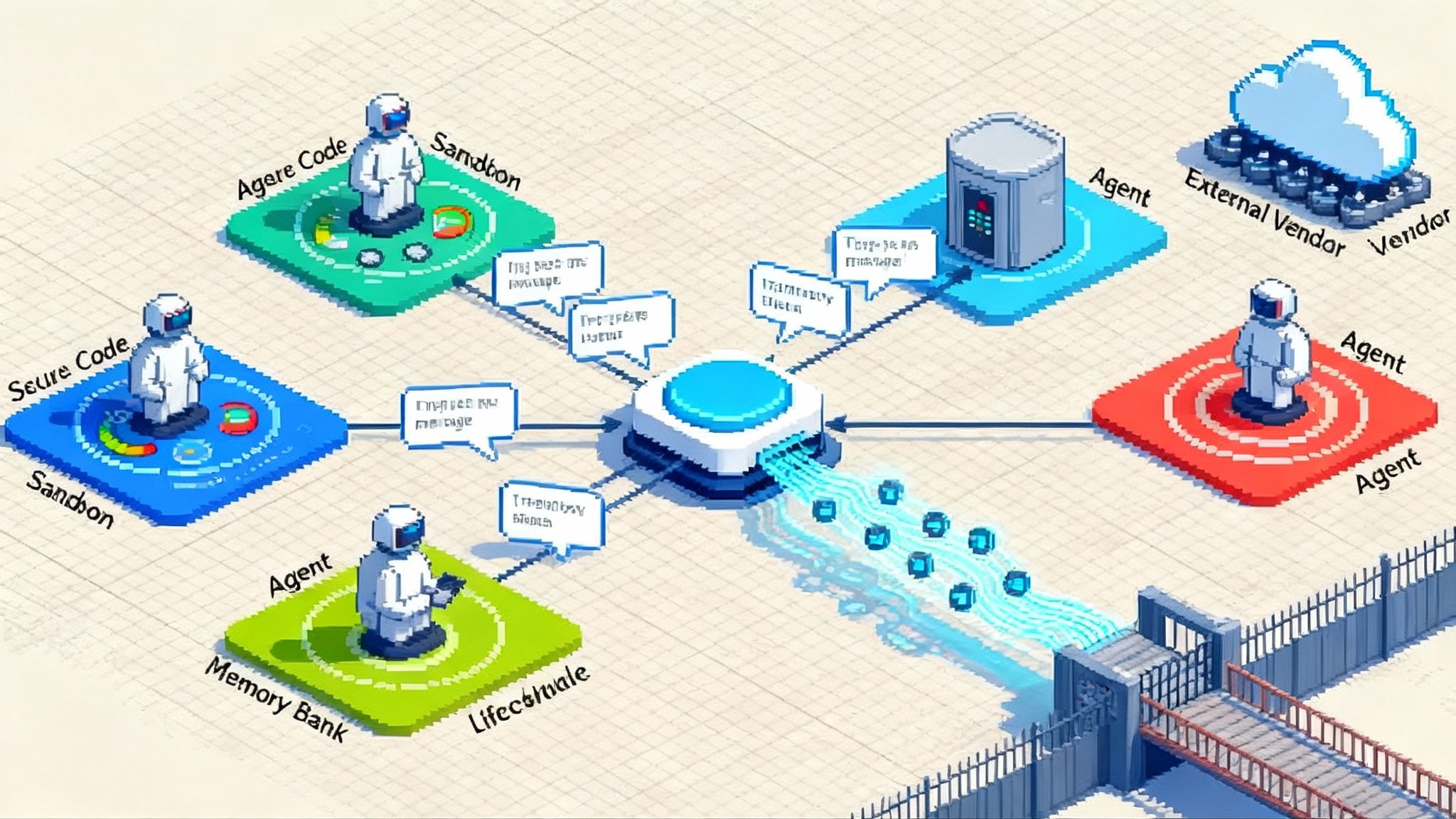

A complete agent toolchain: AgentKit adds a visual Agent Builder for composing multi‑step workflows, wiring tools, setting guardrails, and versioning agents so you can promote changes with confidence. It centralizes connectors in an admin‑controlled registry so access is explicit and auditable.

-

Embedded chat interfaces: With the Apps SDK, developers define both logic and interface for chat‑native apps. You present inputs, show tables, render confirmations, and complete short flows without leaving the thread. For many use cases you can skip building a second front end.

-

Evaluations and optimization: New evaluation capabilities let teams create datasets from real traces, grade behavior, and run automated prompt improvements. This turns ad hoc testing into a repeatable pipeline.

-

Enterprise controls: A global admin console ties identity, SSO, domain verification, and policy management to how agents and apps connect to data. Security teams get a single inventory of connectors and scopes.

-

Distribution inside ChatGPT: Apps run directly in ChatGPT and can authenticate to your backend so you reach a large installed base while serving existing customers with familiar login and entitlements.

For the official details, start with OpenAI's announcements introducing apps in ChatGPT with the Apps SDK, and introducing AgentKit. Together they lay out the primitives, availability, and how the parts fit together.

Why this looks and feels like an operating system

The operating system metaphor helps translate fuzzy hype into working parts you can design against.

- The chat thread is the shell: A command line for natural language. You invoke tools by name, chain steps, and inspect outputs inline. The shell already knows your context because the conversation carries state.

- AgentKit is the process manager: An agent is a long‑lived process with goals, inputs, and permissions. The Agent Builder is your task scheduler, where you define how work proceeds and which tools are allowed. Versioning and rollback play the role of process signals: you can stop a misbehaving version and restore a known‑good one.

- The connector registry is system preferences: Administrators decide which sources and actions are allowed, from internal knowledge bases to ticketing systems. Turning on a connector is like adding a printer on a laptop, but with scopes and audit trails.

- The Apps SDK is the window toolkit: It gives you components and patterns so an agent can gather details, present options, and confirm actions without bouncing the user elsewhere. Dialogs live in the same thread as the reasoning that produced them.

- Evals are the profiler and test harness: Measure behavior on real traces, compare versions, and ratchet quality with reinforcement fine‑tuning where it makes sense. The goal is not perfection. The goal is observable improvement and controlled change.

Taken together, these parts give you a process model, a user interface, a permissions system, and a way to ship updates. That is a minimal operating system for agent software.

Patterns you can ship immediately

You do not need a research lab to make this useful. The fastest wins cluster around three patterns.

1) Agent‑powered onboarding

Onboarding is usually a series of high‑friction forms, checklists, and configuration screens. In a chat‑first runtime, replace that with an agent that asks for the right facts in plain language and sets things up for the user.

- Example: A SaaS vendor ships an onboarding agent that reads a new customer’s contract, provisions a workspace, invites the right teams, pulls the first data sync through the connector registry, then validates setup with a short in‑chat checklist. The user never leaves the thread. The sales team stops hand‑holding every step.

- Why it works: The agent references conversation history and attached documents, then drives setup through embedded components. The result is faster time to value and fewer support tickets.
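The "ask for the right facts" step can be sketched as a small slot‑filling loop. This is a minimal illustration under stated assumptions, not an AgentKit API: it assumes the agent has already extracted what it can from the contract into a dictionary, and the field names, questions, and the `next_step` helper are hypothetical. The agent asks only about facts it could not find, one at a time, and hands off to provisioning with a confirmation checklist once nothing is missing.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical facts the agent needs before provisioning, with the question to ask for each.
REQUIRED_FACTS = {
    "company_name": "What name should we use for your workspace?",
    "admin_email": "Who should be the first workspace admin (email)?",
    "teams": "Which teams should we invite first?",
    "data_source": "Which system should we sync data from first?",
}


@dataclass
class OnboardingState:
    # Facts gathered so far, from the parsed contract or from the conversation.
    facts: dict = field(default_factory=dict)

    def missing(self) -> list[str]:
        """Required facts that are still absent or empty, in a stable order."""
        return [key for key in REQUIRED_FACTS if not self.facts.get(key)]


def next_step(state: OnboardingState) -> dict:
    """Ask for the first missing fact, or hand off to provisioning with a checklist."""
    missing = state.missing()
    if missing:
        key = missing[0]
        return {"action": "ask", "field": key, "question": REQUIRED_FACTS[key]}
    # Everything is known: the checklist is what the user confirms in the thread.
    checklist = [f"{key}: {state.facts[key]}" for key in REQUIRED_FACTS]
    return {"action": "provision", "checklist": checklist}


if __name__ == "__main__":
    # Facts a (hypothetical) contract parser already found.
    state = OnboardingState(facts={"company_name": "Acme", "admin_email": "ops@acme.test"})
    print(next_step(state)["question"])  # Which teams should we invite first?
    state.facts.update(teams=["support", "finance"], data_source="crm")
    print(next_step(state)["action"])    # provision
```

Keeping the required facts in one table means the same list drives both the questions the agent asks and the checklist the user confirms.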

2) Operations automations that watch and act

Many operations teams live between alerts and actions. An agent can triage, gather context, and propose the next step with explainable reasoning.

- Example: For customer support, an agent fetches the user profile, recent orders, and policy constraints. It drafts a response, opens or updates a ticket with the correct template, and asks for human confirmation on edge cases in the chat. Over time, evaluation scores and fine‑tuning push more decisions into the automated path.

- Why it works: The evaluation pipeline turns operations into measurable steps. Scopes in the connector registry give auditors confidence that the agent only touches what it needs.
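The "ask for human confirmation on edge cases" rule is easiest to audit when it is an explicit routing function rather than a habit. A minimal sketch, assuming each proposed action carries a confidence score (from the model or a separate grader) and that a policy lists which actions may run unattended; the action names, the 0.85 threshold, and the `route` helper are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy: actions the agent may take unattended, and the minimum
# confidence needed to skip human confirmation for them.
AUTO_ALLOWED = {"draft_reply", "update_ticket"}
AUTO_CONFIDENCE = 0.85


@dataclass
class Proposal:
    action: str        # e.g. "update_ticket", "issue_refund"
    confidence: float  # 0..1, from the model or a separate grader
    reason: str        # explanation shown to the reviewer in the chat


def route(p: Proposal) -> str:
    """Return "auto" if the proposal may run unattended, otherwise "confirm"."""
    if p.action not in AUTO_ALLOWED:
        return "confirm"  # outside policy: always ask a human
    if p.confidence < AUTO_CONFIDENCE:
        return "confirm"  # allowed action, but the agent is unsure
    return "auto"


if __name__ == "__main__":
    for p in [
        Proposal("update_ticket", 0.93, "Matches the refund-status template"),
        Proposal("draft_reply", 0.60, "Order history is ambiguous"),
        Proposal("issue_refund", 0.99, "Order is inside the return window"),
    ]:
        print(p.action, route(p))
    # update_ticket auto / draft_reply confirm / issue_refund confirm
```

In this sketch, pushing more decisions into the automated path means lowering `AUTO_CONFIDENCE` or adding actions to `AUTO_ALLOWED` through a reviewed change backed by evaluation scores, never something the agent adjusts on its own.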

3) In‑chat vertical apps

A number of workflows are small but frequent. A dedicated UI is overkill, while a chat‑native flow is perfect.

- Example: A recruiting app lets a hiring manager rank screened candidates inside ChatGPT, request references, and schedule interviews with a single confirmation. Or a finance app runs a quarter‑end variance analysis and annotates results for a controller in one thread.

- Why it works: The Apps SDK provides the interface primitives, and AgentKit orchestrates the logic. Distribution comes from the ChatGPT surface rather than paid acquisition for a separate site.

If you need inspiration on backends and integration surfaces, see how an edge‑first approach to connectors can work in our piece on remote MCP at the edge.

Building blocks that change day one for engineers

Here is what your engineering team can do differently in week one.

-

Design and version agents like services: Model each agent as a service with a manifest that lists tools, connectors, and scopes. Use version tags for releases and keep a changelog alongside prompt templates. Promotion to production becomes a routine deploy rather than a fragile experiment.

-

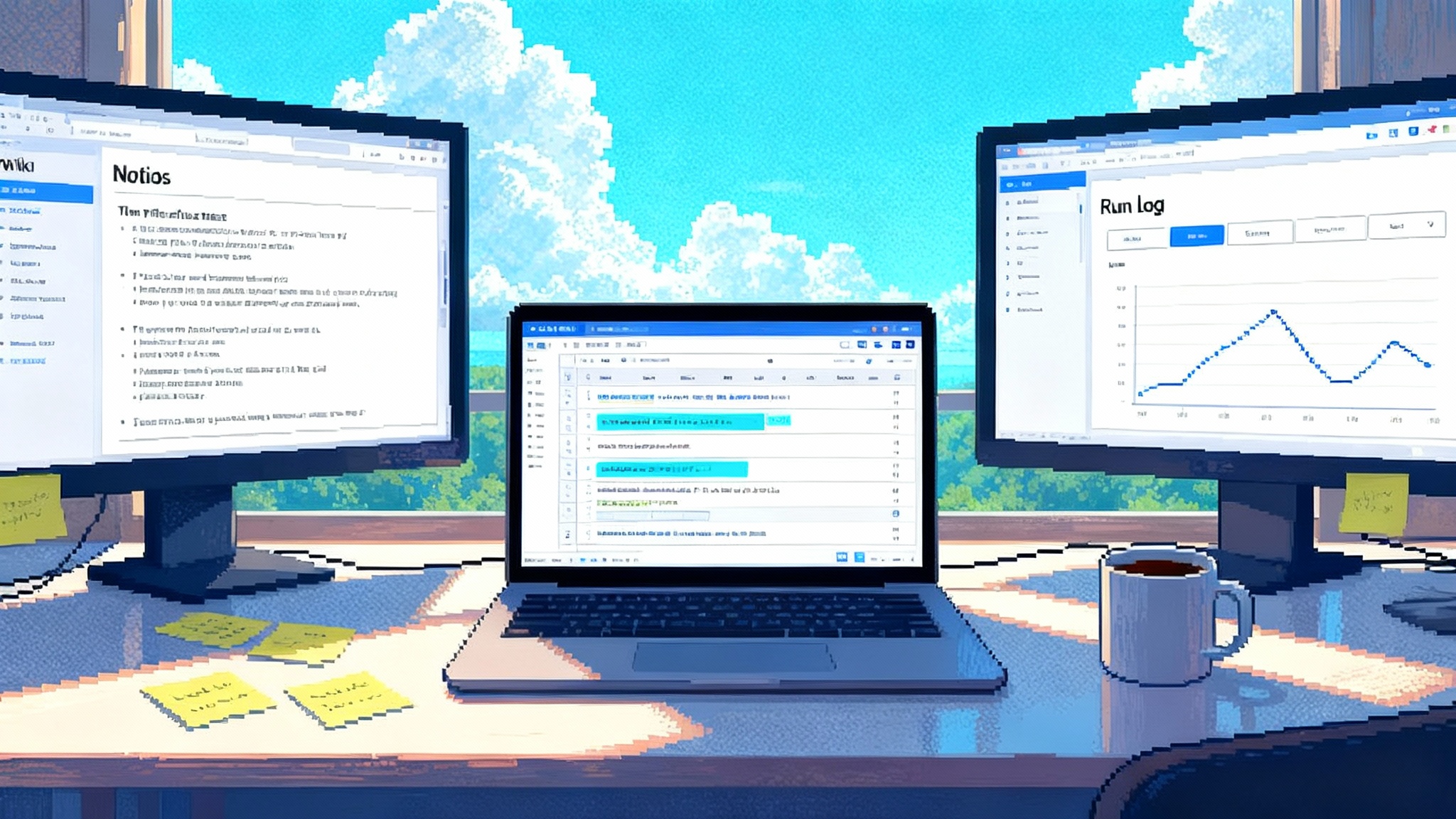

Treat evaluations as unit tests: Assemble a dataset that reflects your messy reality, not a lab benchmark. Include tricky edge cases, noisy inputs, and adversarial prompts. Run the suite as a gate on every change. Track a small set of metrics that map to business outcomes such as first‑contact resolution and time to resolution.

-

Separate connectors from prompts: Manage connectors in an admin plane with scopes and logs. Keep prompts and workflows in product engineering. This separation of concerns keeps access tight and iteration fast.

-

Build the interface once, ship everywhere: Use the Apps SDK primitives so the same conversational flow works in ChatGPT and, when needed, inside your own product via ChatKit. Avoid a second front end unless the workflow truly requires it.

-

Plan for rollbacks and kill switches: Build a control plane for your agents the same way you would for microservices. You want the ability to freeze a misbehaving version, route traffic back, and collect traces that explain the failure.

-

Align with AgentOps from day one: Instrument traces, store tool call metadata, and hash model and agent versions so you can reconstruct behavior. If you want deeper automation, compare your maturity with the practices in AgentOps as an automated pipeline.
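Several of the practices above (a manifest per agent, version hashes in traces, promotion gated on evals, rollback, and a kill switch) fit in a small control plane. The sketch below is a hypothetical illustration in plain Python, not an AgentKit or OpenAI API: `AgentManifest`, `ControlPlane`, `trace_record`, the scope strings, the model name, and the 0.95 pass‑rate gate are all assumptions chosen for the example.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentManifest:
    """Hypothetical manifest listing everything one agent version may use."""
    name: str
    version: str
    model: str
    tools: tuple[str, ...]
    connector_scopes: tuple[str, ...]  # e.g. "crm:read", "tickets:write"
    prompt_template: str

    def fingerprint(self) -> str:
        """SHA-256 over canonical JSON, so traces can name the exact version."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ControlPlane:
    """Hypothetical per-agent control plane: gated promotion, rollback, kill switch."""

    def __init__(self, eval_gate: float = 0.95) -> None:
        self.eval_gate = eval_gate               # minimum eval pass rate to promote
        self.history: list[AgentManifest] = []  # promoted versions, oldest first
        self.frozen = False

    @property
    def active(self) -> AgentManifest | None:
        return self.history[-1] if self.history else None

    def promote(self, manifest: AgentManifest, eval_pass_rate: float) -> bool:
        """Promote only when the eval suite clears the gate and nothing is frozen."""
        if self.frozen or eval_pass_rate < self.eval_gate:
            return False
        self.history.append(manifest)
        return True

    def rollback(self) -> AgentManifest | None:
        """Route traffic back to the previously promoted version, if there is one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.active

    def kill(self) -> None:
        """Kill switch: stop serving and block promotions until a human unfreezes."""
        self.frozen = True

    def unfreeze(self) -> None:
        self.frozen = False

    def serve(self) -> AgentManifest | None:
        """The version that should handle new traffic, or None while frozen."""
        return None if self.frozen else self.active


def trace_record(manifest: AgentManifest, request_id: str, tool_calls: list[str]) -> dict:
    """Minimal trace entry that ties behavior back to an exact agent version."""
    return {
        "request_id": request_id,
        "agent": manifest.name,
        "version": manifest.version,
        "agent_fingerprint": manifest.fingerprint(),
        "tool_calls": tool_calls,
    }


if __name__ == "__main__":
    v1 = AgentManifest("support-agent", "1.0.0", "model-x", ("search_orders",),
                       ("crm:read",), "You resolve order questions.")
    v2 = AgentManifest("support-agent", "1.1.0", "model-x", ("search_orders", "update_ticket"),
                       ("crm:read", "tickets:write"), "You resolve order questions.")
    cp = ControlPlane()
    cp.promote(v1, eval_pass_rate=0.97)
    cp.promote(v2, eval_pass_rate=0.96)
    print(cp.serve().version)  # 1.1.0
    cp.rollback()
    print(cp.serve().version)  # 1.0.0
    cp.kill()
    print(cp.serve())          # None
```

Because the fingerprint covers the model name, tools, scopes, and prompt template, any change to what the agent can do produces a new hash, which is what lets a trace be matched to the exact version that produced it.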

GTM and AgentOps guardrails to ship safely at scale

GTM means go‑to‑market. AgentOps is the set of operational practices that keep agentic systems safe, observable, and accountable. Use this checklist to align product, sales, and security from the start.

- Scoping and consent: Define the minimum data your agent needs. For every connector, specify scopes, intended uses, and retention. Make first use explicit inside the chat, and record consent events.

- Identity and authorization: Tie every agent action to a user or service identity. Use standard single sign‑on through your enterprise identity provider and map roles to what the agent can do. Log impersonation and delegated actions.

- Red teaming and prompt security: Maintain a library of prompt‑injection and data‑exfiltration tests. Run them as part of your evaluations. Break the build when a regression appears. Reward engineers who discover holes.

- Human in the loop by design: Decide where human confirmation adds value. Codify these gates using Apps SDK components rather than leaving them to habit. Sample a percentage of automated decisions for review.

- Telemetry worth keeping: Store traces with inputs, tool calls, connector scopes, and outputs. Include hashes of model versions and agent versions. An audit log without enough detail will not satisfy your security team and will not help you debug incidents.

- Explicit service level objectives: Write down which outcomes matter. For a support agent this might be first response time, deflection rate, and a quality score from human graders. Tie product reviews to whether the agent is moving those targets.

- Pricing and throttling: Set usage tiers and rate limits early. Give sales a clear story about what is included and what costs more. Use rate controls to stop runaway background loops.

- Data lifecycle: Specify where prompts and outputs are stored, who can export them, and how long they live. De‑identify where possible. Describe your posture in a public privacy policy and in enterprise documentation.

- Incident response: Create a runbook for agent incidents the same way you would for a production outage. Include on‑call rotations, severity levels, and rollback steps. Practice with game day drills.
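A few of the guardrails above (role‑based scopes, explicit first‑use consent, delegated actions, an audit record for every decision, and per‑user rate limits) can be combined into one check that runs before each agent action. This is a minimal sketch under assumptions: the roles, scope strings, and `Guard` class are hypothetical, identity would really come from your identity provider, and the token bucket is one common rate‑limiting technique rather than anything the platform prescribes.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field

# Hypothetical role-to-scope mapping, mirrored from identity provider groups.
ROLE_SCOPES = {
    "support_agent": {"crm:read", "tickets:write"},
    "viewer": {"crm:read"},
}


class TokenBucket:
    """Token bucket: refills `rate` tokens per second and holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float | None = None) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so a new user is not throttled
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: float | None = None) -> bool:
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


@dataclass
class Guard:
    """Hypothetical pre-action check: scope, first-use consent, then rate limit."""
    rate: float = 1.0   # assumed: one action per second per user
    burst: float = 5.0  # assumed: bursts of up to five actions
    consents: set[tuple[str, str]] = field(default_factory=set)
    buckets: dict[str, TokenBucket] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def _audit(self, user: str, scope: str, decision: str, on_behalf_of: str | None) -> None:
        self.audit_log.append({"ts": time.time(), "user": user, "scope": scope,
                               "decision": decision, "on_behalf_of": on_behalf_of})

    def grant_consent(self, user: str, scope: str) -> None:
        """Record an explicit in-chat consent event for (user, scope)."""
        self.consents.add((user, scope))
        self._audit(user, scope, "consent_granted", None)

    def check(self, user: str, role: str, scope: str,
              on_behalf_of: str | None = None, now: float | None = None) -> str:
        """Authorize one agent action; every decision lands in the audit log."""
        if user not in self.buckets:
            self.buckets[user] = TokenBucket(self.rate, self.burst, now)
        if scope not in ROLE_SCOPES.get(role, set()):
            decision = "denied_scope"
        elif (user, scope) not in self.consents:
            decision = "needs_consent"  # ask in the chat before first use
        elif not self.buckets[user].allow(now):
            decision = "throttled"
        else:
            decision = "allowed"
        self._audit(user, scope, decision, on_behalf_of)
        return decision


if __name__ == "__main__":
    g = Guard(rate=1.0, burst=2.0)
    print(g.check("u1", "viewer", "tickets:write", now=0.0))         # denied_scope
    print(g.check("u1", "support_agent", "tickets:write", now=0.0))  # needs_consent
    g.grant_consent("u1", "tickets:write")
    print([g.check("u1", "support_agent", "tickets:write", now=0.0) for _ in range(3)])
    # ['allowed', 'allowed', 'throttled']
    print(g.check("u1", "support_agent", "tickets:write", now=1.0))  # allowed
```

Denied and unconsented requests do not consume rate‑limit tokens in this sketch, so a user who is prompted for consent is not penalized for the first attempt, while every outcome, including denials, still leaves an audit entry.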

How startups and incumbents can both win

This platform tilt does not pick favorites. It rewards clarity of focus.

- For startups: You get instant distribution in ChatGPT plus a rapid way to ship a polished experience without a large front end team. Pick a narrow job to be done, build an agent that completes it end to end, and invest heavily in evaluations that reflect that niche. Aim to own a verb, not a category. When you need a dedicated UI, you can still use the same agent logic by embedding it.

- For incumbents: Your advantage is data and trust. Use the connector registry and global admin controls to scope access, then build agents that shorten time to value in your existing product. Ship an in‑chat assistant that handles the messy middle of setup, migration, and configuration. Make sales easier by proving value in a conversation instead of a slide deck.

- For both: Resist the urge to rebuild your whole application as chat. Start where chat removes friction. Keep deterministic flows where they are strong and add agents where the world is fuzzy and context‑heavy. If you are exploring transactions inside chat, our analysis of ChatGPT Checkout meets Stripe ACP outlines what reliable agentic commerce needs.

A quick plan for week one

- Pick a workflow that annoys customers or your team because it requires juggling context. Examples include new account setup, support escalations, or weekly account reporting.

- Model the agent in AgentKit. Define its tools, the connectors it needs, and the small set of metrics you will use to decide whether it is successful.

- Build a simple in‑chat interface with the Apps SDK. Use confirmations and summaries where mistakes are costly. Favor clarity and speed over flourish.

- Write an evaluation dataset from real past transcripts and tickets. Grade for correctness, helpfulness, and the specific business outcomes you care about.

- Run a limited pilot with a kill switch, a rollback button, and daily triage of failed traces. Fold what you learn back into prompts, tools, and training.

- Widen the audience or offer monetization only after you hit your service level objectives. Scale what you can prove, not what you hope.
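The last two steps, grading an evaluation set built from past cases and widening the pilot only when every objective is met, can be sketched as a small gate. It assumes each case has already been graded (by human reviewers or a model grader) into pass/fail verdicts and a resolution time; the metric names, `GradedCase`, and the SLO targets are hypothetical placeholders for whatever outcomes you committed to.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median


@dataclass
class GradedCase:
    """One past transcript or ticket, replayed through the agent and graded."""
    case_id: str
    correct: bool                 # grader verdict on the agent's answer
    resolved_first_contact: bool  # no follow-up needed
    minutes_to_resolution: float


# Hypothetical SLO targets: (threshold, direction). ">=" means higher is better.
SLO = {
    "correctness_rate": (0.95, ">="),
    "first_contact_resolution": (0.70, ">="),
    "median_minutes": (5.0, "<="),
}


def summarize(cases: list[GradedCase]) -> dict[str, float]:
    """Aggregate graded cases into the metrics the SLOs are written against."""
    if not cases:
        raise ValueError("empty eval set: nothing to gate on")
    n = len(cases)
    return {
        "correctness_rate": sum(c.correct for c in cases) / n,
        "first_contact_resolution": sum(c.resolved_first_contact for c in cases) / n,
        "median_minutes": median(c.minutes_to_resolution for c in cases),
    }


def slo_failures(metrics: dict[str, float]) -> list[str]:
    """Names of the SLOs that are missed; an empty list means widen the pilot."""
    failures = []
    for name, (target, direction) in SLO.items():
        value = metrics[name]
        met = value >= target if direction == ">=" else value <= target
        if not met:
            failures.append(name)
    return failures


if __name__ == "__main__":
    cases = [
        GradedCase("t1", True, True, 3.0),
        GradedCase("t2", True, False, 9.0),
        GradedCase("t3", False, True, 2.0),
        GradedCase("t4", True, True, 4.0),
    ]
    metrics = summarize(cases)
    failing = slo_failures(metrics)
    print(metrics)
    print("widen the pilot" if not failing else f"hold the pilot; missing: {failing}")
```

With these four illustrative cases, correctness comes out at 0.75, so the gate reports `correctness_rate` as missed and the pilot stays where it is, even though first‑contact resolution and median time both meet their targets.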

What the early production stories tell us

Early adopters offer a few useful lessons. Customer support and sales are durable entry points because they have clear metrics and high‑volume data. Klarna, for example, reported in early 2024 that its AI assistant handled two‑thirds of its customer service chats in its first month and cut average resolution time from 11 minutes to under 2. Sales teams have seen similar leverage by combining research, enrichment, and messaging into a single agent workflow that runs in the background and surfaces drafts for approval in the chat. The takeaway is simple. Production results show up fastest where you can observe outcomes, collect traces, and iterate weekly.

Two other patterns show up across teams that have gone beyond a demo. First, product, legal, and security teams start working in the same canvas earlier. When the Agent Builder makes gates and scopes visible, policy questions move from theoretical to specific. Second, the boring stuff matters. Teams that name versions, keep a changelog, and gate releases on evals unlock higher velocity later because they avoid fear‑driven freezes when something goes wrong.

The bottom line

AgentKit and the Apps SDK signal a new default. Chat is the place where work happens, not the pre‑sales demo. Agents are not side projects. They are services with versions, metrics, and guardrails. Interfaces live where the conversation already is. If you build software, your playbook is changing. Design agents like services, test them like production code, and ship them where your users already spend their attention. The teams that start now will not just bolt agents onto existing products. They will treat the chat thread as the operating system for work and they will own the workflows that matter most.