AWS AgentCore and Agents Marketplace Make AI Deployable

AWS just moved AI agents from experiments to production. With AgentCore and an Agents Marketplace, teams get identity, memory, tools, and observability built in. Here is what shipped and how to adopt it with confidence.

Agents just became a cloud primitive

On July 16, 2025, at AWS Summit New York, Amazon introduced Amazon Bedrock AgentCore and a new AI Agents and Tools category in AWS Marketplace. The message was simple and consequential. Agents are no longer clever demos held together by scripts. They are now first-class cloud software with a managed runtime, identity, memory, tool access, and observability. AWS published the preview details, supported services, and pricing guidance in its AWS News Blog post announcing the AgentCore preview. The companion Marketplace update is live as the AI Agents and Tools category in AWS Marketplace.

If you build or buy on AWS, this is the line where agents cross from experiments to deployable building blocks. The ingredients teams once handcrafted are now standardized services you can plug together and operate with the same discipline used for web apps and data pipelines.

What AgentCore is, in plain language

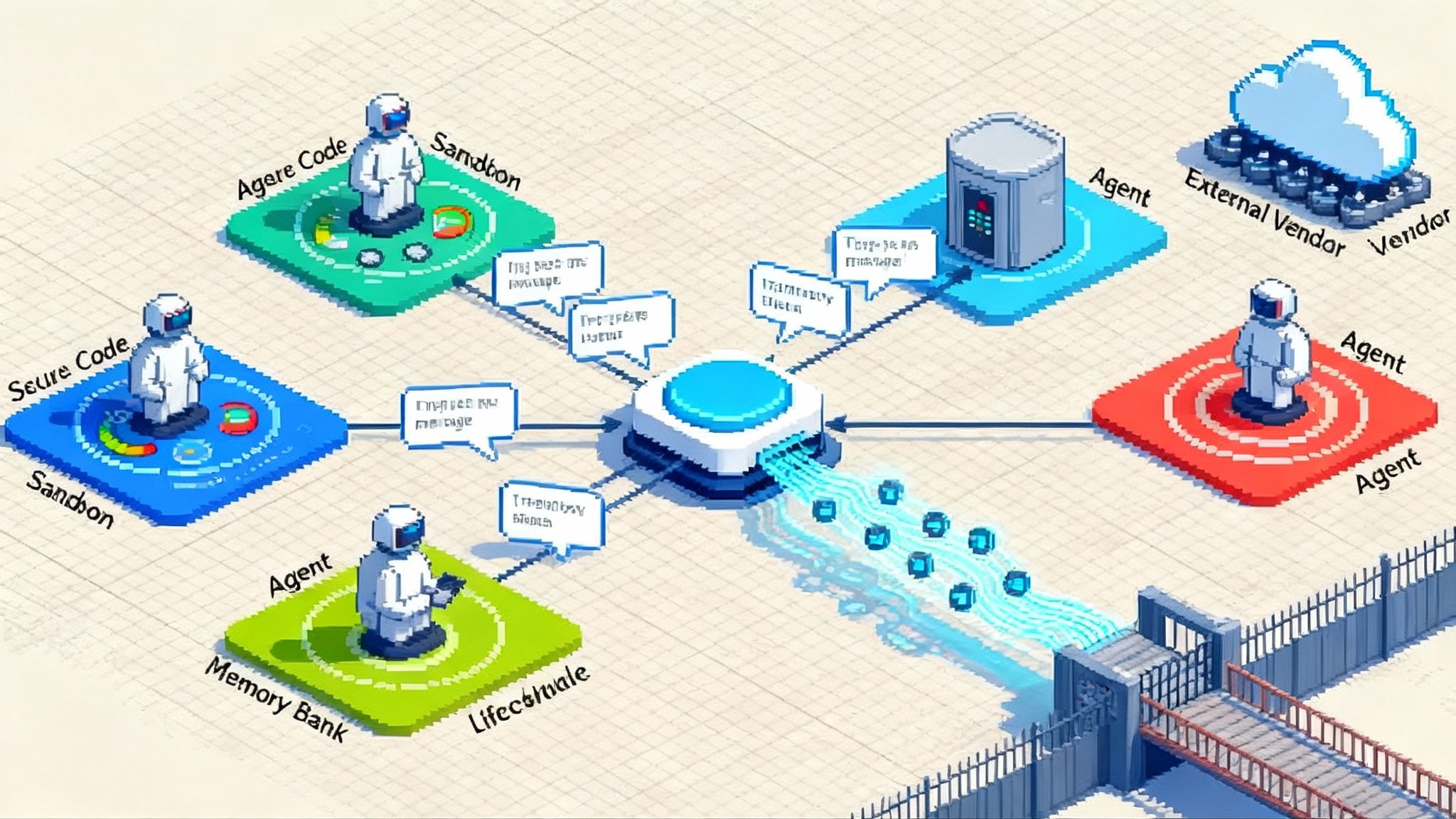

Think of AgentCore as a managed operating system for agents. It is not a monolith. It is a set of services you can adopt together or independently to make agents reliable and governable at scale. The building blocks are:

- AgentCore Runtime. A secure, low-latency runtime that provides isolated sessions and supports long-running jobs. Agents are not confined to short chats. They can execute extended workflows measured in hours without bespoke orchestration.

- AgentCore Memory. Built-in short-term and long-term memory, so an agent can retain context across interactions without teams standing up separate vector databases or session stores.

- AgentCore Gateway. A mechanism that converts your existing APIs and services into Model Context Protocol (MCP)-compatible tools with minimal code. Internal systems can be exposed to agents through a consistent protocol rather than one-off adapters.

- AgentCore Browser Tool. A secure cloud browser environment where agents can click, navigate, and interact with web apps under policy and logging.

- AgentCore Code Interpreter. A sandbox for executing code safely across multiple languages for data transforms, file manipulation, and calculations.

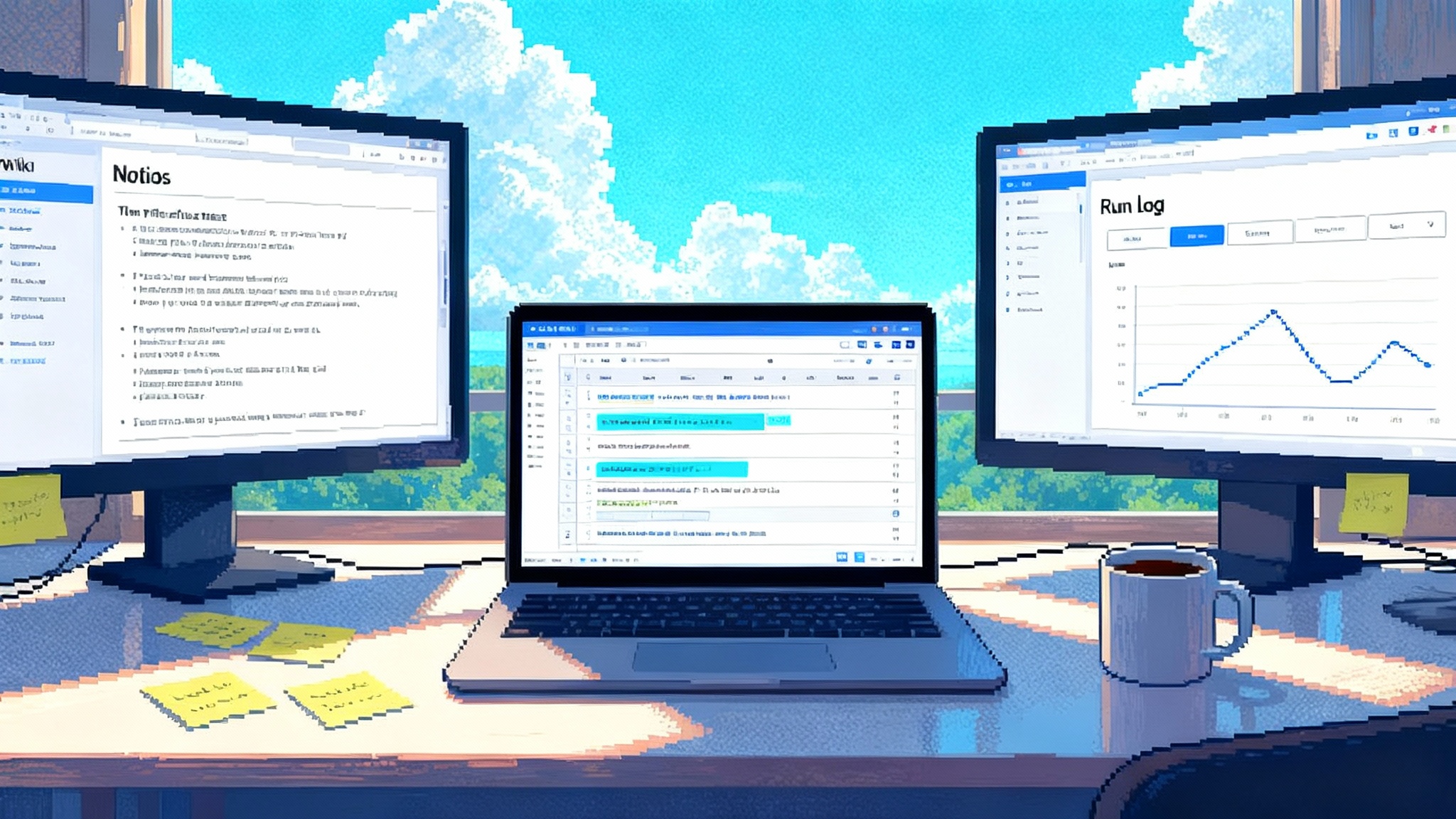

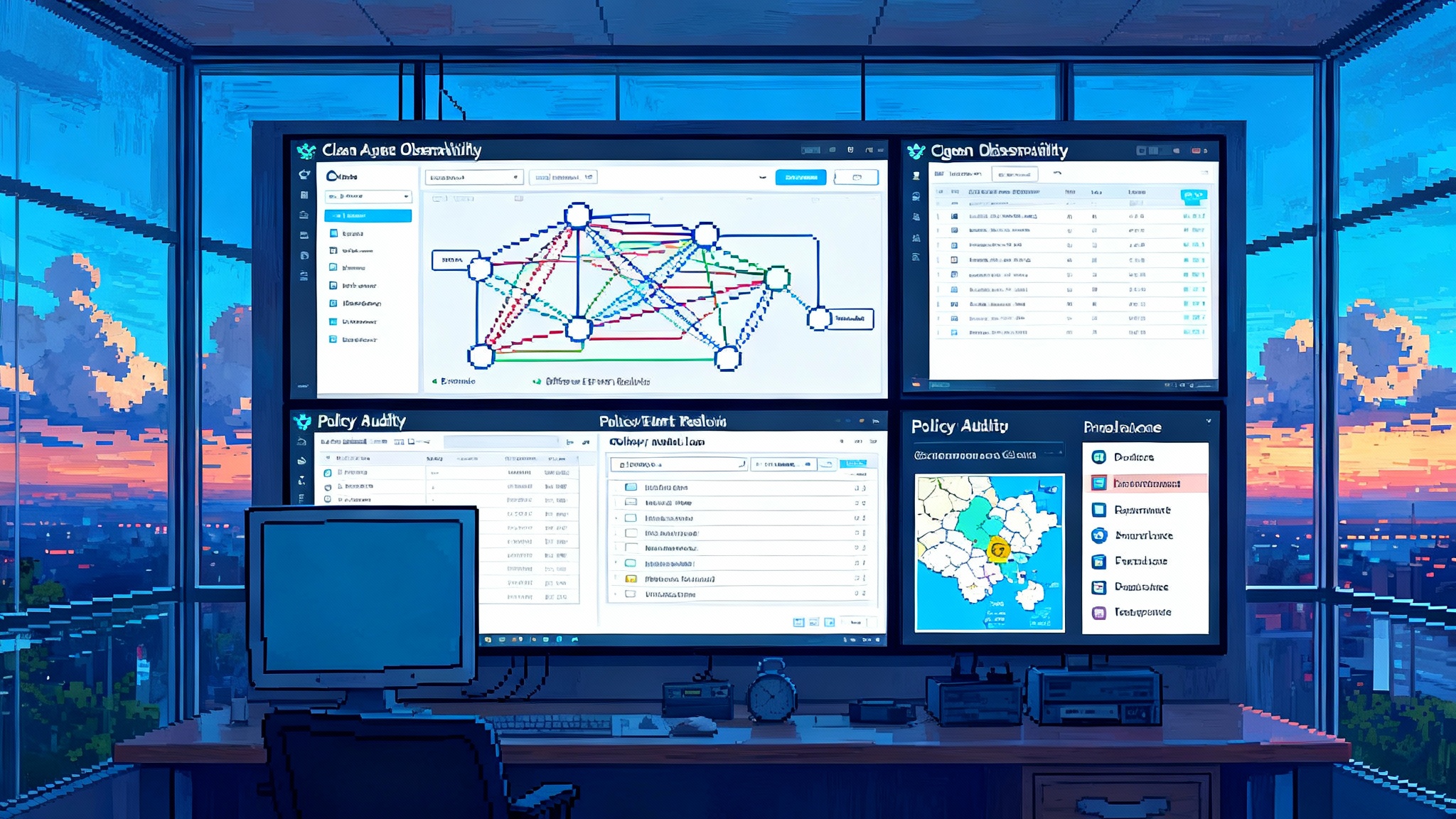

- AgentCore Observability. End-to-end traces, logs, and metrics for agent reasoning steps, tool calls, and outcomes, with dashboards powered by Amazon CloudWatch and compatibility with OpenTelemetry.

- AgentCore Identity. Integration with identity providers such as Amazon Cognito, Microsoft Entra ID, and Okta, so you can authenticate users to agents and agents can assume well-scoped roles when they access data or tools.

A crucial design choice is model and framework neutrality. AgentCore works with models inside or outside Bedrock, and with popular open source agent frameworks. That lowers the switching cost for teams that have already standardized on a particular modeling stack.

What is actually shipping right now

- AgentCore is in public preview. It is available in multiple regions, including US East (N. Virginia), US West (Oregon), Asia Pacific (Sydney), and Europe (Frankfurt). That footprint makes it feasible to run pilots close to users and data.

- The AI Agents and Tools category in AWS Marketplace is live. You will find third-party agents and tools from partners, with listings that call out support for Model Context Protocol and agent-to-agent interoperability. Marketplace is the front door for procurement and deployment, so buyers can move from browse to bill to run inside a familiar process.

- Preview pricing guidance is published. Teams can experiment during the preview window with clear cost expectations. That small detail enables structured evaluation rather than scattered proofs of concept.

The bottom line is a practical on-ramp. Start with Marketplace listings for focused tasks, wire them into AgentCore Runtime and Identity, then ship a pilot in weeks rather than quarters.

Why memory, identity, tools, and observability matter

Most agent projects fail for the same reasons. The agent can reason but cannot remember. It can call a tool but no one can see what it did. It can browse but the access model is insecure. Operations cannot support it because it is a bundle of ad hoc scripts. AgentCore addresses those friction points directly.

- Memory turns a chatbot into a colleague. Without durable memory, an agent repeats questions and loses track of work. With Memory, a support agent can recall the last three troubleshooting steps for a customer and avoid wasted time. A claims agent can remember which documents were verified and which are pending.

- Identity aligns agents with your control plane. Instead of API keys buried in code, agents assume roles, obey least-privilege policies, and produce auditable trails. That is the difference between a demo and a system your security team can approve.

- Tools are where work happens. Gateway makes internal systems addressable through a common protocol so you do not rewrite adapters for every agent or model. The Browser Tool covers the many systems that are still web front ends.

- Observability puts you back in charge. With traces and metrics, teams can answer the basics. How often did the agent loop? Which tool calls timed out? What was the success rate for a specific task? That enables service-level objectives (SLOs) for agents, not just for traditional services.
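To make those three questions concrete, here is a minimal sketch that computes a task success rate, timeouts per tool, and looping tasks from a flat list of trace events. The `TraceEvent` shape, the `summarize` function, and the `loop_threshold` rule are hypothetical illustrations, not the AgentCore Observability data model. In practice the events would come from whatever traces you export.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceEvent:
    """Hypothetical flattened trace event; not the AgentCore Observability schema."""
    task_id: str
    kind: str         # "tool_call" or "task_end"
    tool: str = ""
    status: str = ""  # tool calls: "ok" | "timeout" | "error"; task_end: "success" | "failure"


def summarize(events: list, loop_threshold: int = 3) -> dict:
    """Answer the basic questions: success rate, timeouts per tool, looping tasks."""
    calls_per_task = defaultdict(Counter)
    timeouts = Counter()
    outcomes = {}
    for e in events:
        if e.kind == "tool_call":
            calls_per_task[e.task_id][e.tool] += 1
            if e.status == "timeout":
                timeouts[e.tool] += 1
        elif e.kind == "task_end":
            outcomes[e.task_id] = e.status
    # Treat a task as looping if any single tool was called more than loop_threshold times.
    looping = sorted(
        task for task, counts in calls_per_task.items()
        if any(n > loop_threshold for n in counts.values())
    )
    successes = sum(1 for s in outcomes.values() if s == "success")
    return {
        "success_rate": successes / len(outcomes) if outcomes else 0.0,
        "timeouts_by_tool": dict(timeouts),
        "looping_tasks": looping,
    }


events = [
    TraceEvent("t1", "tool_call", "kb_search", "ok"),
    TraceEvent("t1", "task_end", status="success"),
    *[TraceEvent("t2", "tool_call", "billing_api", "timeout") for _ in range(4)],
    TraceEvent("t2", "task_end", status="failure"),
]
report = summarize(events)
print(report)
```

The loop rule here is deliberately crude. A real SLO would likely be defined per workflow, but even a simple count makes runaway retries visible.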

MCP support cuts integration cost

Model Context Protocol has emerged as a pragmatic way to describe and connect tools, data sources, and memory to agents. By supporting MCP in AgentCore and labeling Marketplace listings with MCP and agent-to-agent capabilities, AWS reduces the number of bespoke adapters teams must build and maintain.

Here is how that plays out in practice:

- You have a customer support workflow that touches a ticketing system, a knowledge base, and a billing service. In the old world, the team that wrote the agent also wrote custom code for three integrations. In the new world, Marketplace listings and Gateway produce MCP-compatible tool descriptions. The agent’s reasoner gets a consistent catalog of actions. You get fewer adapters to debug.

- You want two agents to collaborate. One triages incoming incidents. A second specializes in remediation. In the old world, they used different tool interfaces. With MCP, you enforce a shared protocol so handoffs are predictable and auditable.
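To show what a "consistent catalog of actions" looks like, the sketch below turns plain Python functions into MCP-style tool descriptors. Each descriptor carries a `name`, a `description`, and a JSON Schema `inputSchema`, which is the shape MCP uses to describe tools. The helper `to_mcp_tool` and the three service functions are hypothetical stand-ins for the ticketing, knowledge base, and billing systems in the support example above. This is not how AgentCore Gateway is implemented.

```python
import inspect
import json
from typing import Callable

# Minimal Python-to-JSON-Schema type mapping; real tooling handles many more cases.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def to_mcp_tool(fn: Callable) -> dict:
    """Describe a plain function as an MCP-style tool (name, description, inputSchema)."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are mandatory
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical internal services; bodies are placeholders.
def create_ticket(customer_id: str, summary: str, priority: int = 3) -> str:
    """Open a ticket in the ticketing system."""
    return f"TICKET-{customer_id}"


def search_kb(query: str, limit: int = 5) -> list:
    """Search the knowledge base for articles."""
    return []


def get_balance(customer_id: str) -> float:
    """Look up the customer's current balance in the billing service."""
    return 0.0


catalog = [to_mcp_tool(f) for f in (create_ticket, search_kb, get_balance)]
print(json.dumps(catalog[0], indent=2))
```

Because every tool arrives in the same shape, both the triage agent and the remediation agent can read one catalog instead of maintaining their own adapters.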

For large organizations, fewer adapters translate into fewer vendor reviews, shorter security questionnaires, and faster procurement. For startups, it means a small team ships a deeper product surface without drowning in glue code. If you want a deeper dive into protocol-centric designs, see how Cloudflare positions remote MCP at the edge.

What this unlocks for startups

- A path to distribution. Listing an agent or tool in AWS Marketplace places your product alongside core enterprise software. You benefit from AWS procurement, billing, and standardized contracts. For many buyers, that is the difference between an interesting trial and a signed order.

- A composable platform to specialize. Bring your own agent framework and model, rely on AgentCore for identity, memory, browsing, code execution, and observability, then focus on your secret sauce. A startup that automates vendor risk reviews can ship a specialized reasoning stack while using AgentCore to browse vendor portals, store long-running context, and enforce entitlements.

- Cleaner unit economics. Long-running sessions, code execution, and browsing can become a cost trap when infrastructure is hand-assembled. AgentCore controls and metrics help teams measure and tune cost at the step and tool level. You can enforce caps, timeouts, and early-exit rules.

Action for founders: design your product around MCP from the start. Expose capabilities as MCP tools and ensure your agent consumes MCP catalogs. That gives you instant compatibility with Gateway and improves fit with buyers who will filter by protocol support. For more on operationalizing the agent lifecycle, compare this launch with how Agent Bricks automates AgentOps.

What this unlocks for enterprises

- A standard operating model. Treat agents like microservices. Use AgentCore Identity to assign roles per agent. Use Observability to define and monitor service level objectives. Use Gateway to register tools the same way you register APIs. Platform teams can manage agents centrally rather than each business unit reinventing the stack.

- Faster time to value. A claims processing agent can be assembled by combining a Marketplace document understanding tool, a policy database exposed through Gateway, and the Browser Tool to work with a third-party adjudication portal. Memory enables multi-step cases that span days. Identity ensures minimum necessary access at each step.

- Audit-ready governance. With OpenTelemetry-compatible traces, you can show regulators and internal audit what the agent did, when, and why. You can reproduce a decision path and inspect every tool call and parameter.
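Reproducing a decision path depends on capturing every tool call with its parameters and outcome at the moment it happens. Below is a minimal, standard-library sketch of that idea: a hypothetical `audited` decorator appends one record per call to an in-memory `AUDIT_TRAIL`. In a real deployment those records would be exported as trace spans (for example through OpenTelemetry), not kept in a Python list. The claims tools are illustrative placeholders.

```python
import functools
import time
from typing import Any, Callable

AUDIT_TRAIL: list = []  # stand-in for an exported trace store


def audited(step: str) -> Callable:
    """Record every call to the wrapped tool, including parameters and outcome."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            record = {"step": step, "tool": fn.__name__, "args": args,
                      "kwargs": kwargs, "started_at": time.time()}
            try:
                result = fn(*args, **kwargs)
                record.update(outcome="ok", result=result)
                return result
            except Exception as exc:
                record.update(outcome="error", error=repr(exc))
                raise
            finally:
                AUDIT_TRAIL.append(record)  # logged on success and on failure
        return wrapper
    return decorator


@audited(step="verify_policy")
def lookup_policy(policy_id: str) -> dict:
    return {"policy_id": policy_id, "active": True}


@audited(step="adjudicate")
def submit_claim(policy_id: str, amount: float) -> str:
    if amount <= 0:
        raise ValueError("amount must be positive")
    return "SUBMITTED"


lookup_policy("P-100")
submit_claim("P-100", amount=250.0)

# Replay the decision path, step by step, from the trail.
for r in AUDIT_TRAIL:
    print(r["step"], r["tool"], r["args"], r["kwargs"], r["outcome"])
```

Recording in `finally` is the design choice that matters: a failed call leaves a record too, which is exactly what an auditor will ask about.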

Action for platform leaders: establish a central catalog of MCP tools for your enterprise. Every new internal data source or action should be exposed through Gateway with clear ownership, SLOs, and least-privilege policies. Make agents consumers of that catalog rather than bespoke integrators. To see how the browser becomes a first-class surface for agents, read our take on how browser-native computer use has arrived.
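One way to make "clear ownership, SLOs, and least privilege" enforceable is to refuse catalog registrations that lack them. The sketch below is hypothetical throughout: `CatalogEntry`, `ToolCatalog`, the `slo_p95_ms` field, and the role names are illustrations and do not correspond to any AWS API. What it shows is the governance rule, not the storage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical governance metadata attached to each tool in the enterprise catalog."""
    tool_name: str
    owner: str                # accountable team
    slo_p95_ms: int           # latency objective for the tool
    allowed_roles: frozenset  # agent roles permitted to invoke it


class ToolCatalog:
    def __init__(self) -> None:
        self._entries: dict = {}

    def register(self, entry: CatalogEntry) -> None:
        # Reject tools without an owner, an SLO, or an explicit allowlist.
        if not entry.owner:
            raise ValueError(f"{entry.tool_name}: owner is required")
        if entry.slo_p95_ms <= 0:
            raise ValueError(f"{entry.tool_name}: SLO must be positive")
        if not entry.allowed_roles:
            raise ValueError(f"{entry.tool_name}: at least one allowed role is required")
        self._entries[entry.tool_name] = entry

    def tools_for(self, role: str) -> list:
        """The tools a given agent role may consume from the catalog."""
        return sorted(n for n, e in self._entries.items() if role in e.allowed_roles)


catalog = ToolCatalog()
catalog.register(CatalogEntry("policy_lookup", "claims-platform", 300, frozenset({"claims-agent"})))
catalog.register(CatalogEntry("vendor_update", "procurement", 800, frozenset({"procurement-agent"})))
print(catalog.tools_for("claims-agent"))  # ['policy_lookup']
```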

A 30 day evaluation plan that works

Use a cadence that validates the specific promises of AgentCore while keeping the blast radius small. The dates below are relative. Pick a start date and hold the line.

Week 1: choose a single, bounded workflow

- Pick something with measurable outcomes such as refund approval, new hire access setup, or monthly compliance evidence collection.

- Define success in numbers. Examples include first pass resolution rate, median time to complete, error rate, and cost per task.
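Writing the scorecard down as code before the pilot starts keeps everyone honest about the definitions. This minimal sketch computes the four example metrics from a list of task records. The `TaskResult` fields and the `pilot_scorecard` function are hypothetical, and the sample values are invented purely to exercise the function. They are not benchmark results.

```python
import statistics
from dataclasses import dataclass


@dataclass
class TaskResult:
    """One completed run of the pilot workflow (hypothetical record shape)."""
    resolved_first_pass: bool
    errored: bool
    duration_s: float
    cost_usd: float


def pilot_scorecard(results: list) -> dict:
    n = len(results)
    if n == 0:
        raise ValueError("no results to score")
    return {
        "first_pass_resolution_rate": sum(r.resolved_first_pass for r in results) / n,
        "median_time_s": statistics.median(r.duration_s for r in results),
        "error_rate": sum(r.errored for r in results) / n,
        "cost_per_task_usd": sum(r.cost_usd for r in results) / n,
    }


# Invented sample data, only to show the calculation.
results = [
    TaskResult(True, False, 42.0, 0.12),
    TaskResult(False, False, 95.0, 0.30),
    TaskResult(True, True, 61.0, 0.18),
    TaskResult(True, False, 50.0, 0.10),
]
score = pilot_scorecard(results)
print(score)
```

The median is used for completion time because a few slow, stuck tasks would otherwise dominate an average.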

Week 2: assemble the minimum viable agent

- Register three to five tools through Gateway, and connect your identity provider through AgentCore Identity.

- Wire up Memory with an explicit retention policy. Decide what the agent should remember, for how long, and why.

- Add Browser or Code Interpreter only if the workflow needs it. Do not start with every tool.
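An explicit retention policy is easiest to review when it is data rather than prose. The sketch below keeps a per-category time-to-live table and refuses to store categories the policy does not list. `RETENTION_SECONDS`, `RetentionMemory`, and the category names are hypothetical. This is an in-process stand-in that illustrates the policy, not the AgentCore Memory API.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical retention policy: what the agent may remember, and for how long.
RETENTION_SECONDS = {
    "troubleshooting_step": 7 * 24 * 3600,  # one week, enough for follow-up contacts
    "customer_preference": 90 * 24 * 3600,  # roughly one quarter
    # Categories not listed here (for example raw payment data) are never stored.
}


@dataclass
class MemoryItem:
    category: str
    content: str
    stored_at: float


@dataclass
class RetentionMemory:
    """In-process stand-in for a memory store; this is not the AgentCore Memory API."""
    items: list = field(default_factory=list)

    def remember(self, category: str, content: str, now: Optional[float] = None) -> bool:
        if category not in RETENTION_SECONDS:
            return False  # outside the policy: refuse to store it
        stamp = time.time() if now is None else now
        self.items.append(MemoryItem(category, content, stamp))
        return True

    def recall(self, category: str, now: Optional[float] = None) -> list:
        now = time.time() if now is None else now
        # Purge expired items first so they can never be returned.
        self.items = [
            i for i in self.items if now - i.stored_at < RETENTION_SECONDS[i.category]
        ]
        return [i.content for i in self.items if i.category == category]


mem = RetentionMemory()
t0 = 1_000_000.0
mem.remember("troubleshooting_step", "Restarted the customer's router", now=t0)
stored_card = mem.remember("payment_card", "raw card number", now=t0)  # rejected
recent = mem.recall("troubleshooting_step", now=t0 + 3600)
later = mem.recall("troubleshooting_step", now=t0 + 8 * 24 * 3600)
print(stored_card, recent, later)
```

Passing `now` explicitly makes the policy testable without waiting a week.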

Week 3: instrument before scale

- Turn on Observability and build a simple dashboard. Track task success rate, average tool calls per task, loop count, and timeout count.

- Run failure drills. Intentionally break one tool and confirm the agent fails safe and emits a clear signal. Practice rollback.
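A failure drill is easiest to repeat when it is a test. The sketch below wraps each tool call so that an exception becomes a logged signal and an explicit escalation rather than a half-finished action. It then injects a broken tool into a refund workflow, one of the Week 1 examples. All function names are hypothetical, and the "escalate_to_human" action is an assumed convention.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("agent")


def call_tool_safely(name: str, fn: Callable[..., dict], **kwargs) -> dict:
    """Fail safe: a broken tool yields a clear signal instead of a partial action."""
    try:
        return {"status": "ok", "result": fn(**kwargs)}
    except Exception as exc:
        log.warning("tool_failed tool=%s error=%r", name, exc)  # the clear signal
        return {"status": "failed", "tool": name, "action": "escalate_to_human"}


def refund_workflow(lookup_order: Callable[..., dict], order_id: str) -> dict:
    order = call_tool_safely("lookup_order", lookup_order, order_id=order_id)
    if order["status"] != "ok":
        return order  # stop here: never issue a refund on missing data
    return {"status": "ok", "refund_for": order["result"]["order_id"]}


# Drill: inject a broken tool and confirm the workflow fails safe.
def broken_lookup(order_id: str) -> dict:
    raise TimeoutError("order service unreachable")


def healthy_lookup(order_id: str) -> dict:
    return {"order_id": order_id}


drill = refund_workflow(broken_lookup, "A-1")
normal = refund_workflow(healthy_lookup, "A-2")
print(drill)
print(normal)
```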

Week 4: pilot with real users

- Route a small percentage of traffic through the agent, with a human in the loop. Collect user feedback alongside metrics.

- Produce a one-page report that covers performance, cost, and governance posture. Decide to iterate, expand, or pause.
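Routing "a small percentage of traffic" is more useful when it is deterministic, because each user then stays on the same path and feedback lines up with metrics. This sketch hashes a user ID into one of 10,000 buckets. The function names, the salt string, and the 5% figure are illustrative assumptions, not AgentCore features.

```python
import hashlib


def routed_to_agent(user_id: str, percent: float, salt: str = "agent-pilot-v1") -> bool:
    """Deterministically send roughly `percent`% of users to the agent path."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < percent * 100


def handle(user_id: str, request: str) -> str:
    if routed_to_agent(user_id, percent=5):
        # The agent drafts; a human approves before anything is executed.
        return f"agent_draft_pending_review:{request}"
    return f"existing_process:{request}"


users = [f"user-{i}" for i in range(10_000)]
share = sum(routed_to_agent(u, 5) for u in users) / len(users)
print(f"{share:.1%} of users routed to the agent")
```

Changing the salt reshuffles who is in the pilot cohort without touching the percentage.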

Risks to manage in the next quarter

New cloud primitives expand your power and your responsibilities. Three risks deserve attention.

- Governance drift

  - Risk. Agents accumulate tools and permissions over time. Without clear boundaries, they may perform actions you did not intend, or act without leaving a record.

  - What to do. Treat agents as identities and workloads. For each agent, define a narrow role that specifies which tools it can invoke and at what scope. Use the Identity service to enforce it. Require human-in-the-loop approval for material actions such as vendor changes or financial transfers. Log every tool call and ship traces to systems your audit and risk teams already use.

- Cost blowups

  - Risk. Long-running sessions, browsing loops, and code execution can turn a small pilot into a large bill. The more competent the agent, the easier it is to miss runaway behavior, because the agent keeps trying to succeed.

  - What to do. Put budgets and step limits in code. Cap the number of tool calls per task. Enforce conservative timeouts for Browser and Code Interpreter sessions. Use Observability to flag loops and retries at the workflow level, not just the model level. During the preview period, take advantage of trial pricing to run controlled load tests and capture cost curves before you scale.

- Lock-in by convenience

  - Risk. Deep integration with Identity, Observability, and Memory makes life easier now and migration harder later. Even with model portability, the operational footprint becomes sticky.

  - What to do. Adopt portability patterns from day one. Express tools through MCP. Export traces through OpenTelemetry to a destination you control. Keep memory objects in formats that can be rehydrated elsewhere. Isolate domain logic in a layer that is not tied to runtime-specific APIs. Document a second home, even if you never use it.
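"Put budgets and step limits in code" can be as small as a per-task object that fails closed. The sketch below caps tool calls, accumulated cost, and wall-clock time. It then runs a simulated agent that keeps retrying until it either succeeds or the budget stops it. `TaskBudget`, `BudgetExceeded`, and the default limits are hypothetical values for illustration, not AgentCore settings.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


class BudgetExceeded(Exception):
    """Raised when a task exceeds its step, cost, or wall-clock budget."""


@dataclass
class TaskBudget:
    max_tool_calls: int = 20
    max_cost_usd: float = 0.50
    max_seconds: float = 300.0
    tool_calls: int = 0
    cost_usd: float = 0.0
    started: float = field(default_factory=time.monotonic)

    def charge(self, cost_usd: float) -> None:
        """Record one tool call and fail closed as soon as any limit is crossed."""
        self.tool_calls += 1
        self.cost_usd += cost_usd
        if self.tool_calls > self.max_tool_calls:
            raise BudgetExceeded(f"tool call cap {self.max_tool_calls} exceeded")
        if self.cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost cap ${self.max_cost_usd:.2f} exceeded")
        if time.monotonic() - self.started > self.max_seconds:
            raise BudgetExceeded(f"time cap {self.max_seconds}s exceeded")


def run_agent_loop(budget: TaskBudget, step_cost: float, succeeds_at: Optional[int]) -> str:
    """Simulated agent that keeps trying until it succeeds or the budget stops it."""
    step = 0
    try:
        while True:
            step += 1
            budget.charge(step_cost)
            if succeeds_at is not None and step >= succeeds_at:
                return f"success after {step} calls"
    except BudgetExceeded as exc:
        return f"stopped: {exc}"


ok = run_agent_loop(TaskBudget(), step_cost=0.01, succeeds_at=3)
runaway = run_agent_loop(TaskBudget(), step_cost=0.01, succeeds_at=None)
pricey = run_agent_loop(TaskBudget(), step_cost=0.10, succeeds_at=None)
print(ok)       # success after 3 calls
print(runaway)  # stopped: tool call cap 20 exceeded
print(pricey)   # stopped: cost cap $0.50 exceeded
```

The never-succeeding case is the one to watch: without the cap, a "competent" agent would simply keep trying.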

Competitive context without the hype

Other clouds and platforms are shipping agent stores and runtimes. The difference that matters is not the slogan. It is whether the runtime provides identity, memory, tools, and observability as first class services, and whether those services match how your organization already runs software. On that score, AWS has assembled a coherent set of primitives that align with enterprise operations.

It also matters that AgentCore does not force a single modeling choice. The ability to use models in or outside Bedrock and to keep your preferred agent framework reduces switching costs. That choice benefits buyers and encourages an ecosystem where vendors compete on outcomes, not on a captive stack.

Design patterns that pay off

- Least-privilege agents. Give every agent a unique identity that maps to a narrow role. Scope tool permissions by task. Rotate credentials on a schedule. Record every elevation request and decision.

- Budget and quota guards. Define budgets per workflow. Enforce per-step quotas for tool calls, browser minutes, and Code Interpreter runtime. Fail closed on overruns with clear error semantics.

- Observability first. Treat traces as part of the product. Include step names, tool parameters, and outcome tags. Route telemetry to a system your SRE and audit teams already know.

- MCP-first interfaces. Wrap internal APIs with Gateway and MCP descriptions. Version tool contracts. Publish a centralized catalog. Measure tool adoption and deprecate early.
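The least-privilege pattern reduces to two checks: is this tool granted at this scope, and was every request for more access recorded along with its decision? The sketch below models a per-agent role as a map from tool name to allowed scopes, with an append-only elevation log. `AgentRole`, the "read"/"write" scopes, and the vendor-risk agent are hypothetical. In practice, enforcement would live in your identity layer, not in application code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentRole:
    """Hypothetical per-agent role: which tools, at which scope ("read" or "write")."""
    agent_id: str
    grants: dict  # tool name -> set of allowed scopes
    elevation_log: list = field(default_factory=list)

    def authorize(self, tool: str, scope: str) -> bool:
        # Deny by default: unknown tools and ungranted scopes are refused.
        return scope in self.grants.get(tool, set())

    def request_elevation(self, tool: str, scope: str, reason: str, approved: bool) -> None:
        """Record every elevation request and decision, whether approved or not."""
        self.elevation_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "agent": self.agent_id, "tool": tool, "scope": scope,
            "reason": reason, "approved": approved,
        })
        if approved:
            self.grants.setdefault(tool, set()).add(scope)


risk_agent = AgentRole("vendor-risk-agent", grants={"vendor_portal": {"read"}})
print(risk_agent.authorize("vendor_portal", "read"))   # True
print(risk_agent.authorize("vendor_portal", "write"))  # False
risk_agent.request_elevation("vendor_portal", "write", "update contact", approved=False)
print(risk_agent.authorize("vendor_portal", "write"))  # False
```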

A note on the human loop

AgentCore makes it straightforward to insert human checkpoints where the risk profile demands it. You can require confirmations for high-impact actions such as vendor changes, entitlement grants, or financial moves. You can also design nuanced rules. For example, allow fully automated execution under a threshold but escalate to human review above it. The point is not to slow down every task. It is to focus human attention where it changes outcomes.
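The threshold rule can be sketched in a few lines. In this version, some action types always go to a person, and monetary actions auto-execute only below a limit. The limit, the action kinds, and the `route` function are hypothetical policy choices. Set the real values with your risk owners.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical policy values.
AUTO_APPROVE_LIMIT = Decimal("500.00")
ALWAYS_REVIEW = {"vendor_change", "entitlement_grant"}


@dataclass(frozen=True)
class ProposedAction:
    kind: str                     # e.g. "refund", "vendor_change"
    amount: Decimal = Decimal("0")


def route(action: ProposedAction) -> str:
    """Return "auto" to execute directly or "human_review" to pause for approval."""
    if action.kind in ALWAYS_REVIEW:
        return "human_review"
    if action.amount > AUTO_APPROVE_LIMIT:
        return "human_review"
    return "auto"


small = route(ProposedAction("refund", Decimal("120.00")))
large = route(ProposedAction("refund", Decimal("2400.00")))
vendor = route(ProposedAction("vendor_change"))
print(small, large, vendor)  # auto human_review human_review
```

`Decimal` is used for amounts so the comparison against the limit is exact, which matters when the threshold is a policy line an auditor may check.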

How Marketplace shifts go-to-market

For vendors and internal platform teams, the new Marketplace category is more than a shelf. It packages agents and tools into something purchasing teams recognize. That means standardized terms, consolidated billing, and faster onboarding. Listings surface protocol support such as MCP and agent-to-agent interoperability so buyers can avoid dead ends.

If you are a vendor, the opportunity is to productize workflows, not just models. Ship an agent that solves a business problem end to end. Declare the tools it needs in MCP and provide controls for governance and cost. If you are a buyer, assemble a portfolio of agents and tools you can evaluate side by side, price on consistent units, and deploy through AgentCore with minimal engineering lift.

Near term outlook

Expect a fast iteration cycle through the rest of 2025. Marketplace listings will multiply. MCP will become the default way tools are described. Identity and observability features will deepen because those are the first asks from advanced buyers. Early adopters will push for tighter controls such as tool-specific rate limits, policy simulation for agents, and runbooks that restart long-running sessions after failures.

If you are still watching from the sidelines, the practical move is to run a focused pilot now. The preview period and Marketplace make that affordable and procedural. The goal is not to adopt agents everywhere. The goal is to establish the operating pattern so that when the business needs an agent for a real workflow, you already know how to ship it safely.

Conclusion

AgentCore and the Agents Marketplace do not make agents magical. They make agents manageable. By baking in memory, identity, tools, and observability, AWS has turned agent projects from artisanal builds into cloud software you can deploy with confidence. The technical story is strong, but the deeper impact is organizational. Platform teams can finally offer agents as a service to the rest of the company. Startups can package outcomes instead of demos. Buyers can compare options with shared protocols and shared controls. The advantage now goes to teams that operationalize while the stack is still settling but solid enough to run. The primitives have arrived. The rest is execution.