APIs Go Agent First: Theneo's Cursor for Your API

Theneo launches an AI-native API editor that treats agents as the primary user. With AI-readiness scoring, one-click MCP export, and BYOK privacy, teams can ship agent-usable endpoints and gate them in CI.

Breaking: APIs are flipping to agent first

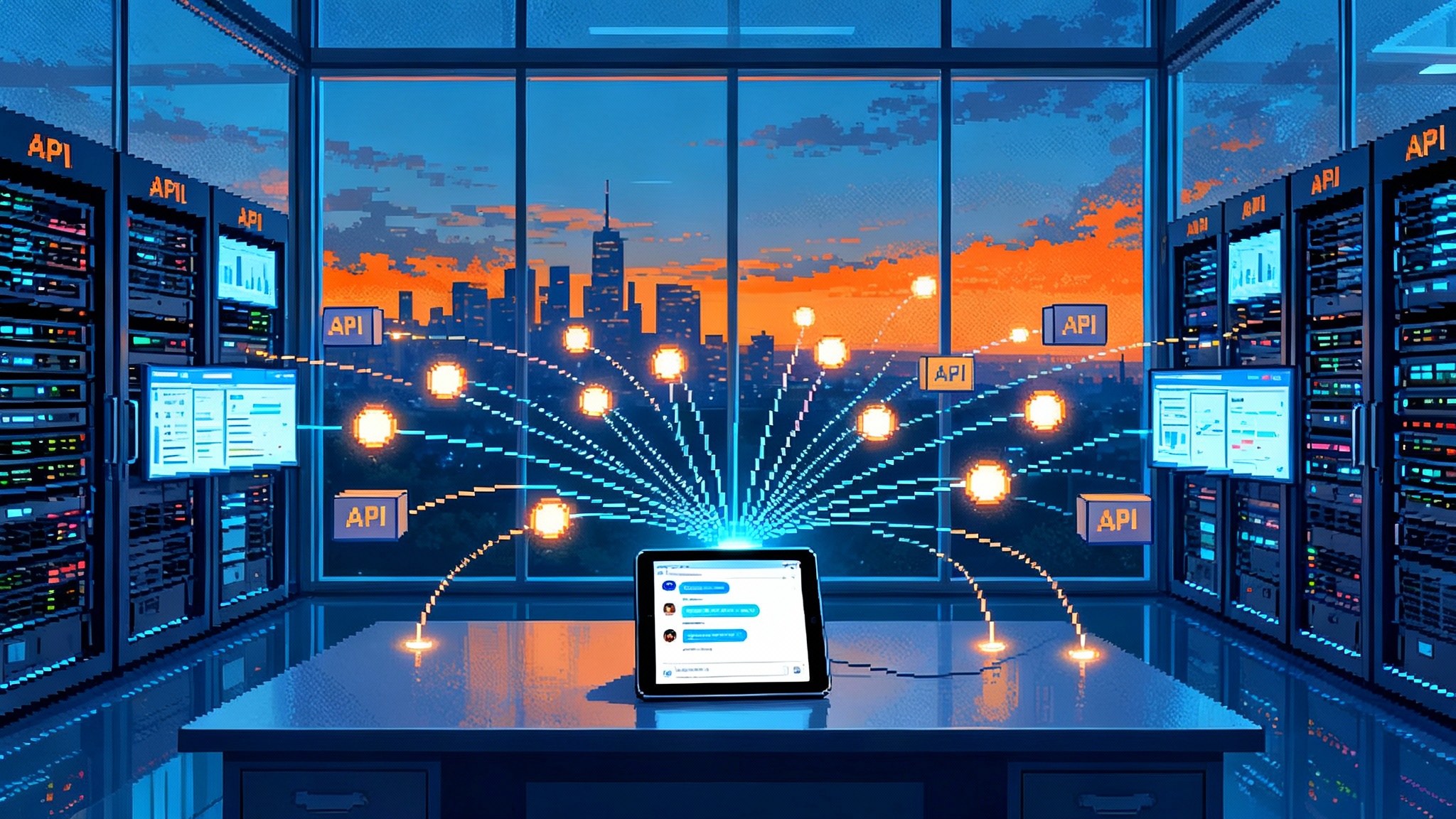

A quiet but meaningful shift just landed in the developer world. Theneo introduced Cursor for your API, a compact AI-native editor that generates, lints, tests, and exports an agent-usable handoff in one flow. The pitch is simple and bold: make API endpoints ready for large language model agents first, then help humans understand what those agents will do.

If you want a timestamp for this inflection point, look at the Cursor for your API launch. The editor consolidates four essential jobs. It helps you produce or import a clean OpenAPI description. It applies AI edits with human-reviewable diffs. It runs linting and request tests so you avoid brittle contracts. Then it exports a Model Context Protocol profile so an agent can discover capabilities and call your API correctly.

This is not just another developer tool. It is a signal that documentation written only for humans is giving way to machine-first contracts that must be executable, testable, and reliable under automation.

Why a machine reader is now the primary reader

For a decade, API tooling encouraged us to optimize for human readers. We wrote prose for developers, pasted examples, and shared Swagger UIs or Postman collections. Theneo’s move flips the order. The primary reader is a machine that will invoke your endpoints. The secondary reader is a human who wants to understand the behavior that machine will execute.

That mental model changes everything. It changes how you lint. It changes how you test. It changes what you consider a successful documentation release. It also lines up with a broader movement across agents and orchestration. If you have been following how agentic systems are deployed in the wild, you have seen this pattern in other domains, from Meta Agent orchestrating chat-built teams to infrastructure such as Agentic Postgres unlocking safe parallelism. API docs are the next surface to shift.

What makes Cursor for your API a breakthrough

The practical upgrades are direct and actionable:

- Generate or import OpenAPI, then ask the editor to propose edits. You accept changes through diffs as if you were reviewing a pull request.

- Lint with rules tuned for both human comprehension and agent consumption.

- Test requests in the same view so examples are not hypothetical. Execution catches drift that prose would miss.

- Export a single, agent-ready handoff using the Model Context Protocol.

- Protect privacy with bring your own model and key, so tokens and prompts stay under your control.

The best way to think about it is moving from a glossy brochure to a pilot’s checklist. The brochure looks nice. The checklist keeps you alive when the weather turns.

Agents first, humans second

Designing for agents first changes three core behaviors.

- Optimize for unambiguous contracts. Agents cannot infer intent from vibes. They need type-safe schemas, consistent status codes, and clear error objects. Linting rules must surface places where a human would cope but an agent would fail. A classic trap is returning HTTP 200 with a string error message. A person might scan the body and adapt. An agent will parse the string and continue in the wrong direction.

- Expose capability in a format an agent can register and reason about. That is where MCP comes in. It acts like a catalog of tools, prompts, and context endpoints that a client can discover without bespoke glue code.

- Validate behavior in fully automated ways. Humans skim examples. Agents execute them. Your tests should behave like a lightweight agent harness that proves an endpoint matches its contract, including pagination, rate limiting, and idempotency paths.
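To make the HTTP 200 trap concrete, here is a minimal sketch of an agent-side client that branches on a stable error code. The error shape (`{"error": {"code", "hint"}}`), the `RETRYABLE_CODES` set, and the function names are hypothetical illustrations, not Theneo's format or any standard.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical set of error codes an agent may safely retry.
RETRYABLE_CODES = {"rate_limited", "upstream_timeout"}


@dataclass
class ApiError:
    code: str  # stable, machine-readable key the agent branches on
    hint: str  # human-readable remediation text


def parse_error(status: int, body: str) -> Optional[ApiError]:
    """Return an ApiError for a structured 4xx/5xx response, else None.

    Assumes error bodies look like {"error": {"code": "...", "hint": "..."}}.
    Any status below 400 is treated as success, which is exactly why a 200
    that carries a prose error message misleads an agent.
    """
    if status < 400:
        return None
    payload = json.loads(body)["error"]
    return ApiError(code=payload["code"], hint=payload["hint"])


def next_action(status: int, body: str) -> str:
    err = parse_error(status, body)
    if err is None:
        return "continue"
    return "retry" if err.code in RETRYABLE_CODES else "stop"


# Anti-pattern: HTTP 200 with a string error. The agent sees success.
print(next_action(200, '"Error: invoice not found"'))  # continue
# Fix: a real status code plus a structured error object.
print(next_action(404, '{"error": {"code": "invoice_not_found", "hint": "Check the id."}}'))  # stop
print(next_action(429, '{"error": {"code": "rate_limited", "hint": "Back off."}}'))  # retry
```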

From Swagger and Postman to CI-gated, agent-usable endpoints

Swagger and Postman defined a generation of API workflow. Author the spec. Pretty up the docs. Hand a collection to a human who will click around. The agent era needs a different path. Contracts must be checked where real code ships and blocked when unsafe changes appear.

That is where continuous integration earns a bigger job. In an agents-first world, CI does more than compile and run unit tests. It runs an agent-facing linter, verifies the OpenAPI contract against actual behavior, and fails the build when an agent would misunderstand the contract. If your docs say a field is required but the server accepts the request without it, that is a contract footgun. The build fails, you fix it, then you ship.
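A minimal sketch of that required-field check, assuming a hypothetical in-process handler `create_invoice` and a request schema written in OpenAPI's `required` style. The check drops each required field in turn and reports any field whose absence the server still accepts. In a real pipeline the handler call would be an HTTP request against a test deployment.

```python
from typing import Callable, Dict, List, Tuple

# Request schema for POST /invoices, shaped like an OpenAPI schema object.
INVOICE_SCHEMA = {
    "type": "object",
    "required": ["customer_id", "currency"],
    "properties": {
        "customer_id": {"type": "string"},
        "currency": {"type": "string"},
        "memo": {"type": "string"},
    },
}

Handler = Callable[[Dict], Tuple[int, Dict]]


def create_invoice(body: Dict) -> Tuple[int, Dict]:
    """Hypothetical handler with a drift bug: it never validates `currency`."""
    if "customer_id" not in body:
        return 400, {"error": {"code": "missing_field", "hint": "customer_id"}}
    return 201, {"id": "inv_1", "status": "draft"}


def required_field_violations(schema: Dict, handler: Handler, valid_body: Dict) -> List[str]:
    """Return required fields the handler accepts even when they are missing."""
    violations = []
    for field in schema.get("required", []):
        body = {k: v for k, v in valid_body.items() if k != field}
        status, _ = handler(body)
        if status < 400:  # accepted a request the contract forbids
            violations.append(field)
    return violations


problems = required_field_violations(
    INVOICE_SCHEMA, create_invoice, {"customer_id": "cus_1", "currency": "EUR"}
)
print(problems)  # ['currency']
```

In CI, a non-empty list would become a failing exit code (for example via `sys.exit(1)`), which is what turns the contract into a release gate.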

The result is a new kind of release gate. Docs are not decorative pages. They are machine contracts that must pass before deployment.

AI-readiness scoring, explained with a concrete example

Cursor for your API surfaces Insights across design, developer experience, security, and AI readiness. The AI readiness question is blunt: if a competent agent had this description and nothing else, could it call your API correctly and recover from predictable errors?

Consider a simple invoices surface:

- POST /invoices to create a draft invoice

- GET /invoices/{id} to fetch details

- POST /invoices/{id}/finalize to finalize

A human-oriented spec might show one request and response for POST and GET and stop there. An agent-oriented spec needs more:

- Clear idempotency tokens for the create call so the agent can safely retry after a network failure.

- A standard error object that includes a machine-readable code and a human hint. Agents key off the code. Humans read the hint.

- Pagination fields that are consistent across list endpoints. If one uses next_cursor and another uses offset, an agent will stumble.

- Rate-limit headers with documented retry rules, including jitter, so agents avoid tripping global throttles.

An AI-readiness score can weight each of these. If your list endpoints use two different pagination patterns, you lose points. If your error schema is missing a code field, you lose points. If you provide one example per common failure, you gain points. The score is not a vanity metric. It directs fixes that move your API from human-tolerable to agent-robust.
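To show how such weighting might work, here is a toy scorer over a parsed OpenAPI document (a plain dict). The three checks mirror the paragraph above. The weights, the `cursor`/`offset` heuristic, and the assumption that errors share a `components/schemas/Error` schema are illustrative choices, not Theneo's scoring model.

```python
from typing import Dict, List

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}
# Illustrative weights; a real scorer would calibrate these.
WEIGHTS = {"consistent_pagination": 40, "error_has_code": 40, "failure_examples": 20}


def pagination_style(params: List[Dict]) -> str:
    """Classify a list endpoint by its query parameters (toy heuristic)."""
    names = {p["name"] for p in params}
    if "cursor" in names:
        return "cursor"
    if "offset" in names:
        return "offset"
    return "none"


def has_failure_example(op: Dict) -> bool:
    """True if any 4xx response documents a JSON example."""
    return any(
        status.startswith("4")
        and "examples" in resp.get("content", {}).get("application/json", {})
        for status, resp in op.get("responses", {}).items()
    )


def ai_readiness(spec: Dict) -> int:
    ops = [(method, op) for item in spec["paths"].values()
           for method, op in item.items() if method in HTTP_METHODS]
    score = 0

    # 1. All paginated GET endpoints share one pagination style.
    styles = {pagination_style(op.get("parameters", []))
              for method, op in ops if method == "get"} - {"none"}
    if len(styles) <= 1:
        score += WEIGHTS["consistent_pagination"]

    # 2. The shared error schema requires a machine-readable `code`.
    error = spec.get("components", {}).get("schemas", {}).get("Error", {})
    if "code" in error.get("required", []):
        score += WEIGHTS["error_has_code"]

    # 3. Partial credit for the share of operations with a failure example.
    if ops:
        covered = sum(has_failure_example(op) for _, op in ops)
        score += round(WEIGHTS["failure_examples"] * covered / len(ops))
    return score


spec = {
    "paths": {
        "/invoices": {
            "get": {"parameters": [{"name": "cursor", "in": "query"}]},
            "post": {"responses": {"409": {"content": {"application/json": {
                "examples": {"duplicate": {"value": {"code": "duplicate_request"}}}}}}}},
        },
        "/customers": {"get": {"parameters": [{"name": "offset", "in": "query"}]}},
    },
    "components": {"schemas": {"Error": {"required": ["message"]}}},
}
print(ai_readiness(spec))  # 7
```

Here mixed pagination and a code-less error schema cost 80 of 100 points, and only one of three operations earns failure-example credit.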

One-click MCP export in plain language

Model Context Protocol is the transport and discovery layer that lets clients treat your API as a set of tools. Think of MCP like a standardized power plug. Use the standard and any compliant device works without a different adapter in every room. In this framing, your MCP export describes what tools exist, what inputs they take, and how to fetch documentation or context. An agent registers, reads that manifest, and begins calling your API using the rules you publish.

If you want the canonical description, Anthropic maintains the Model Context Protocol documentation. The short version for practitioners is this: MCP lowers the cost of being integrated by many agent clients because you integrate once in a standard form. The old approach produced one GitHub bot here, a custom plugin there, and a vendor-specific connector somewhere else. The new approach is a single MCP handoff that travels.
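At its core, an export like this maps each OpenAPI operation to an MCP tool definition. The `name`, `description`, and `inputSchema` fields are the ones the MCP specification defines for a tool. The mapping rules below (path and query parameters plus JSON body properties merged into one input object, `operationId` as the name) are a simplified assumption, not how Theneo's exporter works.

```python
import json
from typing import Dict, List


def operation_to_mcp_tool(path: str, method: str, op: Dict) -> Dict:
    """Map one OpenAPI operation to an MCP tool definition (simplified)."""
    properties: Dict[str, Dict] = {}
    required: List[str] = []

    # Path and query parameters become top-level tool arguments.
    for param in op.get("parameters", []):
        properties[param["name"]] = param.get("schema", {"type": "string"})
        if param.get("required") or param.get("in") == "path":
            required.append(param["name"])

    # Properties of a JSON request body are merged into the same object.
    body = (op.get("requestBody", {}).get("content", {})
            .get("application/json", {}).get("schema", {}))
    properties.update(body.get("properties", {}))
    required.extend(body.get("required", []))

    # Prefer operationId; otherwise derive a name like "post_invoices_id_finalize".
    fallback = path.strip("/").replace("/", "_").replace("{", "").replace("}", "")
    return {
        "name": op.get("operationId") or f"{method}_{fallback}",
        "description": op.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


finalize = {
    "operationId": "finalize_invoice",
    "summary": "Finalize a draft invoice. Fails with invoice_not_draft otherwise.",
    "parameters": [{"name": "id", "in": "path", "required": True,
                    "schema": {"type": "string"}}],
}
print(json.dumps(operation_to_mcp_tool("/invoices/{id}/finalize", "post", finalize), indent=2))
```

Note that the tool description doubles as agent guidance, which is why naming the failure code in `summary` pays off.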

Privacy reset with bring your own key

Security posture shifts when the primary consumer is a nonhuman client that can generate a lot of traffic quickly. Cursor for your API leans on bring your own key. Bring your own key means you choose the foundation model and keep the key local to your session. The editor does not store your model key or your spec. That is a small yet meaningful reset in privacy expectations. If you do not log prompts and generations to external systems, there is less risk of sensitive schema details leaking through analytics. For regulated teams, that makes early experiments less fraught and approvals faster.

This aligns with a wider pattern we have covered across the stack. When agents act on behalf of users, teams want clarity on data boundaries and execution paths. For a media example, see our coverage of publishers taking back AI search by setting their own constraints. The same instinct shows up in API platforms.

What happens next: three near-term shifts

- Agent-facing OpenAPI linters become standard. Today’s linters check for vague descriptions and duplicate schema names. The next wave checks for agent-critical traps like boolean flags that invert semantics across endpoints, response bodies that change shape by status code without a documented union type, or long-running operations that require polling but never say so.

- API-to-agent integration tests enter the CI pipeline. Test runners will simulate an agent workflow end to end. For example, create an invoice, finalize it, then simulate a network failure and assert that a retry with the same idempotency key returns the correct status. Vendors will ship reusable harnesses so platform teams do not rebuild the same scaffolding.

- MCP becomes the default handoff from backend to agents. Instead of a custom connector per vendor, platform teams will maintain one MCP manifest that tracks capabilities, permissions, and rate limits. Internal platforms will publish a small registry of MCP servers, much like today’s service catalogs.
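As a taste of the first shift, here is a toy lint rule for one trap named above: boolean flags whose semantics invert across endpoints, such as `include_archived` on one list endpoint and `exclude_archived` on another. The prefix pairs and the warning format are assumptions for illustration; a production linter would express the rule in its own configuration format.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Tuple

# Prefix pairs that usually signal opposite meanings for the same concept.
OPPOSITES = [("include_", "exclude_"), ("enable_", "disable_"), ("show_", "hide_")]


def boolean_params(spec: Dict) -> Iterator[Tuple[str, str]]:
    """Yield (endpoint, parameter name) for every boolean parameter."""
    for path, item in spec["paths"].items():
        for method, op in item.items():
            if not isinstance(op, dict):  # skip path-level lists such as "parameters"
                continue
            for p in op.get("parameters", []):
                if p.get("schema", {}).get("type") == "boolean":
                    yield f"{method.upper()} {path}", p["name"]


def inverted_flag_warnings(spec: Dict) -> List[str]:
    # (prefix, stem) -> endpoints, e.g. ("include_", "archived") -> {"GET /invoices"}
    seen = defaultdict(set)
    for endpoint, name in boolean_params(spec):
        for pair in OPPOSITES:
            for prefix in pair:
                if name.startswith(prefix):
                    seen[(prefix, name[len(prefix):])].add(endpoint)

    warnings = []
    for pos, neg in OPPOSITES:
        for (prefix, stem), endpoints in sorted(seen.items()):
            if prefix == pos and (neg, stem) in seen:
                warnings.append(
                    f"'{pos}{stem}' on {sorted(endpoints)} and "
                    f"'{neg}{stem}' on {sorted(seen[(neg, stem)])} invert each other"
                )
    return warnings


spec = {"paths": {
    "/invoices": {"get": {"parameters": [
        {"name": "include_archived", "in": "query", "schema": {"type": "boolean"}}]}},
    "/customers": {"get": {"parameters": [
        {"name": "exclude_archived", "in": "query", "schema": {"type": "boolean"}}]}},
}}
for warning in inverted_flag_warnings(spec):
    print(warning)
```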

The seven-day retrofit playbook

No one wants a ground-up rewrite. Here is a practical one-week plan any startup can run to make an existing API agent-ready. Each step targets about a day of focused work.

Day 1. Inventory the surface and pick one golden path

- Map your top three workflows by real usage. Choose one path that starts with a create call and ends with a verifiable business outcome. Example: create order, capture payment, issue receipt.

- Export your current OpenAPI and list the endpoints that appear in that path.

Day 2. Stabilize the contract

- Run an aggressive linter profile and fix unambiguous errors first. Normalize status codes and ensure every error returns a standard object with a machine-readable code.

- Add idempotency keys to all non-idempotent POSTs. Document retry rules explicitly.
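A minimal in-memory sketch of idempotency keys for a non-idempotent POST, assuming a hypothetical `create_invoice` handler and a client-supplied key (many APIs carry it in an `Idempotency-Key` header). A repeated key with the same payload replays the first response; a repeated key with a different payload is rejected. A real implementation would persist keys with an expiry and guard against concurrent requests.

```python
import hashlib
import itertools
import json
from typing import Dict, Tuple

Response = Tuple[int, Dict]

_ids = itertools.count(1)
_store: Dict[str, Tuple[str, Response]] = {}  # key -> (request fingerprint, first response)


def _fingerprint(body: Dict) -> str:
    """Stable hash of the request body so key reuse with a new payload is detectable."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def create_invoice(body: Dict, idempotency_key: str) -> Response:
    fp = _fingerprint(body)
    if idempotency_key in _store:
        stored_fp, stored_response = _store[idempotency_key]
        if stored_fp != fp:
            # Same key, different payload: refuse rather than guess.
            return 422, {"error": {"code": "idempotency_key_reused",
                                   "hint": "Use a new key for a different request."}}
        return stored_response  # safe replay: no second invoice is created
    response = (201, {"id": f"inv_{next(_ids)}", "status": "draft"})
    _store[idempotency_key] = (fp, response)
    return response


first = create_invoice({"customer_id": "cus_1"}, "key-123")
retry = create_invoice({"customer_id": "cus_1"}, "key-123")
print(first == retry)  # True
```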

Day 3. Make pagination and filtering consistent

- Pick a single pagination strategy and apply it everywhere on list endpoints. Choose cursor or offset, but do not mix them.

- Document max page size, default size, and an example for a deep page.
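A sketch of one consistent cursor scheme, assuming opaque base64-encoded cursors over rows ordered by integer `id`, a hypothetical default page size of 20, and a maximum of 100. The `data`/`next_cursor` response shape is an illustrative convention; the point is to reuse the same one on every list endpoint.

```python
import base64
import json
from typing import Dict, List, Optional

DEFAULT_PAGE_SIZE = 20
MAX_PAGE_SIZE = 100


def encode_cursor(last_id: int) -> str:
    """Opaque cursor: clients pass it back unchanged and never parse it."""
    return base64.urlsafe_b64encode(json.dumps({"after": last_id}).encode()).decode()


def decode_cursor(cursor: str) -> int:
    return json.loads(base64.urlsafe_b64decode(cursor.encode()))["after"]


def list_page(rows: List[Dict], cursor: Optional[str] = None,
              limit: int = DEFAULT_PAGE_SIZE) -> Dict:
    """Return one page of rows (assumed sorted by ascending integer `id`)."""
    limit = max(1, min(limit, MAX_PAGE_SIZE))  # clamp to the documented range
    after = decode_cursor(cursor) if cursor else None
    remaining = [r for r in rows if after is None or r["id"] > after]
    page = remaining[:limit]
    has_more = len(remaining) > limit
    return {"data": page,
            "next_cursor": encode_cursor(page[-1]["id"]) if has_more else None}


# Deep-page example: walk all 250 rows, asking for more than the maximum.
rows = [{"id": i} for i in range(1, 251)]
cursor, seen = None, 0
while True:
    page = list_page(rows, cursor, limit=500)  # clamped to 100
    seen += len(page["data"])
    cursor = page["next_cursor"]
    if cursor is None:
        break
print(seen)  # 250
```

Clamping an oversized `limit` is one design choice; returning a 400 with a clear error code works equally well, as long as the behavior is documented.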

Day 4. Write agent-oriented examples

- For each endpoint in the golden path, add a success example and at least one failure example. Include a retryable failure and a permission failure. Ensure request and response bodies are real payloads, not lorem ipsum.

- Add a short decision tree to the description of the main tool. Example: if finalize fails with code invoice_not_draft, fetch the current invoice, check status, and choose the correct next call.
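Written as code, the decision tree above might look like the following sketch. The client methods (`finalize`, `get_invoice`), the status values (`draft`, `finalized`, `void`), the `permission_denied` code, and the returned action names are hypothetical stand-ins. What matters is that every branch keys off a documented code or status, never off message text.

```python
from typing import Dict, Protocol


class InvoiceClient(Protocol):
    def finalize(self, invoice_id: str) -> Dict: ...
    def get_invoice(self, invoice_id: str) -> Dict: ...


def finalize_with_recovery(client: InvoiceClient, invoice_id: str) -> str:
    result = client.finalize(invoice_id)
    error = result.get("error")
    if error is None:
        return "finalized"
    if error["code"] == "invoice_not_draft":
        # Do not retry blindly: fetch the current state and choose the next call.
        status = client.get_invoice(invoice_id)["status"]
        if status == "finalized":
            return "already_finalized"  # goal already met; treat as success
        if status == "void":
            return "create_new_invoice"  # a voided invoice cannot be finalized
        return "escalate_to_human"
    if error["code"] == "permission_denied":
        return "request_scope"
    return "escalate_to_human"


class FakeClient:
    """In-memory stand-in used to exercise the branches."""

    def __init__(self, status: str):
        self.status = status

    def finalize(self, invoice_id: str) -> Dict:
        if self.status != "draft":
            return {"error": {"code": "invoice_not_draft", "hint": "Fetch the invoice."}}
        self.status = "finalized"
        return {"id": invoice_id, "status": "finalized"}

    def get_invoice(self, invoice_id: str) -> Dict:
        return {"id": invoice_id, "status": self.status}


print(finalize_with_recovery(FakeClient("draft"), "inv_1"))      # finalized
print(finalize_with_recovery(FakeClient("finalized"), "inv_1"))  # already_finalized
print(finalize_with_recovery(FakeClient("void"), "inv_1"))       # create_new_invoice
```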

Day 5. Add agent-to-API tests and wire CI

- Convert those examples into runnable tests that exercise the golden path end to end. Simulate failure and retry where appropriate.

- Add these tests to your continuous integration pipeline. Fail the build on contract drift or missing error codes.
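A self-contained sketch of such a golden-path test. It assumes a hypothetical in-memory `FakeInvoiceApi` in place of a staging server, plus a `DropFirstResponse` wrapper that simulates a network failure after the server has already processed the request. That is the hard case, because the client never sees the response. The test asserts that a retry with the same idempotency key yields exactly one invoice. In CI this would typically run under a test runner against a real test environment.

```python
import uuid
from typing import Dict


class FakeInvoiceApi:
    """Hypothetical in-memory API that honors idempotency keys on create."""

    def __init__(self):
        self.invoices: Dict[str, Dict] = {}
        self.by_key: Dict[str, str] = {}

    def create(self, body: Dict, idempotency_key: str) -> Dict:
        if idempotency_key in self.by_key:
            return self.invoices[self.by_key[idempotency_key]]
        inv = {"id": f"inv_{len(self.invoices) + 1}", "status": "draft", **body}
        self.invoices[inv["id"]] = inv
        self.by_key[idempotency_key] = inv["id"]
        return inv

    def finalize(self, invoice_id: str) -> Dict:
        inv = self.invoices[invoice_id]
        if inv["status"] != "draft":
            return {"error": {"code": "invoice_not_draft"}}
        inv["status"] = "finalized"
        return inv


class DropFirstResponse:
    """The first create() succeeds server-side, but its response is lost."""

    def __init__(self, api: FakeInvoiceApi):
        self.api, self.dropped = api, False

    def create(self, body: Dict, idempotency_key: str) -> Dict:
        result = self.api.create(body, idempotency_key)
        if not self.dropped:
            self.dropped = True
            raise ConnectionError("simulated network failure")
        return result


def test_golden_path_retry_is_safe():
    api = FakeInvoiceApi()
    flaky = DropFirstResponse(api)
    key = str(uuid.uuid4())
    try:
        flaky.create({"customer_id": "cus_1"}, key)
    except ConnectionError:
        pass  # no response seen, so retry with the same key
    invoice = flaky.create({"customer_id": "cus_1"}, key)
    assert len(api.invoices) == 1, "retry created a duplicate invoice"
    assert api.finalize(invoice["id"])["status"] == "finalized"
    assert api.finalize(invoice["id"])["error"]["code"] == "invoice_not_draft"


test_golden_path_retry_is_safe()
print("golden path ok")
```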

Day 6. Export MCP and register with a client

- Use Cursor for your API to export MCP from the refined spec. Keep the manifest in your repo so changes track with code.

- Register with a local MCP client to validate discovery. Confirm that tools appear, parameters validate, and examples are visible.

Day 7. Ship a private preview and gather traces

- Enable a limited preview with a small group of internal or design-partner users. Ask them to run a scripted task through an agent. Capture agent traces, including which errors and edges they hit.

- Use traces to adjust descriptions and error codes. If an agent got stuck on a permission failure, add a more explicit remediation hint or a follow-up tool.

At the end of seven days you have an agent-usable golden path, CI tests that prevent regression, and an MCP export that agent clients can register against. You also have a template for expanding to the next workflow.

What this means for platform teams

- Versioning becomes more conservative. Agents keep calling an integration long after a human would have closed the docs tab and moved on. When you bump a version, you may need to maintain shims for longer or provide clear upgrade hints in the MCP manifest.

- Observability shifts to behavior traces. Human readers generate page analytics. Agents generate call graphs that show loops, retries, and backoffs. You want both. Instrument endpoints so you know which error codes trigger retries and why.

- Docs become operational objects. They are not dead text. They are contracts, manifests, and runnable examples that connect into CI. Treat them with the same discipline you use for the code that implements them.
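A starting point for knowing "which error codes trigger retries" is aggregating agent call traces. This sketch assumes a hypothetical flat trace format (one chronological record per call with `session`, `endpoint`, `status`, and an optional error `code`) and counts a retry whenever the next call in the same session hits the same endpoint right after an error.

```python
from collections import Counter
from typing import Dict, List


def retries_by_error_code(trace: List[Dict]) -> Counter:
    """Count errors that were immediately followed by a retry of the same endpoint."""
    counts: Counter = Counter()
    last_by_session: Dict[str, Dict] = {}
    for call in trace:  # assumed to be in chronological order
        prev = last_by_session.get(call["session"])
        if prev and prev["status"] >= 400 and prev["endpoint"] == call["endpoint"]:
            counts[prev.get("code", "<no code>")] += 1
        last_by_session[call["session"]] = call
    return counts


trace = [
    {"session": "s1", "endpoint": "POST /invoices", "status": 429, "code": "rate_limited"},
    {"session": "s1", "endpoint": "POST /invoices", "status": 201},
    {"session": "s2", "endpoint": "POST /invoices/{id}/finalize", "status": 403, "code": "permission_denied"},
    {"session": "s2", "endpoint": "POST /invoices/{id}/finalize", "status": 403, "code": "permission_denied"},
    {"session": "s2", "endpoint": "POST /invoices/{id}/finalize", "status": 403, "code": "permission_denied"},
]
print(retries_by_error_code(trace))
```

A rate-limited call retried once is healthy; a 403 retried repeatedly, as in session `s2`, signals a missing remediation hint or follow-up tool.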

Anti-patterns to root out early

- Hidden behavior that changes by status code with no schema union. If a 200 body differs from a 202 body and you never document a union type, an agent will misparse.

- Pagination inconsistencies. Mixing offset and cursor pagination without guidance forces brittle client logic.

- Silent feature flags. If a response shape depends on a feature flag that is not represented in the contract, you will create phantom bugs.

- Error messages without codes. Humans can skim prose. Agents need a stable key.

A note on testing philosophy

You do not need an end-to-end agent runner to start. The pragmatic pattern is to test the golden path as if a careful agent executed it under uncertainty. That means simulating common faults: network blips, permission mismatches, stale idempotency keys, and partial pagination. As you scale, your test harness can begin to look like a staging agent, with backoff, retries, and decision logic. This is where Cursor for your API’s tight loop between spec, tests, and export is helpful. When a test fails, you fix the contract or the implementation in the same workbench.
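The backoff piece of such a harness can start small. This sketch uses exponential backoff with full jitter (a uniform random delay between zero and a capped exponential bound) and honors a `Retry-After` header when it is given in seconds. The `call` signature, the retryable status set, and the limits are assumptions; `sleep` is injectable so tests run instantly.

```python
import random
import time
from typing import Callable, Dict, Optional, Tuple

# (status, headers, body) as returned by a hypothetical HTTP call.
Result = Tuple[int, Dict[str, str], Dict]
RETRYABLE_STATUSES = {429, 502, 503, 504}


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0,
                  retry_after: Optional[str] = None) -> float:
    """Seconds to wait before the next attempt.

    Honors Retry-After in its delay-seconds form; otherwise uses full jitter:
    a uniform draw from [0, min(cap, base * 2**attempt)].
    """
    if retry_after is not None and retry_after.isdigit():
        return float(retry_after)
    return random.uniform(0, min(cap, base * 2 ** attempt))


def call_with_retries(call: Callable[[], Result], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> Result:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        status, headers, body = call()
        if status not in RETRYABLE_STATUSES or attempt == max_attempts - 1:
            return status, headers, body  # success, non-retryable error, or out of attempts
        sleep(backoff_delay(attempt, retry_after=headers.get("Retry-After")))
        attempt += 1


# Usage: a fake endpoint that throttles, fails once more, then succeeds.
responses = iter([(429, {"Retry-After": "1"}, {}), (503, {}, {}), (200, {}, {"ok": True})])
waits = []
print(call_with_retries(lambda: next(responses), sleep=waits.append))  # (200, {}, {'ok': True})
print(waits)  # first wait is 1.0 from Retry-After; second is random in [0, 1.0]
```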

MCP in team workflows

MCP also helps align platform teams and application teams. A backend owner can publish a manifest that captures available tools, rate limits, and permission scopes. An application team can adopt that manifest without writing yet another connector. Over time, organizations will keep a small internal registry of MCP servers that reflect current backend capabilities. That registry becomes the source of truth for agent integrations.

If you are new to MCP, start with the Model Context Protocol documentation, then export a minimal manifest from a single golden path. The minute you see a client register tools and call them correctly, the value becomes obvious.

Competitive landscape and adjacent moves

The shift to agent-first contracts will not happen in a vacuum. It will arrive alongside orchestration frameworks, data stores, and vertical agents that expect machine-stable APIs. We already see this in adjacent launches, from Meta Agent orchestrating chat-built teams to infrastructure-level systems that surface safe parallelism for tool execution. The common thread is simple: human intent becomes a plan that flows through tools, so tools must be consistent, describable, and testable.

The bigger picture and what to do now

The last era of API tools made documentation faster and prettier. The next era makes APIs reliably invocable by software that acts on behalf of people. Theneo’s Cursor for your API combines these ideas in a single, lightweight editor. It bakes in AI-readiness scoring so you know where an agent will fall over. It exports MCP so you can integrate once and interoperate broadly. It encourages bring your own key so privacy is the default, not a buried setting.

If you build or buy API tooling, this is your cue to reframe your checklist.

- Ask whether your endpoints are ready for agents to call them at 3 a.m. without a human looking on.

- Put an agent in the test loop. Simulate failures and verify recovery paths.

- Fail the build when the contract lies. Let CI be the gate that protects users.

- Export a manifest that other teams can adopt without custom glue.

The human who reads your docs will still thank you. They will just be thanking you because their agent already finished the job.