Claude Sonnet 4.5 puts autonomous agents on enterprise roadmap

Anthropic’s Claude Sonnet 4.5 adds what enterprises lacked: sustained, reliable autonomy. With runs that stay on task for about 30 hours, agents can own outcomes across complex, multi-day workflows.

The news, and why it matters now

On September 29, 2025, Anthropic introduced Claude Sonnet 4.5 and put a spotlight on something enterprises have been waiting for: durable autonomy. Early customer and internal runs cited roughly thirty hours of continuous, coherent work without hand-holding, a step change from prior generations that often drifted after a few hours. A model that can keep a plan alive for an entire workday changes what you can safely automate. You can move beyond one-off chat helpers and put an agent in charge of producing an artifact that stands up to review. As one outlet framed it, the release is aimed squarely at business users and long-horizon work rather than short viral moments; see the Reuters report on Claude 4.5.

The core story is not a single benchmark or an isolated demo. It is the compound effect of extended focus, better tools, and safer scaffolding. An agent that holds context for hours can take ownership of outcomes, not just steps. That shifts the conversation from novelty to productivity.

From chat helpers to durable automation

For the past two years, many teams met agents as chat assistants or single-click macros. They could draft, summarize, or call an API, but they struggled when the job stretched across datasets, approvals, and time. The limiting factor was not only raw intelligence. It was stamina, control, and trust. The result was a lot of promising pilots that stalled at the last mile.

Claude Sonnet 4.5 pushes past that plateau by prioritizing staying power. When an agent can stick with a plan across a workday, it can reconcile data, wait for a build to finish, check back on a metric, and assemble a final package without losing the thread. That reliability over time is what lets an agent graduate from sidekick to owner of a recurring job.

The kit that makes agents practical

Think of modern agent development like building with Lego bricks. For a while, teams had to whittle their own pieces. Now the bricks are sturdier and slot together with far less bespoke glue. Three are especially important for enterprises.

1) Sandboxed compute that behaves like a clean workstation

- What it is: A secure execution environment the agent can control to run Bash commands, manipulate files, and generate artifacts without touching production hosts.

- Why it matters: It reduces blast radius and makes runs reproducible. You can give agents real tools without handing them the crown jewels. Pinned packages and isolated filesystems turn a fuzzily described run into something you can replay, debug, and audit.

- Where it shows up: Anthropic exposes a code execution environment that provides exactly this shape of sandbox. Read Anthropic's code execution tool documentation to understand how teams wire it into agent runs.

2) Persistent memory that survives the session

- What it is: Durable instruction and project memory that agents consult across runs. Examples include standardized runbooks, naming rules, policy checklists, and credentials routing stored as managed files.

- Why it matters: Memory stops agents from relearning your house rules every morning. It cuts onboarding time, prevents repeated mistakes, and enables continuous improvement as you refine the playbook. Long context windows help, but disciplined durable memory is what keeps behavior consistent between Monday and Friday.
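One way to keep durable memory auditable is to load it from version-controlled files and record a digest of exactly what the agent saw. The sketch below is an illustration, not Anthropic's memory feature: the `load_memory` helper and the idea of a directory of Markdown files are assumptions made for the example.

```python
import hashlib
from pathlib import Path


def load_memory(memory_dir: Path) -> tuple[str, str]:
    """Return (combined_text, sha256_digest) for all *.md files in memory_dir.

    Files are read in sorted order, so the same directory contents always
    produce the same text and the same digest.
    """
    parts = []
    for path in sorted(memory_dir.glob("*.md")):
        body = path.read_text(encoding="utf-8").strip()
        # Prefix each file with its name so a reviewer can trace a rule to its source.
        parts.append(f"## {path.name}\n{body}\n")
    combined = "\n".join(parts)
    digest = hashlib.sha256(combined.encode("utf-8")).hexdigest()
    return combined, digest
```

Storing the digest next to each run record lets you answer which version of the house rules a given run followed.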

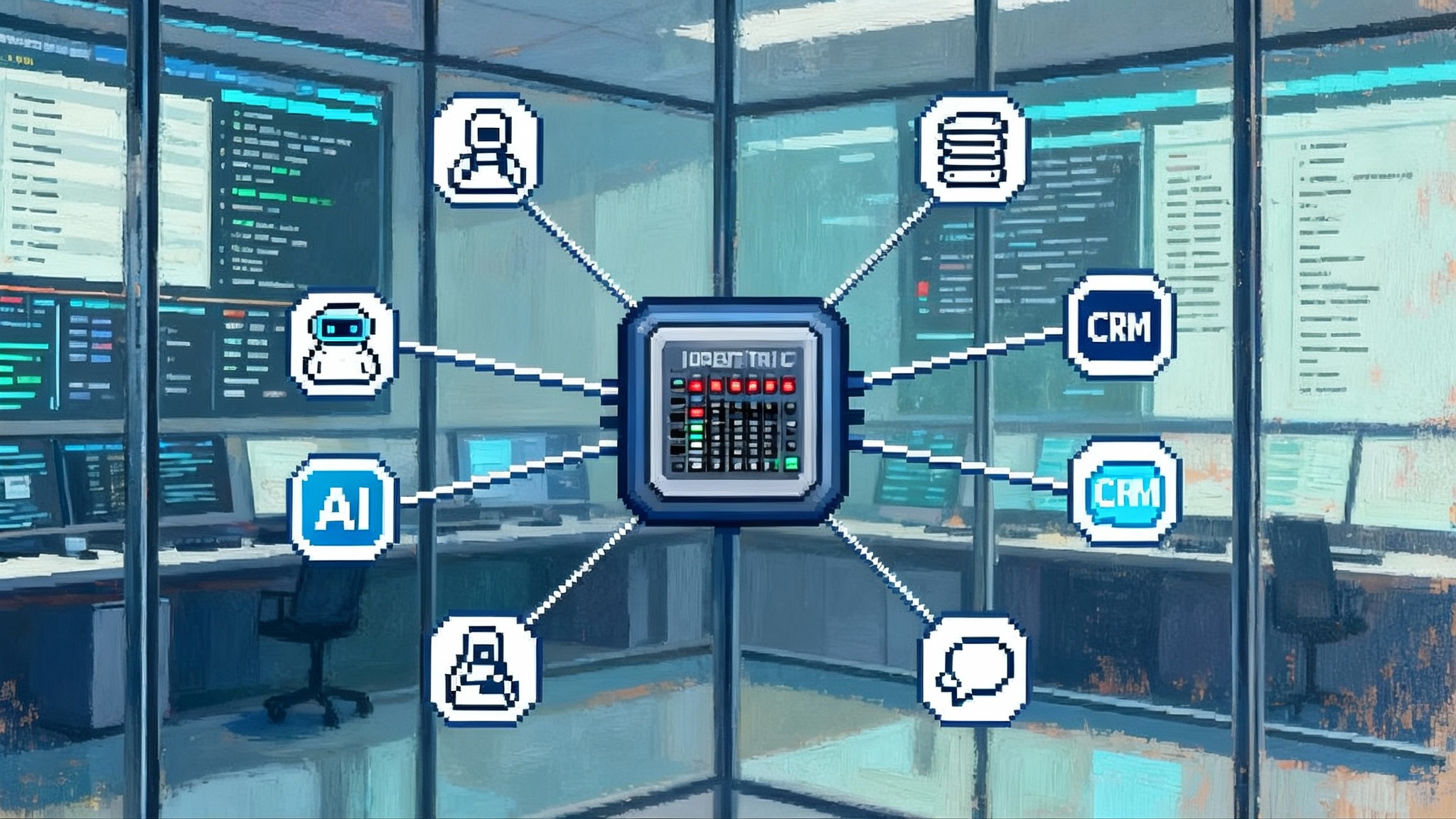

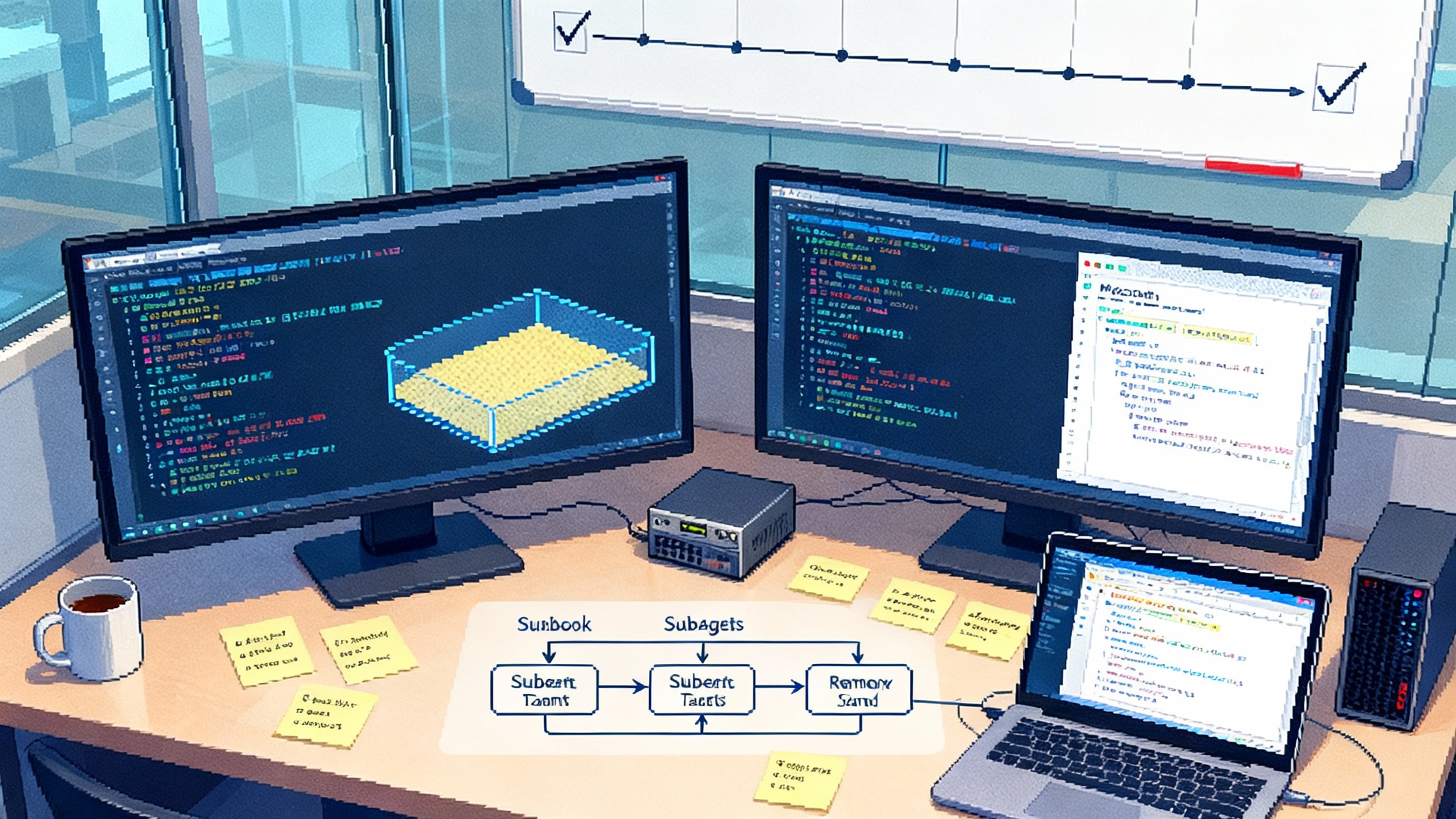

3) Multi-agent orchestration with subagents

- What it is: A way to split a job into specialized roles. You might pair a code reviewer, a tester, a security checker, and a deployer, each with tools and guardrails tuned to a single task.

- Why it matters: Narrow agents behave better. Teams that cram everything into one do-it-all prompt see more loops and fewer safe decisions. Role clarity and least privilege make agents both faster and safer.

Put together, these primitives let you design agents like production services. You can provision an ephemeral workstation, load the house rules, delegate work to the right role, and produce an artifact with a trace of what happened.
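To make role clarity and least privilege concrete, here is a minimal sketch of a tool allowlist per subagent, with every request written to a trace. The role names, tool names, and the `authorize` helper are hypothetical; a real orchestration framework would supply its own equivalents.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subagent:
    name: str
    allowed_tools: frozenset


class ToolNotPermitted(Exception):
    """Raised when a subagent requests a tool outside its allowlist."""


def authorize(agent: Subagent, tool: str, trace: list) -> None:
    """Record the request in the trace, then allow it or refuse it."""
    allowed = tool in agent.allowed_tools
    trace.append({"agent": agent.name, "tool": tool, "allowed": allowed})
    if not allowed:
        raise ToolNotPermitted(f"{agent.name} may not call {tool}")


# Hypothetical roles: each one gets only the tools its job needs.
REVIEWER = Subagent("reviewer", frozenset({"read_diff", "post_comment"}))
DEPLOYER = Subagent("deployer", frozenset({"write_manifest"}))
```

Refused requests land in the trace too, which gives reviewers a record of what an agent attempted as well as what it did.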

Where to use it first: three near term plays

Start where extended focus and repeatability pay for themselves quickly. Here are three enterprise ready plays with concrete steps.

1) Security hunts that finish the job

- Trigger: A cloud alert, a suspicious login pattern, or an endpoint anomaly that would normally kick off a manual investigation.

- Agent plan: Spin up a read-only sandbox session with access to logs and threat intel feeds. Collect indicators, correlate across authentication logs, endpoint telemetry, and network flow data, then write a timeline and recommended actions. If policy allows, open tickets, draft containment commands, and propose firewall changes for human review.

- Outputs: A signed playbook run record, a Markdown incident timeline, a diff of proposed rule changes, and a bundle of indicators of compromise for your knowledge base.

- Why Sonnet 4.5 helps: Hunts span hours and often require rechecks. The model’s ability to keep context and stay on task makes the difference between a partial lead and a complete incident report.

2) FP&A workflows that do not stop at the pivot table

- Trigger: The monthly forecast cycle or a working session to evaluate a pricing change.

- Agent plan: Mount a sanitized copy of revenue, bookings, and cost tables inside the sandbox. Reconcile data sources, validate joins against known constraints, run scenario analysis, produce bridge charts, and write an executive-ready memo. If connected to staging, write back forecast snapshots and attach working files.

- Outputs: A versioned spreadsheet with checks, a chart pack, and a drafted narrative that cites assumptions and shows sensitivity intervals.

- Why Sonnet 4.5 helps: Finance work has long dependency chains and often stalls when analysts must babysit joins, units, and time buckets. A durable agent can push through these steps and bring a clean package to the review meeting.

3) Data operations runbooks that actually close the loop

- Trigger: A failed daily pipeline, schema drift, or a late arriving dimension that threatens downstream reports.

- Agent plan: In a controlled sandbox with read access to observability logs and limited write access to staging, follow your runbook. Identify the failing job, check upstream schema changes, generate and run safe remediation queries, regenerate downstream extracts, and post a signed summary with diffs.

- Outputs: A remediation pull request, a backfill job definition, and an audit note with before and after metrics.

- Why Sonnet 4.5 helps: This is classic long tail toil that benefits from steady attention and precise handoffs. A model that does not lose the thread over hours can finally turn the runbook into a closed loop workflow.

A pragmatic 90 day rollout plan

The technology is ready for prime time. Success still depends on how you roll it out. Use this plan to deliver value fast while earning trust.

Phase 0, weeks 0 to 1: Frame the bet and set the guardrails

- Scope: Choose two use cases from the list above plus one quick win unique to your stack.

- Access: Stand up a separate sandbox environment for agents with secrets vault integration and least privilege service accounts.

- Spend controls: Set per session and per day budget caps at both the sandbox and model layers. Require a spending label on every run so you can tie cost to outcome.

- Audit: Turn on structured logging for tool calls and file edits. Store run artifacts and logs together with retention aligned to your data policy.

- People: Name an operations owner, a security counterpart, and a finance partner. Give each explicit responsibilities for approvals and reviews.
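The spend controls above can be sketched as a small guard that refuses any charge lacking a label or exceeding a cap. This is an assumption-laden illustration: `BudgetGuard`, the dollar units, and the label strings are invented for the example, and a real deployment would also enforce limits at the platform layer.

```python
from collections import defaultdict


class BudgetExceeded(Exception):
    """Raised when a charge would push spend past a cap."""


class BudgetGuard:
    def __init__(self, session_cap_usd: float, daily_cap_usd: float):
        self.session_cap_usd = session_cap_usd
        self.daily_cap_usd = daily_cap_usd
        self.session_spend = 0.0
        self.daily_spend = 0.0
        self.spend_by_label = defaultdict(float)

    def start_session(self) -> None:
        # A new session resets the session counter; daily spend carries over.
        self.session_spend = 0.0

    def charge(self, label: str, amount_usd: float) -> None:
        """Record a charge, or raise before recording if a cap would be exceeded."""
        if not label:
            raise ValueError("every charge needs a spending label")
        if self.session_spend + amount_usd > self.session_cap_usd:
            raise BudgetExceeded(f"session cap reached for {label}")
        if self.daily_spend + amount_usd > self.daily_cap_usd:
            raise BudgetExceeded(f"daily cap reached for {label}")
        self.session_spend += amount_usd
        self.daily_spend += amount_usd
        self.spend_by_label[label] += amount_usd
```

Because the check runs before the spend is recorded, a refused charge never counts against the budget, and `spend_by_label` ties cost to the outcome each run was meant to produce.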

Phase 1, weeks 2 to 4: Establish evals and service levels

- Define evals: Write 10 to 20 canonical tasks per use case that reflect messy reality. Include edge cases and failure modes. Score on completion, accuracy, and time to completion.

- Set SLAs: Define a service level that matters to the business. Example: 95 percent of incident timelines delivered within 60 minutes for medium alerts, and 99 percent of monthly forecast packages delivered by 6 p.m. on day two.

- Baseline humans: Run the same evals with your current human only process. Capture time, accuracy, and rework. You need a clean baseline to prove progress.
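A service level like "95 percent of incident timelines within 60 minutes" reduces to a simple share calculation over recorded run durations. The sketch below assumes you log each run's duration in minutes; the function names are illustrative.

```python
def sla_attainment(durations_min: list, target_min: float) -> float:
    """Return the fraction of runs that finished within target_min."""
    if not durations_min:
        raise ValueError("no runs to score")
    within = sum(1 for d in durations_min if d <= target_min)
    return within / len(durations_min)


def meets_sla(durations_min: list, target_min: float, required_share: float) -> bool:
    """True when the share of on-time runs reaches the required share."""
    return sla_attainment(durations_min, target_min) >= required_share
```

Running the same function over the human-only baseline gives you a like-for-like comparison when you report pilot results.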

Phase 2, weeks 5 to 8: Pilot with human override patterns

- Override patterns: Require explicit sign-off before any write to production. Use two patterns. First, add stop-and-ask checkpoints at risky steps. Second, run end to end in staging, then request promotion.

- Versioned prompts: Store agent role prompts and memory files in version control. Treat them like code. Every change should be reviewed, tested, and rolled back if needed.

- Fail safe behaviors: Define what the agent must do on uncertainty or error. Examples include revert, open a ticket, or request escalation when confidence drops below a threshold or when it sees policy keywords.
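The override and fail-safe rules above can be expressed as a small gate that every proposed step passes through. This is a sketch under stated assumptions: the step fields (`description`, `confidence`, `target`, `approved_by`), the 0.8 threshold, and the keyword list are all hypothetical, and the keyword check is a plain substring match.

```python
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    ASK_HUMAN = "ask_human"
    ESCALATE = "escalate"


def gate(step: dict, min_confidence: float = 0.8,
         policy_keywords: tuple = ("delete", "drop", "credential")) -> Decision:
    """Decide whether a proposed step may run, needs sign-off, or must escalate."""
    text = step["description"].lower()
    # Fail safe first: low confidence or a policy keyword always escalates.
    if step["confidence"] < min_confidence or any(k in text for k in policy_keywords):
        return Decision.ESCALATE
    # Production writes need an explicit approver on record.
    if step["target"] == "production" and not step.get("approved_by"):
        return Decision.ASK_HUMAN
    return Decision.PROCEED
```

Checking the fail-safe conditions before the approval check means an approved step can still be stopped when the agent is unsure or trips a policy keyword.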

Phase 3, weeks 9 to 12: Scale, measure, and negotiate service levels

- Expand scope: Add one new subagent to each pilot that targets a known bottleneck, such as a tester in security hunts or a reconciler in FP&A.

- Tighten SLAs: Use pilot data to raise targets conservatively. Increase the share of runs where the agent can auto approve low risk steps.

- FinOps: Compare model spend to reclaimed hours and reduced error rates. Lock in a budget envelope and cost per outcome targets for the next quarter.

Controls that enterprises expect

Your goal is not to let agents roam the network. It is to codify the same controls you use for any production service.

- Evals and SLAs: Treat them as living documents. When you discover a new failure mode, add it to the eval suite and the runbook.

- Spend caps: Enforce per run, per user, and per project caps. Combine agent side budgets with platform level limits so a runaway process hits a hard stop.

- Audit trails: Capture tool calls, files touched, prompts, and model versions. Require a signed summary at the end of every run with the artifacts attached.

- Human override: Standardize when a person must sign off. Use confidence thresholds and allow a break glass role that can interrupt or kill any run.

- Separation of duties: Split who writes subagents, who approves memory changes, and who approves production writes. No single person should control all three.
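One plausible reading of a "signed summary" is a keyed hash over a canonical serialization of the run record. The sketch below uses HMAC-SHA256 from the Python standard library; the summary fields are invented for the example, and key management (for instance, pulling the key from your secrets vault) is left out.

```python
import hashlib
import hmac
import json


def sign_summary(summary: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 signature over a canonical JSON encoding."""
    # Sorted keys and fixed separators make the encoding deterministic.
    payload = json.dumps(summary, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_summary(summary: dict, signature: str, key: bytes) -> bool:
    """Recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign_summary(summary, key), signature)
```

Any change to the summary after signing, such as an edited model version or a dropped tool call, makes verification fail.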

If your identity and access strategy is maturing, you can go further. Treat identity and authorization as first-class surfaces in your agent platform. For a broader perspective on why that matters, see how enterprises are treating identity as the control plane.

How to measure success in the first 90 days

Pick a small set of metrics that tie spend to outcomes. Use targets you can adjust for your environment.

- Completion rate on evals: Aim for 85 percent end to end success on your task suite by day 30 and 93 percent by day 90.

- Mean time to completion: Target a 40 percent reduction versus human only baselines by day 90 on at least two use cases.

- Rework rate: Fewer than 1 in 10 agent outputs should need substantial rewrite by day 60, and fewer than 1 in 20 by day 90.

- Cost per outcome: Reduce the fully loaded cost to produce the defined artifact, such as a forecast package or incident timeline, by at least 25 percent by day 90.

- Safety incidents: Zero production write incidents without an associated approval. Zero credential exposure events. Track near misses and convert them into tests.
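These metrics are straightforward to compute once each run is logged with a few fields. The sketch below assumes a hypothetical `Run` record and charges the cost of failed runs to the completed ones, which is one reasonable way to define cost per outcome, not the only one.

```python
from dataclasses import dataclass


@dataclass
class Run:
    completed: bool
    needed_rewrite: bool
    minutes: float
    cost_usd: float


def scorecard(runs: list, baseline_minutes: float, baseline_cost_usd: float) -> dict:
    """Summarize a batch of runs against a human-only baseline."""
    done = [r for r in runs if r.completed]
    if not done:
        raise ValueError("no completed runs to score")
    mean_minutes = sum(r.minutes for r in done) / len(done)
    # Failed runs still cost money, so total spend is divided by completed outcomes.
    cost_per_outcome = sum(r.cost_usd for r in runs) / len(done)
    return {
        "completion_rate": len(done) / len(runs),
        "rework_rate": sum(1 for r in done if r.needed_rewrite) / len(done),
        "time_reduction": 1 - mean_minutes / baseline_minutes,
        "cost_reduction": 1 - cost_per_outcome / baseline_cost_usd,
    }
```

Compare `time_reduction` against the 40 percent target and `cost_reduction` against the 25 percent target to see where you stand at each checkpoint.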

These numbers do more than fill a dashboard. They help you decide where to grant more autonomy, where to keep tighter human control, and where to invest in new subagents. As you do, benchmark your progress against the broader agent ecosystem. Many teams report meaningful gains once they adopt modular patterns like Agent Bricks and MLflow 3.0.

Architecture patterns that work

- Use the sandbox like a job runner: Treat the execution environment as a short lived workstation. Pin versions, mount only what is needed, and snapshot the working set for reproducibility. The code execution tool is designed for this and keeps work contained.

- Keep memory boring and versioned: Store policies, naming rules, and runbook snippets in managed memory files. Review them like code. Long context helps, but durable memory is what keeps behavior consistent.

- Prefer several simple subagents over one complex generalist: A reviewer that only comments, a tester that only runs tests, and a deployer that only writes manifests are easier to trust and tune.

- Promote artifacts, not conversations: The right output is a pull request, a chart pack, a remediation plan, or an incident timeline, not a transcript. Artifacts support audit, handoffs, and automated checks.

- Build at the network edge when it helps: If parts of your workload run closer to where data is produced, consider how agents will execute there and how they will hand off to your core systems. For context on why that matters, revisit the rise of the first mainstream computer-using AI and how agents began to operate real software on behalf of users.
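Snapshotting the working set, as the first pattern suggests, can be as simple as hashing every file before and after a run and reporting the difference. This sketch runs against a local directory and is not tied to any particular sandbox product; `snapshot` and `diff_snapshots` are illustrative names.

```python
import hashlib
from pathlib import Path


def snapshot(workdir: Path) -> dict:
    """Map each file's relative POSIX path to the SHA-256 of its contents."""
    return {
        p.relative_to(workdir).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(workdir.rglob("*"))
        if p.is_file()
    }


def diff_snapshots(before: dict, after: dict) -> dict:
    """List files added, removed, or changed between two snapshots."""
    return {
        "added": sorted(set(after) - set(before)),
        "removed": sorted(set(before) - set(after)),
        "changed": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }
```

Attaching the diff to the run record gives reviewers the list of files touched without asking them to read a transcript.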

Practical playbooks and pitfalls

Below are field-tested moves that raise your odds of success and the gotchas that stall teams.

- Start with sanitized data: Give the agent a clean slice of reality, not a toy dataset. If the sandbox can mount a recent, scrubbed snapshot of production, the outputs will look like what your reviewers expect.

- Pin your toolchain: Treat the sandbox like a build environment. Freeze versions for the duration of a run. When a package updates, drive the change through a pull request in your agent repository.

- Write runbooks with if statements: Plain language runbooks are not enough. Include decision points, confidence thresholds, and explicit fallbacks. Turn the runbook into a script the agent can follow deterministically.

- Document expected failure modes: Write down three common ways the agent can be wrong for each use case and how it should fail safe. If a join fails, revert and open a ticket. If a test suite is flaky, retry up to a limit, then escalate.

- Track cost per artifact: Tie spend labels to outcomes, not tokens. You want to know what it cost to produce a clean forecast or a complete incident package, and how that compares to your human only baseline.
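The "retry up to a limit, then escalate" rule is the kind of decision point worth writing as code, not prose. Here is a minimal sketch; `run_with_retries` and its return shape are assumptions for the example, and a real runbook would likely add backoff and open a ticket on escalation.

```python
def run_with_retries(step, max_attempts: int = 3):
    """Call step() until it succeeds or attempts run out.

    Returns ("ok", result) on success, or ("escalate", error_text) after
    max_attempts failures so the caller can hand off to a human.
    """
    last_error = None
    for _ in range(max_attempts):
        try:
            return ("ok", step())
        except Exception as exc:  # a flaky step can fail in many ways
            last_error = exc
    return ("escalate", repr(last_error))
```

Returning a status in place of raising keeps the fallback explicit: the caller has to handle the escalation branch to use the result at all.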

Common pitfalls to avoid:

- Piloting in a vacuum: If you run a demo with special permissions and bespoke tooling, you will not learn how the agent behaves under the controls your production teams require.

- Overfitting the prompt: Teams often massage prompts until one run looks great, then discover that small changes break the flow. Version prompts, test them against a messy eval suite, and avoid brittle hacks.

- One agent to rule them all: It is tempting to pour every tool and permission into a single agent. Resist that urge. Subagents with least privilege are safer and easier to debug.

- Ignoring identity and logs: If you cannot answer who did what and when, you will hit a wall with compliance and change management. Wire identity into the sandbox and capture a full audit.

What changes with Sonnet 4.5 staying power

You do not need to rethink your entire stack. You do need to change how you measure success. Instead of asking whether the next token is likely correct, ask whether the whole job completes with acceptable accuracy, speed, and cost. With extended autonomy, that becomes a tractable goal. The model can now carry a plan across hours, maintain a working set, and hand off between specialized roles without losing the plot.

This reframing also helps you negotiate service levels. For example, a single alarming misstep in a chat session used to sink trust for weeks. In a productionized agent program, you can point to completion rates, time to completion, and rework rates. You can show how controls caught a risky step, how the agent reverted, and how the runbook was updated to prevent a repeat.

The bottom line

Enterprises have been stuck between tantalizing demos and brittle agents that needed constant babysitting. With Sonnet 4.5, Anthropic is packaging the pieces that let teams ship durable automation: a safe place to work, memory that persists, and a way to divide labor among focused roles. The headline capability is not just that the model thinks better. It is that it stays with the job long enough to finish it. Pair that with a conservative rollout and tight controls, and you have an automation program that can pay back inside a quarter and compound from there. For a broader industry view on why modular agent building blocks matter, see how teams are adopting Agent Bricks and MLflow 3.0. And to understand how identity-centric policies become the guardrails for autonomy at scale, explore identity as the control plane.

Finally, if you want to follow the product level details and availability signals, the Reuters report on Claude 4.5 provides a useful snapshot of the September 29 announcement and business positioning. Use that context to align your rollout with the model options and access you plan to secure in the next quarter.